Mid-air Imaging for a Collaborative Spatial Augmented Reality System

Dashlen Naidoo, Glen Bright and James Edward Thomas Collins

Mechatronics and Robotics Research Groups (MR²G), University of KwaZulu-Natal, Durban, South Africa

Keywords: Spatial Augmented Reality, Mid-air Image, Collaboration, User Evaluation, Quality of Experience.

Abstract: Aerial imaging can be used to deliver mid-air images in a collaborative Spatial Augmented Reality system. This research aimed to overcome the current disadvantages of Augmented Reality headsets, which include physical discomfort, visual discomfort, high cost and single-user operation. The concept design presented supports simultaneous interaction by multiple users while delivering an increased field of view. This was achieved with the ASKA3D aerial imaging plate, used to deliver mid-air projection, in conjunction with a camera used for view-dependent rendering of the mid-air images. The design delivers an Augmented Reality experience without the need for complex technology, and the work focused solely on the method of mid-air image projection. The system successfully delivered a high-quality mid-air image. A Quality of Experience model was found to be the most suitable method for user assessment of this multimedia device. The overall average percentage rating for the system was 69.4%, which was considered successful given that only one part of the complete system to be built was evaluated.

1 INTRODUCTION

There are a variety of approaches to advancing the state of technology, but none has received as much interest as Augmented Reality (AR). Augmented Reality brings the real world and the digital world together by overlaying digital information onto the world around us. It should not be confused with Virtual Reality (VR), which places the user in a digital environment isolated from the real world (Marr, 2019). Augmented Reality can be used in numerous ways across different fields.

In the field of design, AR is commonly used at the end of the design process; it is not used to arrive at the final design solution. In mechanical design, systems are developed so that they can be viewed in 3D on a phone, tablet or Head Mounted Display (HMD). Inspecting the object in 3D can help the designer identify possible faults in the design and implement corrective measures before the part is built. In civil design, AR has been used to give designers the ability to preview the inside of a building before construction is finished. In this way, designers can walk through a building while it is still just brick and mortar and use AR to preview what its interior will look like.

This research presents a comparative study of current methods of holographic projection that use the half-silvered mirror approach, and then proposes a new method of projection. This new method increases the number of users who can interact with the system simultaneously while delivering a greater field of view. The focus of the design is user collaboration.

2 RELATED WORK

Common AR systems place the display on the user, either through an HMD or a hand-held device. Another type of AR system, unlike common AR devices, promotes collaboration between multiple users; these systems are called Spatial Augmented Reality (SAR) systems. To create this collaborative environment, SAR separates the display from the user, thus allowing multiple users on a single device (IGI Global, n.d.). Unlike common AR systems, SAR systems are not commercially available. They are still undergoing research and development, which is the main reason they are unavailable to the public.

AR is most commonly used on hand-held devices: since most hand-held devices are equipped with a display, sensors and a camera, they are an ideal platform for AR applications (Kim, Takahashi, Yamamoto, Maekawa, and Naemura, 2014). AR is also commonly associated with HMDs that let users see digital images that are not physically present. However, HMDs have their own weaknesses, such as incorrect focus cues, a small field of view, tracking inaccuracies and inherent latency, as presented by Hilliges, Kim, Izadi, Weiss and Wilson (2012), all of which result in user discomfort.

Naidoo, D., Bright, G. and Collins, J.

Mid-air Imaging for a Collaborative Spatial Augmented Reality System.

DOI: 10.5220/0009779103850393

In Proceedings of the 17th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2020), pages 385-393

ISBN: 978-989-758-442-8

Copyright © 2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Radkowski and Oliver (2014) discuss the absence of natural visual perception in some AR devices: virtual content can only be viewed correctly from a specific position, and when viewed from anywhere else the image appears distorted. Overcoming this requires view-dependent rendering, a method that adapts the perspective of the virtual information to the user's viewing position (Radkowski & Oliver, 2014). This method is based on head tracking.
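As an illustration only, a common way to implement view-dependent rendering from a tracked head position is an off-axis (asymmetric) perspective frustum. The sketch below assumes the display, or in an AIP system the mid-air image plane, is a w × h rectangle centred at the origin of the z = 0 plane, with the tracked eye at (ex, ey, ez) in the same units and ez > 0; the view matrix is assumed to translate the scene by the negative eye position. Neither this paper nor the cited works specify this formulation, and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Frustum:
    left: float
    right: float
    bottom: float
    top: float
    near: float
    far: float


def off_axis_frustum(eye: Vec3, screen_w: float, screen_h: float,
                     near: float, far: float) -> Frustum:
    """Asymmetric frustum for a screen centred at the origin of the z = 0
    plane, seen from eye = (ex, ey, ez) with ez > 0 (assumed convention)."""
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (ez > 0)")
    # Similar triangles: project the screen edges onto the near plane.
    scale = near / ez
    return Frustum(
        left=(-screen_w / 2 - ex) * scale,
        right=(screen_w / 2 - ex) * scale,
        bottom=(-screen_h / 2 - ey) * scale,
        top=(screen_h / 2 - ey) * scale,
        near=near,
        far=far,
    )


def frustum_matrix(f: Frustum) -> List[List[float]]:
    """OpenGL glFrustum-style projection matrix, row-major nested lists."""
    l, r, b, t, n, fa = f.left, f.right, f.bottom, f.top, f.near, f.far
    return [
        [2 * n / (r - l), 0.0, (r + l) / (r - l), 0.0],
        [0.0, 2 * n / (t - b), (t + b) / (t - b), 0.0],
        [0.0, 0.0, -(fa + n) / (fa - n), -2 * fa * n / (fa - n)],
        [0.0, 0.0, -1.0, 0.0],
    ]
```

With the eye centred the frustum is symmetric; as the head moves to the right, the frustum skews to the left, so the rendered perspective follows the viewer.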

Deering (1993) writes that without head tracking the result is a fixed viewing system. Head tracking allows corrective viewing, but it limits the number of users on the system, because the scene can only be rendered for one tracked viewpoint at a time.

2.1 Mid-air Imaging

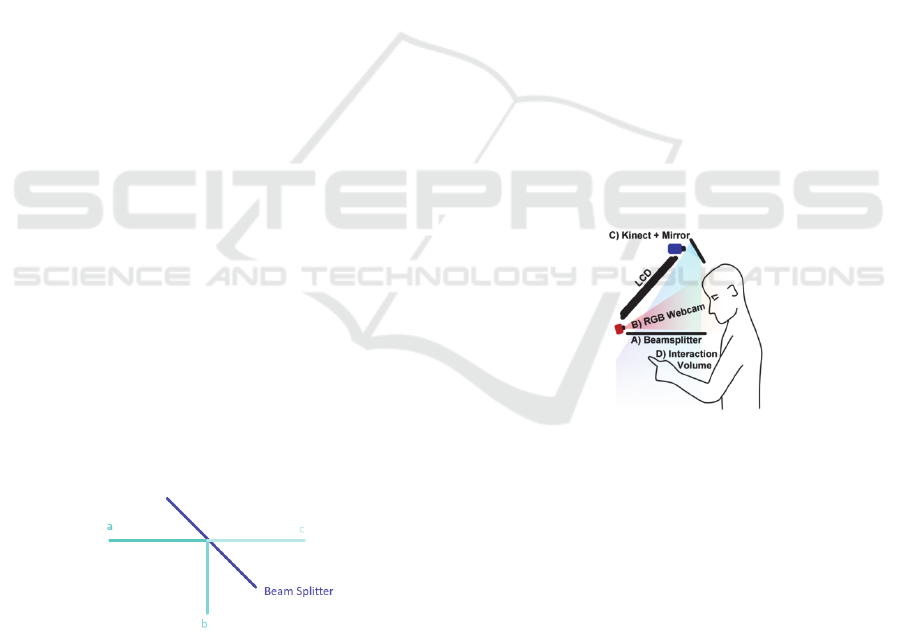

The half-silvered mirror approach to mid-air imaging was selected as the foundation for the proposed system. This approach uses a light source (an LCD screen) and a beam splitter. The light source displays the content to be projected as a hologram, while the beam splitter reflects the image from the light source so that it appears as the desired hologram. The behaviour of a beam splitter is illustrated in Fig 1, where “a” is the light source, “b” is the reflected light and “c” is the transmitted light (Aspect & Brune, 2017).

Figure 1: Diagram of beam splitter behaviour.

The Mid-air Augmented Reality Interaction with Objects (MARIO) system (Kim et al., 2014) and the HoloDesk (Hilliges et al., 2012) both achieve their projection using beam splitters, but they implement it differently.

The HoloDesk is a system that allowed users precise 3D interaction with holograms without the need for any wearable devices. This precise interaction was made possible by a newly proposed technique for interpreting raw Kinect data to approximate and track objects while supporting physics-inspired interactions between virtual and real objects (Hilliges et al., 2012). Hilliges et al. (2012) used a Kinect sensor for hand and object tracking, an LCD screen as the light source, an RGB webcam to track the user's head position and a half-silvered mirror beam splitter. The purpose of head tracking in this system is to allow viewpoint-corrected rendering of the hologram. This makes the virtual objects appear more real, as the rendered scene places the objects at different depths depending on the user's viewpoint. The set-up of the HoloDesk can be seen in Fig 2; the system's design is unique due to the position of the beam splitter. The HoloDesk has the light source at an angle of 45 degrees and the beam splitter at an angle of 0 degrees. Because of this arrangement, users could see the digital images only while looking through the beam splitter. The area underneath the beam splitter therefore served as the interaction volume, which was given a black background so that the projected images could be viewed clearly.

Figure 2: HoloDesk system overview (Hilliges et al., 2012).

The MARIO system proposed by Kim et al. (2014) was a novel design that overcame the limitations of half-silvered mirror designs while enabling users to control mid-air images with their hands or objects. MARIO allows the user to influence the position of the mid-air image by placing an object or their hand in the interaction volume. This does not allow the precise interaction with holograms offered by the HoloDesk. Kim et al. (2014) analysed which type of beam splitter to use for their system without losing the distortion-free imaging that a half-silvered mirror design provides. One of the main limitations of a half-silvered mirror design is that virtual images can only be placed behind the half-silvered mirror and not in front of it, which constrains the design (Kim et al., 2014). The MARIO system overcomes this limitation by analysing different types of beam splitters that deliver either distortion-free imaging or imaging in front of the beam splitter. From the analysis, two types of beam splitters were found to deliver both of the above-mentioned properties: the Dihedral Corner Reflector Array (DCRA) and the Aerial Imaging Plate (AIP).

The AIP was selected as the beam splitter for the MARIO design. In its implementation the light source is at 0 degrees and the AIP is angled at 45 degrees, delivering the image directly in front of the AIP, as seen in Fig 3. Kim et al. (2014) further defined a geometric relation between the AIP and the display, given as equations describing the horizontal and vertical viewing angles of the AIP. Kim et al. (2014) expected that the closer the light source is to the AIP (distance “z”), the greater the viewing angle, both horizontally and vertically.
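The positional behaviour of this layout can be sketched with an idealised model in which the AIP forms a real image at the position plane-symmetric to the light source with respect to the plate. The coordinates (x to the side, y up, z horizontal), the 45-degree plate through (0, H, 0) and the value of H are assumptions for illustration; the viewing-angle equations of Kim et al. (2014) are not reproduced here.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def mirror_point(p: Vec3, plane_point: Vec3, plane_normal: Vec3) -> Vec3:
    """Reflect point p across the plane through plane_point with normal plane_normal."""
    norm = math.sqrt(sum(c * c for c in plane_normal))
    n = tuple(c / norm for c in plane_normal)
    # Signed distance from p to the plane.
    d = sum((pc - qc) * nc for pc, qc, nc in zip(p, plane_point, n))
    return tuple(pc - 2.0 * d * nc for pc, nc in zip(p, n))


# Hypothetical MARIO-style layout: display lying flat in the plane y = 0,
# AIP tilted at 45 degrees through (0, H, 0), i.e. the plane y - z = H.
H = 100.0  # mm, assumed height of the plate's centre above the display
PLATE_POINT: Vec3 = (0.0, H, 0.0)
PLATE_NORMAL: Vec3 = (0.0, 1.0, -1.0)


def image_of_display_point(p: Vec3) -> Vec3:
    """Idealised mid-air image position of a point on the display."""
    return mirror_point(p, PLATE_POINT, PLATE_NORMAL)


if __name__ == "__main__":
    print(image_of_display_point((0.0, 0.0, 0.0)))    # approx. (0, 100, -100)
    print(image_of_display_point((0.0, -20.0, 0.0)))  # approx. (0, 100, -120)
```

In this model the flat display maps to a vertical image plane at z = -H, and lowering the display by 20 mm moves the image 20 mm further from the plate, which is consistent with MARIO using a linear actuator to change the image position.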

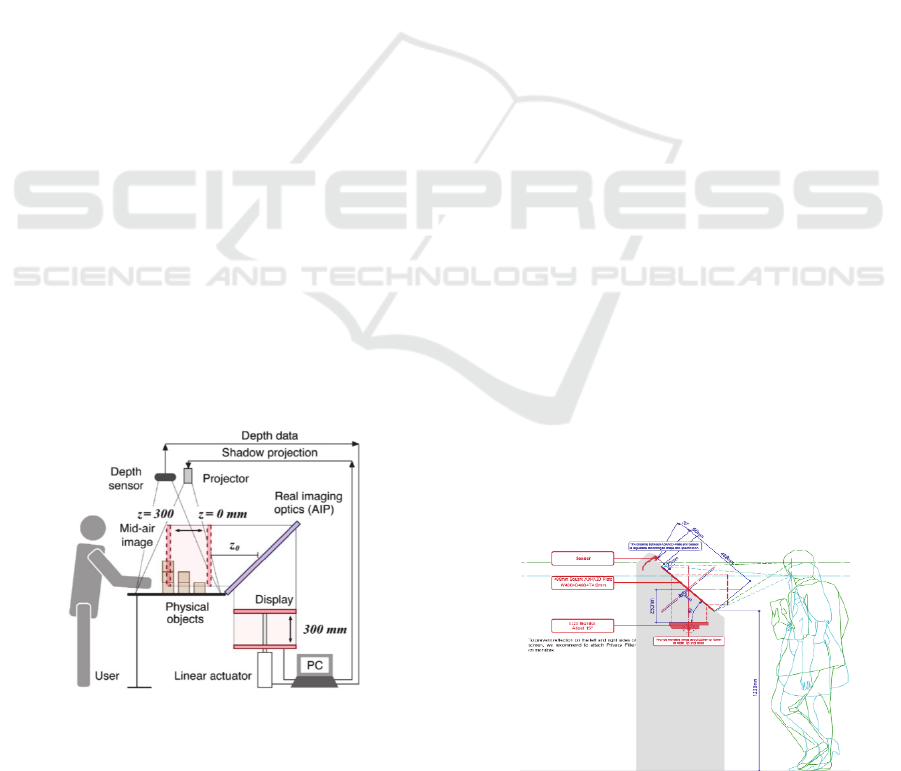

The system overview of MARIO can be seen in Fig 3; the overall system comprises three subsystems, namely object detection, the mid-air imaging display and shadow projection (Kim et al., 2014). The object detection subsystem has a Kinect depth sensor mounted directly above the interaction volume to track user interaction with the mid-air image. The mid-air imaging display uses an LED-backlit display as the light source, mounted on a linear actuator that changes the distance between the AIP and the light source and thereby the position of the mid-air image. Since MARIO only displays 2D mid-air images, the shadow projection subsystem gives users a sense of 3D: a projector places shadows underneath the displayed mid-air images in real time.

Figure 3: MARIO system overview (Kim et al., 2014).

When comparing the MARIO and HoloDesk systems, each has its own strengths. The HoloDesk focuses on precise control techniques, while MARIO focuses on a new type of imaging display that places the interaction volume in front of the beam splitter.

3 SYSTEM DESIGN

The ideal system should combine the strengths of the HoloDesk and MARIO systems without their weaknesses. The precise hand interaction granted by the HoloDesk was considered essential, provided that the algorithm used to deliver it could be obtained. The strength of the MARIO system was its ability to deliver mid-air images in front of the beam splitter without the user having to look through the beam splitter to see the virtual images.

The work presented by Kim et al. (2014) did not specify a supplier for the AIP or DCRA beam splitter. Research into possible suppliers led to a company called ASKA3D, which produces only AIPs that deliver mid-air images. The company prides itself on creating a product that does not require any complicated equipment to project videos and objects in mid-air (ASKA3D, n.d.). Furthermore, the images are projected in a way that allows users to interact with them using their hands. The company's AIPs are separated into two categories: Plastic ASKA3D-Plates and Glass ASKA3D-Plates. The plastic plates are manufactured in a single size and are rated at a transmittance of only 20%, while the glass plates come in four sizes and are rated at a transmittance of 50% (ASKA3D, n.d.). The company provides two projection layouts for its product; one of these can be seen in Fig 4 and is the layout used in the proposed system design. Additionally, ASKA3D provides customers with the viewing angle calculations for each layout when they purchase the product.

Figure 4: ASKA3D AIP Layout (ASKA3D, n.d.).


The strength of the HoloDesk system lay in its method of interaction with the virtual images, owing to the physics-enabled interaction it provided. The HoloDesk achieved this with a sophisticated new algorithm, using a Kinect sensor to provide the real-time depth data required to deliver the virtual scene and interaction capability (Hilliges et al., 2012). Therefore, to enable the same precise interaction, the new system must have a Kinect sensor positioned in line with the interaction volume, and access to the HoloDesk system code must be requested. If the code is not accessible, user interaction must be handled by another algorithm for precise hand interaction or by glove technology. The MARIO system's strength was its method of projection: using an AIP as the beam splitter allowed front projection, unlike systems such as the HoloDesk, which required the user to interact with the virtual scene behind the beam splitter. Therefore, the new system uses an AIP from ASKA3D.

An important point noted was that head tracking was used in the HoloDesk system but not in the MARIO system. MARIO was not designed for users to view 3D digital objects and therefore lacks head tracking, although its shadow projection subsystem gives the user an illusion of 3D movement of the digital objects. The new system must serve as an evaluation platform for users; it therefore needs head tracking to allow view-corrected rendering of virtual images. The new system will use the face tracking software OpenFace 2.2.0, a facial behaviour analysis toolkit.
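Before a tracked head position can drive view-corrected rendering, it has to be expressed in the coordinate frame of the display. The sketch below is a hypothetical helper, not part of OpenFace: it assumes the tracker reports the head position in millimetres in an OpenCV-style camera frame (x right, y down, z out of the lens towards the user), that the camera faces the user with its axes aligned to the display, and that `camera_offset_mm` has been measured on the rig. An exponential moving average (`alpha`) is added as an assumed way to damp tracking jitter.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HeadToDisplay:
    # Hypothetical camera position relative to the display centre (mm).
    camera_offset_mm: Vec3 = (0.0, 150.0, 0.0)
    # A camera facing the user sees the user's right on its left and
    # measures y downwards, so x and y flip sign in the display frame.
    axis_signs: Vec3 = (-1.0, -1.0, 1.0)
    # Smoothing factor in (0, 1]; 1.0 disables smoothing.
    alpha: float = 0.3
    _smoothed: Optional[Vec3] = field(default=None, init=False, repr=False)

    def __post_init__(self) -> None:
        if not 0.0 < self.alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")

    def update(self, head_cam_mm: Vec3) -> Vec3:
        """Convert one camera-frame head position and return the smoothed
        display-frame position."""
        target = tuple(
            s * h + o
            for s, h, o in zip(self.axis_signs, head_cam_mm, self.camera_offset_mm)
        )
        if self._smoothed is None:
            self._smoothed = target
        else:
            self._smoothed = tuple(
                prev + self.alpha * (t - prev)
                for prev, t in zip(self._smoothed, target)
            )
        return self._smoothed
```

A pure translation plus sign flips is the simplest case; a camera mounted at an angle would need a full rotation matrix instead of `axis_signs`.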

A variety of concept designs were generated for the proposed system, each differing in the methods and techniques used to obtain a collaborative system. A single concept design stood out among the rest and is explained below.

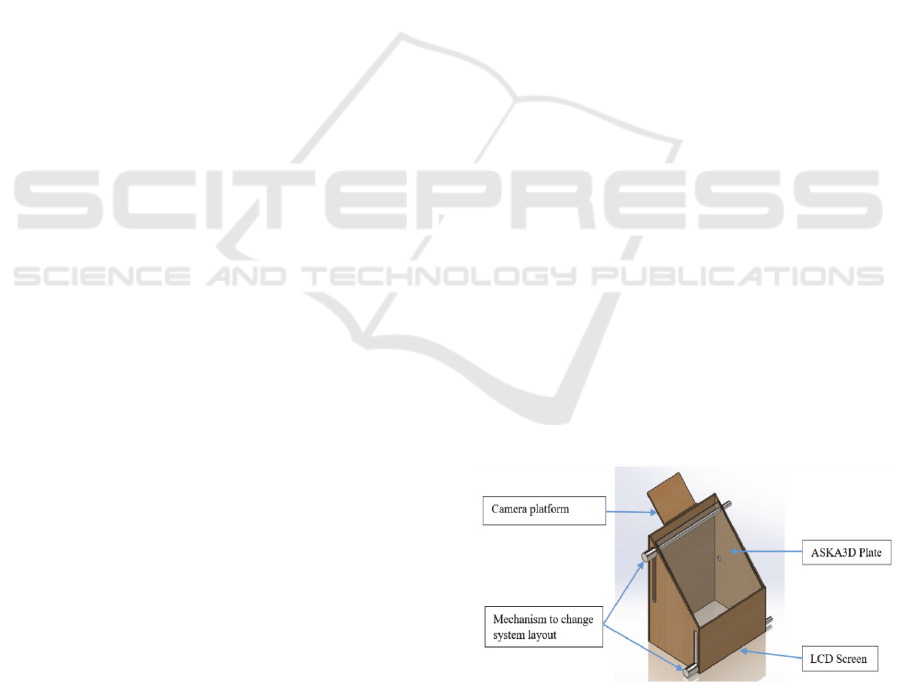

3.1 Concept Design

This concept design proposed a compact desktop system that still delivered an SAR experience to its users. It achieved a collaborative environment from a single viewing position. This was due to the ASKA3D plate used as the beam splitter of the device: although the company does not state it explicitly, its illustrations show that more than one person can view the mid-air image at the same time, provided they are within the viewing angle of the system, as seen in Fig 4. This property needs to be tested to confirm its validity. The concept can also switch between the two layouts of an ASKA3D plate, giving users the freedom and comfort to choose how they interact with the mid-air images.

The system was designed to house an LCD screen and an ASKA3D plate in a mechanical linkage that changed the positions of the screen and plate to conform to the two layouts in which the plate can be used. The design had a platform at the top of the system that allowed a camera to be positioned in line with the user, enabling view-dependent rendering of the mid-air images. In this system, the view-dependent rendering function could be switched on or off by the user. When multiple users are observing the mid-air images, view-dependent rendering cannot be used, since it is not possible to track every user's viewpoint and adapt the scene to all of them at once; it can only be used when there is a single user on the device. Interaction with this system took place through an AR glove known as the CaptoGlove (CaptoGlove, n.d.), which allowed physical interaction with AR images.
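The rule described above, in which view-dependent rendering is used only when it is switched on and exactly one user is tracked, can be expressed as a small selection function. This is a hypothetical sketch: the default viewpoint and the function name are illustrative assumptions, and the tracked positions could come from any face tracker.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical fixed viewpoint used when view-dependent rendering is off,
# e.g. a central position in front of the mid-air image (mm).
DEFAULT_EYE_MM: Vec3 = (0.0, 0.0, 600.0)


def select_viewpoint(vdr_enabled: bool,
                     tracked_heads: Sequence[Vec3],
                     default_eye: Vec3 = DEFAULT_EYE_MM) -> Tuple[Vec3, bool]:
    """Return (eye position to render from, whether rendering is view-dependent)."""
    if vdr_enabled and len(tracked_heads) == 1:
        # Single user: adapt the perspective to their head position.
        return tracked_heads[0], True
    # Switched off, nobody tracked, or several users: one perspective cannot
    # match every viewer, so fall back to the fixed viewpoint.
    return default_eye, False
```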

This was not a stand-alone system: it did not have its own dedicated computer to deliver the mid-air images. The idea behind it was to allow SAR projection without the need for complex technology or demanding requirements. The system was operated by connecting a laptop to the LCD screen and duplicating or extending the laptop's workspace, after which the user would start the software for this AR system on the laptop. The proposed system delivered a collaborative, high-quality SAR experience on a small scale, which resulted in a reduced overall footprint and a highly portable device. An example of the proposed system can be seen in Fig 5.

Figure 5: Conceptual design.


4 EXPERIMENT AND RESULTS

When exploring possible methods for evaluating AR systems, it was found that AR devices are tested through user interaction, as they are multimedia devices. In the work presented by Kim et al. (2014) and Hilliges et al. (2012), users operated the respective systems and were asked to give feedback on the quality of experience delivered by the AR device. Zhang, Dong, and Saddik (2018) suggested that a quality-of-experience (QoE) model can better evaluate an AR device from the user's perspective; they presented a QoE framework and modelled it with a fuzzy inference system to evaluate the device. Their framework for evaluating a holographic AR multimedia device comprised four major categories: Content Quality, Hardware Quality, Environment Understanding and User Interaction. Users interacted with a Microsoft HoloLens, played two different games and then answered a questionnaire based on their experience with the system. The experiment performed in this paper is similar to that of Zhang et al. (2018), except that the questionnaire results are not compared to a fuzzy model; instead, the user responses are plotted on a graph and evaluated to understand the current strengths and weaknesses of the system so that future changes can be made.

The focus of this paper was the design of an SAR system. Since the beam splitter for this system was purchased from ASKA3D, the layout of the system was constrained. This paper evaluated the quality of experience granted by the ASKA3D plate in the layout seen in Fig 4.

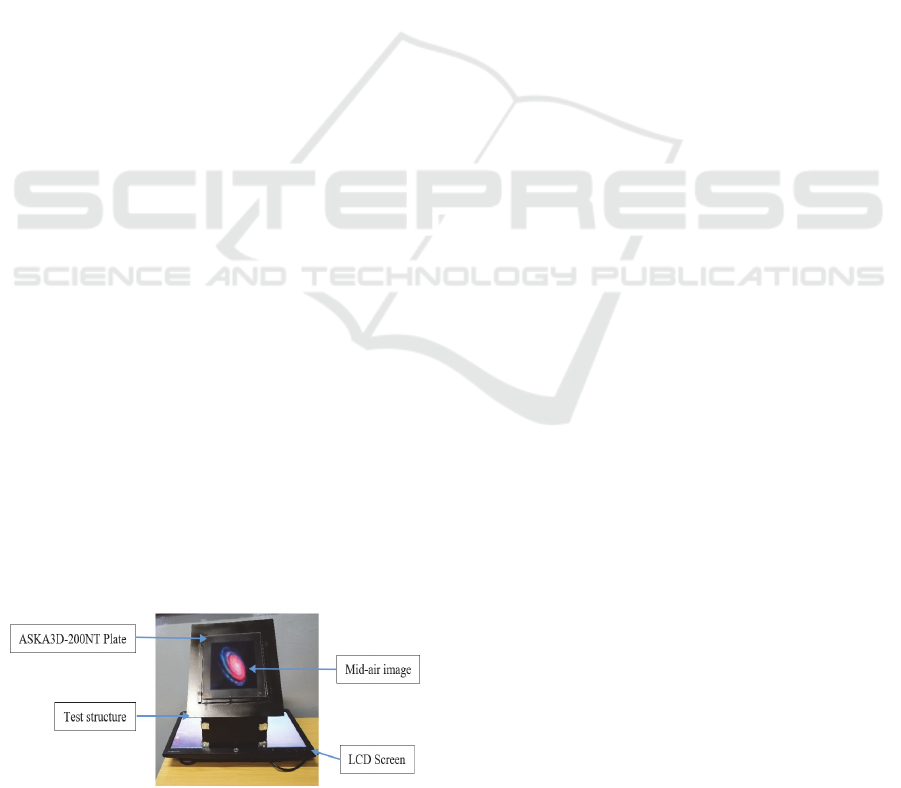

A test structure was constructed to fit the layout in Fig 4, sized for the ASKA3D-200NT plate. An HP Compaq LE2002x monitor acted as the light source for the test structure. Once the ASKA3D plate had been purchased, the test structure was built; the test system can be seen in Fig 6. To deliver the best possible mid-air image, all solid surfaces (frame and coverings) were painted black so that stray light was absorbed rather than reflected by bright-coloured surfaces, which would otherwise reduce the quality of the transparent mid-air image.

Figure 6: Test system setup.

Based on the equations provided by ASKA3D, the viewing area depends on the viewing distance of the user; therefore, definite values for these measurements cannot be given, as users were not standing at a fixed distance when evaluating the system. Additionally, the viewing angle depends on the size of the image shown and on how far in front of the plate the image is shown.
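ASKA3D's viewing-angle equations are not reproduced in this paper, so the sketch below uses a simplified one-dimensional geometric model instead, purely to illustrate the stated dependencies. It assumes the whole image is visible only if the ray from the eye through each edge of the image, traced back to the plate, lands within the plate; the function names and example values are hypothetical, and the plate's own angular limits are ignored, so real viewing zones will be narrower.

```python
import math


def viewing_half_width(plate_w: float, image_w: float,
                       image_d: float, eye_d: float) -> float:
    """Half-width of the lateral zone, centred on the image axis, from which
    a whole image of width image_w floating image_d in front of a plate of
    width plate_w is visible to an eye eye_d from the plate (same units).
    Simplified 1D aperture model, not ASKA3D's equations."""
    if not 0.0 < image_d < eye_d:
        raise ValueError("require 0 < image_d < eye_d")
    # Rays from the eye through an image point spread by this factor
    # by the time they are traced back to the plate.
    k = eye_d / (eye_d - image_d)
    half = (plate_w / 2.0 - k * image_w / 2.0) * (eye_d - image_d) / image_d
    return max(half, 0.0)  # 0 means no position shows the whole image


def viewing_half_angle_deg(plate_w: float, image_w: float,
                           image_d: float, eye_d: float) -> float:
    """The same zone expressed as a half-angle seen from the image centre."""
    half = viewing_half_width(plate_w, image_w, image_d, eye_d)
    return math.degrees(math.atan2(half, eye_d - image_d))


if __name__ == "__main__":
    # Hypothetical values in mm: 200 mm plate, 60 mm image, 50 mm in front.
    for eye_d in (500.0, 1000.0):
        print(eye_d, viewing_half_width(200.0, 60.0, 50.0, eye_d))
```

In this model the zone widens as the viewer steps back, and a larger plate or a smaller image widens it further.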

Once the test structure was built, a questionnaire was drawn up based on the one used by Zhang et al. (2018), with some questions changed to relate to the new SAR system being developed. This questionnaire was given to users after they had interacted with the mid-air images shown to them. The questionnaire first collected basic information such as name, age, gender and whether the user had previously interacted with AR or VR technology. The remaining questions were Likert-scale questions, as in Zhang, Dong, and Saddik (2018), where the user responded to each question with a number from 1 to 5, each number representing a different response. The questionnaire was split into four sections, each centred on one of the four main categories of the QoE framework. For each category, the user first answered general questions relating to that category (e.g. Content Quality) and then gave a final rating for the category. After completing all four categories, the user gave an overall system rating out of 100. Finally, users were encouraged to comment on their experience, report system errors and make recommendations.

4.1 Experimental Method

The following procedure was used to test the system seen in Fig 6.

• An HP Compaq LE2002x monitor was laid flat on a table.

• The test structure was then placed on top of the monitor screen.

• The ASKA3D-200NT plate was placed onto the test structure.

• A sample group of five people, whose ages and experience with AR and VR technology varied, was selected to evaluate the system.

• Individually, the users were shown two types of mid-air images: static images and dynamic images.

• The static images ranged from colourful images to scenes of sunsets, planets and galaxies, and text.

• The dynamic images ranged from rotating planets and moving gears to internal combustion engine cycles.

• The users were shown both static and dynamic images under two lighting conditions: first with no artificial or natural light present, and then with natural or artificial light present.

• During the test, the users could interact and move however they wanted when viewing the mid-air image.

• Once all the users had undergone the test individually, they were brought together to test the system with multiple users, following the same method used in the individual test.

• The users were then asked to complete a questionnaire about the SAR experience they had received.

4.2 Results

The following data was obtained from the scores given by each user for the four main categories of interest, together with each user's overall score for the system:

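Table 1: Evaluation data of system properties (maximum score in brackets).

| Category [max] | P1 | P2 | P3 | P4 | P5 |
|---|---|---|---|---|---|
| Content Quality [5] | 4 | 4 | 4 | 4 | 4 |
| Hardware Quality [5] | 3 | 4 | 4 | 3 | 3 |
| Environment Understanding [5] | 3 | 3 | 4 | 3 | 2 |
| User Interaction [5] | 1 | 1 | 1 | 1 | 5 |
| Overall system rating [100] | 60 | 80 | 80 | 55 | 72 |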

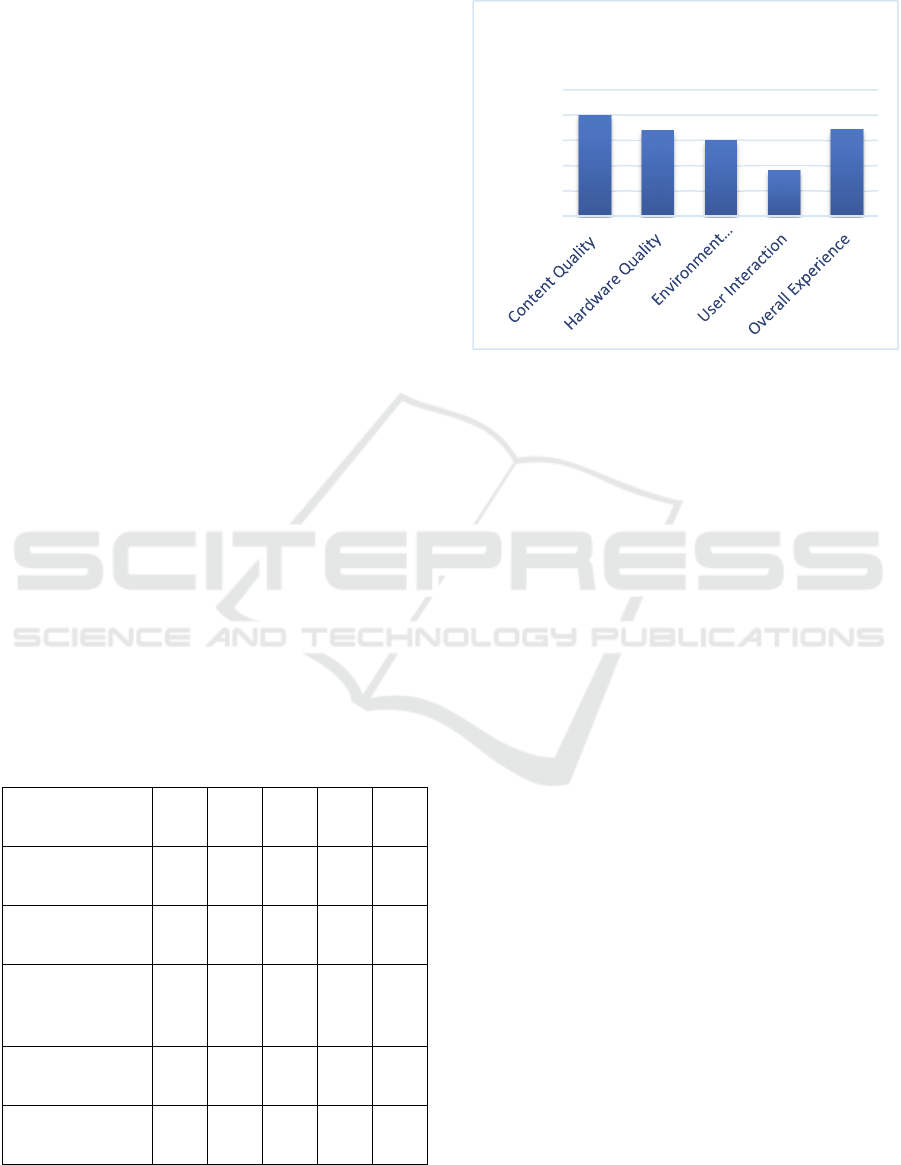

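Figure 7 shows the average percentage rating for each system property.

Figure 7: Bar graph of average percentage ratings (Content Quality 80%, Hardware Quality 68%, Environment Understanding 60%, User Interaction 36%, overall system rating 69.4%).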
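The percentages in Figure 7 are consistent with each category's mean score divided by its maximum score. The sketch below reproduces them from the Table 1 data; the dictionary layout and the function name are illustrative choices, not the authors' analysis code.

```python
# Scores from Table 1 (participants 1 to 5) and the maximum of each scale.
SCORES = {
    "Content Quality": ([4, 4, 4, 4, 4], 5),
    "Hardware Quality": ([3, 4, 4, 3, 3], 5),
    "Environment Understanding": ([3, 3, 4, 3, 2], 5),
    "User Interaction": ([1, 1, 1, 1, 5], 5),
    "Overall system rating": ([60, 80, 80, 55, 72], 100),
}


def average_percentage(scores, max_score):
    """Mean score expressed as a percentage of the scale maximum."""
    return 100 * sum(scores) / (max_score * len(scores))


if __name__ == "__main__":
    for name, (scores, max_score) in SCORES.items():
        print(f"{name}: {average_percentage(scores, max_score):.1f}%")
    # Content Quality: 80.0%
    # Hardware Quality: 68.0%
    # Environment Understanding: 60.0%
    # User Interaction: 36.0%
    # Overall system rating: 69.4%
```

Because the Likert ratings are divided by their maximum, the lowest possible response (1 out of 5) corresponds to 20% rather than 0%, which is worth keeping in mind when reading the User Interaction bar.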

5 DISCUSSION

High-end AR devices such as AR headsets have been the face of AR technology and currently deliver the best possible AR environment for single users. Because of the advanced technology involved in building these headsets, they have a high cost and limited availability, so even though this AR technology exists, it is not accessible to most people. Furthermore, limiting such a technology to one user at a time per device is an issue. Consumers should question whether this limitation is worth the price, coupled with the fact that these systems must be worn on the body, which results in discomfort. Physical discomfort is not the only disadvantage: in some cases users experience eye discomfort due to incorrect focus cues and latency while using these headsets. There is therefore a need for a solution to these problems. If a new AR system could deliver mid-air projection to many users rather than one, without the need to wear a headset and at a price consumers can afford, it may be possible to deliver an AR experience without the shortcomings of AR headsets.

This research presented a solution to the disadvantages of AR headsets in the form of a Collaborative Spatial Augmented Reality System. The goal of this system was to remove the need for users to wear a headset to view AR images. This approach to AR projection is called Spatial Augmented Reality, and by using SAR techniques the proposed system allowed multiple users to view AR images at the same time. The proposed system used an AIP to deliver mid-air images to users; coupled with a Kinect sensor for hand interaction or with AR glove technology, it allowed users to physically influence the AR image seen in mid-air. The system contained a camera that performed head tracking, allowing view-corrected rendering of virtual images.

Only a single concept design is presented in this paper; other designs, which differ in their implementation, were developed but are not covered here.

These other concept designs were intended to be large systems with a large workspace. This type of design was flawed by its redundancy: it used too many components for a system that was meant to be simple yet elegant. Furthermore, these systems relied heavily on the code presented by Hilliges et al. (2012) to allow precise hand interaction with mid-air images. The code was requested from the authors, but no response was received.

The concept design seen in Fig 5 was created as a simpler version of the previous designs that was more flexible in its method of projection, since it allowed both layouts offered by the ASKA3D AIP. The current design iteration (Fig 5) makes allowance for a Kinect sensor and a web camera to be mounted on the system. It was created as a desktop system that does not require a dedicated computer, only a laptop, which would make it highly accessible to everyday consumers wanting to experience AR technology. User interaction with this system does not come through direct hand interaction but through glove interaction: users wearing AR glove technology are able to influence the AR image. CaptoGlove was selected as the AR glove technology for this system. A camera can also be positioned on the system to allow view-dependent rendering.

This research covered a single part of a whole system: the physical hardware required to implement a Collaborative SAR system. The concept design meets the desired goal of the system and will be the design used when building the final system, although it will need to be redesigned after further review before it is complete.

Since the method of projection relied on whether the ASKA3D plate performed mid-air projection as intended, a test structure was created to test the mid-air image projection (Fig 6). The quality of the projected image far exceeded expectations. Since it was possible to deliver a mid-air image, an experiment was created to evaluate the quality of experience granted by the projection technique. The experiment was based on the QoE evaluation framework created by Zhang et al. (2018). It allowed users to view and interact with the mid-air images and to evaluate their experiences through a questionnaire. The answered questionnaires can be found at: https://github.com/Dashlen/Questionnair-results-for-SAR-System/issues/1#issue-564724513.

The data was then tabulated (Table 1), and a graph showing the average percentage rating for each system property was created (Fig 7). User feedback gave an average rating of 80% for Content Quality. This high rating shows that, based on user evaluation, the images were perceived as realistic and did not require intense focus to observe.

The average rating for Hardware Quality, concerning user mobility and comfort, was 68%. Users found the experience both physically and visually comfortable, since no headset was required, and no eye soreness was reported. The moderate rating was due to the limited visual freedom granted by the system. This may be attributed to users leaving the viewing angle of the system, to the size of the ASKA3D plate and to how far the plate is situated from the LCD screen.

The average rating for Environment Understanding was 60%. While the system was able to deliver images that could fit any environment, the projected images could not interact with foreign objects: any interaction with physical objects caused the mid-air image to lose its holographic effect for the users.

User Interaction was given a low average rating of 36%, since the users were unable to control the image they were viewing; as a result, they could only rate the interaction granted by the system as “very bad”. Software was originally designed to let users change the scene of the object they were observing, but the scene was too large to be projected correctly. One of the users concluded that how precisely and how quickly the system responds to user input would depend not on the ASKA3D plate but on the LCD screen being used, specifically its response time and refresh rate. After further consideration, this statement was found to be correct.
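The user's point can be made concrete with a rough, hypothetical estimate. Because the AIP is a passive optical element, the display-side delay is set by the LCD. The sketch below approximates that contribution as one refresh period (waiting for the next frame to be scanned out) plus the panel's pixel response time; it ignores scan-out time as well as input, tracking and rendering latency, and the example figures are assumptions, not measurements of the HP Compaq LE2002x.

```python
def display_delay_ms(refresh_hz: float, response_time_ms: float) -> float:
    """Rough worst-case display-side delay: one full refresh period plus the
    panel's pixel response time (other pipeline stages are ignored)."""
    if refresh_hz <= 0 or response_time_ms < 0:
        raise ValueError("refresh_hz must be > 0 and response_time_ms >= 0")
    frame_period_ms = 1000.0 / refresh_hz
    return frame_period_ms + response_time_ms


if __name__ == "__main__":
    # Hypothetical panels for comparison.
    for hz, resp in ((60.0, 5.0), (144.0, 1.0)):
        print(f"{hz:.0f} Hz, {resp:.0f} ms response: ~{display_delay_ms(hz, resp):.1f} ms")
```

Under these assumptions a faster panel reduces the delay, while changing the plate would not.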

The overall experience rating was 69.4%; this rating was given by the users when considering the entire experience granted by the mid-air image. This suggests that, even though the system was not complete and still had some flaws, users still wanted to interact with it.

At the end of the questionnaire users were able to

comment on what they experienced and leave

recommendations.

Most users felt that the system was very effective

in displaying a digital image in mid-air. One of the

users commented that viewing the image slightly off

centre improved the image quality, this might have

been an error on the user’s part since none of the other

users reported such a thing. Users observed that

objects surrounded by dark backgrounds were more

effective in generating a floating effect and images

with boarders reduced the quality of the floating

effect. It was also discovered that when the image

touches the edge of the viewing area the holographic

qualities were lost. To ensure this does not happen in

future a bigger ASKA3D plate should be used with a

smaller image being displayed. One user felt that they

had to be in a single position to view the image

correctly. This could be addressed by increasing the size of the screen, which would in turn increase the viewing angle of the image. Users observed a wider viewing range as the distance between the user and the mid-air image increased. This was expected, as it is consistent with the equations provided by ASKA3D. One major finding was that a mid-air image could be produced under both natural and artificial lighting. Some users noted that they could identify the mid-air images more easily in natural and artificial light, although the image lost some of its sharpness under either kind of lighting. At the end of the individual user evaluations, all users were asked to observe the mid-air image together to test a hypothesis about multiple-user viewing. The users reported that the image remained visible while five people observed it at the same time. This demonstrated that the proposed system allows multiple users to observe the mid-air image simultaneously.
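The observation that the viewing range widens as users step back can be illustrated with a simplified ray-geometry sketch. This is our own assumption, not the ASKA3D equations mentioned above: every ray that forms a centred image point is taken to have passed through the plate's full aperture of width W, converging on the point at distance L from the plate and then continuing in straight lines. By similar triangles, the lateral zone from which the point stays visible then grows linearly with the viewer's distance D beyond the image, as D·W/L. The model ignores the fact that a real aerial imaging plate only images rays that reflect off both of its mirror layers, which narrows the usable angle further. The function names and the dimensions below are hypothetical illustration values, not measurements from this study.

```python
import math


def viewing_zone_width(plate_width: float, image_distance: float, viewer_distance: float) -> float:
    """Width of the zone, viewer_distance beyond a centred image point,
    from which that point can still be seen.

    Simplified model (assumption): the rays forming the image point fill the
    whole plate aperture of width plate_width, converge on the point at
    image_distance from the plate, then diverge along straight lines.
    All lengths share one unit (e.g. millimetres).
    """
    if plate_width <= 0 or image_distance <= 0 or viewer_distance < 0:
        raise ValueError("lengths must be positive (viewer_distance may be zero)")
    # Similar triangles: the cone's width grows in proportion to the distance
    # from its apex (the image point), so width / distance stays constant.
    return plate_width * viewer_distance / image_distance


def acceptance_half_angle_deg(plate_width: float, image_distance: float) -> float:
    """Half-angle of the ray cone at the image point; fixed by plate geometry."""
    return math.degrees(math.atan((plate_width / 2) / image_distance))


if __name__ == "__main__":
    # Hypothetical dimensions in mm, chosen only for illustration.
    plate, image = 200.0, 150.0
    for d in (300.0, 600.0, 900.0):
        width = viewing_zone_width(plate, image, d)
        print(f"viewer {d:.0f} mm past image -> zone {width:.0f} mm wide")
    print(f"cone half-angle: {acceptance_half_angle_deg(plate, image):.1f} deg")
```

With these hypothetical values the zone is 400 mm wide at 300 mm and 800 mm wide at 600 mm: the cone's half-angle at the image point stays fixed (about 33.7 degrees here), but the region in which a viewer can stand and still see the image grows with distance. Under the same model, a wider plate (larger W) also widens the zone, which agrees with the recommendation to use a larger ASKA3D plate.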

The data obtained from the QoE evaluation helped identify strengths and weaknesses in the current system. This information will guide the creation of the final system, which will be evaluated under the same conditions with the same questionnaire. Thereafter, its results will be compared against the current results.

6 CONCLUSION

The focus of this research was to design the hardware of a collaborative SAR system based on previous AR systems that used beam splitters to obtain their projections. Two such systems were analysed in this paper: HoloDesk and MARIO. Using the information drawn from these systems, the current design iteration was proposed, offering a unique approach to a collaborative system. This system (Fig 5) could deliver mid-air images, view-dependent rendering and physical interaction in a simple and

elegant way. Furthermore, it can be run by connecting

a laptop to the system instead of a desktop computer

with high processing power. The method of projection was evaluated using a Quality of Experience (QoE) framework that allowed users to give feedback on their interaction with the projected images delivered by the test structure (Fig 6). These data were captured and evaluated further.

Performing this evaluation helped identify

weaknesses in the system that will need to be

addressed in the final system design. Currently, the

system has an overall average rating of 69.4% (Fig 7), indicating that users find the system interesting and are inclined to use it.

The most important finding from user testing was not the overall quality experienced by the users but that multiple users could comfortably view the mid-air image at the same time without any viewing issues. In fact, the further back a user stood, the greater the viewing angle granted by the system. This finding will affect how the system can be used, which will be characterised after further testing. The ability to let multiple users view the mid-air image comes from using an ASKA3D aerial imaging plate (AIP) in the system design.

Future work on this system will involve the full software design, creating a scene that allows mid-air mechanical assembly; the final mechanical design iteration; and the development of precise glove or hand interaction. This paper has shown the initial collaborative effect of the final system through its support for multiple-user viewing. The final step is to enable multiple-user interaction so that it becomes a finished collaborative system.

REFERENCES

ASKA3D. (n.d.). ASKA3D. Retrieved 02 11, 2020, from

https://aska3d.com/en/

Aspect, A., & Brune, M. (Directors). (2017). Quantum

Optics - Beam splitter in quantum optics [Motion

Picture]. Georgia.

CaptoGlove. (n.d.). CaptoGlove: Take Control of Your

Technology. (CaptoGlove) Retrieved 02 11, 2020, from

https://www.captoglove.com/

ICINCO 2020 - 17th International Conference on Informatics in Control, Automation and Robotics


Deering, M. F. (1993). Explorations of Display Interfaces

for Virtual Reality. California: IEEE.

Hilliges, O., Kim, D., Izadi, S., Weiss, M., & Wilson, A. D.

(2012, May 5). HoloDesk: Direct 3D Interactions with

a Situated See-Through Display. Morphing & Tracking

& Stacking: 3D Interaction, (pp. 2421-2430). Austin,

Texas. Retrieved October 8, 2019.

IGI Global. (n.d.). What is Spatial Augmented Reality (SAR). (IGI Global) Retrieved May 13, 2019, from https://www.igi-global.com/dictionary/spatial-augmented-reality-sar/56556

Kim, H., Takahashi, I., Yamamoto, H., Maekawa, S., &

Naemura, T. (2014). MARIO: Mid-air Augmented

Reality Interaction with Objects. Entertainment

Computing 5, I(1), 223-241.

Marr, B. (2019, January 14). 5 Important Augmented And Virtual Reality Trends For 2019 Everyone Should Read. Retrieved January 24, 2019, from https://www.forbes.com/sites/bernardmarr/2019/01/14/5-important-augmented-and-virtual-reality-trends-for-2019-everyone-should-read/#432aefcc22e7

Radkowski, R., & Oliver, J. (2014). Enhanced Natural Visual Perception for Augmented Reality-Workstations by Simulation of Perspective. Journal of Display Technology, 10(5), 333-344.

Zhang, L., Dong, H., & Saddik, A. E. (2018). Towards a

QoE Model to Evaluate Holographic Augmented

Reality Devices: A HoloLens Case Study. Ottawa:

ResearchGate.
