From Standardized Assessment to Automatic Formative Assessment for Adaptive Teaching

Alice Barana¹ᵃ, Cecilia Fissore³ᵇ and Marina Marchisio²ᶜ

¹ Department of Mathematics, University of Turin, Via Carlo Alberto 10, 10123 Turin, Italy

² Department of Molecular Biotechnology and Health Sciences, University of Turin, Via Nizza 52, 10126, Turin, Italy

³ Department of Foreign Languages, Literatures and Modern Culture, Via Giuseppe Verdi, 10, 10124 Turin, Italy

Keywords: Automatic Formative Assessment, Automatic Assessment System, Adaptive Teaching, Interactive Feedback, Self-regulation, Teacher Training, Mathematics.

Abstract: The use of technology in education is constantly growing; it can complement school learning and enable adaptive teaching. One of the teaching practices in which technology can play a fundamental role is assessment, whether standardized, summative or formative. An Automatic Assessment System can offer fundamental support to teachers and students and enables adaptive teaching by promoting formative assessment practices, providing effective and interactive feedback, and promoting self-regulated learning. Our university has successfully developed and tested a model for automatic formative assessment and interactive feedback for STEM through the use of an Automatic Assessment System. This article presents a training course for teachers focused on adapting questions designed for standardized assessment into questions for formative assessment, in order to develop mathematical skills, problem solving and preparation for INVALSI (national standardized tests). The teachers created questions with automatic formative assessment, reflecting on how to adapt the requests to the different needs of students and how to create guided learning paths. The activities created by the teachers, their reflections on the training module and on the activity carried out with students are analyzed and discussed.

1 INTRODUCTION

In recent years, educational technology has been constantly evolving and growing; it can be used to complement school learning and to offer adaptive teaching. Thanks to new technological tools, teachers have concrete support in offering all students teaching personalized to their different needs and cognitive styles. Technology can be an additional resource in the classroom and at home, supporting students in the cognitive, educational and training process. Technology can improve learning only if it supports effective teaching strategies, for example when it increases the time dedicated to learning, facilitates cognitive processes, supports collaboration between students, makes it possible to apply different teaching strategies to different groups of students or helps to overcome specific learning difficulties.

ᵃ https://orcid.org/0000-0001-9947-5580

ᵇ https://orcid.org/0000-0003-1007-5404

ᶜ https://orcid.org/0000-0001-8398-265X

One of the teaching practices in which technology can offer fundamental support to teachers and students alike is assessment. For standardized or summative assessment, where the goal is to measure students' learning outcomes, an Automatic Assessment System (AAS) makes it possible to automatically evaluate, collect and analyze student responses, saving time in correcting tests. In Italy, the best-known example of national standardized assessment is the INVALSI tests (https://invalsi-areaprove.cineca.it/) in Mathematics, English and Italian (Bolondi et al., 2018; Cascella et al., 2020). For the past three years, the tests for grades 8, 10 and 13 have been computer-based, while the tests for grades 2 and 5 are still taken on paper.

Barana, A., Fissore, C. and Marchisio, M.
From Standardized Assessment to Automatic Formative Assessment for Adaptive Teaching.
DOI: 10.5220/0009577302850296
In Proceedings of the 12th International Conference on Computer Supported Education (CSEDU 2020) - Volume 1, pages 285-296
ISBN: 978-989-758-417-6
Copyright © 2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

An AAS can also offer fundamental support for formative assessment. Formative assessment is a process in which students are active protagonists and have the opportunity to understand what has or has not been learned and how to learn it. Students can also understand the progress they have made and the difficulties they have in learning. For example, through formative assessment they can be trained in self-assessment to better prepare themselves for the INVALSI tests by receiving feedback on the quality of their work and advice on how to improve.

Our university has successfully developed and

tested a model for automatic formative assessment

through the use of an AAS for STEM and other

disciplines. This article presents a training course for

teachers focused on the adaptation of questions

designed for standardized assessment to questions for

formative assessment with the use of an AAS to

develop mathematical skills, problem solving and

preparation for INVALSI tests. The 8-hour course

was aimed at teachers of lower and upper secondary

schools within the national Problem Posing and

Solving project and was carried out entirely online.

Seventeen teachers attended the course. After 4 weekly synchronous online meetings lasting one hour each, teachers were asked to create at least 3 questions with automatic assessment for a formative test (one for assessing knowledge, one for solving a problem and one for justification) and to try them out with one of their classes. In creating the questions, the teachers reflected on how to create questions that would adapt to the different needs of students and guide them in preparing for the tests.

The teachers were also asked to answer two

questionnaires, one at the end of the preparation of the

questions and one after the experimentation in the

classroom. In the results section, the activities created

by the teachers and their reflections and observations

on the proposed training module and on the activity

carried out in the classroom with students are

analyzed and discussed.

2 THEORETICAL FRAMEWORK

2.1 Adaptive Teaching, Formative Assessment and Feedback

Every teacher, in their class, deals with a great variety

of students. For example, there may be multicultural

classes, students may have different learning styles,

individual attitudes and inclinations or learning

disabilities. However, the learning objectives are

common to all students. In order to ensure that all students achieve the same objectives, teaching can be tailored to each student's characteristics, needs and sometimes even curiosities. Adaptive

teaching is defined as "applying different teaching

strategies to different groups of students so that the

natural diversity prevalent in the classroom does not

prevent each student from achieving success"

(Borich, 2011).

Some of the strategies for adaptive teaching are

formative assessment, feedback and self-regulated

learning.

The definition of formative assessment that we

adopt is that of Black and Wiliam (2009), well known

in the literature: “Practice in a classroom is formative

to the extent that evidence about student achievement

is elicited, interpreted, and used by teachers, learners,

or their peers, to make decisions about the next steps

in instruction that are likely to be better, or better

founded, than the decisions they would have taken in

the absence of the evidence that was elicited”. The

authors conceptualize formative assessment through

the following five key strategies:

▪ Clarifying and sharing learning intentions and

criteria for success;

▪ Engineering effective classroom discussions

and other learning tasks that elicit evidence of

student understanding;

▪ Providing feedback that moves learners

forward;

▪ Activating students as instructional resources;

▪ Activating students as the owners of their own

learning.

Sadler conceptualizes formative assessment as the

way learners use information from judgments about

their work to improve their competence (Sadler,

1989). Formative assessment is opposed to

summative assessment (assessment where the focus

is on the outcome of a program) because it is an

ongoing process that should motivate students to

advance in the learning process and provide feedback

to move forward. In Mathematics education, summative assessment design is generally influenced by the psychometric tradition, which requires that test items satisfy the following principles (Osterlind, 1998):

▪ Unidimensionality: each item should be strictly

linked to one trait or ability to be measured;

▪ Local independence: the response of an item

should be independent from the answer to any

other item;

▪ Item characteristic curve: low-ability students should have a low probability of answering an item correctly;

▪ Non-ambiguity: the question should be written in such a way that students are led to the only correct answer.

Questions built according to this model are generally limited in the Mathematics that they can assess. The possible problems are reduced to those with a single solution, deducible from the data provided in the question text. If problems admit multiple solving strategies, the only information detected is the solution given by students, thus removing the focus from the process, which is essential for assessing understanding in Mathematics (Van den Heuvel-Panhuizen & Becker, 2003). Without information on the reasoning carried out by students, the solving strategies they adopted and the registers of representation they used, a wrong answer does not make it possible to understand the type of error made or to provide appropriate feedback.

In adaptive teaching, feedback takes on a very

important role to reduce the discrepancy between

current and desired understanding (Hattie &

Timperley, 2007). Effective feedback must answer

three main questions: “Where am I going?”, “How am

I going?”, “Where to next?”. That is, it should

indicate what the learning goals are, what progress is

being made toward the goal and what activities need

to be undertaken to make better progress. Feedback can work at four levels: task level (giving information about how well the task has been accomplished); process level (showing the main process needed to perform the task); self-regulation level (activating metacognitive processes); self level (adding personal assessments and affect about the learner).

Self-regulated learning is a cyclical process in which students plan for a task, monitor their performance, and then reflect on the outcome. According to Pintrich and Zusho (2007), “self-regulated learning is an active constructive process whereby learners set goals for their learning and monitor, regulate, and control their cognition, motivation, and behaviour, guided and constrained by their goals and the contextual features of the environment”. In self-regulated learning, students become masters of their own learning processes.

Technologies can help offer increasingly adaptive

teaching: to activate effective strategies for formative

assessment, to give feedback that differs according to

level and to students' responses and to activate self-

regulated learning (Kearns, 2012).

An Adaptive Educational System (AES) uses data

about students, learning processes, and learning

products to provide an efficient, effective, and

customized learning experience for students. The

system achieves this by dynamically adapting

instruction, learning content, and activities to suit

students' individual abilities or preferences.

Our model of automatic formative assessment makes it possible to offer adaptive teaching (Barana, Marchisio, & Sacchet, 2019; Marchisio et al., 2018), to assign different activities to students according to their level and to promote engagement in Mathematics at school level (Barana, Marchisio, & Rabellino, 2019). The AAS also automatically collects and analyzes all students' answers, the results of the tests and the data on their execution (start, end, duration, number of attempts, etc.). In this way, the model provides students with high-quality information about their learning and, at the same time, provides teachers with information that can be used to adapt teaching.
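As a minimal sketch of the kind of execution data mentioned above (start, end, duration, number of attempts), the following Python fragment shows how such records could be summarized for a teacher. The record structure, the field names and the 0.5 threshold are assumptions made here for illustration; they do not describe the data model of the AAS.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class AttemptRecord:
    """One student's work on one assignment (hypothetical structure)."""
    student: str
    start: datetime
    end: datetime
    attempts: int   # number of attempts used
    score: float    # fraction of the assignment answered correctly, from 0 to 1

    @property
    def duration_minutes(self) -> float:
        return (self.end - self.start).total_seconds() / 60


def class_summary(records):
    """Aggregate the records into figures a teacher could use to adapt teaching."""
    return {
        "mean_score": mean(r.score for r in records),
        "mean_duration_minutes": mean(r.duration_minutes for r in records),
        "mean_attempts": mean(r.attempts for r in records),
        # Students below an arbitrary threshold may need a different activity.
        "needs_support": [r.student for r in records if r.score < 0.5],
    }


records = [
    AttemptRecord("student_1", datetime(2020, 1, 10, 9, 0), datetime(2020, 1, 10, 9, 20), 1, 1.0),
    AttemptRecord("student_2", datetime(2020, 1, 10, 9, 0), datetime(2020, 1, 10, 9, 40), 3, 0.25),
]
summary = class_summary(records)
```

A summary of this kind is one possible way to decide which students could be assigned a different activity according to their level.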

2.2 Our Model of Formative Automatic Assessment and Interactive Feedback

In a Virtual Learning Environment (VLE), formative

assessment can be easily automatized in order to

provide students immediate and personalized

feedback. Using Moebius AAS

(https://www.digitaled.com/products/assessment/),

our research group has designed a model for the

formative automatic assessment for STEM, based on

the following principles (Barana, Conte, et al., 2018):

▪ Availability of the assignments to students who

can work at their own pace;

▪ Algorithm-based questions and answers, so

that at every attempt students are expected to

repeat solving processes on different values;

▪ Open-ended answers, going beyond the

multiple-choice modality;

▪ Immediate feedback, returned to students at a

moment that is useful to identify and correct

mistakes;

▪ Contextualization of problems in the real

world, to make tasks relevant to students;

▪ Interactive feedback, which appears when students give the wrong answer to a problem. It has the form of a step-by-step guided resolution which interactively shows a possible process for solving the task.

This model relies on other models of online

assessment and feedback developed in literature, such

as Nicol and Macfarlane‐Dick’s principles for the

development of self-regulated learning (Nicol &

Macfarlane-Dick, 2006) and Hattie’s model of

feedback to enhance learning (Hattie & Timperley,

2007). The model was initially developed to improve

the learning of STEM disciplines but in recent years

we have also experimented with the model for

language learning (Barana, Floris, Marchisio, et al.,

2019; Marello et al., 2019).
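The interplay between immediate and interactive feedback described in this section can be illustrated with a small Python sketch: a question grants a limited number of attempts and, once they are exhausted without a correct answer, opens a step-by-step guided path. The class name, the feedback strings and the guided steps are hypothetical and are not taken from the AAS.

```python
from dataclasses import dataclass, field


@dataclass
class GuidedQuestion:
    """A question with limited attempts and a guided path shown after failure."""
    correct_answer: float
    max_attempts: int = 2
    guided_steps: list = field(default_factory=list)  # prompts shown after failure
    attempts_used: int = 0
    solved: bool = False

    def verify(self, answer: float, tolerance: float = 1e-9) -> str:
        """Check one answer and return the feedback the student would see."""
        if self.solved:
            return "already solved"
        if self.attempts_used >= self.max_attempts:
            return "no attempts left"
        self.attempts_used += 1
        if abs(answer - self.correct_answer) <= tolerance:
            self.solved = True
            return "correct"
        if self.attempts_used < self.max_attempts:
            return "wrong, try again"
        # Attempts exhausted: open the step-by-step guided resolution.
        return "wrong, guided path: " + " -> ".join(self.guided_steps)


q = GuidedQuestion(
    correct_answer=12.0,
    guided_steps=["identify the data", "set up the formula", "compute the result"],
)
first = q.verify(10.0)
second = q.verify(11.0)
```

Here the first wrong answer only invites a new attempt, while the second one triggers the guided path, so the feedback arrives while the student is still working on the task.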

2.3 Adaptive Teaching in the National PP&S Project

The model developed for automatic formative assessment is also used within the Italian Problem Posing and Solving (PP&S) Project of the Ministry of Education (Barana, Brancaccio, et al., 2018; Brancaccio et al., 2015). The PP&S Project promotes the training of Italian teachers of lower and upper secondary schools in innovative teaching methods and the creation of a culture of Problem Posing and Solving with the use of ICT. The PP&S Project adopts the following technologies as essential tools for professional growth and for the improvement of teaching and learning: a VLE (a Moodle learning platform available at www.progettopps.it) integrated with an Advanced Computing Environment (ACE), an AAS and a web conference system. The tools used within the PP&S Project support adaptive teaching:

▪ The VLE allows synchronous and asynchronous discussions, collaborative learning, interactivity and interaction, integration with tools for computing and assessment, and activity tracking;

▪ The ACE allows interactive exploration of

possible solutions to a problem, different ways

of representation and feedback from automatic

calculations and interactive explorations;

▪ The AAS allows students to carry out the

necessary exercises independently, to have

step-by-step guided solutions to learn a method

and to make repeated attempts of the same

exercise with different parameters and values.

The AAS promotes students’ autonomy and

awareness of their skills and facilitates class

management for teachers.

The PP&S Project offers various training activities that allow teachers to rethink their teaching by using adaptive teaching with technologies: face-to-face training, online training modules, weekly online tutoring, online asynchronous collaboration and collaborative learning within a learning community. In this case, we proposed an 8-hour online training module entitled "Automatic formative assessment for preparation for INVALSI tests", to reason and reflect on the adaptation of questions designed for standardized assessment into questions for formative assessment and to create activities with automatic assessment for developing skills and preparing for INVALSI tests. In the training module, INVALSI test materials, which were created to measure students' skills, were proposed from a formative assessment perspective, with the aim of developing those skills. The INVALSI question repository contains many valid and interesting questions that can be used in everyday classroom teaching.

3 FROM STANDARDIZED ASSESSMENT TO FORMATIVE ASSESSMENT

Standardized assessment and formative assessment have very different characteristics. In standardized assessment, each item responds to a single goal, the answer to each item is independent of the answers to the other items and each question has only one possible correct answer. In formative assessment, problems can have different solutions, answers to items can depend on each other and the solution process should be considered more important than the answer to the question (Barana et al., in press). The following example shows how to transform a question for standardized assessment into a question for formative assessment through the use of the automatic assessment system.

The question is of the area "relations and

functions" in the dimension of "knowing" and is made

for grade 13 students. The purpose of the question is

to identify the graph of a piecewise function verbally

described in a real context. The text of the question

is: "A city offers a daily car rental service that

provides a fixed cost of 20 euros, a cost of 0.65 euros

per km for the first 100 km and a cost of 0.4 euros per

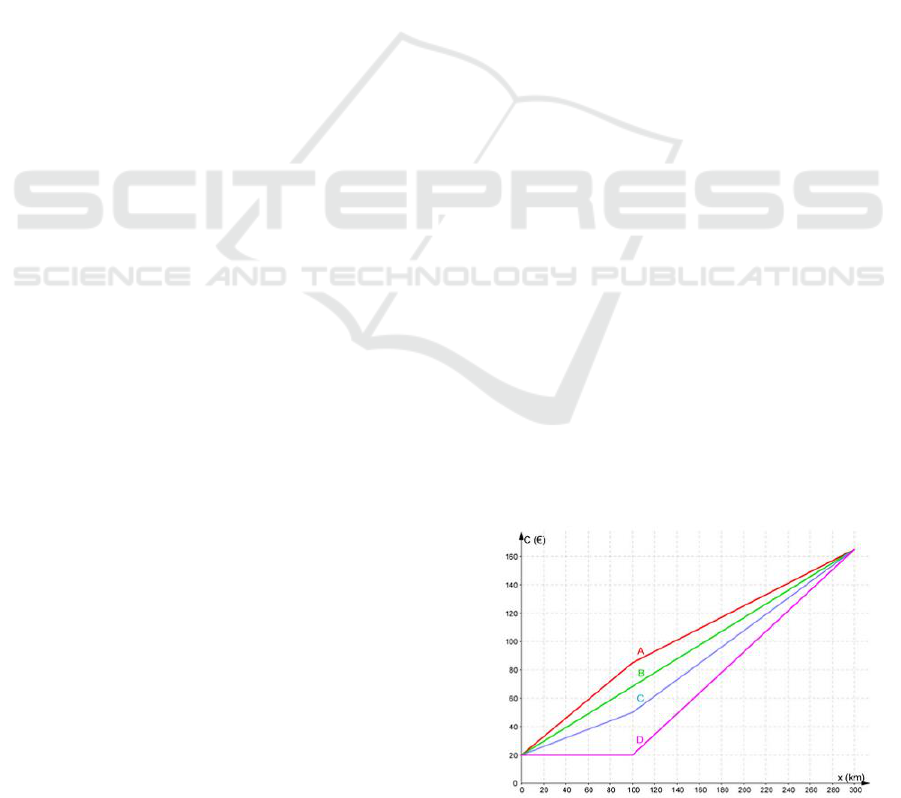

km over the first 100 km ". Given a figure (Fig. 1)

with the graphs of four car rental contracts, the

student must select the graph corresponding to the

proposed offer in a multiple choice task.

Figure 1: Graphs of four possible car rental contracts.

For formative assessment, this question can be expanded into three sub-questions in order of difficulty, keeping the same problem text but making its values algorithmic. In this way, the student can practice answering several times, always with new data. In the first part, students are asked how much they would spend on a 10 km journey. The student (as shown in Fig. 2) can enter the numerical value and, by clicking the "verify" button, has two attempts to see if the answer is correct.

Figure 2: Response area to the first sub-question with

"verify" button.

In the second part, the student is asked to choose the correct graph among the four graphs proposed (as in the previous question in Fig. 1), with the advantage that each time the problem data change, the four graphs also change automatically. In the last part of the question, the student must enter the expression of the function that expresses how the taxi fare varies according to the minutes t (Fig. 3).

Figure 3: Response area to the last sub-question with

"verify" button.

The question proposes three sub-questions of

increasing level with three different types of

representation of the same mathematical concept

(graphic, numerical and symbolic). Students can test

themselves by having immediate feedback.

Immediate feedback, shown while the student is

focused on the activity, facilitates the development of

self-assessment and helps students stay focused on

the task. In addition, the interactive feedback with

multiple attempts available encourages students to

test themselves and immediately rethink the

reasoning and correct themselves.
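The car rental example can be sketched in Python to show what "algorithmic" means in practice: the same piecewise cost function is evaluated on problem data that change at every attempt. The sketch assumes that the lower rate applies only to the kilometres beyond the first 100; the function names, the ranges of the random values and the one-cent tolerance are illustrative assumptions, not settings taken from the AAS.

```python
import random


def rental_cost(km, fixed=20.0, rate_first=0.65, rate_after=0.40, threshold=100.0):
    """Daily rental cost in euros for a trip of `km` kilometres."""
    if km <= threshold:
        return fixed + rate_first * km
    # Beyond the threshold, only the extra kilometres use the lower rate.
    return fixed + rate_first * threshold + rate_after * (km - threshold)


def generate_variant(rng):
    """Draw new problem data; the ranges are illustrative assumptions."""
    return {
        "fixed": float(rng.randrange(10, 31, 5)),  # 10, 15, ..., 30 euros
        "rate_first": rng.choice([0.50, 0.55, 0.60, 0.65, 0.70]),
        "rate_after": rng.choice([0.30, 0.35, 0.40, 0.45]),
        "threshold": 100.0,
    }


def check_answer(student_value, km, params, tolerance=0.01):
    """Grade the numerical sub-question to within one cent."""
    return abs(student_value - rental_cost(km, **params)) <= tolerance


# Data of the original question: a 10 km trip and a 150 km trip.
cost_10 = rental_cost(10)
cost_150 = rental_cost(150)

# A new variant of the same problem, as generated for a further attempt.
variant = generate_variant(random.Random(0))
```

With the data of the original question, a 10 km trip costs 20 + 0.65 · 10 = 26.50 euros, and a 150 km trip costs 20 + 0.65 · 100 + 0.4 · 50 = 105 euros. The same `rental_cost` function could also be used to plot the correct graph for each variant in the second sub-question.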

4 METHODOLOGIES

The training module set out to analyze the characteristics of the INVALSI math tests in order to create activities with automatic formative assessment for developing mathematical skills. The three dimensions of mathematical competence evaluated by the INVALSI tests (knowing, solving problems and justifying) were analysed through the creation of examples of questions for grades 8, 10 and 13. The course had no prerequisites and was open to all teachers of the PP&S Community, both those who already had experience with the automatic assessment system and those who had never used it. The course lasted 4 weeks and included 4 one-hour synchronous online meetings, carried out through a web conference service integrated with the platform of the Project. In the following months, the teachers were asked to create 3 questions with automatic assessment: one designed to assess knowledge, one for solving a problem and one for justification. In particular, the teachers could choose a question with standardized assessment and turn it into a question with formative assessment, reflecting on how the transformation was carried out. After that, the teachers tried out the questions with students in one of their classes and shared the questions with the PP&S Teacher Community. The video recordings of the online meetings were also made available to all the teachers of the Community.

To understand how the training module was received and how the teachers handled the transition from standardized to formative assessment, we analyzed the questions created by the teachers and their answers to the two questionnaires. The teachers were asked to answer the initial questionnaire at the end of the four synchronous online training meetings. In this questionnaire, the teachers were asked whether they had already used the automatic assessment system, whether they liked different aspects of the training course and the proposed methodologies, and which aspects, in their opinion, are favored by the use of automatic formative assessment with students. The teachers also had to explain in detail the questions created for formative assessment and the class of students chosen for testing the activities created.

For each question, the teachers had to indicate:

▪ The dimension of the question (knowing,

solving problems or justifying);

▪ The title of the question in the AAS;

▪ The main goal of the question;

▪ The topic of the question;

▪ The material from which they took inspiration

for the creation of the question (from an

INVALSI question, from a textbook, from the

internet, from none of these because they

invented the question);

▪ The strategies adopted to adapt the question to

the automatic formative assessment.

After having the students carry out the activities they created, the teachers had to answer a final questionnaire. In this questionnaire, the teachers were asked to explain the activity carried out with the students. In particular, they had to describe:

▪ Where the activity was carried out by students (in the classroom, in the computer lab of the school or at home);

▪ Which students were involved (all students or only some of them);

▪ Students' appreciation of the activity;

▪ Difficulties reported by the students;

▪ Changes made to the activities after the

experimentation;

▪ The aspects favored by the use of the automatic

formative assessment with students.

To carry out the analysis, a group of experts (the researchers who conducted the analysis) examined all 51 questions and classified them according to the characteristics described by the teachers (dimension, main topic, main objective, etc.) and according to the completeness of the question, the number of sub-questions for adaptivity and the type of register required of students. For each category and sub-category, the various parts of each question and the types of response areas chosen by the teachers were discussed, in order to reason about the possible difficulties that the teachers had (both in the transition from a question for standardized assessment to a question for formative assessment and in the use of the automatic assessment system). For each dimension, the strategies used by the teachers to adapt the question to formative assessment were collected and analyzed.

Then, for each dimension, the question most representative of all those created by the teachers was chosen as an example.

5 RESULTS

5.1 General Overview of the Participants in the Module and the Activities Carried Out

Seventeen teachers from the PP&S Community, one from lower secondary school and all the others from upper secondary school, took an active part in the course. 70% of the teachers had already taken a course on the use of an automatic assessment system and 65% had already used one with a class or group of students. The types of institute in which the teachers teach were very varied: Linguistic High School, Industrial Technical Institute, Scientific High School, lower secondary school, Technological-Electro-technical Institute, Classical High School. The subjects taught by the teachers are: mathematics (59%), mathematics and physics (24%), mathematics and computer science (12%) and mathematics and sciences (6%).

The teachers created three questions each, one for each dimension, for a total of 51 questions. They used several sources to choose the standardized assessment question to be transformed into the formative assessment question: an INVALSI question (37%), an exercise/problem found in a textbook (37%), an exercise/problem found on the internet (6%), an exercise/problem found in the Maple TA repository of the PP&S (10%). In the remaining 10% of cases, the teachers created a new question themselves.

Regarding the dimension of "knowing", the strategies they used to adapt the question to formative assessment were:

▪ Inserting, after a first closed-ended question, an adaptive section in which, in the event of an incorrect answer, the student is guided through the resolution procedure;

▪ Creating an algorithmic question so that students can attempt it several times with different data;

▪ Inserting final feedback that allows students who have made mistakes to understand them and to correct themselves;

▪ Making an algorithmic multiple choice question to bring out the most common misconceptions about a topic;

▪ Inserting multiple response areas to assess understanding of different aspects.

In the dimension of "solving problems" the

strategies that the teachers used to adapt the question

to the formative assessment were:

▪ Asking questions with different registers

(tracing a graph, filling in a table and inserting

a formula);

▪ Contextualizing the problem in a real situation;

▪ Making the question algorithmic;

▪ Setting up computations to recognize the

correct solution process in a multiple choice

question and adding a guided path in case of

errors;

▪ Guiding the student in the resolution in the

event that students do not immediately respond

correctly;

▪ Using an adaptive question to help students

who cannot solve the problem by guiding them

step by step to the final solution.

In the "justifying" dimension, the strategies that

teachers used to adapt the question to the formative

assessment were:

▪ Inserting, after a closed-ended question, a fill-in-the-gaps question in which the missing words are chosen from a list;

▪ Giving students the opportunity to rephrase their justification after seeing the correct answer;

▪ Developing a theoretical argument step by step;

▪ Building a proof through a sequence of multiple choice questions;

▪ Adding to an open answer the possibility to draw the graph of the solution.

We asked the teachers how useful the various

tools proposed were for the creation of the questions.

Table 1 shows the responses of the teachers on a scale

from 1="not at all" to 5="very much".

Table 1: Tools used by teachers for creating questions.

| Tool | Rating (1-5) |
|---|---|
| Explanations followed during online meetings | 4.8 |
| Notes taken during online meetings | 4.5 |
| Videos of online tutoring | 4.6 |
| The material available on the platform on the use of Maple TA | 3.9 |
| INVALSI site | 3.9 |
| INVALSI tests archive (https://www.gestinv.it/) | 3.6 |
| Other materials found on the internet | 3.0 |
| Tutor support via forums | 3.8 |
| Support from other teachers via forums | 2.9 |

5.2 Examples of Questions Created by Teachers

5.2.1 Dimension of Knowing

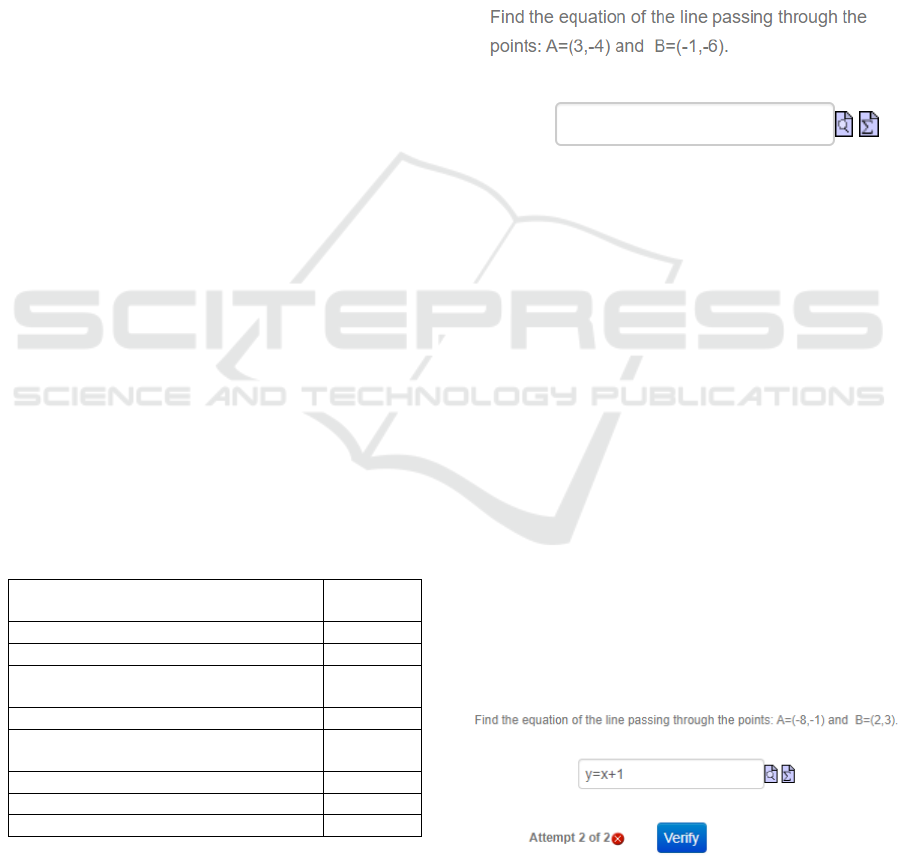

In the following example, the adaptation of a question designed for standardized assessment into a question for formative assessment is carried out in several ways. For the creation of the question, the teacher took inspiration from an exercise/problem found in the Maple TA repository of the PP&S. In the starting question (Fig. 4), students were simply asked to enter the equation of a line.

Figure 4: Example of question for standardized evaluation

of the dimension "Knowing".

The student can find the equation of the line in

many different ways and using different

representation registers (formulas, graphs, tables,

etc.). At the end of the reasoning, in this type of

question, students insert only the final equation they

have found. In this case, an incorrect answer does not

provide the teacher with precise information on the

nature of the student's difficulty. At the same time, the

simple "wrong answer" feedback does not give

students information about their mistake and how to

overcome it.

In the question created by the teacher, the request is divided into two sub-questions. In the first part (Fig. 5), the student must enter the equation of the straight line passing through two given points, with two attempts available. By clicking on the "verify" button, the student can find out whether the answer entered is right or wrong. In case of a wrong answer, the student can think about the question again and try to give a new answer.

Figure 5: First part of the example question of the

dimension "Knowing".

If the answer is correct, the question ends. In the case of an incorrect answer, a two-step guided path is proposed to students to review the necessary theory and correctly answer the question (Fig. 6). Next to the response area, students also have a button to preview the answer entered and an equation editor to enter it.

Figure 6: Second part of the example question of the

dimension "Knowing".

Finally, the question has been made algorithmic by randomly varying the coordinates of the given points. In this way, the student can try to answer several times, and each time the points and the straight line passing through them will be different. The student thus automatically has many exercises with interactive and immediate feedback to practice on.
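A possible way to generate and grade such an algorithmic question is sketched below in Python. Two points with integer coordinates are drawn at random, and the expected line is computed with exact fractions so that no rounding affects the grading. The function names and the coordinate range are hypothetical; the sketch also assumes, for simplicity, that the student enters slope and intercept separately, whereas the question described above uses an equation editor.

```python
import random
from fractions import Fraction


def random_points(rng, low=-5, high=5):
    """Draw two points with integer coordinates and different abscissas."""
    x1, x2 = rng.sample(range(low, high + 1), 2)  # distinct, so the line is not vertical
    y1, y2 = rng.randint(low, high), rng.randint(low, high)
    return (x1, y1), (x2, y2)


def line_through(p, q):
    """Return slope m and intercept c of y = m*x + c as exact fractions."""
    (x1, y1), (x2, y2) = p, q
    m = Fraction(y2 - y1, x2 - x1)
    c = y1 - m * x1
    return m, c


def is_correct(student_m, student_c, p, q):
    """Accept any answer equal to the expected slope and intercept."""
    m, c = line_through(p, q)
    return Fraction(student_m) == m and Fraction(student_c) == c


# Fixed example: the line through (1, 2) and (3, 6).
p, q = (1, 2), (3, 6)
m, c = line_through(p, q)

# A fresh variant, as a student would see on a new attempt.
new_p, new_q = random_points(random.Random(1))
```

Because `Fraction` also accepts strings such as "1/2", an answer typed as a fraction can be compared exactly with the expected value.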

5.2.2 Dimension of Solving Problems

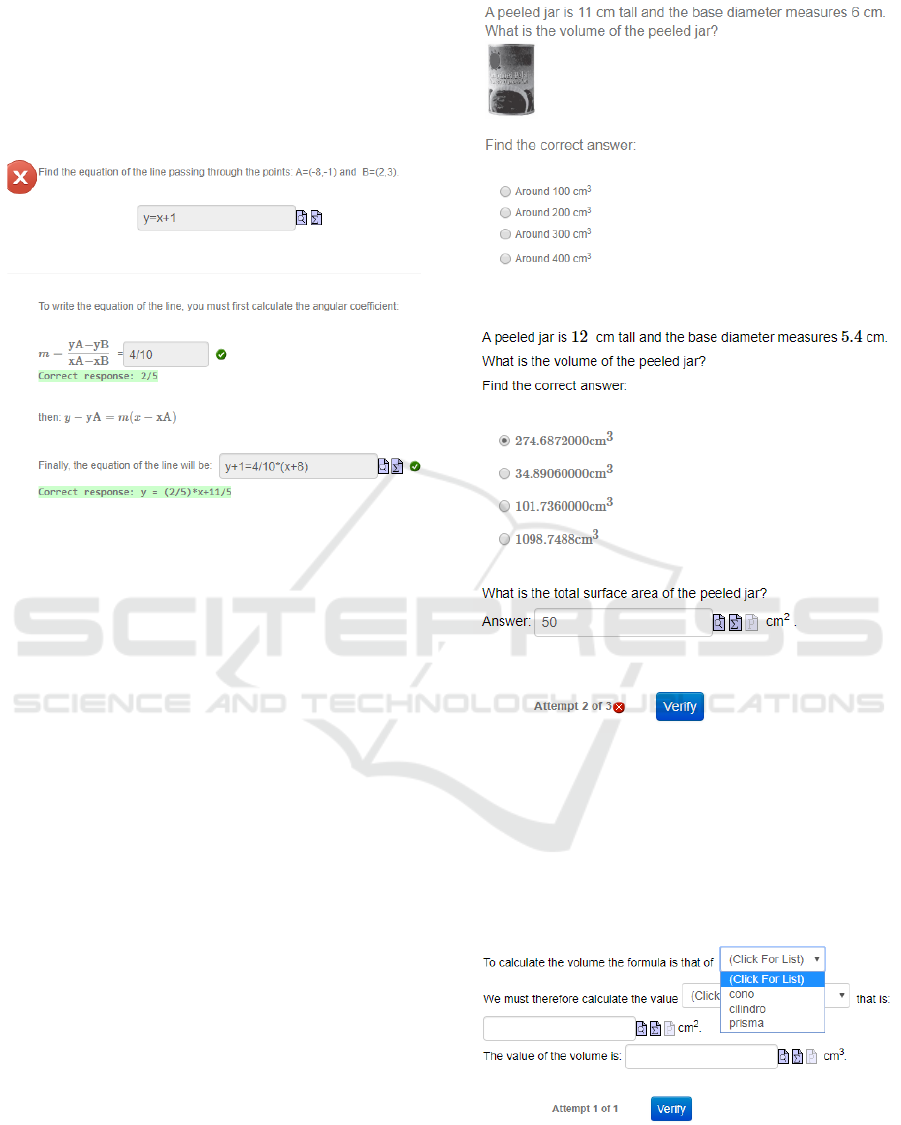

The following question proposes a contextualized

problem of solid geometry. For the creation of the

question, the teacher took inspiration from an

INVALSI question, shown in Figure 7. The main

objective of the question was to calculate the area and

volume of the most common solid figures and to give

estimates of objects of daily life.

Compared to the starting question, the teacher added the total surface area of the peeled jar as a second request and gave students three attempts to answer (Fig. 8). The goal of the question has become to calculate the volume and the total surface area of a cylinder. The strategy adopted to adapt the question to automatic formative assessment was to make the question algorithmic and add the request to calculate the total surface area. The question was created to guide the student in the resolution.

Figure 7: INVALSI question of solid geometry.

Figure 8: First part of the example question of the

dimension "Solving problems".

After this first part, regardless of the correctness

of the answer entered by the student, the student is

guided in solving the problem (Fig. 9), through the

use of different response areas (numerical value,

drop-down menu or multiple choice).

Figure 9: Second part of the example question of the

dimension "Solving problems".

The student can reflect on the correct answer and

review the theoretical contents. Each phase of the

procedure is characterized by immediate and

interactive feedback so that the student remains

focused on the task and is more motivated to move

forward. This type of question helps clarify to

students what good performance is and offers

opportunities to bridge the gap between current and

desired performance. The question is algorithmic so

students can try to answer several times and each time

the data of the problem are different. In this way,

students can practice several times and consolidate a

resolution strategy.
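The two quantities requested in this question follow from the standard formulas for a cylinder of radius r and height h: volume V = πr²h and total surface area S = 2πr(r + h). The short Python sketch below shows one way such a question could vary its data and accept numerical answers; the value ranges, the 1% tolerance, and all function names are assumptions for illustration, not details taken from the teacher's question.

```python
import math
import random


def make_cylinder(rng):
    """Draw a radius and a height (hypothetical ranges, arbitrary length unit)."""
    return rng.randint(3, 6), rng.randint(8, 15)


def cylinder_volume(r, h):
    """V = pi * r^2 * h"""
    return math.pi * r ** 2 * h


def cylinder_total_surface(r, h):
    """S = lateral surface + two bases = 2*pi*r*h + 2*pi*r^2 = 2*pi*r*(r + h)"""
    return 2 * math.pi * r * (r + h)


def is_close_enough(student_value, expected, rel_tol=0.01):
    """Accept answers within a relative tolerance (1% is an assumed choice)."""
    return math.isclose(student_value, expected, rel_tol=rel_tol)
```

A tolerance is used because students typically round π or intermediate results; a stricter or looser threshold is a design decision for the question author.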

5.2.3 Dimension of Justifying

The following question proposes an exercise concerning the tessellation of a floor with tiles of different shapes, represented by regular polygons. To create the question, the teacher took inspiration from an exercise/problem found in a textbook and used an adaptive question to lead students to the correct answer by following relevant steps.

In the first part of the question, students must

identify which is the regular polygon with the

smallest number of sides that cannot be used for

tessellation and enter the correct name in the text box.

Then they must follow a guided path to justify the

correct answer (Fig. 10).

The path for a guided demonstration is divided into three parts, in which the student must deal with different types of requests and different response areas (multiple choice, numerical values, choice from a list). Students have only one attempt available for each answer. The same path is shown to students whether they answer correctly or incorrectly. However, students who answer incorrectly are shown the correct answer through interactive feedback. In this way, students can reconsider their reasoning and reason correctly on the next request. This type of question can be very useful to train students to justify an answer by proposing a possible method. Finally, it can be very useful to alternate this type of question with open-ended questions where the student is asked to justify an answer and reason freely.
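The paper does not spell out the steps of the guided path, but the underlying argument can be checked numerically. Assuming the floor is tiled edge to edge with copies of a single regular polygon, the interior angle 180(n − 2)/n degrees must divide 360 exactly, so that the tiles meeting at a vertex leave no gap. The following Python sketch, with hypothetical function names, applies that test:

```python
from fractions import Fraction


def interior_angle(n):
    """Interior angle of a regular n-gon, in degrees, as an exact fraction."""
    return Fraction(180 * (n - 2), n)


def tiles_plane(n):
    """True if copies of one regular n-gon fit exactly around a vertex."""
    return (Fraction(360) / interior_angle(n)).denominator == 1


def smallest_non_tiling(max_sides=12):
    """Smallest n >= 3 whose regular n-gon fails the angle test, or None."""
    for n in range(3, max_sides + 1):
        if not tiles_plane(n):
            return n
    return None
```

Under this assumption, the test passes for the triangle (60°), the square (90°) and the hexagon (120°), and fails first for the pentagon, whose 108° angle does not divide 360.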

5.3 Observations on Experimentation

with Students

The teachers' responses to the questionnaire administered at the end of the experimentation with students show that students carried out the activity mainly in the school computer lab (47%), then at home (35%), and finally in class with mobile devices (18%). In almost all cases (94%), the activity was carried out individually by students, not in groups, and was mandatory for all students.

Figure 10: Example question of the dimension "Justifying".

The activity with students gave the teachers the opportunity to receive very useful feedback and to reflect on the prepared activities. Half of the teachers said that, at the end of the experimentation with students, they would modify the proposed questions by: modifying the text of the question; adding explanations on how to answer; making the questions more accessible by proposing exercises for level groups (basic, intermediate and advanced); and changing the mode of administration from independent work at home to a compulsory classroom activity.

In some cases, the teachers said that students had some difficulty in carrying out the activity. The difficulties were of different types. Some students encountered technical difficulties in using the technology or the automatic assessment system (because they were using it for the first time or because they did not read the instructions correctly), while others had difficulty interpreting the requests correctly.

5.4 Teachers' Observations on the

Training Module

The teachers liked the contents and methodologies proposed within the training module very much. Table 2 shows the teachers' evaluations of different aspects of the module, on a scale from 1 ("not at all") to 5 ("very much").

Table 2: Satisfaction of teachers on the training module.

| Aspect | Rating (1 to 5) |
|---|---|
| Clarity of explanations | 4.9 |
| Adequacy of the themes | 4.8 |
| Completeness of the justifications | 4.6 |
| Method of conducting the course | 4.8 |
| Opportunity to interact with the tutor | 4.9 |
| Usefulness of the materials left available on the platform | 4.9 |

88% of the teachers said they were satisfied with

the training module and believed that it offered

interesting ideas for educational activities. All

teachers would recommend the attendance of this

training module to a colleague.

Teachers were asked to what extent they believe that the use of automatic formative assessment with their class can benefit students in the different aspects reported in Table 3. The teachers had to answer with a value on a scale of 1 to 5, where 1 = "not at all" and 5 = "very much".

Table 3: Aspects promoted by the use of automatic formative assessment for students.

| Aspect | Rating (1 to 5) |
|---|---|
| Review knowledge | 4.6 |
| Understand the contents | 4.2 |
| Develop problem solving strategies | 4.4 |
| Develop autonomy in problem solving | 4.4 |
| Develop justificatory skills | 4.1 |
| Opportunity to practice for tests | 4.6 |
| Possibility to practice for the INVALSI tests | 4.7 |
| Facilitate study autonomy | 4.2 |
| Promote metacognitive reflection | 3.9 |
| Promote student involvement in learning | 4.1 |
| Increase students' awareness of their abilities | 4.2 |
| Understanding mistakes | 4.2 |
| Increase motivation for the subject | 3.8 |
| Inclusion of students with BES / DSA | 4.1 |
| Student empowerment | 4.1 |
| Personalization of educational activities | 4.0 |

The values shown in Table 3 are all at least 3.8. This suggests that teachers believe that the use of automatic formative assessment can be an effective methodology for students, benefiting multiple aspects. According to the teachers, the aspects most promoted by the use of automatic formative assessment for students include the possibility of developing problem solving skills, the possibility of reviewing the theoretical contents, and the possibility of practicing for math tests and for the INVALSI tests.
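The summary of Table 3 can be reproduced with a few lines of Python. The ratings below are copied from the table; the variable names are only illustrative.

```python
# Ratings copied from Table 3 (scale 1 to 5)
ratings = {
    "Review knowledge": 4.6,
    "Understand the contents": 4.2,
    "Develop problem solving strategies": 4.4,
    "Develop autonomy in problem solving": 4.4,
    "Develop justificatory skills": 4.1,
    "Opportunity to practice for tests": 4.6,
    "Possibility to practice for the INVALSI tests": 4.7,
    "Facilitate study autonomy": 4.2,
    "Promote metacognitive reflection": 3.9,
    "Promote student involvement in learning": 4.1,
    "Increase students' awareness of their abilities": 4.2,
    "Understanding mistakes": 4.2,
    "Increase motivation for the subject": 3.8,
    "Inclusion of students with BES / DSA": 4.1,
    "Student empowerment": 4.1,
    "Personalization of educational activities": 4.0,
}

lowest = min(ratings.values())  # smallest rating in the table
top_three = sorted(ratings, key=ratings.get, reverse=True)[:3]  # highest-rated aspects
```

The lowest rating is 3.8 (motivation for the subject), and the three highest-rated aspects are practicing for the INVALSI tests, reviewing knowledge, and practicing for tests.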

Finally, teachers were asked how much they

believe that the use of automatic formative

assessment with the class can favor teachers with the

different aspects reported in Table 4.

Table 4: Aspects promoted by the use of automatic formative assessment for teachers.

| Aspect | Rating (1 to 5) |
|---|---|
| Quality of teaching materials | 4.4 |
| Greater attention to the activities proposed to students | 4.3 |
| Professional development | 4.5 |
| Greater understanding of student difficulties | 4.4 |
| Control of student activities | 4.7 |

In this case too, the values shown in Table 4 are all at least 4.3. This suggests that teachers believe that the use of automatic formative assessment can be an effective methodology for teachers themselves as well. In particular, it can be useful for obtaining quality feedback on the activities carried out by students, for better understanding their difficulties, and for intervening with activities adapted to their needs.

Further aspects identified by the teachers were:

▪ Greater reflection by the teacher on the content of the questions and on the way they are proposed;

▪ Making choices that respect the different learning styles of students, given the possibility of formulating questions of different types;

▪ Promoting collective discussion and dialogue-based lessons;

▪ Increasing student engagement at home;

▪ Organizing didactic activities that accommodate the students' different learning styles.

6 CONCLUSIONS

Our training module allowed the teachers to reflect on

the characteristics of the formative assessment and on

the adaptation of questions designed for standardized

assessment to questions for formative assessment.

Through the use of an automatic assessment system,

they designed and transformed questions for

standardized assessment into questions for formative

assessment, reasoning about the didactic context, the

topic and the objective of the application. Then they

asked students to answer the questions and reflected

on the activity carried out in the classroom. The

teachers reflected on the aspects favored by the

proposed methodologies, for students and for the

teachers themselves. At the end of the module, the teachers shared the material they had created with all the teachers of the PP&S community so that everyone could use and discuss it.

The teachers who participated in the training module appreciated the proposed contents and methodologies very much. They believe they have received enough tools to work independently with an automatic assessment system. The teachers were satisfied to have tried out with students the activities they had created, and all of them will continue to use the automatic assessment system with students during the school year.

The teachers believe that the use of an automatic

formative assessment system can have important

advantages for students, for example for the

development of skills, for reviewing and for

preparing for tests. There are also significant

advantages for teachers, in particular for

understanding the difficulties and needs of students

and for proposing adaptive teaching. In the proposed methodology, immediate and interactive feedback is essential for both students and teachers.

Students may certainly encounter technical difficulties in using the automatic assessment system, in particular in entering answers with the correct syntax. However, the rigidity of technological tools can teach them to read the instructions carefully. Furthermore, it is very important that students learn to use technologies for educational purposes as well. It can therefore be very important to increase the training of teachers on the use of an automatic assessment system for formative assessment and to train students to use this tool.

This type of training activity can be further

developed by collaborating with the teachers in

carrying out the activities in the classroom with

students, in order to support them and to directly

receive students' feedback on the proposed activity.

The use of automatic formative assessment supports adaptive teaching. With the recent development of big data theory and learning analytics (Barana et al., 2019), we think it may be possible in the future to propose an adaptive educational system (AES) that uses data about students, learning processes, and learning products to provide an efficient, effective, and customized learning experience for students.

REFERENCES

Barana, A., Brancaccio, A., Esposito, M., Fioravera, M.,

Fissore, C., Marchisio, M., Pardini, C., & Rabellino, S.

(2018). Online Asynchronous Collaboration for

Enhancing Teacher Professional Knowledges and

Competences. The 14th International Scientific

Conference ELearning and Software for Education,

167–175. https://doi.org/10.12753/2066-026x-18-023

Barana, A., Conte, A., Fioravera, M., Marchisio, M., &

Rabellino, S. (2018). A Model of Formative Automatic

Assessment and Interactive Feedback for STEM.

Proceedings of 2018 IEEE 42nd Annual Computer

Software and Applications Conference (COMPSAC),

1016–1025.

https://doi.org/10.1109/COMPSAC.2018.00178

Barana, A., Conte, A., Fissore, C., Marchisio, M., &

Rabellino, S. (2019). Learning Analytics to improve

Formative Assessment strategies. Italian e-Learning

Association. ISSN: 18266223, doi: 10.20368/1971-8829/1135057

Barana, A., Floris, F., Marchisio, M., Marello, C.,

Pulvirenti, M., Rabellino, S., & Sacchet, M. (2019).

Adapting STEM Automated Assessment System to

Enhance Language Skills. Proceedings of the 15th

International Scientific Conference ELearning and

Software for Education, 2, 403–410.

https://doi.org/10.12753/2066-026X-19-126

Barana, A., Marchisio, M. & Miori, R. MATE-BOOSTER:

Design of Tasks for Automatic Formative Assessment

to Boost Mathematical Competence, in press.

Barana, A., Marchisio, M., & Rabellino, S. (2019).

Empowering Engagement through Automatic

Formative Assessment. 2019 IEEE 43rd Annual

Computer Software and Applications Conference

(COMPSAC), 216–225.

https://doi.org/10.1109/COMPSAC.2019.00040

Barana, A., Marchisio, M., & Sacchet, M. (2019).

Advantages of Using Automatic Formative Assessment

for Learning Mathematics. In S. Draaijer, D. Joosten-ten Brinke, & E. Ras (Eds.), Technology Enhanced Assessment (Vol. 1014, pp. 180–198). Springer International Publishing. https://doi.org/10.1007/978-3-030-25264-9_12

Black, P., & Wiliam, D. (2009). Developing the theory of

formative assessment. Educational Assessment,

Evaluation and Accountability, 21(1), 5–31.

https://doi.org/10.1007/s11092-008-9068-5

Bolondi, G., Branchetti, L., & Giberti, C. (2018). A

quantitative methodology for analyzing the impact of

the formulation of a mathematical item on students

learning assessment. Studies in Educational Evaluation,

58, 37–50.

https://doi.org/10.1016/j.stueduc.2018.05.002

Borich, G. (2011). Effective teaching methods. Pearson.

Brancaccio, A., Marchisio, M., Palumbo, C., Pardini, C.,

Patrucco, A., & Zich, R. (2015). Problem Posing and

Solving: Strategic Italian Key Action to Enhance

Teaching and Learning Mathematics and Informatics in

the High School. Proceedings of 2015 IEEE 39th

Annual Computer Software and Applications

Conference, 845–850.

https://doi.org/10.1109/COMPSAC.2015.126

Cascella, C., Giberti, C., & Bolondi, G. (2020). An analysis

of Differential Item Functioning on INVALSI tests,

designed to explore gender gap in mathematical tasks.

Studies in Educational Evaluation, 64, 100819.

Hattie, J., & Timperley, H. (2007). The Power of Feedback.

Review of Educational Research, 77(1), 81–112.

https://doi.org/10.3102/003465430298487

Kearns, L.R. (2012). Student Assessment in Online

Learning: Challenges and Effective Practices.

MERLOT J. Online Learn. Teach. 8, 198–208.

Marchisio, M., Di Caro, L., Fioravera, M., & Rabellino, S.

(2018). Towards Adaptive Systems for Automatic

Formative Assessment in Virtual Learning

Communities. Proceedings of 2018 IEEE 42nd Annual

Computer Software and Applications Conference

(COMPSAC), 1000–1005.

https://doi.org/10.1109/COMPSAC.2018.00176

Marello, C., Marchisio, M., Pulvirenti, M., & Fissore, C.

(2019). Automatic assessment to enhance online

dictionaries consultation skills. Proceedings of the 16th International Conference on Cognition and Exploratory Learning in the Digital Age (CELDA 2019), 331–338.

Nicol, D.J., Macfarlane-Dick, D. (2006). Formative

assessment and self‐regulated learning: a model and

seven principles of good feedback practice. Stud. High.

Educ. 31, 199–218.

Osterlind, S.J. (1998). Constructing test items. Springer,

Dordrecht.

Pintrich, P. R., & Zusho, A. (2007). Student motivation and

self-regulated learning in the college classroom. In R.

P. Perry & J. C. Smart, The Scholarship of Teaching

and Learning in Higher Education: An Evidence-Based

Perspective. Springer, Dordrecht.

Sadler, D. R. (1989). Formative assessment and the design

of instructional systems. Instructional Science, 18(2),

119–144.

Van den Heuvel-Panhuizen, M., Becker, J. (2003). Towards

a Didactic Model for Assessment Design in

Mathematics Education. In: Bishop, A.J., Clements,

M.A., Keitel, C., Kilpatrick, J., and Leung, F.K.S. (eds.)

Second International Handbook of Mathematics

Education. Springer Netherlands, Dordrecht, 689–716.

CSEDU 2020 - 12th International Conference on Computer Supported Education
