Facing the Appeal of Social Networks: Methodologies and Tools to Support Students towards a Critical Use of the Web

Elisa Puvia, Vito Monteleone (a), Giovanni Fulantelli (b) and Davide Taibi (c)

Consiglio Nazionale delle Ricerche, Istituto per le Tecnologie Didattiche, Palermo, Italy

Keywords:

Cognitive Style, Analytical Thinking, Social Networks, Educational Settings, Fake News Detection, Artificial

Intelligence in Education, Instagram-like Platform.

Abstract:

In the present contribution, we introduce an integrated approach, grounded in both cognitive and computer science, to strengthen the capacity of adolescent students to discern information on the Web and on social networks. The proposed approach includes methodologies and tools aimed at promoting critical thinking in students. It has been structured in four main operational phases to facilitate implementation and replication, and it is currently being tested with 77 high-school students (14-16 years old). Preliminary insights from this pilot study are also presented in this paper. We argue that the integrated approach can be incorporated into a more general framework designed to boost students’ reasoning competences, which are crucial in promoting fake news detection and, consequently, in preventing the spread of false information online.

1 INTRODUCTION

The Internet is a global phenomenon that profoundly affects private and public life. It has evolved into a ubiquitous digital environment in which people access content (e.g., the Web), communicate (e.g., social networks), and seek information (e.g., search engines). Search engines are increasingly used as a source of information on the Web, and in educational contexts they are widely used by pupils to acquire new knowledge on a specific topic (Taibi et al., 2017; Taibi et al., 2020). For example, Search As Learning is a recent research topic that investigates learning as an outcome of the information-seeking process (Ghosh et al., 2018). However, search engines are optimized for acquiring factual knowledge: they are effective for specific types of search, but they do not support searching-as-learning tasks (Krathwohl and Anderson, 2009; Marchionini, 2006). In fact, search engines are not purposely designed to facilitate learning activities such as understanding or synthesis, given that they offer no mechanisms to support iteration, reflection and analysis of results by the searcher.

Social networks play a predominant role in communication between individuals, and their use in educational settings has been widely investigated. In particular, social networks have been used by teachers to share information and communicate with pupils, and they have also been used to support self-regulated learning in order to connect informal and formal learning. The structure of the most used social networks, such as Facebook, Twitter and Instagram, has also been investigated in terms of their ability to foster interactions and content sharing (Thompson, 1995; Chelmis and Prasanna, 2011), feelings (Kaplan and Haenlein, 2010), and the expression of opinions and sentiments (Pang et al., 2008). However, while on the one hand the Internet and its tools support new communication dynamics able to reach a wider number of people, on the other hand these characteristics have generated issues that cannot be ignored. Indeed, the Internet has also introduced challenges that imperil the well-being of individuals and the functioning of democratic societies, such as the rapid spread of false information and online manipulation of public opinion (e.g., Bradshaw and Howard, 2019; Kelly et al., 2017), as well as new forms of social malpractice such as cyberbullying (Kowalski et al., 2014) and online incivility (Anderson et al., 2014).

a https://orcid.org/0000-0001-7600-1251

b https://orcid.org/0000-0002-4098-8311

c https://orcid.org/0000-0002-0785-6771

The negative effects of these challenges are exacerbated when it comes to young adolescents, especially when they use the Web to shape their understanding and acquire knowledge on a new topic. Moreover, the Internet is no longer an unconstrained and independent cyberspace but a highly controlled environment. Online, whether people are accessing information through search engines or social media, their access is regulated by algorithms and recommender systems with little transparency or public oversight. We argue that one way to address this imbalance is with interventions that empower adolescents, as Internet users, to gain some control over their digital environments, in part by boosting their information literacy and their cognitive resistance to manipulation. Adolescents therefore need to be equipped for a more informed use of social media and the Internet.

Puvia, E., Monteleone, V., Fulantelli, G. and Taibi, D.

Facing the Appeal of Social Networks: Methodologies and Tools to Support Students towards a Critical Use of the Web.

DOI: 10.5220/0009571105670573

In Proceedings of the 12th International Conference on Computer Supported Education (CSEDU 2020) - Volume 1, pages 567-573

ISBN: 978-989-758-417-6

Copyright © 2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In this contribution we present an integrated approach that includes methodologies and tools aimed at promoting critical thinking in adolescent students. This approach will help students perceive and process online information more critically, in order to face the challenges posed by the digital environments in which they are immersed.

2 RECOMMENDER SYSTEMS, ALGORITHMS AND OTHER DIGITAL TRAPS

One of the main challenging aspects of online en-

vironments is represented by the way in which they

shape information search. Indeed, information is

filtered and mediated by personalized recommender

systems and algorithmic filtering. Algorithmic filter-

ing and personalization are not inherently malicious

technologies. On the contrary, they are helpful mech-

anisms that support people in navigating the over-

whelming amount of information on the Internet. In

a similar vein, news feeds on social media strive to

show news that is interesting to users. So, filtering in-

formation on the Internet is indispensable and helpful.

All in all, these mechanisms act as filters between the

abundance of information and the scarcity of human

attention. However, they are not without some notable

problems. One general problem is that the decision on

such personalized content is being delegated to a vari-

ety of algorithms without a clear understanding of the

mechanisms underlying the resulting decisions.

Delegating decisions results in people’s gradual loss of control over their personal information and a related decline in human agency and autonomy (Anderson et al., 2018; Mittelstadt et al., 2016; Zarsky, 2016).

Another closely related concern is the impact of AI-driven algorithms, for example on what information is presented and in what order (Tufekci, 2015). Another challenging consequence of algorithmic filtering is algorithmic bias (Bozdag, 2013; Corbett-Davies et al., 2017; Fry, 2018), which introduces biases in data processing and has consequences at a societal level, such as discrimination (e.g., gender or racial biases).

On closer inspection, however, algorithms are designed by human beings, and they rely on existing data generated by human beings. They are therefore likely not only to generate biases due to technical limitations, but also to reinforce existing biases and beliefs.

Relatedly, it has been argued that personalized filter-

ing on social media platforms may be instrumental

in creating “filter bubbles” (Pariser, 2011) or “echo

chambers” (Sunstein, 2018). Both filter bubbles and

echo chambers are environments in which individu-

als are exposed to information selected by algorithms

according to a viewer’s previous behaviours (Bakshy

et al., 2015), amplifying as a result the confirmation

bias – a way to search for and interpret information

that reinforces pre-existing beliefs.
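The feedback loop behind this amplification can be illustrated with a toy simulation. This is only a sketch under strong assumptions, not a model of any real platform: the function names `recommend` and `simulate`, the topic labels, and the rule that the user always clicks the top-ranked item are all hypothetical.

```python
from collections import Counter


def recommend(history, catalog, k=3):
    """Rank topics by how often the user clicked them in the past."""
    counts = Counter(history)
    # sorted() is stable, so topics with equal counts keep catalog order.
    return sorted(catalog, key=lambda topic: -counts[topic])[:k]


def simulate(catalog, first_click, rounds=5, k=3):
    """Assumption: the user always clicks the first item in the feed."""
    history = [first_click]
    for _ in range(rounds):
        feed = recommend(history, catalog, k)
        history.append(feed[0])
    return history


catalog = ["politics_a", "politics_b", "sport", "music"]
history = simulate(catalog, "politics_b")
print(history)
```

Under these assumptions a single initial click is enough to keep the same topic at the top of every later feed, which is the self-reinforcing pattern the text describes.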

Concerning social networks, a wide variety of artificial intelligence algorithms is adopted to target the intended audience on social media. The fundamental idea behind the use of these algorithms on social networks is to optimize the content for the users. There is a wide literature on the application of AI in social media to capture the trends and the moods of users (Salloum et al., 2017; Ching et al., 2015; van Dam and Van De Velden, 2015; Del Vicario et al., 2017). Previous studies have shown that young adolescents are not aware of the presence of AI algorithms during their experiences on social networks, and that they consider the content shown to them to be spontaneous. As an interdisciplinary science of thinking, psychological science can inform interventions to counteract the challenges that digital environments pose (Kozyreva et al., 2019). Indeed, cognitive science has developed some very general insights into how we perceive and process online information that can be critical in understanding general cognitive preferences.

It has been proposed that one of the factors that could explain the success of online misinformation is that it appeals to general cognitive preferences, in that it fits with our cognitive predispositions (Acerbi, 2019). While we acknowledge that several boosts could be implemented, we decided to develop an integrated approach grounded in both cognitive science and technological tools to strengthen the capacity of students to discern information on the Internet. The next section provides a general overview of the integrated approach, followed by a more detailed description of its four main phases.

CSEDU 2020 - 12th International Conference on Computer Supported Education


3 INTEGRATED APPROACH

In the present contribution, an integrated approach that leverages cognitive and technological tools is introduced and discussed. We argue that these integrated tools can be incorporated into a more general framework designed to boost the reasoning competences of adolescent students in order to prevent the spread of false information. Among the possible human cognitive factors, our approach focuses on cognitive style of thinking. A crucial individual difference that emerges from human cognitive architecture is the propensity to think analytically (Pennycook et al., 2015).

Analytic thinking can be described as a tendency to solve problems through the understanding of logical principles and the evaluation of evidence, as opposed to a more intuitive, emotional and/or imaginative way. We argue that understanding individual differences in the cognitive processing of novel information, such as in the domain of fake news, can be crucial in promoting fake news detection and in turn preventing the spread of fake news. Analytic thinking has been shown to be the strongest predictor (among several) of a reduction in conspiracy theory (Swami et al., 2014), religious (Gervais and Norenzayan, 2012), and paranormal beliefs (Pennycook et al., 2012). Most relevant in the present context, previous work has shown a negative association between a tendency to think analytically and fake news susceptibility (Bronstein et al., 2019; Pennycook and Rand, 2018). The integrated approach consequently consists of the following four main phases:

• Phase 1: Assessment of students’ cognitive style

and Internet habits.

• Phase 2: Fostering students’ analytic thinking

mindset.

• Phase 3: Assessment of students’ abilities to de-

tect fake news.

• Phase 4: Promoting knowledge of social network

algorithms.

From the technological point of view, the tasks related to phases 1 to 3 were conducted using the open-source LimeSurvey software. The tasks of the final phase were carried out on Pixelfed, an Instagram-like platform based on the ActivityPub federated network protocol. ActivityPub is a W3C Recommendation that allows server-to-server and client-to-server communication through an inbox and outbox mechanism. It is a decentralized social networking protocol that makes it possible for a user to interact with many different Internet applications. In ActivityPub each user is an actor, represented as an account on the server. Each actor has an inbox, where they receive messages, and an outbox, from which they send messages. Anyone can listen to someone’s outbox to get the messages they post, and people can post messages to someone’s inbox for them. Most of the federated projects are open source and, besides Pixelfed, many services such as PeerTube (an open-source YouTube alternative) or Mastodon (an open-source Twitter alternative) are integrated over the ActivityPub protocol and form what is known as the Fediverse: a universe of open-source, interconnected Internet applications. For example, it is possible to post a video to PeerTube, get a notification on Mastodon, and respond to the video post on Mastodon, with the message showing up as a comment on the video.
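The inbox and outbox mechanism described above can be sketched as a small in-memory model. This is an illustrative toy, not an ActivityPub implementation: real servers exchange ActivityStreams 2.0 JSON documents over HTTP, whereas here the `Actor` class, its `post` and `send_to` methods, and the simplified activity dictionaries are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Actor:
    """Toy stand-in for an account on a server, with an inbox and an outbox."""
    name: str
    inbox: list = field(default_factory=list)
    outbox: list = field(default_factory=list)
    followers: list = field(default_factory=list)

    def post(self, content):
        """Publish to the own outbox and deliver a copy to each follower's inbox."""
        activity = {"type": "Create", "actor": self.name, "content": content}
        self.outbox.append(activity)
        for follower in self.followers:
            follower.inbox.append(activity)
        return activity

    def send_to(self, recipient, content):
        """Address a message directly to another actor's inbox."""
        activity = {"type": "Create", "actor": self.name, "content": content}
        self.outbox.append(activity)
        recipient.inbox.append(activity)
        return activity


# Hypothetical scenario: bob follows alice, sees her post, and replies.
alice = Actor("alice")
bob = Actor("bob")
alice.followers.append(bob)

alice.post("New video uploaded")
bob.send_to(alice, "Nice video!")

print(bob.inbox[0]["content"])
print(alice.inbox[0]["content"])
```

In a federated setting the two actors could live on different services (for instance one on PeerTube and one on Mastodon), with delivery happening between servers rather than between Python objects.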

3.1 Assessment of Students’ Cognitive Style and Internet Habits

The proposed integrated approach was tested with a sample of seventy-seven secondary school students. Students’ ages ranged from 14 to 16 years (M = 15.07; SD = .442). A majority of students (80.5%) were male. In order to evaluate stable pre-experimental individual differences in cognitive style, students completed the Rational/Experiential Multimodal Inventory (REIm) (Norris and Epstein, 2011). The REIm contains 42 items, 12 in the Rational scale and 30 in the Experiential scale. The 12 items of the Rational scale measure an analytic thinking style (e.g., ‘I enjoy problems that require hard thinking’). The 30 items of the Experiential scale measure an experiential thinking style. This scale consists of three 10-item subscales, namely Intuition (a tendency to solve problems intuitively and based on affect), Emotionality (a preference for intense and frequent strong affect), and Imagination (a tendency to engage in, and appreciate, imagination, aesthetic productions, and imagery). Examples of the items are: ‘I often go by my instincts when deciding on a course of action’; ‘I like to rely on my intuitive impressions’, and ‘I tend to describe things by using images or metaphors, or creative comparisons’, respectively. All items are rated on a 5-point scale (1 = Strongly disagree, 5 = Strongly agree) and subscale scores are computed as the mean of the associated items. A principal component factor analysis extracted two factors: Rational and Experiential. The overall scale (Cronbach’s α = .75) as well as both subscales (Cronbach’s α = .68 and α = .77 for the rational and experiential subscales, respectively) showed good internal consistency.

Finally, students’ Internet habits were assessed in order to evaluate whether the quality of those habits, in terms of the content they are mostly exposed to, can affect their ability to detect online fake news. Students reported using Instagram (89.6%), Facebook (51.9%), and WhatsApp (24.7%). Most of them (98.7%) reported using their smartphone to connect to the Internet. Table 1 reports the ten types of content students search for most often online. No correlations emerged between the quality of students’ Internet habits and their ability to discern between true and false online information (r’s > .05). However, given the exploratory nature of the present investigation, this result has to be interpreted carefully.
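The scoring and reliability steps mentioned above (subscale scores as item means, internal consistency as Cronbach’s α) can be sketched as follows. The code uses the standard formula α = k/(k−1) · (1 − Σ item variances / variance of total scores); the function names and the small response matrix are hypothetical and are not data from the study.

```python
from statistics import mean, variance


def subscale_score(item_ratings):
    """Subscale score for one respondent: the mean of the associated items."""
    return mean(item_ratings)


def cronbach_alpha(responses):
    """responses: one row per respondent, each row a list of k item ratings."""
    k = len(responses[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of the respondents' total scores.
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings on a 5-point scale: 5 respondents, 3 items.
responses = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [1, 2, 1],
    [3, 3, 3],
]
scores = [subscale_score(row) for row in responses]
alpha = cronbach_alpha(responses)
print(scores, round(alpha, 2))
```

A full analysis would also reverse-score negatively worded items before averaging, a step omitted here for brevity.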

Table 1: Top ten online contents searched by students.

| Content | % |
|---|---|
| School | 32.5 |
| Football | 23.4 |
| Music | 18.2 |
| News | 18.2 |
| Sport | 15.6 |
| Video | 13.0 |
| Engines | 10.4 |
| Sports | 10.4 |
| Games | 9.1 |
| Memes | 9.1 |

The full set of contents is summarized in the word cloud displayed in Figure 1.

Figure 1: What students search online.

3.2 Fostering Students’ Analytic Thinking Mindset

In the second phase, students completed a priming task used to activate analytic thinking without explicit awareness (Gervais and Norenzayan, 2012). In this task, students received 10 different sets of five randomly arranged words (e.g., man away postcard the walked). For each set of five words, students dropped one word and rearranged the others to form a meaningful phrase (e.g., the man walked away). In the analytic condition, the five-word sets contained a target prime word related to analytic or rational reasoning (analyse, reason, ponder, think, rational). In the control condition, the scrambled sentences contained neutral words (e.g., chair, shop). Alternatively, an even more subtle experimental manipulation can be used to elicit analytic thinking, in which students are randomly assigned to view four images of either artwork depicting a reflective thinking pose (Rodin’s The Thinker) or control artwork matched for surface characteristics like color, posture, and dimensions (e.g., the Discobolus of Myron). All students receive instructions to look at each picture for 30 seconds before moving on to the next portion of the experiment. This novel visual prime has been shown to successfully trigger analytic thinking (Gervais and Norenzayan, 2012). In order to make sure that the manipulation was successful, students were asked to complete the one-item Moses Illusion Task (Erickson and Mattson, 1981): ‘How many of each kind of animal did Moses take on the Ark?’, a measure used to assess analytic versus experiential processing (Song and Schwarz, 2008). Results of this preliminary study showed that students were significantly more likely to respond with the correct answer (e.g., ‘Moses did not have an ark’ or ‘Cannot say’) in the analytic condition (31%) than in the control condition (8%).
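A scrambled-sentence item of the kind described above could be generated and checked along the following lines. This is a minimal sketch, not the procedure used in the study (which ran in LimeSurvey): the helper names `make_item` and `is_correct` and the fixed random seed are assumptions made for illustration.

```python
import random

# Prime words for the analytic condition, as listed in the text.
ANALYTIC_PRIMES = ["analyse", "reason", "ponder", "think", "rational"]


def make_item(sentence, extra_word, rng):
    """Return the four sentence words plus one extra word, in random order."""
    words = sentence.split() + [extra_word]
    rng.shuffle(words)
    return words


def is_correct(response, sentence):
    """A response is correct if it reproduces the target phrase, ignoring case."""
    return response.lower().split() == sentence.lower().split()


rng = random.Random(0)  # fixed seed so the example is reproducible
target = "the man walked away"
item = make_item(target, "postcard", rng)
print(" ".join(item))
print(is_correct("The man walked away", target))
```

For the analytic condition, the target phrases would be chosen so that each contains one of the words in `ANALYTIC_PRIMES`; the control condition would use neutral words instead.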

3.3 Assessment of Students’ Abilities to Detect Fake News

After being exposed to either analytical or experiential processing, students were required, in the third phase, to judge the accuracy of news headlines. Students who have been exposed to an analytic mindset are expected to be more effective in fake news detection compared to those who did not receive such input. Specifically, three factually accurate stories (real news) and three subtly untrue stories (fake news) were presented. After reading each news headline, students were required to a) indicate whether they had seen or heard about the story before, b) evaluate how accurate (namely, detailed, true in their understanding and real) the headline was, and c) evaluate their willingness to share the story online (for example, through Facebook or Twitter).
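One possible way to summarise the accuracy ratings per student is a discernment score: the mean rating given to real headlines minus the mean rating given to fake ones. The paper does not state which scoring was used, so the sketch below is an assumption, as are the function name `discernment` and the 1 to 4 rating scale of the example data.

```python
from statistics import mean


def discernment(ratings):
    """ratings: list of (is_real, accuracy_rating) pairs for one student.

    Higher values mean real headlines were rated as more accurate than fake ones.
    """
    real = [rating for is_real, rating in ratings if is_real]
    fake = [rating for is_real, rating in ratings if not is_real]
    return mean(real) - mean(fake)


# Hypothetical student: three real and three fake headlines, rated 1 to 4.
student = [
    (True, 4), (True, 3), (True, 4),
    (False, 2), (False, 1), (False, 2),
]
score = discernment(student)
print(round(score, 2))
```

A score near zero would indicate that the student rated real and fake headlines as equally accurate, and scores could then be compared between the analytic and control conditions.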


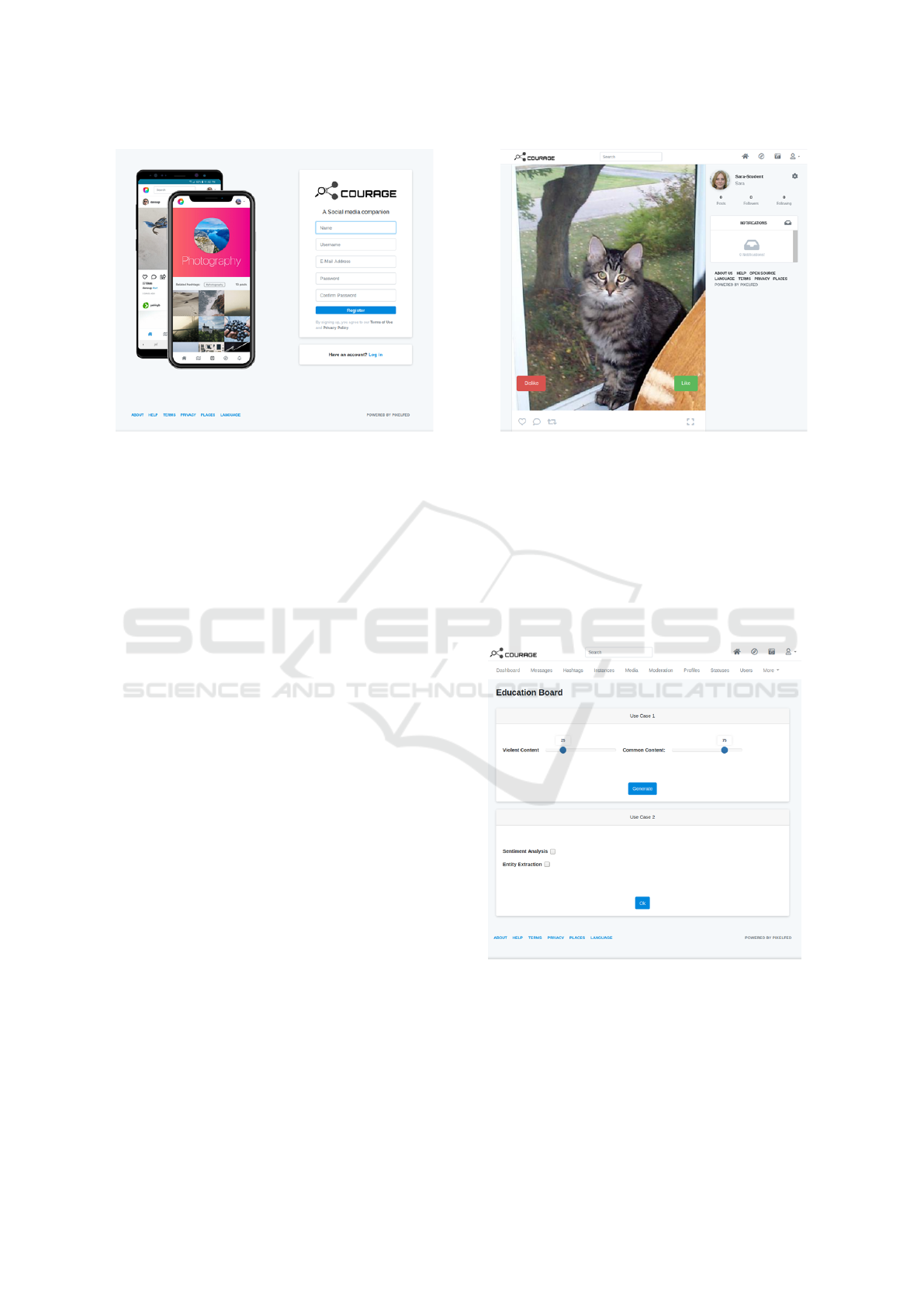

Figure 2: Our customized version of Pixelfed. Different use cases can be run in order to collect user data for future analysis.

3.4 Promoting Knowledge of Social Network Algorithms

The aim of this phase is to analyse how social networks work in order to make students more aware of the hidden mechanisms they implement. As stated above, in this phase we took advantage of Pixelfed, an open-source alternative to Instagram, to design use cases that make young adolescents aware of the presence of artificial intelligence algorithms in social networks. Pixelfed is an ethical photo-sharing platform based on the federated open web protocol ActivityPub. The term federated refers to a distributed network of multiple social websites, where users of each site can communicate with users of any other site in the network.

Thanks to Pixelfed we are able to show young adolescents, on the one hand, how the response of a system changes when the parameters provided by the designer change and, on the other hand, what the implications of pursuing certain actions instead of others are in a social network architecture. Pixelfed is equipped with the most mainstream features of a social network. Users are able to create personal profiles and friend lists, post status updates, follow activity streams, and subscribe to be notified of other users’ actions within the environment. Pixelfed also allows us to provide users with posts containing specific images or texts and to collect their reactions in real time through different types of interactions, such as like/dislike buttons. Figure 2 shows a screenshot of the Pixelfed social network design.

We decided to run and customize our experiments on Pixelfed because recent technological trends have led adolescents (especially in the age range under investigation in our pilot) to prefer applications such as Instagram or TikTok over older social networks like Facebook. Providing adolescents with an environment they are familiar with and that is equipped with the newest features allows us to record interactions that more closely resemble those that adolescents perform every day.

Figure 3: The dashboard that teachers use to tune the content-publishing algorithms.

Using Pixelfed gives us control over an open-source social network in which to analyze user behavior. We also developed a teacher dashboard (see Figure 3) through which it is possible to tune the algorithms used to publish the content, so that students can observe how their interactions could modify the behaviour of the algorithms in the social network.
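The idea of tuning a publishing algorithm from a dashboard can be illustrated with a feed-ranking function whose weights are adjustable. This is a hypothetical sketch and does not reflect Pixelfed’s code or the actual dashboard: the function `rank_feed`, the parameters `like_weight` and `recency_weight`, and the post fields are assumptions made for the example.

```python
def rank_feed(posts, like_weight=1.0, recency_weight=1.0):
    """Order posts by a weighted score: more likes and newer posts rank higher."""
    def score(post):
        return like_weight * post["likes"] - recency_weight * post["age_hours"]
    return sorted(posts, key=score, reverse=True)


# Hypothetical posts in a class feed.
posts = [
    {"id": "a", "likes": 50, "age_hours": 48},
    {"id": "b", "likes": 5, "age_hours": 1},
    {"id": "c", "likes": 20, "age_hours": 10},
]

# Two dashboard settings: rank by popularity only, then by recency only.
popular_first = [p["id"] for p in rank_feed(posts, like_weight=1.0, recency_weight=0.0)]
newest_first = [p["id"] for p in rank_feed(posts, like_weight=0.0, recency_weight=1.0)]
print(popular_first, newest_first)
```

Showing students the same posts under the two settings makes visible that the order of a feed is a design decision, and that their own likes feed back into what they see next.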

4 CONCLUSIONS

The main objective of the integrated approach presented in this paper is to provide school students with opportunities to reflect upon effective strategies for using the Web and social networks. To this aim, in addition to the project phases reported in the paper, the students participating in the pilot study have been given further opportunities to unveil concerns that are central to understanding these strategies. Specifically, during each session the debate methodology has been adopted in order to discuss the most relevant issues that emerged during the class activities and to stimulate the comparison of points of view amongst students.

Furthermore, even though we are aware that critical thinking is a complex activity, we argue that the lower-level skills on which analytical thinking is built can be acquired and ultimately taught. An effective way to promote such skills is diagramming arguments, namely argument mapping (Gelder, 2005), in which students are invited to visually represent arguments through diagrams. Students can see the reasoning and can more easily identify important issues, such as whether an assumption has been articulated, whether a premise needs further support, or whether an objection has been addressed. In short, the use of diagrams makes the core operations of critical thinking more straightforward, resulting in faster growth in critical-thinking skills.

The experimental phase is still at a very preliminary stage; nevertheless, some initial results can be reported. Firstly, it has been confirmed that students’ knowledge of the algorithms governing social networks and Web search engines is scarce. Similarly, students’ awareness of the negative consequences arising from uncritical acceptance of Internet news is limited to specific and well-known circumstances. Finally, a correlation between the boost of analytical thinking and the ability to discriminate true information from false information has emerged, even if further analysis is necessary to confirm our hypotheses. The expected results will shed light on important individual factors that may predict the ability to better discern between real (true) and fake (false) news in adolescent students.

ACKNOWLEDGEMENTS

This work has been developed in the framework of the

project COURAGE - A social media companion safe-

guarding and educating students (no. 95567), funded

by the Volkswagen Foundation in the topic Artificial

Intelligence and the Society of the Future.

REFERENCES

Acerbi, A. (2019). Cognitive attraction and online misin-

formation. Palgrave Communications, 5(1):1–7.

Anderson, A. A., Brossard, D., Scheufele, D. A., Xenos,

M. A., and Ladwig, P. (2014). The “nasty effect:” on-

line incivility and risk perceptions of emerging tech-

nologies. Journal of Computer-Mediated Communi-

cation, 19(3):373–387.

Anderson, J., Rainie, L., and Luchsinger, A. (2018). Arti-

ficial intelligence and the future of humans. Pew Re-

search Center.

Bakshy, E., Messing, S., and Adamic, L. A. (2015). Ex-

posure to ideologically diverse news and opinion on

facebook. Science, 348(6239):1130–1132.

Bozdag, E. (2013). Bias in algorithmic filtering and per-

sonalization. Ethics and information technology,

15(3):209–227.

Bradshaw, S. and Howard, P. N. (2019). The global disin-

formation order: 2019 global inventory of organised

social media manipulation. Project on Computational

Propaganda.

Bronstein, M. V., Pennycook, G., Bear, A., Rand, D. G.,

and Cannon, T. D. (2019). Belief in fake news is as-

sociated with delusionality, dogmatism, religious fun-

damentalism, and reduced analytic thinking. Jour-

nal of Applied Research in Memory and Cognition,

8(1):108–117.

Chelmis, C. and Prasanna, V. K. (2011). Social networking

analysis: A state of the art and the effect of semantics.

In 2011 IEEE Third International Conference on Pri-

vacy, Security, Risk and Trust and 2011 IEEE Third

International Conference on Social Computing, pages

531–536. IEEE.

Ching, A., Edunov, S., Kabiljo, M., Logothetis, D., and

Muthukrishnan, S. (2015). One trillion edges: Graph

processing at facebook-scale. Proceedings of the

VLDB Endowment, 8(12):1804–1815.

Corbett-Davies, S., Pierson, E., Feller, A., Goel, S., and

Huq, A. (2017). Algorithmic decision making and

the cost of fairness. In Proceedings of the 23rd

ACM SIGKDD International Conference on Knowl-

edge Discovery and Data Mining, pages 797–806.

Del Vicario, M., Zollo, F., Caldarelli, G., Scala, A., and

Quattrociocchi, W. (2017). Mapping social dynam-

ics on facebook: The brexit debate. Social Networks,

50:6–16.

Erickson, T. D. and Mattson, M. E. (1981). From words

to meaning: A semantic illusion. Journal of Memory

and Language, 20(5):540.

Fry, H. (2018). Hello World: How to be Human in the Age

of the Machine. Random House.

Gelder, T. V. (2005). Teaching critical thinking: Some

lessons from cognitive science. College teaching,

53(1):41–48.


Gervais, W. M. and Norenzayan, A. (2012). Ana-

lytic thinking promotes religious disbelief. Science,

336(6080):493–496.

Ghosh, S., Rath, M., and Shah, C. (2018). Searching as

learning: Exploring search behavior and learning out-

comes in learning-related tasks. In CHIIR ’18.

Kaplan, A. M. and Haenlein, M. (2010). Users of the world,

unite! the challenges and opportunities of social me-

dia. Business horizons, 53(1):59–68.

Kelly, S., Truong, M., Shahbaz, A., Earp, M., and White,

J. (2017). Freedom on the net 2017: Manipulating

social media to undermine democracy. freedom of the

net project.

Kowalski, R. M., Giumetti, G. W., Schroeder, A. N., and

Lattanner, M. R. (2014). Bullying in the digital age:

A critical review and meta-analysis of cyberbully-

ing research among youth. Psychological bulletin,

140(4):1073.

Kozyreva, A., Lewandowsky, S., and Hertwig, R. (2019).

Citizens versus the internet: Confronting digital chal-

lenges with cognitive tools. PsyArXiv.

Krathwohl, D. R. and Anderson, L. W. (2009). A taxonomy

for learning, teaching, and assessing: A revision of

Bloom’s taxonomy of educational objectives. Long-

man.

Marchionini, G. (2006). Exploratory search: from find-

ing to understanding. Communications of the ACM,

49(4):41–46.

Mittelstadt, B. D., Allo, P., Taddeo, M., Wachter, S.,

and Floridi, L. (2016). The ethics of algo-

rithms: Mapping the debate. Big Data & Society,

3(2):2053951716679679.

Norris, P. and Epstein, S. (2011). An experiential think-

ing style: Its facets and relations with objective and

subjective criterion measures. Journal of personality,

79(5):1043–1080.

Pang, B., Lee, L., et al. (2008). Opinion mining and sentiment analysis. Foundations and Trends® in Information Retrieval, 2(1–2):1–135.

Pariser, E. (2011). The filter bubble: What the Internet is

hiding from you. Penguin UK.

Pennycook, G., Cheyne, J. A., Seli, P., Koehler, D. J.,

and Fugelsang, J. A. (2012). Analytic cognitive style

predicts religious and paranormal belief. Cognition,

123(3):335–346.

Pennycook, G., Fugelsang, J. A., and Koehler, D. J. (2015).

Everyday consequences of analytic thinking. Current

Directions in Psychological Science, 24(6):425–432.

Pennycook, G. and Rand, D. G. (2018). Who falls for fake

news? the roles of bullshit receptivity, overclaiming,

familiarity, and analytic thinking. Journal of person-

ality.

Salloum, S. A., Al-Emran, M., and Shaalan, K. (2017).

Mining social media text: extracting knowledge from

facebook. International Journal of Computing and

Digital Systems, 6(02):73–81.

Song, H. and Schwarz, N. (2008). Fluency and the de-

tection of misleading questions: Low processing flu-

ency attenuates the moses illusion. Social Cognition,

26(6):791–799.

Sunstein, C. R. (2018). # Republic: Divided democracy in

the age of social media. Princeton University Press.

Swami, V., Voracek, M., Stieger, S., Tran, U. S., and Furn-

ham, A. (2014). Analytic thinking reduces belief in

conspiracy theories. Cognition, 133(3):572–585.

Taibi, D., Fulantelli, G., Basteris, L., Rosso, G., and Pu-

via, E. (2020). How do search engines shape reality?

preliminary insights from a learning experience. In

Popescu, E., Hao, T., Hsu, T.-C., Xie, H., Temperini,

M., and Chen, W., editors, Emerging Technologies for

Education, pages 370–377, Cham. Springer Interna-

tional Publishing.

Taibi, D., Fulantelli, G., Marenzi, I., Nejdl, W., Rogers,

R., and Ijaz, A. (2017). Sar-web: A semantic web

tool to support search as learning practices and cross-

language results on the web. In 2017 IEEE 17th In-

ternational Conference on Advanced Learning Tech-

nologies (ICALT), pages 522–524.

Thompson, J. B. (1995). The media and modernity: A social

theory of the media. Stanford University Press.

Tufekci, Z. (2015). Algorithmic harms beyond facebook

and google: Emergent challenges of computational

agency. Colo. Tech. LJ, 13:203.

van Dam, J.-W. and Van De Velden, M. (2015). Online

profiling and clustering of facebook users. Decision

Support Systems, 70:60–72.

Zarsky, T. (2016). The trouble with algorithmic decisions:

An analytic road map to examine efficiency and fair-

ness in automated and opaque decision making. Sci-

ence, Technology, & Human Values, 41(1):118–132.
