A User Centered Approach in Designing Computer Aided Assessment Applications for Preschoolers

Adriana-Mihaela Guran (1, a), Grigoreta-Sofia Cojocar (1, b) and Anamaria Moldovan (2, c)

1 Computer Science Department, Babeș-Bolyai University, 1 M. Kogălniceanu, Cluj-Napoca, Romania

2 Albinuța Kindergarten, Cluj-Napoca, Romania

Keywords: Preschooler, User Centered Design, Computer Aided Assessment.

Abstract:

Today’s children are growing up surrounded by technology. The appropriate use of technology is important in shaping their attitude towards it, and education should support the right approach in this respect. Adjusting teaching and evaluation methods to current trends is necessary, starting from a very young age. In this paper, we present a User Centered Approach for developing Computer-Aided Assessment applications for preschoolers from our country. We describe our approach and present a case study of applying it, together with a discussion of the challenges, lessons learned and future development.

1 INTRODUCTION

Nowadays, more and more children are exposed to technology from early childhood (Crescenzi and Grane, 2016; Nesset and Large, 2004; Robertson, 1994). In the European Union (EU), there is a growing interest in improving the digital skills of citizens, so a Digital Agenda has been created and adopted by the member states (International Computer and Information Literacy Study, 2018; Kiss, 2017). The improvement of digital skills is expected to be led by the educational system, which focuses increasingly on the digital skills of young people. Currently, children’s digital skills are considered as important as literacy and numeracy (Bukova, 2017; Fraillon et al., 2016; UNESCO, 2011).

The educational system in Romania has undergone several reforms in the last thirty years regarding curriculum and forms of organization, redefining the objectives of education according to EU requirements. It is organized in three stages: the preschool stage (children aged 3 to 6), the school stage (children aged 6/7 to 18: primary, secondary and high school) and the university stage. The political, social and economic development that followed the transition from dictatorship to democracy came with mandatory changes regarding education. Along the way, several measures were taken to improve the teaching-learning process, to obtain better results at national exams and international contests, and to develop the competencies and skills needed to integrate youngsters into different work fields. Hence the need to form digital skills from an early age, i.e., from the preschool stage. ICT classes are organized for primary school, but no measures address the preschool system, even though every kindergarten classroom is equipped with a PC.

a https://orcid.org/0000-0002-6172-8156

b https://orcid.org/0000-0003-0066-2752

c https://orcid.org/0000-0002-6517-9705

Presently, only primary and secondary school are compulsory, but further legislation in the area states that the preschool stage will become mandatory, too. The introduction of technology in education started with preparing teachers, giving them the possibility to enroll in dedicated courses in order to acquire certain digital competencies, and promoting e-learning. It continued with introducing computers, useful devices (cameras, printers) and internet access in schools in order to provide resources that improve and ease education and increase its benefits. It was followed by preparing children, an ongoing process that needs special attention. Using technology in teaching, and teaching children how to learn using it, seems to be the challenge of the 21st century in Romania.

At the preschool stage, kindergarten time is dominated by discovering the world through games and play (Piaget, 1970). This stage is the proper time to become acquainted with devices in a joyful and pleasant way, making the transition from listening to a song or a story to pressing a button to listen to them, interacting according to rules, and paying attention to the process of interacting. This brought the idea of building interactive applications that support the teaching activities and the learning process while also covering the entertainment side. Inspired by the idea of building edutainment applications for preschoolers (Guran et al., 2019), we have decided to go a step further by introducing technology in the evaluation process of preschoolers. In this paper we present our user centered approach in designing Computer Aided Assessment applications for preschoolers.

Guran, A., Cojocar, G. and Moldovan, A.

A User Centered Approach in Designing Computer Aided Assessment Applications for Preschoolers.

DOI: 10.5220/0009565505060513

In Proceedings of the 15th International Conference on Evaluation of Novel Approaches to Software Engineering (ENASE 2020), pages 506-513

ISBN: 978-989-758-421-3

Copyright © 2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The paper is structured as follows. Section 2 describes essential aspects of preschoolers’ assessment and the characteristics of Computer Aided Assessment. Section 3 presents our view on the design and development process of Computer Aided Assessment of preschoolers together with a case study. The results of applying the proposed approach are discussed in Section 4. Section 5 presents some conclusions and future work ideas.

2 EARLY CHILDHOOD ASSESSMENT

Kindergarten is one of the educational environments with a major impact on the development and socialization of the child.

The evaluation of preschoolers is a complex didactic process that is structurally and functionally integrated into kindergarten activity. The theory and practice of assessment in education offer a wide variety of ways of approaching and understanding the role of evaluative actions. In kindergarten activity, evaluation aims to measure and assess the knowledge and skills acquired by children during the educational process. At the same time, evaluation also tracks the formative aspects of the educator’s work, reflected in the ways of approaching change and in the attitudes and behaviors acquired by the preschool child through the educational process. Evaluation should not inhibit or demotivate children. Instead, it should stimulate them to learn better. The knowledge, skills, and abilities acquired during the evaluated period are reviewed, with the explicit purpose of reinforcing and consolidating the newly learned behaviors.

2.1 Preschoolers’ Assessment in Our Country

In our country, the first two weeks of each school year are used for collecting data about the children, a period called the initial evaluation. Teachers observe the children during different moments of the daily program and talk to the children and their parents in order to build as accurate an image as possible of each child’s psychosomatic development, knowledge, understanding and skills. The same provision applies to children enrolled during the school year. A two-week final evaluation is recommended, but not mandatory, in order to assess the overall progress of each child during the school year or during all pre-primary education years. Based on the results of the final evaluation, teachers determine the educational strategy to be applied in the next school year and/or make recommendations for the children ready to enroll in primary education. The curriculum promotes the idea of encouraging children and helping them to develop a positive self-image and to gain confidence in their own abilities and in individual progress at their own pace (EURYDICE, 2019). Currently, the summative evaluation of cognitive skills is paper-based: an evaluation session consists of three or four evaluation fiches (worksheets) that must be filled in by the child, with content from the curriculum domains studied during the evaluated period.

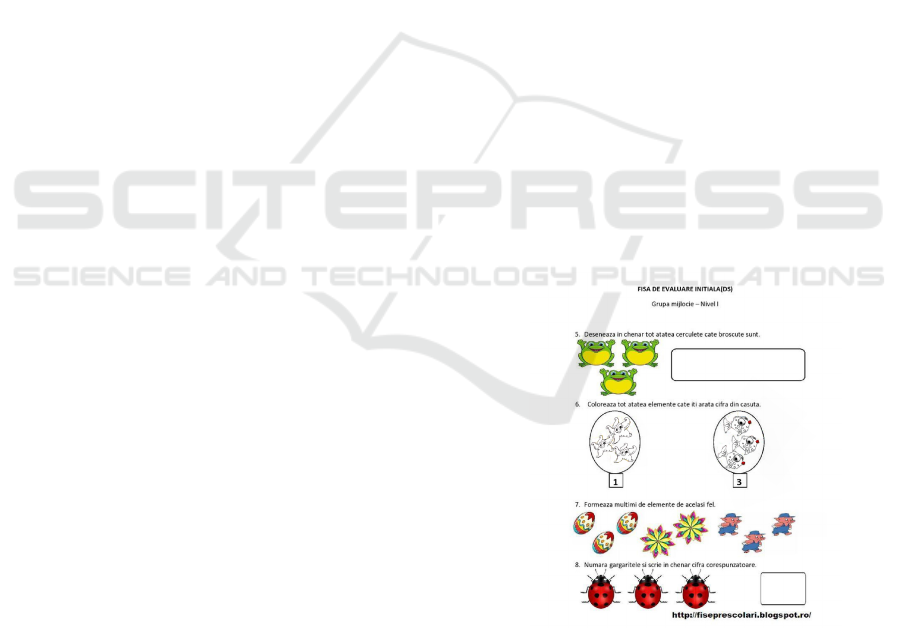

Figure 1: Paper-based evaluation example.

Figure 1 presents an evaluation fiche. The tasks on the fiche are: to draw a number of lines equal to the number of frogs, to color the number of elements indicated by the number in the rectangle, to mark the sets of identical elements, and to write down the digit corresponding to the number of ladybugs.


2.2 Computer Aided Assessment

Computers have been successfully used to assess older children and adults, and there is much research comparing computer-based testing (CBT) to traditional paper-and-pencil testing (PPT) with older students and adults (Sim and Horton, 2005; Sim et al., 2014). While for adult users (e.g., university students) there are advanced approaches to building adaptive computer-based assessment tools (Chrysafiadi et al., 2018; Krouska et al., 2018; Troussas et al., 2019; Troussas et al., 2020), there are only a few attempts at studying the appropriateness of computer-based testing with typically developing preschool children. Barnes (2010) shows that preschool children can successfully perform computer-based testing. The main issue discovered was the children’s lack of digital skills, which caused difficulties in performing the test. In this paper we describe our approach to designing, implementing and evaluating a computer aided assessment system for middle group preschoolers (4-5 years old) from our country. The intended users are preschoolers who have participated in teaching activities supported by edutainment applications. Practitioners from software design and education can benefit from the insights on the user centered design process, content, dealing with mistakes, tasks, and evaluation that we gained during the implementation of this case study.

3 OUR USER CENTERED APPROACH

To design successful Computer Aided Assessment applications, people with different backgrounds should participate. We consider that people from at least the following domains should be involved: education (i.e., cognitive and developmental psychology), design (i.e., interaction, industrial, UX, game), and software engineering, together with preschool children and their parents. As the final users of our intended product are preschoolers, many constraints are placed on the design process. We consider that applying User Centered Design (UCD) gives us the opportunity to build an appropriate evaluation tool. Still, the UCD process needs adaptation so that the final users are present, or at least represented, during all stages. In the following we describe our UCD approach to designing a Computer Aided Assessment application, together with a case study.

3.1 Participants

Two preschool education experts (kindergarten teachers), three software designers (Software Engineering master students), one interaction designer, five children (two boys and three girls, aged 4-5 years) and three parents participated in the design and evaluation process.

3.2 Users’ Needs Identification

To understand the clients’ requirements and to get to know our real users, we conducted user studies through observation and interviews with preschoolers and kindergarten teachers. We started the design process with a meeting between the design team and the kindergarten teachers. In this meeting the teachers presented the requirements for the evaluation software, stating the following:

• the software application should not require internet connection (as it is not always available);

• it should not require additional software licenses;

• it should not require installation;

• the children shall be capable of using it without the help of an adult;

• the application should be built around a story/game;

• the test shall not take longer than 10 minutes;

• the children shall be able to see, hear and answer 15 questions from topics they have studied during the year;

• children shall have an audio helper providing instructions at each step;

• along the whole test, the children shall be able to interact with the interface by clicking buttons and by hovering over an audio helper;

• a child’s progress through the test shall be displayed at each step;

• at the end of the test the result will be displayed;

• a time limit for each question will be allocated;

• children will have the option of requesting additional help by hovering over the audio helper;

• the application will not collect any personal/private data and will comply with the children privacy policy;

• the tasks children are required to perform should cover all the domains from the preschool curricula (if it is not possible, then most of the domains).


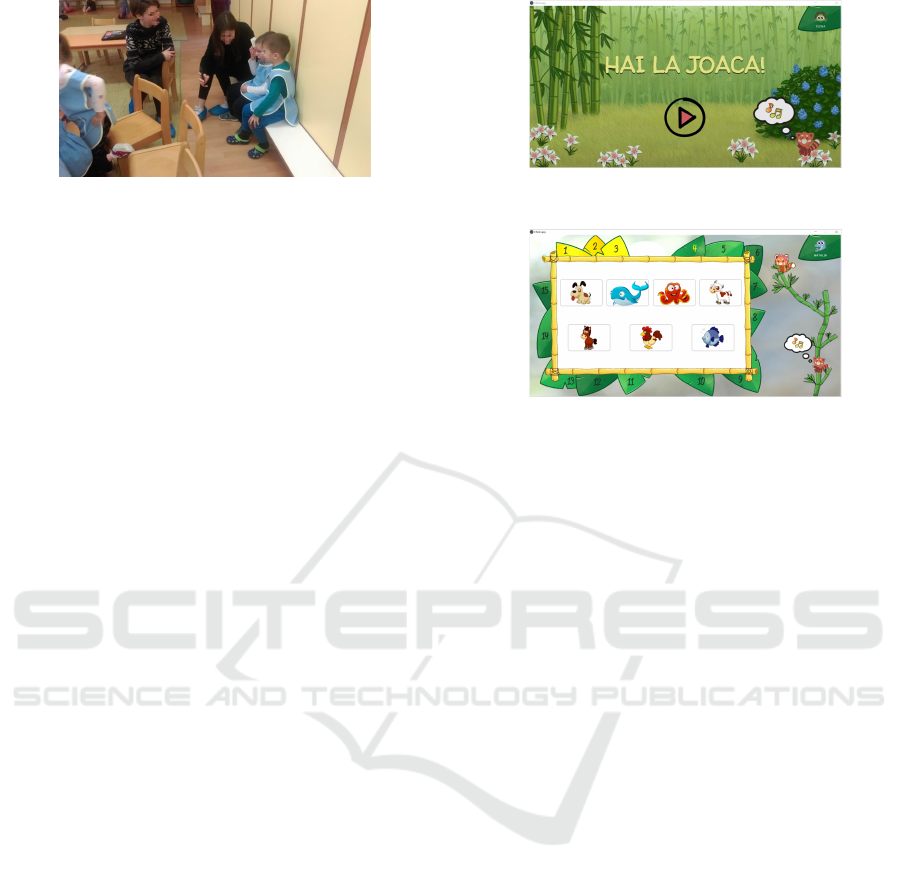

Figure 2: Field study at the kindergarten.

During the interviews with the kindergarten teachers, information about the evaluation goals, methods, and current situation was gathered. Afterwards, the design team visited the kindergarten in order to meet the final users of the system (see Figure 2).

The design team members played with the children, asking them questions in order to get an initial idea of their knowledge of different curriculum domains. Then, the children were invited to play on a laptop using the mouse, so that the digital skills of the young users could be observed. At the same time, the members of the design team studied the curriculum and Bloom’s taxonomy of learning objectives (Bloom et al., 1956) to become familiar with the real users’ capabilities and limitations.

3.3 Design Alternatives and Prototyping

In the next step, the design team proposed design alternatives, which were evaluated only by the kindergarten teachers. In this step the kindergarten teachers, acting as surrogates for the real users, provided feedback on the narrative chosen by the design team to frame the evaluation process, on the proposed characters, and, most importantly, on the tasks proposed for the evaluation goal. Based on the teachers’ feedback, the design team started creating the executable prototype. The prototype was conceived as a quiz, consisting of question-and-answer exercises or sorting tasks, wrapped in game-specific features. The software encourages children to solve the quiz through games and interactive exercises that support them to engage, explore and think. The interface is self-explanatory and designed to enable the children to use it without adult supervision. An audio guide provides information and instructions at every step of the interaction, because preschoolers are not yet able to read the questions on their own. We wanted to ensure that the users would receive help at all times, without having the teachers instruct them in using the application. The scenario, designed to encapsulate the evaluation tasks, features a red panda called Tibi, who has to solve a set of tasks with the child’s help (see Figure 3). If the child correctly solves a task, Tibi climbs higher in the tree, thus getting closer to his friend Lin, who lives at the top of the tree (see Figure 4, on the right-hand side of the screen). Each successfully solved task earns Tibi a step up the tree and a bamboo leaf. In the end, if all tasks are successfully completed, the two friends meet and share the bamboo leaves. If some tasks have not been completed, Tibi does not reach Lin, but he still receives a basket of bamboo leaves (one bamboo leaf for each correctly solved task).

Figure 3: First window in the evaluation application.

Figure 4: Status and progress view in the application.

While taking the test, a user knows at each step his/her current status (the leaves surrounding the test window that correspond to the answered questions change their colour from green to yellow) and actual progress in the game, as Tibi climbs higher in the bamboo tree on the right side of the screen with each correct answer (see Figure 4).

The application does not allow a child to give up when more challenging questions appear. If the child fails to deliver an answer within a time limit of 45 seconds, a hint is presented to him/her (see Figure 5, where the first image that should be selected is marked by 1). At the end of the test, after the child has gone through all of the tasks, the application displays the result as a form of reward: the number of tasks correctly solved, together with a basket of leaves representing the child’s performance in the test (one bamboo leaf for each correctly solved task). The reward differs based on how well the child answered the questions (see Figure 6 and Figure 7).
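The progress and reward rules described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the implementation used in the case study: the class name `QuizProgress` and its attribute names are hypothetical, and only the rules themselves (15 tasks, one bamboo leaf and one climbing step per correctly solved task, Tibi meeting Lin only when every task is solved) are taken from the text.

```python
from dataclasses import dataclass, field
from typing import List

TOTAL_TASKS = 15  # number of questions requested by the teachers


@dataclass
class QuizProgress:
    """Hypothetical model of the status shown to the child during the test."""

    total_tasks: int = TOTAL_TASKS
    results: List[bool] = field(default_factory=list)  # one entry per answered task

    def record(self, correct: bool) -> None:
        """Store the outcome of the task the child has just finished."""
        if len(self.results) >= self.total_tasks:
            raise ValueError("all tasks have already been answered")
        self.results.append(correct)

    @property
    def answered(self) -> int:
        # Number of border leaves that changed from green to yellow.
        return len(self.results)

    @property
    def bamboo_leaves(self) -> int:
        # One leaf per correct task; also the number of steps Tibi has climbed.
        return sum(self.results)

    @property
    def finished(self) -> bool:
        return self.answered == self.total_tasks

    @property
    def tibi_meets_lin(self) -> bool:
        # The two friends meet only if every task was solved correctly.
        return self.finished and self.bamboo_leaves == self.total_tasks
```

For example, a child who solves 14 of the 15 tasks finishes the test with a basket of 14 bamboo leaves, but Tibi does not reach Lin.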


Figure 5: A hint in solving the task.

Figure 6: Evaluation end page when children failed to correctly answer all questions.

Figure 7: Evaluation end with all tasks successfully completed.

3.4 Evaluation

Evaluation of the prototype with real users was performed in the kindergarten. The children could choose whether they would like to interact with the application. First, a boy and a girl decided to test the application, then another boy and two girls interacted with it (see Figure 8).

For evaluation purposes, we allowed more than one child to interact with the application at a time, in order to simulate the think-aloud protocol used with adult users. This way, we extracted information about the children’s thoughts on the game they were exposed to. In the end, the children were rewarded with stickers showing the characters of the narrative and diplomas with elements from the evaluation game.

Data Collection and Analysis. We captured video and audio recordings during the play-testing sessions (see Figure 8) and during the satisfaction evaluation. We also took observational notes during the evaluation session. Using the video and audio recordings and the handwritten notes, we prepared a focus group with the children’s parents. We were interested in how the children described their experience in their familiar environment.

Figure 8: Play-testing sessions with real users.

Results. During the play-testing sessions we focused on the children’s performance. We were interested in the number of tasks the children solved correctly, but also in the number of tasks for which hints had to be presented in order to be solved. Four children actively participated in the test. One child only observed his colleagues interacting, but did not want to participate. Of the 15 tasks the children had to perform, one child successfully completed 14, and the others between 10 and 13. We observed that children encountered difficulties in tasks where the interaction steps were very specific. For example, when the children needed to sort some objects in ascending or descending order, they selected the largest and smallest object, but did not know how to select the elements in the required order. Although hints were provided (by marking the first element of the solution, as shown in Figure 5), we observed that the children did not manage to perform better. We expect that a short video tutorial would improve their performance.

In order to gauge the children’s satisfaction, we used post-test interview sessions in which questions like Would you like to show this game to your best friend? or Would you like to play this game again? were asked. All children answered that they had a good time playing, and two of them took the test again. As we know that children are very willing to please adults, we wanted to validate their answers by asking them to fill in a dragonmeter (see Figure 9). The dragonmeter is similar to a smileyometer (Read and MacFarlane, 2006), but the children in this group use it in their everyday activities to express emotions. The dragonmeter presents the following emotions: brave, calm, sad, happy, bored, furious. Three children chose the happy dragon, and two children chose the brave dragon. To add more detail to our understanding of the children’s view of their experience with the evaluation software, we involved their parents in a focus group. We asked the parents to describe what the children told them about the activity. Three parents agreed to participate in the focus group. All the parents reported that the children mentioned that there was a game with a red panda. The children mentioned different objects (geometric shapes, animals, fruits) and some of the tasks they had to perform. They were very enthusiastic about the stickers and diplomas they received after the session. The parents mentioned that the children were very enthusiastic about their experience. They also mentioned that they would like to participate in further similar activities. Their only concern was the length of time the children were exposed to the computer. Although we assured them that, from our experience, the children did not sit in front of the computer for more than 10 minutes, they were sceptical, as the children’s enthusiasm made them believe the children would never stop playing. From all these assessment methods, we concluded that the children had a great time interacting with our assessment application. They did not mention at any point that they had been evaluated or tested.

Figure 9: Dragonmeter example.

4 DISCUSSION AND LESSONS LEARNED

Designing for children is different from designing for other stakeholders, as there are additional constraints brought by their physical and psychological development. In the following we present how the usual software design phases need adaptation so that the clients and the final users of the system participate in the design process. Also, functionality that requires no additional effort when the users are adults calls for explicit design and implementation decisions when the users are small children who cannot read or write.

4.1 Considerations on the Software Engineering Process

Requirements. The process of gathering information about the children’s characteristics and needs must be adapted. Spending time with the children in their familiar environment provides useful information about their interests, their skills, and their knowledge. The entire activity of requirements gathering should be organized as a play activity, to encourage children’s participation and to help them connect with the design team members. When the goal of the designed product is educational, further assistance from educational experts needs to be integrated in the process. They can provide information about the developmental stages of children, their knowledge of a specific domain and further educational goals. Parents are valuable stakeholders in the design process, as they can provide their view on the children’s knowledge, interests and interaction skills.

Alternative Designs. There are two possible options for evaluating the alternative designs: creating abstract representations of the design solutions and involving only an educational expert to provide feedback, or implementing executable prototypes of the designed solutions so that the preschoolers are able to give feedback. Although it is more comfortable to interact with the educational experts (adults) to identify possible interaction problems, there are some aspects that the education experts cannot predict. A task formulation accepted by the adult users might be misinterpreted by the children. For example, if a task required the children to count the number of objects on the screen, the children always answered verbally, without interacting with the interface. Such situations cannot be identified without observing a child interacting with an executable prototype.

Prototyping. As we have previously mentioned, the most appropriate approach when working with such small children is to merge the design alternatives and prototyping steps. This means that more development effort is involved in the early project steps, but the children can participate to a larger degree in the design process. Involving children in the process is essential, as we consider that the children’s acceptance of and engagement in the interaction is a determining factor in their task performance.

Evaluation. Evaluating computer assisted assessment tools requires multiple aspects to be taken into consideration. An evaluation by the education experts is needed to validate the content, navigation, and task sequences. The evaluation with the children is required to provide information about their interaction with the product, their satisfaction with the interaction and their understanding of the product. As evaluation with preschool children is influenced by their willingness to please adults, multiple methods are needed to identify their opinion. As such, parents should be involved in the evaluation step to provide information on how the children have described their experience of using the product. Automatic evaluation methods that identify children’s emotions during the interaction can also provide meaningful insights.

4.2 Discussions on Implementation Challenges

4.2.1 Error Handling

New challenges occur when trying to create evaluation software for preschoolers, as there are no guidelines in this domain. When paper-based testing is performed, the kindergarten teacher observes the children’s reactions and provides immediate support and help. When a computer aided assessment system is intended to be used by children without adult intervention, the designers should try to integrate the kindergarten teacher’s supporting role into the software. Deciding how long to wait for an answer, how to provide feedback, how to keep the children focused, how many hints to provide and how to score the performance (when children need guidance) is very challenging. A lot of time was spent on deciding how to handle the situation when the child gives a wrong answer. Giving multiple chances to answer a question seemed to be the right approach. But then a new question arose: how many times should the application show the same question? We decided that after an incorrect answer, the application should present the child the same question, together with a hint. If the child gives another incorrect answer, the application goes to the next task. If the child does not answer the question in 45 seconds, then the application automatically goes to the next question. A better approach to this problem would be to identify the child’s emotion and to guide the interaction based on it (if the child gets frustrated, hints would be provided to solve the task; if the child is bored, a new audio message would be presented to help him/her regain focus).
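The retry policy described in this subsection can be written down as a small decision function. The sketch below is an assumption-laden illustration rather than the application’s actual code: the names `Action`, `next_action`, `ANSWER_TIMEOUT_S` and `MAX_ATTEMPTS` are hypothetical, and the rules encoded are only those stated above (one retry with a hint after a wrong answer, moving on after a second wrong answer or after 45 seconds without an answer).

```python
from enum import Enum
from typing import Optional

ANSWER_TIMEOUT_S = 45  # waiting time per question, as stated in the text
MAX_ATTEMPTS = 2       # first try plus one retry with a hint


class Action(Enum):
    NEXT_TASK = "next_task"
    RETRY_WITH_HINT = "retry_with_hint"


def next_action(attempt: int, answer_correct: Optional[bool]) -> Action:
    """Decide what happens after one attempt at a task.

    attempt: 1 for the first try, 2 for the retry with a hint.
    answer_correct: True or False for a given answer, None if the child
    gave no answer within ANSWER_TIMEOUT_S seconds.
    """
    if attempt < 1 or attempt > MAX_ATTEMPTS:
        raise ValueError("attempt must be 1 or 2")
    if answer_correct is None:
        # No answer before the timeout: move on to the next question.
        return Action.NEXT_TASK
    if answer_correct:
        return Action.NEXT_TASK
    if attempt < MAX_ATTEMPTS:
        # First wrong answer: show the same question again with a hint.
        return Action.RETRY_WITH_HINT
    # Second wrong answer: move on.
    return Action.NEXT_TASK
```

Keeping the policy in a single pure function makes it easy to adjust the number of attempts or the timeout later, for instance if emotion detection were added as suggested above.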

4.2.2 User Authentication

Another essential aspect in developing assessment tools is the authentication procedure. As preschoolers cannot read or write, it is very difficult to identify the user taking the test. Our approach was to take the symbols (avatars) used on the children’s objects in the classroom, and it seemed to be an appropriate one (see Figure 10). Still, one cannot be sure that a child will not use (intentionally or by mistake) another child’s identifier. Another solution we envision is to use face recognition algorithms to correctly establish the user taking the test.

Figure 10: Authentication window.

5 CONCLUSIONS AND FUTURE WORK

In this paper we have presented our experience in building and evaluating computer aided assessment applications for preschoolers. We have presented and analyzed the difficulties we encountered during the design process. In the future, we will focus on the following aspects:

• creating a larger repository of questions;

• assessing the validity of the developed computer aided assessment tool;

• developing and integrating an automatic face recognition module to precisely associate test results with the child taking the test;

• improving the satisfaction evaluation by using an automatic tool that identifies children’s emotions.

ACKNOWLEDGMENTS

We thank all the children and their parents who participated in the design and evaluation of the application. We would also like to thank the master students who designed, implemented, evaluated, and redesigned the application for their patience, effort, and passion.

REFERENCES

Barnes, S. K. (2010). Using computer-based testing with

young children. Nera Conference Proceedings.


Bloom, B. S., Engelhart, M. D., Furst, E. J., and Hill, W. H. (1956). Taxonomy of Educational Objectives: The Classification of Educational Goals. Handbook 1: Cognitive Domain. David McKay, New York.

Bukova, I. (2017). UNESCO working group on education: Digital skills for life and work.

Chrysafiadi, K., Troussas, C., and Virvou, M. (2018). A framework for creating automated online adaptive tests using multiple-criteria decision analysis. 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), pages 226–231.

Crescenzi, L. and Grane, M. (2016). An analysis of the interaction design of the best educational apps for children aged zero to eight. Comunicar, 46(3-4):77–85.

EURYDICE (2019). Assessment in early childhood education and care. Text.

Fraillon, J., Ainley, J., Schulz, W., Friedman, T., and Gebhardt, E. (2016). Preparing for life in a digital age: the IEA international computer and information literacy study international report.

Guran, A. M., Cojocar, G., and Moldovan, A. (2019). Applying UCD for designing learning experiences for Romanian preschoolers. A case study. In INTERACT (4), pages 589–594. Springer.

International Computer and Information Literacy Study (2018). The IEA international computer and information literacy study. International report. https://www.iea.nl/icils. Accessed: 2019-01-02.

Kiss, M. (2017). EPRS in-depth analysis: Digital skills in the EU labour market.

Krouska, A., Troussas, C., and Virvou, M. (2018). Computerized adaptive assessment using accumulative learning activities based on revised Bloom’s taxonomy. In Knowledge-Based Software Engineering: 2018, Proceedings of the 12th Joint Conference on Knowledge-Based Software Engineering (JCKBSE 2018) Corfu, Greece, pages 252–258.

Nesset, V. and Large, A. (2004). Children in the information technology design process: A review of theories and their applications. Library & Information Science Research, 26(2):140–161.

Piaget, J. (1970). The origin of Intelligence in the Child. Routledge & Kegan, London.

Read, J. C. and MacFarlane, S. (2006). Using the fun toolkit and other survey methods to gather opinions in child computer interaction. In Proceedings of the 2006 Conference on Interaction Design and Children, IDC ’06, pages 81–88, New York, NY, USA. ACM.

Robertson, J. W. (1994). Usability and children’s software: a user-centred design methodology. Journal of Computing in Childhood Education, 5(3-4):257–271.

Sim, G., Holifield, P., and Brown, M. (2014). Implementation of computer assisted: Lessons from the literature. ALT-J., 12(3):215–229.

Sim, G. and Horton, M. (2005). Performance and attitude of children in computer based versus paper based testing. online.

Troussas, C., Chrysafiadi, K., and Virvou, M. (2019). An intelligent adaptive fuzzy-based inference system for computer-assisted language learning. Expert Syst. Appl., 127:85–96.

Troussas, C., Krouska, A., and Sgouropoulou, C. (2020). Collaboration and fuzzy-modeled personalization for mobile game-based learning in higher education. Comput. Educ., 144.

UNESCO (2011). Digital literacy in education.
