stARS: Proposing an Adaptable Collaborative Learning Environment to

Support Communication in the Classroom

Tommy Kubica, Ilja Shmelkin, Robert Peine, Lidia Roszko and Alexander Schill

Faculty of Computer Science, Technische Universität Dresden, Dresden, Germany

Keywords:

Learning Environment, Audience Response System, Backchannel System, stARS.

Abstract:

The use of technology provides a powerful opportunity to support classic classroom scenarios. In addition

to improving the presentation of a lecturer’s content, technical tools are able to increase the communication with

the students or between students. Although many approaches exist that are able to support such interactions,

the lecturer has to adjust his/her teaching strategy to the corresponding system. To overcome this problem,

our goal is to allow lecturers to create their personal scenarios in an intuitive manner. As a solution, we

propose an approach called stARS (scenario-tailored Audience Response System) that builds on top of a

uniform (meta-)model. It provides a graphical editor as a user interface to create customized application

models that represent teaching scenarios. In addition to classic Audience Response functions such as learning

or survey questions, collaborative functionality is provided – specifically, group formations with associated

interactions within these groups (e.g., discussion or voting functionalities) are examined. In order to evaluate

our approach, in the first step, a user study was conducted to reason about the average user’s modeling abilities

with the graphical editor. Next, we aim to evaluate both the functionality and the opportunities of our created

prototype in real-life scenarios.

1 INTRODUCTION

In recent years, technology has increasingly found its

way into teaching. While technical tools for

presenting content to the students are omnipresent, the

potential of technology to improve communication in

the classroom is still a subject of research. Although a

lot of investigations have been conducted in the past,

e.g., (Lingnau et al., 2003) or (Dragon et al., 2013),

they were limited to small, non-anonymous scenarios.

Current approaches such as Audience Response

Systems, Classroom Response Systems, or Backchannel Systems overcome those limitations by aiming to involve students anonymously using their personal

mobile devices. They allow students to answer pre-

pared questions or to ask their own questions during

the ongoing lecture that can be discussed with other

students. A variety of systems exist, e.g., as listed by

(Hara, 2016), (Meyer et al., 2018) or (Kubica et al.,

2019a). As we do not need to distinguish different

types of systems in this paper, we will use the generic

term learning environments from now on.

Although the usage of these systems provides

a lot of promising opportunities to improve classroom

teaching (Nikou and Economides, 2018), they

suffer from heterogeneity. Instead of implementing the teaching strategy they have in mind, lecturers have to adapt their strategy to the system’s limited functional scope and its predefined settings, e.g., the number of attempts a student gets to answer a question correctly.

Accordingly, our goal is to allow lecturers to

configure the system’s functionality to their personal

teaching strategy. As a solution, we present the prototype of an approach called stARS¹, which gives lecturers the opportunity to (1) adapt function blocks by

different parameters, (2) build sequences of function

blocks and add conditions in order to define differ-

ent learning paths, (3) link function blocks to coop-

erate and (4) support collaboration between students

by introducing novel function blocks for group forma-

tions and associated interactions. A user study will be

discussed, showing that users with different modeling

abilities are able to create customized scenarios. In

addition, the usage within realistic scenarios is mo-

tivated to show the applicability and opportunities of

our presented approach.

¹ The running prototype is provided on: https://stars-project.com/ (accessed 3/19/20).


Kubica, T., Shmelkin, I., Peine, R., Roszko, L. and Schill, A.

stARS: Proposing an Adaptable Collaborative Learning Environment to Support Communication in the Classroom.

DOI: 10.5220/0009489103900397

In Proceedings of the 12th International Conference on Computer Supported Education (CSEDU 2020) - Volume 2, pages 390-397

ISBN: 978-989-758-417-6

Copyright © 2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The remainder of this paper is structured as fol-

lows. Section 2 presents related work while specif-

ically targeting the customization of functionality in

these systems. In section 3, we present preliminary

work on the (meta-)model and the basis of the system’s concept. Next, section 4 introduces an infrastructure and describes each part of it in more detail.

In addition, the opportunities provided for the lecturer

are motivated. Section 5 presents the results retrieved

from an initial user study and discusses the evalua-

tion within realistic scenarios. Finally, section 6 con-

cludes this paper and discusses the limitations and fu-

ture work to be done.

2 RELATED WORK

Current literature presents many systems that aim to support communication in classic classroom

teaching, e.g., as listed by (Hara, 2016), (Meyer et al.,

2018), or (Kubica et al., 2019a). In order to adjust

the functional scope to the lecturer’s personal teach-

ing strategy, different approaches exist, which will be

presented in the following.

The most straightforward way to customize the

range of functions is to select specific functions. E.g.,

in Tweedback², the lecturer can enable or disable the features called “Chatwall”, “Quiz” and “Panic-Buttons”. Furthermore, GoSoapBox³ allows a more

fine-granular selection of the system’s functionality

by extending the selection of its core features called

“Barometer”, “Quizzes”, “Polls”, “Instant Polling”,

“Discussion” and “Social Q&A” by additional fea-

tures (or filters), namely a “Profanity Filter”, “Math

Formatting” and “Names Required”. In order to

help the lecturer to choose the system’s functional

scope, ARSnova⁴ allows selecting predefined use

cases. E.g., by selecting “Clicker Questions”, all sim-

ple question types such as Multiple Choice, Single

Choice, Yes – No, Likert Scale and Grading are en-

abled. Furthermore, (Kubica et al., 2017) investigates

a proposal-based function selection that is able to es-

tablish a connection between the considered scenario

and the system’s functional scope. The scenario is

characterized by entering values for predefined influence factors, e.g., the number of students participating

in the lecture. Afterwards, a suitable functional scope

is proposed that can be adjusted manually to match

the lecturer’s personal preferences.

Although the mentioned function selections help to tailor the functional scope to a certain degree, they are constrained by their limited functional scope as well as by predefined settings, e.g., the number of attempts to answer a question or whether the correct answer is displayed to the students as feedback.

² https://tweedback.de/?l=en (accessed 3/19/20).

³ https://www.gosoapbox.com/ (accessed 3/19/20).

⁴ https://arsnova.eu/mobile/ (accessed 3/19/20).

(Schön, 2016) presents an approach that goes beyond a static functional scope by proposing a new generic model that allows customized teaching scenarios to be defined. The model consists of objects with attributes, and rules with conditions and actions. Because the model is highly generic, almost any scenario can be created. The model was implemented in MobileQuiz2⁵ and evaluated in several realistic scenarios. During the evaluation, the modeling task turned out to be very complex. Although a scenario editor did help the users to model valid scenarios, it could not make the modeling process easier to understand. For this reason, a didactic expert is required to define the application model of a custom scenario when MobileQuiz2 is used. Another issue became apparent during execution: because the generic model produces deeply nested objects, performance degrades as soon as the number of participants rises.

In summary, two directions can be recognized: On

the one side, approaches exist targeting the system’s

functional scope by function selections. On the other

side, a generic model was proposed that is able to ex-

press scenarios without predefined elements. Nev-

ertheless, both groups have their individual limita-

tions, which motivated us to create an approach that

overcomes those and surpasses existing approaches.

In order to give any lecturer the opportunity to tailor the functional scope to the teaching strategy he/she has in mind, collaborative function blocks will be provided, which have so far only been investigated in

smaller, non-anonymous scenarios, e.g., as presented

by (Lingnau et al., 2003) or (Dragon et al., 2013).

3 PRELIMINARY WORK

This section presents preliminary work that was done

in advance of the prototype creation. First, the concept of an adaptable learning environment is described. Second, the foundation of our concept is presented, namely a (meta-)model for defining elements,

parameters, and rules.

3.1 Concept of an Adaptable Learning

Environment

Our main concept combines the strengths of both approaches: the application models with static functional scopes and the flexible generic models that allow for highly customizable scenarios. In addition, it focuses on solving the respective limitations of these approaches. To this end, it builds on ideas derived from two concepts, namely Model-Driven Software Development (MDSD) and End User Development (EUD).

[Figure 1: diagram with the labels (meta-)model, function blocks and parameters, scenario editor, application model, customized scenario, runtime environment and functional scope, and the relations “creates” and “adapts”.]

Figure 1: The concept of an adaptable learning environment that allows lecturers to create and execute customized scenarios in order to support their personal teaching strategies (Kubica, 2019).

⁵ http://www.mobilequiz.org (accessed 3/19/20).

MDSD is described as the generation of software from models, where the model is significantly easier to understand than the generated code. The syntax of such models and the interrelationships between elements are typically defined by (meta-)models (Stahl et al., 2007).

According to (Sendall and Kozaczynski, 2003),

different types of methods to transform the model to a

running software can be recognized, whereby the in-

termediate representation, meaning that the model is

exported in a standardized form (e.g., XML or JSON)

that can be used by external tools, is the most promis-

ing option for our concept.
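To make the idea of an intermediate representation concrete, the following is a minimal sketch of exporting an application model to JSON and reading it back. The class and field names (`FunctionBlock`, `Transition`, `ApplicationModel`, `id`, `kind`, `parameters`) are assumptions chosen for illustration and are not taken from the stARS (meta-)model; only the `answerFeedback` parameter name appears in this paper.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class FunctionBlock:
    # Hypothetical fields: an identifier, a block kind and its parameters.
    id: str
    kind: str
    parameters: dict = field(default_factory=dict)


@dataclass
class Transition:
    # Hypothetical fields: identifiers of the connected elements.
    source: str
    target: str


@dataclass
class ApplicationModel:
    name: str
    blocks: list = field(default_factory=list)
    transitions: list = field(default_factory=list)


def export_model(model: ApplicationModel) -> str:
    """Serialize the model to JSON so that external tools can consume it."""
    return json.dumps(asdict(model), indent=2, sort_keys=True)


def import_model(text: str) -> ApplicationModel:
    """Rebuild the model from its JSON form."""
    raw = json.loads(text)
    return ApplicationModel(
        name=raw["name"],
        blocks=[FunctionBlock(**b) for b in raw["blocks"]],
        transitions=[Transition(**t) for t in raw["transitions"]],
    )


model = ApplicationModel(
    name="demo lecture",
    blocks=[FunctionBlock("q1", "learning_question", {"answerFeedback": True})],
    transitions=[Transition("start", "q1"), Transition("q1", "end")],
)
exported = export_model(model)
```

Because the exported form is plain JSON, an editor and a runtime written in different languages could exchange it without sharing code, which is the property that makes this transformation option attractive.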

Motivated by the results on modeling from (Schön, 2016), one major goal of our concept will be

to provide an intuitive opportunity for end-users (i.e.,

lecturers with different abilities in computer science)

to customize their scenarios. This is strongly related

to the discipline of EUD, which is defined as “a set

of methods, techniques, and tools that allow users of

software systems, who are acting as non-professional

software developers, at some point to create, mod-

ify or extend a software artifact” (Lieberman et al.,

2006). E.g., instead of entering code as it is done

in classic programming languages, the user will be

able to compose visual elements, e.g., blocks, and

connect or link them, resulting in a reduced complex-

ity. Our concept adopts this idea in a graphical editor

that gives lecturers the opportunity to customize their

teaching scenarios (resulting in application models).

In order to execute these application models, our

concept includes a runtime environment that needs to

interpret those and target its functional scope and set-

tings accordingly. Since performance is key in learn-

ing environments, especially during real-time func-

tionality (e.g., learning or survey questions), it has to

be designed in a way that can handle sudden changes

in resource demand; hence we propose a scalable in-

frastructure.

To summarize, the overall concept consists of differ-

ent components. First, a (meta-)model has to be cre-

ated that defines the system’s elements with their pa-

rameters and rules for relationships. Next, a graphical

editor, which builds on top of the (meta-)model, will

support the lecturer in creating customized scenarios

(i.e., application models). Finally, a scalable infras-

tructure has to be created, which is able to interpret

these application models. Figure 1 summarizes the

concept in a graphical manner.

3.2 stARS (Meta-)model

The processes which take place during a lecture are

similar to the concept of workflows. As the lecture

moves on, events happen (e.g., students give answers

to questions, or a group session takes place) that determine how the lecture continues.

The previously presented concept is centered around a (meta-)model, which allows workflow models to be created. Each workflow (i.e., the derived application model) represents one specific lecture and can be created by the lecturer with the help of a graphical editor. Which workflow elements exist and how they connect to each other is defined explicitly by the (meta-)model. Furthermore, each element has a set of parameters that describe it more concretely. As workflows have similar structural elements across multiple known modeling languages, our (meta-)model incorporates those conventions. Therefore, each derived workflow uses exactly one start node and an arbitrary number of end nodes. In between, an arbitrary number

lecture. All elements (i.e., start nodes, end nodes and

function blocks) are connected by transitions, which

determine the order of the activities of a lecture.
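The structural rules described above can be sketched as a small validation routine. This is only an illustration under stated assumptions: the element kinds `"start"`, `"end"` and `"block"`, the `Element` class and the function `validate_workflow` are hypothetical names, and the check that every element is connected is our reading of "all elements are connected by transitions", not a rule quoted from the (meta-)model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Element:
    id: str
    kind: str  # hypothetical labels: "start", "end" or "block"


def validate_workflow(elements, transitions):
    """Return a list of problems; an empty list means the structure is valid.

    elements: iterable of Element
    transitions: iterable of (source_id, target_id) pairs
    """
    problems = []
    ids = {e.id for e in elements}

    # Each derived workflow uses exactly one start node.
    starts = [e for e in elements if e.kind == "start"]
    if len(starts) != 1:
        problems.append(f"expected exactly one start node, found {len(starts)}")

    # Transitions may only connect elements that exist in the model.
    for source, target in transitions:
        for endpoint in (source, target):
            if endpoint not in ids:
                problems.append(f"transition references unknown element {endpoint!r}")

    # Assumption: every element takes part in at least one transition.
    connected = {endpoint for pair in transitions for endpoint in pair}
    for e in elements:
        if e.id not in connected:
            problems.append(f"element {e.id!r} is not connected by any transition")

    return problems
```

Returning a list of problems instead of raising on the first one would let an editor show all issues of a model at once.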

During design time, a lecturer cannot foresee how

a lecture will proceed, hence different types of transi-

tions exist:

• The OR-fork is used when multiple paths in the workflow can be taken based on the outcome of a connected function block,

• AND-forks are used to design parallel activities by splitting the control flow into several sub-flows,

• and a join connects several sub-flows again.
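The three transition types can be illustrated with a few small functions. This is a sketch, not the stARS runtime: the function names are hypothetical, and two behaviors are assumptions made for the example, namely that an OR-fork follows the first branch whose condition holds and that a join continues once all incoming sub-flows have finished.

```python
def or_fork(branches, outcome):
    """Choose one path based on the outcome of the connected function block.

    branches: list of (condition, target) pairs, checked in order.
    Assumption: the first branch whose condition holds is taken.
    """
    for condition, target in branches:
        if condition(outcome):
            return [target]
    return []


def and_fork(targets):
    """Split the control flow: all targets become active in parallel."""
    return list(targets)


def join_ready(incoming, finished):
    """Assumption: a join continues once every incoming sub-flow has finished."""
    return set(incoming) <= set(finished)


# Example: the outcome is the share of correct answers to a question.
branches = [
    (lambda share: share < 0.5, "repeat_question"),
    (lambda share: True, "next_topic"),
]
```

With these branches, a low share of correct answers leads to `repeat_question`, while any other outcome falls through to `next_topic`.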

Each function block represents a unique functionality

of a learning environment. In general, we distinguish

seven groups of functions, each having several sub-

functions and a number of parameters for configura-

tion:

• learning questions have one or more correct an-

swer(s) and can be solved by the students,

• survey questions are used to poll an opinion of the

students,

• an open discussion allows students to ask their own questions and discuss them with other students,

• closed feedback gives students the opportunity to

provide instant feedback on predefined feedback

dimensions,

• group interactions form groups of students and

provide interactions within these groups,

• result presentation displays a result on students’ devices,

• and media presentation is used to display media content on those devices.

Finally, a pause block exists, which allows lecturers to build breaks into their workflows and enables the modeling of a complete 90-minute lecture. A more detailed description of the (meta-)model’s structure and of the (sub-)functions and parameters it provides is presented in (Kubica et al., 2019b).

4 stARS PROTOTYPE

As motivated by (Bruff, 2019), each lecturer coming

to class has a teaching strategy in mind. Consequently, lecturers want to use the learning environment in a way that supports this strategy. This section introduces our stARS prototype, which aims to accomplish this task by implementing the previously

described concept. First, a scalable infrastructure is

presented, which can handle sudden changes in re-

source demand by design. Afterward, each part of

this infrastructure is described in more detail. Last,

the options for the lecturer are summarized.

4.1 Infrastructure

System performance is key to providing a good user experience. Although teaching scenarios can range from simple classroom teaching (i.e., 10 to 30 students) to crowded lectures (i.e., 1000 students or more), a system has to be able to deliver constant performance, even when multiple sessions take place simultaneously. The performance of monolithic applications can suffer under heavy load (cf. the “Slashdot effect”). Therefore, to provide a consistently good user experience, it is necessary to create a scalable distributed infrastructure that is able to run multiple application models simultaneously without interference. The infrastructure was proposed in (Kubica et al., 2019b) and consists of:

• A backend server to provide access to the

(meta-)model, the database and administrative

functions,

• a graphical editor frontend for the lecturer to cre-

ate application models and start or stop scenarios,

• an arbitrary number of cloud servers, which run the individual application models based on a dockerized runtime,

• and a user interface for the students to participate

in scenarios.

4.2 Backend Server

The backend represents the main entry point of ad-

ministrative components. It allows administrators to

manage the registration of cloud servers, i.e., new

cloud servers can be added, or existing ones can be

removed. Furthermore, it allows lecturers to create

instances of their customized application models (the

result of the modeling task using the graphical edi-

tor frontend). The retrieved model is checked against

the (meta-)model to ensure valid sequences. During

startup, the created instance is executed as a container

on a cloud server. An appropriate server is selected automatically to avoid overloading individual servers. In addition to administrative functions, the backend handles user authentication, i.e., the retrieval of user tokens for both the backend and running instances. Last, it connects to a scalable database that stores the data generated during the execution of instances. This ensures that instances can be resumed after a failure, e.g., a server crash.
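How an automatic server selection might avoid overloading individual servers can be sketched with a least-loaded strategy. The paper does not state which strategy the backend uses, so this is an assumed policy; `CloudServer`, its `capacity` and `running` fields, and the functions below are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class CloudServer:
    name: str
    capacity: int     # hypothetical: maximum number of containers
    running: int = 0  # containers currently running

    @property
    def load(self) -> float:
        """Fraction of the capacity that is in use."""
        return self.running / self.capacity


def select_server(servers):
    """Pick the registered server with the lowest relative load."""
    candidates = [s for s in servers if s.running < s.capacity]
    if not candidates:
        raise RuntimeError("no cloud server has free capacity")
    return min(candidates, key=lambda s: s.load)


def start_instance(servers):
    """Place a new instance and return the name of the chosen server."""
    server = select_server(servers)
    server.running += 1
    return server.name


servers = [CloudServer("cloud-a", capacity=4, running=3),
           CloudServer("cloud-b", capacity=4, running=1)]
```

Using relative load rather than the absolute container count keeps the sketch meaningful when servers of different sizes are registered.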

4.3 Editor Frontend

The graphical editor frontend serves as a user interface for lecturers. It allows them to customize their

scenarios by composing different visual elements

that represent the function blocks introduced by the

(meta-)model. The main focus is the creation of an

intuitive solution that lecturers with varying modeling

abilities are able to use. For this reason, the concept

uses ideas derived from the User-Centered Design


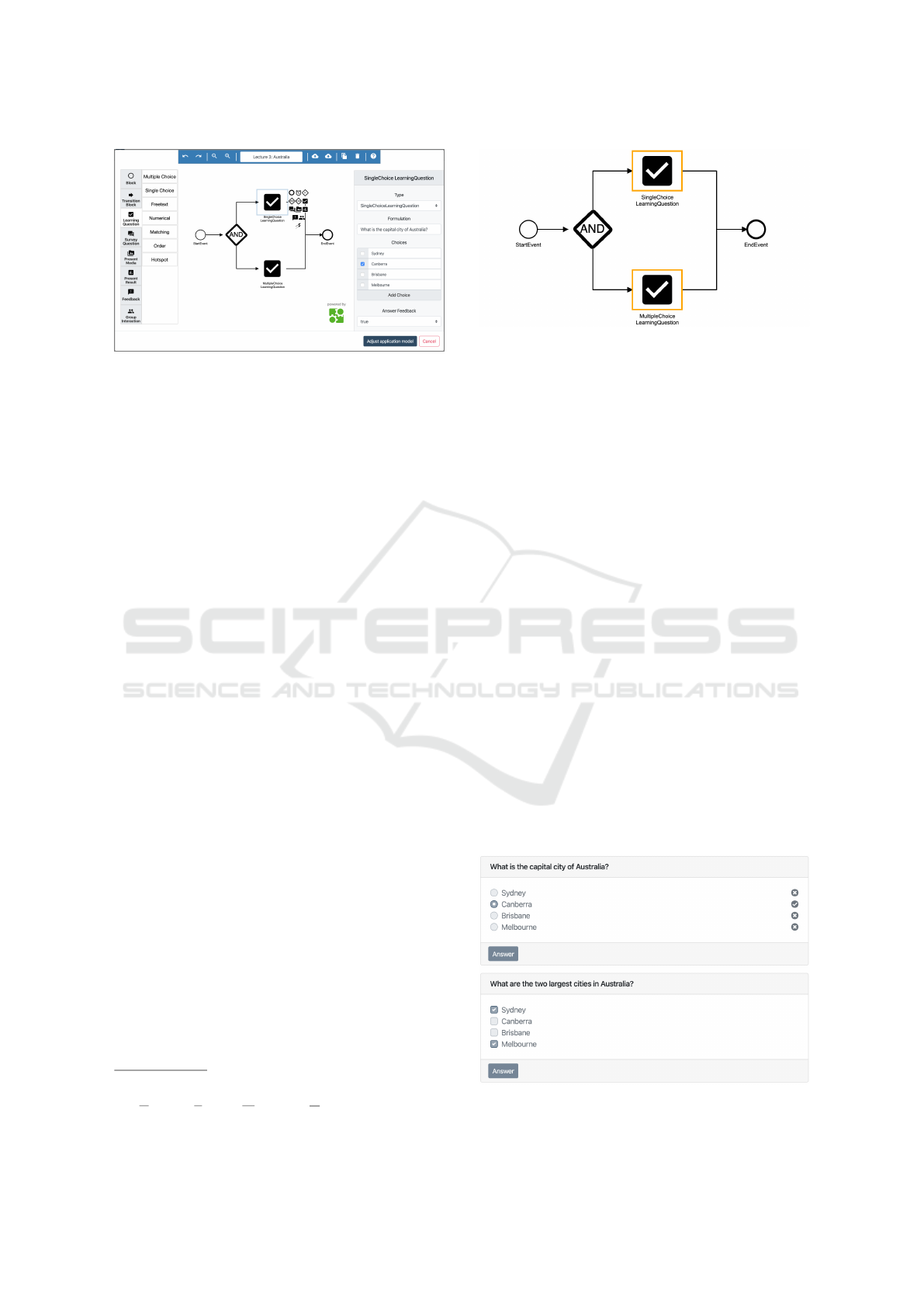

Figure 2: The graphical editor to create custom scenarios.

(UCD) approach that describes “design processes in

which end-users influence how a design takes shape”

(Abras et al., 2004). During an initial survey, the

opinion of users for basic components of the editor

(e.g., the position of the main menu or the strategy

for inserting elements) was requested. Based on the

results, a first conceptual prototype was developed.

Open questions were discussed in user interviews,

e.g., what the representation of elements should look like. The results retrieved from these interviews were

combined in a final concept and implemented using

bpmn-js⁶ (a rendering toolkit and web modeler for BPMN 2.0⁷), as described by (Roszko, 2019) in more

detail. A screenshot of an extended version of the ed-

itor is displayed in Figure 2.

4.4 Cloud Servers

The application infrastructure supports the integration of an arbitrary number of cloud servers, whereby the number of cloud servers grows as the resource demand increases. Each cloud server is able to run multiple instances of a runtime as a Docker container,

which, in turn, executes an application model spec-

ified by a lecturer. Each runtime allows a set of

specified people (i.e., administrator, lecturer, speci-

fied students) to manage answers, finish currently ac-

tive function blocks or retrieve results for a set of

questions. Each runtime maintains a WebSocket con-

nection to the backend as well as to each connected

user (e.g., lecturer, student) to inform users in real-

time about changes in the currently executed applica-

tion models. While executing an application model,

the runtime processes each function block as well as

each transition individually and decides, based on the

rules provided by the (meta-)model, which functions

have to be executed and provided to the user.
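The real-time notification of connected users can be illustrated without a network stack. In the following sketch, plain callbacks stand in for WebSocket connections; the `Runtime` class, its methods and the message format are assumptions made for illustration and do not describe the actual stARS runtime.

```python
class Runtime:
    """Tracks the active function blocks and notifies connected users."""

    def __init__(self):
        # user id -> callback; each callback stands in for a WebSocket send.
        self._listeners = {}
        self.active_blocks = set()

    def _state_message(self):
        # Hypothetical message format.
        return {"type": "state", "active": sorted(self.active_blocks)}

    def connect(self, user_id, send):
        """Register a user and send the current state right away."""
        self._listeners[user_id] = send
        send(self._state_message())

    def disconnect(self, user_id):
        self._listeners.pop(user_id, None)

    def _broadcast(self):
        message = self._state_message()
        for send in list(self._listeners.values()):
            send(message)

    def activate(self, block_id):
        """A function block becomes active, e.g., a question is unlocked."""
        self.active_blocks.add(block_id)
        self._broadcast()

    def finish(self, block_id):
        """The lecturer finishes a currently active function block."""
        self.active_blocks.discard(block_id)
        self._broadcast()
```

Sending the full state on every change keeps late-joining clients and reconnecting clients consistent at the cost of slightly larger messages.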

⁶ https://bpmn.io/toolkit/bpmn-js/ (accessed 3/19/20).

⁷ Business Process Model and Notation – A modeling language for workflows.

Figure 3: The display of the current scenario with its active

function blocks.

4.5 User Interface

The user interface is the entry point for users to communicate with the system. Depending on the user role, two views are distinguished: the lecturer view and the student view.

The lecturer view displays a list of created instances with their respective application models. In addition, it allows new instances to be created using the editor presented in subsection 4.3. Clicking on a specific instance will open a dashboard for managing

it. The created application model is displayed on the

top, and the currently active function blocks are high-

lighted in color, as depicted in Figure 3.

In the student view, these currently active function

blocks are displayed. According to the set parameters,

their respective functionality is adapted. E.g., a learn-

ing question with the parameter answerFeedback set

to true will display immediate feedback after an an-

swer has been given, as displayed in Figure 4. If the

parameter is set to false (its default value), students do not receive feedback on the correctness of their answers until the lecturer evaluates the

question. Setting these parameters properly enables

the implementation of different teaching strategies.
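The effect of the answerFeedback parameter can be sketched as follows. Only the parameter name and its default value of false come from the description above; the function `answer_response`, the response format and the use of sets of selected options are assumptions for illustration.

```python
def answer_response(parameters, given, correct):
    """Build the response a student receives after submitting an answer.

    parameters: parameter dictionary of the learning question
    given, correct: collections of selected and correct options
    """
    # answerFeedback defaults to False, as described in the text.
    show_feedback = parameters.get("answerFeedback", False)

    response = {"accepted": True}  # hypothetical response format
    if show_feedback:
        # Immediate feedback: tell the student whether the answer was correct.
        response["correct"] = set(given) == set(correct)
    return response
```

With the default setting the response carries no correctness information, so the evaluation stays with the lecturer until the question is discussed.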

Figure 4: Answering two questions with different settings of the answerFeedback parameter.


Figure 5: The result view of the questions displayed within

Figure 4. Clicking on a specific container will open an ex-

tended view for evaluation.

In the lecturer view, the real-time evaluation of the

currently active function blocks will be presented below the application model. For function blocks that collect answers from students, e.g., learning or survey questions, the results are presented using charts,

as depicted in Figure 5. Clicking on those overview

items will open a modal for the presentation and dis-

cussion of these questions. For other function blocks,

this view varies, e.g., for closed feedback, a modified

student view with additional buttons for managing the

discussions is displayed.

4.6 Options for the Lecturer

In order to configure the learning environment in a

way that it supports the lecturer’s personal teaching

strategy, different options exist and are presented in

the following.

First, lecturers are able to adapt each function

block to their special needs by setting different pa-

rameters. E.g., lecturers that will discuss the results

of a question with their students will disable the an-

swerFeedback, while a lecturer using those questions

as a self-test for students will not.

Second, lecturers can build sequences of function

blocks and define conditions that allow for the realiza-

tion of different learning paths. E.g., if the results of a

question are not satisfying, another question could be

displayed that will uncover the reason for the answers.

Third, as already indicated in the last example, function blocks can be linked to cooperate. E.g.,

the results of one or a set of questions can serve as

the condition for different learning paths, or as the input of result blocks that display results on the students’ devices.
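Using the results of a question as the condition for different learning paths can be sketched as a simple threshold rule. The threshold of 0.6, the block names `follow_up_question` and `result_presentation`, and both function names are hypothetical; a lecturer would define the actual condition in the editor.

```python
def correct_ratio(answers, correct):
    """Share of students who gave the correct answer (0.0 if nobody answered)."""
    if not answers:
        return 0.0
    return sum(1 for a in answers if a == correct) / len(answers)


def next_block(answers, correct, threshold=0.6):
    """Choose the next function block based on the results of a question.

    Assumption: results below the threshold count as "not satisfying" and
    lead to a follow-up question; otherwise the results are presented.
    """
    if correct_ratio(answers, correct) < threshold:
        return "follow_up_question"
    return "result_presentation"
```

The same result values could also feed a result block directly, which is the second kind of link mentioned above.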

The fourth and final described option is the ad-

dition of novel collaborative functionality, motivated

by the problem that peer or small group discussions

are hard to accomplish in large lectures. We present

an approach to move those discussions to an online

Figure 6: An example for a group discussion with partici-

pants having randomly generated pseudonyms.

learning environment. In addition to the support of

a class-wide open discussion functionality, a group

builder is introduced that allows the formation of

groups based on different algorithms. Within these

groups, different group interactions can be defined,

e.g., group discussions or voting. An example of a

group interaction is depicted in Figure 6 and visual-

izes the anonymous discussion between two students

that answered a previous question differently.
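One possible group formation algorithm is to pair students who answered a previous question differently, as in the example of Figure 6. The following sketch is an assumed greedy strategy, not the algorithm used by the stARS group builder; the function name and the data layout are hypothetical.

```python
from collections import defaultdict


def pair_by_different_answers(answers):
    """Pair students whose answers differ.

    answers: dict mapping a student (pseudonym) to the given answer.
    Returns (pairs, unpaired). Greedy strategy: repeatedly take one student
    from each of the two largest remaining answer groups.
    """
    buckets = defaultdict(list)
    for student, answer in sorted(answers.items()):
        buckets[answer].append(student)

    pairs = []
    while True:
        non_empty = sorted((b for b in buckets.values() if b),
                           key=len, reverse=True)
        if len(non_empty) < 2:
            break  # fewer than two distinct answers left
        first, second = non_empty[0], non_empty[1]
        pairs.append((first.pop(), second.pop()))

    unpaired = sorted(s for b in buckets.values() for s in b)
    return pairs, unpaired
```

Students who cannot be matched, for example when everybody gave the same answer, are returned separately so that another group interaction can be offered to them.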

5 EVALUATION

Since this work consolidates multiple different types

of contributions based on several submissions, different evaluation strategies apply to each of them.

The following section summarizes those strategies.

5.1 Paper-based Evaluation of the

(Meta-)model

As the (meta-)model was not a fundamentally new idea but incorporated ideas from the related work,

the evaluation was primarily conducted to test if the

weak points of prior approaches (i.e., very compli-

cated modeling process, insufficient modeling capa-

bilities) were still present. As the prototypical im-

plementation of the system’s backend and frontend as

well as the graphical editor were still in development,

a paper-based approach was chosen to simulate the

system’s capabilities. The evaluation was executed

by 20 participants who predominantly agreed that the

presented (meta-)model was easy to use and intuitive

and that they therefore would use it in future teaching

scenarios (Kubica et al., 2019b). During the evalu-

ation, it was not feasible to test all functions which

the (meta-)model provides. Therefore, a representa-

tive subset was chosen that was accepted very well by

the participants of the study, although they had not used similar functions before.


5.2 Evaluation of the Prototypical

Graphical Editor

In (Roszko, 2019), the prototype of the graphical edi-

tor was evaluated. The evaluation included five parts

and was executed by 19 participants as follows: First,

the participants were asked to fill in general informa-

tion about their prior knowledge on graphical editors

and the concept of workflows. Second, three different

tasks were presented to the participants, which were to be solved using the prototype. Each task checked specific abilities: While the participants were asked to insert, connect and parameterize function blocks in the first task, they had to use abstract function blocks in a second, more complex task and delete and adjust

function blocks in the final task. Third, each compo-

nent of the prototype was rated using a Likert scale.

Fourth, a System Usability Scale (SUS; cf. (Brooke

et al., 1996)) was determined before the fifth part con-

cluded the evaluation with qualitative feedback from

both positive and negative perspectives.

Although participants with different prior knowl-

edge were part of this evaluation, we could not ob-

serve a significant difference in the results between

those groups. Both groups of participants with prior

knowledge and without were able to solve the tasks

of the second part without major difficulties. This is

also reflected in the SUS score, which ranges between

70 and 95. In particular, only 2 out of 19 participants

gave ratings that resulted in a score below 85. The average score of 88 indicates good to excellent usability. Moreover, the

third part of our evaluation was able to verify our pro-

posed concept. Only minor changes were necessary

to implement the feedback of the participants, e.g.,

removing an alternative theme or additional buttons

to duplicate or delete an element. Finally, the feed-

back retrieved from the qualitative questions was also

added to the editor before it was integrated within the

overall system.
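For readers unfamiliar with the SUS, the following sketch shows the standard scoring scheme of the ten-item questionnaire (Brooke et al., 1996): odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5. The function name is hypothetical and the responses in the example are made up; they are not data from the study.

```python
def sus_score(responses):
    """Compute the SUS score (0 to 100) from ten responses on a 1 to 5 scale."""
    if len(responses) != 10:
        raise ValueError("the SUS questionnaire has exactly ten items")
    if any(r not in range(1, 6) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for index, response in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded.
            total += response - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded.
            total += 5 - response
    return total * 2.5


# Made-up example responses of a single participant.
example = [5, 1, 4, 2, 5, 1, 4, 2, 5, 1]
```

The score reported for a study is typically the mean of the per-participant scores computed this way.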

5.3 Implementation in Realistic

Scenarios

In the next step, we plan to extend these user studies

by implementing our prototype in real scenarios, i.e.,

handing the prototype to lecturers and letting them

model and execute their customized scenarios. In ad-

dition to monitoring the system’s behavior, interviews

will be conducted to receive feedback from the lectur-

ers. Our goal is to evaluate both the functionality and

the opportunities provided by our prototype. The prototype is open for use by every lecturer.

6 CONCLUSIONS

In this paper, the prototype of an adaptable learn-

ing environment was presented. It gives lecturers

the opportunity to target the system’s functionality

to their personal teaching strategy. They can adapt

function blocks by different parameters, build conditional sequences of those function blocks, link function blocks to cooperate, and choose from novel collaborative functions in addition to known Audience Response or Backchannel functions. The results retrieved from user studies for the (meta-)model and the

graphical editor were presented. Future applications

within real-life scenarios were proposed to evaluate

the overall concept and check the interplay of our

individual components, namely the backend server,

cloud servers, graphical editor frontend and user in-

terface.

By design, our approach targets lecturer-paced scenarios, i.e., the lecturer has to unlock the specific functionality before it can be used by the students. Our prototype does not investigate scenarios in which the students can step through the created application models by themselves. Nevertheless, an extension to

such scenarios will be investigated in the future.

Given that the presented prototype is at an early stage, improvements regarding the usability

have to be investigated. In order to improve the user

experience for lecturers even more, a proposal-based

function is planned that will suggest the implementa-

tion of appropriate methods during the ongoing exe-

cution within a lecture.

ACKNOWLEDGEMENTS

This work is funded by the German Research Foun-

dation (DFG) within the Research Training Group

“Role-based Software Infrastructures for continuous-

context-sensitive Systems” (GRK 1907). Special

thanks to Tenshi Hara for his feedback and proof-

reading.

REFERENCES

Abras, C., Maloney-Krichmar, D., Preece, J., et al. (2004).

User-centered design. Bainbridge, W. Encyclopedia of

Human-Computer Interaction. Thousand Oaks: Sage

Publications, 37(4):445–456.

Brooke, J. et al. (1996). SUS: A quick and dirty usability scale. Usability evaluation in industry, 189(194):4–7.

Bruff, D. (2019). Intentional Tech: Principles to Guide the

Use of Educational Technology in College Teaching.

West Virginia University Press.


Dragon, T., Mavrikis, M., McLaren, B. M., Harrer, A.,

Kynigos, C., Wegerif, R., and Yang, Y. (2013).

Metafora: A web-based platform for learning to learn

together in science and mathematics. IEEE Transac-

tions on Learning Technologies, 6(3):197–207.

Hara, T. C. (2016). Analyses on tech-enhanced and anony-

mous Peer Discussion as well as anonymous Control

Facilities for tech-enhanced Learning. PhD thesis,

Technische Universität Dresden.

Kubica, T. (2019). Adaptable Collaborative Learning En-

vironments. In DCECTEL 2019 – Proceedings of the

14th EC-TEL Doctoral Consortium.

Kubica, T., Hara, T., Braun, I., Kapp, F., and Schill, A.

(2017). Guided selection of IT-based education tools.

In FIE 2017 – Proceedings of the 47th Frontiers in Education Conference.

Kubica, T., Hara, T., Braun, I., Kapp, F., and Schill, A.

(2019a). Choosing the appropriate Audience Re-

sponse System in different Use Cases. In ICETI 2019

– Proceedings of the 10th International Conference on

Education, Training and Informatics.

Kubica, T., Shmelkin, I., and Schill, A. (2019b). Towards

a Development Methodology for adaptable collabora-

tive Audience Response Systems. In ITHET 2019 –

Proceedings of the 18th International Conference on

Information Technology Based Higher Education and

Training. IEEE.

Lieberman, H., Paternò, F., Klann, M., and Wulf, V. (2006).

End-user development: An emerging paradigm. In

End user development, pages 1–8. Springer.

Lingnau, A., Kuhn, M., Harrer, A., Hofmann, D., Fendrich,

M., and Hoppe, H. U. (2003). Enriching traditional

classroom scenarios by seamless integration of inter-

active media. In Proceedings 3rd IEEE International

Conference on Advanced Technologies, pages 135–

139. IEEE.

Meyer, M., Müller, T., and Niemann, A. (2018). Serious

Lecture vs. Entertaining Game Show – Why we need

a Combination for improving Teaching Performance

and how Technology can help. In EDULEARN 2018 –

Proceedings of the 10th International Conference on

Education and New Learning Technologies.

Nikou, S. A. and Economides, A. A. (2018). Mobile-based

assessment: A literature review of publications in ma-

jor referred journals from 2009 to 2018. Computers &

Education, 125:101–119.

Roszko, L. (2019). Entwicklung eines graphischen Editors

zur Erstellung von beliebigen Lernszenarien in Audi-

ence Response Systemen.

Schön, D. (2016). Customizable Teaching on Mobile Devices in Higher Education. PhD thesis, Universität Mannheim.

Sendall, S. and Kozaczynski, W. (2003). Model transfor-

mation: The heart and soul of model-driven software

development. IEEE software, 20(5):42–45.

Stahl, T., Völter, M., Efftinge, S., and Haase, A. (2007).

Modellgetriebene Softwareentwicklung: Techniken.

Engineering, Management, 2:64–71.
