Eye Gaze Tracking for Detecting Non-verbal Communication in Meeting Environments

Naina Dhingra^a, Christian Hirt^b, Manuel Angst and Andreas Kunz^c

Innovation Center Virtual Reality, ETH Zurich, Zurich, Switzerland

Keywords: Eye Gaze, Eye Tracker, OpenFace, Machine Learning, Support Vector Machine, Regression, Data Processing.

Abstract:

Non-verbal communication in a team meeting is important for understanding the essence of the conversation. Among other gestures, eye gaze shows the focus of interest on a common workspace and can also be used for interpersonal synchronisation. If this non-verbal information is missing or cannot be perceived by blind and visually impaired people (BVIP), they lack important information needed to become fully immersed in the meeting and may feel alienated in the course of the discussion. Thus, this paper proposes an automatic system to track where a sighted person is gazing. We use the open-source software 'OpenFace' and extend it into an eye tracker by using a support vector regressor, so that it works similarly to commercially available, expensive eye trackers. We calibrate OpenFace using a desktop screen with a 2 × 3 box matrix and conduct a user study with 28 users on a big screen (161.7 cm × 99.8 cm × 11.5 cm) with a 1 × 5 box matrix. In this user study, we compare the results of our algorithm for OpenFace to those of an SMI RED 250 eye tracker. The results show that our system achieves an overall relative accuracy of 58.54%.

1 INTRODUCTION

One important aspect of non-verbal communication is that people collaborating with each other often look at artifacts on the common workspace or at the other person. Eye gaze has been used to provide information on a user's emotional state (Bal et al., 2010), for text entry (Majaranta and Räihä, 2007), to indicate concentration on an object (Symons et al., 2004), to infer visualization tasks and a user's cognitive abilities (Steichen et al., 2013), to enhance interaction (Hennessey et al., 2014), and to communicate via eye gaze patterns (Qvarfordt and Zhai, 2005). However, such information cannot be accessed by blind and visually impaired people (BVIP), as they cannot see where the other people in the meeting room are looking (Dhingra and Kunz, 2019; Dhingra et al., 2020). Therefore, it is important to track eye gaze in the meeting environment in order to provide this information to them.

Eye gaze tracking is the process of locating the position at which a person is looking. This spatial position is known as the point of gaze (O'Reilly et al., 2019). Eye gaze tracking has been employed in research on scan patterns and attention in human-computer interaction, as well as in psychological analysis. Eye gaze tracking technology falls into two categories: head-mounted systems, which are mobile, and remote systems, which are stationary.

^a https://orcid.org/0000-0001-7546-1213
^b https://orcid.org/0000-0003-4396-1496
^c https://orcid.org/0000-0002-6495-4327

Early eye tracking systems were based on metal contact lenses (Agarwal et al., 2019a), while today's eye trackers use an infrared camera and a bright or dark pupil technique (Duchowski, 2007). These techniques locate the center of the pupil. The tracker can then determine the position on the screen at which the person is gazing from the relative position of the corneal reflection and the pupil center. Other eye trackers, which are based on high-speed video cameras, are more expensive than infrared-based eye trackers, but are also more accurate than webcam-based eye trackers (Agarwal et al., 2019a). In such eye trackers, gaze is estimated using deep learning and computer vision methods (Kato et al., 2019; Yiu et al., 2019).
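To make the pupil-center/corneal-reflection (PCCR) principle more concrete, the following minimal Python sketch fits an affine mapping from the pupil-glint vector to screen coordinates by least squares over a set of calibration points. The affine model, the function names (`solve3`, `fit_affine`, `apply_affine`) and the synthetic calibration data are illustrative assumptions; commercial trackers such as the SMI RED 250 may use higher-order polynomials or model-based 3D methods, and their internals are not described in this paper.

```python
def solve3(a, b):
    """Solve the 3x3 linear system a @ x = b by Gaussian elimination."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    n = 3
    for col in range(n):
        # partial pivoting for numerical stability
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        if abs(m[col][col]) < 1e-12:
            raise ValueError("singular system: calibration points are degenerate")
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_affine(pccr_vectors, screen_points):
    """Fit sx = a0 + a1*vx + a2*vy (and likewise sy) via the normal equations."""
    feats = [(1.0, vx, vy) for vx, vy in pccr_vectors]
    ata = [[sum(f[i] * f[j] for f in feats) for j in range(3)] for i in range(3)]
    coeffs = []
    for axis in range(2):  # 0 -> screen x, 1 -> screen y
        atb = [sum(f[i] * p[axis] for f, p in zip(feats, screen_points)) for i in range(3)]
        coeffs.append(solve3(ata, atb))
    return coeffs


def apply_affine(coeffs, vector):
    """Map one pupil-glint vector to an (x, y) screen position."""
    vx, vy = vector
    return tuple(c[0] + c[1] * vx + c[2] * vy for c in coeffs)
```

At least three non-collinear calibration points are needed; with a 3 × 3 grid of points, the sketch recovers an exactly affine synthetic mapping. Real PCCR data are noisier and not exactly affine, which is one reason why richer models may be preferred.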

Since eye gaze tracking offers the possibility to improve the accessibility of non-verbal communication for BVIP, our work uses it to detect where people are looking. Based on their availability and known advantages, OpenFace and the SMI RED 250 were chosen for the analysis. OpenFace is open-source software for real-time face embedding visualization and feature extraction that works with webcams. The commercial SMI RED 250 remote eye tracker comes with the iView software to process the data.

Dhingra, N., Hirt, C., Angst, M. and Kunz, A.
Eye Gaze Tracking for Detecting Non-verbal Communication in Meeting Environments.
DOI: 10.5220/0009359002390246
In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2020) - Volume 2: HUCAPP, pages 239-246
ISBN: 978-989-758-402-2; ISSN: 2184-4321
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The main contributions of this work are as follows: (1) we developed a low-cost eye tracker that uses a webcam and the OpenFace software together with support vector machines to improve accuracy; (2) we designed this eye tracking system to be used in real time in meeting environments; (3) we performed a user study with 28 users to evaluate the performance of this new eye gaze tracking approach; and (4) we evaluated the effect of wearing glasses on performance.

Our work is motivated by the use of a low-cost webcam together with free, open-source facial feature detection software, since commercial eye tracking systems are very expensive and usually not available in typical meeting rooms.

This paper is organized as follows: Section 2 describes the state of the art in eye tracking. Section 3 briefly describes the methods and techniques used in our system, while Section 4 gives details about the experimental setup. Section 5 gives an overview of the conducted user study and discusses the achieved results. Finally, Section 6 concludes the paper with future work and improvements of the current system.

2 STATE OF THE ART

Advances in computers and peripheral hardware have led to several different applications based on gaze interaction. These applications can be divided into various sub-categories: TV panels (Lee et al., 2010), head-mounted displays (Ryan et al., 2008), automotive setups (Ji and Yang, 2002), desktop computers (Dong et al., 2015; Pi and Shi, 2017), and hand-held devices (Nagamatsu et al., 2010). Numerous researchers have also worked on eye gaze interaction with other devices, such as public and large displays. Drewes and Schmidt (2007) worked on eye movements and gaze gestures for public display applications. Zhang et al. (2013) built a system for detecting eye gaze gestures to the left and right. Such systems employ either hardware-based or software-based eye tracking.

2.1 Hardware-based Eye Gaze Tracking Systems

Hardware-based eye gaze trackers are commercially available and usually provide high accuracy, but at a high cost. Such trackers can be further categorized into two groups: head-mounted eye trackers and remote eye trackers. Head-mounted devices usually consist of a number of cameras and near-infrared (NIR) light-emitting diodes integrated into the frame of goggles (Eivazi et al., 2018). Remote eye trackers, on the other hand, are stationary. We used an SMI RED 250, which is a remote eye tracker, as the reference device when building our automatic eye gaze tracking system for users sitting in a meeting environment.

2.2 Software-based Eye Gaze Tracking Systems

Software-based eye gaze tracking uses features extracted from a regular camera image by computer vision algorithms. In (Zhu and Yang, 2002), the center of the iris is identified using interpolated Sobel edge detection. Head direction also plays a significant role in eye gaze tracking, e.g., in (Valenti et al., 2011), where a combination of eye location and head pose is used. In (Torricelli et al., 2008), a general regression neural network (GRNN) is used to map geometric features of the eye position to screen coordinates; the accuracy of the GRNN depends on the input vectors. A low-cost eye gaze tracking system based on the detection and movement of the pupil center is developed in (Ince and Kim, 2011). It performed well on low-resolution video but had the drawback of being dependent on head movement and pose. Webcam-based gaze tracking using computer vision techniques has also been researched in several other works (Dostal et al., 2013; Agarwal et al., 2019b). These works implemented feature detection in a proprietary way, whereas we implement our system with open-source software that detects features with good accuracy, and we tune the mapping from detected features to screen coordinates using support vector machine regression, with a commercially available eye tracker as the reference.
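The GRNN approach can be sketched compactly, because a GRNN prediction is a Gaussian-kernel-weighted average of the training targets (equivalent to Nadaraya-Watson kernel regression). The sketch also illustrates why its accuracy depends on the input vectors: the weights come entirely from distances in the chosen feature space, scaled by the smoothing parameter `sigma`. It is a generic illustration, not the implementation of Torricelli et al. (2008); the features, targets and `sigma` values are assumptions.

```python
import math


def grnn_predict(train_x, train_y, query, sigma):
    """GRNN prediction: Gaussian-weighted average of the training targets.

    train_x: list of feature tuples (e.g. geometric eye features)
    train_y: list of target tuples (e.g. screen coordinates)
    """
    weights = []
    for x in train_x:
        d2 = sum((a - b) ** 2 for a, b in zip(x, query))  # squared distance
        weights.append(math.exp(-d2 / (2.0 * sigma ** 2)))
    total = sum(weights)
    if total == 0.0:
        raise ValueError("all kernel weights underflowed; increase sigma")
    dims = len(train_y[0])
    return tuple(
        sum(w * y[k] for w, y in zip(weights, train_y)) / total for k in range(dims)
    )
```

A small `sigma` makes the prediction snap to the nearest training target, while a large one averages over many targets, so the usable value depends on how the input features are scaled.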

3 METHODOLOGY

3.1 OpenFace

OpenFace (Baltrušaitis et al., 2016) is open-source software that can be used in real time to analyze facial features. Its features include facial landmark detection (Amos et al., 2016), facial landmark and head pose tracking, eye gaze tracking, facial action unit detection, and behavior analysis (Baltrušaitis et al., 2018). Of the applications available in the OpenFace package, we used OpenFaceOffline. OpenFace can analyze videos, images, image sequences, and live webcam video. Its gaze recordings include the gaze direction of each eye separately, an averaged gaze angle, and eye landmarks both in two-dimensional image coordinates and in three-dimensional coordinates of the camera's coordinate system. Additionally, the timestamp and the tracking success are recorded automatically.

HUCAPP 2020 - 4th International Conference on Human Computer Interaction Theory and Applications
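As an illustration of consuming this output, the following sketch reads per-frame gaze records from CSV text of the kind OpenFace writes. The column names (`timestamp`, `success`, `gaze_0_x` to `gaze_angle_y`) follow the OpenFace 2.x output format as we understand it; they should be checked against the header of the actual export, since versions and OpenFaceOffline settings can change which columns are written. Header and value cells are stripped because they may contain leading spaces.

```python
import csv
import io

# Gaze columns as named in the OpenFace 2.x CSV output (assumed; check your header).
GAZE_COLUMNS = ("gaze_0_x", "gaze_0_y", "gaze_0_z",
                "gaze_1_x", "gaze_1_y", "gaze_1_z",
                "gaze_angle_x", "gaze_angle_y")


def read_openface_gaze(text):
    """Return per-frame gaze records from OpenFace CSV text.

    Frames where OpenFace reports success == 0 (face not tracked) are skipped.
    """
    reader = csv.reader(io.StringIO(text))
    header = [h.strip() for h in next(reader)]
    records = []
    for row in reader:
        if not row:
            continue
        values = dict(zip(header, (v.strip() for v in row)))
        if int(float(values["success"])) != 1:
            continue
        record = {"timestamp": float(values["timestamp"])}
        record.update({c: float(values[c]) for c in GAZE_COLUMNS})
        records.append(record)
    return records
```

For a file on disk, the text can be passed in with, e.g., `read_openface_gaze(open(path).read())`, where `path` is whatever file OpenFaceOffline produced.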

3.2 Eye Tracker SMI RED 250 with iView

The SMI RED 250 has a modular design that can be integrated into numerous configurations, ranging from a small desktop screen to large television screens or projectors. It uses head movement and eye tracking together with pupil and gaze data to achieve accurate results. SMI claims that its results are robust regardless of the user's age, glasses, contact lenses, eye color, etc. The system needs to be calibrated, which takes a few seconds, and then maintains its accuracy for the duration of the experiments. It can track eye gaze up to 40 degrees in the horizontal direction and 60 degrees in the vertical direction. The iView software is provided by SMI with the eye tracker for data output and processing.

3.3 Support Vector Machine Regression

Support vector machines (SVMs) were developed as a binary classification algorithm that maximizes the margin between different categories or classes in the training set (Suykens and Vandewalle, 1999). SVMs are also used as a regression tool, with the intuition of building a hyperplane that lies as close as possible to the training examples. For more detailed mathematical information on SVMs for regression, refer to (Smola and Schölkopf, 2004). In our system, we use SVMs to assign measured values to the predefined classes, even though such data might not precisely match the training data.
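Since the paper does not report the SVR kernel, hyperparameters, or solver, the following is only a simplified stand-in: a linear epsilon-insensitive SVR trained in the primal by full-batch subgradient descent in plain Python. It shows the idea described above: residuals inside the epsilon tube cost nothing, and only points outside it pull the hyperplane. The values of `epsilon`, `lam`, `epochs` and `lr0` are illustrative assumptions. In practice, a library implementation such as scikit-learn's `SVR` (which also supports nonlinear kernels) would be the more typical choice. Because an SVR has a single output, mapping to 2D screen coordinates needs one regressor per axis.

```python
def fit_linear_svr(xs, ys, epsilon=0.05, lam=1e-3, epochs=10000, lr0=0.5):
    """Linear epsilon-insensitive SVR, trained by full-batch subgradient descent.

    Minimises  lam / 2 * ||w||^2 + mean(max(0, |y - (w . x + b)| - epsilon)).
    xs is a list of feature vectors (lists of floats), ys a list of targets.
    """
    n, dim = len(xs), len(xs[0])
    w, b = [0.0] * dim, 0.0
    for t in range(epochs):
        lr = lr0 / (t + 1) ** 0.5          # diminishing step size
        grad_w = [lam * wi for wi in w]    # gradient of the regulariser
        grad_b = 0.0
        for x, y in zip(xs, ys):
            r = y - (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if abs(r) > epsilon:           # only points outside the tube contribute
                s = 1.0 if r > 0 else -1.0
                for i in range(dim):
                    grad_w[i] -= s * x[i] / n
                grad_b -= s / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(model, x):
    """Evaluate the fitted hyperplane at feature vector x."""
    w, b = model
    return sum(wi * xi for wi, xi in zip(w, x)) + b
```

For example, fitting 21 samples of y = 2x + 1 on [0, 1] yields predictions close to the true line; for screen mapping, one such model would be trained per screen axis on OpenFace-derived features, with iView coordinates (or box positions) as targets.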

3.4 Basic Pipeline

In the experiments, we used the SMI eye tracker with the iView software and compared it to a regular webcam together with the open-source software OpenFace, whose output we adjusted using the aforementioned SVMs. Since OpenFace outputs the eye position and the eye gaze direction, we used a mathematical and geometrical transformation to convert these vectors to screen coordinates.
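The paper does not detail this geometric step. One common way to do it, sketched below under stated assumptions, is to intersect the gaze ray (eye position plus gaze direction, both in the camera coordinate system in which OpenFace reports its 3D eye landmarks) with the screen plane and then convert millimetres to pixels. The screen geometry (webcam at the top centre of the screen, screen plane at z = 0, mirrored x axis, downward y axis) and the helper names are hypothetical; the signs depend on how the camera is actually mounted.

```python
def intersect_gaze_with_plane(eye, direction, plane_point, plane_normal):
    """Intersect the gaze ray eye + t * direction (t > 0) with a plane.

    All arguments are 3D tuples in one coordinate frame (here: the camera
    frame, in millimetres). Returns the 3D hit point, or None if the ray is
    parallel to the plane or the plane lies behind the eye.
    """
    denom = sum(d * n for d, n in zip(direction, plane_normal))
    if abs(denom) < 1e-9:
        return None  # gaze runs parallel to the screen plane
    t = sum((p - e) * n for p, e, n in zip(plane_point, eye, plane_normal)) / denom
    if t <= 0:
        return None  # the screen is behind the eye for this gaze direction
    return tuple(e + t * d for e, d in zip(eye, direction))


def camera_mm_to_screen_px(point, screen_width_px, mm_per_px):
    """Convert a hit point on the z = 0 plane (mm) to screen pixels.

    Hypothetical geometry: the webcam sits at the top centre of the screen,
    faces the user (so its x axis is mirrored w.r.t. the screen's x axis) and
    its y axis points downwards, as in the usual OpenCV camera convention.
    """
    x_mm, y_mm, _ = point
    px = screen_width_px / 2.0 - x_mm / mm_per_px
    py = y_mm / mm_per_px
    return px, py
```

The gaze direction does not need to be normalised for the intersection; only its orientation matters.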

Figure 1: Setup for the small desktop screen.

For the comparison, two different setups were used: a 22" monitor at a distance of 0.6 m (see Figure 1), and a 65" screen at a distance of 3 m from the user (see Figure 4). We also performed a user study with 28 users on this 65" screen. In both cases, the SMI eye tracker and the webcam were placed at a distance of 0.6 m from the user. These two setups were used to demonstrate the robustness of our system as well as to validate our algorithm. They also show whether the system can be employed in meeting environments where sighted people might look at two screens placed at different distances, with different measurement noise.
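A back-of-the-envelope calculation illustrates one way in which the two setups differ. Assuming each screen is viewed head-on from the stated distance and using only the screen diagonals (the aspect ratio of the 22" monitor is not given), the desktop monitor subtends roughly 50° and the 65" screen roughly 31°, and a hypothetical angular gaze error of 1° shifts the estimated point by about 1.0 cm at 0.6 m but by about 5.2 cm at 3 m. The 1° figure is an illustrative assumption, not a measured value.

```python
import math

INCH_CM = 2.54


def visual_angle_deg(size_cm, distance_cm):
    """Full visual angle subtended by an extent of size_cm viewed head-on."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


def on_screen_error_cm(angular_error_deg, distance_cm):
    """Displacement on a fronto-parallel screen caused by an angular gaze error."""
    return distance_cm * math.tan(math.radians(angular_error_deg))


desktop_deg = visual_angle_deg(22 * INCH_CM, 60)   # 22" diagonal at 0.6 m
big_deg = visual_angle_deg(65 * INCH_CM, 300)      # 65" diagonal at 3 m
err_desktop_cm = on_screen_error_cm(1.0, 60)       # hypothetical 1 degree error
err_big_cm = on_screen_error_cm(1.0, 300)
```

The same angular error therefore covers a larger fraction of a box on the distant screen, which is consistent with the expectation of different measurement noise in the two setups.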

3.5 Desktop/Small Screen Setup

The setup used for the experiments with the desktop screen is shown in Figure 1. The user had to look at different regions of the desktop screen while their eye gaze was measured both by the commercial eye gaze tracker (using iView) and by the webcam (using OpenFace without any correction algorithm). The experiments with this setup showed that the screen coordinates from OpenFace are concentrated in a sub-region of the whole screen, as shown in Figure 5.

Because of this accumulation of points, we used an SVM algorithm to convert these coordinates into a form similar to the output coordinates of the iView software. Figure 6 shows the scatterplot of iView and OpenFace coordinates after applying the SVM algorithm for correction. We used 70% of the data for training and 30% of the data for testing.
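The paper does not state how the 70/30 split was drawn. A minimal sketch, assuming a random split at the level of individual samples with a fixed seed for reproducibility, is shown below. Because consecutive video frames are strongly correlated, a split by recording segment or by user may give a less optimistic estimate of accuracy.

```python
import random


def train_test_split(samples, train_fraction=0.7, seed=0):
    """Shuffle a copy of the samples and split it into train and test lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # local RNG: reproducible, no global state
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]
```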

We used a 2 × 3 matrix, as shown in Figure 2, to evaluate the manipulated output from OpenFace and the SMI RED 250 in terms of 6 classes. The user was told to look at the numbered fields of this matrix while their eye gaze was simultaneously measured using the webcam with OpenFace and using the SMI RED 250 with iView. The measurements from the SMI RED 250 were taken as ground truth. Figure 3 shows the accuracy per box for the 2 × 3 matrix: OpenFace performs better for the middle boxes than for boxes 4 and 6. The results are also shown in Table 1. We achieved an accuracy of 57.69% on the test data using the SVM algorithm to regress points from OpenFace to iView coordinates.
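The per-box accuracy reported in Figure 3 and Table 1 can be computed by mapping each screen coordinate to a box and comparing the box obtained from OpenFace with SVM against the box obtained from iView. The sketch below assumes boxes numbered row by row from the top left and pixel coordinates with the origin at the top-left corner of the screen; the actual numbering in Figure 2 may differ, and the function names are hypothetical. The same function covers the 1 × 5 layout of the user study with `rows=1, cols=5`.

```python
from collections import Counter


def box_index(x, y, width, height, rows, cols):
    """Return the 1-based box number of pixel (x, y) on a rows x cols grid.

    Boxes are numbered row by row from the top left (an assumption).
    Returns None if the point lies outside the screen.
    """
    if not (0 <= x < width and 0 <= y < height):
        return None
    col = int(x * cols // width)
    row = int(y * rows // height)
    return row * cols + col + 1


def accuracy_per_box(reference, predicted):
    """Percentage of samples per reference box whose predicted box matches.

    `reference` must contain box numbers only (drop samples without a
    reference box first); a predicted None simply counts as a miss.
    """
    totals = Counter(reference)
    hits = Counter(r for r, p in zip(reference, predicted) if r == p)
    return {box: 100.0 * hits[box] / totals[box] for box in sorted(totals)}
```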

Figure 2: 2 × 3 matrix for the comparison of OpenFace and iView after applying the correction with the SVM.

Figure 3: Accuracy per box for OpenFace (with SVM applied) when the iView box number is considered as ground truth. (Bar chart; x-axis: iView truth box number, 1 to 6; y-axis: accuracy per box [%], 0 to 100; series: SVM.)

Table 1: The accuracy of OpenFace with SVM using the SMI RED 250 eye tracker as reference. Values are in %.

| Box Number | OpenFace with SVM |
|---|---|
| Overall | 57.69 |
| 1 | 62.82 |
| 2 | 65.92 |
| 3 | 75.36 |
| 4 | 35.84 |
| 5 | 60.47 |
| 6 | 47.54 |

4 EXPERIMENTAL SETUP FOR USER STUDY

The setup consists of a demo environment in which a sighted person looks at a screen. We aim to provide BVIP with useful information about the sub-region of the screen at which a person in the meeting is looking. During the user study, the screen showed the user a matrix of 1 × 5 boxes. In our application, we are interested in the region of interest of the person gazing at the screen rather than in the exact gaze location. Accordingly, we assume that the screen is divided into 5 sub-parts and aim to predict the sub-part of interest of the user with high accuracy. The experimental setup is shown in Figure 4.

The user was asked to look at a numbered region for a few seconds, after which the next region number was given. To keep the procedure uniform, the sequence of region numbers was the same for every user. The SMI RED 250 eye tracker with iView and OpenFace were used to take measurements simultaneously. The data were then refined, and only samples with the same time stamp in both recordings were processed.
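The paper keeps only samples with the same time stamp. Since the SMI RED 250 and a webcam generally sample at different rates, exact equality of time stamps may be rare in practice; a common alternative, sketched here as an assumption, is nearest-neighbour matching on a shared clock with a small tolerance. The tolerance value and the `(timestamp, value)` record layout are hypothetical.

```python
import bisect


def match_by_timestamp(ref, other, tolerance):
    """Pair each sample of `ref` with the nearest-in-time sample of `other`.

    Both inputs are lists of (timestamp_seconds, value) sorted by timestamp.
    Pairs whose time difference exceeds `tolerance` are dropped.
    """
    other_times = [t for t, _ in other]
    pairs = []
    for t, value in ref:
        i = bisect.bisect_left(other_times, t)
        best = None
        for j in (i - 1, i):  # neighbours just before and just after t
            if 0 <= j < len(other):
                dt = abs(other_times[j] - t)
                if best is None or dt < best[0]:
                    best = (dt, j)
        if best is not None and best[0] <= tolerance:
            pairs.append((t, value, other[best[1]][1]))
    return pairs
```

Setting `tolerance=0` reduces this to the exact-match rule described in the text, up to floating-point representation of the time stamps.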

Figure 4: Experimental setup for user study.

5 USER STUDY AND RESULTS

We conducted the user study with 28 users, a mix of people with and without spectacles or contact lenses. We used a SMART Board® 400 series interactive overlay flat-panel display (SBID-L465-MP). It has a 65" screen diagonal and dimensions of 161.7 cm × 99.8 cm × 11.5 cm. The SMI RED 250 eye tracker with iView and the webcam with OpenFace were used to take measurements simultaneously. We used the same approach as for the small desktop screen. In this case, however, instead of training the OpenFace points to match the iView points, we trained and compared the performance using the ground truth given by the known positions of the 5 fields. We used the SVM algorithm to perform a spatial transformation of the data so that the accumulated OpenFace data become similar to the iView data, as shown in Figures 5 and 6.

Figure 5: Scatter plots showing the output data from iView and OpenFace (x coordinate [px] vs. y coordinate [px]; series: iView, OpenFace). The output from OpenFace is concentrated in a certain area of the screen.

Figure 6: The support vector machine algorithm is used to regress the data so that OpenFace produces results similar to iView (x coordinate [px] vs. y coordinate [px]; series: iView, SVM).

Figure 7: Numbers displayed on the big screen at a distance of 3 m from the user.

We asked each user to sit in front of the big screen at a distance of 3 meters. The screen displayed a 1 × 5 matrix of numbers, and the users were asked to look at these numbers, as shown in Figure 7. Whenever a user looked at a number, that region was highlighted in green. All 28 users were given the same sequence of numbers to look at. We then saved the raw data from both programs, i.e., iView and OpenFace.
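Comparing each sample with the number the user was asked to look at requires a ground-truth label per time stamp. A minimal sketch, assuming that the prompts are logged as `(start, end, box)` intervals on the same clock as the recordings, is shown below; samples falling between intervals (for example, while the gaze moves to the next number) return `None` so they can be discarded. The interval format and the example timings are hypothetical.

```python
import bisect


def label_from_schedule(schedule, timestamp):
    """Return the box number the user was asked to look at, or None.

    `schedule` is a list of (start_s, end_s, box) intervals sorted by start
    time, e.g. reconstructed from when each box was highlighted.
    """
    starts = [start for start, _, _ in schedule]
    i = bisect.bisect_right(starts, timestamp) - 1  # last interval starting <= t
    if i >= 0:
        start, end, box = schedule[i]
        if start <= timestamp < end:
            return box
    return None
```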

Table 2: Accuracy of the SMI RED 250 eye tracker compared to OpenFace with SVM. Values are in %.

| Box Number | SMI RED 250 | OpenFace with SVM |
|---|---|---|
| Overall | 81.48 | 58.54 |
| 1 | 84.03 | 42.25 |
| 2 | 81.72 | 62.89 |
| 3 | 78.85 | 67.46 |
| 4 | 81.04 | 70.41 |
| 5 | 81.74 | 49.71 |

Figure 8: Accuracy per box for all the users. (Bar chart; x-axis: ground truth box number, 1 to 5; y-axis: accuracy per box [%], 0 to 100; series: iView, SVM.)

Figure 9: Number of successful detections for the test data in the user study. (Bar chart; x-axis: ground truth box number, 1 to 5; y-axis: number of successful detections, 0 to 2500; series: ground truth, iView, SVM.)

We analyzed the raw data to know whether the

measured position coincides with the original posi-

tion of the corresponding box number on the screen.

We compared the output results from both software at

the same time stamps with the ground truth numbers

at which the user was asked to look at for a particu-

lar time stamp. The average accuracy for iView was

Eye Gaze Tracking for Detecting Non-verbal Communication in Meeting Environments

243

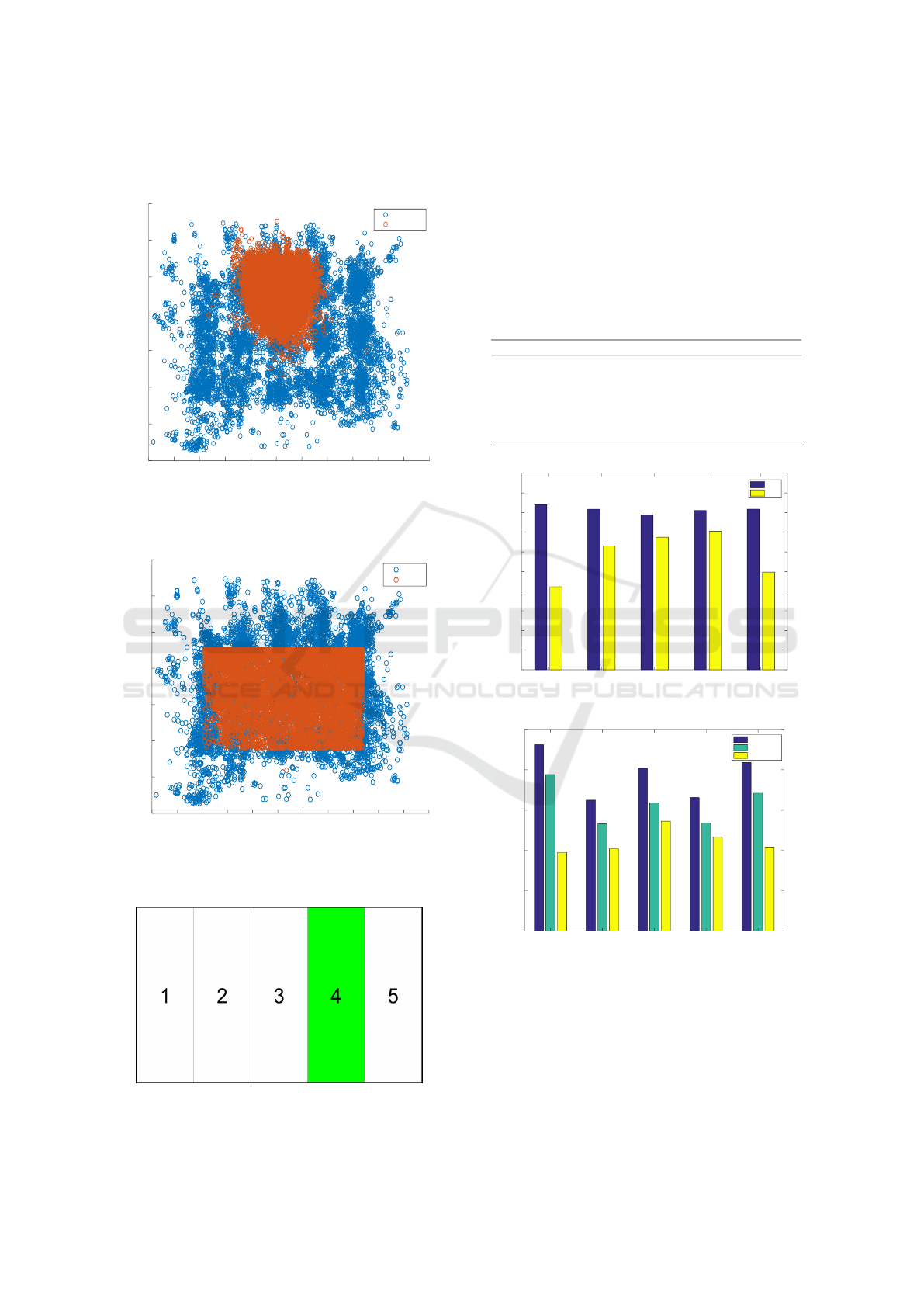

Figure 10: Accuracy per user for the big screen.

1 2 3 4 5

Ground truth box number

0

10

20

30

40

50

60

70

80

90

100

Accuracy per box [%]

iView

SVM

Figure 11: Accuracy per box for users with glasses.

Table 3: The accuracy of the SMI RED 250 eye tracker

compared to OpenFace with SVM for users with and with-

out glasses.

SMI RED 250 OpenFace with SVM

glasses 75.02 50.90

no glasses 85.96 66.79

81.48% and for the OpenFace with SVM was 58.54%.

Figure 12: Accuracy per box for users without glasses. (Bar chart; x-axis: ground truth box number, 1 to 5; y-axis: accuracy per box [%], 0 to 100; series: iView, SVM.)

We further analyzed the accuracy for each box of the matrix, as shown in Table 2. For OpenFace, the accuracy is lowest for boxes 1 and 5, which means that it fails to correctly recognize eye gaze at the corner boxes, while it performs better for the boxes in the middle. Figure 8 compares the performance of both systems in our setup and shows that open-source software such as OpenFace has the potential to work as an eye gaze tracker. Figure 9 shows the number of hits for each of the 5 boxes, which further shows that the corner boxes, i.e., boxes 1 and 5, have the lowest number of hits, in accordance with the per-box accuracy shown in Figure 8.
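As a quick consistency check, the Overall row of Table 2 equals, to two decimals, the unweighted mean of the five per-box accuracies for both systems, i.e., each box counts equally regardless of how many samples it contains. The snippet below reproduces this from the table values.

```python
def macro_average(per_box):
    """Unweighted mean of per-box accuracies: every box counts equally."""
    return sum(per_box) / len(per_box)


# Per-box accuracies in %, copied from Table 2 (boxes 1-5) and Table 1 (boxes 1-6).
table2_smi = [84.03, 81.72, 78.85, 81.04, 81.74]
table2_openface_svm = [42.25, 62.89, 67.46, 70.41, 49.71]
table1_openface_svm = [62.82, 65.92, 75.36, 35.84, 60.47, 47.54]

for name, values in [("Table 2, SMI RED 250", table2_smi),
                     ("Table 2, OpenFace with SVM", table2_openface_svm),
                     ("Table 1, OpenFace with SVM", table1_openface_svm)]:
    print(f"{name}: {macro_average(values):.2f}")
# prints 81.48, 58.54 and 57.99 respectively
```

Applying the same computation to the six per-box values in Table 1 gives about 57.99% rather than the reported 57.69%, so the overall figure in Table 1 appears to be computed differently, for example over all test samples.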

Figure 10 shows the accuracy for each of the 28 users in our user study. For certain users, our system outperformed the SMI RED 250 eye tracker with iView.

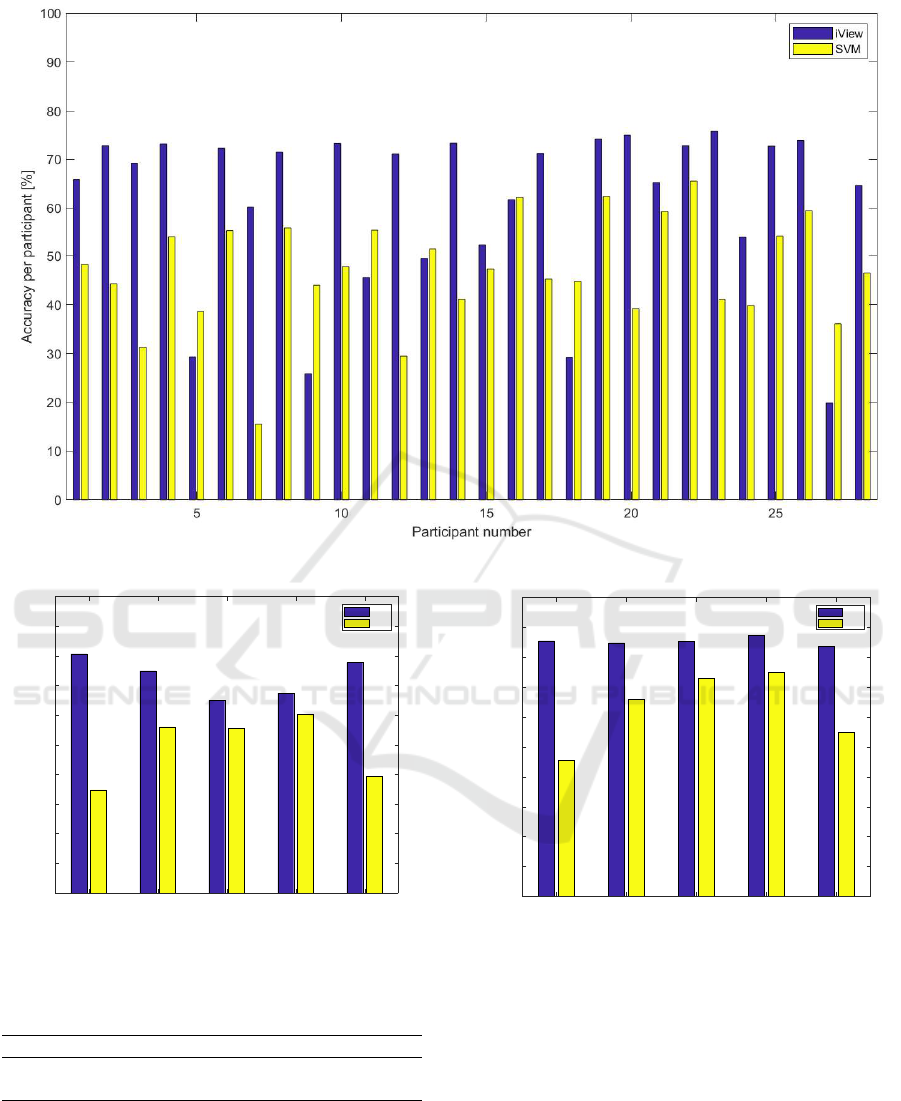

We also evaluated the results for users with and without glasses, as shown in Figures 11 and 12. Both systems work better for users without glasses than for users with glasses. Table 3 details the accuracy of the compared systems for users with and without glasses.

6 CONCLUSION

We worked on detecting the eye gaze location on the screen in team meetings to help BVIP become immersed in the conversation. We built a prototype of a low-cost automatic eye gaze tracking system using the open-source software 'OpenFace'. We geometrically converted the eye gaze vectors and eye position coordinates to screen coordinates and adjusted those coordinates using an SVM regression algorithm so that the system works similarly to the commercially available SMI RED 250 eye tracker. We used a small desktop screen with a 2 × 3 box matrix to calibrate our proposed system for eye gaze tracking.

In our user study, we evaluated our automatic system with 28 users. We found that our system performs quite comparably to the SMI RED 250 eye tracker for the inner boxes of the matrix on the screen, but not for the corner boxes, which led to the accuracy difference between the proposed system and the SMI RED 250.

We compared the performance for users with and without spectacles, which showed lower accuracy for users with spectacles than for users without them. This might be due to the additional reflections caused by the glasses.

In future work, we will convert the output into a form that can be made accessible to BVIP through audio or haptic feedback. We will also work on improving the accuracy of our system using neural networks, which have been shown to outperform classical computer vision techniques on other problems.

ACKNOWLEDGEMENTS

This work has been supported by the Swiss National Science Foundation (SNF) under the grant no. 200021E 177542 / 1. It is part of a joint project between TU Darmstadt, ETH Zurich, and JKU Linz with the respective funding organizations DFG (German Research Foundation), SNF and FWF (Austrian Science Fund). We also thank Dr. Quentin Lohmeyer and Product Development Group Zurich for lending us the SMI RED 250 eye tracker.

REFERENCES

Agarwal, A., JeevithaShree, D., Saluja, K. S., Sahay, A., Mounika, P., Sahu, A., Bhaumik, R., Rajendran, V. K., and Biswas, P. (2019a). Comparing two webcam-based eye gaze trackers for users with severe speech and motor impairment. In Research into Design for a Connected World, pages 641–652. Springer.

Agarwal, A., JeevithaShree, D., Saluja, K. S., Sahay, A., Mounika, P., Sahu, A., Bhaumik, R., Rajendran, V. K., and Biswas, P. (2019b). Comparing two webcam-based eye gaze trackers for users with severe speech and motor impairment. In Chakrabarti, A., editor, Research into Design for a Connected World, pages 641–652, Singapore. Springer Singapore.

Amos, B., Ludwiczuk, B., Satyanarayanan, M., et al. (2016). Openface: A general-purpose face recognition library with mobile applications. CMU School of Computer Science, 6.

Bal, E., Harden, E., Lamb, D., Van Hecke, A. V., Denver, J. W., and Porges, S. W. (2010). Emotion recognition in children with autism spectrum disorders: Relations to eye gaze and autonomic state. Journal of autism and developmental disorders, 40(3):358–370.

Baltrušaitis, T., Robinson, P., and Morency, L.-P. (2016). Openface: an open source facial behavior analysis toolkit. In 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), pages 1–10. IEEE.

Baltrušaitis, T., Zadeh, A., Lim, Y. C., and Morency, L.-P. (2018). Openface 2.0: Facial behavior analysis toolkit. In 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), pages 59–66. IEEE.

Dhingra, N. and Kunz, A. (2019). Res3atn-deep 3D residual attention network for hand gesture recognition in videos. In 2019 International Conference on 3D Vision (3DV), pages 491–501. IEEE.

Dhingra, N., Valli, E., and Kunz, A. (2020). Recognition and localisation of pointing gestures using a RGB-D camera. arXiv e-prints, page arXiv:2001.03687.

Dong, X., Wang, H., Chen, Z., and Shi, B. E. (2015). Hybrid brain computer interface via bayesian integration of eeg and eye gaze. In 2015 7th International IEEE/EMBS Conference on Neural Engineering (NER), pages 150–153. IEEE.

Dostal, J., Kristensson, P. O., and Quigley, A. (2013). Subtle gaze-dependent techniques for visualising display changes in multi-display environments. In Proceedings of the 2013 international conference on Intelligent user interfaces, pages 137–148. ACM.

Drewes, H. and Schmidt, A. (2007). Interacting with the computer using gaze gestures. In IFIP Conference on Human-Computer Interaction, pages 475–488. Springer.

Duchowski, A. T. (2007). Eye tracking methodology. Theory and practice, 328(614):2–3.

Eivazi, S., Kübler, T. C., Santini, T., and Kasneci, E. (2018). An inconspicuous and modular head-mounted eye tracker. In Proceedings of the 2018 ACM Symposium on Eye Tracking Research & Applications, page 106. ACM.

Hennessey, C. A., Fiset, J., and Simon, S.-H. (2014). System and method for using eye gaze information to enhance interactions. US Patent App. 14/200,791.

Ince, I. F. and Kim, J. W. (2011). A 2D eye gaze estimation system with low-resolution webcam images. EURASIP Journal on Advances in Signal Processing, 2011(1):40.

Ji, Q. and Yang, X. (2002). Real-time eye, gaze, and face pose tracking for monitoring driver vigilance. Real-time imaging, 8(5):357–377.

Kato, T., Jo, K., Shibasato, K., and Hakata, T. (2019). Gaze region estimation algorithm without calibration using convolutional neural network. In Proceedings of the 7th ACIS International Conference on Applied Computing and Information Technology, page 12. ACM.

Lee, H. C., Luong, D. T., Cho, C. W., Lee, E. C., and Park, K. R. (2010). Gaze tracking system at a distance for controlling iptv. IEEE Transactions on Consumer Electronics, 56(4):2577–2583.

Majaranta, P. and Räihä, K.-J. (2007). Text entry by gaze: Utilizing eye-tracking. Text entry systems: Mobility, accessibility, universality, pages 175–187.

Nagamatsu, T., Yamamoto, M., and Sato, H. (2010). Mobigaze: Development of a gaze interface for handheld mobile devices. In CHI'10 Extended Abstracts on Human Factors in Computing Systems, pages 3349–3354. ACM.

O'Reilly, J., Khan, A. S., Li, Z., Cai, J., Hu, X., Chen, M., and Tong, Y. (2019). A novel remote eye gaze tracking system using line illumination sources. In 2019 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), pages 449–454. IEEE.

Pi, J. and Shi, B. E. (2017). Probabilistic adjustment of dwell time for eye typing. In 2017 10th International Conference on Human System Interactions (HSI), pages 251–257. IEEE.

Qvarfordt, P. and Zhai, S. (2005). Conversing with the user based on eye-gaze patterns. In Proceedings of the SIGCHI conference on Human factors in computing systems, pages 221–230. ACM.

Ryan, W. J., Duchowski, A. T., and Birchfield, S. T. (2008). Limbus/pupil switching for wearable eye tracking under variable lighting conditions. In Proceedings of the 2008 symposium on Eye tracking research & applications, pages 61–64. ACM.

Smola, A. J. and Schölkopf, B. (2004). A tutorial on support vector regression. Statistics and computing, 14(3):199–222.

Steichen, B., Carenini, G., and Conati, C. (2013). User-adaptive information visualization: using eye gaze data to infer visualization tasks and user cognitive abilities. In Proceedings of the 2013 international conference on Intelligent user interfaces, pages 317–328. ACM.

Suykens, J. A. and Vandewalle, J. (1999). Least squares support vector machine classifiers. Neural processing letters, 9(3):293–300.

Symons, L. A., Lee, K., Cedrone, C. C., and Nishimura, M. (2004). What are you looking at? acuity for triadic eye gaze. The Journal of general psychology, 131(4):451.

Torricelli, D., Conforto, S., Schmid, M., and D'Alessio, T. (2008). A neural-based remote eye gaze tracker under natural head motion. Computer methods and programs in biomedicine, 92(1):66–78.

Valenti, R., Sebe, N., and Gevers, T. (2011). Combining head pose and eye location information for gaze estimation. IEEE Transactions on Image Processing, 21(2):802–815.

Yiu, Y.-H., Aboulatta, M., Raiser, T., Ophey, L., Flanagin, V. L., zu Eulenburg, P., and Ahmadi, S.-A. (2019). Deepvog: Open-source pupil segmentation and gaze estimation in neuroscience using deep learning. Journal of neuroscience methods.

Zhang, Y., Bulling, A., and Gellersen, H. (2013). Sideways: a gaze interface for spontaneous interaction with situated displays. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pages 851–860. ACM.

Zhu, J. and Yang, J. (2002). Subpixel eye gaze tracking. In Proceedings of Fifth IEEE International Conference on Automatic Face Gesture Recognition, pages 131–136. IEEE.
