A Taxonomy of Augmented Reality Annotations

Inma García-Pereira¹, Jesús Gimeno¹, Pedro Morillo¹ and Pablo Casanova-Salas²

¹ Department of Computer Science, Universitat de València, Spain

² Institute of Robotics and Information and Communication Technologies (IRTIC), Universitat de València, Spain

Keywords: Augmented Reality, Annotation, Data Model, Taxonomy.

Abstract: Annotations have become a major trend in Augmented Reality (AR), as they are a powerful way of offering users more information about the real world surrounding them. There are many contributions presenting ad hoc annotation tools that make use of this type of virtual information. However, very few works have tried to theorize about this subject in order to propose a generalized work system that solves the problem of incompatibility between applications. In this work, we propose and develop not only a taxonomy but also a data model, which together seek to define the general characteristics that any AR annotation must incorporate. With this, we intend to provide a framework that can be used in the development of any system that makes use of this type of virtual element.

1 INTRODUCTION

Annotation is an essential interaction method in daily life. Traditionally, people have used handwritten annotations on paper as a tool to summarize and highlight important elements of written texts (using underlined or highlighted words) or to add reminders, translations, explanations or messages for others in shared documents (through side notes, for example). But text and paper-based information are not the only things that get annotated. In general, any physical object in our environment can be annotated (Hansen, 2006); for example, we can stick a Post-it note next to a switch to explain its function.

As text began to be digitized, new tools were developed to take annotations in (and through) the new computer systems. Subsequently, hypermedia systems (Grønbæk, Hem, Madsen, & Sloth, 1994) and the advantages of the web (Kahan & Koivunen, 2001) were exploited to enrich note-taking processes. With the advent of mobile and ubiquitous computing devices, digital annotation has been extended even further. Since the information is virtual, it is not physically placed on real-world objects as paper notes are. Instead, it is stored on servers, and different methods are used to identify the physical objects to which each annotation refers.

A further step in the virtualization of annotations has been achieved thanks to the development of Augmented Reality (AR). In fact, annotations are an intrinsic component of this technology. As described before, humans leave notes in the physical world to share information. In parallel, they place texts, images, audio, etc. in digital format to communicate through the virtual world. AR makes it possible to blur the boundary between the physical and virtual worlds, so that virtual information can be presented in the same location as the element of the physical world to which it relates.

One of the main advantages of AR is its ability to contextualize and locate virtual information. Annotation is one of the most common uses of AR, as it is a powerful way to offer users more information about the world around them (Wither, DiVerdi, & Höllerer, 2009). However, until 2009 this virtual element had not been defined in a way that was general and well-grounded enough to be used in subsequent literature. Most published works present applications developed for specific uses, and very few have tried to define and categorize AR annotations.

Having reviewed the published literature on this subject, we found it necessary to carry out a study from a software engineering point of view that results in a generic data model that can be used in any type of application with AR annotations. This generic model attempts to solve the incompatibility problem that currently exists between different AR annotation systems: annotations made with a specific application can only be viewed with that same application.

García-Pereira, I., Gimeno, J., Morillo, P. and Casanova-Salas, P.
A Taxonomy of Augmented Reality Annotations.
DOI: 10.5220/0009193404120419
In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2020) - Volume 1: GRAPP, pages 412-419
ISBN: 978-989-758-402-2; ISSN: 2184-4321
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In this paper, we first define the concept of AR annotation. We then analyze the characteristics of this type of virtual element that different authors have contributed over the years. Finally, we develop a theoretical model that allows any AR annotation to be defined.

2 RELATED WORK

Although the term Augmented Reality was not coined until 1992 (Caudell & Mizell, 1992), Tom Furness had already developed the Super Cockpit system in 1981 (Furness, 1986), which can be considered one of the first applications with AR annotations. The system consisted of a see-through head-based display mounted on the user's helmet, through which airplane pilots could see their environment augmented with virtual information.

Shortly afterwards, studies on applications of AR annotations began to emerge (Feiner, MacIntyre, & Seligmann, 1992; Rekimoto & Nagao, 1995). Today, it is a hot topic, and there are numerous publications on this type of development, such as (Bruno et al., 2019; Chang, Nuernberger, Luan, & Höllerer, 2017; García-Pereira, Gimeno, Pérez, Portalés, & Casas, 2018). However, theoretical work on the concepts related to AR annotations has been very scarce, since most works focus on developing ad hoc tools for different application areas. As a result, the definition, characterization and categorization of this type of virtual element are scattered throughout the literature.

One of the first authors to theorize about AR annotations was Hansen (Hansen, 2006), who analyzed the annotation techniques of different kinds of systems (open hypermedia, Web-based, mobile and augmented reality) in order to illustrate different approaches to the central challenges that ubiquitous annotation systems have to deal with. Subsequently, Wither et al. (Wither et al., 2009) defined the concept of annotation in the context of AR and proposed a taxonomy for this type of virtual element. Although this work is one of the most complete to date, new studies published in the last decade analyze some aspects that Wither et al. did not consider. One of them is (Tönnis, Plecher, & Klinker, 2013), where the authors present the main dimensions that cover the principles of representing virtual information in relation to a physical environment through AR. These dimensions are fully applicable to AR annotations, as we will see later. In (Keil, Schmitt, Engelke, Graf, & Olbrich, 2018), the authors describe and categorize the visual elements of AR based on their level of mediation between the physical and the virtual world. Again, their concepts can be used to classify AR annotations.

In this paper, we analyze the contributions made to date on AR annotations (or on virtual elements applicable to annotations) with the aim of unifying them in a data model capable of supporting any type of AR annotation.

3 DEFINITION OF AR ANNOTATION

Wither et al. defined the concept of AR annotation in (Wither et al., 2009). Their objective was to cover a wide range of uses, so the definition is fairly general: “An augmented reality annotation is virtual information that describes in some way, and is registered to, an existing object”.

As the authors explain, this definition allows the virtual information to adopt different formats (text, images, sounds, 3D models...). Also, the relationship between the virtual information and the annotated object can be defined indirectly. This second point is a matter of disagreement in the existing literature, since some authors, such as Hansen (Hansen, 2006), consider that an AR annotation must necessarily be on or next to the annotated object and clearly related to it. For example, an arrow that directs a user to a destination is linked to the final destination, which is the annotated object, but it does not appear next to it or have a clear visual relationship with it; therefore, Hansen would not consider it an annotation, whereas Wither et al. would. In this work, we adopt the point of view of Wither et al., since it encompasses many uses of AR annotations that would otherwise not be analyzed.

From the definition of Wither et al., we can extract four basic elements that must be defined when designing an AR annotation:

1) Virtual information
2) Annotated object
3) Spatial relationship between 1) and 2)
4) The kind of description that 1) provides of 2)

To clearly differentiate what an annotation is from what it is not, the authors define two essential components that every AR annotation must necessarily have: a spatially dependent component and a spatially independent component. The first one must link the virtual information with the object being annotated, so it is a relationship between the virtual world and the physical world. This means that any annotation must be registered to a particular existing object and not only to a point in the coordinate system. The spatially independent component implies that there must be some difference between the virtual content and what the user sees of the physical world. For example, a perfect 3D model of an object that is used to handle occlusions is not an annotation, even if it is spatially dependent. However, if the same 3D model has some modification with respect to its physical counterpart (a changed texture, for example), it would be considered an annotation, since it adds information and modifies the user's perception of the physical world.
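The two components can be read as a membership test. The following Python sketch is only illustrative: the `Candidate` record and its fields are hypothetical and are not part of the model of Wither et al.; it merely encodes the rule that registration to an existing object and a difference from the physical world are both required.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    """Hypothetical description of a piece of virtual content."""
    registered_object_id: Optional[str]  # existing physical object it is registered to, if any
    differs_from_physical: bool          # adds to or changes what the user sees of that object


def is_ar_annotation(c: Candidate) -> bool:
    """Apply the two necessary components from the definition of Wither et al."""
    # Spatially dependent component: registered to an existing object,
    # not merely to a point in a coordinate system.
    spatially_dependent = c.registered_object_id is not None
    # Spatially independent component: the virtual content must differ from
    # what the user already perceives of the physical world.
    spatially_independent = c.differs_from_physical
    return spatially_dependent and spatially_independent


# A perfect occlusion model registered to an engine is not an annotation...
occluder = Candidate(registered_object_id="engine", differs_from_physical=False)
# ...but the same model with a changed texture is.
retextured = Candidate(registered_object_id="engine", differs_from_physical=True)
```

In a real system, both inputs would come from the application's registration and rendering logic rather than being set by hand.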

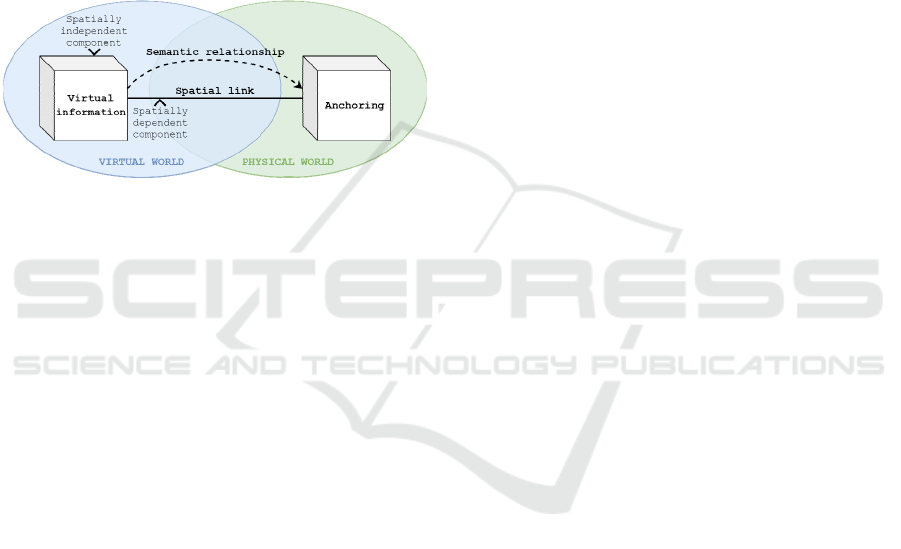

Figure 1 shows a graphic representation of the definition of an AR annotation given by Wither et al., together with all the associated concepts.

Figure 1: Components of an AR annotation.

4 CHARACTERISTICS OF AR ANNOTATIONS

As explained in Section 2, some studies present taxonomies to characterize AR virtual elements or, more specifically, AR annotations. The views of each author are explained below so that their contributions can be synthesized later.

In (Wither et al., 2009), Wither et al. present six orthogonal dimensions that serve to describe and classify annotations more concretely:

- Location Complexity: The requirement to have a spatially dependent component means that all annotations have an associated location in the physical world. However, the complexity of this location can vary greatly from one annotation to another, from a single 3D point to a 2D or 3D region or even a 3D model.
- Location Movement: Refers to the movement of the virtual part of the annotation (not to that of the annotated physical element or of the animations contained in the virtual information). The freedom of movement of the virtual information and its allowed distance from the anchoring depend on the application and, where appropriate, on the user's preferences.
- Semantic Relevance: Indicates how the virtual information of a given annotation and its anchoring are related. The descriptors that provide more direct information about the annotated element, and therefore have greater semantic relevance, are those that name or describe it. Conversely, descriptors that add information, modify the annotated physical element or guide the user usually provide information that is not directly related to the anchoring and have less semantic relevance.
- Content Complexity: This dimension can vary greatly from one annotation to another: from annotations that are a single point marking an object of interest to those whose content is an animated 3D model with sound. The complexity of the content can be determined both by the amount of information the annotation transmits to the user and by the visual complexity of the annotation itself.
- Interactivity: Wither et al. differentiate between four levels of interactivity: annotations that the user can only view, without interacting with them; annotations with which the user can interact but without editing or adding information; annotations whose content can be modified; and annotations that the user can create while using the system.
- Annotation Permanence: An annotation does not always have to be visible to the user, for example when information overload must be avoided. To control the permanence of an annotation, the authors list five basic strategies: permanent annotations, time-controlled permanence, user-controlled permanence, permanence based on the user's location, and annotations filtered according to the information and current status of the application and the user.
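The Semantic Relevance dimension amounts to a coarse mapping from the kind of descriptor to its relevance. The Python sketch below assumes a hypothetical `Descriptor` enumeration and encodes only the two-level split stated by Wither et al. (name/describe versus add/modify/guide); since the authors describe a tendency ("usually"), the function returns a typical value rather than a rule.

```python
from enum import Enum


class Descriptor(Enum):
    """Kinds of description an annotation can give of its anchoring."""
    NAME = "name"
    DESCRIBE = "describe"
    ADD = "add"
    MODIFY = "modify"
    GUIDE = "guide"


class Relevance(Enum):
    HIGH = "high"
    LOW = "low"


# Only the coarse split stated by Wither et al. is encoded; no ordering
# within each group is implied.
_DIRECT_DESCRIPTORS = {Descriptor.NAME, Descriptor.DESCRIBE}


def semantic_relevance(descriptor: Descriptor) -> Relevance:
    """Typical semantic relevance of a descriptor (a tendency, not a rule)."""
    return Relevance.HIGH if descriptor in _DIRECT_DESCRIPTORS else Relevance.LOW
```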

Wither et al. compare their six dimensions of AR annotations with the four challenges for ubiquitous annotations described in (Hansen, 2006), which are:

- Anchoring: Linking the virtual information to the annotated physical object is essential, and the precision with which this is done is decisive in achieving a communication objective.
- Structure: The data model used by the identification technologies to link the virtual information with the annotated elements must be general enough to 1) allow any object that has been identified to be annotated and linked and 2) support different anchoring techniques.
- Presentation: Hansen differentiates between three types of virtual information presentation: presented on the annotated object, separated from the annotated object but in its environment, and completely separated from the annotated object.
- Editing: Providing the user with the ability to edit the displayed annotations or generate new ones is very desirable in certain scenarios; therefore, developers should not limit themselves to annotation systems that only allow the user to consult information previously loaded into the system.

GRAPP 2020 - 15th International Conference on Computer Graphics Theory and Applications

As Wither et al. point out, the Anchoring, Structure and Presentation challenges defined by Hansen describe how and where an annotation is placed in relation to the object it annotates, so they are directly related to what Wither et al. call Location complexity and Location movement. For its part, Hansen's Editing challenge is directly linked to the Interactivity dimension of Wither et al. Hansen's work does not take into account the information contained in the annotations, only its relation to the annotated objects and to the user. Wither et al., instead, add the dimensions of Semantic relevance, Content complexity and Annotation permanence in order to evaluate the content of the annotation and its temporal dimension.

As a complement to the six orthogonal dimensions of Wither et al., the work of (Tönnis et al., 2013) presents five other dimensions that cover the principles of representing virtual information in AR in relation to the physical environment. The authors do not speak specifically of annotations, but their classification complements that of Wither et al. and can be extrapolated to the scope that concerns us. These dimensions are:

- Temporality: Refers to the existence of the virtual information, regardless of whether it is within the field of view or not. The authors differentiate between permanently represented information and information that exists only occasionally in the augmented world, depending on specific events.
- Dimensionality: The method used to visualize and integrate virtual information in the physical environment: 2D or 3D.
- Viewpoint Reference Frame: The method used to represent the virtual information in relation to the point of view of both the user and the virtual camera of the system. The authors differentiate between egocentric (first person), exocentric (third person) and egomotion (displaced).
- Mounting and Registration: The spatial relationship between the virtual information and the physical world. Mounting refers to what the virtual information is linked to (user, environment, world or multiple). Registration is the technical part of mounting, that is, how to accurately determine a location in the physical world (the anchoring) at which to place the virtual information.
- Type of Reference: The extent to which a virtual object refers to an element of the physical world. This dimension depends on the visibility of physical objects. The authors differentiate three types of reference: direct (physical objects and their augmented information are visible to the user), indirect (virtual information reveals hidden physical objects) and pure (virtual objects provide references to physical objects that are not in the field of view).
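Because the Type of Reference depends on the visibility of the physical object, it can be derived from two visibility flags. In the Python sketch below, the `classify_reference` helper and its inputs are hypothetical; it assumes that "hidden" means an object inside the field of view but occluded, and that the tracking system supplies both flags.

```python
from enum import Enum


class ReferenceType(Enum):
    DIRECT = "direct"      # physical object and its augmentation are both visible
    INDIRECT = "indirect"  # augmentation reveals a hidden physical object
    PURE = "pure"          # physical object lies outside the field of view


def classify_reference(in_field_of_view: bool, occluded: bool) -> ReferenceType:
    """Classify how an annotation refers to its physical object.

    Assumption: "hidden" is modelled as inside the field of view but occluded;
    both flags would be provided by the tracking/rendering system.
    """
    if not in_field_of_view:
        return ReferenceType.PURE
    if occluded:
        return ReferenceType.INDIRECT
    return ReferenceType.DIRECT
```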

Tönnis et al. compare their dimensions with those of Wither et al. The authors relate their Temporality dimension to what Wither et al. call Annotation permanence. However, we believe that, although they are related concepts, it is worth studying them separately, because the classification of Wither et al. is based on the fact that an annotation does not always have to be visible to the user, while that of Tönnis et al. depends on the existence of the annotation, regardless of whether it is within the field of view or not. Moreover, Tönnis et al. relate their Dimensionality dimension to the Semantic relevance and Content complexity dimensions of Wither et al. However, Tönnis et al. only differentiate between 2D and 3D information objects, while the classification of Wither et al. is much more detailed. The Viewpoint reference frame dimension is exclusive to Tönnis et al., since it is a generic concept of AR. For its part, Tönnis et al. describe the Type of reference dimension as a sub-concept within the Semantic relevance dimension of Wither et al., but it may be worth studying these two dimensions separately (Wither et al. focus more on how the content of the annotation contributes to the annotated object, while Tönnis et al. focus more on the relationship between them). Finally, the Mounting and registration dimension is related to the Location complexity and Location movement dimensions but, again, Wither et al. address the problem at a much finer granularity.

To complete the dimensions presented by Wither et al. and Tönnis et al., it is also important to analyze the recent work of (Müller, 2019). His study focuses on how to represent information with AR to support manual procedural tasks. Although his work speaks of information in general and focuses on a very specific use of AR, its definitions and classifications can be applied to the specific case of AR annotations. Müller describes seven characteristics:

- Spatial Relation: Virtual information is spatially related to, and located in, the physical world, which implies a link between physical and virtual objects and the registration of virtual information at specific coordinates in space.
- Connectedness: Virtual information is connected to the physical world not only spatially but also semantically.
- Discrete Change: Virtual information may be subject to change over time.
- Manipulability: It is possible to interact with and edit virtual information through software. However, it should be borne in mind that this may cause a loss of relevant spatial information.
- Combination: The combined view of the virtual information and the environment that users have varies depending on their own point of view and, therefore, is not always controllable. Likewise, the environment can change, making the perception of information objects less precise than intended.
- Fluctuation: The difficulty of achieving a precise and stable alignment between the physical and virtual worlds means that the combination of virtual information, environment and point of view is affected by uncontrollable fluctuations.
- Reference Systems: Virtual information can be placed and oriented using a different reference system from the one used to locate its anchoring. Müller differentiates two basic types of reference systems: world and spectator coordinate systems.

The characteristics described by Müller are much more generic and less detailed than the dimensions presented by Wither et al. and Tönnis et al., although they are related to them and can add new nuances at certain points, as will be seen in the next section.

The generic definitions and categorizations described above contrast with other works that perform classifications at a lower level, as is the case of (Keil et al., 2018). The objective of this work is to describe, categorize and organize the visual elements of AR in order to later discuss the level of mediation achieved by each of them and their suitability for their context of use. The visual elements identified by Keil et al. are the following:

- Annotations and Labels: In this category, the authors include 1) labels connected to the anchoring by a line, whose position can be relative to the annotated element or fixed on the screen, and 2) elements such as icons that are always placed at the anchoring, oriented towards the user, and that can behave as objects that trigger events or display more information once activated. Thus, the authors group under this category the elements that extend the physical world by adding information, even if they do not visually fit the augmented object.
- Highlights: Zones, objects or parts of objects that are visually highlighted through their shape (for example, a table drawer is illuminated to attract the user's attention to it). The purpose of this type of element is to emphasize the physical world.
- Aids, Guides and Visual Indicators: Complementary visual elements, such as arrows or other markers, guiding elements or metaphorical indicators such as light effects. They are usually 2D or 3D sprites or geometries, animated or not, that are anchored to particular points of interest. They call for caution and draw attention to details that would otherwise go unnoticed by the user.
- X-ray: Additive elements that show hidden, occluded or imperceptible structures. The illusion is created by artificially removing the occluding parts of objects in the physical world. These visual elements reveal spatial and semantic relationships between hidden and visible objects.
- Explosion Diagrams: Additive elements that show the relationship between, or the order of assembly of, several parts of an object. Like the previous ones, they can be seen as an additional layer that enriches the current scene. It is necessary to ensure that the virtual element coexists consistently with its physical counterpart, without clutter, ambiguity or occlusion.
- Transmedia Material: Audiovisual material that can take any form, from sprites to video sequences. These elements are overlaid on the user's view and aligned with a real object and its viewing context.

As can be seen, all these virtual elements described by Keil et al. fit the definition of annotation given by Wither et al., provided they have a spatially dependent component and a spatially independent component. Therefore, if we rename the first category of Keil et al. "Icons and labels" (instead of "Annotations and labels"), we can use this taxonomy to categorize more precisely the annotations described generically by Wither et al.

In addition, Keil et al. define three basic objectives that these elements can achieve: extending, emphasizing or enriching the physical world. These objectives are closely related to the Semantic relevance dimension of Wither et al.

5 DATA MODEL

Having analyzed the most relevant contributions on the characteristics of AR virtual elements and, specifically, of AR annotations, it is essential to obtain a single model that brings all this information together. As described in the previous section, the proposals analyzed complement each other, but their information often overlaps and is repeated. The next step is to decide which characteristics are selected, which are combined and which are discarded. As a result of this synthesis, we have obtained a data model capable of characterizing any type of AR annotation. With it, we intend the design of AR annotation systems to be much more transversal, that is, applicable across applications, than the ad hoc tools developed to date.

All the conceptual connections that exist between

the works analyzed in the previous section (Hansen,

2006; Keil et al., 2018; Müller, 2019; Tönnis et al.,

GRAPP 2020 - 15th International Conference on Computer Graphics Theory and Applications

416

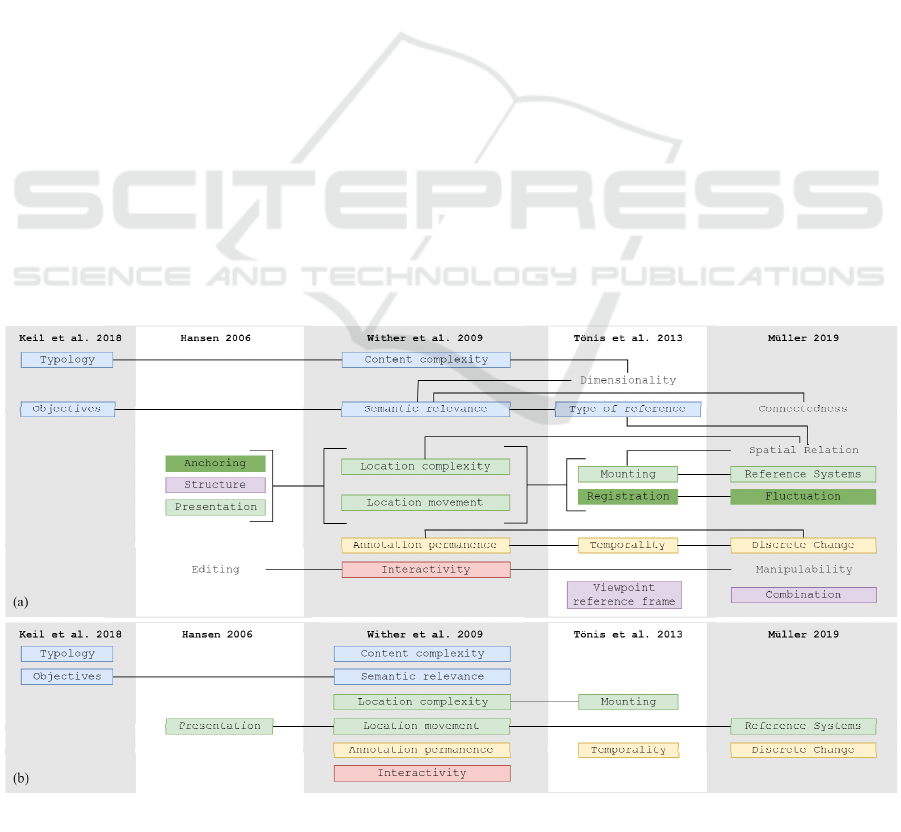

2013; Wither et al., 2009) are reflected in Figure 2a.

It shows the characteristics and dimensions described

by each author and how they interrelate with each

other. From the analysis of all these interrelations, all

characteristics have been classified based on the type

of information they provide about the AR

annotations. In Figure 2 the characteristics have been

marked in different colors according to the category

to which they belong, leaving without color those that

do not contribute any new concept and, therefore, are

included in what other authors have already

explained. The blue characteristics refer to concepts

related to the content of the annotations, the green

ones have to do with the spatial dimension, the yellow

ones with the temporal dimension and the red ones

with the interactivity. These four axes are explained

later. The three characteristics marked in purple are

generic concepts of AR, so they will not be included

as specific to the annotations. Finally, those that have

been marked in dark green have more to do with the

technical part than with the conceptual part of the

annotations, so they will not be discussed here.

Once all the characteristics had been classified and analyzed, those that were redundant or that could be included in more generic ones were eliminated. In addition, some interrelations were modified to identify which characteristics would be treated together and which separately. The result of this analysis is shown in Figure 2b.

Based on this analysis and the result shown in Figure 2b, the essential characteristics that must be defined during the design of an AR annotation are presented below. These characteristics are grouped around four axes: the content, the location (of both the anchoring and the virtual information), the temporality and the interaction allowed to the user.

To design the content of an annotation, it is essential to define, on the one hand, its functionality (extend, emphasize or enrich the physical world) in order to choose between annotations that name, describe, add, modify or direct. On the other hand, it is necessary to determine its degree of complexity, taking into account the amount of information and its visual composition. Once these two aspects are delimited, the type of annotation to be developed must be chosen (labels, icons, highlights, aids, indicators, X-rays, explosion diagrams or transmedia material).
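The three content decisions can be captured in a single record. The following Python sketch is an assumption-laden illustration: the enumeration names are hypothetical, aids, guides and indicators are grouped into one member following Keil et al., and the 1 to 5 scales for information amount and visual complexity are invented for the example, since the paper does not prescribe a scale.

```python
from dataclasses import dataclass
from enum import Enum


class Functionality(Enum):
    EXTEND = "extend"
    EMPHASIZE = "emphasize"
    ENRICH = "enrich"


class Descriptor(Enum):
    NAME = "name"
    DESCRIBE = "describe"
    ADD = "add"
    MODIFY = "modify"
    DIRECT = "direct"


class AnnotationType(Enum):
    LABEL = "label"
    ICON = "icon"
    HIGHLIGHT = "highlight"
    AID_GUIDE_INDICATOR = "aid/guide/indicator"
    X_RAY = "x-ray"
    EXPLOSION_DIAGRAM = "explosion diagram"
    TRANSMEDIA = "transmedia material"


@dataclass(frozen=True)
class ContentDesign:
    # 1) functionality and the kind of description it leads to
    functionality: Functionality
    descriptor: Descriptor
    # 2) complexity; the 1 (low) to 5 (high) scale is a hypothetical choice
    information_amount: int
    visual_complexity: int
    # 3) the annotation type chosen once 1) and 2) are fixed
    annotation_type: AnnotationType

    def __post_init__(self) -> None:
        for name in ("information_amount", "visual_complexity"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")
```

For instance, `ContentDesign(Functionality.EXTEND, Descriptor.NAME, 1, 1, AnnotationType.LABEL)` would describe a simple text label that names an object.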

Figure 2: Conceptual relationship, classification (a) and synthesis (b) of the AR annotations characteristics.

In addition to the content, the spatial dimension of the annotation must be carefully designed. To do this, the location of the anchoring and the location of the virtual information must be defined. Each must have a reference system, and the two do not necessarily have to share it; for example, the anchoring of an annotation can use the world coordinate system while the virtual information uses the user's coordinate system and moves with him or her. In addition, the virtual information can use one reference system for its position and a different one for its orientation; for example, a label can be located at a fixed point in the world but always be oriented towards the user. The possible reference systems are: user, physical object and world. Besides the reference system, the degree of complexity of the anchoring location must be determined. In turn, the location of the virtual information must have limits on both its freedom of movement and its distance from the anchoring. Depending on this distance and the characteristics of the application, it may be necessary to draw a line or some other type of connector between the virtual information and the anchoring.
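The distance limits and the optional connector lend themselves to a small geometric check. The Python sketch below is hypothetical: `Placement`, `constrain` and the `connector_threshold` parameter are illustrative names, both points are assumed to be expressed in the same coordinate system, and a Euclidean distance band is assumed as the movement constraint.

```python
import math
from dataclasses import dataclass
from enum import Enum
from typing import Tuple

Vec3 = Tuple[float, float, float]


class ReferenceSystem(Enum):
    USER = "user"
    PHYSICAL_OBJECT = "physical object"
    WORLD = "world"


@dataclass
class Placement:
    position_frame: ReferenceSystem     # frame used for the position
    orientation_frame: ReferenceSystem  # may differ, e.g. world position + user orientation
    min_distance: float                 # allowed distance band from the anchoring
    max_distance: float                 # (units are an assumption, e.g. metres)
    connector_threshold: float          # hypothetical: beyond this, draw a connector line


def constrain(anchor: Vec3, proposed: Vec3, p: Placement) -> Tuple[Vec3, bool]:
    """Clamp the virtual information to the allowed distance from its anchoring.

    Both points are assumed to be expressed in the same coordinate system.
    Returns the corrected position and whether a connector should be drawn.
    """
    offset = [b - a for a, b in zip(anchor, proposed)]
    dist = math.sqrt(sum(c * c for c in offset))
    if dist == 0.0:
        direction = (0.0, 1.0, 0.0)  # assumed default: push the content "up"
    else:
        direction = tuple(c / dist for c in offset)
    clamped = min(max(dist, p.min_distance), p.max_distance)
    position = tuple(a + d * clamped for a, d in zip(anchor, direction))
    return position, clamped > p.connector_threshold
```

With `Placement(ReferenceSystem.WORLD, ReferenceSystem.USER, 0.2, 1.0, 0.5)`, which describes a label fixed in the world but oriented towards the user, a proposed position 5 units away along the z axis is pulled back to `(0.0, 0.0, 1.0)` and a connector is requested.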

In the temporal dimension of the annotation, three aspects must be taken into account. The first is variability, that is, how the virtual information changes over time. The second is visibility, since an annotation does not always have to be visible to the user. There are five strategies to control the visibility of annotations: fixed (the virtual information is always visible), temporary (it is only visible at a specific moment and for a certain time), spatial (it is visible when the user is in a certain location), on demand (the user controls which annotation is visible at each moment) and filtered (the visibility of the annotation depends on the current state of the application and the user). The third is existence, that is, whether the virtual information exists constantly (regardless of whether it is always visible or only shown in certain circumstances) or, on the contrary, only exists as a result of certain events.
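The five visibility strategies can be evaluated by a single method against a snapshot of the application state. In this Python sketch, the `Context` snapshot, the spherical region used for spatial visibility and the application-supplied predicate used for filtering are all hypothetical choices; variability and existence are deliberately not modelled here.

```python
import math
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Optional, Tuple

Point = Tuple[float, float, float]


class VisibilityStrategy(Enum):
    FIXED = "fixed"
    TEMPORARY = "temporary"
    SPATIAL = "spatial"
    ON_DEMAND = "on demand"
    FILTERED = "filtered"


@dataclass
class Context:
    """Hypothetical snapshot of application and user state."""
    now: float                    # seconds since the session started
    user_position: Point
    user_toggled_on: bool = False  # on-demand switch controlled by the user
    state: dict = field(default_factory=dict)


@dataclass
class Visibility:
    strategy: VisibilityStrategy
    start: float = 0.0                     # TEMPORARY: start time (s)
    duration: float = 0.0                  # TEMPORARY: visible window (s)
    centre: Point = (0.0, 0.0, 0.0)        # SPATIAL: centre of the region
    radius: float = 0.0                    # SPATIAL: assumed spherical region
    predicate: Optional[Callable[[Context], bool]] = None  # FILTERED rule

    def is_visible(self, ctx: Context) -> bool:
        s = self.strategy
        if s is VisibilityStrategy.FIXED:
            return True
        if s is VisibilityStrategy.TEMPORARY:
            return self.start <= ctx.now < self.start + self.duration
        if s is VisibilityStrategy.SPATIAL:
            return math.dist(ctx.user_position, self.centre) <= self.radius
        if s is VisibilityStrategy.ON_DEMAND:
            return ctx.user_toggled_on
        # FILTERED: delegate to an application-supplied rule, hidden if none.
        return bool(self.predicate and self.predicate(ctx))
```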

Finally, in the design phase of an AR annotation, the degree of interaction allowed to the user must be determined. For this, it is necessary to choose between: annotations that are created offline and are static (they can only be viewed, not interacted with); annotations that are interactive but cannot be edited; annotations that can be edited; and annotations that are created online by the users, who choose both the content and the location.
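The four interaction levels are naturally ordered from least to most permissive, which suggests an ordered enumeration. The Python sketch below assumes, as an interpretation not stated in the text, that each level includes the capabilities of the levels below it; the helper function names are hypothetical.

```python
from enum import IntEnum


class InteractionLevel(IntEnum):
    """Interaction allowed to the user, from least to most permissive.

    Assumption: each level includes the capabilities of the levels below it.
    """
    STATIC = 1        # created offline; can only be viewed
    INTERACTIVE = 2   # can be interacted with, but not edited
    EDITABLE = 3      # content can be modified
    USER_CREATED = 4  # created online by users, who choose content and location


def can_interact(level: InteractionLevel) -> bool:
    return level >= InteractionLevel.INTERACTIVE


def can_edit(level: InteractionLevel) -> bool:
    return level >= InteractionLevel.EDITABLE


def users_choose_location(level: InteractionLevel) -> bool:
    return level >= InteractionLevel.USER_CREATED
```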

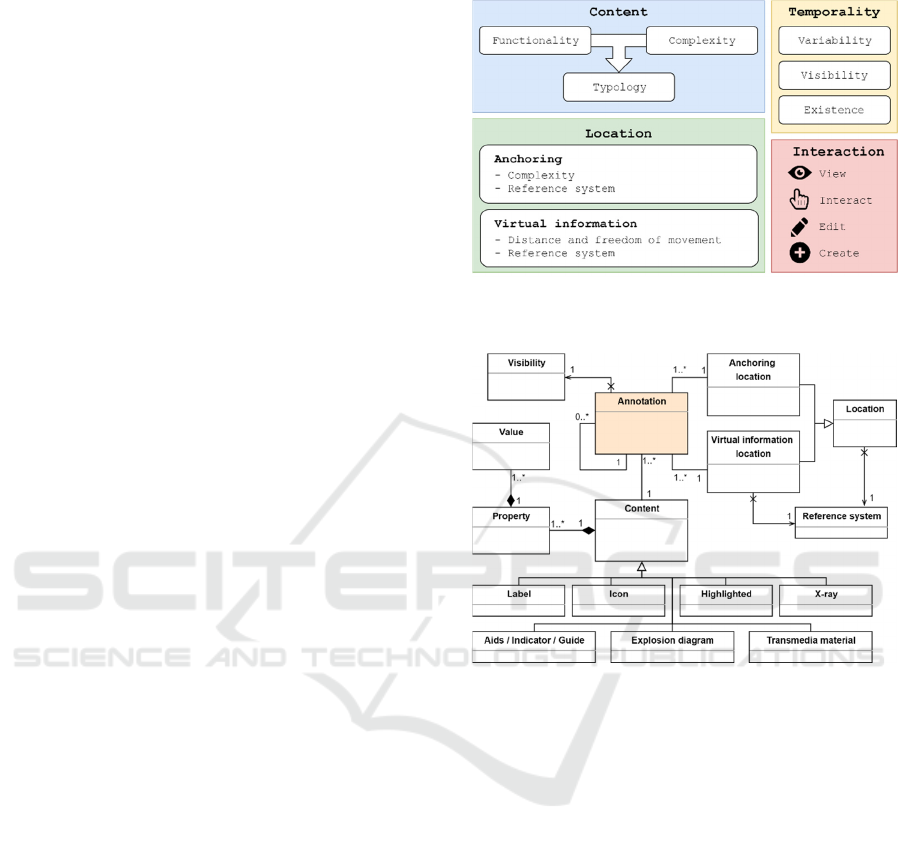

Figure 3 shows the four essential axes that must be defined when designing an AR annotation and the characteristics of each of them. From this taxonomy of AR annotations, a data model has been designed with the aim of supporting any type of AR annotation, regardless of its typology, its functionality and the device on which it is developed and visualized. The simplified class diagram representing this data model is shown in Figure 4.

In our model, the main class is “Annotation”, which has among its attributes one object of each of the following classes: “Anchoring location”, “Virtual information location”, “Content” and “Visibility”. In addition, editable annotations have a collection of “Annotation” objects whose purpose is to store the history of changes. Other important attributes of this class are the author and the creation date. It also has the necessary methods to manage the subscription to certain application events. The “Visibility” class allows the permanence of the annotation to be managed, whether fixed, temporary, spatial, on demand or filtered.
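The “Annotation” class can be sketched in Python as follows. This is an illustrative approximation of the class diagram, not the authors' implementation: the location, content and visibility members are left as untyped stand-ins, content is simplified to a dictionary, history snapshots are taken on each edit, and the `dispatch` function is a hypothetical application-side helper for the event subscriptions.

```python
import copy
from dataclasses import dataclass, field, replace
from datetime import datetime
from typing import Any, Dict, List, Set


@dataclass
class Annotation:
    author: str
    created: datetime
    anchoring_location: Any            # stand-in for an "Anchoring location" object
    virtual_information_location: Any  # stand-in for a "Virtual information location" object
    content: Dict[str, Any]            # simplified stand-in for a "Content" object
    visibility: Any                    # stand-in for a "Visibility" object
    editable: bool = False
    history: List["Annotation"] = field(default_factory=list)  # previous versions
    subscriptions: Set[str] = field(default_factory=set)       # application event names

    def edit(self, **changes: Any) -> None:
        """Update the content, first storing a snapshot of the current version."""
        if not self.editable:
            raise PermissionError("this annotation is not editable")
        snapshot = replace(
            self,
            content=copy.deepcopy(self.content),
            history=[],                       # snapshots do not nest histories
            subscriptions=set(self.subscriptions),
        )
        self.history.append(snapshot)
        self.content.update(changes)

    def subscribe(self, event: str) -> None:
        self.subscriptions.add(event)

    def unsubscribe(self, event: str) -> None:
        self.subscriptions.discard(event)


def dispatch(event: str, annotations: List[Annotation]) -> List[Annotation]:
    """Hypothetical application-side dispatcher: annotations subscribed to `event`."""
    return [a for a in annotations if event in a.subscriptions]
```

Keeping the history as a flat list of snapshots, each with an empty history of its own, avoids storing nested copies of earlier versions on every edit.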

Figure 3: Main characteristics to be defined when designing an AR annotation.

Figure 4: Data model of an AR annotation.

The “Anchoring location” and “Virtual information location” classes inherit their attributes from the “Location” class. One of these attributes is a “Reference system” object, which defines the reference frame of the anchoring position or of the virtual information, respectively. In addition, the “Anchoring location” object has an attribute that stores the coordinates needed to position the anchoring correctly in the chosen reference system. These coordinates can range from a single point in space to a complex point cloud. The “Virtual information location” object has an additional attribute of the “Reference system” class that specifies the orientation of the virtual information. It also has attributes that store the minimum and maximum distance of the virtual information to the anchoring and/or the user, the area in which the virtual information may be placed and, if present, the visual connection between the virtual information and the anchoring.
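The location classes could be sketched as follows. The simplifications are assumptions made for illustration: a reference system is reduced to a named origin (translation only, no rotation), the anchor point is taken as the centroid of the anchoring coordinates, the allowed area is an axis-aligned box in world coordinates, and only the distance to the anchoring (not to the user) is checked. All method names are hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


@dataclass
class ReferenceSystem:
    """Simplified frame: a name and an origin in world coordinates (no rotation)."""
    name: str
    origin: Point = (0.0, 0.0, 0.0)

    def to_world(self, p: Point) -> Point:
        return (self.origin[0] + p[0], self.origin[1] + p[1], self.origin[2] + p[2])


@dataclass
class Location:
    """Base class: every location is expressed in some reference system."""
    reference_system: ReferenceSystem


@dataclass
class AnchoringLocation(Location):
    # Anything from a single point in space up to a full point cloud.
    coordinates: List[Point] = field(default_factory=list)

    def anchor_point(self) -> Point:
        """World-space centroid of the anchoring coordinates."""
        if not self.coordinates:
            raise ValueError("anchoring location has no coordinates")
        n = len(self.coordinates)
        cx = sum(c[0] for c in self.coordinates) / n
        cy = sum(c[1] for c in self.coordinates) / n
        cz = sum(c[2] for c in self.coordinates) / n
        return self.reference_system.to_world((cx, cy, cz))


@dataclass
class VirtualInformationLocation(Location):
    orientation: Optional[ReferenceSystem] = None  # orientation of the virtual information
    min_distance: float = 0.0
    max_distance: float = math.inf
    # Allowed area as an axis-aligned box (min corner, max corner) in world coordinates.
    allowed_area: Optional[Tuple[Point, Point]] = None
    leader_line: bool = False  # whether a visual connection to the anchoring is drawn

    def is_valid_placement(self, position: Point, anchoring: AnchoringLocation) -> bool:
        """Check a candidate position (given in this location's frame) against the constraints."""
        world = self.reference_system.to_world(position)
        distance = math.dist(world, anchoring.anchor_point())
        if not self.min_distance <= distance <= self.max_distance:
            return False
        if self.allowed_area is not None:
            low, high = self.allowed_area
            return all(low[i] <= world[i] <= high[i] for i in range(3))
        return True
```

A full implementation would use a rigid transform (rotation plus translation) per reference system and would also check the distance to the user's viewpoint.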

GRAPP 2020 - 15th International Conference on Computer Graphics Theory and Applications

Depending on the complexity and functionality of the annotation, designers choose the type of AR annotation to implement. Following the recent work of Keil et al. (2018), our class diagram distinguishes between “Label”, “Icon”, “Highlighted”, “X-ray”, “Aids / Indicator / Guide”, “Explosion diagram” and “Transmedia material”. All these classes inherit from the “Content” class, which requires developers to define a set of key-value pairs. In our data model, these pairs are represented by a set of “Property” objects within the “Content” class. In addition to its id, name and type attributes, the “Property” class holds a set of “Value” objects, each with its own id and value attributes. In this way, any type of annotation can be implemented as a list of properties.
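The property-based “Content” class can be illustrated as follows. This is a hedged sketch: the `set`, `find` and `get` helpers, the sequential ids, the `XRay` class name and the property names used below (`text`, `font_size`) are hypothetical; only the Content/Property/Value structure comes from the text.

```python
from dataclasses import dataclass, field
from typing import Any, List, Optional


@dataclass
class Value:
    id: int
    value: Any


@dataclass
class Property:
    id: int
    name: str
    type: str
    values: List[Value] = field(default_factory=list)


@dataclass
class Content:
    """Base class: any content type is described only by a list of properties."""
    properties: List[Property] = field(default_factory=list)

    def find(self, name: str) -> Optional[Property]:
        """Return the property with this name, or None."""
        return next((p for p in self.properties if p.name == name), None)

    def set(self, name: str, type_name: str, *values: Any) -> Property:
        """Create the property if needed, then replace its values (ids are sequential)."""
        prop = self.find(name)
        if prop is None:
            prop = Property(id=len(self.properties) + 1, name=name, type=type_name)
            self.properties.append(prop)
        prop.type = type_name
        prop.values = [Value(id=i + 1, value=v) for i, v in enumerate(values)]
        return prop

    def get(self, name: str) -> List[Any]:
        """Plain values of a property, or an empty list if it is not defined."""
        prop = self.find(name)
        return [] if prop is None else [v.value for v in prop.values]


class Label(Content):
    """A "Label" needs no extra attributes; its data lives in properties."""


class XRay(Content):
    """Other content types ("Icon", "X-ray", ...) follow the same pattern."""
```

For example, `Label().set("text", "string", "Pressure valve")` defines a label without adding any new attribute to the class; a new content type only needs a subclass and a convention on property names.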

6 CONCLUSION AND FUTURE WORK

This work presents a study and characterization of AR annotations from the point of view of software engineering. To this end, we analyzed works that theorize on virtual elements in AR in general or on AR annotations in particular. From this analysis of the existing literature, we obtained a taxonomy of AR annotations that classifies the characteristics of these virtual elements along four axes: content, location, temporality and interaction. The taxonomy builds on a generic definition of AR annotation that encompasses every virtual element meeting the requirement proposed by Wither et al. (2009): having both a spatially dependent and a spatially independent component.

This taxonomy has allowed us to propose a data model capable of supporting any type of AR annotation, regardless of the hardware used. Following this first proposal, the next step will be to implement a system based on a more detailed version of the proposed class diagram and to carry out the relevant tests to refine it. This could provide a definitive solution to the incompatibility problem among AR annotation systems. Given the growing number of applications that use AR annotations, we believe that a common framework is of great importance, as it facilitates the work of developers and offers users greater interoperability when interacting with different types of AR annotations.

ACKNOWLEDGEMENTS

I.G-P acknowledges the support of the Spanish Ministry of Science, Innovation and Universities (program: “University Teacher Formation”) in carrying out this study.

REFERENCES

Bruno, F., Barbieri, L., Marino, E., Muzzupappa, M., D’Oriano, L., & Colacino, B. (2019). An augmented reality tool to detect and annotate design variations in an Industry 4.0 approach. The International Journal of Advanced Manufacturing Technology.

Caudell, T. P., & Mizell, D. W. (1992). Augmented reality: An application of heads-up display technology to manual manufacturing processes. Proceedings of the Twenty-Fifth Hawaii International Conference on System Sciences, Vol. 2, 659–669.

Chang, Y. S., Nuernberger, B., Luan, B., & Höllerer, T. (2017). Evaluating gesture-based augmented reality annotation. 2017 IEEE Symposium on 3D User Interfaces (3DUI), 182–185.

Feiner, S., MacIntyre, B., & Seligmann, D. D. (1992). Annotating the real world with knowledge-based graphics on a see-through head-mounted display.

Furness, T. A. (1986). The Super Cockpit and its Human Factors Challenges. Proceedings of the Human Factors Society Annual Meeting, 30(1), 48–52.

García-Pereira, I., Gimeno, J., Pérez, M., Portalés, C., & Casas, S. (2018). MIME: A Mixed-Space Collaborative System with Three Immersion Levels and Multiple Users. 2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR), 179–183.

Grønbæk, K., Hem, J. A., Madsen, O. L., & Sloth, L. (1994). Cooperative hypermedia systems: A Dexter-based architecture. Communications of the ACM, 37(2), 64–74.

Hansen, F. A. (2006). Ubiquitous Annotation Systems: Technologies and Challenges. Proceedings of the Seventeenth Conference on Hypertext and Hypermedia, 121–132.

Kahan, J., & Koivunen, M.-R. (2001). Annotea: An Open RDF Infrastructure for Shared Web Annotations. Proceedings of the 10th International Conference on World Wide Web, 623–632.

Keil, J., Schmitt, F., Engelke, T., Graf, H., & Olbrich, M. (2018). Augmented Reality Views: Discussing the Utility of Visual Elements by Mediation Means in Industrial AR from a Design Perspective. In J. Y. C. Chen & G. Fragomeni (Eds.), Virtual, Augmented and Mixed Reality: Applications in Health, Cultural Heritage, and Industry (pp. 298–312).

Müller, T. (2019). Challenges in representing information with augmented reality to support manual procedural tasks. ElectrEng, 3, 71–97.

Rekimoto, J., & Nagao, K. (1995). The World Through the Computer: Computer Augmented Interaction with Real World Environments. Proceedings of the 8th Annual ACM Symposium on User Interface and Software Technology, 29–36.

Tönnis, M., Plecher, D. A., & Klinker, G. (2013). Representing information – Classifying the Augmented Reality presentation space. Computers & Graphics, 37(8), 997–1011.

Wither, J., DiVerdi, S., & Höllerer, T. (2009). Annotation in outdoor augmented reality. Computers & Graphics, 33(6), 679–689.
