DA-NET: Monocular Depth Estimation using Disparity Maps Awareness

NETwork

Antoine Billy

1,2

and Pascal Desbarats

1

1

Laboratoire Bordelais de Recherches en Informatique, Universit

´

e de Bordeaux, France

2

Innovative Imaging Solutions, Pessac, France

Keywords:

Monocular Depth Estimation, Disparity Images, Stereo Vision, Convolutional Networks, U-NET.

Abstract:

Estimating depth from 2D images has become an active field of study in autonomous driving, scene reconstruc-

tion, 3D object recognition, segmentation, and detection. Best performing methods are based on Convolutional

Neural Networks, and, as the process of building an appropriate set of data requires a tremendous amount of

work, almost all of them rely on the same benchmark to compete between each other : The KITTI benchmark.

However, most of them will use the ground truth generated by the LiDAR sensor which generates very sparse

depth map with sometimes less than 5% of the image density, ignoring the second image that is given for

stereo estimation. Recent approaches have shown that the use of both input images given in most of the depth

estimation data set significantly improve the generated results. This paper is in line with this idea, we devel-

oped a very simple yet efficient model based on the U-NET architecture that uses both stereo images in the

training process. We demonstrate the effectiveness of our approach and show high quality results comparable

to state-of-the-art methods on the KITTI benchmark.

1 INTRODUCTION

The problem of estimating depth in an image got ad-

dressed by many authors as a crucial factor in scene

understanding applications like, for instance, au-

tonomous driving (Urmson et al., 2008), robot assis-

tance (Cunha et al., 2011), object detection (Volkhardt

et al., 2013) or augmented reality systems (Ong and

Nee, 2013). Being able to generate a dense depth map

from a 2D image turned out to be strongly appealing

as modern solutions require a specific equipment such

as LiDAR (Schwarz, 2010) in which costs might be-

come expensive, RGB-D camera (Henry et al., 2014)

only sustainable for indoor scenarios or stereo vision

systems (Bertozzi and Broggi, 1998) with a decreas-

ing precision for large-scale scenes. Predicting depth

from an image is relatively easy for a human brain

thanks to perspective and the known size of ordinary

objects but it can still be deceived by very simple op-

tical illusions. Monocular depth estimation by a com-

puter has thus proven to be a key challenge in recent

studies and several benchmarks

1

appeared during the

last decade, requiring more precision and accuracy for

the incoming methods.

1

http://www.cvlibs.net/datasets/kitti/eval depth.php

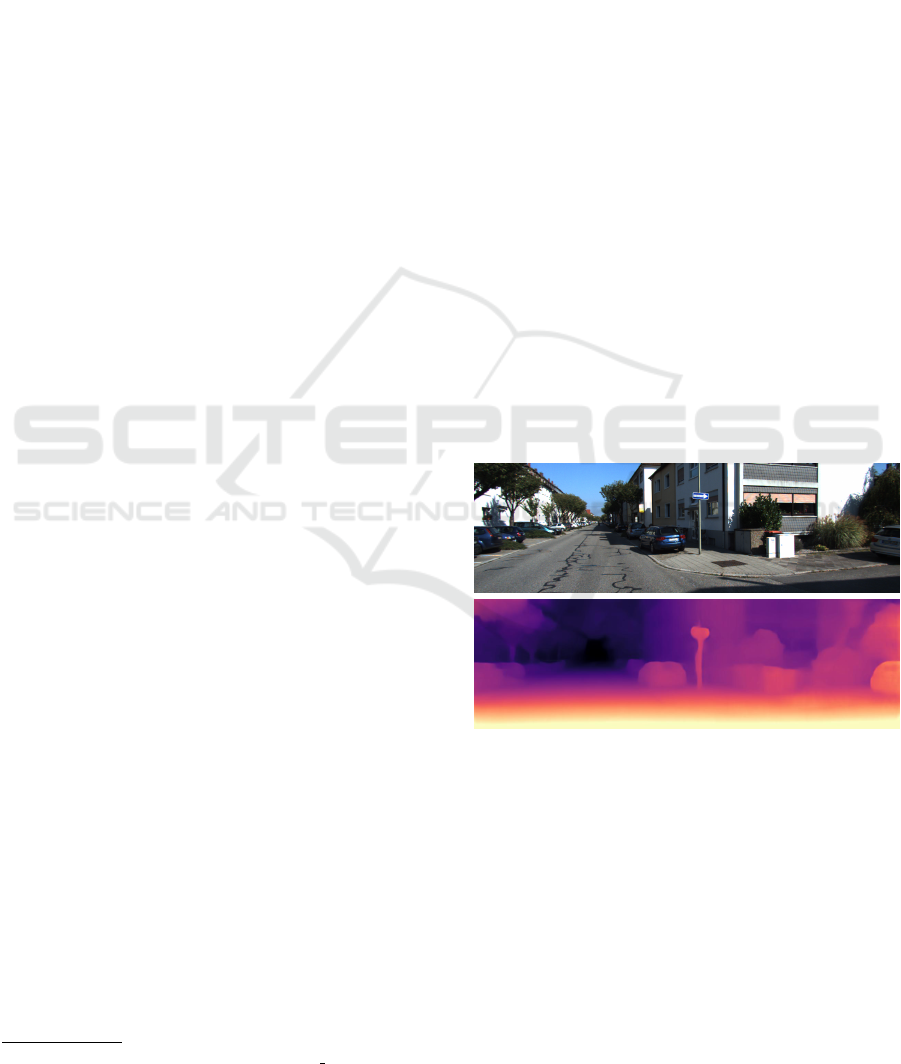

Figure 1: Example output from our model. From Top to

Bottom: Original image and its predicted depth.

Thanks to these benchmarks, it is obvious to see

that all of the best performing methods (Fu et al., ;

D

´

ıaz, ; Ren et al., 2019) make use of deep learn-

ing approaches, and more specifically Convolutional

Neural Networks. To do so, a large amount of data

is required for the models to be trained. Even with

self-supervised methods (Godard et al., 2017; Zhu

et al., 2018; Zhong et al., 2017) the need of ei-

ther a stereo system or a strict acquisition process

with strongly overlapping frames is needed. Building

such a database is a colossal job, this is why most of

Billy, A. and Desbarats, P.

DA-NET: Monocular Depth Estimation using Disparity Maps Awareness NETwork.

DOI: 10.5220/0009174405290535

In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2020) - Volume 5: VISAPP, pages

529-535

ISBN: 978-989-758-402-2; ISSN: 2184-4321

Copyright

c

2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

529

modern methods use the already existing data sets.

This moreover allows these methods to compare

themselves with each other on the same scenarios.

These data sets provide several sequences of images

with their corresponding depth as ground truth. There

is a limited number of solutions allowing the data

builder to acquire this information. Synthetic dataset

(Mayer et al., 2016; Billy et al., 2019) have been

developed to ensure perfect ground truth generation.

These images however lack of realism and fail to de-

pict real world artifacts regularly occurring in exter-

nal acquisitions. The use of a RGB-D camera such as

the Kinect (Zhang, 2012) has been exploited in (Sil-

berman et al., 2012). This sensor is suitable for close-

range indoor scenarios but can not be applied for large

outdoor scenes. LiDAR sensors appear to be the best

candidates for our application but requires high en-

try costs. Moreover, a LiDAR sensor will generate

a sparse depth map that will need both realignment

and interpolation processes. Finally, a stereo sys-

tem might be embedded to generate dense depth map.

This layout is yet not enough on its own because of

the lack of precision increasing with the scene depth.

(Geiger et al., 2012) took advantage of the last two so-

lutions, combining the strength of the LiDAR and the

consistency of stereo vision models, to build a widely

used dataset : The so-called KITTI benchmark. De-

spite the fact that the data from KITTI allow us to use

both LiDAR and stereo acquisitions, almost all of the

existing methods only use the LiDAR depth map for

the training. The role of this paper is to show that by

taking into account both stereo images and building

their corresponding disparity map, we can perform

state of the art results with a very simple network ar-

chitecture.

2 RELATED WORKS

Depth prediction from single view has gained in-

creasing attention in the computer vision community

thanks to the recent advances in deep learning. Si-

multaneous Localization and Mapping (Thrun, 2008)

or Structure from Motion (Ullman, 1979) methods

turned out to be powerful tools able to accomplish this

mission but will require several images of the same

scene with an important overlap between two con-

secutive frames in order to work efficiently and can

not be classified in the single image depth estimation

category. Classic depth prediction approaches em-

ploy hand-crafted features and probabilistic graphical

models (Delage et al., 2007), (Mutimbu and Robles-

Kelly, 2013), (Saxena et al., 2007) to yield regularized

depth maps, usually making strong assumptions on

the scene geometry. Moreover, formulating depth es-

timation as a Markov Random Field (MRF) learning

problem as in (Schwarz, 2010) might result to some

issues. As exact MRF learning and inference are in-

tractable in general, most of these approaches em-

ploy approximation methods such as multiconditional

learning (MCL) or particle belief propagation (PBP).

These approximations require complex modeling pro-

cess and limit their applications to very specific sce-

narios. Furthermore, recently developed deep convo-

lutional architectures significantly outperformed pre-

vious methods in terms of depth estimation accuracy,

speed and versatility.

An exhaustive review of CNN single-image depth

estimator has been made by (Koch et al., ) in ad-

dition with a standardized evaluation protocol. One

of the first truly competitive CNN for single-image

depth prediction has been developed by (Eigen et al.,

2014) using a two scale deep network. Unlike most

other previous work in single image depth estimation,

they do not rely on hand crafted features or an initial

oversegmentation and instead learn a representation

directly from the raw pixel values. Several works have

built upon the success of this approach using tech-

niques, improving the overall precision of the estima-

tion. These approaches however rely on having high

quality, pixel aligned, ground truth depth at training

time, and are only based on LiDAR velodyne data.

(Zhu et al., 2018; Zhong et al., 2017) proposed self-

supervised methods whose training phase does not re-

quire ground truth data. Similarly, (Garg et al., 2016)

offered an unsupervised CNN for depth prediction.

They train a network for monocular depth estimation

using an image reconstruction loss. However, their

image formation model is not fully differentiable and

thus have to perform a Taylor approximation to lin-

earize their loss, resulting in an objective that is more

challenging to optimize and again do not take fully

advantage of the stereo data.

This principle have been exploited with the build of

the DispNet architecture (Mayer et al., 2016), later

improved by (Godard et al., 2017). They introduce the

integration of disparity in their loss function by tak-

ing account of the synthetic depth map developed by

(Mayer et al., 2016) and the left-right consistency em-

ployed in (Godard et al., 2017). Our approach is based

on the use of unsupervised cues such as (Godard et al.,

2017) and use both images in the stereo pair equiva-

lently to define our loss function but use the strength

of supervised methods to enhance the generated re-

sults. A quantitative comparison between our meth-

ods and (Godard et al., 2017) is detailed in a further

section, showing that we outperform their prediction

in almost all metrics.

VISAPP 2020 - 15th International Conference on Computer Vision Theory and Applications

530

3 THE DA-NET MODEL

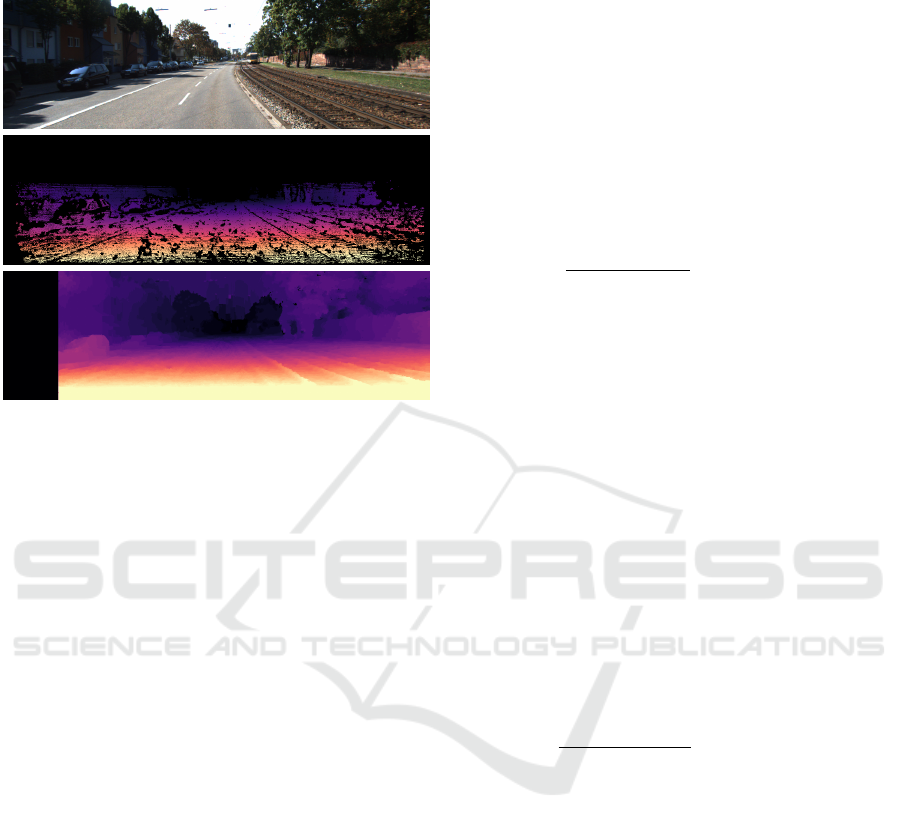

Figure 2: Example data given by KITTI. Top to Bottom:

original RGB image, LiDAR depth map, Computed dispar-

ity map.

This section describes our single image depth pre-

diction network. We introduce a new depth estima-

tion training loss, including a Disparity Map aware-

ness term. This allow us to train our model with fully

dense images. Indeed, as shown in figure 2, the depth

image generated by LiDAR acquisition is very sparse

and might need strong interpolation to generate a fi-

nal dense image. This interpolation process generates

a rough interpretation of the acquired scene and could

easily miss fine details or even small objects such as

road signs, trees or,far away, even pedestrians or cars.

On the other hand, a disparity map generated by a

stereo vision algorithm will directly generate a depth

value for every pixel in the image, resulting in a

denser depth estimation. A thorough study of stereo

vision algorithms has been exposed by (Scharstein

et al., 2001) and several approaches might be used

to generate the finest disparity map from rectified im-

ages. Semi Global matching (SGBM) (Hirschm

¨

uller,

2008) is known to produce more accurate depths and

is an integral part of many of the state-of-the-art stereo

algorithms. We train a CNN to model the complex

non-linear transformation which converts an image to

a depth-map. The loss we use for learning this CNN

is the photometric difference between the input im-

age, and the inverse warped target image (the other

image in the stereo pair). This loss is highly corre-

lated with the prediction error as it can be used to ac-

curately rank two different depth-maps even without

using ground-truth labels.

Training Loss. Similar to (Godard et al., 2017), we

also formulate our problem as the minimization of a

photometric reprojection error at training time. Our

final loss L (4) is computed as a combination of three

main terms.

Photometric Loss. Following (Pillai et al., 2019),

the similarity between the target image I

t

and the syn-

thesized target image image

ˆ

I

t

is computed using the

Structural Similarity (SSIM) (Pillai et al., 2019) term

combined with a L1 norm as shown in 3:

L

p

= α

1

1 − SSIM(I

t

,

ˆ

I

t

)

2

+ (1 −α

1

)

I

t

−

ˆ

I

t

(1)

Smoothness Loss. In order to regularize the dispar-

ities in textureless low-image gradient regions, we in-

corporate an edge-aware term based on the generated

disparity map. The effect of each of the pyramid-

levels is decayed by a factor of 2 on downsampling,

starting with a weight of 1 for the 0

th

pyramid level.

L

s

=

|

δ

x

δ

t

|

e

−

|

δ

x

I

t

|

+

|

δ

y

δ

t

|

e

−

|

δ

y

I

t

|

(2)

Disparity Awareness Loss. Finally, our main con-

tribution is in the integration of our Disparity Aware-

ness loss. This loss is extremely similar to the first

criterion, however, instead of comparing the gener-

ated image I

t

with the LiDAR output

ˆ

I

t

, we compute

its difference with the generated disparity map D

t

.

L

d

= α

2

1 − SSIM(I

t

, D

t

)

2

+ (1 − α

2

)

k

I

t

− D

t

k

(3)

Final Loss. Ultimately, a binary mask is applied

both the the LiDAR and Disparity images. This mask

ensures that non relevant pixels (LiDAR wholes or

left-most pixels on the disparity map) don’t interfere

in the final loss computation.

L = αL

p

+ βL

d

+ γL

s

(4)

Whereas L

p

forces the reconstructed image to be sim-

ilar to the LiDAR input and L

d

to the Disparity map,

L

s

smoothes the generated depth estimation.

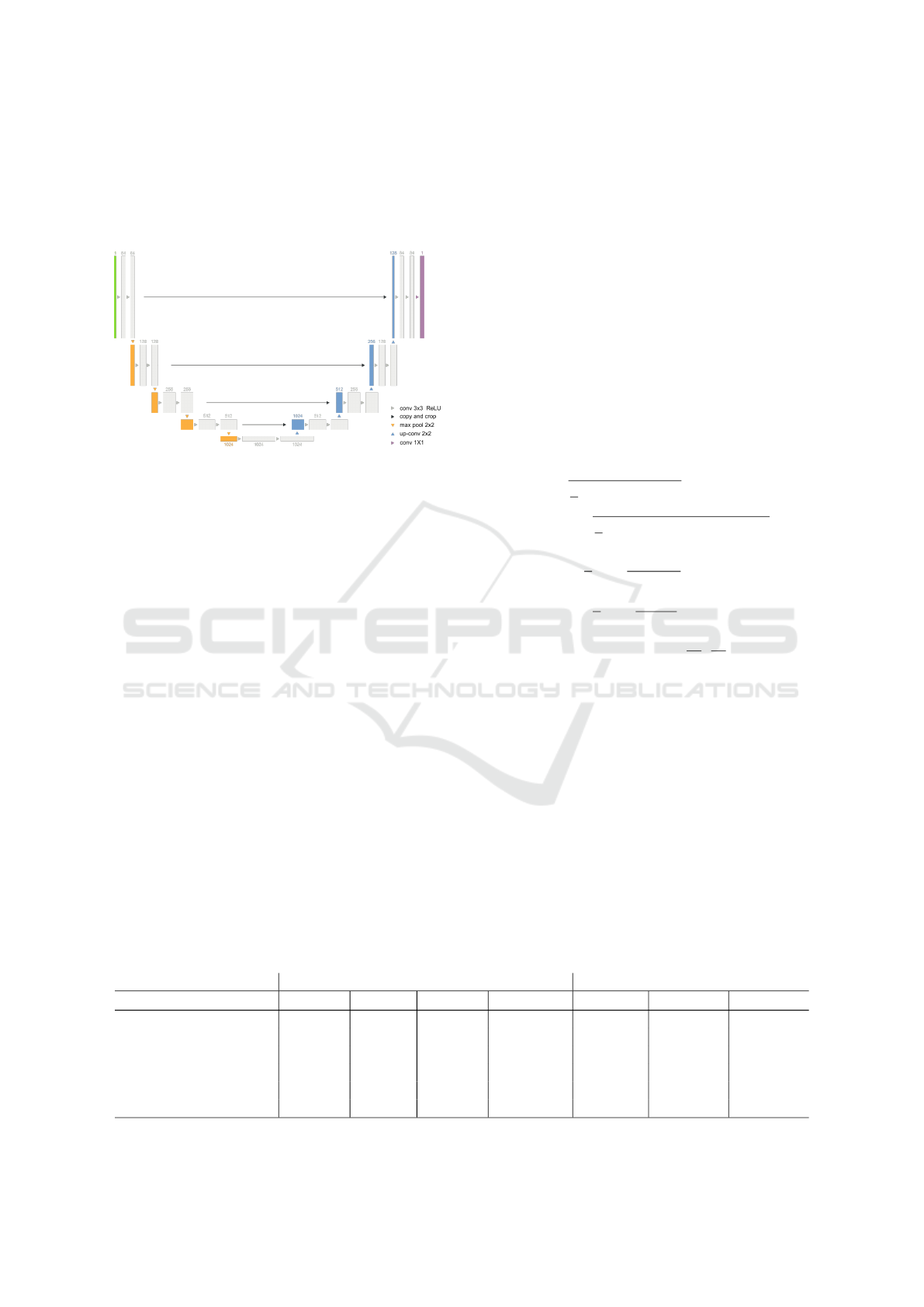

3.1 Network Architecture

Our depth estimation network is based on the gen-

eral U-Net architecture (Ronneberger et al., 2015). As

shown in Figure 3, this architecture enables to repre-

sent the input image at different scales, while taking

DA-NET: Monocular Depth Estimation using Disparity Maps Awareness NETwork

531

advantage of high level features for each step. Al-

though such method has been introduced for medical

image segmentation, we demonstrate hereafter how it

can be efficiently used for monocular depth prediction

when trained using our proposed loss function.

Figure 3: Our network is based on the general U-Net archi-

tecture.

4 EXPERIMENTS AND RESULTS

In this section, we compare the performances of our

approach to several state-of-the-art methods. We

applied straightforward data augmentation by hori-

zontally flipping the images and performed random

gamma, brightness, and color shifts by sampling from

uniform distributions for each color channel sepa-

rately. We compare two different variants of our

method, with (ours) and without (ours No DA) Dis-

parity Awareness to evaluate its impact. We evalu-

ate our models, on the Eigen data split KITTI to al-

low comparison with previously published monocular

methods.

4.1 The KITTI Dataset

The KITTI dataset by (Geiger et al., 2012) was intro-

duced in IJRR in 2013. Instead of utilizing the entire

KITTI dataset, it is common to follow the Eigen split.

This split contains 23,488 images from 32 scenes for

training and 697 images from 29 scenes for testing.

We use the data split of (Eigen et al., 2014). Ex-

cept in ablation experiments, for training we follow

Zhou et al.’s pre-processing to remove static frames.

This results in 39,810 monocular images for train-

ing and 4,424 for validation. We use the same in-

trinsic parameters for all images, setting the principal

point of the camera to the image center and the focal

length to the average of all the focal lengths in KITTI.

For fair comparison with state-of-the-art single view

depth prediction, we evaluate our results on the same

cropped region of interest.

4.2 Evaluation Protocol

In addition with the dataset, a development kit is also

given by KITTI to evaluate our methods. The follow-

ing metrics are computed in this kit:

• RMSE:

q

1

T

∑

T

iεT

d

i

− d

gt

i

2

• RMSE log:

q

1

T

∑

T

iεT

log(d

i

) − log(d

gt

i

)

2

• Sq. relative:

1

T

∑

T

iεT

k

d

i

−d

gt

i

k

2

d

gt

i

• Abs. relative:

1

T

∑

T

iεT

|

d

i

−d

gt

i

|

d

gt

i

• Accuracies: % of d

i

s.t. max(

d

i

d

gt

i

,

d

gt

i

d

i

) = δ < thr

Even though these statistics are good indicators for

the general quality of predicted depth maps, they

could be delusive. Particularly, the standard metrics

are not able to directly assess the planarity of planar

surfaces or the correctness of estimated plane orienta-

tions. Furthermore, it is of high relevance that depth

discontinuities are precisely located, which is not re-

flected by the standard metrics. This allows us yet to

easily compare our results with others approaches as

shown in Tables 1 and 2.

Table 1: Quantitative results. Comparison of our method to existing methods on the KITTI Eigen split. Best results are shown

in bold. Except for (Eigen et al., 2014), all the results have been directly taken from their papers, as we use the exact same

split for evaluation. This table shows that our method outperforms state of the art approaches in almost all metrics.

Lower is better Higher is better

Method Abs Rel Sq Rel RMSE RMSE log δ < 1.25 δ < 1.25

2

δ < 1.25

3

Train Set Mean 0.361 4.826 8.102 0.377 0.638 0.804 0.894

(Eigen et al., 2014) 0.214 1.605 6.563 0.292 0.673 0.884 0.957

(Yang et al., 2018) 0.198 1.202 5.977 0.266 0.72 0.901 0.932

(Godard et al., 2017) 0.148 1.344 5.927 0.247 0.803 0.922 0.964

Ours No DA 0.166 1.401 6.321 0.284 0.701 0.899 0.918

Ours 0.112 0.961 5.641 0.223 0.786 0.944 0.971

VISAPP 2020 - 15th International Conference on Computer Vision Theory and Applications

532

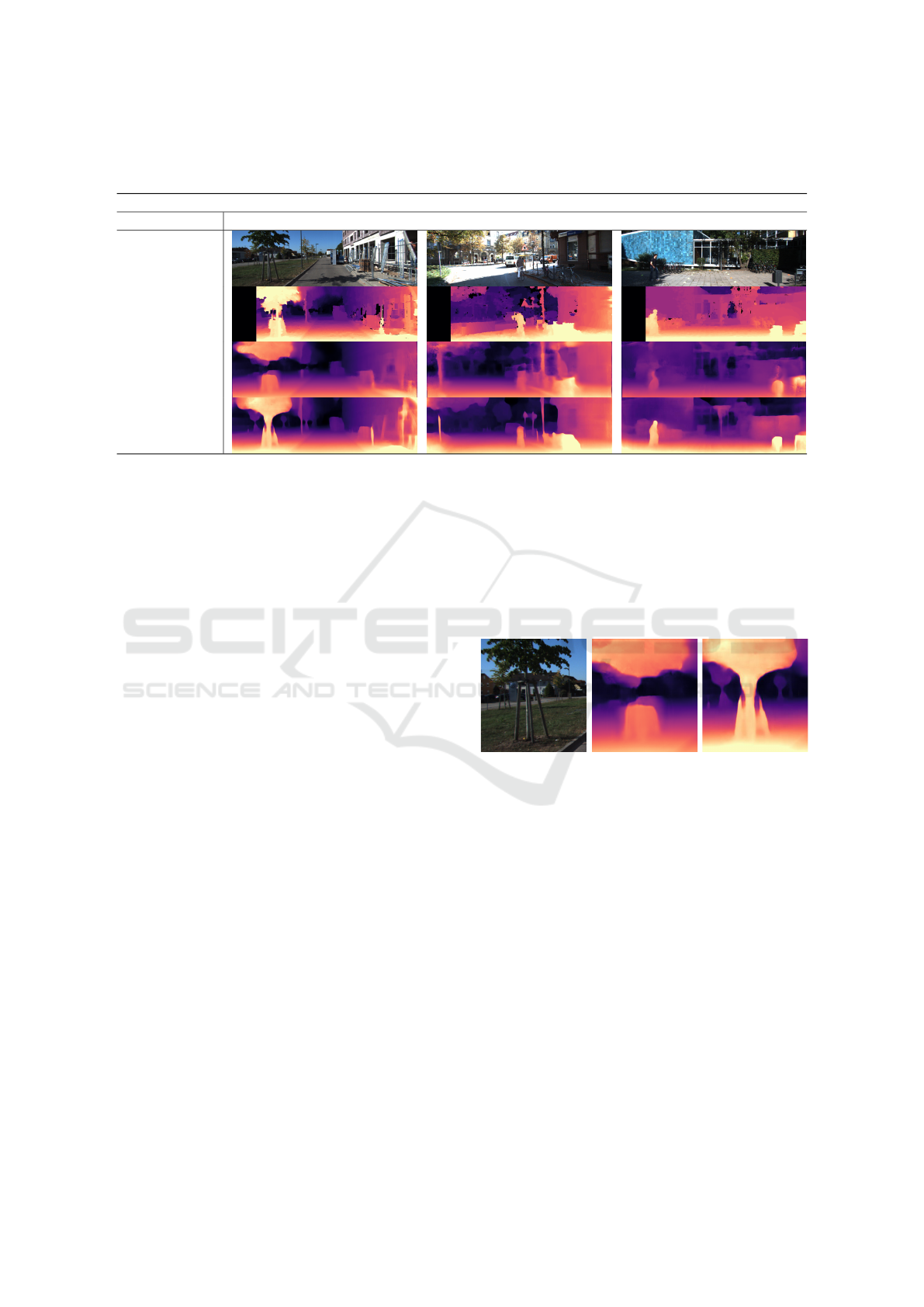

Table 2: Qualitative results on KITTI. Our method produces superior visual results on distinct scenarios thanks to its Disparity

Awareness. Smaller foreground objects such as trees or road signs are clearly better detected in our method.

Qualitative results on the KITTI Eigen Split

Method Scenario 1 Scenario 2 Scenario 3

Input image

(Hirschm

¨

uller,

2008)

(Godard et al.,

2017)

Ours

4.3 Results Analysis

Quantitative results in Table 1 unambiguously high-

light the competitiveness of our method with respect

to state-of-the-art approaches.

Our scores outperform all the tested methods in

every pixel-wise metrics that average the offset be-

tween the prediction and the ground truth. This

proves that our proposed model provides more ac-

curate depth prediction compared to other methods.

This is confirmed by the accuracy evaluation where

our method provides better scores for both δ = 1.25

2

and δ = 1.25

3

. However, for δ < 1.25 (Godard et al.,

2017) produces better results. This is due to the fact

that the depth maps generated by SGBM tend to be

less precise as the depth increases. Indeed, the depth

value of a pixel is directly correlated with the offset

from its correspondence in the second image. As this

offset is a discrete value in pixel unit, pixels corre-

sponding to faraway objects only have a limited set of

values. In contrast, foreground pixels are significantly

easier to distinguish, allowing a visible improvement

of state-of-the-art results as illustrated in Table 2.

Here, we can see that SGBM tends to create arti-

facts in the reconstruction, as the consistency of the

disparity map lacks of regularization. The method

presented in (Godard et al., 2017) succeeds in gener-

ating smoother results. However, fine structures such

as tree trunks or sign post might disappear in the re-

construction. Our approach achieves in both smooth

prediction with no artifacts, thanks to the combination

of photometric loss and disparity awareness, as well

as fine structure recovering as the network encoder-

decoder architecture is able to handle a large variety

of structure scale during prediction.

This scenario is outlined even bolder in Figure 4

where a comparison is made between (Godard et al.,

2017) and our method. This points out expressly the

advantage of using disparity maps. The tree is clearly

visible in the input image but its outlines are partially

erased in (Godard et al., 2017) cutting the tree in two

halves. Thanks to the Disparity Awareness, our meth-

ods successfully segments the contours and the pre-

dicted depth fits the real world scenario.

(a) Input image (b) Godard et al. (c) Our method

Figure 4: Qualitative results on KITTI. These zoomed depth

maps show that our approach visually outperforms (Godard

et al., 2017) for the foreground objects segmentation, pro-

ducing better results thanks to its Disparity Awareness.

5 CONCLUSION

In this paper we have presented a simple yet efficient

deep neural network for monocular depth estimation.

We proposed a novel photometric loss that is able

to take advantage of disparity map consistency while

ensuring the regularity of the predicted depth image.

Along with the proposed loss, we showed that the use

of both stereo images to generate a dense disparity

map instead of just the one given by the LiDAR sen-

sor is a strong tool to predict satisfying dense depth

maps that even outperform qualitatively and quanti-

tatively state-of-the-art methods. Further works will

DA-NET: Monocular Depth Estimation using Disparity Maps Awareness NETwork

533

include evaluation on other datasets as well as stud-

ies on temporal consistency to apply our methods to

videos.

REFERENCES

Bertozzi, M. and Broggi, A. (1998). GOLD: A parallel real-

time stereo vision system for generic obstacle and lane

detection. IEEE Transactions on Image Processing,

7(1):62–81.

Billy, A., Pouteau, S., Desbarats, P., Chaumette, S., and

Domenger, J. P. (2019). Adaptive slam with synthetic

stereo dataset generation for real-time dense 3d re-

construction. In VISIGRAPP 2019 - Proceedings of

the 14th International Joint Conference on Computer

Vision, Imaging and Computer Graphics Theory and

Applications, volume 5, pages 840–848.

Cunha, J., Pedrosa, E., Cruz, C., Neves, A., and Lau, N.

(2011). Using a depth camera for indoor robot local-

ization and navigation. In DETI/IEETA-University of

Aveiro.

Delage, E., Lee, H., and Ng, A. Y. (2007). Automatic

single-image 3d reconstructions of indoor Manhattan

world scenes. Springer Tracts in Advanced Robotics,

28.

D

´

ıaz, R. Soft Labels for Ordinal Regression. Technical

report.

Eigen, D., Puhrsch, C., and Fergus, R. (2014). Depth map

prediction from a single image using a multi-scale

deep network. In Advances in Neural Information

Processing Systems, volume 3, pages 2366–2374.

Fu, H., Gong, M., Wang, C., Batmanghelich, K., and Tao,

D. Deep Ordinal Regression Network for Monocular

Depth Estimation. Technical report.

Garg, R., Vijay Kumar, B. G., Carneiro, G., and Reid, I.

(2016). Unsupervised CNN for single view depth es-

timation: Geometry to the rescue. In Lecture Notes in

Computer Science (including subseries Lecture Notes

in Artificial Intelligence and Lecture Notes in Bioin-

formatics), volume 9912 LNCS, pages 740–756.

Geiger, A., Lenz, P., and Urtasun, R. (2012). Are we ready

for autonomous driving? the KITTI vision benchmark

suite. In Proceedings of the IEEE Computer Society

Conference on Computer Vision and Pattern Recogni-

tion, pages 3354–3361.

Godard, C., Mac Aodha, O., and Brostow, G. J. (2017). Un-

supervised monocular depth estimation with left-right

consistency. In Proceedings - 30th IEEE Conference

on Computer Vision and Pattern Recognition, CVPR

2017, volume 2017-Janua, pages 6602–6611.

Henry, P., Krainin, M., Herbst, E., Ren, X., and Fox,

D. (2014). RGB-D mapping: Using depth cameras

for dense 3D modeling of indoor environments. In

Springer Tracts in Advanced Robotics, volume 79,

pages 477–491. Springer Verlag.

Hirschm

¨

uller, H. (2008). Stereo processing by semiglobal

matching and mutual information. IEEE Transac-

tions on Pattern Analysis and Machine Intelligence,

30(2):328–341.

Koch, T., Liebel, L., Fraundorfer, F., and K

¨

orner, M. Eval-

uation of CNN-based Single-Image Depth Estimation

Methods. Technical report.

Mayer, N., Ilg, E., Hausser, P., Fischer, P., Cremers, D.,

Dosovitskiy, A., and Brox, T. (2016). A Large Dataset

to Train Convolutional Networks for Disparity, Opti-

cal Flow, and Scene Flow Estimation. In Proceedings

of the IEEE Computer Society Conference on Com-

puter Vision and Pattern Recognition, volume 2016-

Decem, pages 4040–4048.

Mutimbu, L. and Robles-Kelly, A. (2013). A relaxed fac-

torial Markov random field for colour and depth es-

timation from a single foggy image. In 2013 IEEE

International Conference on Image Processing, ICIP

2013 - Proceedings, pages 355–359.

Ong, S. and Nee, A. (2013). Virtual and augmented reality

applications in manufacturing.

Pillai, S., Ambrus¸, R., and Gaidon, A. (2019). SuperDepth:

Self-supervised, super-resolved monocular depth esti-

mation. In Proceedings - IEEE International Confer-

ence on Robotics and Automation, volume 2019-May,

pages 9250–9256.

Ren, H., El-khamy, M., and Lee, J. (2019). Deep Robust

Single Image Depth Estimation Neural Network Us-

ing Scene Understanding.

Ronneberger, O., Fischer, P., and Brox, T. (2015). U-

net: Convolutional networks for biomedical image

segmentation. In Lecture Notes in Computer Science

(including subseries Lecture Notes in Artificial Intel-

ligence and Lecture Notes in Bioinformatics), volume

9351, pages 234–241. Springer Verlag.

Saxena, A., Sun, M., and Ng, A. Y. (2007). Learning 3-D

scene structure from a single still image. In Proceed-

ings of the IEEE International Conference on Com-

puter Vision.

Scharstein, D., Szeliski, R., and Zabih, R. (2001). A

taxonomy and evaluation of dense two-frame stereo

correspondence algorithms. In Proceedings - IEEE

Workshop on Stereo and Multi-Baseline Vision, SMBV

2001, pages 131–140.

Schwarz, B. (2010). industry perspective technology focus

lIDAR Mapping the world in 3D. Technical report.

Silberman, N., Hoiem, D., Kohli, P., and Fergus, R.

(2012). Indoor segmentation and support inference

from RGBD images. In Lecture Notes in Computer

Science (including subseries Lecture Notes in Artifi-

cial Intelligence and Lecture Notes in Bioinformatics),

volume 7576 LNCS, pages 746–760.

Thrun, S. (2008). Simultaneous localization and mapping.

Ullman, S. (1979). The interpretation of structure from mo-

tion. Proceedings of the Royal Society of London. Se-

ries B, Containing papers of a Biological character.

Royal Society (Great Britain), 203(1153):405–426.

Urmson, C., Anhalt, J., Bagnell, D., Baker, C., Bittner,

R., Clark, M. N., Dolan, J., Duggins, D., Galatali,

T., Geyer, C., Gittleman, M., Harbaugh, S., Hebert,

M., Howard, T. M., Kolski, S., Kelly, A., Likhachev,

M., McNaughton, M., Miller, N., Peterson, K., Pil-

nick, B., Rajkumar, R., Rybski, P., Salesky, B., Seo,

Y.-W., Singh, S., Snider, J., Stentz, A., Whittaker,

VISAPP 2020 - 15th International Conference on Computer Vision Theory and Applications

534

W. R., Wolkowicki, Z., Ziglar, J., Bae, H., Brown, T.,

Demitrish, D., Litkouhi, B., Nickolaou, J., Sadekar,

V., Zhang, W., Struble, J., Taylor, M., Darms, M., and

Ferguson, D. (2008). Autonomous driving in urban

environments. Journal of Field Robotics, 25(8):425–

466.

Volkhardt, M., Schneemann, F., and Gross, H. M. (2013).

Fallen person detection for mobile robots using 3D

depth data. In Proceedings - 2013 IEEE International

Conference on Systems, Man, and Cybernetics, SMC

2013, pages 3573–3578.

Yang, N., Wang, R., Stuckler, J., and Cremers, D. (2018).

Deep Virtual Stereo Odometry : Monocular Direct

Sparse Odometry. European Conference on Computer

Vision, pages 1–17.

Zhang, Z. (2012). Microsoft kinect sensor and its effect.

Zhong, Y., Dai, Y., and Li, H. (2017). Self-Supervised

Learning for Stereo Matching with Self-Improving

Ability.

Zhu, A., Yuan, L., Chaney, K., and Daniilidis, K. (2018).

EV-FlowNet: Self-Supervised Optical Flow Estima-

tion for Event-based Cameras. Robotics: Science and

Systems Foundation.

DA-NET: Monocular Depth Estimation using Disparity Maps Awareness NETwork

535