Plant Species Identification using Discriminant Bag of Words (DBoW)

Fiza Murtaza (1, a), Umber Saba (1, b), Muhammad Haroon Yousaf (1, 2, c) and Serestina Viriri (3, d)

1 Computer Engineering Department, University of Engineering and Technology Taxila, Pakistan

2 Swarm Robotics Lab-NCRA, University of Engineering and Technology Taxila, Pakistan

3 University of KwaZulu-Natal, Durban, South Africa

Keywords:

Plant Species Identification, SURF, Kernel Descriptors, ImageClef, Flavia, Bag of Words.

Abstract:

Plant species identification is necessary for protecting biodiversity, which is declining rapidly throughout the world. This research focuses on plant species identification against simple and complex backgrounds using computer vision techniques. Intra-class variability and inter-class similarity are the key challenges in large plant species datasets. In this paper, multiple plant organs (leaves, scanned leaves and flowers) are classified using hand-crafted features for identification of plant species. We propose a novel encoding scheme named Discriminant Bag of Words (DBoW) to identify plant species from multiple organs. DBoW extracts class-specific codewords and assigns a weight to each codeword to signify its discriminative power. We evaluated the proposed method on two publicly available datasets, Flavia and ImageClef. The proposed method achieved classification accuracies of 98% on the Flavia dataset and 94% on the LeafScan organ of the ImageClef dataset.

1 INTRODUCTION

Manual identification of plants is time-consuming, complex and frustrating for a layperson because of the specialized botanical terminology involved. The difficulties encountered in manual classification have motivated the use of computer vision to automate the process (Kala et al., 2016a). Researchers have since developed a range of approaches to automate plant species identification. Automatic classification of plant species is important for scientists and botanists who wish to obtain a set of features that describe plant structure. The availability of relevant technologies, remote access to databases, and new techniques in image processing and pattern recognition have made automated species identification a reality. Automatic plant species identification using computer vision has applications in weed identification, species discovery, plant taxonomy, nature reserve management, etc.

Datasets can be categorized by the plant organs used for identification. The most common organ used for identification is the leaf, because it is the most abundant organ in nature and the easiest to obtain in field studies throughout the year. Although the flower, stem, fruit and entire plant can also be used to identify plant species, they are not adequately addressed in the literature because of their complexity and their unavailability throughout the year. Some examples of different plant organs are shown in Figure 1.

a https://orcid.org/0000-0001-8259-7389

b https://orcid.org/0000-0000-0000-0000

c https://orcid.org/0000-0001-8255-1145

d https://orcid.org/0000-0002-2850-8645

A combination of hand-crafted and deep learning features is proposed in (Nguyen et al., 2017) for identifying plant species from leaf and flower organs. In (Nguyen et al., 2017), Convolutional Neural Networks (CNN) and Kernel Descriptors (KDES) are used in parallel for comparison. Experimental results show that hand-crafted features outperform deep features on simple images with plain backgrounds. Deep learning performs better in natural and complex environments, but data augmentation is required to expand a small dataset into a large one. Furthermore, techniques such as Borda Count (BC) (Goëau et al., 2012) and Inverse Rank Position (IRP) (Goëau et al., 2012) are used to combine the results of different plant organs, but they did not perform well for all types of organs.
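Borda Count fusion can be illustrated with a small sketch. It assumes each organ image yields a ranked list of candidate species and awards n − p points to the species at 0-based position p of a list of length n; this is the textbook Borda rule rather than the exact variant used by Goëau et al. (2012), and the function name `borda_count` and the species labels are hypothetical.

```python
from collections import defaultdict


def borda_count(rankings):
    """Fuse several ranked lists of species with the Borda count.

    rankings: one list per organ image, each ordering species from best to worst.
    A species at 0-based position p in a list of length n earns n - p points.
    Returns all species sorted by total points (ties broken alphabetically).
    """
    points = defaultdict(int)
    for ranking in rankings:
        n = len(ranking)
        for position, species in enumerate(ranking):
            points[species] += n - position
    return sorted(points, key=lambda s: (-points[s], s))


# Hypothetical rankings returned for a leaf, a flower and a stem image.
fused = borda_count([["A", "B", "C"], ["B", "C", "A"], ["B", "A", "C"]])
print(fused)  # ['B', 'A', 'C']
```

Here species B collects 2 + 3 + 3 = 8 points, A collects 6 and C collects 4, so B wins even though the leaf ranking alone preferred A.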

A deep learning-based model, LeafNet, is proposed in (Barré et al., 2017) for plant identification using the Flavia dataset (Wu et al., 2007). Since Flavia is a small dataset with 32 plant species, data augmentation was performed to generate 44242 images from 1526 images before training the network, which achieved 97.9% classification accuracy. Applying deep learning to smaller datasets depends on data augmentation, which is time-consuming and adds overhead. Kala et al. (Kala et al., 2016b) proposed an approach for leaf classification using the convexity moment of polygons. In (Kala et al., 2016b), a series of experiments was performed on the Flavia dataset, and 92% accuracy was achieved using a Radial Basis Function (RBF) classifier. Geometric features, i.e. rectangularity, aspect ratio and circularity, are combined with the convexity moment of polygons to identify plant species. The technique works well for small images with plain backgrounds, such as those in Flavia, but is not suitable for complex datasets such as ImageClef (Goëau et al., 2014a).

Murtaza, F., Saba, U., Yousaf, M. and Viriri, S.

Plant Species Identification using Discriminant Bag of Words (DBoW).

DOI: 10.5220/0009161004990505

In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2020) - Volume 5: VISAPP, pages 499-505

ISBN: 978-989-758-402-2; ISSN: 2184-4321

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Figure 1: Different organs of the plant species (panels: Branch, Entire, Leaf, LeafScan, Flower, Fruit, Stem).
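The three geometric features named above can be sketched from a binary leaf mask. This sketch assumes common textbook definitions (rectangularity as area over bounding-box area, aspect ratio as bounding-box width over height, circularity as 4πA/P²); it does not reproduce the convexity moment or the exact formulas of Kala et al. (2016b), and `shape_features` is a hypothetical helper.

```python
import math

import numpy as np


def shape_features(mask):
    """Rectangularity, aspect ratio and circularity of a binary leaf mask.

    The perimeter is approximated by counting pixel edges between foreground
    and background, a crude estimate that overstates diagonal boundaries.
    """
    mask = np.asarray(mask, dtype=bool)
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    height = rows[-1] - rows[0] + 1  # bounding-box height in pixels
    width = cols[-1] - cols[0] + 1   # bounding-box width in pixels
    area = int(mask.sum())
    padded = np.pad(mask, 1).astype(int)  # zero border so edges at the frame count
    perimeter = int(np.abs(np.diff(padded, axis=0)).sum()
                    + np.abs(np.diff(padded, axis=1)).sum())
    return {
        "rectangularity": area / (height * width),
        "aspect_ratio": width / height,
        # 4*pi*A/P^2 is 1 for an ideal circle; the pixel-edge perimeter tends to lower it.
        "circularity": 4 * math.pi * area / perimeter ** 2,
    }


# A 10 x 20 rectangle (height x width) inside a larger empty frame.
leaf = np.zeros((30, 40), dtype=bool)
leaf[5:15, 10:30] = True
print(shape_features(leaf))
```

For a perfect rectangle the rectangularity is 1, so this feature mainly separates lobed or serrated leaves from compact ones; the circularity value depends strongly on how the perimeter is measured.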

Ghazi et al. (Mehdipour Ghazi et al., 2015) took on the challenge of validating their approach on a large, complex dataset with natural backgrounds, i.e. ImageClef, using the deep learning-based Principal Component Analysis Network (PCANet). PCANet is a simple but efficient deep learning network for image classification, which they combined with VLAD features built on the scale-invariant feature transform (SIFT). A classification accuracy of 21.2% was obtained for the LeafScan organ using PCANet. PlantNet (Goëau et al., 2014b) used hand-crafted features together with Random Maximum Margin Hashing (RMMH) for different plant organs and achieved a classification accuracy of 51% using an adaptive KNN rule.
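For readers unfamiliar with VLAD, the following sketch shows the standard encoding: each local descriptor (for example a 128-dimensional SIFT vector) is assigned to its nearest k-means centre, residuals are summed per centre, and the concatenated result is normalised. The signed square-root (power) normalisation is a common variant assumed here; this is not the PCANet pipeline of Mehdipour Ghazi et al. (2015), and the function name `vlad` is hypothetical.

```python
import numpy as np


def vlad(descriptors, centres):
    """VLAD encoding: sum residuals to the nearest centre, then normalise.

    descriptors: (N, d) local descriptors.
    centres: (k, d) codebook learned by k-means.
    Returns a (k * d,) vector.
    """
    # Squared Euclidean distance from every descriptor to every centre.
    d2 = ((descriptors[:, None, :] - centres[None, :, :]) ** 2).sum(axis=2)
    nearest = d2.argmin(axis=1)
    v = np.zeros_like(centres, dtype=float)
    for j in range(len(centres)):
        assigned = descriptors[nearest == j]
        if len(assigned):
            v[j] = (assigned - centres[j]).sum(axis=0)  # accumulated residuals
    v = np.sign(v) * np.sqrt(np.abs(v))  # signed square-root (power) normalisation
    norm = np.linalg.norm(v)
    return (v / norm if norm > 0 else v).ravel()  # global L2 normalisation
```

Unlike a plain bag-of-words histogram, which only counts assignments, VLAD keeps the direction in which descriptors deviate from each centre, so the encoding grows to k × d dimensions.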

In summary, the literature follows two streams for classifying plant species: hand-crafted features and deep learning. Although deep learning-based approaches obtain promising results, they come at the cost of dedicated computational resources and data augmentation for training. Hand-crafted features, however, give better results than deep learning-based methods when small and simple datasets are involved. Deep learning-based approaches also require more training time than hand-crafted features.

Figure 2: Flow diagram of the proposed methodology (input image: Leaf, LeafScan or Flower; segmentation; segmented image; feature extraction with KDES and DBoW; feature concatenation; classification; output: predicted plant species).

Much research has been done in this emerging field, as described in (Kala et al., 2016a; Nguyen et al., 2017; Barré et al., 2017; Kala et al., 2016b), but several problems in this domain remain unaddressed. There is considerable work on computer vision-based plant species identification in simpler scenarios, but identifying species under cluttered backgrounds, non-uniform illumination and viewpoint variation is still challenging, and there is ample room for new techniques in this domain.

In this paper, we propose a hand-crafted feature-based approach for plant species identification in simple as well as complex scenarios. Our contributions are as follows. First, we extract multiple feature descriptors to identify plant species from multiple organs, e.g. leaves and flowers, for better accuracy. Second, we propose an encoding scheme named Discriminant Bag of Words (DBoW) that can classify plant species with high accuracy on large and complex datasets. The detailed methodology and results are explained in Section 2 and Section 3, respectively.

2 PROPOSED METHODOLOGY

The proposed methodology for the identification of plant species is depicted in Figure 2. It consists of three steps: preprocessing/segmentation, feature description and classification. Because plant organs differ markedly in appearance, a different segmentation module is used for each organ type. For example, LeafScan images have a simple background, whereas Leaf images have a complex background (see Figure 5). Similarly, flowers differ from leaves in color and shape. Two different feature descriptors, KDES and speeded up robust features (SURF), are merged in the feature extraction module, and a Support Vector Machine (SVM) is then used for classification.

VISAPP 2020 - 15th International Conference on Computer Vision Theory and Applications

Figure 3: Segmentation results for (a) LeafScan, (b) Leaf and (c) Flower organs.

2.1 Preprocessing/Segmentation

Preprocessing improves the segmentation of plant organs before feature extraction. The CIELAB color space is used to segment the region of interest of each organ. The L channel represents lightness in the range [0, 100], with the darkest black at L = 0 and the brightest white at L = 100. The A channel represents the green–red component, with green in the negative direction and red in the positive direction, in the range [−128, 127]. The B channel represents the blue–yellow component, with blue in the negative direction and yellow in the positive direction, in the range [−128, 127]. CIELAB appears to be the most appropriate color space for segmenting all organ types. For example, to keep the green and yellow regions of a leaf, we can set thresholds on the A and B channels, respectively. We can also separate the dark and light parts of an image using the L channel. For all three organs (LeafScan, Flower and Leaf), we set the threshold L > 60 to ignore dark objects.

For Leaf and LeafScan images, the green region of the leaf is extracted by setting the threshold A < 50 to ignore dark red objects while retaining green objects. Since leaves may also be yellow, we set the threshold B > 0 to ignore the blue component, i.e. the sky region in the images, while retaining yellow objects. Sample segmentation results for the LeafScan and Leaf organs are shown in Figure 3. In Figure 3(a), the LeafScan image has a plain background, so the leaf can easily be extracted by multiplying the binary mask with the original RGB image. For the Leaf organ in Figure 3(b), the background is not plain but contains other leaves, stems, etc.; we therefore crop the region where the leaf is detected by applying the above thresholds in the CIELAB color space.

Because flowers vary widely in color and are surrounded by leaves, most segmentation algorithms, e.g. active contours, expectation maximization and watershed, cannot segment them accurately. However, flowers can be segmented using color thresholds in the CIELAB color space. For the A channel, we set the threshold A > −1 to ignore green objects, i.e. leaves. Similarly, for the B channel, we set the threshold B > −50 to ignore the sky region in flower images. Segmentation results for different flower organs are shown in Figure 3(c).
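The thresholds of this subsection can be collected into a small sketch. It assumes the image has already been converted to CIELAB with channels ordered (L, A, B) along the last axis, for example with `skimage.color.rgb2lab`; the helper names `organ_mask`, `apply_mask` and `crop_to_mask` and the `THRESHOLDS` table are hypothetical, and the strict inequalities mirror the "greater than"/"less than" wording above.

```python
import numpy as np

# Thresholds from Section 2.1 as (channel, comparison, value); channel 0 = L,
# 1 = A, 2 = B. Leaf and LeafScan share the same rules.
THRESHOLDS = {
    "leaf": [(0, ">", 60), (1, "<", 50), (2, ">", 0)],
    "leafscan": [(0, ">", 60), (1, "<", 50), (2, ">", 0)],
    "flower": [(0, ">", 60), (1, ">", -1), (2, ">", -50)],
}


def organ_mask(lab, organ):
    """Boolean foreground mask for an H x W x 3 CIELAB image."""
    mask = np.ones(lab.shape[:2], dtype=bool)
    for channel, op, value in THRESHOLDS[organ]:
        values = lab[..., channel]
        mask &= (values > value) if op == ">" else (values < value)
    return mask


def apply_mask(rgb, mask):
    """Zero out background pixels (plain-background LeafScan images)."""
    return rgb * mask[..., None]


def crop_to_mask(image, mask):
    """Crop to the bounding box of the detected region (cluttered Leaf images)."""
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return image  # nothing passed the thresholds: keep the full frame
    return image[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]
```

For a LeafScan image, `apply_mask(rgb, organ_mask(lab, "leafscan"))` keeps only the leaf pixels; for a cluttered Leaf image, `crop_to_mask(rgb, organ_mask(lab, "leaf"))` returns the bounding box of the detected region instead.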

2.2 Feature Extraction

After preprocessing, the next step is to extract discriminant features from each organ image. We propose a novel feature encoding method named Discriminant Bag of Words (DBoW), which combines a hand-crafted feature descriptor, i.e. the SURF descriptor, with a discriminant encoding method, as shown in Figure 4. KDES and DBoW features are extracted from each image in parallel and concatenated before being passed to the SVM classifier for final classification. The KDES- and DBoW-based feature extraction methods are explained in the following subsections.

2.2.1 Kernel Descriptors (KDES) Features

Kernel Descriptors (Bo et al., 2010) have proved robust for visual object and scene recognition. Different kernels are used in KDES, i.e. the gradient kernel (G-KDES), the RGB kernel (RGB-KDES) and the Local Binary Pattern kernel (LBP-KDES). We applied different types of KDES to different organ types and found that RGB-KDES works better for the flower organ and G-KDES works better for the leaf organ.

Figure 4: Feature extraction using the DBoW approach (panels: training images of species 1 to C, feature extraction per species, codebook generation, codebook weights generation and DBoW; a test image passes through feature extraction to give the DBoW feature vector).

In KDES, patch-level and image-level features are extracted. A reduced set of pixels is selected on a uniform grid, and a patch of predefined size is extracted around each selected pixel. A similarity measure (match kernel) between two image patches is then defined. k-means clustering is applied to the patch-level features to build a visual word dictionary. Finally, image-level features are computed over the learned dictionary using spatial pyramid matching (Bo et al., 2010).
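The image-level pooling step can be illustrated with a simplified spatial pyramid of visual-word histograms. This is a hard-assignment bag-of-words sketch, not the efficient match kernel used by Bo et al. (2010); the function name `spatial_pyramid_histogram` and its argument layout are assumptions.

```python
import numpy as np


def spatial_pyramid_histogram(words, xy, image_size, vocab_size, levels=3):
    """Concatenate visual-word histograms over a spatial pyramid.

    words: (N,) integer visual-word index of each patch.
    xy: (N, 2) patch centre coordinates (x, y) in pixels.
    image_size: (width, height) of the image.
    Level l splits the image into a 2**l x 2**l grid of cells.
    """
    width, height = image_size
    parts = []
    for level in range(levels):
        cells = 2 ** level
        # Grid cell of each patch, clipped so points on the far edge stay inside.
        cx = np.minimum((xy[:, 0] * cells // width).astype(int), cells - 1)
        cy = np.minimum((xy[:, 1] * cells // height).astype(int), cells - 1)
        for j in range(cells):
            for i in range(cells):
                in_cell = (cx == i) & (cy == j)
                parts.append(np.bincount(words[in_cell], minlength=vocab_size))
    return np.concatenate(parts)
```

With `vocab_size=1000` and `levels=3`, the output has (1 + 4 + 16) × 1000 = 21000 entries, the same length reported for KDES in Section 3.2.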

2.2.2 Discriminant Bag of Words (DBoW) Features

The proposed DBoW approach consists of four steps. First, SURF features are extracted from each organ image. Second, a codebook is generated for each plant species using k-means clustering. Third, discriminant codewords are selected from each codebook by learning a weight for each codeword, as proposed in (Murtaza et al., 2018). The idea behind discriminant codewords is to generate codewords that vote for the features of their own class. The major challenges in complex datasets are inter-class similarity and intra-class variability. With traditional codewords, features vote not only for the codewords of their own class but also for the codewords of other, similar classes. We therefore address this issue by finding, for each class, the codewords that have higher similarity with their own class. Finally, the discriminant codewords are used to encode the features of the training and test images into a fixed-length feature vector per image.
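The second step, building one codebook per species and stacking them, might look like the following sketch. A minimal NumPy version of Lloyd's k-means stands in for whatever k-means implementation the authors used; `kmeans`, `class_specific_codebook` and the returned `owners` array (the class that generated each codeword) are hypothetical names.

```python
import numpy as np


def kmeans(x, k, iters=20, seed=0):
    """Plain Lloyd's k-means returning (k, d) centres; a stand-in for any library k-means."""
    rng = np.random.default_rng(seed)
    centres = x[rng.choice(len(x), size=k, replace=False)].astype(float)
    for _ in range(iters):
        # Squared Euclidean distance from every point to every centre.
        dist = ((x[:, None, :] - centres[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        for c in range(k):
            members = x[labels == c]
            if len(members):  # an empty cluster keeps its previous centre
                centres[c] = members.mean(axis=0)
    return centres


def class_specific_codebook(features_by_class, k):
    """Cluster each class on its own and stack the K codewords of all C classes.

    features_by_class: list of (N_c, d) descriptor arrays (d = 64 for SURF), one per class.
    Returns (K*C, d) codewords and a (K*C,) array naming the class of each codeword.
    """
    codewords, owners = [], []
    for c, feats in enumerate(features_by_class):
        codewords.append(kmeans(feats, k))
        owners.append(np.full(k, c))
    return np.vstack(codewords), np.concatenate(owners)
```

Because each class is clustered in isolation, every codeword is tied to exactly one species, which is what later allows votes to be labelled as within-class or out-of-class.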

We integrate discriminant codewords with the traditional Bag of Words (BoW) method; hence we name the proposed method DBoW. The main steps for extracting DBoW features are given in Algorithm 1.

Multiplying the BoW histogram by the codeword weights (Step 8 of Algorithm 1) enhances the votes cast on codewords that mostly attract features of their own class and suppresses the votes cast on codewords that also attract features of other classes, which increases accuracy. The two feature vectors, DBoW and KDES, are then fused and passed to the SVM classifier for training and testing, resulting in plant species identification.
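The fusion step can be sketched as a per-image concatenation of the two feature blocks. The L2 normalisation of each block before concatenation is an assumption added here so that the much longer KDES block does not dominate the DBoW block; the text above states only that the vectors are fused. `fuse_features` is a hypothetical name.

```python
import numpy as np


def fuse_features(kdes, dbow, eps=1e-12):
    """Concatenate KDES and DBoW vectors after L2-normalising each block per image.

    kdes: (n_images, d1) array; dbow: (n_images, K*C) array.
    The per-block normalisation is an assumption made in this sketch.
    """
    def l2(block):
        block = np.asarray(block, dtype=float)
        return block / (np.linalg.norm(block, axis=1, keepdims=True) + eps)

    return np.hstack([l2(kdes), l2(dbow)])
```

The fused rows could then be passed to any SVM implementation, for example scikit-learn's `sklearn.svm.SVC`.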

3 EXPERIMENTAL RESULTS

3.1 Datasets

We used two publicly available datasets, Flavia (Wu et al., 2007) and ImageClef (Goëau et al., 2014a), to validate the proposed approach in simple as well as complex scenarios. The ImageClef dataset has more than sixty thousand images belonging to 500 plant species from Western Europe. In this paper, the leaf images (Leaf and LeafScan) and the Flower images are used. Table 1 gives details of the training and test sets.

We also conducted experiments on the Flavia dataset (Wu et al., 2007), which contains only the leaf organ: 1907 leaf images of 32 species, with about 50 to 60 images per species. As is standard practice, this dataset is divided into a 70% training set and a 30% test set. The main challenges of ImageClef are illustrated in Figure 5.


Algorithm 1: Steps required to extract DBoW features.

Input: Segmented images of plant organs.

Output: DBoW features for each plant organ.

1: Detect and extract SURF features for each organ image.

2: Extract K clusters from each class by applying k-means clustering to the training images of each class separately, giving class-specific codebooks. This results in K × C clusters, where C is the total number of plant species in the dataset.

3: Initialize two vectors A and A′ of length K × C with zeros, where A holds within-class assignments and A′ holds out-of-class assignments.

4: Compare the feature vectors in the training set one by one with the K × C codewords and find the best-matching codeword i using Euclidean distance.

5: If the feature vector and its nearest codeword i belong to the same class, add a vote to A[i]; otherwise add a vote to A′[i]. This gives the within-class and out-of-class assignments for each codeword.

6: For each codeword, compute the discriminant weight using the following equation:

$$
w[i] = \frac{A[i]}{A[i] + A'[i]}, \qquad \forall\, i \in \{1, 2, \dots, K \times C\} \qquad (1)
$$

A value of w[i] closer to 1 means that more of the feature vectors assigned to codeword i come from its own class.

7: Extract the BoW representation using these K × C codewords.

8: Compute the DBoW features by element-wise multiplication of the BoW representation with w, resulting in a K × C dimensional feature vector.
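Steps 3 to 8 of Algorithm 1 can be sketched in NumPy as follows, taking the stacked class-specific codebook and the owning class of each codeword as inputs. Assigning weight 0 to a codeword that receives no training votes is an assumption of this sketch (Equation (1) is 0/0 in that case); the function names are hypothetical, and the histogram is left unnormalised as in the algorithm.

```python
import numpy as np


def nearest_codeword(features, codewords):
    """Index of the Euclidean-nearest codeword for each feature row (Step 4)."""
    dist = ((features[:, None, :] - codewords[None, :, :]) ** 2).sum(axis=2)
    return dist.argmin(axis=1)


def discriminant_weights(train_feats, train_labels, codewords, owners):
    """Steps 3-6 of Algorithm 1: w[i] = A[i] / (A[i] + A'[i]).

    train_feats: (N, d) descriptors pooled from all training images.
    train_labels: (N,) class of the image each descriptor came from.
    owners: (K*C,) class whose codebook produced each codeword.
    """
    nearest = nearest_codeword(train_feats, codewords)
    same = owners[nearest] == train_labels
    n = len(codewords)
    a_in = np.bincount(nearest[same], minlength=n)    # A: within-class votes
    a_out = np.bincount(nearest[~same], minlength=n)  # A': out-of-class votes
    total = a_in + a_out
    # A codeword with no votes gets weight 0 (assumption of this sketch).
    return np.divide(a_in, total, out=np.zeros(n), where=total > 0)


def dbow_encode(image_feats, codewords, weights):
    """Steps 7-8: BoW histogram of one image, multiplied element-wise by w."""
    hist = np.bincount(nearest_codeword(image_feats, codewords), minlength=len(codewords))
    return hist * weights
```

For example, with two 1-D codewords at 0 (class 0) and 10 (class 1), training descriptors 0, 1, 9 and 2 labelled 0, 0, 1 and 1 give w = [2/3, 1]: the class-0 codeword also absorbed one class-1 descriptor, so its votes are damped.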

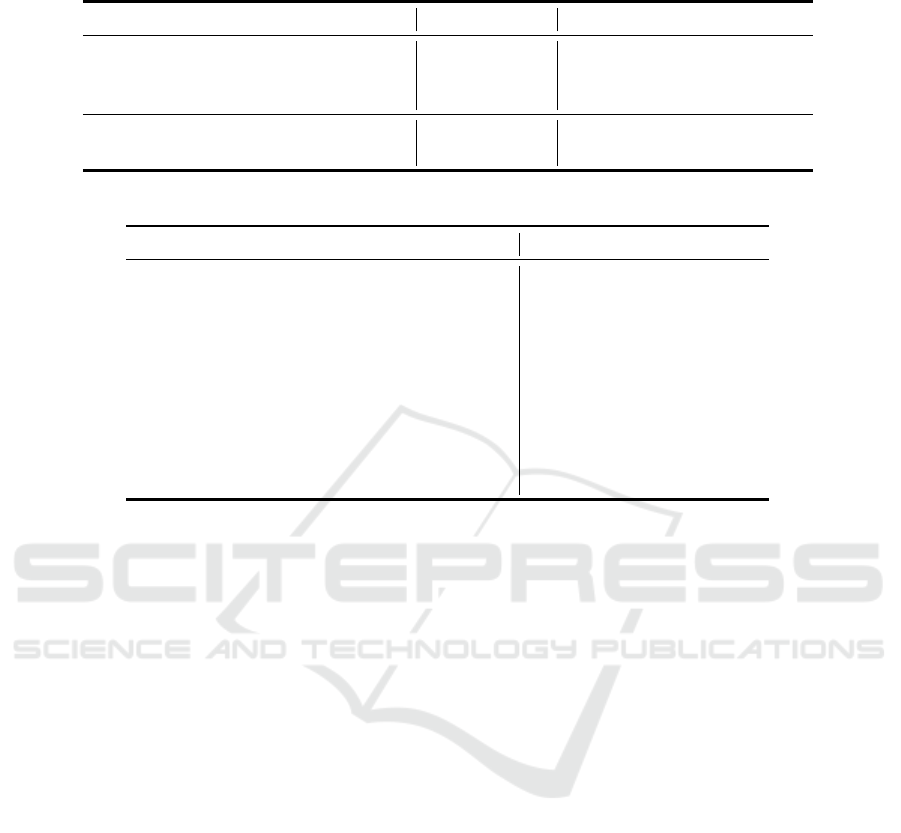

Table 1: Training and test sets provided by the ImageClef dataset (number of images).

| Plant organ | Leaf | LeafScan | Flower |
|---|---|---|---|
| Training set | 7754 | 11335 | 13164 |
| Test set | 2058 | 696 | 4559 |
| Total | 9812 | 12031 | 17723 |

Flavia is a less complex dataset than ImageClef and contains images with plain backgrounds. The leaf images were acquired by scanners or digital cameras against a plain background, so we did not perform segmentation on this dataset. The isolated leaf images contain blades only, without petioles, as shown in Figure 6. For both datasets, accuracy is used as the evaluation metric.

Figure 5: Some challenging images of ImageClef: (a) images of the same class with different resolutions; (b) images of different plant species with similar shapes; (c) diversity of leaf images within the same class; (d) images of complex, uncontrolled and cluttered environments.

Figure 6: Sample images from the 32 species of the Flavia dataset (panels numbered 1 to 32).

3.2 Implementation Details

For the ImageClef dataset, we observed that some classes in the test set have no images in the training set and vice versa, so we discarded those classes during evaluation. The classes are also imbalanced, which affects the accuracy of the proposed method; we therefore balanced the dataset by finding the minimum number of images m over all classes and randomly selecting m images from each class.
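The class filtering and balancing described above can be sketched as follows. It assumes each split is a list of (image_id, label) pairs, keeps only classes present in both splits, and undersamples each split separately to its own minimum class size m; whether the authors also balanced the test split is not stated, so that choice is an assumption, as is the name `balance_splits`.

```python
import random
from collections import defaultdict


def balance_splits(train, test, seed=0):
    """Drop classes missing from either split, then undersample each split.

    train, test: lists of (image_id, label) pairs.
    In each split, every retained class keeps m randomly chosen images,
    where m is the smallest retained class size in that split.
    """
    def by_class(pairs):
        groups = defaultdict(list)
        for image_id, label in pairs:
            groups[label].append(image_id)
        return groups

    train_groups, test_groups = by_class(train), by_class(test)
    shared = sorted(set(train_groups) & set(test_groups))  # classes in both splits
    rng = random.Random(seed)

    def undersample(groups):
        m = min(len(groups[c]) for c in shared)
        return [(i, c) for c in shared for i in rng.sample(groups[c], m)]

    return undersample(train_groups), undersample(test_groups)
```

Undersampling discards data from the larger classes; it is the simplest way to equalize class sizes, at the cost of using fewer training images per class.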

For KDES, we used 200 eigenvectors in patch-level feature extraction. For building the dictionary, we selected 1000 visual words. In image-level feature extraction, spatial pyramid matching is applied with 3 layers (1 × 1, 2 × 2 and 4 × 4 grids, i.e. 21 cells in total). Hence, each image is represented by a feature vector of length 21 × 1000 = 21000.

For DBoW, we used SURF as the feature descriptor and kept only 256 valid interest points per image, giving a 256 × 64 feature matrix for each image. We then performed class-specific k-means clustering with K = 128, resulting in a K × C dimensional feature vector for each image.
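As a worked check of these sizes on Flavia, where the codebook covers all C = 32 species:

$$
256 \times 64 = 16384 \ \text{SURF values per image}, \qquad K \times C = 128 \times 32 = 4096 \ \text{DBoW dimensions}.
$$

For ImageClef, C is the number of species retained after the class filtering described above, so the DBoW length scales with it.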

3.3 Results and Discussion

The classification results of the proposed approach on the ImageClef dataset using different combinations of KDES and DBoW features are shown in Table 2. The table has two parts. The first part compares results with and without segmentation of the organ images: segmentation yields higher accuracy on all three organs. Using G-KDES with segmentation, accuracy increases by about 4 percentage points for Flower and LeafScan and by about 1 percentage point for Leaf.

Table 2: Evaluation of the proposed method in terms of accuracy (%) on the ImageClef dataset using KDES features and their combination with DBoW features.

| Variant of the proposed method | Segmentation | LeafScan | Leaf | Flower |
|---|---|---|---|---|
| G-KDES | No | 85.45 | 36.91 | 34.50 |
| G-KDES | Yes | 89.42 | 38.04 | 38.90 |
| LBP-KDES | Yes | 85.50 | 40.11 | 35.02 |
| G-KDES+LBP-KDES | Yes | 92.34 | 40.51 | 35.60 |
| G-KDES+LBP-KDES+DBoW (Ours) | Yes | 94.03 | 42.11 | 54.75 |

Table 3: Comparison results in terms of accuracy (%) on the ImageClef dataset with state-of-the-art methods.

| Method | LeafScan | Flower | Leaf |
|---|---|---|---|
| Mica (Nguyen et al., 2017) | 78.3 | 10.9 | 24.3 |
| Mica Run 3 (Goëau et al., 2012) | 43.7 | 20.7 | 34.2 |
| Mica Run 1 (Goëau et al., 2012) | 43.7 | 19.5 | 34.2 |
| Sabanci Run 1 (Mehdipour Ghazi et al., 2015) | 21.6 | 18.9 | 11.1 |
| PlantNet Run 4 (Goëau et al., 2014b) | 54.1 | 36.6 | 16.5 |
| FINKI Run 3 (Wu et al., 2007) | 44.9 | 25.5 | 16.0 |
| IBM AU Run 2 (Goëau et al., 2014a) | 61.2 | 55.5 | 30.0 |
| Sabanci Run 3 (Chen et al., 2014) | 90.5 | 34.0 | 33.8 |
| Sabanci Run 2 (Mehdipour Ghazi et al., 2015) | 90.5 | 34.0 | 33.8 |
| G-KDES+LBP-KDES+DBoW (Ours) | 94.0 | 54.7 | 42.1 |

In the second part of Table 2, we combine G-KDES and LBP-KDES on the segmented images. This combination further improves accuracy for LeafScan (92.34%) and Leaf (40.51%), although for Flower it scores below G-KDES alone (35.60% versus 38.90%). Finally, we combine G-KDES and LBP-KDES with DBoW, and the results show that the proposed DBoW features complement the state-of-the-art KDES features, with the largest gain on Flower (from 35.60% to 54.75%). We attribute this boost in accuracy, when DBoW is combined with traditional KDES, to class-wise clustering and the generation of discriminant codewords.

Table 3 compares the proposed method with state-of-the-art methods on the ImageClef dataset. Our method achieves the highest accuracy on LeafScan (94.0%) and Leaf (42.1%), and on Flower (54.7%) it is second only to IBM AU Run 2 (55.5%). We attribute this to the discriminant encoding scheme, which enhances discriminant codewords and suppresses non-discriminant ones. The results indicate that this approach can help identify multiple species in large and complex datasets.

The classification accuracy of the proposed approach on the Flavia dataset is shown in Table 4. The proposed method achieves a higher accuracy (98.0%) than the other methods, the closest being Barré et al. (2017) at 97.9%. This indicates that the discriminant codewords contribute to a discriminative image representation built from hand-crafted features, i.e. SURF features.

4 CONCLUSIONS

In this paper, a discriminant codeword generation technique is proposed to identify plant species captured against challenging backgrounds. Experiments were conducted on two different types of datasets, i.e. Flavia and ImageClef. The Flavia dataset contains images of 32 species with simple backgrounds, while ImageClef is a very complex multi-organ dataset captured in natural environments. The results are promising on both datasets: the proposed method achieves 98% accuracy on the 32 species of Flavia and 94% accuracy on the LeafScan organ of ImageClef.

For future work, we envision using fusion techniques to combine different feature extraction techniques for different plant organs. Although flower and leaf are the most commonly used organs for plant species identification, as they remain available throughout the year, other plant organs such as stem and fruit can also be considered for plant species identification. Furthermore, this work can be extended with deep convolutional neural networks to evaluate their ability to identify plant species at a large scale.

Table 4: Comparison of the proposed method with other state-of-the-art methods on the Flavia dataset.

| Method | Accuracy (%) |
|---|---|
| Thi-Lan Le, 2014 (Nguyen et al., 2017) | 97.5 |
| Wu et al., 2007 (Wu et al., 2007) | 90.3 |
| Kadir, 2014 (Kadir, 2014) | 97.2 |
| Pierre Barré, 2017 (Barré et al., 2017) | 97.9 |
| Serestina Viriri, 2016 (Kala et al., 2016b) | 95.0 |
| G-KDES+LBP-KDES+DBoW (Ours) | 98.0 |

ACKNOWLEDGEMENTS

The authors would like to acknowledge the support of the Centre for Computer Vision Research (C²VR) and Swarm Robotics Lab-NCRA, University of Engineering and Technology (UET) Taxila, Pakistan.

REFERENCES

Barré, P., Stöver, B. C., Müller, K. F., and Steinhage, V. (2017). LeafNet: A computer vision system for automatic plant species identification. Ecological Informatics, 40:50–56.

Bo, L., Ren, X., and Fox, D. (2010). Kernel descriptors for visual recognition. In Advances in Neural Information Processing Systems, pages 244–252.

Chen, Q., Abedini, M., Garnavi, R., and Liang, X. (2014). IBM Research Australia at LifeCLEF2014: Plant identification task. In CLEF (Working Notes), pages 693–704.

Goëau, H., Bonnet, P., Barbe, J., Bakic, V., Joly, A., Molino, J.-F., Barthelemy, D., and Boujemaa, N. (2012). Multi-organ plant identification. In Proceedings of the 1st ACM International Workshop on Multimedia Analysis for Ecological Data, pages 41–44. ACM.

Goëau, H., Joly, A., Bonnet, P., Selmi, S., Molino, J.-F., Barthélémy, D., and Boujemaa, N. (2014a). LifeCLEF plant identification task 2014. In CLEF2014 Working Notes. Working Notes for CLEF 2014 Conference, Sheffield, UK, September 15-18, 2014, pages 598–615. CEUR-WS.

Goëau, H., Joly, A., Yahiaoui, I., Bakić, V., Verroust-Blondet, A., Bonnet, P., Barthélémy, D., Boujemaa, N., and Molino, J.-F. (2014b). PlantNet participation at LifeCLEF2014 plant identification task.

Kadir, A. (2014). A model of plant identification system using GLCM, lacunarity and Shen features. arXiv preprint arXiv:1410.0969.

Kala, J. R., Viriri, S., and Moodley, D. (2016a). Sinuosity coefficients for leaf shape characterisation. In Advances in Nature and Biologically Inspired Computing, pages 141–150. Springer.

Kala, J. R., Viriri, S., Moodley, D., and Tapamo, J. R. (2016b). Leaf classification using convexity measure of polygons. In International Conference on Image and Signal Processing, pages 51–60. Springer.

Mehdipour Ghazi, M., Yanıkoğlu, B., Aptoula, E., Muslu, Ö., and Özdemir, M. C. (2015). Sabanci-Okan system in LifeCLEF 2015 plant identification competition. CLEF (Conference and Labs of the Evaluation Forum).

Murtaza, F., Haroon Yousaf, M., and Velastin, S. A. (2018). DA-VLAD: Discriminative action vector of locally aggregated descriptors for action recognition. In 2018 25th IEEE International Conference on Image Processing (ICIP), pages 3993–3997. IEEE.

Nguyen, T. T.-N., Le, T.-L., Vu, H., Nguyen, H.-H., and Hoang, V.-S. (2017). A combination of deep learning and hand-designed feature for plant identification based on leaf and flower images. In Asian Conference on Intelligent Information and Database Systems, pages 223–233. Springer.

Wu, S. G., Bao, F. S., Xu, E. Y., Wang, Y.-X., Chang, Y.-F., and Xiang, Q.-L. (2007). A leaf recognition algorithm for plant classification using probabilistic neural network. In 2007 IEEE International Symposium on Signal Processing and Information Technology, pages 11–16. IEEE.
