Development of a Virtual Reality Environment for Rehabilitation of Tetraplegics

Gabriel Augusto Ginja (1, a), Renato Varoto (2, b) and Alberto Cliquet Jr. (1, 2, 3, c)

1 Bioengineering Post Graduate Program, University of São Paulo, Av. Trabalhador São Carlense, 400, CEP 13566-590, São Carlos, Brazil

2 Electrical and Computing Engineering Department, University of São Paulo, São Carlos, SP, Brazil

3 Orthopedics & Traumatology Department, Faculty of Medical Sciences, University of Campinas, Campinas, SP, Brazil

Keywords: Virtual Reality, Spinal Cord Injury, Biomechanics.

Abstract:

Treatments based on Virtual Reality have been successfully used in the motor rehabilitation of conditions such as Spinal Cord Injury and Stroke. Highly immersive virtual environments combined with biofeedback can be used to train functional activities in patients with these motor disabilities. This work details the development of a portable Virtual Reality environment to train upper-limb activities in Spinal Cord Injury subjects. The Virtual Reality environment depicts a personalized physiotherapy room in which the user trains the elbow and shoulder by reaching 5 spots on a table. The user also receives biofeedback on the position of both hands. Finally, this system will be integrated with a pilot biomechanical analysis using The Motion Monitor system to compare a group of patients before and after a 6-week intervention with Virtual Reality.

1 INTRODUCTION

Spinal Cord Injury (SCI) accounts for 50 to 1218 cases per million people worldwide according to Spinal Cord Injury Evidence (Devivo and Chen, 2011), and most of these cases are caused by vehicle accidents. SCI is classified by the neurological level of the injury, since motor and sensory functions below that level are impaired. Consequently, SCI affects social life and limits the capacity to perform Activities of Daily Living (ADLs). Most ADLs of tetraplegic individuals rely on reach and grasp movements (Varoto and Cliquet, 2015), and developing new rehabilitation techniques for these subjects requires complex biomechanical analysis of the upper limbs. Therefore, there is an increasing demand among tetraplegic patients for innovative and creative solutions that integrate technology with physiotherapy to improve rehabilitation.

One of the main goals of motor rehabilitation is to induce neuroplasticity in patients. Neuroplasticity, or neural adaptation, is the foundation of learning (Montgomery and Connolly, 2003): it is the brain's ability to reorganize itself in response to external stimuli and, consequently, to partially or completely recover motor and sensory functions. Examples of such stimuli are Functional Electrical Stimulation (FES) (Bergmann et al., 2019) and Motor Imagery. Mental practice of a muscular activity, as well as the visualization of a muscular movement, activates the same brain area that would be activated by the actual movement (Edmund Jacobson, 1932). One application of motor imagery is athletic training, in which athletes imagine the movements they would execute during a real competition. A study by Mizuguchi (Mizuguchi et al., 2012) reported that around 70 to 90 percent of a group of elite athletes agreed that motor imagery combined with real training improved their performance in competition. The study also concluded that motor imagery could be used in motor rehabilitation.

a https://orcid.org/0000-0002-1021-0701

b https://orcid.org/0000-0001-5333-7123

c https://orcid.org/0000-0002-9893-5204

An important example of motor imagery is Virtual Reality (VR). VR can be defined as an advanced interface system that renders a 3D environment in real time. It encompasses both non-immersive systems, such as video games on a PC, and immersive systems, such as the Oculus Rift and HTC Vive. Villiger (Villiger et al., 2015) performed a study with incomplete SCI subjects who used VR to train the lower limbs by flexing the ankle and knee in the VR environment. The results showed a boost in motivation and motor performance, as well as an increase in brain capacities.

Ginja, G., Varoto, R. and Cliquet Jr., A.

Development of a Virtual Reality Environment for Rehabilitation of Tetraplegics.

DOI: 10.5220/0009103002210226

In Proceedings of the 13th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2020) - Volume 1: BIODEVICES, pages 221-226

ISBN: 978-989-758-398-8; ISSN: 2184-4305

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


Other VR-based systems have helped to enhance motor rehabilitation. An and Park (An and Park, 2018) used a semi-immersive VR system to address standing balance in SCI subjects. Another example is an immersive VR environment combined with an instrumented data glove (Dimbwadyo-Terrer et al., 2016), used to train reaching movements, also in SCI subjects.

This paper describes the development of a VR environment to train upper-limb exercises in SCI subjects. The hardware chosen was the Oculus Quest (Facebook Technologies, Irvine, California, USA), and the VR environment was built with Unity 3D (Unity Technologies, Copenhagen, Denmark). The Oculus Quest is a portable device, so it can easily be used in hospitals and small spaces. This project is a collaboration between the Laboratory of Biocybernetics and Rehabilitation Engineering of the University of São Paulo and the Laboratory of Biomechanics and Rehabilitation of the Locomotor System of the University of Campinas, which contributed, respectively, by developing the VR system and by treating SCI patients. The final part of this paper describes the biomechanical analysis that will be used to validate the VR environment.

2 METHODOLOGY

2.1 Characterizing SCI Population and ADL Protocol

The selection criteria for the SCI population in this study were the following: tetraplegia; neurological level of lesion from C4 to C8 according to the ASIA Impairment Scale (AIS) of the American Spinal Injury Association (ASIA); no autonomic dysreflexia; and more than one year since the lesion.

The main goal of this work is to improve the ADLs of tetraplegic SCI subjects by training functional activities with VR. Patients with C8 lesions may still have some hand movement, whereas patients with C4 lesions can barely move their arms. For this reason, the protocol chosen for training in the VR environment is a movement that uses the shoulder and elbow to bring the hands to 5 spots on a table. Starting with the left hand on the bottom left spot, the patient tries to reach each of the other spots on the table. The same protocol is then performed with the right hand.

All patients will be divided into two groups: a control group and an intervention group that will train with VR. Subsequently, both groups will undergo an initial biomechanical analysis of the upper limbs. Lastly, the intervention group will undergo 6 weeks of VR training followed by another biomechanical analysis.

2.2 Head Mounted Display and Oculus Quest

A Head Mounted Display (HMD) is a headset used to display VR. Each HMD has two lenses, and each one shows an image slightly displaced relative to the other, which results in a 3D perception of the scene. This effect is called stereoscopy and is one of the foundations of VR, since it turns a 2D non-immersive experience into a 3D immersive one.
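The displacement between the two images comes from rendering the scene from two slightly separated viewpoints. As a minimal Python sketch (not the Oculus or Unity implementation), the function below places the left and right virtual eyes half an interpupillary distance (IPD) on either side of the head, along a unit vector pointing to the user's right. The function name and the 0.063 m default IPD are illustrative assumptions.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def eye_positions(head: Vec3, right: Vec3, ipd: float = 0.063) -> Tuple[Vec3, Vec3]:
    """Return (left_eye, right_eye) positions for stereo rendering.

    head  -- point midway between the eyes, in metres
    right -- unit vector pointing to the user's right
    ipd   -- interpupillary distance in metres (0.063 is an assumed typical value)
    """
    if not math.isclose(math.hypot(*right), 1.0):
        raise ValueError("right must be a unit vector")
    half = ipd / 2.0
    # Each eye is shifted half the IPD along the right vector, in opposite directions.
    left_eye = (head[0] - half * right[0], head[1] - half * right[1], head[2] - half * right[2])
    right_eye = (head[0] + half * right[0], head[1] + half * right[1], head[2] + half * right[2])
    return left_eye, right_eye
```

For a head at a height of 1.2 m with the right vector (1, 0, 0), the eyes sit about 3.15 cm to each side of the head centre; rendering the scene once from each point yields the two slightly displaced images.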

Another aspect of the HMD that improves the immersive experience is head tracking. The gyroscopes and accelerometers of the HMD are responsible for tracking rotation and position, respectively (the Oculus Quest also uses built-in cameras for positional tracking). Combined with stereoscopic vision, these sensors result in a 3D visual environment in which the user sees objects as if they were real. In addition to the visual experience of VR, some HMDs have input devices that capture the user's commands and turn them into actions in the virtual environment.

The Oculus Quest is a portable HMD with two controllers whose motion sensors are used to track hand position (figure 1). So that tetraplegics can use the controllers, each controller is attached to the wrist with a ribbon. Even though the protocol trains shoulder and elbow movement, the Oculus Quest controllers only need to collect hand position and rotation data for the program to check whether the user made the correct movement.

Figure 1: Oculus Quest HMD and Controllers.

2.3 VR Development and Setup

The VR environment was built with Unity 3D, one of the most popular game engines worldwide. Oculus provides a library of prefabricated assets (prefabs) that read data from the Oculus Quest HMD and controllers and convert them into variables in Unity 3D. Unity 3D assets can inherit classes from other libraries; for example, the initial position in the VR scene is inherited from the real position of the HMD.

BIODEVICES 2020 - 13th International Conference on Biomedical Electronics and Devices

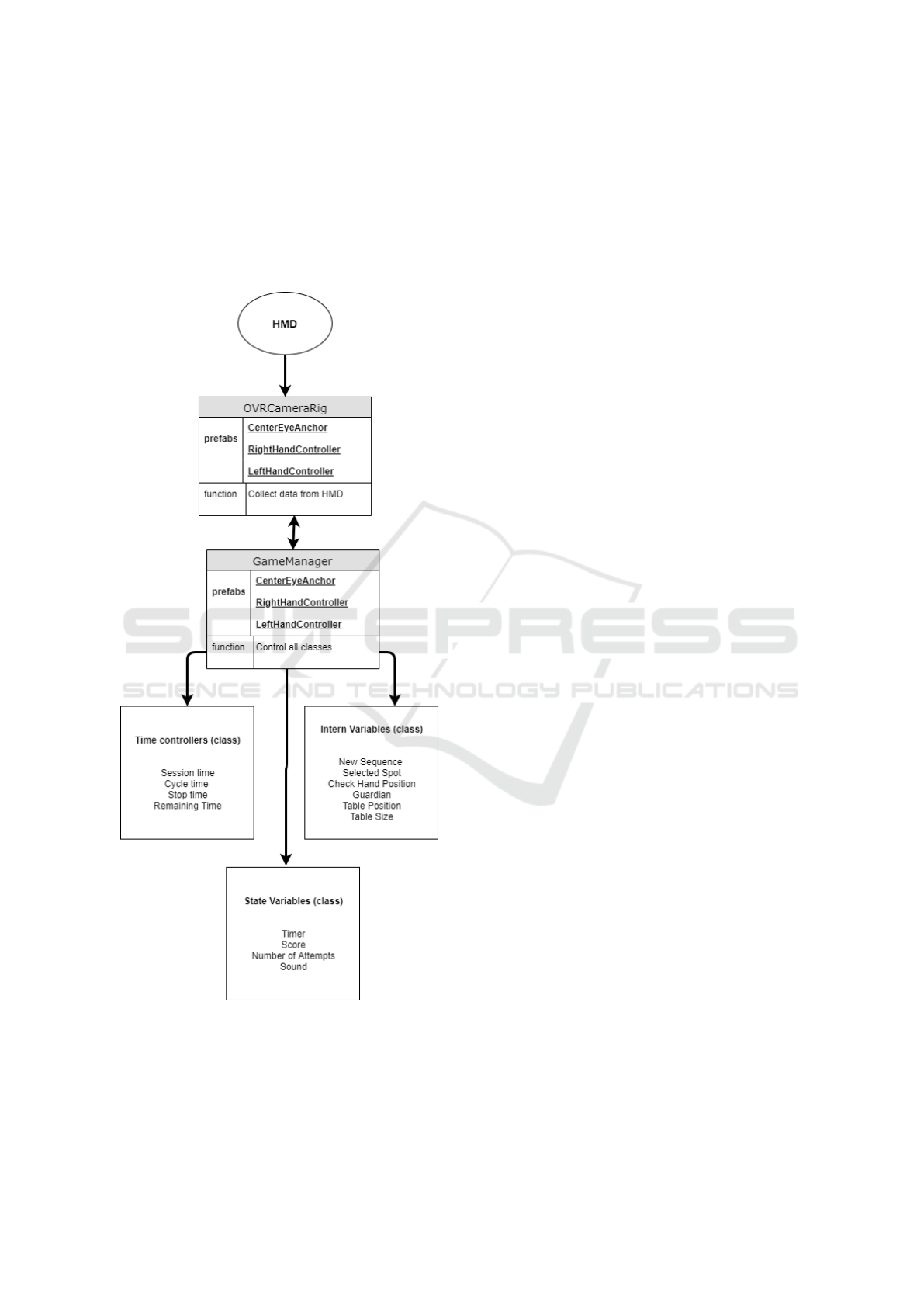

Figure 2 shows the main prefabs and scripts used in the project.

Figure 2: Main prefabs, classes and variables used to control the VR project.

One of the most important prefabs is the OVRCameraRig, which receives data from the HMD. It contains a player controller script that allows the user to control the main camera, and an anchor named CenterEyeAnchor whose forward direction is the direction in which the user is looking. Alongside the CenterEyeAnchor, two other child objects of the OVRCameraRig receive data from the controllers: LeftHandAnchor and RightHandAnchor. The cylinders used as proprioceptive feedback take their position and rotation from these two anchors.

Another significant aspect of the VR environment is the setup of the main scene. Before a VR session, the size and position of the objects must be defined relative to the real position of the user. This is done in an introductory scene in which the user looks at a grid to select where the table will be created. Each grid point corresponds to a potential initial position, and the user selects a point by staring at it for a few seconds. Tetraplegics can accomplish this procedure because it relies mainly on head movements to select the spawn point of the table. Afterwards, the user chooses the size of the table, and the blue spots are positioned automatically.

In the next step, the patient defines the size of the guardian. The guardian of the Oculus Quest is the region in which the user can move safely without hitting any objects in the real room. If the patient crosses the guardian boundary, the Oculus Quest shows the real room and the guardian's grid.
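Selecting a grid point by looking at it amounts to a ray-plane intersection: the gaze ray leaves the head along the CenterEyeAnchor's forward direction, hits the floor, and the hit point is snapped to the nearest grid point. Below is a minimal Python sketch of that geometry, assuming a horizontal floor at y = 0, a y-up convention and a square grid with a hypothetical 0.5 m spacing; the actual grid layout of the project is not specified in the text.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def gaze_floor_hit(origin: Vec3, direction: Vec3, floor_y: float = 0.0) -> Optional[Vec3]:
    """Point where the gaze ray origin + t * direction (t >= 0) meets the plane y = floor_y."""
    dy = direction[1]
    if math.isclose(dy, 0.0, abs_tol=1e-9):
        return None  # gaze parallel to the floor: no hit
    t = (floor_y - origin[1]) / dy
    if t < 0.0:
        return None  # the floor is behind the gaze direction (user looking up)
    return (origin[0] + t * direction[0], floor_y, origin[2] + t * direction[2])


def snap_to_grid(point: Vec3, spacing: float = 0.5) -> Tuple[int, int]:
    """(column, row) index of the grid point nearest to a floor hit; spacing is hypothetical."""
    return round(point[0] / spacing), round(point[2] / spacing)
```

The dwell ("stares at the desired spot for a few seconds") would then be applied to the snapped index, so small head tremors inside one grid cell do not reset the selection.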

Finally, all the prefabs are organized by the GameManager, a singleton class that holds all other classes of the project. Unity 3D allows classes to be created as scripts, and each script contains variables and functions used to control the game. As shown in figure 2, the classes were separated into 3 types so that incidental errors are easier to identify and correct. Time controllers define when each session and each cycle start and end, and they let the user stop the game at any moment. Internal variables generate a random sequence at the beginning of each cycle and store every action of the user. Finally, state variables record the remaining time, the score and the number of completed cycles; this last category provides feedback on the user's performance during the VR session.
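The three class categories can be pictured with the following sketch. It is a hypothetical Python analogue of the GameManager singleton (the project itself uses Unity 3D scripts); the class and field names, the 120 s cycle length (taken from the 2-minute cycle described in the results) and counting a cycle as done when its time runs out are assumptions, not the project's code.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TimeController:
    cycle_length_s: float = 120.0        # 2 minutes per cycle
    cycle_start: Optional[float] = None
    running: bool = False


@dataclass
class InternalVariables:
    sequence: List[int] = field(default_factory=list)  # random spot order of this cycle
    actions: List[str] = field(default_factory=list)   # log of every user action


@dataclass
class StateVariables:
    time_remaining_s: float = 0.0
    score: int = 0
    cycles_done: int = 0


class GameManager:
    """Hypothetical singleton that holds the three categories of classes."""

    _instance: Optional["GameManager"] = None
    timer: TimeController
    internal: InternalVariables
    state: StateVariables

    def __new__(cls) -> "GameManager":
        # Singleton: every call returns the same object.
        if cls._instance is None:
            instance = super().__new__(cls)
            instance.timer = TimeController()
            instance.internal = InternalVariables()
            instance.state = StateVariables()
            cls._instance = instance
        return cls._instance

    def start_cycle(self, now: float, rng: random.Random, n_spots: int = 5) -> None:
        self.timer.cycle_start = now         # time controller: the cycle begins
        self.timer.running = True
        self.internal.sequence = rng.sample(range(n_spots), n_spots)  # new random order
        self.internal.actions.clear()
        self.state.time_remaining_s = self.timer.cycle_length_s

    def record_action(self, action: str) -> None:
        self.internal.actions.append(action)

    def stop(self) -> None:
        # The user may stop the game at any moment.
        self.timer.running = False

    def tick(self, now: float) -> None:
        # Refresh the countdown shown to the user while the cycle is running.
        if self.timer.running and self.timer.cycle_start is not None:
            elapsed = now - self.timer.cycle_start
            self.state.time_remaining_s = max(0.0, self.timer.cycle_length_s - elapsed)
            if self.state.time_remaining_s == 0.0:
                self.timer.running = False
                self.state.cycles_done += 1  # assumption: a cycle ends when time runs out
```

Keeping timing, internal bookkeeping and user-facing state in separate objects mirrors the motivation given above: when a value looks wrong, it is immediately clear which category to inspect.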

2.4 User Interface (UI)

So far, this paper has focused on movement-based interaction. However, basic interactions such as selecting an option or a scene must also be adapted for tetraplegics. This section discusses how an adapted User Interface (UI) was created so that only head movements are used to trigger events in the VR environment.

Virtual Reality has a feature called Gaze Input

Development of a Virtual Reality Environment for Rehabilitation of Tetraplegics

223

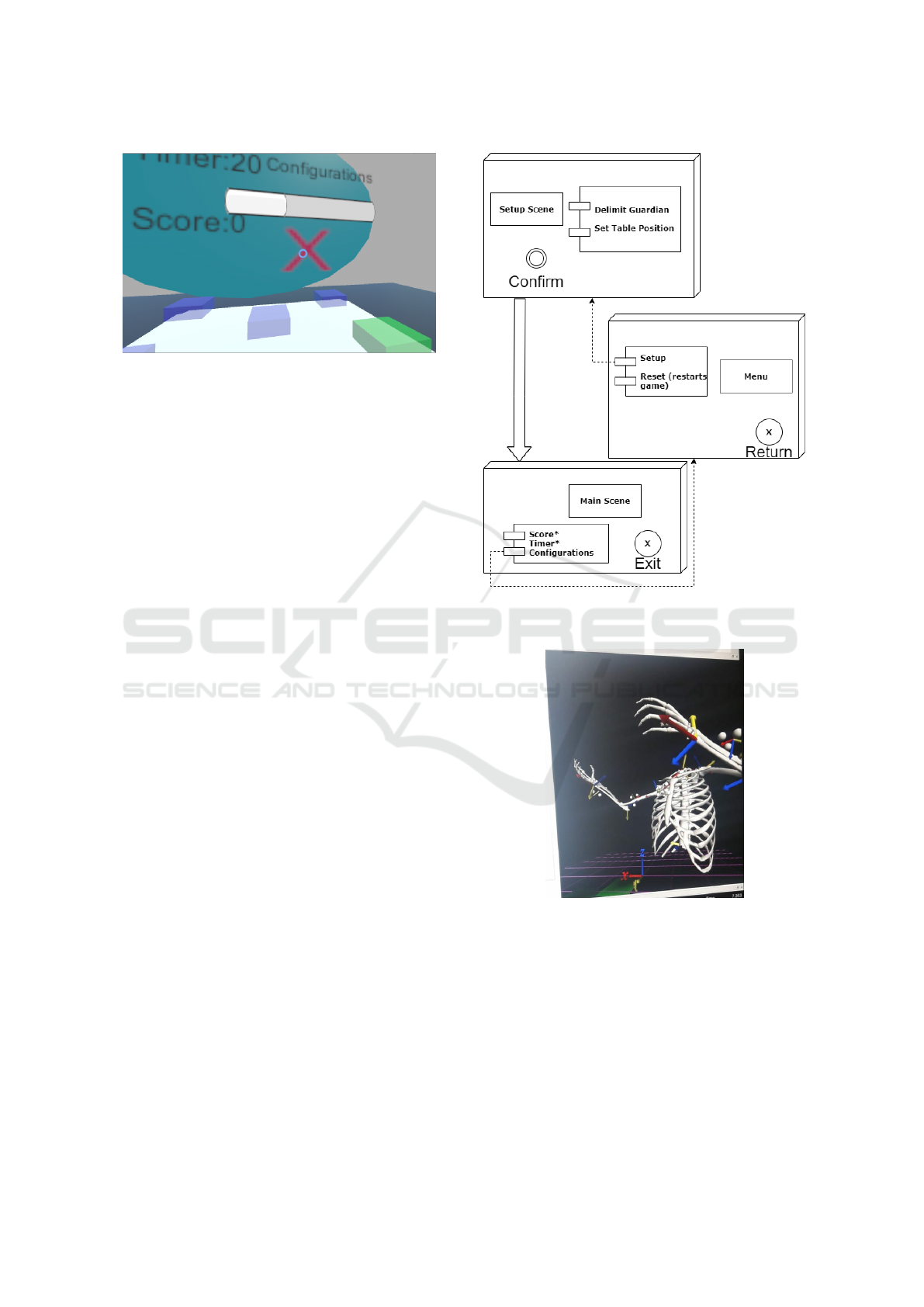

Figure 3: Gaze Input Controller and Slider example.

Controller (GIC) that is a pointer symbolized by a

small blue circle, as shown in the figure 3.

The GIC uses a raycaster that inherits the direction of the CenterEyeAnchor. A raycaster casts a ray, defined by an origin and a 3D direction vector, to interact with the native Unity UI. In other words, the GIC is a ray that points where the user is looking, and it is used to trigger events in the VR environment.

The UI is organized into several canvases, each with buttons that have an Event Trigger component whose function is to switch between scenes. When the user looks at a canvas, the GIC ray hits the canvas and a slider bar starts to fill. When the slider is full, the next scene in the sequence is displayed. Figure 4 shows all the scenes in the VR environment and how they are related.
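The dwell-to-select behaviour can be modelled as a small per-frame update. The sketch below is a hypothetical Python model, not Unity's UI API: `gaze_on_canvas` stands for whether the GIC ray currently hits the canvas, the 2 s dwell time is an assumed value, and emptying the bar when the user looks away is also an assumption.

```python
from dataclasses import dataclass


@dataclass
class DwellSlider:
    """Slider that fills while the gaze ray rests on a canvas."""

    dwell_time_s: float = 2.0  # assumed time to fill the bar completely
    progress: float = 0.0      # 0.0 = empty, 1.0 = full

    def update(self, gaze_on_canvas: bool, dt: float) -> bool:
        """Advance one frame of dt seconds; return True when the scene should change."""
        if not gaze_on_canvas:
            self.progress = 0.0  # assumption: looking away empties the bar
            return False
        self.progress = min(1.0, self.progress + dt / self.dwell_time_s)
        if self.progress >= 1.0:
            self.progress = 0.0  # reset so the event fires only once per fill
            return True
        return False
```

Called once per frame with the frame time `dt`, `update` returns `True` exactly once when the bar fills, which is the moment the next scene would be displayed.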

2.5 The Motion Monitor and Biomechanical Analysis

This VR project will be evaluated with a biomechanical analysis to verify whether the VR intervention improves functional activities in tetraplegics. The Motion Monitor system used in this analysis integrates 12 Vicon (Vicon Motion Systems Ltd, Oxford, United Kingdom) cameras with data analysis software, and it uses clusters (rigid sets of markers) instead of individual markers to capture motion data. This reduces the number of markers normally used with Vicon (over 20) to a set of 9 clusters for full-body motion capture.

For this study, only 5 clusters are used to capture motion data from the upper limbs: one on each arm, one on each forearm and one on the thorax. To analyse the motion data, The Motion Monitor software reconstructs a 3D model of the upper limbs in real time. Figure 5 shows an example of this 3D model.

To verify whether the clusters could be used with a wheelchair, a healthy subject sat on a chair to simulate an SCI subject. The 5 clusters were then positioned on the arms, forearms and thorax.

Figure 4: Diagram of main interfaces. Names marked with "*" are non-interactable.

Figure 5: Example of a 3D model created with The Motion Monitor.

The kinematic data analysed are the angles and angular velocities of the shoulders and elbows about 3 axes. During a collection session, the patient has to move each arm to 5 spots arranged on a table in the same positions as those in the VR environment. This protocol is performed 3 times for each hand to minimize measurement errors. The main goal is to evaluate the maximum values of elbow flexion, shoulder abduction and velocities during the protocol before and after the VR treatment, and to see whether they improved. A control group will also undergo the same analysis so that its performance can be compared with that of the intervention group.
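As an illustration of the kind of kinematic quantities involved, the Python sketch below obtains an elbow flexion angle from three positions (shoulder, elbow, wrist) and an angular velocity from the sampled angles. The text does not state how The Motion Monitor computes its angles, so this planar geometric definition (0° for a straight arm) and the central-difference derivative are illustrative assumptions; the 100 Hz default matches the sampling rate reported in the biomechanical pilot.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def elbow_flexion_deg(shoulder: Vec3, elbow: Vec3, wrist: Vec3) -> float:
    """Elbow flexion in degrees: 0 for a straight arm, 90 at a right angle."""
    upper = _sub(shoulder, elbow)  # elbow -> shoulder
    fore = _sub(wrist, elbow)      # elbow -> wrist
    dot = sum(u * f for u, f in zip(upper, fore))
    cos_inc = dot / (math.hypot(*upper) * math.hypot(*fore))
    cos_inc = max(-1.0, min(1.0, cos_inc))  # clamp against rounding error
    return 180.0 - math.degrees(math.acos(cos_inc))


def angular_velocity(angles_deg: Sequence[float], fs_hz: float = 100.0) -> List[float]:
    """Angular velocity in deg/s: central differences inside, one-sided at the ends."""
    n = len(angles_deg)
    if n < 2:
        return [0.0] * n
    dt = 1.0 / fs_hz
    vel = [(angles_deg[1] - angles_deg[0]) / dt]
    for i in range(1, n - 1):
        vel.append((angles_deg[i + 1] - angles_deg[i - 1]) / (2.0 * dt))
    vel.append((angles_deg[-1] - angles_deg[-2]) / dt)
    return vel
```

The Motion Monitor reports angles about 3 axes; this sketch covers only a single included angle to show the idea.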

3 RESULTS

3.1 VR Environment

The main scene of the VR environment depicts a table with 5 spots, one in the center and one in each corner of the table (figure 6). In each cycle, the user has to move the hand to 5 different spots within 2 minutes. The sequence is generated randomly for each cycle to prevent the patient from memorizing it and to make the movements more similar to reaching ADLs.
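The five spot positions can be derived from the table size chosen during setup. The following Python sketch assumes a y-up coordinate system with the user facing +z (so the "bottom" corners are the ones nearest the user) and a hypothetical 0.1 m inset from the table edges; neither convention is specified in the text.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def spot_positions(center: Vec3, width: float, depth: float, margin: float = 0.1) -> Dict[str, Vec3]:
    """Centre spot plus one spot near each corner of the table top.

    center -- centre of the table top (x, y, z), y up, user facing +z
    width  -- table size along x; depth -- table size along z (metres)
    margin -- hypothetical inset so every spot lies fully on the table
    """
    if 2 * margin >= min(width, depth):
        raise ValueError("margin too large for this table")
    cx, cy, cz = center
    hx = width / 2 - margin  # half-distance between left and right spots
    hz = depth / 2 - margin  # half-distance between near and far spots
    return {
        "center": (cx, cy, cz),
        "bottom_left": (cx - hx, cy, cz - hz),
        "bottom_right": (cx + hx, cy, cz - hz),
        "top_left": (cx - hx, cy, cz + hz),
        "top_right": (cx + hx, cy, cz + hz),
    }
```

Because every spot is expressed relative to the table centre, resizing the table in the setup scene repositions all spots automatically.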

Figure 6: Main Scene of the VR Environment.

The blue blocks indicate all possible spots, and the next spot of the sequence is shown in green. If the patient reaches the correct spot, a sound is played and the next spot of the sequence turns green. On the other hand, if the patient reaches a wrong spot, all blocks blink red and the sequence restarts. Tetraplegics have impaired or absent sensory function below the neurological level of the lesion. For this reason, biofeedback such as colour changes and sound effects is important to tell the patient whether an action taken in the scene is correct. Similarly, SCI subjects need proprioceptive feedback from the VR system to know the real position of their hands. The VR environment provides this feedback in the form of two small purple cylinders that reproduce both the rotation and the position of the real hands in real time. The green and blue arrows of each cylinder indicate vectors parallel and orthogonal to each palm, respectively.
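The two arrows can be derived from the controller's rotation. The Python sketch below rotates two fixed local axes by the hand's unit quaternion (w, x, y, z) using the standard expansion of q·v·q*. Which local axes actually correspond to the palm on the Oculus Quest controllers is not stated in the text, so `PALM_PARALLEL_LOCAL` and `PALM_NORMAL_LOCAL` are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), unit length

# Hypothetical local axes of the hand model; the real controller axes may differ.
PALM_PARALLEL_LOCAL: Vec3 = (0.0, 0.0, 1.0)  # drawn as the green arrow
PALM_NORMAL_LOCAL: Vec3 = (0.0, -1.0, 0.0)   # drawn as the blue arrow


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by the unit quaternion q (equivalent to q * v * conj(q))."""
    w, x, y, z = q
    # t = 2 * (q_xyz cross v)
    tx = 2.0 * (y * v[2] - z * v[1])
    ty = 2.0 * (z * v[0] - x * v[2])
    tz = 2.0 * (x * v[1] - y * v[0])
    # v' = v + w * t + (q_xyz cross t)
    return (
        v[0] + w * tx + (y * tz - z * ty),
        v[1] + w * ty + (z * tx - x * tz),
        v[2] + w * tz + (x * ty - y * tx),
    )


def palm_arrows(hand_rotation: Quat) -> Tuple[Vec3, Vec3]:
    """World-space (parallel, orthogonal) palm vectors for one feedback cylinder."""
    return rotate(hand_rotation, PALM_PARALLEL_LOCAL), rotate(hand_rotation, PALM_NORMAL_LOCAL)
```

Since a rotation preserves angles, the two arrows stay orthogonal for any hand orientation, which is what lets them convey how the palm is turned.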

Each cylinder and each spot has an invisible box collider used to verify whether the user reached the selected spot. When a cylinder's collider enters a spot, the program checks whether that spot is correct; when it leaves the spot, the next game action is taken. In addition, a small menu above the table displays the remaining time and score, as well as settings and exit buttons.
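The enter/exit rule, together with the colour and sound feedback described above, behaves like a small state machine. The following hypothetical Python model checks correctness on entry and applies the consequence on exit; in Unity these events would typically come from trigger callbacks such as `OnTriggerEnter` and `OnTriggerExit`. Awarding one point per correct reach and restarting the same sequence from its first spot after an error are assumptions, since the text does not define the score.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ReachTask:
    """Hypothetical model of one cycle of the reaching game."""

    sequence: List[str]                 # spot names in the order they turn green
    index: int = 0                      # position of the current (green) target
    score: int = 0
    pending: Optional[bool] = None      # result of the last entry check
    events: List[str] = field(default_factory=list)  # stand-in for sounds and colours

    @property
    def done(self) -> bool:
        return self.index >= len(self.sequence)

    def on_enter(self, spot: str) -> None:
        # Collider entered a spot: only check whether it is the green one.
        if not self.done:
            self.pending = spot == self.sequence[self.index]

    def on_exit(self) -> None:
        # Collider left the spot: now take the next game action.
        if self.pending is None:
            return
        if self.pending:
            self.score += 1
            self.index += 1             # play a sound, next spot turns green
            self.events.append("correct")
        else:
            self.index = 0              # all blocks blink red, sequence restarts
            self.events.append("wrong")
        self.pending = None
```

Deferring the action to the exit event means the next target only lights up after the hand has left the current spot, so a single lingering touch cannot count twice.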

3.2 Preliminary Tests

The VR environment is part of a pilot study whose main goal is to test feasibility. Consequently, the VR system was tested on healthy subjects to ensure that it would be safe to use with SCI subjects. The preliminary tests showed that the VR system could correctly check whether the user reached the selected spot using only hand data. Another positive result was that the GIC interface could be used to navigate between scenes alongside the controllers. However, the setup scene had problems; for example, the floor level of the environment was wrong in some cases, possibly because of the default configuration of the Oculus Quest. The setup scene will also need to consider additional information, such as the user's height, to calculate the user's baseline. Even though the protocol worked, a baseline would add redundancy and reduce positioning errors.

Another issue was holding the controllers with a ribbon. Although the ribbon held the controllers still, a dedicated support would be more appropriate for SCI subjects. Nonetheless, both controllers captured data precisely while attached to the wrists.

3.3 Biomechanical Analysis Pilot

A biomechanical analysis pilot was performed with a healthy subject seated in a wheelchair. While the arm and forearm clusters posed no issues, two changes had to be made. First, the thorax cluster is normally positioned on the back, which is not possible in a wheelchair. Second, the baseline had to be calibrated with the subject seated, whereas it is normally calibrated with the subject standing. The first problem was solved by changing the axes of the 3D model so that the thorax cluster could be placed on the chest. The second could not be directly resolved, but it did not interfere drastically with the analysis. The protocol data can be segmented and normalized using elbow flexion and shoulder flexion as references to identify each part of the protocol.

Figure 7: Graphs of Left Shoulder Flexion and Left Elbow Flexion.

Figure 7 shows raw shoulder and elbow data from a test subject. Both graphs clearly show that the protocol starts around the 750th sample (about 7.5 s at a sampling rate of 100 Hz), because both shoulder and elbow angles start to vary significantly at this point. This pattern repeats for each spot, indicating that it is possible to identify when the patient is reaching each spot. Hence, it is possible to calculate the maximum and minimum values of the flexion angles.
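The onset and per-reach extrema described above could be extracted automatically. The Python sketch below is one simple approach, not the authors' processing: it estimates a resting baseline from the first second of data (100 samples at 100 Hz), flags the first sample deviating from it by more than a threshold, and splits the signal into reaches wherever the angle stays above a chosen level. All thresholds are hypothetical.

```python
import statistics
from typing import List, Optional, Sequence, Tuple


def movement_onset(angle: Sequence[float], baseline_n: int = 100,
                   k: float = 5.0, min_dev: float = 2.0) -> Optional[int]:
    """Index of the first sample that deviates from the resting baseline.

    baseline_n -- samples assumed to be at rest (100 samples = 1 s at 100 Hz)
    k, min_dev -- hypothetical thresholds: deviation > max(k * SD, min_dev) degrees
    """
    if len(angle) <= baseline_n:
        return None
    rest = angle[:baseline_n]
    mean = statistics.fmean(rest)
    threshold = max(k * statistics.pstdev(rest), min_dev)
    for i in range(baseline_n, len(angle)):
        if abs(angle[i] - mean) > threshold:
            return i
    return None


def reach_segments(angle: Sequence[float], level: float) -> List[Tuple[int, int]]:
    """(start, end) index pairs, end exclusive, of runs where the angle exceeds level."""
    segments: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, a in enumerate(angle):
        if a > level and start is None:
            start = i
        elif a <= level and start is not None:
            segments.append((start, i))
            start = None
    if start is not None:
        segments.append((start, len(angle)))
    return segments
```

The peak of each reach is then `max(angle[s:e])` for every segment, and dividing an index by the sampling rate converts it to time (sample 750 corresponds to 7.5 s). Raw recordings would likely need low-pass filtering or hysteresis on `level` first, so that noise around the threshold does not split one reach into several.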

4 CONCLUSIONS

In conclusion, the preliminary study has provided some evidence that could lead to an innovative treatment for tetraplegics. A few improvements, such as a better setup scene and an appropriate support for the controllers, should be made before the VR environment is used with SCI subjects. However, the main mechanics of the VR environment worked, which suggests that it could work with SCI subjects. The next steps are: a) create a baseline calibration for the VR environment; b) polish the 3D models to create a better immersive experience; c) obtain ethics committee approval for VR experiments on SCI subjects; d) validate the VR environment with an SCI population.

ACKNOWLEDGEMENTS

This work was supported by grants from the São Paulo Research Foundation (FAPESP) and the National Council for Scientific and Technological Development (CNPq).

REFERENCES

An, C. M. and Park, Y. H. (2018). The effects of semi-immersive virtual reality therapy on standing balance and upright mobility function in individuals with chronic incomplete spinal cord injury: A preliminary study. Journal of Spinal Cord Medicine, 41(2):223–229.

Bergmann, M., Zahharova, A., Reinvee, M., Asser, T., Gapeyeva, H., and Vahtrik, D. (2019). The effect of functional electrical stimulation and therapeutic exercises on trunk muscle tone and dynamic sitting balance in persons with chronic spinal cord injury: A crossover trial. Medicina (Lithuania), 55(10).

Devivo, M. J. and Chen, Y. (2011). Epidemiology of traumatic spinal cord injury. In Spinal Cord Medicine: Second Edition.

Dimbwadyo-Terrer, I., Trincado-Alonso, F., de los Reyes-Guzmán, A., Aznar, M. A., Alcubilla, C., Pérez-Nombela, S., del Ama-Espinosa, A., Polonio-López, B., and Gil-Agudo, Á. (2016). Upper limb rehabilitation after spinal cord injury: a treatment based on a data glove and an immersive virtual reality environment. Disability and Rehabilitation: Assistive Technology, 11(6):462–467.

Edmund Jacobson (1932). Electrophysiology of Mental Activities. The American Journal of Psychology, 44(4):677–694.

Mizuguchi, N., Nakata, H., Uchida, Y., and Kanosue, K. (2012). Motor imagery and sport performance. The Journal of Physical Fitness and Sports Medicine, 1(1):103–111.

Montgomery, P. and Connolly, B. (2003). Clinical Applications for Motor Control.

Varoto, R. and Cliquet, A. (2015). Experiencing Functional Electrical Stimulation Roots on Education, and Clinical Developments in Paraplegia and Tetraplegia With Technological Innovation. Artificial Organs, 39(10):E187–E201.

Villiger, M., Grabher, P., Hepp-Reymond, M. C., Kiper, D., Curt, A., Bolliger, M., Hotz-Boendermaker, S., Kollias, S., Eng, K., and Freund, P. (2015). Relationship between structural brainstem and brain plasticity and lower-limb training in spinal cord injury: A longitudinal pilot study. Frontiers in Human Neuroscience, 9.
