Preliminary Study on the Use of Off-the-Shelf VR Controllers for Vibrotactile Differentiation of Levels of Roughness on Meshes

Ivan Nikolov (a), Jens Stokholm Høngaard (b), Martin Kraus (c) and Claus B. Madsen (d)

Computer Graphics Group, Aalborg University, Aalborg, Denmark

Keywords:

Virtual Reality, Touch, Haptics, Tactile Feedback, Perception, Roughness.

Abstract:

With the introduction of new specialized hardware, Virtual Reality (VR) has gained more and more popularity

in recent years. VR is particularly immersive if suitable auditory and haptic feedback is provided to users.

Many proposed forms of haptic feedback require custom hardware components that are often bulky, costly,

and/or require lengthy setup times. We explored the possibility of using the built-in vibrotactile feedback of

HTC Vive controllers to simulate the sensation of interacting with surfaces with varying degrees of roughness.

We conducted initial testing of the proposed system, which shows promising results, as users could accurately and within a short time discern the amount of roughness of 3D models based on the vibrotactile feedback alone.

1 INTRODUCTION

In recent years there has been a steady rise in the number and quality of VR solutions. All these systems aim to immerse users by combining visual and audio modalities with a sense of presence in the VR environment, which is achieved by internal or external head and hand tracking. For interacting with the VR environment, state-of-the-art solutions usually rely on controllers. However, the reliance on controllers and the inability to touch and feel models in the 3D environment have hampered immersion. Virtual reality applications in many areas can benefit from the introduction of haptics, such as treatment of phantom limb pain (Henriksen et al., 2017), stroke rehabilitation (Afzal et al., 2015), (Levin et al., 2015), interactions for blind users (Schneider et al., 2018), data visualization (Englund et al., 2018), and cultural heritage (Jamil et al., 2018). Normally this is done through the use of custom hardware, which makes reproduction hard and expensive.

In this paper we present an initial study on the

use of the built-in vibration motors in the HTC Vive

(HTC, 2016) controllers for detecting and differenti-

ating different levels of roughness on meshes in VR.

a https://orcid.org/0000-0002-4952-8848
b https://orcid.org/0000-0002-4849-309X
c https://orcid.org/0000-0002-0331-051X
d https://orcid.org/0000-0003-0762-3713

We tested our solution on fifteen participants of vary-

ing VR skill levels, using high detail 3D reconstruc-

tions of real world objects to achieve natural inter-

actions. The participants did not see the real rough-

ness of the object, but could only perceive it through

the tactile sensation provided by the vibrotactile feed-

back of the controller. All users, independent of their

skill level, managed to correctly distinguish the dif-

ferent levels of roughness of the 3D objects in a short

amount of time. Thus, the research in this paper

serves as a proof of concept that different levels of

roughness can be successfully communicated through

VR controllers without any additional hardware.

2 STATE OF THE ART

Haptic feedback has two main parts: kinesthetic and tactile feedback. Kinesthetic feedback uses the feeling coming from a person's muscles and tendons to

distinguish the object that is being touched, grabbed,

or held. Tactile feedback comes from the feeling of

the skin sensors on the fingers and palms when an ob-

ject is touched and can convey the shape, texture, and

roughness. Introduction of haptic feedback to VR so-

lutions is a non-trivial problem. This paper is focused

solely on tactile feedback.

Haptic interfaces can be divided into passive and

active. Both types can be useful for different cases in

VR. Passive ones rely on the shape of the controller


Nikolov, I., Høngaard, J., Kraus, M. and Madsen, C.

Preliminary Study on the Use of Off-the-Shelf VR Controllers for Vibrotactile Differentiation of Levels of Roughness on Meshes.

DOI: 10.5220/0009101303340340

In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2020) - Volume 1: GRAPP, pages 334-340

ISBN: 978-989-758-402-2; ISSN: 2184-4321

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

and try to mimic real life objects or surfaces. Examples of these can be seen in the work of (Cheng et al., 2018), (Zenner and Krüger, 2017). Active haptic feedback controllers rely on moving parts, actuators

and sensors, to dynamically mimic changes in the en-

vironment or the virtual objects. Examples of active

haptics can be found in (Ryu et al., 2007), (Scheggi

et al., 2015), (Whitmire et al., 2018). Active haptic

controllers are of bigger interest to the current study.

Another possibility of active haptics is the intro-

duction of custom tactile controllers, as seen in the

work by (Choi et al., 2018), (Benko et al., 2016),

(Culbertson et al., 2017) or sensors directly attached

to the users’ fingertips (Schorr and Okamura, 2017),

(Yem et al., 2016). These controllers rely on a com-

bination of actuators, inertial measurement units and

electromagnetic coils to create a very precise sense of

touch, but they require custom hardware, are normally

quite bulky and are not readily available for the gen-

eral public. Work is also done on directly using the

controllers coming with the VR systems. Some re-

search focuses on augmenting the controllers with ad-

ditional functionality (Han et al., 2017), (Chen et al.,

2016), (Ryge et al., 2017), while others rely directly

on controllers' vibration (Kreimeier and Götzelmann, 2018), (Brasen et al., 2018).

We base the study in this paper on the idea that controller vibration can serve as active haptic feedback that conveys the surface of 3D objects in VR and helps users differentiate levels of roughness.

3 METHODOLOGY

Our proposed approach follows the research using in-

tegrated controllers, as this makes it easier to repli-

cate and test, as well as simpler to introduce and ex-

plain to users. This means that the sensation of tac-

tile feedback needs to be simulated to the user, so

the proper information is understood. Our hypothe-

sis is that the built-in vibrotactile features in the HTC

Vive controllers can achieve this sensation, but only

if the provided vibration motors are carefully con-

trolled. In this way, VR interactions with 3D models

can be achieved that are relatively close to touching a

surface with a hand-held stylus in the real world.

The implementation uses Unity with the SteamVR

plugin (Valve, 2015). The SteamVR API exposes

three parameters for modulating the vibration of the

VR controllers: amplitude, frequency and duration of

the vibration. Each of these parameters has set value constraints:

• Amplitude takes floating-point values in the range [0, 1]

• Frequency accepts floating-point values in the range [0, 350]

• Duration of vibration accepts floating-point values for the number of seconds, with a lower bound determined by the hardware limitations of the vibration motor
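The parameter ranges listed above can be captured in a small validation helper. The following Python sketch is illustrative only: the names `HapticPulse`, `clamp` and `make_pulse` are hypothetical and are not part of the SteamVR API, and the hardware-dependent lower bound on the duration is left as a caller-supplied assumption because its value is not stated here.

```python
from dataclasses import dataclass

AMPLITUDE_RANGE = (0.0, 1.0)    # range stated in the text
FREQUENCY_RANGE = (0.0, 350.0)  # range stated in the text


def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


@dataclass(frozen=True)
class HapticPulse:
    """Hypothetical container for one vibration request."""
    amplitude: float
    frequency: float
    duration_s: float


def make_pulse(amplitude, frequency, duration_s, min_duration_s=0.0):
    """Build a pulse whose parameters respect the stated ranges.

    min_duration_s stands in for the hardware lower bound on the
    duration; its real value is not given in the text (assumption).
    """
    return HapticPulse(
        amplitude=clamp(amplitude, *AMPLITUDE_RANGE),
        frequency=clamp(frequency, *FREQUENCY_RANGE),
        duration_s=max(duration_s, min_duration_s),
    )
```

Clamping rather than rejecting out-of-range values is a design choice of this sketch; an implementation could equally raise an error.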

A limitation of the vibration feature is that the motors cannot run all the time. We have therefore set a heuristic minimum distance of 5 mm between sampled mesh surface points, below which the controller's motors are not started. This ensures that there are pauses between the repeated activations of the haptic motor and limits the produced vibration noise. Additionally, to mitigate the possibility of noise, we sample the surface of the object once every 0.1 seconds.
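The two sampling constraints can be sketched as a simple gate that decides whether a new contact point is accepted as the next surface sample. This is a minimal Python sketch under stated assumptions: the class `SampleGate` is hypothetical, and the way the distance and time conditions are combined (both must be satisfied) is our reading of the description rather than a documented detail of the implementation.

```python
import math

MIN_SAMPLE_DISTANCE_M = 0.005  # 5 mm, from the text
SAMPLE_INTERVAL_S = 0.1        # one surface sample every 0.1 s, from the text


class SampleGate:
    """Hypothetical helper deciding when a new surface sample is accepted."""

    def __init__(self):
        self.last_point = None
        self.last_time = None

    def accept(self, point, time_s):
        """Return True if point is recorded as the next sample."""
        if self.last_point is None:
            # The first contact point is always recorded.
            self.last_point, self.last_time = point, time_s
            return True
        too_soon = (time_s - self.last_time) < SAMPLE_INTERVAL_S
        too_close = math.dist(point, self.last_point) < MIN_SAMPLE_DISTANCE_M
        if too_soon or too_close:
            return False
        self.last_point, self.last_time = point, time_s
        return True
```

In such a scheme a vibration would only be considered once two samples have been accepted, since the roughness estimate needs a pair of surface normals.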

Figure 1: Rendering of our virtual VR stylus connected to the VR controller. The virtual stylus is used to virtually "touch" objects. The stylus is only seen in VR and does not exist on the real controller. The red rays cast from the stylus are shown for easier explanation of the method and are not visible when using the application.

Our system detects the underlying mesh roughness by

calculating the angle between two sampled surface

normals. To help with directing the users, in VR a

3D model of a stylus is placed on top of the Vive con-

troller as seen in Figure 1.

Rays are cast from each vertex point of the mesh

of the stylus in the direction of their surface normal

vectors. The intersections of these rays with other sur-

faces determine the contact point between the stylus

and another surface. The maximum allowed distance

of intersection points is dependent on the shape of the

stylus, with additional length to ensure contact at the

various possible orientations. All contact points are

checked starting from the tip of the stylus and going

down. When two contact points are sampled, the an-

gle between their normals is calculated and analysed.

This angle lies in [0, π], as seen in the work of (Ioannou et al., 2012), as smooth movement of the stylus on the surface is assumed.
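The angle between two sampled normals can be computed from their dot product. The Python sketch below is one straightforward way to do this and is not taken from the implementation; the function name `angle_between_normals` is hypothetical. The cosine is clamped so that rounding errors cannot push it outside the domain of the arc cosine, which keeps the result in [0, π].

```python
import math


def angle_between_normals(n1, n2):
    """Angle in radians, in [0, pi], between two 3D normal vectors."""
    dot = sum(a * b for a, b in zip(n1, n2))
    len1 = math.sqrt(sum(a * a for a in n1))
    len2 = math.sqrt(sum(b * b for b in n2))
    if len1 == 0.0 or len2 == 0.0:
        raise ValueError("normals must be non-zero vectors")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / (len1 * len2)))
    return math.acos(cosine)
```

Identical normals give an angle of zero, perpendicular normals give π/2, and opposite normals give π.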


As this is a pilot study, it was decided that the

most straightforward approach is to lock the dura-

tion and leave the amplitude as the only actively ad-

justable quality in the experiments. Thus, the under-

lying surfaces can be approximated without the need

to map the physical surface profile to vibration fre-

quency. This introduces the problem that smaller sur-

face details cannot be communicated through the vi-

bration. To mitigate that and after analysing the dif-

ference between the normals, we simplify the under-

lying surface roughness to a binary classification for

the vibration controller:

• Surface patches with a large angle between the normals result in vibration with high amplitude, i.e., momentary, tactile "bumps" – approximating very rough areas

• Surface patches with a small angle between the normals result in low-amplitude, continuous vibration – approximating ambient roughness.

The two cases are distinguished by considering the calculated angle difference: if it is less than 6 degrees, then it is a low-amplitude vibration patch; if it is greater than 6 degrees, then it is a high-amplitude vibration patch. This threshold was selected heuristically after multiple trials as a believable approximation of the underlying tested surfaces. Thus our system has a dynamic component in changing the amplitude and a passive component in changing between levels of predetermined frequency. The values of the frequency levels were selected after a number of internal trials:

• In case of a large normal angle the vibration dura-

tion is set to 0.075 seconds and the frequency to

16 Hz.

• In case of a small normal angle the vibration du-

ration is set to 0.025 seconds with a frequency of

344 Hz.
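The binary classification and the two parameter presets can be summarized in a few lines. The Python sketch below uses the threshold, durations and frequencies given above; the function name `vibration_preset` is hypothetical, and treating an angle of exactly 6 degrees as a large angle is an assumption, since only the cases below and above the threshold are described.

```python
import math

ANGLE_THRESHOLD_DEG = 6.0  # heuristic threshold from the text

# (duration in seconds, frequency in Hz) for each class, from the text
LARGE_ANGLE_PRESET = (0.075, 16.0)
SMALL_ANGLE_PRESET = (0.025, 344.0)


def vibration_preset(angle_rad):
    """Return (duration_s, frequency_hz) for an angle between normals.

    Assumption: an angle of exactly 6 degrees counts as large; the
    text only covers angles below and above the threshold.
    """
    if math.degrees(angle_rad) < ANGLE_THRESHOLD_DEG:
        return SMALL_ANGLE_PRESET
    return LARGE_ANGLE_PRESET
```

For example, a 2 degree difference selects the short 344 Hz pulse, while a 30 degree difference selects the longer 16 Hz pulse.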

The amplitude in both cases is dynamically modu-

lated depending on the difference between the nor-

mals and the distance between the sampled points.

The distance between samples is used to change the

amplitude, to better approximate the feeling of drag-

ging the stylus on a real surface. If we consider that

the motion on the surface is continuous, the larger the

distance between samples, taken at equal time steps,

the faster the stylus is moving. Our hypothesis is that

faster movement has the tendency to "smooth out" the feeling of rougher surfaces.
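The relation between sample distance and stylus speed follows directly from the fixed sampling period. The exact amplitude mapping is not specified in the text, so the Python sketch below pairs the speed estimate with a purely illustrative mapping: `amplitude_for` and its `reference_speed` constant are hypothetical and only demonstrate the stated tendencies, namely that the amplitude grows with the angle between the normals and shrinks as the stylus moves faster.

```python
import math

SAMPLE_INTERVAL_S = 0.1  # sampling period stated in the text


def stylus_speed(distance_m, dt_s=SAMPLE_INTERVAL_S):
    """Average speed between two samples taken dt_s seconds apart."""
    return distance_m / dt_s


def amplitude_for(angle_rad, distance_m, reference_speed=0.1):
    """Hypothetical amplitude mapping in [0, 1] (NOT from the paper).

    Grows with the angle between the normals and shrinks as the stylus
    moves faster; reference_speed (m/s) is an arbitrary tuning constant.
    """
    roughness_term = min(angle_rad / math.pi, 1.0)
    damping = 1.0 + stylus_speed(distance_m) / reference_speed
    return max(0.0, min(1.0, roughness_term / damping))
```

Any monotonic mapping with the same two tendencies would fit the description equally well; the specific formula here is only a placeholder.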

The vibrations are only sent if the user’s finger is

on the trackpad since this is the part of the Vive con-

troller where the vibrotactile feedback is felt most dis-

tinctly due to the position of the vibration motor.

4 EXPERIMENT AND RESULTS

To test how much information about the object’s

roughness our proposed solution can offer users, we

designed an experiment, which relied solely on the

tactile information.

4.1 Experiment Setup

Three real world vases were selected and digitized

using Structure from Motion (SfM) reconstruction,

through the commercial software Agisoft Metashape

(Agisoft, 2018). The vases were selected because of

their roughness profiles. The two vases in Figure 2(a)

and Figure 2(b) have a simplified roughness profile of

a wave and a checkerboard pattern, which was useful

for the training phase of the experiment, where users

were familiarized with the setup and left to explore it,

until they felt comfortable with it. These patterns pro-

vided an easy way to understand the relation between

the visual appearance in VR and the tactile sensation

that the controller vibration provides when interact-

ing with the objects. A view from the initial training

part can be seen in Figure 3. Real world objects were selected to give participants objects that have both small scale roughness and a large scale surface shape. Our hypothesis is that this makes distinguishing the different levels of roughness harder and limits the possible effects of users learning the roughness from, for example, a planar shape.

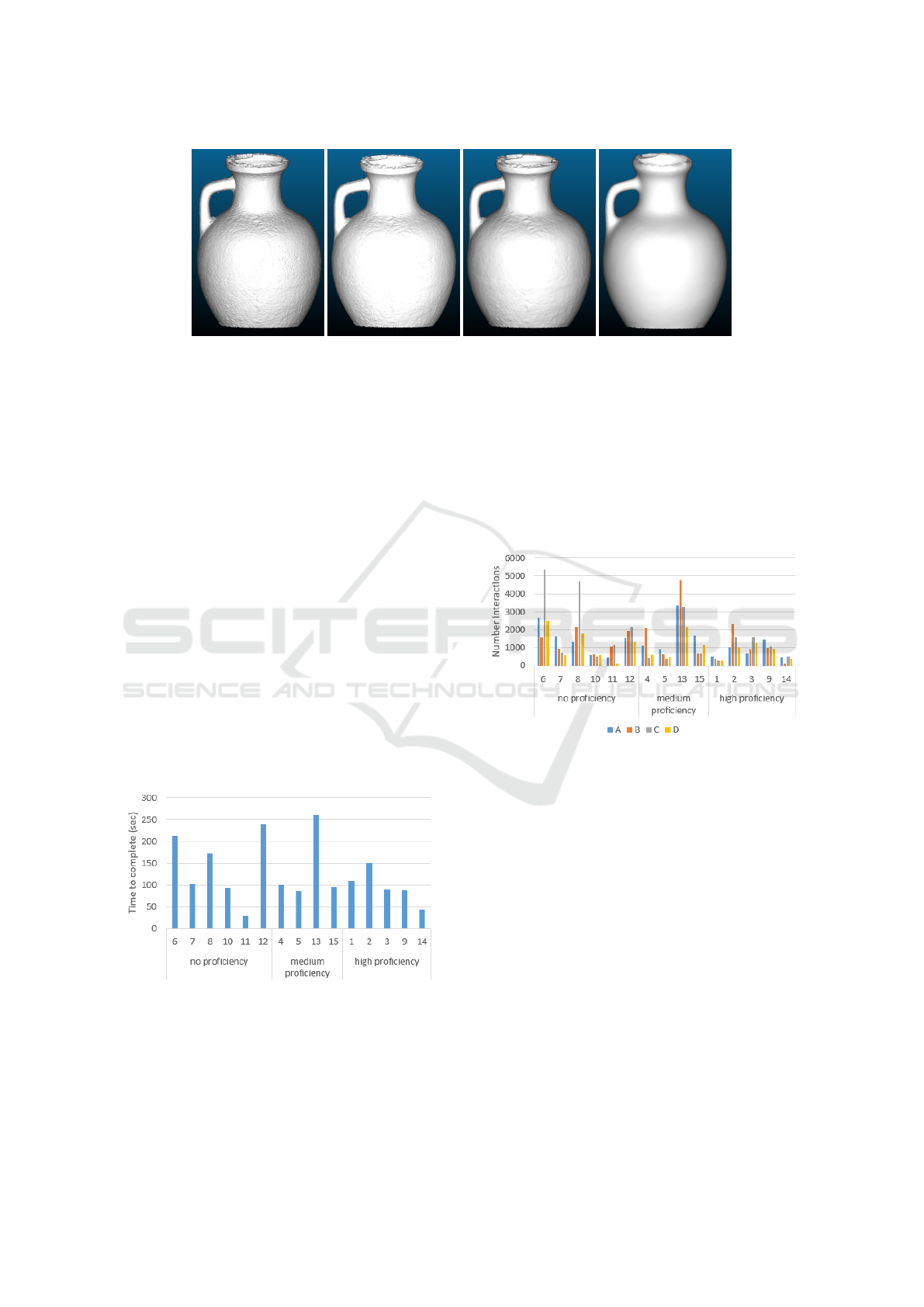

The third vase, seen in Figure 2(c), was used as a basis for the experiment. Three copies of the reconstructed 3D mesh were made and each was smoothed to a different degree using Laplacian smoothing in MeshLab (Cignoni et al., 2008). The original reconstruction and the three smoothed copies can be seen in Figure 5. Because we were not checking whether users can precisely measure roughness, but whether they can distinguish and order different degrees of roughness, the degrees of smoothing were chosen heuristically by experimenting with different configurations. To be sure that the vase and the three smoothed copies follow a "smoothness progression", a patch was taken from each of them and the root mean square height S_q was calculated for each one (Carneiro, 1995). The least rough vase was almost completely smoothed, to provide an almost blank slate compared to the other three.
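The root mean square height is the square root of the mean squared deviation of the surface heights from a reference level. The Python sketch below computes it for a list of sampled heights; the function name `rms_height` is hypothetical, and using the mean height of the patch as the reference level is an assumption, since the details of how the patches were sampled and levelled are not given here.

```python
import math


def rms_height(heights):
    """Root mean square height S_q of a list of sampled heights.

    Heights are measured relative to their mean, so a constant offset
    of the patch does not change the result.
    """
    if not heights:
        raise ValueError("at least one height sample is required")
    mean = sum(heights) / len(heights)
    return math.sqrt(sum((z - mean) ** 2 for z in heights) / len(heights))
```

A perfectly flat patch yields zero, and larger height variations yield larger values, which is what makes the measure suitable for checking that successive smoothing steps reduce roughness.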

GRAPP 2020 - 15th International Conference on Computer Graphics Theory and Applications

Figure 2: Three objects used in the experimental evaluation, shown in panels (a), (b) and (c). The first two vases, 2(a) and 2(b), are used in the initial training phase, while the third vase, 2(c), is used in the testing part.

Figure 3: View from the training area, where the users could try out and test the tactile feedback on the two simple surface objects.

Table 1: The roughness levels of each of the four objects, together with the labels denoting them and the calculated root mean square height S_q. The letters are given at random to the different roughness levels and are used when testing.

Roughness    S_q [mm]    Label
Most         0.5569      D
Second       0.5114      A
Third        0.4784      B
Least        0.0304      C

The four vases were set on pedestals in VR with letters A, B, C, and D. The roughness levels, together with the set label letters and the calculated S_q, are shown in Table 1. The letters were purposely assigned at random, independently of the roughness level. The rendering of their meshes was deactivated with only the

colliders left. On top of each of them a completely

smooth model was rendered, so users had no way

of visually seeing the real roughness. The testing

setup can be seen in Figure 4. Between user tests the positions of the vases were randomly rotated, but the combination of letter and roughness was not changed. We rotated the vases to avoid directional bias from users when checking which object they interacted with most. This bias can manifest in right- or left-handed participants always going to the object on their left or right, which without the rotation would always be the same. To help direct the attention of the users, a specific patch where the roughness is particularly pronounced was selected on all the vases and colored red. A close-up of the underlying roughness of the patch is shown in Figure 4, while the users just saw a smooth red surface.

Figure 4: View from the testing area, with the four identical-looking vases. Each of the vases has the collider of an object with a different level of roughness. The objects are labeled and a red patch is selected on them to help direct the attention of the users. A close-up of the underlying patch roughness is shown for easier understanding and is not visible to the users. The objects are rotated between users.

4.2 Participants and Captured Data

Fifteen participants tested the system using the experimental setup. The users were between 23 and 35 years old and had varying degrees of proficiency in using VR. Each user was first left to explore the training part of the experimental VR setup, while the facilitator explained to them how to use the system. Once the user was comfortable, they were teleported to the testing part, where they were asked to order the four identical-looking vases by the roughness profile they perceived when interacting with them. The users had unlimited time and were instructed to use the specified red patch on the vases if they had a hard time distinguishing the objects. Once the users were ready, they gave their ordering of the vases. The time between the start and the end of the experiment was recorded, as well as the number of times the user had interacted with each of the four vases.


(a) Label D (b) Label B (c) Label A (d) Label C

Figure 5: The vase used for the testing phase (a), together with the other three progressively smoother copies (b) to (d). The

labels from A to D were set as seen in Table 1.

4.3 Results and Discussion

All fifteen participants could successfully order the

objects from roughest to smoothest. The average

completion time was 124.8 seconds, with a standard

deviation of 68.3 seconds. The large standard deviation was caused by three participants, who took more than 200 seconds to complete the experiment. All three of these participants had little or no experience in using VR; thus, their slower completion time can be attributed to some extent to their inexperience. The completion times for all users, depending on their proficiency, can be seen in Figure 6. Here it can be seen that some of the people with no proficiency took a lot of time, due to not being fully comfortable using a VR controller, and overall a lot more variation is seen in their times. People with high proficiency have a lower spread and do not require a lot of time to distinguish between roughness levels successfully.

Figure 6: Completion times for each user, grouped by the

three VR proficiency levels.

The number of times users interacted with each of the four objects can give an overview of which object was the most difficult to categorize. The results for each user and each of the objects, grouped by proficiency level, can be seen in Figure 7. These results reflect the completion times discussed above, with some deviations, showing that some users interacted with the objects more, while others were more passive. Again, users with no proficiency required more interactions and focused more on the smoothest object C.

Figure 7: Number of times users interacted with each of the

four objects, grouped by the three VR proficiency levels.

Each time users touched the objects, this is counted as an

interaction.

Table 2 expands on the captured results. The values in Most Interactions denote the number of users for whom each of the four objects was the one they interacted with the most. Conversely, Least Interactions denotes the number of users for whom each object was the one they interacted with the least. The table shows that the roughest object D was never the most interacted-with object and was the least interacted-with object for the largest number of users. This shows that people generally decided very easily how rough it was and did not need more interaction. On the other hand, the smoothest object C is both the most interacted-with object for the largest number of users and the second most frequent least interacted-with object. This points to the fact that the order in which users interacted with the objects is important for discerning their correct roughness. As expected, starting with the roughest


object is most beneficial. Finally, the two in-between objects A and B had approximately the same level of difficulty, but once users had made up their minds on the most and least rough objects, they could decide on the in-between ones more easily. In addition, some participants commented that they could hear the haptic motors spin; those who did were told to try to ignore it. It is unclear whether this had an impact on the results.

Table 2: The most and least interacted with labeled objects. The results are created from the fifteen participants.

                      A    B    C    D
Most Interactions     5    4    6    0
Least Interactions    3    3    4    5
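As a quick consistency check on Table 2, the counts in each row should add up to the fifteen participants, and the labels with the highest counts should match the discussion. The short Python sketch below uses only the numbers from the table; the variable and function names are illustrative.

```python
# Counts copied from Table 2: for how many participants each labeled
# object was the most / least interacted-with object.
most_interactions = {"A": 5, "B": 4, "C": 6, "D": 0}
least_interactions = {"A": 3, "B": 3, "C": 4, "D": 5}


def label_with_max(counts):
    """Return the label with the highest count."""
    return max(counts, key=counts.get)


total_most = sum(most_interactions.values())
total_least = sum(least_interactions.values())
```

Both totals equal fifteen, the smoothest object C has the highest count in the Most Interactions row, and the roughest object D has the highest count in the Least Interactions row.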

5 CONCLUSION AND FUTURE WORK

Our experiment demonstrated that information about

the surface roughness of 3D objects can be communi-

cated through the use of tactile sensation achieved by

the built-in vibration capabilities of HTC Vive con-

trollers. With the help of a virtual VR stylus, users

can use the same natural interactions as in real life

to ”feel” the surface of an object. The preliminary

experiment demonstrated that users can order objects

by their perceived vibration surface roughness without visual cues. The test showed that users could relatively quickly decide which of multiple visually identical objects is the roughest and which the smoothest, and always order them correctly.

There are some limitations of this preliminary study. The limited scope of the test and the limited number of participants produced results that are too homogeneous and do not show enough variation to further improve the system. To address these shortcomings, additional experiments are planned. In particular, we want to investigate the influence of the amplitude and frequency of the vibrotactile feedback on the perceived roughness. Another experiment could explore how much of an impact the sound of the controller has on the haptic feeling, as some of the test participants mentioned that they could hear different noises from the motors in the controllers.

ACKNOWLEDGEMENTS

We would like to thank the participants in the experi-

ment for their time and the anonymous reviewers for

providing helpful feedback and improving the content

of the paper.

REFERENCES

Afzal, M. R., Byun, H.-Y., Oh, M.-K., and Yoon, J. (2015).

Effects of kinesthetic haptic feedback on standing

stability of young healthy subjects and stroke pa-

tients. Journal of neuroengineering and rehabilita-

tion, 12(1):27.

Agisoft (2018). Metashape. www.agisoft.com. Accessed:

2019-08-15.

Benko, H., Holz, C., Sinclair, M., and Ofek, E. (2016).

Normaltouch and texturetouch: High-fidelity 3d hap-

tic shape rendering on handheld virtual reality con-

trollers. In Proceedings of the 29th Annual Symposium

on User Interface Software and Technology, pages

717–728. ACM.

Brasen, P. W., Christoffersen, M., and Kraus, M. (2018).

Effects of vibrotactile feedback in commercial vir-

tual reality systems. In Interactivity, Game Creation,

Design, Learning, and Innovation, pages 219–224.

Springer.

Carneiro, K. (1995). Scanning Tunnelling Microscopy

Methods for Roughness and Micro Hardness Mea-

surements. Directorate-General XIII.

Chen, Y.-S., Han, P.-H., Hsiao, J.-C., Lee, K.-C., Hsieh, C.-

E., Lu, K.-Y., Chou, C.-H., and Hung, Y.-P. (2016).

Soes: Attachable augmented haptic on gaming con-

troller for immersive interaction. In Proceedings of

the 29th Annual Symposium on User Interface Soft-

ware and Technology, pages 71–72. ACM.

Cheng, L.-P., Chang, L., Marwecki, S., and Baudisch, P.

(2018). iturk: Turning passive haptics into active hap-

tics by making users reconfigure props in virtual re-

ality. In Proceedings of the 2018 CHI Conference

on Human Factors in Computing Systems, page 89.

ACM.

Choi, I., Ofek, E., Benko, H., Sinclair, M., and Holz, C.

(2018). Claw: A multifunctional handheld haptic con-

troller for grasping, touching, and triggering in virtual

reality. In Proceedings of the 2018 CHI Conference

on Human Factors in Computing Systems, page 654.

ACM.

Cignoni, P., Callieri, M., Corsini, M., Dellepiane, M.,

Ganovelli, F., and Ranzuglia, G. (2008). MeshLab:

an Open-Source Mesh Processing Tool. In Scarano,

V., Chiara, R. D., and Erra, U., editors, Eurographics

Italian Chapter Conference. The Eurographics Asso-

ciation.

Culbertson, H., Walker, J. M., Raitor, M., and Oka-

mura, A. M. (2017). Waves: a wearable asymmet-

ric vibration excitation system for presenting three-

dimensional translation and rotation cues. In Proceed-

ings of the 2017 CHI Conference on Human Factors

in Computing Systems, pages 4972–4982. ACM.

Englund, R., Palmerius, K. L., Hotz, I., and Ynnerman, A.

(2018). Touching data: Enhancing visual exploration

of flow data with haptics. Computing in Science &

Engineering, 20(3):89–100.


Han, P.-H., Chen, Y.-S., Yang, K.-T., Chuan, W.-S., Chang,

Y.-T., Yang, T.-M., Lin, J.-Y., Lee, K.-C., Hsieh, C.-

E., Lee, L.-C., et al. (2017). Boes: attachable hap-

tics bits on gaming controller for designing interactive

gameplay. In SIGGRAPH Asia 2017 VR Showcase,

page 3. ACM.

Henriksen, B., Nielsen, R., Kraus, M., and Geng, B. (2017).

A virtual reality system for treatment of phantom

limb pain using game training and tactile feedback.

In Proceedings of the Virtual Reality International

Conference-Laval Virtual 2017, page 13. ACM.

HTC (2016). Vive. https://www.vive.com/eu/. Accessed:

2019-08-15.

Ioannou, Y., Taati, B., Harrap, R., and Greenspan, M.

(2012). Difference of normals as a multi-scale op-

erator in unorganized point clouds. In 2012 Second

International Conference on 3D Imaging, Modeling,

Processing, Visualization & Transmission, pages 501–

508. IEEE.

Jamil, M. H., Annor, P. S., Sharfman, J., Parthesius, R.,

Garachon, I., and Eid, M. (2018). The role of hap-

tics in digital archaeology and heritage recording pro-

cesses. In 2018 IEEE International Symposium on

Haptic, Audio and Visual Environments and Games

(HAVE), pages 1–6. IEEE.

Kreimeier, J. and Götzelmann, T. (2018). Feelvr: Haptic

exploration of virtual objects. In Proceedings of the

11th PErvasive Technologies Related to Assistive En-

vironments Conference, pages 122–125. ACM.

Levin, M. F., Magdalon, E. C., Michaelsen, S. M., and

Quevedo, A. A. (2015). Quality of grasping and the

role of haptics in a 3-d immersive virtual reality en-

vironment in individuals with stroke. IEEE Transac-

tions on Neural Systems and Rehabilitation Engineer-

ing, 23(6):1047–1055.

Ryge, A., Thomsen, L., Berthelsen, T., Hvass, J. S., Ko-

reska, L., Vollmers, C., Nilsson, N. C., Nordahl, R.,

and Serafin, S. (2017). Effect on high versus low fi-

delity haptic feedback in a virtual reality baseball sim-

ulation. In 2017 IEEE Virtual Reality (VR), pages

365–366. IEEE.

Ryu, J., Jung, J., Kim, S., and Choi, S. (2007). Perceptually

transparent vibration rendering using a vibration mo-

tor for haptic interaction. In RO-MAN 2007-The 16th

IEEE International Symposium on Robot and Human

Interactive Communication, pages 310–315. IEEE.

Scheggi, S., Meli, L., Pacchierotti, C., and Prattichizzo, D.

(2015). Touch the virtual reality: using the leap mo-

tion controller for hand tracking and wearable tactile

devices for immersive haptic rendering. In ACM SIG-

GRAPH 2015 Posters, page 31. ACM.

Schneider, O., Shigeyama, J., Kovacs, R., Roumen, T. J.,

Marwecki, S., Boeckhoff, N., Gloeckner, D. A.,

Bounama, J., and Baudisch, P. (2018). Dualpanto: A

haptic device that enables blind users to continuously

interact with virtual worlds. In The 31st Annual ACM

Symposium on User Interface Software and Technol-

ogy, pages 877–887. ACM.

Schorr, S. B. and Okamura, A. M. (2017). Fingertip tac-

tile devices for virtual object manipulation and explo-

ration. In Proceedings of the 2017 CHI Conference on

Human Factors in Computing Systems, pages 3115–

3119. ACM.

Valve (2015). Steam vr. https://github.com/ValveSoftware/

steamvr. Accessed: 2019-08-20.

Whitmire, E., Benko, H., Holz, C., Ofek, E., and Sin-

clair, M. (2018). Haptic revolver: Touch, shear, tex-

ture, and shape rendering on a reconfigurable virtual

reality controller. In Proceedings of the 2018 CHI

Conference on Human Factors in Computing Systems,

page 86. ACM.

Yem, V., Okazaki, R., and Kajimoto, H. (2016). Fingar:

combination of electrical and mechanical stimulation

for high-fidelity tactile presentation. In ACM SIG-

GRAPH 2016 Emerging Technologies, page 7. ACM.

Zenner, A. and Krüger, A. (2017). Shifty: A weight-shifting

dynamic passive haptic proxy to enhance object per-

ception in virtual reality. IEEE transactions on visu-

alization and computer graphics, 23(4):1285–1294.
