Player Tracking using Multi-viewpoint Images in Basketball Analysis

Shuji Tanikawa and Norio Tagawa


Graduate School of Systems Design, Tokyo Metropolitan University, Hino, Tokyo 191-0065, Japan

Keywords:

Basketball Analysis, Player Tracking, Occlusion Avoidance, Multiple Camera, Radon Transform, OpenPose.

Abstract:

In this study, we aim to realize the automatic tracking of basketball players by avoiding occlusion of players,

which is an important issue in basketball video analysis, using multi-viewpoint images. Images taken with

a hand-held camera are used, to expand the scope of application to uses such as school club activities. By

integrating the player tracking results from each camera image with a 2-D map viewed from above the court,

using projective transformation, the occlusion caused by one camera is stably solved using the information

from other cameras. In addition, using OpenPose for player detection reduces the occlusion that occurs in

each camera image before all camera images are integrated. We confirm the effectiveness of our method by

experiments with real image sequences.

1 INTRODUCTION

Research on sports video analysis has been conducted

actively in recent years, with the aim of improving

individual players’ ability and team competitiveness,

and providing effective information to audiences (Xu

et al., 2004)(Vaeyens et al., 2007). In the field of

basketball analysis, there have conventionally been detailed studies of video processing after an individual player is cut out: for example, analysis of shooting form (Liu et al., 2011) and elucidation of the mechanics of the human body when an injury occurs (Krosshaug et al., 2007). In addition, in recent years,

there has been advanced research on analysis of team

play, such as screen plays and the pick and roll (Chen et al., 2009)(Chen et al., 2012)(Fu et al., 2011)(Liu et al., 2011)(Liu et al., 2013)(Lucey et al., 2014). These studies often analyze a

non-occlusion image taken from above the court, to

easily realize and use player tracking (see Fig. 1), or

the player tracking results may be processed manually

by analysts. For football (soccer) videos, many stable player tracking methods using probabilistic techniques, such as the Kalman filter or particle filter, have been proposed. In basketball, in contrast to football, occlusion between players occurs frequently because of differences in the size of the court and the camera viewpoints, so a practical method for automatic player tracking has not yet been established.

https://orcid.org/0000-0003-0212-9265

We do not assume a special environment (for example, a stadium with multiple cameras placed on the ceiling), so that the player tracking method is applicable to club activities in high school and junior high school. For these purposes, it is desirable to process images that are captured by hand-held cameras

from the side, or obliquely above the court (Wen et al.,

2016)(Hu et al., 2011). In this case, occlusion be-

tween players is extremely likely to occur. Therefore,

we consider the use of multiple videos taken from

different viewpoints, which potentially allows play-

ers hidden from one camera to be captured by another

camera. In addition, as another advantage of using

multiple viewpoints, acquisition of three-dimensional

information about players becomes possible.

In this study, we assume three cameras with dif-

ferent viewpoints, and propose a method to integrate

the information about the players’ positions obtained

by them appropriately. Because each camera contin-

ually changes its viewpoint, it is necessary to perform

calibration efficiently, assuming real-time processing.

When detecting a player in each image, it is desirable

to use a method that is effective even in the presence

of occlusion. Integrating the information from each

camera requires a procedure to ensure sufficient sta-

bility when the player is hidden from a particular cam-

era or when the player is detected again. In this paper,

we propose a player tracking system that satisfies the

above requirements. The first feature of the proposed

method is to use OpenPose, a human joint detection

method based on Deep Neural Network architecture,

to avoid some occlusion of players. Another feature

Tanikawa, S. and Tagawa, N.

Player Tracking using Multi-viewpoint Images in Basketball Analysis.

DOI: 10.5220/0009097408130820

In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2020) - Volume 5: VISAPP, pages

813-820

ISBN: 978-989-758-402-2; ISSN: 2184-4321

Copyright

c

2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

813

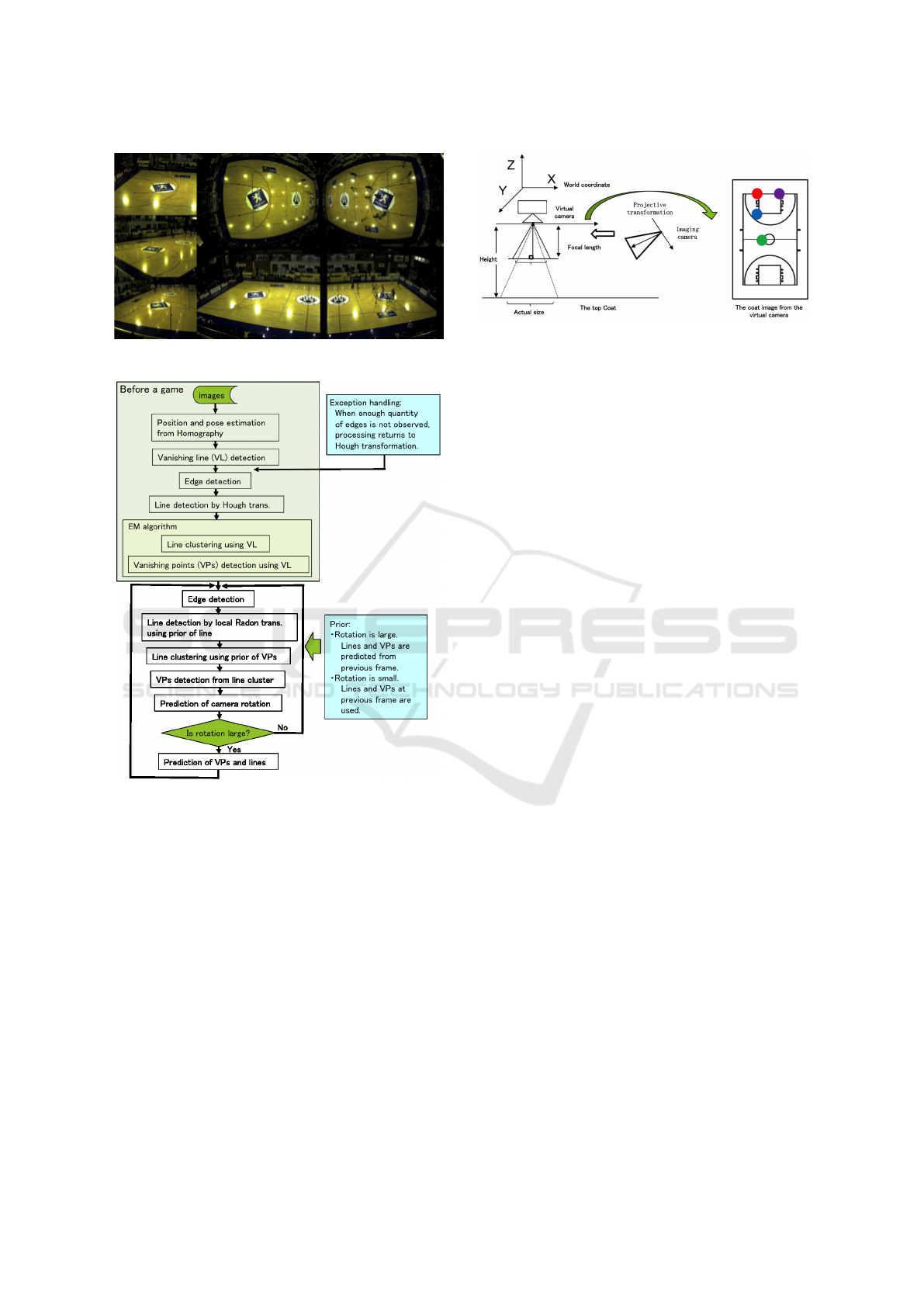

Figure 1: Examples of images captured above the court.

Figure 2: Outline of our camera pose estimation.

is that the position of the player detected from each

camera’s viewpoint is converted into a reference im-

age viewed from above the court, and the detection

results from multiple viewpoints in the reference im-

age are appropriately integrated. In consideration of

cases where a player cannot be seen due to occlusion

in a certain camera, a data structure and an algorithm

capable of handling the disappearance and occurrence

of a player position are developed. The performance

and effectiveness of our method has been confirmed

through real image experiments.

Figure 3: Camera layout and coordinates, and relation between captured image and reference image.

2 CALIBRATION OF CAMERA POSE

Our method for calibrating the camera position and direction was proposed previously (Idaka et al., 2017); this section gives the outline shown in Fig. 2. Because a basketball game is played alternately in each half court, the camera direction moves

back and forth between both half courts, depending

on the offense and defense. Therefore, before start-

ing the game, the standard position and direction cor-

responding to each half court should be determined.

Because an image without a player can be used, fea-

ture point correspondence, using a court corner or

similar feature, can easily be adopted. The homography matrix $\tilde{H}$ representing the projective transformation is determined, and decomposed into camera rotation and translation using the following equation (Kanatani, 1993).

$$k\tilde{H}^{\top} = \left( r\tilde{I} + \begin{pmatrix} p \\ q \\ r \end{pmatrix} \begin{pmatrix} A & B & C \end{pmatrix} \right) \tilde{R}, \qquad (1)$$

where $(p, q, r)$ denotes the plane $Z = pX + qY + r$ corresponding to the court, $(A, B, C)$ indicates the camera position, $\tilde{R}$ indicates the camera direction, $\tilde{I}$ indicates a $3 \times 3$ unit matrix, and $k$ is an arbitrary value. The coordinates of the virtual camera viewing a court from directly above are used as the world coordinates, and the two-dimensional map (2-D map) used in the following is defined by the image viewed by the virtual camera. Figure 3 shows the relation between the virtual camera and the actual imaging camera. The pose of the imaging camera is measured with respect to the world coordinates. The colored points in the right panel of Fig. 3 are the feature points used to determine $\tilde{H}$ in Eq. 1.
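
As an illustration of how a homography between a camera image and the 2-D map can be obtained from feature point correspondences, the following is a minimal sketch using the standard direct linear transform. It is not the calibration code of this paper: the function names, the pixel coordinates of the court corners, and the 14 m x 15 m half-court size are all hypothetical values chosen for the example.

```python
import numpy as np

def estimate_homography(src, dst):
    """Direct linear transform: homography mapping src points to dst points.

    src, dst: sequences of at least four (x, y) pairs in corresponding order.
    """
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear constraints on the 9 entries.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]

def project(h, points):
    """Apply homography h to an array of (x, y) points."""
    pts = np.asarray(points, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ h.T
    return mapped[:, :2] / mapped[:, 2:3]

# Hypothetical pixel positions of four half-court corners in one camera image
image_corners = [(412.0, 310.0), (1508.0, 296.0), (1850.0, 905.0), (95.0, 930.0)]
# Assumed positions of the same corners on the 2-D map, in metres
map_corners = [(0.0, 0.0), (14.0, 0.0), (14.0, 15.0), (0.0, 15.0)]

H = estimate_homography(image_corners, map_corners)
print(project(H, image_corners))  # approximately reproduces map_corners
```

With more than four correspondences the same function returns a least-squares estimate; in practice the coordinates are usually normalized first to improve numerical conditioning.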

In the processing during a game, under the as-

sumption that the camera position slightly changes,

VISAPP 2020 - 15th International Conference on Computer Vision Theory and Applications

814

N frame

N+1 frame

N+2 frame

Manage as a trajectory

y

x

Processing at each view point

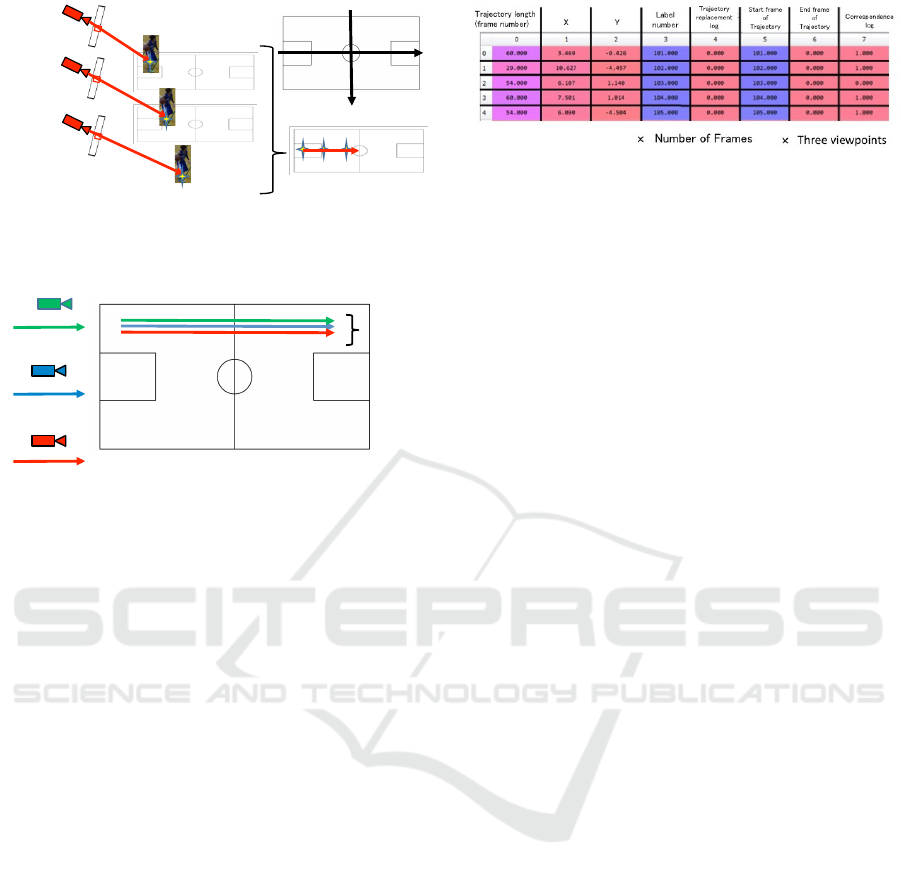

Figure 4: Generation of player’s trajectory from player de-

tection result in video from each viewpoint.

Viewpoint1

Viewpoint2

Viewpoint3

Manage

astrack

Figure 5: Trajectories from each viewpoint that are close on

the 2-D map in multiple successive frames are grouped as a

track.

the camera direction is estimated at each frame by de-

tecting two orthogonal vanishing points (VPs). When

the viewing direction is fixed, the camera may move

because of hand movement, which is expected to be

random and small. In contrast, when the viewing di-

rection is intentionally changed, a comparatively large

rotation should be considered. For a small rotation,

the VPs can be detected by the Radon transform, in-

stead of the Hough transform, to reduce the computa-

tional cost. By the Radon transform, the Hough pa-

rameter space can be evaluated locally around the pa-

rameters obtained in the previous frame. The details

is explained in (Idaka et al., 2017).
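
The idea of evaluating the line parameter space only near the previous frame's parameters can be sketched as follows. This is a simplified voting scheme over edge pixels, not the Radon-transform implementation of (Idaka et al., 2017); the function name, the window sizes, and the synthetic edge points are assumptions made for illustration.

```python
import numpy as np

def refine_line(edge_xy, prev_theta, prev_rho,
                theta_halfwidth=np.deg2rad(3.0), rho_halfwidth=10.0,
                n_theta=13, n_rho=21):
    """Find the strongest line x*cos(theta) + y*sin(theta) = rho,
    searching only a small window around (prev_theta, prev_rho)."""
    edge_xy = np.asarray(edge_xy, dtype=float)
    thetas = np.linspace(prev_theta - theta_halfwidth,
                         prev_theta + theta_halfwidth, n_theta)
    rhos = np.linspace(prev_rho - rho_halfwidth,
                       prev_rho + rho_halfwidth, n_rho)
    tol = 0.5 * (rhos[1] - rhos[0])  # half a bin width in rho
    best_votes, best = -1, (prev_theta, prev_rho)
    for theta in thetas:
        # Signed distance of every edge pixel from the origin along theta
        r = edge_xy[:, 0] * np.cos(theta) + edge_xy[:, 1] * np.sin(theta)
        # votes[j]: number of edge pixels within tol of the line at rhos[j]
        votes = (np.abs(r[:, None] - rhos[None, :]) <= tol).sum(axis=0)
        j = int(np.argmax(votes))
        if votes[j] > best_votes:
            best_votes = int(votes[j])
            best = (float(theta), float(rhos[j]))
    return best, best_votes

# Synthetic edge pixels on a line with theta = 0.5 rad, rho = 100 px
theta0, rho0 = 0.5, 100.0
s = np.arange(-100, 101, dtype=float)
pts = np.stack([rho0 * np.cos(theta0) - s * np.sin(theta0),
                rho0 * np.sin(theta0) + s * np.cos(theta0)], axis=1)

# The previous frame's estimate is slightly off because the camera moved
(theta_hat, rho_hat), votes = refine_line(pts, prev_theta=0.52, prev_rho=103.0)
```

Because only 13 x 21 parameter cells are visited instead of the full parameter space, the cost per line is small, which is the motivation for the local evaluation described above. Two such refined court lines per direction would then give a vanishing point as their intersection.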

3 PLAYER TRACKING METHOD

3.1 Outline of Tracking

Our tracking method has the following features:

1. The results of detecting each player from each viewpoint are projected onto a common 2-D map, and a “trajectory” corresponding to each player is determined while evaluating the temporal continuity between frames (see Fig. 4). Here, the trajectory is defined as the tracking result of the player from a single viewpoint. The tracking results from all viewpoints can be evaluated in the same coordinate system.

2. By matching the trajectories from multiple viewpoints, one consistent “track” is created for each player. Occlusion can be overcome and the trajectories of the bench players can be deleted (see Fig. 5).

The following subsections explain the details.

Figure 6: Data structure representing information of players’ trajectories. Information of each player’s trajectory, the start frame and end frame of the trajectory (0 indicates that tracking is in progress), and other information are organized and stored for each frame and each viewpoint. For “replacement log” and “correspondence log,” refer to explanation in the text.

3.2 Player Detection

We first detect the players based on the color infor-

mation of the uniform. Color information is essential

to identify a team, but if multiple players on the same

team approach and cause occlusion, they cannot be

distinguished.

In addition, OpenPose (Cao et al., 2017) has re-

cently been applied to various studies. OpenPose can

detect human joint information from an image; be-

cause skeletal information is used, it is possible to de-

tect the presence of a person even if some joints are

hidden. In this study, we investigate to what extent

the OpenPose method can avoid occlusion, compared

with using only color information.

Because the obtained image includes spectators

and reserve players around the court, the area of the

court (plus a margin of 1 m around the court) is pro-

cessed, and pixels outside the area are excluded by the

projective transformation.
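
A minimal sketch of restricting processing to the court plus a 1 m margin is given below. For simplicity it filters detections that have already been projected onto the 2-D map, rather than masking image pixels as described above; the function name and the 28 m x 15 m court size are assumptions for the example.

```python
import numpy as np

def inside_court(map_xy, court_length=28.0, court_width=15.0, margin=1.0):
    """Boolean mask of map points lying inside the court plus a margin (metres)."""
    pts = np.asarray(map_xy, dtype=float)
    x, y = pts[:, 0], pts[:, 1]
    return ((x >= -margin) & (x <= court_length + margin) &
            (y >= -margin) & (y <= court_width + margin))

# Hypothetical detections on the 2-D map: two on or near the court,
# one behind the sideline (bench area), one behind the end line
detections = np.array([[5.0, 7.5], [-0.5, 14.0], [13.0, 17.2], [29.5, 3.0]])
keep = inside_court(detections)
players = detections[keep]
```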

3.3 Player Trajectory Generation

To integrate the player detection results from each

viewpoint and each frame, the detection results are

projected onto a 2-D map using the camera position

and direction obtained in advance. The correct detec-

tion results for a specific player in successive frames

are in similar locations, both in the image and on the

2-D map. Regardless of the viewpoint, to normalize

the measurement of proximity, it should be evaluated

not on the image but on the 2-D map. Therefore, if

Player Tracking using Multi-viewpoint Images in Basketball Analysis

815

Correspondence

label of Player 1

Correspondence

label of Player 2

Correspondence

label of Player 3

Correspondence

label of Player 4

Correspondence

label of Player 5

View

Frame

Figure 7: Data structure representing information of play-

ers’ tracks. It stores the trajectory number at each view-

point associated with each player. As the frames advance,

the structure extends downward.

X

X

Occlusion

Correspondence

Re-correspondence

Weunderstandthatitisoneplayer'strajectories.

It is visible only from this viewpoint.

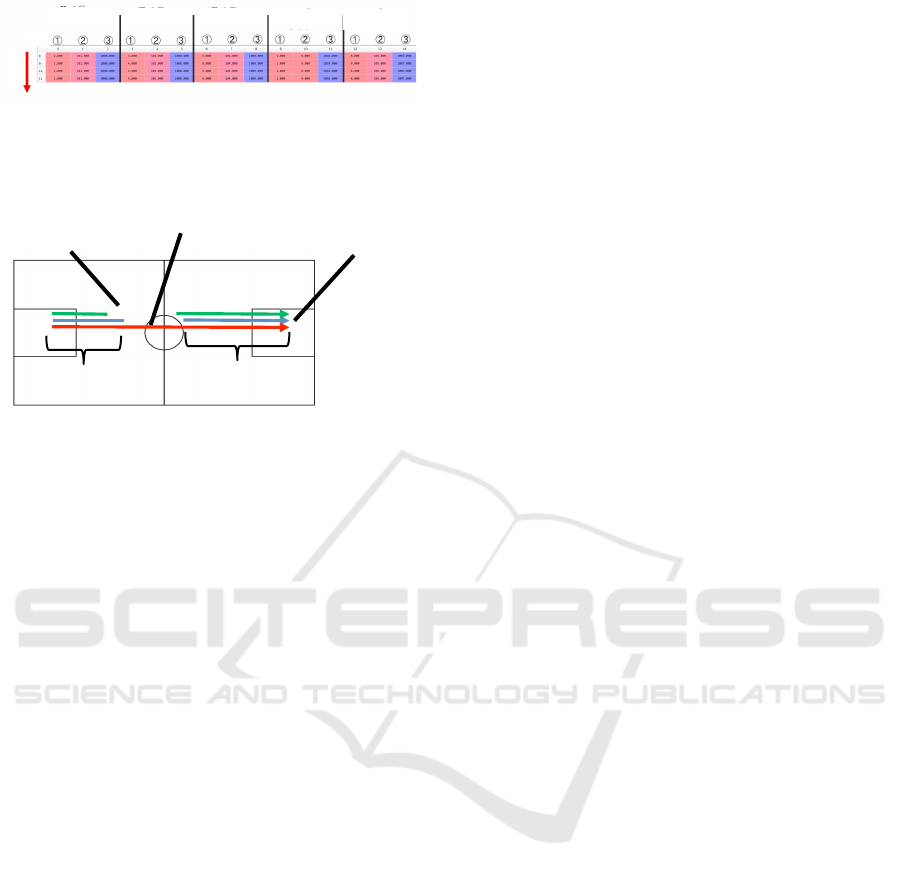

Figure 8: Correspondence of trajectories when occlusion

occurs.

there are player candidates projected within 1 m, for

both X and Y coordinates, on the 2-D map in succes-

sive frames, the set of points on the 2-D map is man-

aged as a trajectory. This procedure is illustrated in

Fig. 4. The threshold of 1 m is a value determined in

this experiment; it is necessary to consider a system-

atic method to determine this threshold in the future.

Trajectories are collectively managed as an array

data structure for each frame, from each viewpoint,

as shown in Fig. 6. It contains information such as

length, coordinates, label number (which is the man-

agement number of the trajectory), and the correspon-

dence log (which is the number of the viewpoint to

which the trajectory corresponds). Figure 6 shows the

data for one team, comprising five players. If there

are multiple projected player candidates within the

threshold, a trajectory is created based on the coordi-

nates of the player closest to the player in the previous

frame, and “1” is placed in the replacement log after

this frame. When processing the subsequent trajec-

tory generation, if the trajectory is not associated with

a trajectory obtained from another viewpoint, the tra-

jectory is regenerated using a more distant candidate

player by tracing back to the last frame having “1” in

the replacement log.
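
The per-viewpoint trajectory record and the frame-to-frame extension rule can be sketched as follows. The field names loosely follow Fig. 6 but are hypothetical, as is the function name; the backtracking step that regenerates a trajectory from the last frame marked "1" is not implemented here.

```python
from dataclasses import dataclass, field

@dataclass
class Trajectory:
    label: int                     # management number of the trajectory
    start_frame: int
    end_frame: int = 0             # 0 means tracking is still in progress
    coords: list = field(default_factory=list)              # (x, y) in metres, one per frame
    replacement_log: list = field(default_factory=list)     # 1 where several candidates competed
    correspondence_log: list = field(default_factory=list)  # viewpoints matched so far

def extend_trajectory(traj, candidates, frame, threshold=1.0):
    """Append the nearest candidate lying within `threshold` metres in both
    X and Y of the last position. Returns False and closes the trajectory
    when no candidate qualifies."""
    px, py = traj.coords[-1]
    near = [(x, y) for x, y in candidates
            if abs(x - px) <= threshold and abs(y - py) <= threshold]
    if not near:
        traj.end_frame = frame - 1
        return False
    near.sort(key=lambda c: (c[0] - px) ** 2 + (c[1] - py) ** 2)
    traj.coords.append(near[0])
    # Mark frames where the choice was ambiguous so it can be revisited later
    traj.replacement_log.append(1 if len(near) > 1 else 0)
    return True

traj = Trajectory(label=1, start_frame=8, coords=[(3.0, 4.0)], replacement_log=[0])
ok9 = extend_trajectory(traj, [(3.4, 4.2), (3.9, 4.8), (9.0, 9.0)], frame=9)
ok10 = extend_trajectory(traj, [(8.0, 8.0)], frame=10)
```

In frame 9 two candidates fall inside the 1 m box, so the nearer one is taken and the replacement log records a 1; in frame 10 no candidate is close enough, so the trajectory ends at frame 9.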

3.4 Player Track Generation

The correctness of the trajectory detected from each

viewpoint is confirmed by comparison with trajecto-

ries from other viewpoints. A specific player’s tra-

jectories should make the same movement on the 2-D

map. If the player’s trajectory projected in multiple

consecutive frames from one viewpoint exists close to

the player’s trajectory from another viewpoint, these

trajectories are considered to correspond, and they

are recognized as a collection of the player’s correct

trajectories. An example of a trajectory that is de-

tected incorrectly is the trajectory of a reserve player.

Even if the background is removed using the projec-

tive transform, the torso and head of the reserve player

on the near side tend to remain in the image more than

the reserve player on the far side of the court. These

areas are difficult to cut even if we use the colors or

the human joints of the players, and they are detected

as trajectories as long as they continue to appear in the

image, so the correspondence with other viewpoints is

used to distinguish them from the correct players.

Correspondence processing is performed for all

frames in temporal order. The trajectories from each

viewpoint in the frame being processed are compared

in a round-robin manner, and correspondence is made

using coordinates in a number of successive frames;

this number was defined as 10 frames in this study.

If a trajectory that has been interrupted by occlusion

is subsequently detected again, multiple tracks may

be present nearby. If we try to map the trajectory to

the track immediately, there are multiple candidates

and the ambiguity is high. Therefore, the frame is ad-

vanced until the player’s position on the track deviates

from the other players’ positions to a certain extent,

and then correspondence with the track is made. In

this study, we set the separation threshold to 1.5 m

experimentally.
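
The correspondence test between two viewpoints and the separation check before re-association might look like the sketch below. The 10-frame window and the 1.5 m separation threshold come from the text; the 1 m closeness threshold `max_dist`, the function names, and the dictionary layout are assumptions for illustration.

```python
import numpy as np

def trajectories_correspond(traj_a, traj_b, frame, n_frames=10, max_dist=1.0):
    """traj_a, traj_b: dicts mapping frame number -> (x, y) on the 2-D map.
    True if both exist in the last n_frames frames up to `frame` and stay
    within max_dist metres of each other in every one of them."""
    window = range(frame - n_frames + 1, frame + 1)
    if any(f not in traj_a or f not in traj_b for f in window):
        return False
    a = np.array([traj_a[f] for f in window], dtype=float)
    b = np.array([traj_b[f] for f in window], dtype=float)
    return bool(np.all(np.linalg.norm(a - b, axis=1) <= max_dist))

def is_separated(position, other_positions, min_sep=1.5):
    """True when `position` is farther than min_sep metres from every other track."""
    others = np.asarray(other_positions, dtype=float).reshape(-1, 2)
    if len(others) == 0:
        return True
    dists = np.linalg.norm(others - np.asarray(position, dtype=float), axis=1)
    return bool(np.all(dists > min_sep))

# Hypothetical trajectories over frames 1..10
view1 = {f: (0.1 * f, 5.0) for f in range(1, 11)}
view2 = {f: (0.1 * f + 0.2, 5.1) for f in range(1, 11)}   # same player, small offset
bench = {f: (0.1 * f, 12.0) for f in range(1, 11)}        # reserve player

same_player = trajectories_correspond(view1, view2, frame=10)
with_bench = trajectories_correspond(view1, bench, frame=10)
crowded = is_separated((1.0, 5.0), [(2.0, 5.0), (4.0, 4.0)])
clear = is_separated((1.0, 5.0), [(3.0, 5.0)])
```

A trajectory that matches no other viewpoint, such as the bench example, is never promoted to a track, which is how reserve players are filtered out.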

The associated trajectories are recognized to be

the correct player’s trajectories, and the average value

of the trajectories, in each frame from their start frame

to their end frame, is taken as the coordinates of the

player in that frame; in addition, the coordinates in

successive frames become the track of the player. By

placing the number identifying the viewpoint in the

correspondence log in the corresponding trajectory,

it is made clear which viewpoint has been matched,

while avoiding double correspondence with the tra-

jectories of other players. The trajectories for which

correspondence has been made are managed collec-

tively using an array data structure. This array is

called a track array, and the label numbers of trajec-

tories of each viewpoint that are associated with each

player, as shown in Fig. 7, are stored for each frame.

Figure 7 shows an example in which the label numbers of the trajectories constituting the tracks of the 8th to 11th frames are represented. The coordinates of player 1 are calculated from the 1st trajectory of the 1st viewpoint, the 101st trajectory of the 2nd viewpoint, and the 1001st trajectory of the 3rd viewpoint. The details can be understood by referring to the frame numbers and trajectory numbers in each trajectory array.
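
As a sketch of how a track position is obtained from the track array, the code below averages the coordinates of the associated trajectories that exist in a given frame. The label numbers 1, 101, and 1001 mirror the example of Fig. 7; the coordinates, the function name, and the dictionary layout are hypothetical.

```python
import numpy as np

def track_position(track_row, trajectories, frame):
    """track_row: dict viewpoint -> trajectory label associated with one player.
    trajectories: dict (viewpoint, label) -> dict frame -> (x, y).
    Returns the mean position over the viewpoints that see the player."""
    pts = []
    for view, label in track_row.items():
        coords = trajectories.get((view, label), {})
        if frame in coords:
            pts.append(coords[frame])
    if not pts:
        return None  # lost from every viewpoint in this frame
    return tuple(float(v) for v in np.mean(pts, axis=0))

# Player 1: trajectory 1 (viewpoint 1), 101 (viewpoint 2), 1001 (viewpoint 3)
player1 = {1: 1, 2: 101, 3: 1001}
trajectories = {
    (1, 1):    {8: (4.0, 6.0), 9: (4.2, 6.1)},
    (2, 101):  {8: (4.2, 6.1)},               # occluded in frame 9
    (3, 1001): {8: (4.1, 5.9), 9: (4.4, 6.3)},
}
pos8 = track_position(player1, trajectories, frame=8)
pos9 = track_position(player1, trajectories, frame=9)
pos10 = track_position(player1, trajectories, frame=10)
```

In frame 9 the second viewpoint is occluded, so the position is the average of the remaining two; this is the mechanism by which the track continues through single-view occlusion.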

Because there are five basketball players in each

team, it is desirable that five tracks exist in each

frame. However, there are cases where a trajectory

is interrupted because occlusion occurs. We assume

that if occlusion occurs from one viewpoint and the

trajectory is interrupted, the trajectory continues from

other viewpoints. Even if the trajectory is interrupted

at a viewpoint where occlusion occurs, tracking can

be performed while avoiding the occlusion by corre-

lating the trajectory restored thereafter with the con-

tinued trajectory from another viewpoint (see Fig. 8).

This is the feature of this method. Therefore, it is nec-

essary to confirm whether the trajectories already as-

sociated with each other correspond to the trajectories

that have appeared after occlusion in the viewpoint

image in which the discontinuation occurs. Even if

occlusion occurs, it is desirable that the trajectory of

one of the viewpoints is connected, but if occlusion

occurs in three or more players at one location, or oc-

clusion occurs continuously in a short time, the trajec-

tory is interrupted from all of the viewpoints. There-

fore, exception handling is required in the following

cases:

• One Player Whose Trajectory has been Lost

from Three Viewpoints. The track that has reap-

peared in the subsequent frame is assigned to this

player. In this way, it is possible to avoid mistakes

in trajectory assignment.

• Two or More Players Whose Trajectories have

been Lost from three Viewpoints. Although it

would be possible to track several players after the

occurrence of an occlusion, it is possible that the

player IDs initially assigned to trajectories may be

interchanged with one another. This is because it

may not be possible to distinguish between play-

ers from the same team in the image. If all of

the (two or more) trajectories are broken, when

the trajectories are restored again and associated

with players, each track is assigned to the player

whose position is closest to the position of the

player that was associated with the track before

the break. However, it is difficult to be certain

that the track can be reliably reassigned to the cor-

rect player. Therefore, the players and tracks that

may have been wrongly associated, and the corre-

sponding frame numbers, are managed as a batch.

After the processing is completed, track assign-

ments are manually confirmed; if track assign-

ments are confirmed, all such assignments made

after that frame are confirmed. This ensures the

correctness of tracking.
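
The exception handling above can be sketched as a nearest-position reassignment that also records which assignments need manual confirmation. The function and variable names are hypothetical, and the greedy matching order is a simplification: when several players are lost at once, the result can depend on the order in which reappeared tracks are processed.

```python
import math

def reassign_tracks(last_positions, reappeared, frame):
    """last_positions: dict player_id -> (x, y) just before the break.
    reappeared: dict track_label -> (x, y) where a track was restored.
    Returns (assignment, review): assignment maps track_label -> player_id,
    review lists (frame, track_label, player_id) entries to confirm manually."""
    remaining = dict(last_positions)
    assignment = {}
    for label, (x, y) in reappeared.items():
        if not remaining:
            break
        # Assign the lost player whose last known position is closest
        pid = min(remaining,
                  key=lambda p: math.hypot(x - remaining[p][0], y - remaining[p][1]))
        assignment[label] = pid
        del remaining[pid]
    # With a single lost player the assignment is unambiguous; with two or
    # more, identities may have been swapped, so everything is flagged.
    review = []
    if len(last_positions) >= 2:
        review = [(frame, label, pid) for label, pid in assignment.items()]
    return assignment, review

one, one_review = reassign_tracks({3: (1.0, 1.0)}, {301: (1.5, 1.2)}, frame=95)
two, two_review = reassign_tracks({2: (5.0, 5.0), 4: (6.0, 5.5)},
                                  {201: (6.2, 5.4), 202: (4.8, 5.1)}, frame=120)
```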

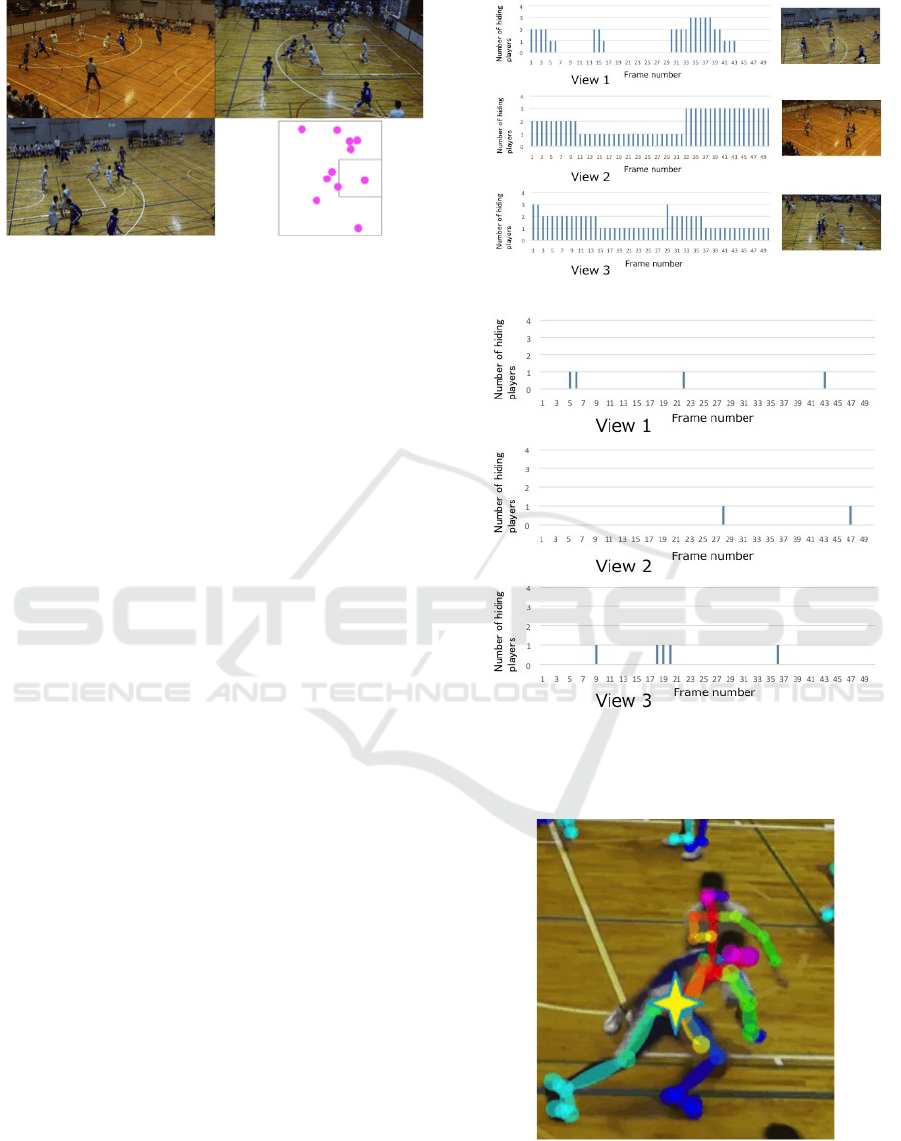

Figure 9: Result of removing the area outside the court using homography transformation.

Figure 10: Measurement of human joints by OpenPose.

4 EXPERIMENT

The experiment was performed using videos captured

from three viewpoints. The camera used was a Panasonic digital high-definition camera (Panasonic HC-V360M; resolution: 1920 × 1080; 30 frames/s). We selected 180 consecutive frames and tracked players while overcoming the problem of occlusion. Figure 9 shows a processing area in which the area outside the court has been removed using the homography transformation determined during camera calibration.

Figure 11: Multiple players detected using OpenPose.

Figure 12: Three views and players’ positions on 2-D map using OpenPose.

Figure 10 is an example of joint information ob-

tained by OpenPose, and Fig. 11 shows how Open-

Pose detects multiple players in an image simulta-

neously from a certain viewpoint. The appearance

of one frame of the player tracking result, when us-

ing OpenPose, is shown in Fig. 12, together with the

three view images used. Figure 13 shows the number of hidden players in each frame, from each viewpoint. Occlusion is clearly reduced by using OpenPose. When only color information was used, the average occlusion rate per viewpoint was 0.14% for three players, 0.26% for two players, 0.42% for one player, and 0.21% for no occlusion. Even so, except in one case, occlusion avoidance using multiple viewpoints was performed correctly by the proposed method. The failed case involved frames in which two players could not be detected at the same time from any of the three viewpoints, and when the track was recalculated, the two players were interchanged. In contrast, when

player detection was performed using joint informa-

tion from OpenPose, occlusion (from more than one

viewpoint) did not occur in the same frame. There-

fore, players were always detected from at least two

viewpoints, and occlusion was avoided in all cases.

Another advantage of using OpenPose is that the

resulting trajectories were stable, reducing apparent

position errors. With uniform color information, vari-

ous positions on the back and abdomen were detected

as player positions, whereas, when using OpenPose, it

was possible to identify and detect the position of the

waist with a relatively small difference between play-

ers. The star in Fig. 14 indicates the waist position de-

tected by OpenPose from an example image. There-

fore, when performing homography transformation, a

standard waist height could be used, and the position

error on the 2D map was reduced.
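
One way to obtain a waist position from joint detections is to take the midpoint of the two hip keypoints, as sketched below. The input is assumed to be one person's keypoints as rows of (x, y, confidence), and the hip indices 8 and 11 assume the 18-keypoint COCO ordering; both should be checked against the output format of the model actually used. The function name and confidence threshold are hypothetical.

```python
import numpy as np

R_HIP, L_HIP = 8, 11  # assumed indices in an 18-keypoint COCO-style layout

def waist_position(keypoints, min_conf=0.1):
    """keypoints: array of shape (K, 3) holding (x, y, confidence) per joint.
    Returns the image position of the waist as the mean of the hips that
    were detected with sufficient confidence, or None if neither was."""
    kp = np.asarray(keypoints, dtype=float)
    hips = [kp[i, :2] for i in (R_HIP, L_HIP) if kp[i, 2] >= min_conf]
    if not hips:
        return None
    return tuple(float(v) for v in np.mean(hips, axis=0))

# Hypothetical detection: both hips visible
person = np.zeros((18, 3))
person[R_HIP] = (640.0, 500.0, 0.9)
person[L_HIP] = (680.0, 504.0, 0.8)
waist = waist_position(person)

# Same person with the left hip hidden by another player
person_occluded = person.copy()
person_occluded[L_HIP] = (0.0, 0.0, 0.0)
waist_partial = waist_position(person_occluded)
```

Falling back to a single hip keeps the player detectable when part of the body is hidden, at the cost of a small lateral offset.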

Figure 13: Time transition of the number of hidden players by method using (a) color and (b) OpenPose.

Figure 14: Waist position detected by OpenPose is indicated by a star.

5 CONCLUSIONS

In this study, we proposed a basketball player tracking method that integrates information from multiple viewpoints appropriately. The method works on video captured by hand-held cameras from around the court and from the spectator seats, so it can be applied without special facilities. We also confirmed that using OpenPose for player detection is very effective, compared with using the team uniform color alone. Because team distinction requires uniform color, we plan to extract color information from the OpenPose detection results.

To confirm the effectiveness of integrating infor-

mation from multiple cameras, we focused on the

implementation of algorithms to integrate trajecto-

ries obtained from each viewpoint on 2-D reference

maps. One of the features of the proposed method is that player tracking at each viewpoint, called trajectory generation, and integration of these trajectories, called track generation, are all performed on the same 2-D reference map using homography. This

makes it possible to evaluate the proximity of the de-

tected player position without depending on the posi-

tion of the player or the camera viewpoint. To oper-

ate this algorithm stably, it is necessary to accurately

detect trajectories from each viewpoint. Currently,

we identify players close to each other in successive

frames as the same player, but in the future we plan

to add statistical improvements, such as introducing

a Kalman Filter (Lu et al., 2013) and Bayesian eval-

uation (Xing et al., 2011). Building a motion model

using the game context (Liu et al., 2013) and modeling the relationship between the ball and the player (Maksai et al., 2016) are also important issues for accurately tracking the player.

Because the joint information from OpenPose can be used directly for correspondence between different viewpoints, three-dimensional recognition of joint placement is easy to realize. Therefore, in addition to the

closeness of the player position between frames, we

are investigating whether the tracking of the player

can be made more accurate by using the inter-frame

matching of this three-dimensional joint information.

Future issues include three-dimensional recogni-

tion of players, application of this method to team

play and tactical analysis, and ball detection linked to

the recognition of dribbling, passing, and shots. For

this purpose, three-dimensional reconstruction from joint information detected by OpenPose is effective. In recent years, the application of deep neural networks that handle time series to human behavior recognition has been actively studied, and application to sports analysis is also underway (Baccouche et al., 2010)(Tsunoda et al., 2017)(Wang and Zemel, 2016). We plan to develop such a DNN-based method

using joint three-dimensional motion information as

input.

ACKNOWLEDGEMENTS

We would like to thank Dr. Shinji Ozawa, Emeritus

professor of Keio University, Japan, for valuable ad-

vice on this research. In addition, we thank Edanz

Group (https://en-author-services.edanzgroup.com/)

for editing a draft of this manuscript. Part of this

work was supported by JSPS KAKENHI, grant num-

ber 19K12046.

REFERENCES

Baccouche, M., Mamalet, F., Wolf, C., Garcia, C., and

Baskurt, A. (2010). Action classification in soccer

videos with long short-term memory recurrent neural

networks. In Proc. Int. Conf. on Artificial Neural Net-

works.

Cao, Z., Simon, T., Wei, S.-E., and Sheikh, Y. (2017). Realtime multi-person 2D pose estimation using part affinity fields. In Proc. IEEE CVPR, pages 7291–7299.

Chen, H.-T., Chou, C.-L., Fu, T.-S., Lee, S.-Y., and Lin, B.-

S. P. (2012). Recognizing tactic patterns in broadcast

basketball video using player trajectory. J. Vis. Com-

mun. Image R., 23:932–947.

Chen, H.-T., Tien, M.-C., Chen, Y.-W., Tsai, W.-J., and Lee, S.-Y. (2009). Physics-based ball tracking and 3D trajectory reconstruction with applications to shooting location estimation in basketball video. J. Vis. Commun. Image R., 20:204–216.

Fu, T.-S., Chen, H.-T., Chou, C.-L., Tsai, W.-J., and Lee, S.-

Y. (2011). Screen-strategy analysis in broadcast bas-

ketball video using player tracking. In Proc. IEEE

Visual Commun. and Image Process.

Hu, M.-C., Chang, M.-H., Wu, J.-L., and Chi, L. (2011).

Robust camera calibration and player tracking in

broadcast basketball video. IEEE Trans. Multimedia,

13(2):266–279.

Idaka, Y., Yasuda, K., Ho, Y., and Tagawa, N. (2017). Cost-effective camera pose estimation for basketball analysis using Radon transform. In Proc. Int. Conf. Informatics, Electronics and Vision.

Kanatani, K. (1993). Geometric Computation for Machine

Vision. Oxford University Press, Oxford, U.K.

Krosshaug, T., Nakamae, A., Boden, B., et al. (2007). Mechanisms of anterior cruciate ligament injury in basketball: Video analysis of 39 cases. The American Journal of Sports Medicine, 35(3):359–367.

Liu, J., Carr, P., Collins, R.-T., and Liu, Y. (2013). Tracking

sports players with context-conditioned motion mod-

els. In Proc. IEEE CVPR, pages 1830–1837.


Liu, Y., Liu, X., and Huang, C. (2011). A new method

for shot identification in basketball video. Journal of

Software, 6(8):1468–1475.

Lu, W.-L., Ting, J.-A., Little, J. J., and Murphy, K. P.

(2013). Learning to track and identify players from

broadcast sports videos. IEEE Trans. Pattern Anal.

Machine Intell., 35(7):1704–1716.

Lucey, P., Bialkowski, A., Carr, P., Yue, Y., and Matthews,

I. (2014). How to get an open shot: Analyzing team

movement in basketball using tracking data. In Proc.

MIT SLOAN Sports Analytics Conf.

Maksai, A., Wang, X., and Fua, P. (2016). What players do with the ball: A physically constrained interaction modeling. In Proc. IEEE CVPR, pages 972–981.

Tsunoda, T., Komori, Y., Matsugu, M., and Harada, T. (2017). Football action recognition using hierarchical LSTM. In Proc. IEEE CVPR Workshops.

Vaeyens, R., Lenoir, M., Williams, A.-M., Mazyn, L., and

Philippaerts, R.-M. (2007). The effects of task con-

straints on visual search behavior and decision mak-

ing skill in youth soccer players. Jour. Sport Exercise

Psychology, 29(2):147–169.

Wang, K.-C. and Zemel, R. (2016). Classifying NBA offensive plays using neural networks. In Proc. MIT Sloan Sports Analytics Conf.

Wen, P.-C., Cheng, W.-C., Wang, Y.-S., Chu, H.-K., Tang,

N.-C., and Liao, H.-Y.-M. (2016). Court reconstruc-

tion for camera calibration in broadcast basketball

videos. IEEE Trans. Visualization and Computer

Graphics, 22(5):1517–1526.

Xing, J., Ai, H., Liu, L., and Lao, S. (2011). Multiple player tracking in sports video: A dual-mode two-way Bayesian inference approach with progressive observation modeling. IEEE Trans. Image Processing, 20(6):1652–1667.

Xu, M., Orwell, J., and Jones, G. (2004). Tracking foot-

ball players with multiple cameras. In Proc. IEEE Int.

Conf. Image Processing, volume 3, pages 2909–2912.
