A New Approach Combining Trained Single-view Networks with Multi-view Constraints for Robust Multi-view Object Detection and Labelling

Yue Zhang^a, Adrian Hilton^b and Jean-Yves Guillemaut^c

Centre for Vision, Speech and Signal Processing (CVSSP), University of Surrey, Guildford, U.K.

Keywords:

Multi-view Object Detection, Multi-view Object Labelling.

Abstract:

We propose a multi-view framework for joint object detection and labelling based on pairs of images. The

proposed framework extends the single-view Mask R-CNN approach to multiple views without need for ad-

ditional training. Dedicated components are embedded into the framework to match objects across views by

enforcing epipolar constraints, appearance feature similarity and class coherence. The multi-view extension

enables the proposed framework to detect objects which would otherwise be mis-detected in a classical Mask

R-CNN approach, and achieves coherent object labelling across views. By avoiding the need for additional

training, the approach effectively overcomes the current shortage of multi-view datasets. The proposed frame-

work achieves high quality results on a range of complex scenes, being able to output class, bounding box,

mask and an additional label enforcing coherence across views. In the evaluation, we show qualitative and

quantitative results on several challenging outdoor multi-view datasets and perform a comprehensive compar-

ison to verify the advantages of the proposed method.

1 INTRODUCTION

Multi-view object detection and labelling is a com-

plex problem which has attracted considerable inter-

est in recent years and has been employed in many

application domains such as surveillance and scene

reconstruction (Luo et al., 2014). Compared to single-

view data, multi-view data provides a richer scene

representation by capturing additional cues through

the different viewpoints; these can help tackle the

problem of object detection and labelling more ef-

fectively by resolving some of the visual ambigui-

ties. However, dealing with multi-view features is

challenging due to large viewpoint variations, severe

occlusion, varying illumination and changes in reso-

lution (Chang and Gong, 2001).

Multi-view detection and labelling suffer from

two important limitations: First, few datasets for

multi-view object detection and tracking are avail-

able; Second, most approaches follow a tracking-by-

detection methodology which ignores the coupling

between detection and tracking. Besides, existing

^a https://orcid.org/0000-0002-3287-2474

^b https://orcid.org/0000-0003-4223-238X

^c https://orcid.org/0000-0001-8223-5505

Figure 1: Illustration of the advantages of the proposed approach (right) compared to the classical Mask R-CNN formulation (left) in the case of two views from the Football

dataset. By incorporating multi-view information within

the network, the proposed approach is able to reduce mis-

detections while at the same time producing more consistent

object labelling across views.

methods for multi-view object detection and labelling

usually rely on videos, as tracking objects in both

spatial and temporal domains has been shown to be

beneficial due to the complementary cues they afford.

However, being able to detect and consistently la-

bel objects across image views (without use of tem-

poral information) remains an important task in its


Zhang, Y., Hilton, A. and Guillemaut, J.

A New Approach Combining Trained Single-view Networks with Multi-view Constraints for Robust Multi-view Object Detection and Labelling.

DOI: 10.5220/0008991104520461

In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2020) - Volume 5: VISAPP, pages 452-461

ISBN: 978-989-758-402-2; ISSN: 2184-4321

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

own right with many practical applications. These in-

clude for example multi-view scene modelling from

a hand-held camera where no temporal information

is available or processing of CCTV data acquired

at a low frame-rate where temporal information is

too coarsely sampled. Further, multi-view image ap-

proaches are important to enable processing of key-

frames in multi-view video datasets, which in turn can

be used to guide multi-view video processing. It is

thus essential to develop effective algorithms for pro-

cessing multi-view images.

A major challenge with processing multi-view im-

ages as opposed to videos relates to the changes

in object location and appearance which are usually

significantly larger across views than across frames.

This makes detection and tracking across views sig-

nificantly more difficult in practice. Furthermore,

many multi-view approaches solve the detection and

tracking tasks separately, with the detection algorithm

serving to initialise multi-view tracking. This sequen-

tial approach suffers from the limitation that errors at

the detection stage propagate to the later stages of the

pipeline, affecting tracking performance and making

the approach sub-optimal.

In recent years, deep learning based approaches

have achieved impressive performance in various

single-view tasks such as classification, object de-

tection and semantic segmentation (He et al., 2017;

Levine et al., 2018; He et al., 2016). However, due to

the lack of multi-view data, existing multi-view track-

ing approaches cannot achieve end-to-end deep learn-

ing. Most of the existing approaches for multi-view

tasks use 3D convolution networks to train a model

or classify objects in the 3D domain. However, for

multi-view tasks, it is time-consuming and computa-

tionally expensive to obtain multi-view object track-

ing results with a 3D convolution network. On the

other hand, many methods use deep learning components only in isolation, for example for object detection or for appearance features trained for person re-identification.

To overcome the problem of the limited num-

ber of multi-view datasets and connect detection with

labelling, we propose a new approach which inte-

grates an existing trained single-view network with

multi-view computer vision constraints. This new

joint multi-view detection and tracking approach does

not require further training thereby avoiding the need

for annotated multi-view training datasets which are

currently scarce. Our approach extends Mask R-

CNN (He et al., 2017), a state-of-the-art approach for

single-view image classification, detection and seg-

mentation. The proposed framework consists of two

branches, each with weights set as in the original

Mask R-CNN network, which are integrated with ad-

ditional components to enforce epipolar constraints,

appearance similarity and class consistency thus al-

lowing matching of instances between two views.

Moreover, our multi-view framework incorporates a

new branch for the label output compared with the

single-view method. Figure 1 illustrates the ad-

vantages of the multi-view extension which is able

to leverage multi-view information to reduce mis-

detections while at the same time adding label infor-

mation compared to a traditional Mask R-CNN im-

plementation.

Our approach makes the following key contribu-

tions. First, it extends a single-view deep learning

network to multiple views without further training for

pairs of images, introducing a framework for classi-

fication, detection, segmentation and labelling. Sec-

ond, we improve the performance in multiple object

detection and labelling by jointly solving these two

tasks and integrating them into a common framework.

The remainder of this paper is organised as fol-

lows. In Section 2, we review related work on object

detection as well as multi-view object tracking. The

proposed methodology is then presented in Section

3. Section 4 experimentally evaluates the approach

showing qualitative and quantitative results based on

pairs of views obtained from a range of challeng-

ing multi-view datasets and comparing against estab-

lished approaches. Conclusions and future work are

finally discussed in Section 5.

2 RELATED WORK

Object Detection. Object detection has been play-

ing a fundamental role in a wide variety of tasks

such as classification and object tracking. Thus we

do not intend to conduct a thorough review here, but

instead we concentrate on deep learning based tech-

niques and joint detection methods, which are most

relevant to our work. We refer the reader to recent

surveys for a more comprehensive review (Zhao et al.,

2018; Li et al., 2015; Hosang et al., 2016; Neel-

ima et al., 2015). Region proposal generation is typ-

ically the first part in the object detection pipeline,

where deep networks have been adopted to predict

the bounding boxes and generate regions (Sermanet

et al., 2013; Erhan et al., 2014; Szegedy et al., 2014).

Based on those techniques, Girshick et al. proposed

the R-CNN, which adopted an end-to-end training to

classify the proposed regions (Girshick et al., 2014).

To improve the detection efficiency, He et al. pro-

posed the SPP-net with shared information, together

with pyramid matching to correct geometric distor-

tion (He et al., 2015). It also should be mentioned


that the use of the shared information in object detec-

tion has also been applied in (Dai et al., 2015; Gir-

shick, 2015; Ren et al., 2015), in which real-time pre-

diction has been achieved. Based on Faster R-CNN

(Ren et al., 2015), He et al. further developed Mask

R-CNN (He et al., 2017), which achieves state-of-

the-art performance for object detection and semantic

segmentation. Besides, several works have made use

of information from other images or views, aiming to

improve the detection accuracy by sharing information among views. For example, Xiao et al. built a

single deep neural network for labelling re-appearing

objects (Xiao et al., 2017). In this architecture, detec-

tion and identification cooperate together with shared

convolutional feature maps to improve the result. In

(López-Cifuentes et al., 2018), a multi-camera system

is built to refine the bounding box for object detection.

In their work, a graph representation is proposed by

connecting different components together. Although

multi-view cues can help to improve the detection,

this is an area which remains relatively unexplored.

Multi-view Tracking. Multi-view tracking is a broad

area encompassing camera calibration, object detec-

tion, person re-identification, object tracking, etc. The

existing literature on multi-view tracking, however,

mostly incorporates several features and hand-crafted

pipelines to combine the temporal and spatial infor-

mation on multi-view videos (Luo et al., 2014). Ris-

tani et al. used correlation clustering optimization

to find trajectories based on the combination of ap-

pearance and motion features (Ristani and Tomasi,

2018). They then use post-processing to globally re-

fine the result. Multi-view tracking combines sev-

eral cues for representation and uses various strate-

gies to solve the tracking problem. Xu et al. used a

trained DCNN to represent the appearance of people,

and combine geometry as well as motion to build a

model. Then for each cue, a composition criterion is

set and then jointly optimised to achieve correct track-

ing (Xu et al., 2016). Hong et al. also combined mul-

tiple cues into a sparse representation (Hong et al.,

2013). Then they treated multi-view tracking as a

sparse learning problem. On the other hand, Morioka

et al. explored a colour-based model to identify ob-

ject correspondences among different views (Morioka

et al., 2006).

From previous works, it is clear that deep learn-

ing dramatically improves the performance for object

detection. However, most of the existing object de-

tection techniques focus on single-view images which

can be affected by pose, occlusion, etc. In contrast,

the multi-view object detection approach considered

in this paper can address this problem by exploiting additional cues from other views. Further, multi-view tracking methods mostly rely on an initial detection result and are limited to videos, making those

techniques unsuitable for tasks lacking temporal in-

formation. We instead propose a method which can be

used across views without temporal information. Our

method leverages the superior detection performance

achieved by recent single-view deep learning archi-

tectures and extends them to the multi-view domain

through embedded components sharing information

between pairs of views.

3 METHODOLOGY

In this paper, we propose to extend a pre-trained

single-view detection network to a multi-view frame-

work with embedded components for object detec-

tion and labelling, thus capitalising on the advan-

tages of recent deep learning architectures while at the

same time overcoming the shortage of labelled multi-view datasets that prevents direct training of multi-view architectures. Our framework builds on Mask R-CNN (He et al., 2017) for extracting candidate bounding boxes. We enforce the epipolar geometry to constrain the locations of instances matched across two views, and compute an efficient person re-identification feature to measure the appearance similarity between matched instances. The Hungarian

algorithm is used in combination with a confidence

strategy to identify matching pairs and assign labels

in polynomial time without the requirement to know

the number of matching pairs. In our approach, we

jointly optimise instance detection and labelling for

optimal performance. In this section, we provide a

description of the framework in the case of pairwise

detection and labelling, leaving the generalisation to

three or more views to future work.

3.1 Framework Overview

The proposed multi-view framework for object detec-

tion and labelling extends the classical Mask R-CNN

approach by scaling it to multiple branches respon-

sible for the processing of the different input views

(two branches corresponding to two calibrated input

views considered in this paper). The weights used for

each branch of this extended framework are obtained

from the original Mask R-CNN which was trained on

the COCO dataset (Lin et al., 2014). The input to

the network consists of two calibrated images of the

scene captured from different viewpoints. The output

for each input image consists of class, bounding box,

mask and label information.

The two input images are first each fed to the deep

VISAPP 2020 - 15th International Conference on Computer Vision Theory and Applications


Figure 2: Overview of our proposed framework. First, candidate bounding boxes for each branch are extracted from a pair of

images respectively utilising a pre-trained Mask R-CNN. Then based on these candidate bounding boxes, an energy function

is defined by combining class, distance and appearance similarity matrices. The class, bounding box, mask and label for the pair of images are then extracted by solving a constrained global assignment problem.

learning network of their corresponding branch to ex-

tract convolutional features. The backbone for feature

extraction is composed of a 50-layer ResNet and a

feature pyramid network (Lin et al., 2017). Then a re-

gion proposal network generates candidate bounding

boxes based on the convolutional features. A confi-

dence score is obtained for each candidate bounding

box. Then based on each candidate bounding box, the

class is obtained using a fully-connected layer and the

mask is obtained using a fully convolutional network

(Long et al., 2015).

The key contribution is the introduction of em-

bedded components which are used to link the two

branches and enforce multi-view constraints. This is

a critical step to ensure that the branches are able to

cooperate when detecting and labelling objects. This

is achieved by defining an energy function enforc-

ing epipolar constraints, appearance similarity and

class consistency amongst pairs of candidate bound-

ing boxes between two input images. To efficiently

solve the problem, this multi-view detection and la-

belling task is treated as a global optimisation prob-

lem with unknown number of assignments. The entire

framework is illustrated in Figure 2.

3.2 Energy Formulation

Let us denote by $I_1$ and $I_2$ the two input images from two views fed to the pipeline. For each image, convolutional features are extracted by a 50-layer ResNet. Subsequently, based on the convolutional features, the region proposal network generates a number of candidate bounding boxes and corresponding confidence scores for each image. Each bounding box represents a candidate instance. Within each branch, these initial processing steps are similar to those in Mask R-CNN. Let us denote by $M$ and $N$ the number of candidate bounding boxes for the two images $I_1$ and $I_2$ respectively. The sets of candidate bounding boxes extracted from $I_1$ and $I_2$ are $B_1 = \{b_{11}, b_{12}, ..., b_{1i}, ..., b_{1M}\}$ and $B_2 = \{b_{21}, b_{22}, ..., b_{2j}, ..., b_{2N}\}$ respectively, where $b_{1i}$ denotes the $i$-th instance in $I_1$ and $b_{2j}$ denotes the $j$-th instance in $I_2$. To optimally match instances across the two views, we combine class, distance and appearance features to represent each instance.

Distance Matrix. To measure the distance between two instances across the two views, we first represent the location of each instance with two points: the mid-point of the top line segment and the mid-point of the bottom line segment of the corresponding bounding box. The location of an instance in $I_1$ can be represented as $(x^t_{1i}, y^t_{1i}), (x^b_{1i}, y^b_{1i})$ and similarly as $(x^t_{2j}, y^t_{2j}), (x^b_{2j}, y^b_{2j})$ in $I_2$. Then we use the epipolar geometry to measure the consistency between the two instances from $I_1$ and $I_2$. For a given point $x$ in one view, there is an epipolar line $l = Fx$ in the other view on which the corresponding point $x'$ must lie, where $F$ is the fundamental matrix relating the two views (Hartley and Zisserman, 2003). $F$ can be obtained either directly from the calibration information, if available, or otherwise indirectly by establishing a sufficient number of correspondences across views.

More specifically, for the $i$-th instance in $I_1$, the epipolar lines $l^t_{1i}$, $l^b_{1i}$ in $I_2$ can be calculated as:

$$l^t_{1i} = F_{12}\,(x^t_{1i}, y^t_{1i}, 1)^\top, \tag{1}$$

$$l^b_{1i} = F_{12}\,(x^b_{1i}, y^b_{1i}, 1)^\top, \tag{2}$$

where $F_{12}$ represents the fundamental matrix from $I_1$ to $I_2$. We denote by $D_{12}(i, j)$ the distance between the top and bottom epipolar lines inferred from the $i$-th instance in $I_1$ and the $j$-th instance in $I_2$. Conversely,


Figure 3: Epipolar geometry for distance measurement. The

left image shows a bounding box in View 1 with the two

points representing its location. The right image shows the

two corresponding epipolar lines in View 2 inferred from

the two points in View 1. In the right image, the instance in

the yellow box denotes the correct correspondence while the

instance in the red box corresponds to an incorrect match.

$D_{21}(j, i)$ denotes the distance between the top and bottom epipolar lines inferred from the $j$-th instance in $I_2$ and the $i$-th instance in $I_1$.

Then for each pair $(i, j)$, the distance between the $i$-th instance in $I_1$ and the $j$-th instance in $I_2$ can be calculated as:

$$D(i, j) = D_{12}(i, j) + D_{21}(j, i), \tag{3}$$

where D denotes the distance matrix. An example for

distance measurement can be seen in Figure 3. As

illustrated in the figure, an object far from the cor-

responding epipolar lines will have a large distance

value compared to the correct matching object.
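Equations (1) to (3) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the text does not specify how the distance to an epipolar line is measured, so the perpendicular point-to-line distance is assumed here; the reverse direction uses the transpose of $F_{12}$, which holds under the convention $x_2^\top F_{12} x_1 = 0$; the function names and the (x_min, y_min, x_max, y_max) box format are hypothetical.

```python
import math

def box_midpoints(box):
    """Top and bottom mid-points of a box given as (x_min, y_min, x_max, y_max)."""
    x_min, y_min, x_max, y_max = box
    x_mid = 0.5 * (x_min + x_max)
    return (x_mid, y_min), (x_mid, y_max)

def epipolar_line(F, point):
    """Line l = F (x, y, 1)^T, returned as (a, b, c) with ax + by + c = 0."""
    x, y = point
    return tuple(F[r][0] * x + F[r][1] * y + F[r][2] for r in range(3))

def point_line_distance(line, point):
    """Perpendicular distance from a point to ax + by + c = 0 (assumed measure)."""
    a, b, c = line
    x, y = point
    return abs(a * x + b * y + c) / math.hypot(a, b)

def transpose(F):
    return [list(col) for col in zip(*F)]

def directed_distance(F, box_src, box_dst):
    """Distance of the top/bottom points of box_dst to the epipolar lines
    induced by the top/bottom points of box_src."""
    top_src, bottom_src = box_midpoints(box_src)
    top_dst, bottom_dst = box_midpoints(box_dst)
    return (point_line_distance(epipolar_line(F, top_src), top_dst)
            + point_line_distance(epipolar_line(F, bottom_src), bottom_dst))

def distance_matrix(F12, boxes1, boxes2):
    """D(i, j) = D12(i, j) + D21(j, i) for every candidate pair."""
    F21 = transpose(F12)  # assumes the convention x2^T F12 x1 = 0
    return [[directed_distance(F12, b1, b2) + directed_distance(F21, b2, b1)
             for b2 in boxes2] for b1 in boxes1]
```

With a fundamental matrix whose epipolar lines are horizontal, a box at the same image height in the other view yields a distance of zero, while a vertically shifted box is penalised in both directions.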

Appearance Similarity Matrix. To match instances

between two views, distance is not sufficient. An

efficient feature that can properly represent appear-

ance in multiple views is therefore necessary. For

multi-view instance labelling, the viewpoint change

can be quite large. The appearance feature should

therefore be robust to large viewpoint change and

variation in illumination. In our method, we use the Local Maximal Occurrence (LOMO) feature together with its associated metric learning for similarity measurement. Both were first proposed by Liao et al. for person re-identification (Liao et al., 2015). The LOMO feature is an efficient feature combining a colour descriptor and a scale invariant local ternary pattern (SILTP). It uses a Retinex algorithm (Jobson et al., 1997) to handle illumination variation and extracts the maximal occurrence horizontally to overcome viewpoint change. In our method, we resize all the candidate bounding boxes to 128 × 48, the same size as used in person re-identification. Then the extracted LOMO features for instances in $I_1$ can be represented as $H_1 = (h_{11}, h_{12}, ..., h_{1i}, ..., h_{1M})$ and as $H_2 = (h_{21}, h_{22}, ..., h_{2j}, ..., h_{2N})$ in $I_2$. According to the metric learning in (Liao et al., 2015), the appearance similarity between two instances in two views can be calculated as:

$$Z(i, j) = (h_{1i} - h_{2j})^T \, W \left(\Sigma_I'^{-1} - \Sigma_E'^{-1}\right) W^T (h_{1i} - h_{2j}), \tag{4}$$

where $Z$ denotes the appearance matrix, $W$ represents a subspace, and $\Sigma_I'^{-1}$ and $\Sigma_E'^{-1}$ represent the inverse covariance matrices of the intrapersonal and extrapersonal variations respectively. The above-mentioned parameters used for appearance similarity calculation were set to the values originally proposed in (Liao et al., 2015), that is, no additional training was performed. The value in $Z$ will be low when two instances are similar.
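The quadratic form in Equation (4) can be illustrated with a small sketch. LOMO feature extraction is not shown, and the matrices passed in are expected to be the learned parameters; the argument `M` stands in for the bracketed difference of inverse covariance matrices. All function names are hypothetical and the plain-list matrix helpers are only meant to keep the example dependency-free.

```python
def mat_vec(A, v):
    """Multiply a matrix A (list of rows) by a vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def appearance_score(h1, h2, W, M):
    """Z = (h1 - h2)^T W M W^T (h1 - h2).

    W is a d x r projection onto the learned subspace and M is the r x r
    matrix standing in for (Sigma_I'^{-1} - Sigma_E'^{-1}).
    Lower values indicate more similar instances.
    """
    diff = [a - b for a, b in zip(h1, h2)]
    projected = mat_vec(transpose(W), diff)   # W^T (h1 - h2), length r
    weighted = mat_vec(M, projected)          # M W^T (h1 - h2)
    return sum(p * q for p, q in zip(projected, weighted))

def appearance_matrix(H1, H2, W, M):
    """Z(i, j) for every pair of feature vectors from the two views."""
    return [[appearance_score(h1, h2, W, M) for h2 in H2] for h1 in H1]
```

Projecting the feature difference once and reusing it on both sides of `M` avoids forming the full d x d metric, which matters because LOMO features are high-dimensional.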

Class Similarity Matrix. An additional consideration for multi-view tracking is that the two instances in $I_1$ and $I_2$ belonging to a pair should belong to the same class. The classes of the candidate bounding boxes in $I_1$ can be represented as $C_1 = \{c_{11}, c_{12}, ..., c_{1i}, ..., c_{1M}\}$ and as $C_2 = \{c_{21}, c_{22}, ..., c_{2j}, ..., c_{2N}\}$ in $I_2$. Therefore, we define the class matrix $C$ as

$$C(i, j) = \begin{cases} 0 & \text{if } c_{1i} = c_{2j} \\ \sigma_{cls} & \text{if } c_{1i} \neq c_{2j}, \end{cases} \tag{5}$$

where $\sigma_{cls}$ is used to penalise inconsistent class assignments.

Energy Function. Finally we combine class, distance and appearance matrices as the representation of similarity between two instances in two views. Then the energy function for multi-view detection and tracking can be defined as $E$ and be represented as:

$$E = w_D \cdot D + w_Z \cdot Z + w_C \cdot C, \tag{6}$$

where $w_D$, $w_Z$ and $w_C$ are the weights for distance, appearance and class respectively. The weights $w_D$, $w_Z$ and $w_C$ are all set to 1 in this paper.
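Equations (5) and (6) amount to an element-wise combination of three M × N matrices, as the following sketch shows. The value of $\sigma_{cls}$ is not stated in this section, so it is left as a required argument; the function names are hypothetical.

```python
def class_matrix(classes1, classes2, sigma_cls):
    """C(i, j) = 0 when the predicted classes agree, sigma_cls otherwise."""
    return [[0.0 if c1 == c2 else sigma_cls for c2 in classes2]
            for c1 in classes1]

def energy_matrix(D, Z, C, w_d=1.0, w_z=1.0, w_c=1.0):
    """E = w_D * D + w_Z * Z + w_C * C, element-wise over M x N matrices.

    The default weights of 1 follow the setting reported in the text.
    """
    return [[w_d * d + w_z * z + w_c * c for d, z, c in zip(rd, rz, rc)]
            for rd, rz, rc in zip(D, Z, C)]
```

Because all three terms are costs (lower is better), a class mismatch simply adds a fixed penalty on top of the geometric and appearance terms.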

3.3 Global Optimisation

Matching pairs across the two views are extracted by minimising the energy function defined in the previous section. We regard this as an assignment problem, as an instance in one view can have at most one matched instance in the other view. However, this is not a classical assignment problem in the sense that the number of matching pairs is unknown. Therefore, to further constrain the problem, eligible pairs are required to satisfy the following two conditions.

Condition 1 (similarity). In practice, not all instances in one view will have a corresponding instance in the other view, since visibility differs between viewpoints. Therefore, a similarity threshold denoted by $\lambda$ is introduced to prevent instances that are not sufficiently similar from being matched. This removes candidate object pairs with a large distance or a large appearance dissimilarity. The value of $\lambda$ varies based on various factors such as instance resolution, illumination variation and distance between viewpoints. This condition prescribes a minimum similarity to avoid selecting non-matching pairs. Therefore, we only consider the elements in the energy matrix $E$ with values smaller than $\lambda$; elements with values larger than $\lambda$ are ignored.

Condition 2 (confidence score). In Mask R-CNN, each candidate bounding box is generated with a confidence score. We define the confidence score sets as $S_1 = \{s_{11}, s_{12}, ..., s_{1i}, ..., s_{1M}\}$ corresponding to the bounding boxes in $I_1$ and $S_2 = \{s_{21}, s_{22}, ..., s_{2j}, ..., s_{2N}\}$ corresponding to the bounding boxes in $I_2$. Due to different resolutions and viewpoints, the same instance may have a low confidence score in one view while having a high confidence score in another view. Compared with detecting instances from a single view, multiple views can provide a richer source of information for detection. In particular, we consider pairs of scores jointly instead of single confidence scores. We define two confidence thresholds for each pair: $\beta_n$ and $\beta_h$. $\beta_n$ is identical to the confidence threshold used in Mask R-CNN and accounts for the possibility that an object may only be visible in one view. In contrast, the threshold $\beta_h$ is introduced to help recover an object which may have a low confidence score in one view but a high confidence score in the other view. For each pair, we adopt the strategy that if the sum of confidence scores is higher than $2\beta_n$ or if either of the scores is larger than $\beta_h$, then the pair is regarded as eligible.
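The eligibility rule of Condition 2 reduces to a single boolean test per candidate pair. In the sketch below the threshold values used in the usage example are illustrative placeholders, not values taken from the paper, and the function name is hypothetical.

```python
def pair_is_eligible(s1, s2, beta_n, beta_h):
    """Condition 2: a pair qualifies if the summed confidence exceeds
    2 * beta_n, or if either single score exceeds beta_h."""
    return (s1 + s2 > 2 * beta_n) or (s1 > beta_h) or (s2 > beta_h)

# Illustrative thresholds only: a confident detection in one view can
# rescue a weak detection of the same object in the other view.
rescued = pair_is_eligible(0.95, 0.30, beta_n=0.7, beta_h=0.9)
rejected = pair_is_eligible(0.60, 0.60, beta_n=0.7, beta_h=0.9)
```

Here `rescued` is true because one score exceeds the high threshold, whereas `rejected` is false because neither the sum nor any single score clears its threshold.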

The global optimisation problem can be formulated as an assignment problem with two additional conditions that are introduced to handle the unknown number of assignments. More specifically, this is achieved by finding the set of assignments $P$ that minimises the previously defined energy function combined with a term aiming to maximise the number of assignments, namely

$$\min_{P} \sum_{(i,j) \in P} E(i, j) + (G - |P|) \cdot \lambda \quad \text{s.t.} \quad \begin{cases} (1)\ s_{1i} + s_{2j} > 2\beta_n \ \text{or}\ s_{1i} > \beta_h \ \text{or}\ s_{2j} > \beta_h \\ (2)\ E(i, j) < \lambda \end{cases} \tag{7}$$

where $G = \min\{M, N\}$ represents the maximum number of assignments in an $M \times N$ matrix, $|P|$ denotes the cardinality of the set of chosen assignments (from a maximum of $G$ possible assignments) and $\lambda$ is the threshold on the pairwise similarity mentioned in condition 1. Solving this problem, we can correctly match objects in two views and recover a bounding box that is mis-detected in one view using the additional cues from the other view.

Figure 4: Illustration of the process to extract matching pairs in the presence of a low confidence score in one view in the case of the football dataset. All three example matching pairs shown are correctly detected across views despite the low confidence score in some of the views which would have resulted in mis-detections using the classical Mask R-CNN approach applied to each view individually.

We use the Hungarian algorithm to find the optimal assignment number $K = |P|$ and the corresponding optimal assignments $P$. The Hungarian algorithm

is widely used in assignment problems as it achieves

the optimal assignment with minimum cost. How-

ever, in this method, the number of potential pairs is

unknown, which means that not all the assignments are eligible. This prevents direct application of the Hungarian algorithm, which would also consider the pairs with values exceeding the threshold $\lambda$ or having low confidence scores when trying to achieve the minimum cost, thereby resulting in assignments which do not satisfy the imposed constraints.

To overcome this problem, we first cap all values in $E$ at $\lambda$. Clamping the values ensures that the assignments corresponding to hypotheses that do not meet the constraints all bear the same penalty. We then apply the Hungarian algorithm to the updated matrix to compute the optimal $G = \min\{M, N\}$ assignments. From the $G$ assignments, only assignments with a value smaller than $\lambda$ and satisfying the confidence score condition (condition 2) qualify. Thus we remove the assignments which have a value equal to $\lambda$ or which do not satisfy the confidence score condition. We then obtain $K$ assignments, each assignment representing a match between two views.
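The cap, solve and filter procedure described above can be sketched as follows. To keep the example dependency-free, an exhaustive search over permutations is used as a stand-in for the Hungarian algorithm; it returns the same optimum but is only practical for a handful of candidates, and in practice a library solver such as `scipy.optimize.linear_sum_assignment` could take its place. Function names and the threshold values in the usage note are hypothetical.

```python
from itertools import permutations

def optimal_assignment(cost):
    """Minimum-cost assignment of min(M, N) pairs in an M x N matrix.

    Exhaustive stand-in for the Hungarian algorithm (small inputs only).
    """
    m, n = len(cost), len(cost[0])
    if m <= n:
        best = min(permutations(range(n), m),
                   key=lambda cols: sum(cost[i][j] for i, j in enumerate(cols)))
        return list(enumerate(best))
    best = min(permutations(range(m), n),
               key=lambda rows: sum(cost[i][j] for j, i in enumerate(rows)))
    return [(i, j) for j, i in enumerate(best)]

def match_pairs(E, scores1, scores2, lam, beta_n, beta_h):
    """Cap E at lam, solve the assignment, then drop ineligible pairs."""
    capped = [[min(e, lam) for e in row] for row in E]
    matches = []
    for i, j in optimal_assignment(capped):
        similar = capped[i][j] < lam                     # condition 1
        confident = (scores1[i] + scores2[j] > 2 * beta_n
                     or scores1[i] > beta_h
                     or scores2[j] > beta_h)             # condition 2
        if similar and confident:
            matches.append((i, j))
    return sorted(matches)
```

For example, with `E = [[1.0, 9.0, 8.0], [7.0, 2.0, 9.5]]`, `lam = 5.0`, scores `[0.95, 0.5]` and `[0.3, 0.6, 0.9]`, and illustrative thresholds `beta_n = 0.7`, `beta_h = 0.9`, the solver proposes the pairs (0, 0) and (1, 1), and the filter keeps only (0, 0) because the second pair fails the confidence condition.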

Finally, the single instances that can only be seen in one view, with no corresponding pair in the other view, are extracted based on the confidence threshold $\beta_n$ in the same manner as in the classical Mask R-CNN formulation. An example of pair assignment with a low confidence score in one view is shown in Figure 4. It demonstrates that our system can correctly assign pairs even when one of them has a low confidence score.


Table 1: Details of the different datasets used for evaluation.

Dataset      Number of views   Pairs of images   Image resolution
Campus       3                 90                360 × 288
Terrace      4                 180               360 × 288
Football     5                 100               1920 × 1080
Basketball   4                 180               360 × 288

4 EXPERIMENTAL EVALUATION

In this section, we start by introducing the datasets,

the methods used for comparison and the metrics used

for performance evaluation. Then we demonstrate the

qualitative and quantitative performance for labelling

multiple objects between views to show the advan-

tages of the proposed multi-view framework in the

context of a range of scenes with different degrees of

complexity.

4.1 Evaluation Protocol

Datasets. Multi-view image datasets of scenes con-

taining multiple objects are required for qualitative

and quantitative evaluation. Due to the lack of ded-

icated multi-view image datasets, we instead use four

challenging multi-view video datasets namely Cam-

pus, Terrace, Basketball and Football from which we

extract a number of image pairs distributed across

the sequences. The Campus, Terrace and Basketball

datasets are from EPFL (Fleuret et al., 2008). For the

Campus and Terrace, we use the corresponding first

sequences. The Football dataset is from (Guillemaut

and Hilton, 2011). These four datasets are challeng-

ing due to the wide baseline, severe occlusions, vary-

ing illumination and small object scale in some cases.

These datasets are well suited to evaluating the performance and robustness of the proposed approach under operating conditions with varying degrees of complexity.

The proposed method is applied on single frames.

For a fair evaluation, each dataset is sampled using

a fixed interval which is dependent on the length of

the sequence. More specifically, we extract 10 frames

from the shorter 100-frame Football video which contains five viewpoints. With $C_5^2 = 10$ possible camera

pair combinations for each frame, this results in a total

of 100 pairs of images in this dataset. Similarly, we

extract 30 frames for each of the Campus, Terrace and

Basketball resulting in a total of 90, 180 and 180 im-

age pairs respectively. The details for these datasets

are listed in Table 1. For each dataset, ground truth is

generated by manually annotating the selected frames

according to the guidelines from VOC2011 annota-

tion (VOC, ).
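The pair counts quoted above follow from choosing two cameras out of the available views for every sampled frame. The short check below reproduces them; the function name is hypothetical.

```python
from math import comb

def image_pairs(num_views, num_frames):
    """Unordered camera pairs per frame multiplied by the sampled frames."""
    return comb(num_views, 2) * num_frames

counts = {
    "Campus": image_pairs(3, 30),      # 3 camera pairs x 30 frames
    "Terrace": image_pairs(4, 30),     # 6 camera pairs x 30 frames
    "Football": image_pairs(5, 10),    # 10 camera pairs x 10 frames
    "Basketball": image_pairs(4, 30),  # 6 camera pairs x 30 frames
}
```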

Comparison. Limited work has been conducted in

multi-view detection and labelling in the image do-

main as most works tend to focus on video analysis

and rely on the available temporal information. This

severely limits the number of baseline approaches

that can be used for comparative evaluation in the

context of images only. Our approach is compared

against two approaches: one based on a sequential

tracking-by-detection approach; another one based

on the probabilistic occupancy map approach (POM)

(Fleuret et al., 2008). The tracking-by-detection ap-

proach uses the same components as in our proposed

approach but in a sequential manner instead of the in-

tegrated framework we proposed. Instead of directly

embedding components into the architecture to con-

nect multi-view cues and jointly optimize detection

and labelling between two views, the sequential ap-

proach first generates bounding boxes for each image

in a pair using the classical Mask R-CNN approach

before further processing to perform the labelling. We

also compare our method with the well-established

probabilistic occupancy map (POM) (Fleuret et al.,

2008) approach which is applied to pairs of views us-

ing the default settings recommended by the authors.

Object detection is restricted to the area of interest to

allow a fair comparison with the POM method.

Evaluation Metrics. Performance is evaluated based

on the selected set of pairs of images for all datasets

considered. In this paper, we use precision and recall

to quantitatively evaluate the performance for multi-

view multi-object labelling. Specifically, we first de-

fine the correct labelling number. To measure the cor-

rect labelling number, we calculate the intersection

over union (IoU) between the detected bounding box

and the ground truth for each instance. We denote

by δ the threshold for the IoU with δ set to 0.5. An instance

appearing in only one view is regarded as a

correctly labelled object if its IoU is larger than δ.

For objects appearing in both views, if the IoU val-

ues for both are larger than δ and they share the same

label, we regard them both as correct. If they do not

satisfy the above conditions or only satisfy one con-

dition, we regard them both as false positive objects.

Then, the precision is defined as the ratio of correctly

labelled instances to all detected instances while the

recall is defined as the ratio of correctly labelled instances

to all ground truth instances. The quantitative result for each

dataset is computed over all of its selected pairs of views. We

report the mean value over all combinations of pairs, which

include not only pairs of adjacent views but also pairs of views

facing opposite directions.
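The labelling criterion described above can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the evaluation code used for the paper: it assumes each ground-truth instance has already been associated with at most one detection per view (the association step is not described here), and the names `Observation`, `count_correct` and `precision_recall` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


@dataclass
class Observation:
    """One ground-truth instance in one view, with its associated detection."""
    gt_box: Box
    det_box: Optional[Box] = None    # None if the instance was missed
    det_label: Optional[int] = None  # cross-view label given to the detection


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_correct(objects: List[Dict[int, Observation]], delta: float = 0.5) -> int:
    """Count correctly labelled instances; each object maps view index -> Observation."""
    correct = 0
    for views in objects:
        obs = list(views.values())
        hits = [o.det_box is not None and iou(o.det_box, o.gt_box) > delta for o in obs]
        if len(obs) == 1:
            # Single-view instance: only the IoU condition applies.
            correct += int(hits[0])
        else:
            # Two-view instance: both IoUs must pass and the labels must agree.
            labels = {o.det_label for o in obs}
            same_label = len(labels) == 1 and None not in labels
            if all(hits) and same_label:
                correct += len(obs)
    return correct


def precision_recall(objects: List[Dict[int, Observation]],
                     num_detections: int,
                     delta: float = 0.5) -> Tuple[float, float]:
    """Precision = correct / all detections; recall = correct / all ground truth."""
    correct = count_correct(objects, delta)
    num_gt = sum(len(views) for views in objects)
    precision = correct / num_detections if num_detections else 0.0
    recall = correct / num_gt if num_gt else 0.0
    return precision, recall


# Toy example: one consistent pair, one single-view instance, one label mismatch,
# plus one spurious detection (6 detections for 5 ground-truth instances).
box = (0.0, 0.0, 10.0, 10.0)
objects = [
    {0: Observation(box, box, 1), 1: Observation(box, box, 1)},
    {0: Observation(box, box, 2)},
    {0: Observation(box, box, 3), 1: Observation(box, box, 4)},
]
precision, recall = precision_recall(objects, num_detections=6)
```

In the toy example, the mismatched pair contributes two detections that count against precision and two ground-truth instances that count against recall, which mirrors the rule that both instances are treated as false positives when either condition fails.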

VISAPP 2020 - 15th International Conference on Computer Vision Theory and Applications


Table 2: Quantitative result for multi-view tracking in the Campus, Terrace, Basketball and Football datasets.

| Method | Campus Precision | Campus Recall | Terrace Precision | Terrace Recall | Basketball Precision | Basketball Recall | Football Precision | Football Recall |
|---|---|---|---|---|---|---|---|---|
| POM (Fleuret et al., 2008) | 72.01 | 70.26 | 67.81 | 49.90 | 59.04 | 22.21 | 49.93 | 17.51 |
| Sequential framework | 95.65 | 93.84 | 79.26 | 75.71 | 77.42 | 70.06 | 78.36 | 70.25 |
| Proposed | 95.25 | 94.29 | 79.24 | 78.30 | 73.26 | 72.33 | 79.14 | 73.94 |

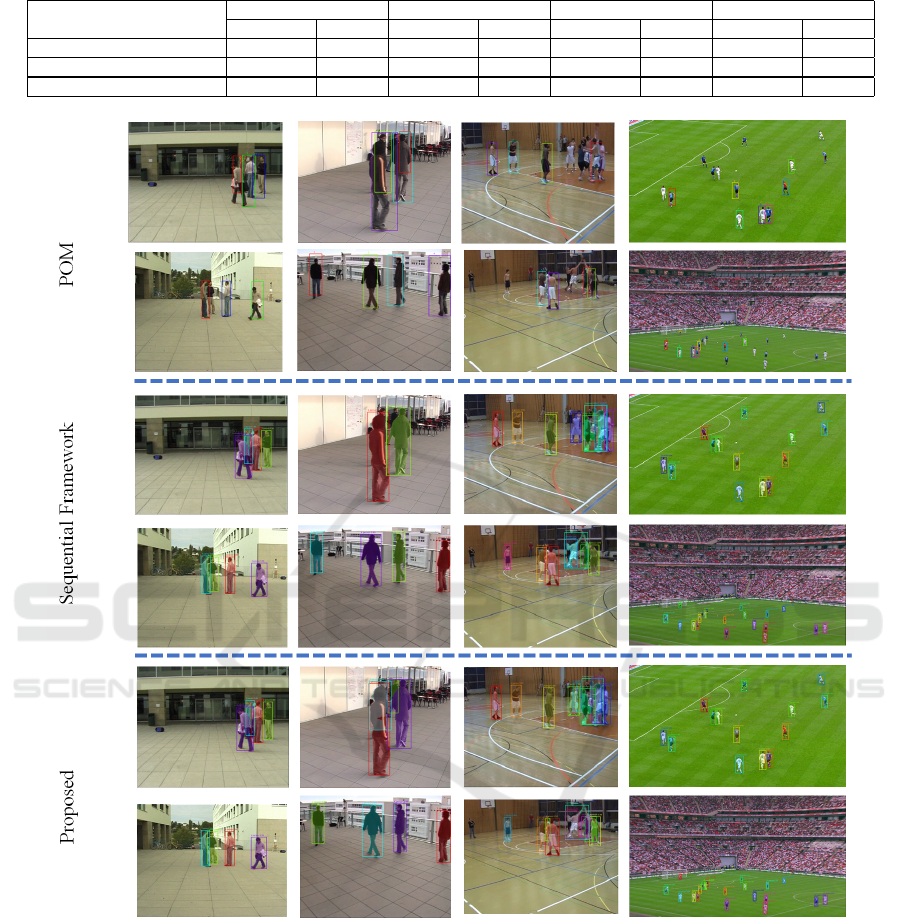

Figure 5: Qualitative result for the POM, the sequential approach and the proposed method. From left to right, we demonstrate

the results in the Campus, Terrace, Basketball and Football datasets. For each pair of images, the instance with same label is

represented in the same colour.

4.2 Quantitative Analysis

The quantitative results for multi-view labelling are

listed in Table 2. In the implementation, we discard

the candidate bounding boxes with confidence score

lower than 0.1 before matching to improve the effi-

ciency. The similarity threshold was set to λ = 300

for Football, λ = 400 for Basketball and λ = 450 for

Campus and Terrace, these being mainly influenced

by the baseline separating viewpoints and image res-

olution. From the table, we can see that the proposed

method outperforms both the sequential and the POM

approaches in all datasets in terms of the recall met-

ric. This indicates that the proposed approach is able

to recover objects which are otherwise undetected by

the other approaches. In terms of precision, the proposed

approach outperforms the POM approach on all datasets, while

it performs overall similarly to the sequential approach. The

gain in precision is most apparent in the case of the Football

dataset, thus demonstrating the advantage of leveraging multi-view cues

and closely coupling the detection and labelling as the

complexity of the scene increases.
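The implementation settings mentioned above (confidence pruning at 0.1 and the per-dataset similarity threshold λ) can be captured in a small configuration sketch. The candidate representation (a dictionary with a `"score"` key) and the names `MIN_CONFIDENCE`, `SIMILARITY_THRESHOLD` and `filter_candidates` are hypothetical; how λ enters the matching step is not shown here.

```python
from typing import Dict, List

# Values taken from the text; the names are illustrative.
MIN_CONFIDENCE = 0.1
SIMILARITY_THRESHOLD: Dict[str, int] = {
    "Football": 300,
    "Basketball": 400,
    "Campus": 450,
    "Terrace": 450,
}


def filter_candidates(candidates: List[dict],
                      min_confidence: float = MIN_CONFIDENCE) -> List[dict]:
    """Discard candidate boxes whose confidence score is lower than the minimum."""
    return [c for c in candidates if c["score"] >= min_confidence]


# Example: only candidates scoring at least 0.1 are passed on to matching.
kept = filter_candidates([{"score": 0.05}, {"score": 0.1}, {"score": 0.9}])
```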

4.3 Qualitative Analysis

Qualitative results for multi-view labelling are shown

in Figure 5. This provides an illustration of the perfor-

mance of the proposed framework with outputs show-

ing the class, bounding box, mask and label on the

Campus, Terrace, Basketball and Football datasets,

with two pairs of images provided for each dataset re-

spectively. Results indicate that the proposed method

performs well even under large viewpoint change,

varying illumination and small instances. Moreover,

the proposed method can also be applied to labelling

objects of multiple classes by replacing the person

re-identification appearance feature with other features.

We demonstrate some qualitative results on pairs of

images including a variety of common object classes

in Figure 6. The pair in the first row of Figure 6 is extracted

from the multi-view car dataset (Ozuysal et al.,

2009), while the other three pairs of images were cap-

tured as part of this project.

5 CONCLUSIONS AND FUTURE

WORK

In this paper, we have extended a state-of-the-art

single-view deep learning network into a joint multi-

view detection and labelling framework without need

for additional training. This is achieved by intro-

ducing a new architecture that extends a pre-trained

network to multiple branches with additional com-

ponents linking the different branches and enforcing

multi-view constraints on the geometry, appearance

and semantic content. By leveraging multi-view cues

and closely integrating them into the proposed archi-

tecture, we demonstrate that it is possible to recover

object instances which are otherwise hard to detect

in single views. The proposed network has the added

benefit of providing coherent multi-view labelling of

the detected instances.

In future work, we will extend the method to

multi-view videos. We anticipate that the temporal

information present in multi-view videos will provide

additional cues to resolve existing ambiguities and

further improve object detection and labelling perfor-

mance. Another interesting direction for future work

would be to train an end-to-end deep neural network

for multi-view object detection and labelling. This

would remove the current reliance on heuristics, but

would require access to large annotated multi-view

datasets which is currently problematic.

Figure 6: Results for the proposed method applied to pairs of images containing various common object classes.

ACKNOWLEDGEMENTS

This work was supported by the China Scholarship

Council (CSC).

REFERENCES

VOC2011 annotation guidelines. http://host.robots.ox.ac.uk/pascal/VOC/voc2011/guidelines.html. Accessed:

06 Jun 2019.

Chang, T.-H. and Gong, S. (2001). Tracking multiple peo-

ple with a multi-camera system. In Proc. IEEE Work-

shop on Multi-Object Tracking, pages 19–26. IEEE.

Dai, J., He, K., and Sun, J. (2015). Convolutional feature

masking for joint object and stuff segmentation. In

Proc. IEEE Conference on Computer Vision and Pat-

tern Recognition, pages 3992–4000.

Erhan, D., Szegedy, C., Toshev, A., and Anguelov, D.

(2014). Scalable object detection using deep neural

networks. In Proc. IEEE Conference on Computer Vi-

sion and Pattern Recognition, pages 2147–2154.

Fleuret, F., Berclaz, J., Lengagne, R., and Fua, P. (2008).

Multicamera people tracking with a probabilistic oc-

cupancy map. IEEE trans. Pattern Analysis and Ma-

chine Intelligence, 30(2):267–282.


Girshick, R. (2015). Fast R-CNN. In Proc. IEEE Interna-

tional Conference on Computer Vision, pages 1440–

1448.

Girshick, R., Donahue, J., Darrell, T., and Malik, J. (2014).

Rich feature hierarchies for accurate object detection

and semantic segmentation. In Proc. IEEE Conference

on Computer Vision and Pattern Recognition, pages

580–587.

Guillemaut, J.-Y. and Hilton, A. (2011). Joint multi-layer

segmentation and reconstruction for free-viewpoint

video applications. International Journal of Computer

Vision, 93(1):73–100.

Hartley, R. and Zisserman, A. (2003). Multiple view geom-

etry in computer vision. Cambridge university press.

He, K., Gkioxari, G., Dollár, P., and Girshick, R. (2017).

Mask R-CNN. In Proc. IEEE International Confer-

ence on Computer Vision, pages 2961–2969.

He, K., Zhang, X., Ren, S., and Sun, J. (2015). Spatial

pyramid pooling in deep convolutional networks for

visual recognition. IEEE trans. Pattern Analysis and

Machine Intelligence, 37(9):1904–1916.

He, K., Zhang, X., Ren, S., and Sun, J. (2016). Deep resid-

ual learning for image recognition. In Proc. IEEE

Conference on Computer Vision and Pattern Recog-

nition, pages 770–778.

Hong, Z., Mei, X., Prokhorov, D., and Tao, D. (2013).

Tracking via robust multi-task multi-view joint sparse

representation. In Proc. IEEE International Confer-

ence on Computer Vision, pages 649–656.

Hosang, J., Benenson, R., Dollár, P., and Schiele, B.

(2016). What makes for effective detection propos-

als? IEEE trans. Pattern Analysis and Machine Intel-

ligence, 38(4):814–830.

Jobson, D. J., Rahman, Z.-u., and Woodell, G. A. (1997). A

multiscale retinex for bridging the gap between color

images and the human observation of scenes. IEEE

Trans. on Image processing, 6(7):965–976.

Levine, S., Pastor, P., Krizhevsky, A., Ibarz, J., and Quillen,

D. (2018). Learning hand-eye coordination for robotic

grasping with deep learning and large-scale data col-

lection. The International Journal of Robotics Re-

search, 37(4-5):421–436.

Li, Y., Wang, S., Tian, Q., and Ding, X. (2015). Feature

representation for statistical-learning-based object de-

tection: A review. Pattern Recognition, 48(11):3542–

3559.

Liao, S., Hu, Y., Zhu, X., and Li, S. Z. (2015). Person re-

identification by local maximal occurrence represen-

tation and metric learning. In Proc. IEEE Conference

on Computer Vision and Pattern Recognition, pages

2197–2206.

Lin, T.-Y., Dollár, P., Girshick, R., He, K., Hariharan,

B., and Belongie, S. (2017). Feature pyramid net-

works for object detection. In Proc. IEEE Conference

on Computer Vision and Pattern Recognition, pages

2117–2125.

Lin, T.-Y., Maire, M., Belongie, S., Hays, J., Perona, P.,

Ramanan, D., Dollár, P., and Zitnick, C. L. (2014).

Microsoft coco: Common objects in context. In Euro-

pean Conference on Computer Vision, pages 740–755.

Springer.

Long, J., Shelhamer, E., and Darrell, T. (2015). Fully con-

volutional networks for semantic segmentation. In

Proc. IEEE Conference on Computer Vision and Pat-

tern Recognition, pages 3431–3440.

López-Cifuentes, A., Escudero-Viñolo, M., Bescós, J.,

and Carballeira, P. (2018). Semantic driven

multi-camera pedestrian detection. arXiv preprint

arXiv:1812.10779.

Luo, W., Xing, J., Milan, A., Zhang, X., Liu, W., Zhao, X.,

and Kim, T.-K. (2014). Multiple object tracking: A

literature review. arXiv preprint arXiv:1409.7618.

Morioka, K., Mao, X., and Hashimoto, H. (2006). Global

color model based object matching in the multi-

camera environment. In IEEE/RSJ International Con-

ference on Intelligent Robots and Systems, pages

2644–2649. IEEE.

Neelima, C., Harsh, A., Aroma, M., and Dhruv, B. (2015).

Object-proposal evaluation protocol is ’gameable’.

CoRR, abs/1505.05836.

Ozuysal, M., Lepetit, V., and Fua, P. (2009). Pose estima-

tion for category specific multiview object localiza-

tion. In Proc. IEEE Conference on Computer Vision

and Pattern Recognition, pages 778–785. IEEE.

Ren, S., He, K., Girshick, R., and Sun, J. (2015). Faster R-

CNN: Towards real-time object detection with region

proposal networks. In Advances in Neural Informa-

tion Processing Systems, pages 91–99.

Ristani, E. and Tomasi, C. (2018). Features for multi-

target multi-camera tracking and re-identification. In

Proc. IEEE Conference on Computer Vision and Pat-

tern Recognition, pages 6036–6046.

Sermanet, P., Eigen, D., Zhang, X., Mathieu, M., Fergus,

R., and LeCun, Y. (2013). Overfeat: Integrated recog-

nition, localization and detection using convolutional

networks. arXiv preprint arXiv:1312.6229.

Szegedy, C., Reed, S., Erhan, D., Anguelov, D., and Ioffe, S.

(2014). Scalable, high-quality object detection. arXiv

preprint arXiv:1412.1441.

Xiao, T., Li, S., Wang, B., Lin, L., and Wang, X. (2017).

Joint detection and identification feature learning for

person search. In Proc. IEEE Conference on Com-

puter Vision and Pattern Recognition, pages 3415–

3424.

Xu, Y., Liu, X., Liu, Y., and Zhu, S.-C. (2016). Multi-view

people tracking via hierarchical trajectory composi-

tion. In Proc. IEEE Conference on Computer Vision

and Pattern Recognition, pages 4256–4265.

Zhao, Z., Zheng, P., Xu, S., and Wu, X. (2018). Ob-

ject detection with deep learning: A review. CoRR,

abs/1807.05511.
