A Framework for System-level Health Data Sharing

Mana Azarm¹, Craig Kuziemsky² and Liam Peyton¹,*

¹ Faculty of Engineering, University of Ottawa, 800 King Edward, Ottawa, Ontario, Canada

² Office of Research Services, MacEwan University, 104 Avenue NW, Edmonton, Alberta, Canada

Keywords: System-level Health Data Sharing, Evaluation, Panel of Experts, Interoperability, Electronic Medical Record.

Abstract: Circle of care is the term used to provide context for the health data sharing that privacy

regulation allows when a diverse team is collaborating to provide care to a patient. We introduce

the concept of system-level health data sharing to capture the totality of health data that exists for a patient in

a healthcare system across multiple health care organizations. MyPHR is a system-level health data-sharing

framework that guides any healthcare system to set up interoperable, patient-centred health data sharing. We

briefly introduce the components of the MyPHR framework and then discuss its evaluation by a panel of experts

who reviewed a demonstration walkthrough of the interfaces and data sharing that the framework supports.

1 INTRODUCTION

Health data can be shared at three levels. First, it can

be shared within the boundaries of a single health care

organization (HCO). In this case, the HCO does not

interact with other HCOs so data is not shared outside

the HCO. Patients can be involved in their care

delivery through an HCO-specific portal that grants

access to their personal health data. We call this

single-HCO health data sharing (Azarm, Peyton,

Backman, & Kuziemsky, 2017).

Second, it can be shared within a group of HCOs

who agree to acquire and use a single system in order

to facilitate collaboration and data sharing. We call

this multi-HCO health data sharing. An example of

multi-HCO sharing is the TakeCare system (Cars, et

al., 2013) that a few hospitals in Stockholm use to

enable data, process, and contextual interoperability

(Kuziemsky, 2013) within this alliance. However, no

interaction is supported with HCOs outside of this

alliance.

Finally, at the third level there is HCO-

independent data sharing. Any HCO can share and

access health data through a medium that allows the

flow of data from and to the existing Electronic

Medical Record (EMR) systems. Although not as

successful as we would expect, Microsoft

HealthVault is an example of HCO-independent data

sharing (Sunyaev, Kaletsch, & Krcmar, 2011).

* https://engineering.uottawa.ca/people/peyton-liam

A framework for system-level health data sharing

offers the potential to support better patient-centred

care (Haux, 2006). A circle of care (Donga, Samavia,

& Topaloglou, 2015) refers to a patient and a team of

healthcare providers who are providing care to the

patient in order to address a common healthcare goal.

A system-level circle of care covers all healthcare

providers across an entire health system (Gaynor, Yu,

Andrus, Bradner, & Rawn, 2014) who are providing

care to a single patient to address multiple goals

without necessarily collaborating or being aware of

each other (Azarm, Backman, & Kuziemsky, 2019).

1.1 Interoperability

Interoperability can be defined as the ability to

exchange and use information across different

organizations, enabling cooperation between their

entities (Benson & Grieve, 2016). Three types of

healthcare interoperability have been defined: data,

process, and contextual (Kuziemsky, 2013). Data

interoperability refers to the syntactical level of data

and whether it is machine interpretable across different

systems. Process interoperability focuses on the system

users and the processes they engage in. Process

interoperability requires system users to be able to use

and interpret data across the various tasks of health care

delivery. Finally, contextual interoperability refers to

the political and social environment where the

healthcare providers operate and includes different

legislation and/or social norms that can help or hinder

the shared care provided to a patient when delivered

across different providers or settings.

Azarm, M., Kuziemsky, C. and Peyton, L.

A Framework for System-level Health Data Sharing.

DOI: 10.5220/0008986305140521

In Proceedings of the 13th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2020) - Volume 5: HEALTHINF, pages 514-521

ISBN: 978-989-758-398-8; ISSN: 2184-4305

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

1.2 Quadruple Aim

Berwick et al. introduced the Triple Aim framework

for improving the healthcare delivered to individuals

in a balanced manner. The Triple Aim comprises three

principles: improving the individual experience of

care, improving the health of populations, and

reducing the per capita cost of care for populations

(Berwick, Nolan, & Whittington, 2008). However,

the success of the Triple Aim principles depends on

effective healthcare organizations and an effective healthcare

workforce (Sikka, Morath, & Leape, 2015).

Therefore, the quadruple aim framework emerged by

introducing a fourth principle of improving the

experience of providing care (Sikka, Morath, &

Leape, 2015). The fourth principle is about care of the

providers and enhancing the work life of providers to

address emerging issues such as physician burnout

(Bodenheimer & Sinsky, 2014). Through a

quantitative study, Quanjel et al. showed how the

patient-perceived quality of care is improved by a

Primary Care Plus initiative in the Netherlands (Quanjel,

Spreeuwenberg, Struijs, & Baan, 2019).

1.3 Governance

Healthcare in Canada is legislated and funded by the

federal government and administered provincially

(Madore, 2005). Electronic health record (EHR)

governance models vary from country to country and

even from region to region. Various Scandinavian

countries, where health services are publicly funded,

have embarked on a successive path of regulating,

mandating and advancing use of electronic health

records. Their endeavours started in the 1990s to push

healthcare providers to deploy electronic health

record systems and continued until recent years when

they are moving towards the implementation of

national electronic health record systems. As an

example, Denmark published various national IT

strategies consisting of national action plans for

adoption of EHRs, pushing hospitals to employ EHR

systems, and governing and harmonizing all EHR

systems in the country (Kierkegaard, 2015).

Although healthcare is delivered by the private

sector in the United States, the Health Information

Technology for Economic and Clinical Health Act

(HITECH) provisions of the American Recovery

and Reinvestment Act (ARRA) facilitated the adoption of

EHR systems by providing financial incentives for

those who succeed at digitizing their health data,

automating their internal processes, and collaborating

seamlessly with other healthcare providers

(Marcotte, Kirtane, Lynn, & McKethan, 2014).

US hospitals embarked on a healthcare

automation journey in the 1960s with the purchase of

mainframes to handle their administrative functions

(Collen & Ball, 2015). They continued purchasing

software to handle different business and administrative

functions. In the 1990s, hospitals were operating with

hospital information systems (HISs) and electronic

patient record (EPR) systems when

interoperability became an issue. With new

vendors’ promise of better inter-organizational

interoperability, most hospitals could benefit from

the exchange of information among their different

acquired software systems after 2010.

Multi-Hospital Information Systems (MHIS),

systems serving three or more hospitals, emerged in

the late 1980s. They often entailed translation databases

and other technology to support the exchange of

information and forms across the organizations

involved (Collen & Ball, 2015). Meditech and Epic

are examples of EHR systems used worldwide.

2 MyPHR: A SYSTEM-LEVEL HEALTH DATA SHARING FRAMEWORK

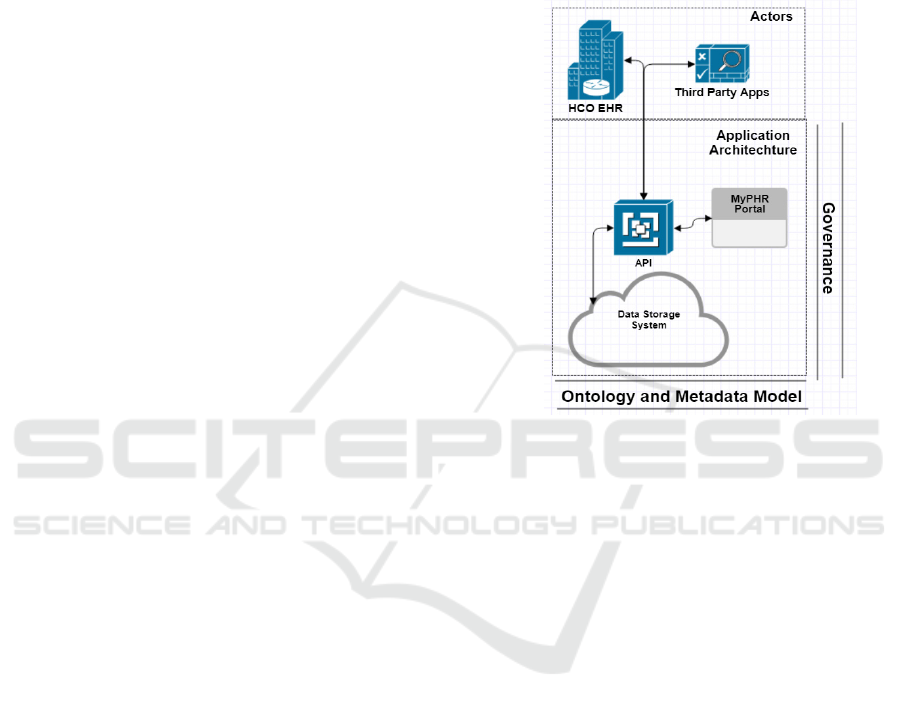

MyPHR is an application framework that guides a

healthcare system in setting up a system-level patient-

centered infrastructure so that they can have a

connected healthcare platform (Azarm, Backman, &

Kuziemsky, 2019). It has three components. The first is

an information model that defines exactly what type

of information everyone shares and, in particular,

has a simple definition of an episode so that all

stakeholders can understand what is shared without

being an expert. The second is an architecture that

defines a cloud-hosted infrastructure, like Gmail,

where the information can be stored and coordinated

amongst all participants. The third is the

governance model, which defines who can access any

piece of information in the system, how and when,

and who owns and maintains the system.

Healthcare systems in different political contexts

could use this framework to build a patient-centric

interoperable health information sharing platform.

MyPHR empowers patients to be more involved in

their care delivery: they have access to any piece of

information that’s shared on this platform about them,

they can add/edit some information, and they can

always audit who has accessed their information and

where.
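The governance component described above (who may access a piece of information, and an audit trail that the patient can inspect) can be illustrated with a minimal Python sketch. All names here (`Episode`, `AccessEvent`, `GovernancePolicy`, `can_access`, `audit_trail`) are hypothetical and are not part of MyPHR. The rule that a provider may read a patient's data only while an episode with that patient is active is an assumption made for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional


@dataclass
class Episode:
    """Hypothetical episode of care linking one patient to one provider."""
    patient_id: str
    provider_id: str
    start: datetime
    end: Optional[datetime] = None  # None means the episode is still active

    def is_active(self, at: datetime) -> bool:
        return self.start <= at and (self.end is None or at < self.end)


@dataclass
class AccessEvent:
    """One audit entry: who tried to read whose data, when, and the outcome."""
    patient_id: str
    accessor_id: str
    at: datetime
    granted: bool


@dataclass
class GovernancePolicy:
    episodes: List[Episode] = field(default_factory=list)
    audit_log: List[AccessEvent] = field(default_factory=list)

    def can_access(self, accessor_id: str, patient_id: str, at: datetime) -> bool:
        # Assumed rule: patients always see their own data; providers need
        # an episode with the patient that is active at the time of access.
        if accessor_id == patient_id:
            granted = True
        else:
            granted = any(
                e.patient_id == patient_id
                and e.provider_id == accessor_id
                and e.is_active(at)
                for e in self.episodes
            )
        # Every attempt is recorded so the patient can audit it later.
        self.audit_log.append(AccessEvent(patient_id, accessor_id, at, granted))
        return granted

    def audit_trail(self, patient_id: str) -> List[AccessEvent]:
        return [e for e in self.audit_log if e.patient_id == patient_id]
```

Recording denied attempts as well as granted ones is a design choice of this sketch; it lets a patient see every attempt to reach their record, not only the successful ones.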

2.1 Demonstration Walkthrough

In order to test our proposed MyPHR architecture, we

developed a prototype of the MyPHR portal pages as

well as a RESTful API that helps collect and share

data formatted in accordance with our proposed

ontology. Although our ontology is mainly expressed

in a relational, schema-bound notation, our

prototype data is stored in a NoSQL database

(Google Firebase). This speaks to the scalability of

our ontology.

Our MyPHR prototype web portal and API

include methods to facilitate Client actions such

as viewing care history, updating health profile

information, viewing active practitioners, etc. The

prototype portal has methods that send requests to pull a

patient’s records from our API, collect information

entered by patients, and send it to the API for

permanent storage in a cloud environment. The API

has methods to register a patient with a healthcare

provider (through registering an episode), retrieve a

patient’s care history, update a patient’s care record,

and terminate a patient’s episode of care with a

healthcare provider.
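The four API operations listed above can be sketched as an in-memory Python service. This is a minimal illustration under stated assumptions, not the prototype's actual API: the class and method names (`EpisodeRecord`, `EpisodeService`, `register_episode`, `care_history`, `update_care_record`, `terminate_episode`) and the fields of the record are hypothetical, and the real prototype exposes these operations over REST with Firebase storage rather than a Python dictionary.

```python
from dataclasses import dataclass, field
from datetime import date
from itertools import count
from typing import Dict, List, Optional


@dataclass
class EpisodeRecord:
    """Hypothetical minimal episode: one patient under one provider's care."""
    episode_id: int
    patient_id: str
    provider_id: str
    opened: date
    closed: Optional[date] = None
    notes: List[str] = field(default_factory=list)


class EpisodeService:
    """In-memory stand-in for the four API operations named in the text."""

    def __init__(self) -> None:
        self._episodes: Dict[int, EpisodeRecord] = {}
        self._next_id = count(1)

    def register_episode(self, patient_id: str, provider_id: str,
                         opened: date) -> EpisodeRecord:
        # Registering a patient with a provider is done by opening an episode.
        record = EpisodeRecord(next(self._next_id), patient_id, provider_id, opened)
        self._episodes[record.episode_id] = record
        return record

    def care_history(self, patient_id: str) -> List[EpisodeRecord]:
        # All episodes for the patient, oldest first, across every provider.
        records = [e for e in self._episodes.values() if e.patient_id == patient_id]
        return sorted(records, key=lambda e: e.opened)

    def update_care_record(self, episode_id: int, note: str) -> None:
        record = self._episodes[episode_id]
        if record.closed is not None:
            # Assumption of this sketch: a terminated episode is read-only.
            raise ValueError("episode is already terminated")
        record.notes.append(note)

    def terminate_episode(self, episode_id: int, closed: date) -> None:
        self._episodes[episode_id].closed = closed
```

Because the history is keyed by patient rather than by provider, a single call returns episodes from every organization, which is the system-level view the framework aims for.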

3 METHODOLOGY

Our research follows the guidelines of Design

Science Research (DSR) (Gregor, Müller, & Seidel,

2013) where we aim at improving the personal health

care experience through developing Health

Information Systems artifacts (Baskerville, Baiyere,

Gregor, Hevner, & Rossi, 2019). DSR is very relevant

when it comes to developing information system

artifacts that are innovative and solve real-world

problems (Hevner & Chatterjee, 2015). Our artifacts

include an architectural framework, web portal, API,

governance principles, and an ontology. Moreover,

through a literature review, we defined a customized

set of evaluation criteria for system-level healthcare

data sharing systems.

As per DSR guidelines, the project should start

with the specification of a problem/opportunity

(Baskerville, Baiyere, Gregor, Hevner, & Rossi,

2019). Therefore, through a Systematic Literature

Review (SLR) we aimed to identify the gaps in

collaborative health care, especially in Ontario, with

the possibility of finding a platform-independent

solution for interoperability (Azarm, Kuziemsky, &

Peyton, 2015). This step was achieved by studying

the current body of knowledge (Gregor, Müller, &

Seidel, 2013). At the end of the first iteration, we

proposed an architecture, defined a set of attributes

(minimum dataset) that flows through the proposed

architecture, and designed a web portal and an

underlying RESTful API.

Figure 1: MyPHR High Level Architecture.

In our second iteration of DSR, we honed in on

the idea of an ontology, and how we can make our

minimum data set more streamlined. Our ontology

was developed using an ontology development

methodology (Noy & McGuinness, 2001), and we

benchmarked it against HL7 FHIR (Azarm & Peyton, 2018).

Following Noy and McGuinness’s guidelines, we

benchmarked against FHIR in order to conform with

a widely used health data standard.

The third iteration was conducted to address

regulation, security and authorization concerns, with a

focus on developing a set of data governance

principles. We studied a

data sharing application (P2H) that a group of researchers at

Elizabeth Bruyère hospital in Ottawa had developed

through a third-party software company called NexJ,

and used it as a case to test our proposed

governance principles against the MyPHR framework (Azarm, Backman, &

Kuziemsky, 2019). At the end of this iteration, we

came up with the concept of a “system-level circle of

care”.

Our fourth iteration involved prototyping the

architecture to pinpoint the actors and the interfaces.

We conducted a usability study through a Patient

Case Study that showcased our expectations from the

HEALTHINF 2020 - 13th International Conference on Health Informatics


prototype and how they were met. At the end of this

cycle, we conducted an evaluation with a

demonstration walkthrough reviewed by a panel of

experts (Agarwal, et al., 2016). We gathered a review

panel consisting of 5 experts in the healthcare

technology domain. This panel of experts included a

general practitioner, a nurse practitioner, two

healthcare technology directors, and a healthcare

management scholar and thought leader.

4 EVALUATION

4.1 Evaluation Criteria

We introduced a set of evaluation criteria to evaluate

frameworks and approaches in healthcare data

sharing. The evaluation criteria can be leveraged to

analyse any data sharing framework in the same

domain. We used the evaluation criteria to evaluate

our proposed application framework and compared it

against some related works.

The set of evaluation criteria identified in this

section were derived from:

1. Analysis of the related literature

2. Gap analysis of the current practices in hospital

and community care

3. Government regulations and industry norms and

concerns

4. Feedback from domain experts and practical

experience

5. Experiences we acquired while working through

our case studies.

We divided our evaluation criteria into 3 categories

depending on the domain and/or the source.

4.1.1 System-level Interoperability

System-level interoperability is our first set of

evaluation criteria where we discuss three aspects of

interoperability i.e. data, process, and context.

The main question in data interoperability is: Is

sharing of data available across various platforms?

With the patient’s care being transferred from one

HCO to another, there follows a need to transfer their

health data as fast as possible in a secure manner. The

systems that can enable their users to share data

regardless of their platform will get a full score for

this criterion.

For process interoperability we ask: Are we able

to align and map processes across the boundaries of

organizations? When a system is not capable of

collaborating with other organizations and aligning its

processes with those of other healthcare providers,

it gets an NA. When the system has established

an alliance with a group of other healthcare systems

and they can all map their processes to each other,

it is partially interoperable, hence a P is

assigned. Finally, when processes can be translated

and mapped with any organization, that system

gets an A.
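The three-level process interoperability rating described above can be written as a small decision function. This is a sketch only: the enum `ProcessRating`, the function `rate_process_interoperability`, and its two boolean parameters are hypothetical names introduced here, and reducing each level to a single yes/no input is a simplifying assumption.

```python
from enum import Enum


class ProcessRating(Enum):
    NA = "NA"  # cannot align processes with other organizations
    P = "P"    # partial: processes map only within an established alliance
    A = "A"    # processes can be mapped with any organization


def rate_process_interoperability(maps_within_alliance: bool,
                                  maps_with_any_org: bool) -> ProcessRating:
    # Check the strongest capability first so that a system able to map
    # processes with any organization is never downgraded to partial.
    if maps_with_any_org:
        return ProcessRating.A
    if maps_within_alliance:
        return ProcessRating.P
    return ProcessRating.NA
```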

Context interoperability investigates whether a system

is capable of operating efficiently across different

political/legislative contexts. From this perspective,

a perfect system is capable of crossing various

political environments e.g. provinces or countries

without losing its cohesion and seamless integrity.

4.1.2 System-level Quality of Care

The Quadruple Aim framework that aims at

improving the healthcare experience for all

stakeholders is employed for this category of criteria.

The evaluation criteria in this category are also a

product of the Canadian Institute for Health

Information (CIHI) framework. According to CIHI,

quality care is evidence-based, patient-centric,

timely, and safe (CIHI, 2011). The key to evidence-

based care is to be able to share previous experiments

and experiences. Below, we introduce the criteria in

this category.

Evidence-based: this metric comes from the

CIHI framework. It focuses on how easy it is to

support medical evidence or other processes across

different platforms. The perfect system supports

sharing of the evidence/process regardless of

platform type.

Right Level of Details: the fourth principle of the

quadruple aim framework is about improving the

experience of providing care. The amount of data we

gather in this day and age floods healthcare

workers with an enormous amount of information that

can leave them overwhelmed and have counterproductive

consequences. What can support many

healthcare workers is just the right amount of data at

the right time. Therefore, we will examine if the

framework under investigation can facilitate the

provision of just the right level of details in order to

support a healthcare worker in fulfilling their duties.

Patient-centric: this criterion crosses the two

frameworks of CIHI (patient-centric) and Quadruple

aim (patient’s care experience). It measures how

informed a patient is in their care delivery process.

However, it’s not only about the quantity of data;

it’s also about the breadth of data across care

episodes and organizations. A perfect system would

enable the patients to access any piece of information

available on the data sharing platform.


Timely: this criterion from the CIHI framework

captures the essence of the two Quadruple Aim

principles of patient and provider experience. If

required information is received in a timely manner,

this could have a positive impact on the experience of

the individuals on both ends of care delivery. It

assesses if system users (patients or providers) have

the means to autonomously access the information in

a timely manner. Here, the ideal system makes the

data available and accessible in real-time as they are

generated.

Cost: this criterion comes from the Quadruple

Aim framework. It focuses on how the overall cost of

healthcare can be decreased. Therefore, the question

is whether the system yields any net savings. If the costs

of acquiring the system are less than those of alternative

ways of solving the same problems, that health data

sharing system is regarded as successful.

Health: another Quadruple Aim criterion. Here

we talk about improving the health of the population.

Does the system under investigation help achieve this

goal in any way?

4.1.3 System-level Privacy and

Confidentiality

Within this category we introduce two criteria; one

for regulation compliance, and one for addressing

privacy concerns.

Regulations Compliant: in this section we

evaluate systems based on how compliant or

adaptable they are to health regulations. If a system

would not pass regulations without major

modifications, it is deemed a failure.

Privacy: personal health information is

considered one of the most confidential types of

information. Therefore, it’s important that the system

we employ keeps integrity and confidentiality

requirements at the forefront of its specifications.

4.2 Panel of Experts Review

We gathered 5 experts to review a prototype of the

MyPHR framework. In order to provide context for

the demo, we first gave an overview of the MyPHR

framework and its three major components:

ontology, governance and architecture. Then, we

walked them through a demo of a MyPHR

framework-compliant prototype software application.

During the framework overview and demo

walkthrough there was much free-form discussion

and feedback. At the end of the session we asked the

experts to give us more structured feedback, using the

evaluation criteria we have set as the “objectives to

meet” for our research. For each criterion, we gave a

rubric that helped quantify the evaluation of the

criterion. The experts were also encouraged to give us

comments and free form feedback, either specific to

a criterion, or not.

4.2.1 Evaluation Rubric

Our evaluation rubric lists the evaluation criteria that

we laid out in section 4.1. We asked each expert to

rate our framework against our evaluation criteria

using a 5-point scale with the following values: Not

Satisfactory, Below Average, Average, Above

Average, or Satisfactory. To quantify our score

levels, we added a numerical scale (1-10) to our 5-

point scale of “Non-Satisfactory” to “Satisfactory”

levels. For example, a non-satisfactory evaluation of

a criterion could bear a numeric score of 1 or 2. We

also invited our experts to add any additional

comment in a free text and descriptive format. Our

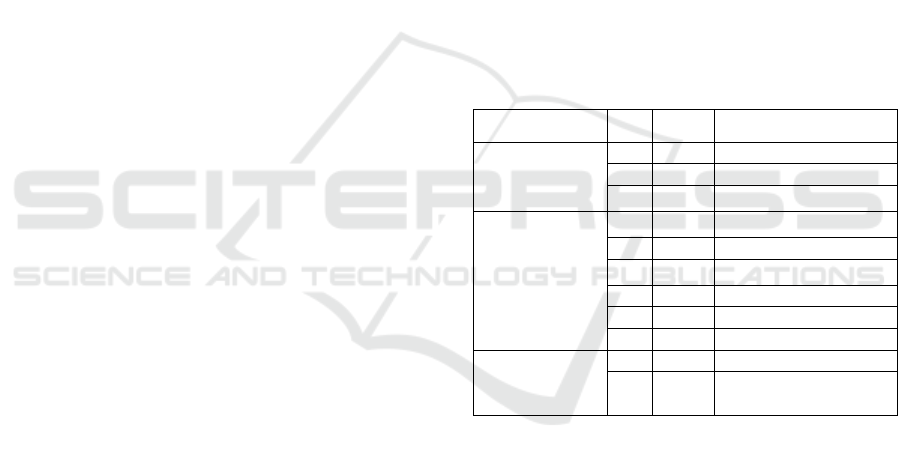

evaluation rubric is shown in Table 1.

Table 1: Evaluation rubric.

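| Domain | ID | Weight | Criteria |
| --- | --- | --- | --- |
| System-Level Interoperability | I1 | 5 | Data interoperability |
| System-Level Interoperability | I2 | 3 | Process interoperability |
| System-Level Interoperability | I3 | 1 | Context interoperability |
| System-Level Quality of Care | Q1 | 1 | Evidence-based |
| System-Level Quality of Care | Q2 | 1 | Right level of details |
| System-Level Quality of Care | Q3 | 5 | Patient-centric |
| System-Level Quality of Care | Q4 | 5 | Timely |
| System-Level Quality of Care | Q5 | 1 | Cost |
| System-Level Quality of Care | Q6 | 1 | Health |
| System-Level Privacy and Confidentiality | P1 | 1 | Privacy |
| System-Level Privacy and Confidentiality | P2 | 5 | Regulations compliance |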
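The rubric and its 5-point scale can be captured as plain data, which makes the mapping between labels and numeric scores explicit. In the sketch below, only the first band (a Not Satisfactory rating corresponds to 1 or 2) is stated in the text; the remaining bands assume the 1-10 scale is split evenly across the five labels. The names `SCALE`, `RUBRIC` and `label_for` are hypothetical.

```python
from typing import Dict, Tuple

# Label -> inclusive (low, high) band on the 1-10 scale.
# Only the 1-2 band is given in the text; the others are assumed to be even.
SCALE: Dict[str, Tuple[int, int]] = {
    "Not Satisfactory": (1, 2),
    "Below Average": (3, 4),
    "Average": (5, 6),
    "Above Average": (7, 8),
    "Satisfactory": (9, 10),
}

# Criterion ID -> (criterion name, weight), copied from Table 1.
RUBRIC: Dict[str, Tuple[str, int]] = {
    "I1": ("Data interoperability", 5),
    "I2": ("Process interoperability", 3),
    "I3": ("Context interoperability", 1),
    "Q1": ("Evidence-based", 1),
    "Q2": ("Right level of details", 1),
    "Q3": ("Patient-centric", 5),
    "Q4": ("Timely", 5),
    "Q5": ("Cost", 1),
    "Q6": ("Health", 1),
    "P1": ("Privacy", 1),
    "P2": ("Regulations compliance", 5),
}


def label_for(score: float) -> str:
    """Return the scale label whose band contains the numeric score."""
    if not 1 <= score <= 10:
        raise ValueError("score must be between 1 and 10")
    # Bands are listed in ascending order, so the first upper bound that
    # is not exceeded identifies the label; half points round upward.
    for label, (_, high) in SCALE.items():
        if score <= high:
            return label
    raise AssertionError("unreachable: 10 is the highest upper bound")
```

Treating half-point scores such as 8.5 as belonging to the next band up is a choice of this sketch; the text does not say how fractional scores were labelled.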

4.2.2 Expert Feedback

During the sessions and through written comments,

we received some valuable insights and suggestions

that we have summarized below:

1. There should be patient identification and

matching logic. In Ontario, the OHIP number does

not cover individuals from the RCMP and the

military.

2. Experts were interested in seeing a place for

smart devices in our proposed architecture.

3. Experts were interested in seeing how

appointments fit into the Episode concept e.g. a

list for appointments that is separate from the list

of episodes.

4. Experts were interested in a different

presentation format for the health data. For

example: Diagnoses in reverse chronological order,

and with a short label/comment.

5. Caregiver information was not clear about

connotations such as power of attorney for

personal care; hierarchy of substitute decision

makers; primary care giver.

6. Experts asked which data points should be editable by

Clients, e.g. diet, advance directives.

7. The information presented on the prototype

portal such as dates did not always follow a

consistent format.

8. Experts were interested to see practitioners’

qualification/speciality level of primary

physician on the prototype portal.

9. Experts were looking for more clarification

around service language, as it can be taken to mean

any of the following: preferred service

language, mother tongue, actual language of

service.

10. Experts were not certain where user comments

can be placed.

11. Experts needed more clarity on meta-data.

12. The governance principles around HICs’

visibility on patient data were discussed. Experts

suggested allowing HICs’ access to patient data

to extend beyond the active status of their episodes.

13. Experts identified an opportunity for new and

improved functionalities that can be added to

HCO electronic record systems. They thought

the framework as presented likely encounters

few technical hurdles, and provides for a

platform agnostic approach for sharing data

across multiple healthcare information systems.

14. The experts suggested that while the framework

and API approach would provide a near real-time

solution, the various HCOs may decide

to interact with the data either in batch mode

or through their preferred triggers for flowing information

across. Each HCO may also make

determinations as to which episodes of care

would be included in the information flow,

potentially causing inconsistency in the

framework’s picture of the patient/client.

15. The adoption barriers identified by experts

were: Financial barriers for HCOs to invest in

building the interfaces and rules to submit data

through to the API; Political barriers in

determining where the primary Health

Authority role, at a patient-centric service,

should lie; Perception of the shared data and

what it is providing, i.e. some HCOs will want

to increase the scope of what is shared, while

others may not be willing to participate; Privacy

controls may need to be enhanced, in that the

clients and/or HCOs will want to have some

control as to which episodes of care are visible

across all partners in the system, or just to some.

16. Experts also identified a few barriers to

implementation: Political barriers, where a

centralized Health Authority to host the

framework and centralized/consolidated data

needs to be determined. Within some regions,

the pendulum swings as to whether a centralized

or decentralized approach to a Health Authority

that would hold client level data, would be put

in place. HCOs, particularly individual family

physicians and Family Health Groups, may be

reluctant to participate, most likely because of cost.

The cost to update their systems to interface

with the API would be imposed on the individual

practices in many regions if this were a

mandated system. To solve this, the

Health Authority may need to help fund vendors

to build interfaces, and thus help bring in the

smaller HCOs.

17. Some experts were not sure if the criterion “right

level of detail” is from the point of view of patients,

physicians, HCOs or the regulator.

4.2.3 Evaluation Results

After the Panel of Expert Review Session, we

reviewed both the structured and unstructured

feedback. The unstructured feedback consisted of the

verbal comments made during the session (notes were

taken) and the written comments appended to the

structured feedback that was laid out in section 4.2.2.

We categorized the comments into 5 groups. In

the review session, we had presented 4 components to

the experts: the demo data, the look and feel of the

prototype application, the MyPHR framework, and

our proposed evaluation criteria. Naturally, we

wanted to identify and isolate the comments related

to each of the 4 aforementioned review session

components. Therefore, we created 4 categories of

usability (for prototype application), demo data,

framework, and criteria. Furthermore, we noticed that

some expert feedback bore some degree of

misunderstanding or communication problems.

Therefore, we added a fifth category that

encompasses the comments entailing a

misunderstanding of a notion.

1. Prototype Usability: Feedback on the prototype

application (look and feel and organization)

2. Demo data: Feedback on the demo data used in

the presentation

3. Framework: Feedback on the elements of

MyPHR framework


4. Criteria: Feedback on the appropriateness,

relevance or poor definition, or

misunderstanding of the criteria we had

specified to use for evaluation

5. Misunderstanding: The comments that indicated

certain aspects of the framework overview or

demo were either not properly communicated or

not properly understood in terms of the context,

or objectives.

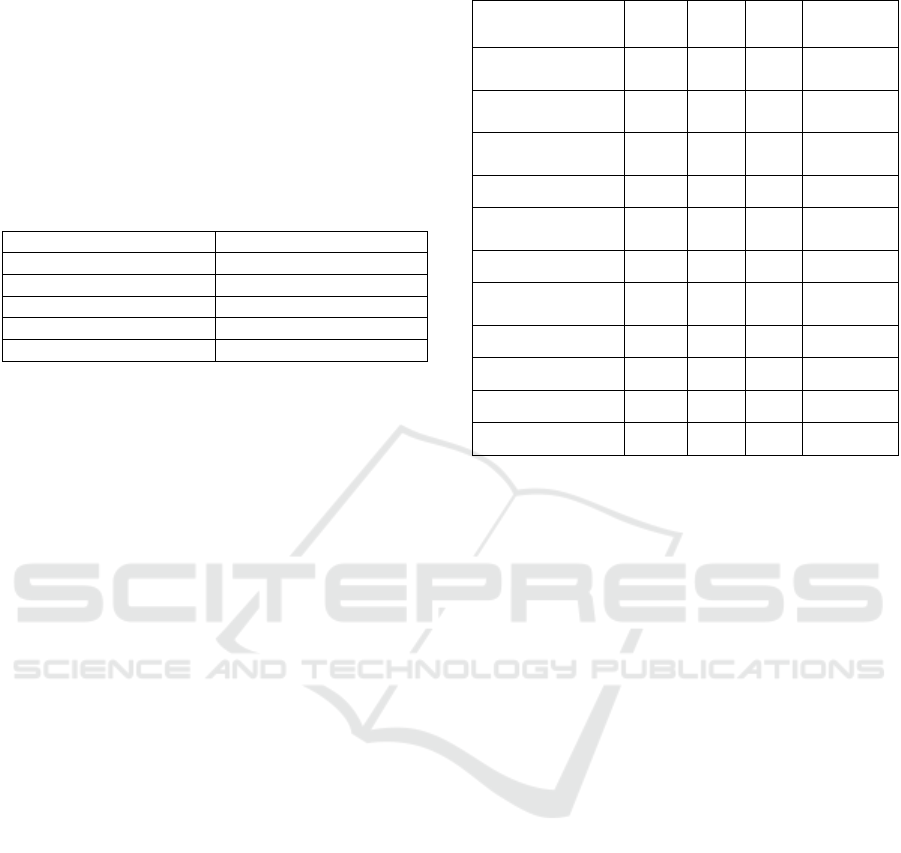

Table 2: Comment categories.

| Category | Comment ID |
| --- | --- |
| Prototype Usability | 7, 8 |
| Demo Data | 3, 4, 6, 8, 9, 10 |
| Framework | 1, 2, 11, 12, 13, 14, 15, 16 |
| Criteria | 17 |
| Misunderstanding | 5 |

Then, we analyzed the scores given to each

criterion by experts. Of all the

scores we received from the completed evaluation rubrics

(Table 1), the experts rated the prototype “above average” 67% of the

time, “average” 19% of the time,

and “below average” 14% of the time.

When a criterion had a score variability (i.e.

difference between the highest score and the lowest

score given) of less than 4 among experts, we deemed

that criterion as having experts’ consensus, and when

there was 4 or more score variability, we deemed that

as divergent opinions about that criterion. We should

point out that when the score variability was between

3 and 5, we manually inspected the results to see

where the majority of the experts landed. As

summarized in Table 3, experts had consensus on 5

criteria and had divergent opinions on the other six.

Based on score averages, the experts scored MyPHR

average or above average on all criteria.
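The consensus rule above can be checked mechanically against the minimum and maximum scores reported in Table 3. The sketch below is an illustration rather than the authors' analysis script: the names `SCORE_RANGES`, `classify` and `needs_manual_inspection` are hypothetical, and treating "between 3 and 5" as inclusive at both ends is an assumption.

```python
from typing import Dict, Tuple

# Criterion -> (min, max) expert score, copied from Table 3.
SCORE_RANGES: Dict[str, Tuple[float, float]] = {
    "Data interoperability": (6, 9.5),
    "Process interoperability": (4, 8),
    "Context interoperability": (4, 6),
    "Privacy": (4, 9.5),
    "Regulations compliance": (8, 9.5),
    "Evidence-based": (4, 8),
    "Right level of details": (4, 8),
    "Patient-centric": (8, 9.5),
    "Timely": (7, 9.5),
    "Cost": (5, 9.5),
    "Health": (2, 8),
}


def classify(min_score: float, max_score: float) -> str:
    """Apply the rule from the text: variability below 4 means consensus."""
    variability = max_score - min_score
    return "consensus" if variability < 4 else "divergent"


def needs_manual_inspection(min_score: float, max_score: float) -> bool:
    # Assumption: the 3-to-5 band that triggered manual review is inclusive.
    return 3 <= (max_score - min_score) <= 5


# Classification of every criterion, keyed by criterion name.
OVERALL = {name: classify(lo, hi) for name, (lo, hi) in SCORE_RANGES.items()}
```

Applied to the Table 3 ranges, the rule yields consensus on five criteria and divergent opinions on six, which agrees with the Overall column of the table.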

We can conclude from the evaluation results that

MyPHR appeared very strong on I1-Data

Interoperability, Q3-Patient Centric, Q4-Timely, and

P2-Regulations compliance, whereas I3-context

interoperability, and P1-privacy were not as strong.

Although our evaluation criteria touch on various

aspects of interoperable, patient-centric and

successful healthcare platforms, we did not put our

focus equally on all of them. We decided to

concentrate more on developing a patient-centric

framework that allows all healthcare stakeholders to

communicate their data in a timely and cost-efficient

manner. Therefore, not all our evaluation criteria have

the same weight for us. To address that issue we

assigned each criterion a weight on a three-level numeric scale (1, 3, 5) based

on how influential it was in our research.

Table 3: Expert scores.

| Criteria | AVG | Min | Max | Overall |
| --- | --- | --- | --- | --- |
| Data interoperability | 7.9 | 6 | 9.5 | consensus |
| Process interoperability | 6.5 | 4 | 8 | divergent |
| Context interoperability | 5.0 | 4 | 6 | consensus |
| Privacy | 5.9 | 4 | 9.5 | divergent |
| Regulations compliance | 8.9 | 8 | 9.5 | consensus |
| Evidence-based | 6.0 | 4 | 8 | divergent |
| Right level of details | 6.6 | 4 | 8 | divergent |
| Patient-centric | 8.7 | 8 | 9.5 | consensus |
| Timely | 8.6 | 7 | 9.5 | consensus |
| Cost | 6.6 | 5 | 9.5 | divergent |
| Health | 6.6 | 2 | 8 | divergent |

5 CONCLUSIONS AND FUTURE WORK

In this paper we evaluated a system-level patient-

centric health data sharing framework that we had

developed previously. One of the methods used for

this evaluation was a review by a panel of experts.

The review produced structured and unstructured data

that was analysed to improve our framework.

The reviewers also suggested features that can be

considered for future work. Developing a

universal client matching algorithm and identification

system instead of using the patient health card number

would fit well as a next step. Enabling

the flow of information from the personal smart

devices and the changes it would impose on our

proposed ontology is another good area for future

work. Furthermore, inclusion of richer metadata

would enable better patient-specific privacy and

security settings when it comes to setting proper

access levels for different users. This is an area that

can be easily added to our existing ontology. Another

enhancement we may consider is to extend HICs’

access to client information beyond their active

status in the system-level circle of care, i.e. once a

healthcare provider takes on a client, they have

persistent access to that client’s information without

time constraints.

In the context of the structured review results, and

through factoring the weights into the scores we


received from our experts, we came to a GPA of 70%

for all criteria considered. This figure tells us that our

proposed framework is viable, although there is a lot

of room for improvement. The proposed framework

is perceived to improve the current situation.
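One way to factor the Table 1 weights into the Table 3 averages is a weighted mean expressed as a percentage of the maximum score. The text does not spell out the exact aggregation that was used, so the sketch below is one plausible reading under that assumption, and its result is not expected to reproduce the reported figure exactly. The names `WEIGHTS`, `AVERAGES`, `weighted_percentage` and `unweighted_percentage` are hypothetical; the unweighted variant is included only for comparison.

```python
from typing import Dict

# Criterion ID -> weight, copied from Table 1.
WEIGHTS: Dict[str, int] = {
    "I1": 5, "I2": 3, "I3": 1,
    "Q1": 1, "Q2": 1, "Q3": 5, "Q4": 5, "Q5": 1, "Q6": 1,
    "P1": 1, "P2": 5,
}

# Criterion ID -> average expert score, copied from Table 3.
AVERAGES: Dict[str, float] = {
    "I1": 7.9, "I2": 6.5, "I3": 5.0,
    "Q1": 6.0, "Q2": 6.6, "Q3": 8.7, "Q4": 8.6, "Q5": 6.6, "Q6": 6.6,
    "P1": 5.9, "P2": 8.9,
}


def weighted_percentage(averages: Dict[str, float],
                        weights: Dict[str, int],
                        scale_max: float = 10.0) -> float:
    """Weighted mean of the averages, as a percentage of scale_max."""
    total_weight = sum(weights[c] for c in averages)
    weighted_sum = sum(averages[c] * weights[c] for c in averages)
    return 100.0 * weighted_sum / (total_weight * scale_max)


def unweighted_percentage(averages: Dict[str, float],
                          scale_max: float = 10.0) -> float:
    """Plain mean of the averages, as a percentage of scale_max."""
    return 100.0 * sum(averages.values()) / (len(averages) * scale_max)
```

Calling `weighted_percentage(AVERAGES, WEIGHTS)` gives the weighted reading, and `unweighted_percentage(AVERAGES)` gives the plain mean; comparing the two shows how much the heavily weighted criteria (I1, Q3, Q4, P2) pull the overall score.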

ACKNOWLEDGEMENTS

This research was funded by NSERC and OGS.

REFERENCES

Agarwal, S., LeFevre, A. E., Lee, J., L’Engle, K., Mehl, G., Sinha, C., & Labrique, A. (2016). Guidelines for reporting of health interventions using mobile phones: mobile health (mHealth) evidence reporting and assessment (mERA) checklist. The BMJ, 352-362.

Azarm, M., & Peyton, L. (2018). An Ontology for a Patient-Centric Healthcare Interoperability Framework. IEEE/ACM International Workshop on Software Engineering in Healthcare Systems (SEHS). Gothenburg, Sweden: IEEE Xplore.

Azarm, M., Backman, C., & Kuziemsky, C. (2019). System Level Patient-Centered Data Sharing. 2019 IEEE/ACM 1st International Workshop on Software Engineering for Healthcare (SEH) (pp. 45-48). Montreal: IEEE.

Azarm, M., Kuziemsky, C., & Peyton, L. (2015). A Review of Cross Organizational Healthcare Data Sharing. The 5th International Conference on Current and Future Trends of Information and Communication Technologies in Healthcare (ICTH-2015). 63, pp. 425-432. Berlin: Elsevier.

Azarm, M., Peyton, L., Backman, C., & Kuziemsky, C. (2017). Breaking the Healthcare Interoperability Barrier by Empowering and Engaging Actors in the Healthcare System. Procedia Computer Science. 113, pp. 326-333. Lund, Sweden: Elsevier.

Baskerville, R., Baiyere, A., Gregor, S., Hevner, A., & Rossi, M. (2019). Design Science Research Contributions: Finding a Balance between Artifact and Theory. Journal of the Association for Information Systems, 358-376.

Benson, T., & Grieve, G. (2016). Principles of Health Interoperability SNOMED CT, HL7 and FHIR (Third ed.). (T. Benson, Ed.) London, UK: Springer.

Berwick, D. M., Nolan, T. W., & Whittington, J. (2008). The Triple Aim: Care, Health, And Cost. Health Affairs, 27(3).

Bodenheimer, T., & Sinsky, C. (2014). From triple to quadruple aim: care of the patient requires care of the provider. Ann Fam Med, 573-576.

Cars, T., Wettermark, B., Malmstrom, R., Ekeving, G., Vikstrom, B., Bergman, U., ... Gustafsson, L. (2013). Extraction of Electronic Health Record Data in a Hospital Setting: Comparison of Automatic and Semi-Automatic Methods Using Anti-TNF Therapy as Model. Basic & Clinical Pharmacology & Toxicology, 112, 329-400.

CIHI. (2011). Learning From the Best: Benchmarking Canada’s Health System. Canadian Institute for Health Information.

Collen, M. F., & Ball, M. J. (2015). The History of Medical Informatics in the United States. Baltimore, MD, USA: Springer-Verlag London.

Dong, X., Samavi, R., & Topaloglou, T. (2015). COC: An Ontology for Capturing Semantics of Circle of Care. Procedia Computer Science. 63, pp. 589-594. Elsevier.

Gaynor, M., Yu, F., Andrus, C. H., Bradner, S., & Rawn, J. (2014, March). A general framework for interoperability with applications to health care. Health Policy and Technology, 3(1), 3-12.

Gregor, S., Müller, O., & Seidel, S. (2013). Reflection, abstraction, and theorizing in design and development research. Proceedings of European Conference on Information Systems, (p. 74). Utrecht, The Netherlands: ECIS.

Haux, R. (2006). Health information systems: past, present, future. International Journal of Medical Informatics, 75(3), 268-281.

Hevner, A. R., & Chatterjee, S. (2015). Design Science Research in Information Systems. Association for Information Systems (AIS).

Kierkegaard, P. (2015). Governance structures impact on eHealth. Health Policy and Technology, 4, 39-46.

Kuziemsky, C. (2013). Interoperability, A Multi-Tiered Perspective on Healthcare. In M. Ángel Sicilia, & P. Balazote, Interoperability in Healthcare Information Systems Standards Management and Technology. IGI Global.

Madore, O. (2005, May 17). Parliament of Canada. Retrieved February 11, 2017, from http://www.lop.parl.gc.ca/content/lop/researchpublications/944-e.pdf

Marcotte, L., Kirtane, J., Lynn, J., & McKethan, A. (2014). Integrating Health Information Technology to Achieve Seamless Care Transitions. Journal of Patient Safety.

Noy, N. F., & McGuinness, D. L. (2001). Ontology Development 101: A Guide to Creating Your First Ontology. Stanford University, Knowledge Systems Laboratory Tech report KSL-01-05. Palo Alto: Stanford University.

Quanjel, T., Spreeuwenberg, M. D., Struijs, J. N., & Baan, C. A. (2019, May). Substituting hospital-based outpatient cardiology care: The impact on quality, health and costs. PLoS ONE, 14(5).

Sikka, R., Morath, J. M., & Leape, L. (2015). The Quadruple Aim: care, health, cost, and meaning in work. The BMJ, 608-610.

Sunyaev, A., Kaletsch, A., & Krcmar, H. (2011). Comparative Evaluation of Google Health API vs. Microsoft Healthvault API. Proceedings of the Third International Conference on Health Informatics (HealthInf 2010) (pp. 195-201). Valencia, Spain: SSRN.
