Evaluating the Effect of Justification and Confidence Information on User Perception of a Privacy Policy Summarization Tool

Vanessa Bracamonte¹, Seira Hidano¹, Welderufael B. Tesfay² and Shinsaku Kiyomoto¹

¹KDDI Research, Inc., Saitama, Japan

²Goethe University Frankfurt, Frankfurt, Germany

Keywords: Privacy Policy, Automatic Summarization, Explanation, User Perception, Trust.

Abstract:

Privacy policies are long and cumbersome for users to read. To support understanding of the information contained in privacy policies, automated analysis of textual data can be used to obtain a summary of their content, which can then be presented in a shorter, more usable format. However, these tools are not perfect, and users indicate concern about the trustworthiness of their results. Although some of these tools provide information about their performance, the effect of this information has not been investigated. To address this, we conducted an experimental study to evaluate whether providing explanatory information, such as result confidence and justification, influences users' understanding of the privacy policy content and their perception of the tool. The results suggest that presenting a justification of the results, in the form of a policy fragment, can increase intention to use the tool and improve perception of trustworthiness and usefulness. On the other hand, showing only a result confidence percentage did not improve perception of the tool, nor did it help to communicate the possibility of incorrect results. We discuss these results and their implications for the design of privacy policy summarization tools.

1 INTRODUCTION

The introduction of regulations such as the GDPR (European Parliament, 2016) has encouraged recent efforts to make privacy policies more understandable to users. However, despite serving as a de facto contractual document between the user and the service provider, privacy policies remain too long and difficult for users to read and comprehend.

Alternatives to these lengthy texts have been proposed, such as shorter notices in graphical and standardized formats (Gluck et al., 2016; Kelley et al., 2010) that can communicate information about the privacy policy to users. Since these formats are not employed by all companies, there are projects such as ToS;DR (ToSDR, 2019), which provides summaries of existing privacy policies. ToS;DR relies on a community of users to manually analyze and categorize the content of these privacy policies, which makes it very difficult to scale the work to cover every existing privacy policy.

There are also projects that propose to automate the analysis of privacy policies using machine learning techniques. Privacy policy summarization tools are automated applications, implemented using different machine learning and natural language processing techniques, that analyze the content of a privacy policy text and provide a summary of the results of that analysis (Figure 1).

Figure 1: Automated privacy policy summarization.

Examples of these projects are Privee (Zimmeck and Bellovin, 2014), PrivacyCheck (Zaeem et al., 2018), Polisis (Harkous et al., 2018) and PrivacyGuide (Tesfay et al., 2018b). These tools can provide a solution to the problem of scale in the analysis of privacy policies, but they introduce a different challenge: users express concern regarding the trustworthiness and accuracy of a privacy policy summarization tool when they know that the process is automated (Bracamonte et al., 2019).


Bracamonte, V., Hidano, S., Tesfay, W. and Kiyomoto, S.

Evaluating the Effect of Justification and Confidence Information on User Perception of a Privacy Policy Summarization Tool.

DOI: 10.5220/0008986101420151

In Proceedings of the 6th International Conference on Information Systems Security and Privacy (ICISSP 2020), pages 142-151

ISBN: 978-989-758-399-5; ISSN: 2184-4356

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The design of the result summary of automated privacy policy summarization tools often follows guidelines for usable formats aimed at presenting privacy policy information. Research on alternative ways of presenting privacy policies indicates that shortened versions of these texts, supplemented with icons, can provide users with the information they need to understand their content (Gluck et al., 2016). Not only the length of the privacy policy is important, but also how the information is presented: standardized graphical formats can convey information better than text (Kelley et al., 2009; Kelley et al., 2010). In addition to these design considerations, some privacy policy summarization tools also provide information related to the reliability and performance of the machine learning techniques used. However, there are no studies that evaluate how this information affects user perception of this type of tool. Although studies have evaluated perception and understanding of usable privacy policy formats produced by humans, aspects related to the reliability of the privacy policy information have naturally not been considered in that research.

The purpose of this study is to address this gap. To achieve this, we conducted an experiment to evaluate understanding and perception of the results of an automated privacy policy summarization tool. We created different conditions based on whether the results of the tool showed information about the justification and confidence of the results, and asked participants about the content of the privacy policy and their perception of the tool in each of these conditions. The results show that justification information increased behavioral intention and perceived trustworthiness and usefulness. Justification information also helped users qualify the answers provided by the tool, although the effect was not present for every aspect of the policy. Confidence information, on the other hand, did not have a positive effect on perception of the tool or on understanding of its results. We discuss these findings in the context of providing usable automated tools for privacy policy summarization and the challenges for the design of these tools.

2 RELATED WORK

2.1 Information Provided by Automated Privacy Policy Summaries

Automated privacy policy summarization tools mainly provide information about the privacy policy to the user. Privee (Zimmeck and Bellovin, 2014) and PrivacyCheck (Zaeem et al., 2018), for example, show a summary of a privacy policy based on pre-established categories and risk levels, and the visual design of the summary includes icons and standard descriptions. PrivacyGuide (Tesfay et al., 2018a) also shows an icon-based result summary, and in addition provides the corresponding fragment of the original privacy policy. These tools are similar in that they provide a standardized, category-based summary of the privacy policy, although they use different criteria for that categorization and for assigning risk levels. Polisis (Harkous et al., 2018) takes a different and more complex approach to privacy policy analysis and classification, but also provides standard categories and fragments of the original privacy policy text. A related chatbot tool, PriBot, returns fragments of the privacy policy in response to freely composed questions from users.

The automated tools mentioned above sometimes provide explanatory information about their results. Two of them, PrivacyGuide and PriBot, include information that may be considered an explanation of the tool's performance. PrivacyGuide provides the fragment of the original privacy policy that the tool identifies as related to a privacy aspect and uses to assign a risk level. PriBot, on the other hand, shows a confidence percentage that works as a proxy for how accurately the fragment it returns answers a user's question (Harkous et al., 2018).

2.2 Explanations of Automated Systems

When results are provided by automated tools, users have questions about the reliability of those results. One way of influencing this perception is to provide some explanation about the system. For example, offering some justification for outcomes can positively influence perception of accuracy (Biran and McKeown, 2017), and information about accuracy can improve trust (Lai and Tan, 2019). Although there is no predefined way of communicating the performance of automated privacy policy summarization tools to the user, existing tools provide some information. PrivacyGuide shows a fragment of the privacy policy, which can be classified as a justification or support explanation (Gregor and Benbasat, 1999). Similarly, information about the confidence of results, such as that provided by PriBot, can also be considered a dimension of explanation (Wang et al., 2016). This serves as a measure of the uncertainty (Diakopoulos, 2016) of the results and therefore as an indication of performance.

Explanation information, including confidence, has been found to positively influence trust when users interact with automation (Wang et al., 2016). However, the effect of explanations is not always positive. Research indicates that certain types of explanatory information about the performance of a system, for example an F-score accuracy measure, may not be useful in applications intended for a general audience (Kay et al., 2015). It has also been found that too much explanation could have a negative effect on aspects such as trust (Kizilcec, 2016). These studies show that simply providing more information may not result in a positive effect; therefore, it is important to evaluate the effect of explanatory information, such as justification and confidence, provided by privacy policy summarization tools.

One limitation in this area is that there are few user evaluations of automated privacy policy summarization tools. For Polisis, a user study evaluated the perceived accuracy of the results; however, it was conducted independently of the interface (Harkous et al., 2018). The study found that users considered the results relevant to the questions, although this perception differed from the accuracy measure of the predictive model. A user study was also conducted to evaluate PrivacyGuide results; it found that the tool partially achieved the goal of informing users about the risk of a privacy policy and increasing interest in its content (Bracamonte et al., 2019). The study also found that users indicated concern about the trustworthiness of the tool and the accuracy of its results. However, these studies have not considered the effect of the justification or confidence information shown by these tools, or how this explanatory information might affect trust.

3 METHODOLOGY

3.1 Experiment Design

The experiment consisted of a task to view the results of the analysis of the privacy policy of a fictional online shop, and to answer questions about the content of the privacy policy and about perception of the tool in general. We used a between-subjects design with a total of six experimental conditions, defined as follows. The Control condition included only information about the result of the privacy policy summarization. The Confidence condition included all the information from the Control condition and added a confidence percentage for the results. The Justification condition included all the information from the Control condition and added justification in the form of short fragments from the original privacy policy. The Highlight condition was a second form of justification, in which relevant words were emphasized in the privacy policy fragments. Finally, two conditions showed both the confidence percentage and justification (Justification + Confidence and Highlight + Confidence).
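The six conditions can be viewed as a 3 × 2 design: justification (none, plain fragment, highlighted fragment) crossed with confidence (hidden or shown). The Python sketch below enumerates the conditions that way and performs simple random assignment. The factor labels, the `assign` helper and the use of independent, unblocked draws are illustrative assumptions; the paper only states that participants were randomly assigned.

```python
import itertools
import random
from collections import Counter

# Hypothetical encoding of the six conditions as a 3 x 2 cross of two factors.
JUSTIFICATION = ("none", "fragment", "highlight")  # what justification is shown
CONFIDENCE = (False, True)                          # whether a confidence % is shown

NAMES = {
    ("none", False): "Control",
    ("none", True): "Confidence",
    ("fragment", False): "Justification",
    ("fragment", True): "Justification + Confidence",
    ("highlight", False): "Highlight",
    ("highlight", True): "Highlight + Confidence",
}


def conditions():
    """List every (justification, confidence) cell by its condition name."""
    return [NAMES[cell] for cell in itertools.product(JUSTIFICATION, CONFIDENCE)]


def assign(participant_ids, seed=None):
    """Independently assign each participant to a condition, uniformly at random."""
    rng = random.Random(seed)
    names = conditions()
    return {pid: rng.choice(names) for pid in participant_ids}


# Usage: with simple (unblocked) randomization, group sizes vary somewhat.
group_sizes = Counter(assign(range(944), seed=42).values())
```

Independent draws keep the sketch short; a blocked scheme (shuffling a list with equal numbers of each condition) would instead guarantee balanced group sizes.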

3.2 Privacy Policy Summary Result

We based the design of the privacy policy summary on PrivacyGuide and defined that the result would correspond to a low-risk privacy policy, as defined by Bracamonte et al. (2019). In PrivacyGuide, an icon in a color representing one of three levels of risk (Green, Yellow and Red) is assigned to each result category (privacy aspect) depending on the content of the privacy policy corresponding to that aspect (Tesfay et al., 2018b).

The Control condition result interface included icons and descriptions of the risk levels for each privacy aspect. The Justification condition result interface was based on the Control condition, and additionally included a text fragment for each privacy aspect. We selected the fragments from real privacy policies by running PrivacyGuide on the English-language privacy policies of well-known international websites that also provided an equivalent Japanese-language privacy policy. We chose fragment results that matched the privacy aspect risk level we had defined, but took the corresponding fragment from the matching Japanese-language privacy policy. Because this procedure resulted in fragments obtained from different privacy policies, we reviewed and modified the texts so that they would be consistent with each other in style and content. We also anonymized any reference to the original company. The Highlight condition result interface was based on the Justification result, and additionally emphasized the justification by highlighting words relevant to the corresponding privacy aspect.
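The Highlight condition emphasized words relevant to each privacy aspect in bold. The paper does not say how those words were selected, so the sketch below simply assumes a hand-picked keyword list per aspect and wraps case-insensitive matches in HTML `<b>` tags; `highlight` and its parameters are hypothetical. Because the study's fragments were in Japanese, which is written without spaces between words, whole-word matching is made optional.

```python
import re


def highlight(fragment: str, keywords: list[str], whole_word: bool = True) -> str:
    """Wrap occurrences of any keyword in <b>...</b>, ignoring case.

    whole_word=True adds regex word boundaries, which suits English text;
    for unsegmented text such as Japanese, pass whole_word=False so that
    keywords are matched as plain substrings.
    """
    if not keywords:
        return fragment
    # Longest keywords first, so "data sharing" is preferred over "data"
    # when both could match at the same position.
    ordered = sorted(keywords, key=len, reverse=True)
    alternatives = "|".join(re.escape(k) for k in ordered)
    pattern = rf"\b(?:{alternatives})\b" if whole_word else f"(?:{alternatives})"
    return re.sub(pattern, lambda m: f"<b>{m.group(0)}</b>", fragment,
                  flags=re.IGNORECASE)
```

For example, `highlight("We retain your personal data as long as needed.", ["personal data", "retain"])` returns `"We <b>retain</b> your <b>personal data</b> as long as needed."`. Keeping the original casing of each match (via `m.group(0)`) means the emphasized fragment still reads exactly like the policy text.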

The Confidence condition was based on the Control condition, and additionally showed a confidence percentage for each privacy aspect result. The confidence percentages were set manually. The Justification+Confidence and Highlight+Confidence condition result interfaces showed all the information described previously for the Justification/Highlight and Confidence conditions. Since confidence and justification information would be shown together in these last two conditions, we set the confidence values by manually evaluating how accurately the fragments represented the privacy aspect risk level. Confidence percentages for the privacy aspect results ranged from 70% to 95%, with the exception of Privacy Settings. For Privacy Settings, we set the confidence percentage to 45% and chose a fragment that did not accurately represent the corresponding privacy aspect. This was done to evaluate the influence of incorrect information and low confidence on users' responses to questions about the content of the privacy policy. Figure 2 shows the details of the interface.

Figure 2: Experiment result screens. (a) Full results for the Confidence condition. (b) Fragment corresponding to one privacy aspect in the Justification + Confidence condition. (c) Fragment corresponding to one privacy aspect in the Highlight + Confidence condition. The result screens for the Control, Justification and Highlight conditions are similar to (a), (b) and (c), respectively, with the exception that the confidence percentage is not included.

The interfaces also included a help section at the top of the result screen, which described every element of the results, from the privacy aspect name to the confidence percentage (where applicable). Because users need time to familiarize themselves with the elements of a privacy notice (Schaub et al., 2015), we included the help section to compensate for participants' lack of such familiarization time, although such a section would not normally be prominently displayed.

3.3 Questionnaire

We included questions about the privacy policy to evaluate participants' understanding of the privacy practices of the fictional company based on what was presented in the result interface. The questions were adapted from Kelley et al. (2010) and addressed each of the privacy aspects (Table 1). We created the questions so that the correct option would be a positive answer (Definitely yes or Possibly yes) for all questions except the one corresponding to the Protection of Children privacy aspect, where the correct option was left ambiguous. The only other exception was the Privacy Settings aspect, which was assigned a low confidence percentage and an incorrect justification fragment (as defined in the previous section). Therefore, in the experimental conditions that included these pieces of information, we expected the correct answer not to be a positive one.

Table 1: Privacy policy content questions. Response options: Definitely yes, Possibly yes, Possibly no, Definitely no, It doesn't say in the result, It's unclear from the result.

| Privacy aspect | Question |
|---|---|
| Data collection | Does the online store (company) collect your personal information? |
| Privacy settings | Does the online store give you options to manage your privacy preferences? |
| Account deletion | Does the online store allow you to delete your account? |
| Protection of children | Does the online store knowingly collect information from children? |
| Data security | Does the online store have security measures to protect your personal information? |
| Third-party sharing | Does the online store share your personal information with third parties? |
| Data retention | Does the online store indicate how long they retain your data? |
| Data aggregation | Does the online store aggregate your personal information? |
| Control of data | Does the online store allow you to edit your information? |
| Policy changes | Does the online store inform you if they change their privacy policy? |

Table 2: Questionnaire items. Response scale: Completely agree, Agree, Somewhat agree, Somewhat disagree, Disagree, Completely disagree.

| Construct | Item |
|---|---|
| Useful | The application answers my questions about the privacy policy of the online store |
| | The application addresses my concerns about the privacy policy of the online store |
| | The application is useful to understand the privacy policy of the online store |
| | The application does not answer what I want to know about the privacy policy of the online store (Reverse worded) |
| Trustworthy | The results of the application are trustworthy |
| | The results of the application are reliable |
| | The results of the application are accurate |
| Understandable | The results of the application are understandable |
| | The reason for the results is understandable |
| Intention | I would use this application to analyze the privacy policy of various online stores |
| | I would use this application to decide whether or not to use various online stores |
| AI use | The use of AI is appropriate for this kind of application |
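The answer-coding rule for the content questions can be sketched as follows. Aspect names follow Table 1; the function names, and the choice to return `None` for the Protection of Children question (whose correct option was deliberately left ambiguous), are assumptions made for illustration rather than the authors' scoring code.

```python
POSITIVE = {"Definitely yes", "Possibly yes"}
RESPONSES = POSITIVE | {"Possibly no", "Definitely no",
                        "It doesn't say in the result",
                        "It's unclear from the result"}


def expected_positive(aspect, shows_confidence, shows_justification):
    """True if a positive answer is expected, False if not, None if ambiguous."""
    if aspect == "Protection of children":
        return None  # correct option deliberately left ambiguous
    if aspect == "Privacy settings" and (shows_confidence or shows_justification):
        # Low confidence (45%) and/or an incorrect fragment should cast doubt.
        return False
    return True


def matches_expectation(aspect, response, shows_confidence, shows_justification):
    """Compare one response with the expectation; None when there is none."""
    if response not in RESPONSES:
        raise ValueError(f"unknown response: {response!r}")
    expected = expected_positive(aspect, shows_confidence, shows_justification)
    if expected is None:
        return None
    return (response in POSITIVE) == expected
```

In the Control condition, for instance, a positive answer to the Privacy settings question counts as matching, whereas in any condition showing the 45% confidence or the incorrect fragment it does not.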

We included items measuring behavioral intention and perceived usefulness, understandability and trustworthiness of the tool (Table 2). The items were rated on a six-point Likert scale ranging from Completely disagree to Completely agree. We also included a question addressing the perceived appropriateness of using AI for this use case.
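One conventional way to code the six-point scale is 1 (Completely disagree) to 6 (Completely agree), which is consistent with the 1 and 6 values mentioned in the data cleanup step of Section 4.1. Reverse-worded items, such as the last Useful item in Table 2, are then recoded as 7 minus the score so that higher values always mean a more favorable view. The mapping below is a sketch of that convention, not the authors' code.

```python
# Six-point agreement scale, coded 1..6 from disagreement to agreement.
SCALE = ["Completely disagree", "Disagree", "Somewhat disagree",
         "Somewhat agree", "Agree", "Completely agree"]
SCORE = {label: i + 1 for i, label in enumerate(SCALE)}


def code_item(label: str, reverse: bool = False) -> int:
    """Return the numeric score; reverse-worded items map 1<->6, 2<->5, 3<->4."""
    score = SCORE[label]
    return 7 - score if reverse else score
```

With an even number of points there is no neutral midpoint, so every response falls on the agree or disagree side; reverse coding preserves that split.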

3.3.1 Translation and Review

The questionnaire was developed in English; since we conducted the survey in Japan with Japanese participants, we translated the questionnaire using the following procedure. First, two native Japanese speakers independently translated the whole questionnaire, including the statements explaining the survey and the privacy policy summarization tool. The translators and a person fluent in Japanese and English then reviewed the translated statements one by one, verifying that both translations were equivalent to each other and had the same meaning as the original English statement.

The reviewers found no contradictions in meaning in this first step. The reviewers then chose, for each statement, the translation that more clearly communicated the meaning of the questions, instructions or explanations. Finally, the translators reviewed the whole questionnaire to standardize the language, since they had originally used different levels of formality.

3.4 Data Collection

We conducted the survey through an online survey company, which distributed an invitation to participate to its registered users. We targeted the recruitment process to obtain a sample with sex and age demographics similar to those of the Japanese population according to the 2010 census (Statistics Bureau, Ministry of Internal Affairs and Communications, 2010), but limited participation to users who were 18 years old or older. Participants were compensated by the online survey company.

Participants were randomly assigned to one of the six experimental conditions and completed the survey online. We received pseudonymized participant data, including demographic data, from the online survey company. The survey also recorded the total time each participant took to complete it. The survey was conducted from December 12-14, 2018.

3.5 Limitations

The study had the following limitations. We did not manipulate the risk level of the privacy policy; we only considered a privacy policy defined as low risk. The number of privacy aspects and corresponding risk levels results in a large number of possible combinations, making it impractical to test them all. Consequently, the results of the study may not generalize to risk levels other than the one chosen for the study.
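To give a sense of scale, assume (for illustration only) that each of the ten privacy aspects in Table 1 could independently take any of PrivacyGuide's three risk levels. The number of distinct result summaries, and the number of cells needed to test each of them under all six interface conditions, would then be:

$$
3^{10} = 59\,049, \qquad 6 \times 3^{10} = 354\,294
$$

Some of these combinations may be unrealistic for actual privacy policies, so these figures are upper bounds rather than the size of any feasible design.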

In addition, we did not include a process to validate that the participants had indeed comprehended every aspect of the result interface, beyond the straightforward questions about the content of the privacy policy. We considered that if we included more detailed questions, participants' behavior would deviate further from a normal interaction with this type of tool. Nevertheless, this means that the results of this study reflect an evaluation of perception rather than objective measures of comprehension.

Lastly, in the study we used PrivacyGuide's privacy aspect categorization, which is based on the European Union's GDPR, and showed it to Japanese participants. However, we consider the GDPR-based categories relevant for our Japanese participants. For one, there is a degree of compatibility between the GDPR and Japanese privacy regulation (European Commission, 2019). In addition, the Japanese-language privacy policies used in the experiment come from international websites aimed at Japanese audiences, and are direct translations of English privacy policies created to comply with the GDPR.

4 ANALYSIS AND RESULTS

4.1 Data Cleanup

The online survey returned a total of 1054 responses. We first identified suspicious responses, defined as cases of extreme responding with no variability (all questions answered with 1 or 6; the reverse-worded items were included for this purpose) and cases where the total survey answer time was lower than 125 seconds. We calculated this time threshold from a high reading speed and the number of characters in the online survey plus the result screen for the Control condition. With these criteria, we identified 110 cases, which were manually reviewed and removed from further analysis.

Table 3: Sample characteristics.

| Characteristic | Category | n | % |
|---|---|---|---|
| Total | | 944 | 100% |
| Gender | Male | 458 | 49% |
| | Female | 486 | 51% |
| Age | 19-29 | 168 | 18% |
| | 30s | 175 | 19% |
| | 40s | 200 | 21% |
| | 50s | 185 | 20% |
| | 60s | 216 | 23% |
| Job | Government employee | 35 | 4% |
| | Company employee | 373 | 40% |
| | Own business | 59 | 6% |
| | Freelance | 17 | 2% |
| | Full-time homemaker | 174 | 18% |
| | Part time | 112 | 12% |
| | Student | 42 | 4% |
| | Other | 27 | 3% |
| | Unemployed | 105 | 11% |
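The two screening criteria can be sketched as below. The 125-second cut-off is the paper's, but the exact character count and reading speed behind it are not reported, so `min_time_seconds` takes both as parameters instead of guessing them. The straightlining check assumes that "no variability of extreme response" means every item, reverse-worded ones included, received the same extreme value. All function names are hypothetical, and the flag is only a screening step: the study reviewed flagged cases manually before removing them.

```python
def min_time_seconds(n_characters: int, chars_per_minute: float) -> float:
    """Minimum plausible completion time when reading at a fast rate."""
    return 60.0 * n_characters / chars_per_minute


def is_extreme_straightliner(scores: list[int], low: int = 1, high: int = 6) -> bool:
    """True if every answer is the same extreme scale value (all 1s or all 6s)."""
    return len(set(scores)) == 1 and scores[0] in (low, high)


def flag_suspicious(scores: list[int], duration_s: float,
                    threshold_s: float = 125.0) -> bool:
    """Flag a response for manual review if either criterion is met."""
    return is_extreme_straightliner(scores) or duration_s < threshold_s
```

Including reverse-worded items in the check is what makes it informative: a respondent who answers 6 to both "useful" and "does not answer what I want to know" is very likely not reading the statements.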

4.2 Sample Characteristics

The sample after data cleanup consisted of 944 cases (Table 3). Of the participants, 51% were female, and ages ranged from 19 to 69 years. The distribution of these demographic characteristics is similar to that of the Japanese population (Statistics Bureau, Ministry of Internal Affairs and Communications, 2010), as established in the data collection process.

4.3 Effect on Responses to Questions about the Content of the Privacy Policy

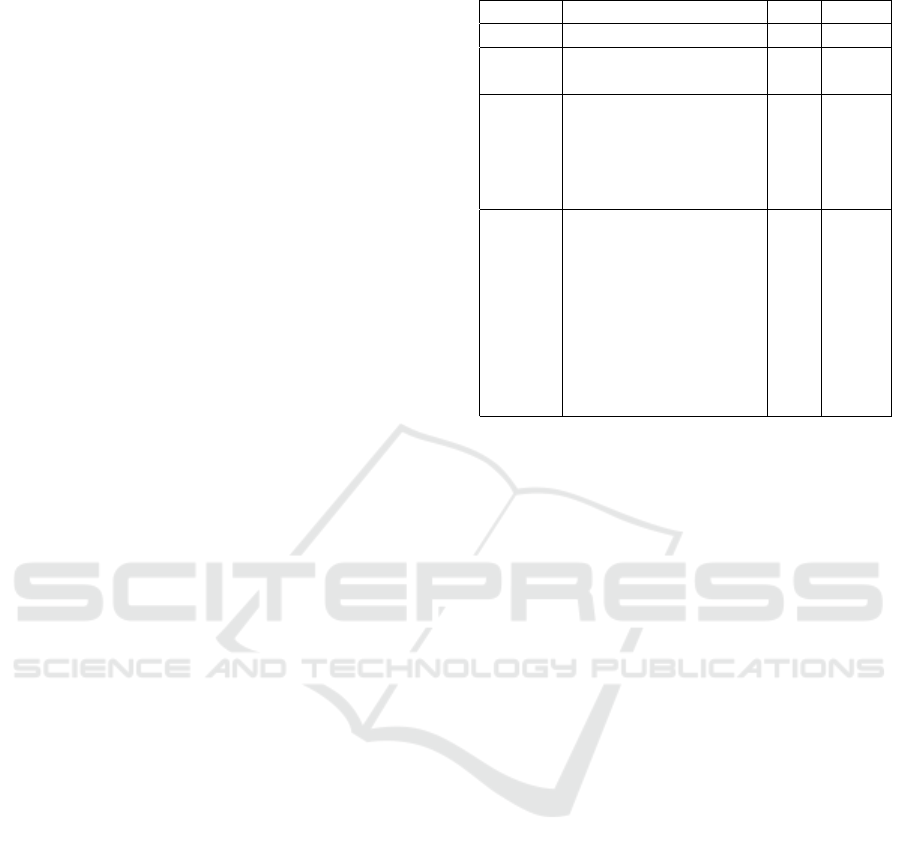

We analyzed the categorical responses to the questions about the privacy policy using chi-square tests. We were interested in the differences between the responses to each privacy aspect question, so we used contingency tables to represent the relationship between questions and answers in each experimental condition. As indicated previously, we wanted to test whether participants had understood the results of the tool and whether differences in the information provided in each condition were reflected in their answers. The results of the chi-square test of independence are shown in Figure 3. Association plots (Meyer et al., 2006) were used to visualize areas with significantly higher or lower response proportions, compared between privacy aspect questions.

Figure 3: Association plot of the relationship between privacy policy questions and their responses for each experimental condition. Blue and red areas indicate significantly higher and lower response proportions, respectively, than expected (i.e., if all questions had the same response proportions).
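For each condition, the test operates on a contingency table of privacy aspect questions (rows) by response options (columns). An association plot draws, for every cell, the Pearson residual (observed - expected) / sqrt(expected), where the expected count comes from the row and column totals under independence; the squared residuals sum to the chi-square statistic. The standard-library sketch below computes both. The shading cut-off of |r| > 2 mirrors a common default for residual-based shading and is an assumption about how Figure 3 was drawn; the p-value would come from the chi-square distribution with the returned degrees of freedom (for instance via `scipy.stats.chi2.sf`).

```python
import math


def chi_square_residuals(table):
    """Chi-square test of independence on a rows x columns table of counts.

    Returns (statistic, dof, residuals), where residuals[i][j] is the Pearson
    residual (observed - expected) / sqrt(expected) shown by association plots.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("empty row or column: expected counts would be zero")
    n = sum(row_totals)
    stat = 0.0
    residuals = []
    for i, row in enumerate(table):
        res_row = []
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            r = (observed - expected) / math.sqrt(expected)
            res_row.append(r)
            stat += r * r
        residuals.append(res_row)
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof, residuals


def shade(residuals, cutoff=2.0):
    """Mark cells '+' (more than expected), '-' (fewer) or '.' (neither)."""
    return [["+" if r > cutoff else "-" if r < -cutoff else "." for r in row]
            for row in residuals]
```

For a 10-question by 6-option table, `dof` is 9 × 5 = 45. The sign of each residual gives the direction (more or fewer responses than expected), which matches the blue/red reading of Figure 3.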

The responses in the Control condition provide a baseline for how participants understood the content of the privacy policy from the results of the tool. The majority of participants chose a positive answer for all of the questions except the one corresponding to Protection of Children, indicating that the result of the tool communicated the expected information in the Control condition. However, the results show that there were no differences in the proportion of responses to the Privacy Settings question compared to other aspects in any of the experimental conditions. As can be observed in Figure 3, there are no significant differences in the proportions of responses to the Privacy Settings question compared to the responses to the Control of Data or Data Aggregation questions, for example. This lack of significant differences indicates that participants' responses were influenced by neither the low confidence percentage nor the incorrect justification in the result for the Privacy Settings aspect. However, there is some evidence that at least some participants considered the justification information in their responses. For the conditions that include justification, the proportion of "It doesn't say in the result" responses to the Data Retention question is higher. A review of the fragment corresponding to this privacy aspect shows that there is no mention of a specific time for the retention of the data. This lack of detail may have led at least some participants to consider that the question was not answered in the privacy policy. We do not see this difference in the Control or Confidence conditions, which do not include the justification fragment.

We also tested for differences between conditions in the time taken to answer the full survey. The distribution of time was similar for all conditions and highly skewed, so we used non-parametric Kruskal-Wallis tests for the difference in medians. We found no significant differences, indicating that the additional justification and confidence information did not influence the time taken to finish the survey.
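The Kruskal-Wallis H statistic ranks all observations together and compares the rank sums of the groups; under the null hypothesis, H approximately follows a chi-square distribution with k - 1 degrees of freedom (df = 5 for six conditions). The standard-library sketch below includes the usual tie correction and a closed-form chi-square tail for integer df. Libraries such as SciPy (`scipy.stats.kruskal`) provide a tested implementation; this version only makes the computation explicit, and its function names are illustrative. As a sanity check, `chi2_sf(11.715, 5)` evaluates to about 0.039, matching the Understandable row of Table 4.

```python
import math


def rank_with_ties(values):
    """Return 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square with integer df >= 1."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:  # exp(-x/2) * sum_{j < df/2} (x/2)^j / j!
        term, total = 1.0, 1.0
        for j in range(1, df // 2):
            term *= (x / 2) / j
            total += term
        return math.exp(-x / 2) * total
    # Odd df: erfc(sqrt(x/2)) + sqrt(2/pi) e^{-x/2} sum x^{j-1/2} / (1*3*...*(2j-1))
    total = math.erfc(math.sqrt(x / 2))
    term = math.sqrt(2 * x / math.pi) * math.exp(-x / 2)
    for j in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * j + 1)
    return total


def kruskal_wallis(*groups):
    """Return (H, p) for the Kruskal-Wallis test with tie correction."""
    data = [v for g in groups for v in g]
    n = len(data)
    ranks = rank_with_ties(data)
    h, start = 0.0, 0
    for g in groups:
        r = sum(ranks[start:start + len(g)])
        h += r * r / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * h - 3 * (n + 1)
    counts = {}
    for v in data:
        counts[v] = counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    if ties == n ** 3 - n:  # all observations identical: no evidence of a difference
        return 0.0, 1.0
    h /= 1 - ties / (n ** 3 - n)
    return h, chi2_sf(h, len(groups) - 1)
```

Because the test works on ranks, a few very slow respondents (the right tail of a skewed time distribution) cannot dominate the result the way they would in an ANOVA on raw times.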

Figure 4: Box plots for all variables (panels: Trustworthy, Useful, Intention and Understandable; conditions on the horizontal axis: Control, Justif., Highlight, Conf., Justif.+Conf. and Highl.+Conf.). The blue line indicates the median for the Control condition. The details of significant differences according to Dunn's test are given in Table 5.

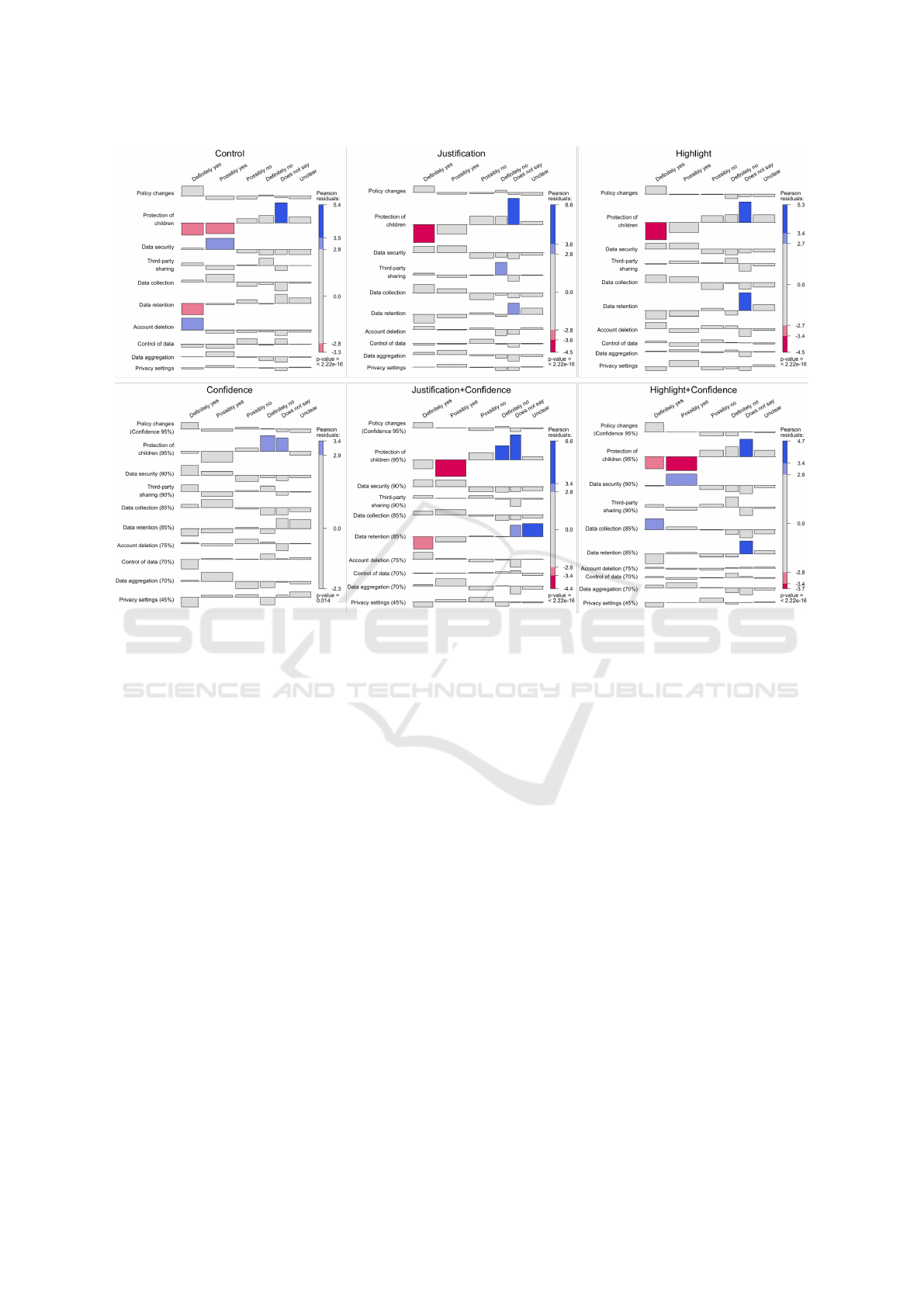

4.4 Effect on Perception of the Tool

We created composite variables by summing the items corresponding to attitudes and perception of usefulness, understandability and trustworthiness. We calculated Cronbach's alpha for the items corresponding to each composite variable. In the case of usefulness, the recoded reverse-worded item was negatively correlated with the other items, and we removed it from the analysis. After removal, Cronbach's alpha values indicated good internal consistency (all values above 0.9). Figure 4 shows the median in each experimental condition for all variables.
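Cronbach's alpha for k items is α = k/(k - 1) · (1 - Σ σ²ᵢ / σ²ₜ), where σ²ᵢ is the variance of item i and σ²ₜ the variance of the summed (composite) score. The sketch below also computes each item's corrected item-total correlation, that is, its correlation with the sum of the other items; a negative value there is one way a recoded reverse-worded item that still runs against the scale would show up. Function names are illustrative and the example data in the test are made up.

```python
from statistics import mean, variance


def composite(items):
    """Sum item scores per respondent; items is a list of per-item score lists."""
    return [sum(scores) for scores in zip(*items)]


def cronbach_alpha(items):
    """Cronbach's alpha using sample variances of the items and their sum."""
    k = len(items)
    total_var = variance(composite(items))
    item_var = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var / total_var)


def pearson(x, y):
    """Pearson correlation (raises ZeroDivisionError if either input is constant)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def corrected_item_total(items):
    """Correlation of each item with the sum of the remaining items."""
    return [pearson(item, composite(items[:i] + items[i + 1:]))
            for i, item in enumerate(items)]
```

Excluding the item itself from the total avoids inflating its correlation, which is why the corrected version is the usual diagnostic for deciding whether to drop an item before reporting alpha.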

All composite variables had a similar non-normal distribution shape; therefore, we used non-parametric Kruskal-Wallis tests for the difference between their medians, and Dunn's test for multiple comparisons. To control for false positives, p-values were adjusted using the Benjamini–Hochberg procedure (Benjamini and Hochberg, 1995). According to the Kruskal-Wallis tests, there were significant differences between groups for all variables (Table 4). We conducted post hoc comparisons using Dunn's tests to evaluate which groups were significantly different. Table 5 shows the detailed results.
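Dunn's test compares each pair of groups using mean ranks from the same pooled ranking as the Kruskal-Wallis test: z = (R̄ᵢ - R̄ⱼ) / sqrt[(N(N + 1)/12 - Σ(t³ - t)/(12(N - 1))) · (1/nᵢ + 1/nⱼ)], with a two-sided normal p-value. The Benjamini-Hochberg step then multiplies the i-th smallest of m p-values by m/i and enforces monotonicity from the largest down. This standard-library sketch is illustrative only; packages such as scikit-posthocs offer a tested Dunn implementation, and whether the study adjusted per outcome variable or across all comparisons is not stated.

```python
import math
from itertools import combinations


def rank_with_ties(values):
    """Return 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def dunn_test(groups):
    """Return {(i, j): (z, p)} for every pair of groups (unadjusted two-sided p)."""
    data = [v for g in groups for v in g]
    n = len(data)
    ranks = rank_with_ties(data)
    mean_ranks, start = [], 0
    for g in groups:
        mean_ranks.append(sum(ranks[start:start + len(g)]) / len(g))
        start += len(g)
    counts = {}
    for v in data:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values()) / (12 * (n - 1))
    base = n * (n + 1) / 12 - tie_term
    out = {}
    for i, j in combinations(range(len(groups)), 2):
        se = math.sqrt(base * (1 / len(groups[i]) + 1 / len(groups[j])))
        z = (mean_ranks[i] - mean_ranks[j]) / se
        out[(i, j)] = (z, math.erfc(abs(z) / math.sqrt(2)))
    return out


def benjamini_hochberg(pvalues):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):  # from the largest p-value down to the smallest
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted
```

With six conditions there are 15 pairs per outcome variable; passing those 15 unadjusted p-values to `benjamini_hochberg` together gives adjusted values comparable to those reported in Table 5.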

The Highlight and Highlight+Confidence conditions were more positively perceived in terms of usefulness and trustworthiness than the Control condition. For behavioral intention, we found a significant difference only between the Control and Justification conditions. In addition, we found no differences in the perception of any of the variables of interest between the Control and Confidence conditions. We also did not find significant differences between the Justification and Highlight conditions; this may be due to the relatively subtle effect of bolding the words. Nor were there significant differences between otherwise identical experimental conditions with or without confidence information. Finally, although the Kruskal-Wallis test indicated a significant difference for understandability, the post hoc Dunn test did not find significant differences between any conditions, based on the adjusted p-value.

Table 4: Results of Kruskal-Wallis tests for the difference in median between conditions.

| Variable | Chi-squared (df=5) | p |
|---|---|---|
| Intention | 11.694 | 0.033 |
| Useful | 17.217 | 0.004 |
| Understandable | 11.715 | 0.039 |
| Trustworthy | 17.028 | 0.004 |

Table 5: Results of Dunn's test for multiple comparisons. P-values for comparisons that were non-significant (p>0.05) are not shown. No significant differences were found for perceived understandability.

| Comparison | Significant p-values (Useful, Trustw., Intention) |
|---|---|
| Control - Justification | 0.049, 0.017 |
| Control - Highlight | 0.010, 0.009 |
| Control - Highlight+Conf. | 0.048, 0.026 |
| Highlight - Confidence | 0.035 |

Finally, with regard to the appropriateness of using AI for summarizing privacy policies, the results of a Kruskal-Wallis test showed that there were no significant differences between conditions. The median value was 4 ("Somewhat agree") for all conditions except the Control condition, which had a median value of 3 ("Somewhat disagree").

5 DISCUSSION

The results show that providing privacy policy fragments as justification, with and without highlighted words, improved perception of the tool compared to not showing that information, albeit on different dimensions. On the other hand, showing confidence information had no significant effect. Based on previous research on confidence explanations (Wang et al., 2016), we had expected that showing a confidence percentage would improve perception of trustworthiness in particular, but there was no effect on any of the measured perception variables.

The results show that the short summary format (Control condition) can inform users of the overall privacy policy contents as categorized by the tool. This result is in line with research on shorter privacy policy formats (Gluck et al., 2016). On the other hand, the additional explanatory information (justification and result confidence) included in the other experimental conditions did not greatly alter the responses to the questions about the privacy policy content, even when the fragment did not accurately justify the result or the confidence percentage was low. One possibility is that participants relied mainly on the icons and descriptions of the privacy aspects to answer the questions about the privacy policy, even when additional information contradicted the overall result. This may have been because we did not explicitly draw attention to the justification and confidence percentage, beyond describing them in the help section of the interface. In addition, the questions we asked participants were straightforward and for the most part targeted information that was already available in the elements of the Control condition interface.

However, the results indicate that participants did consider justification information, at least to some extent, as evidenced by the answers to the Data Retention question in the conditions where the privacy policy fragment was shown. As for the incorrect fragment corresponding to the Privacy Settings aspect, participants may not have been sufficiently familiar with this type of setting, and therefore could not judge whether the fragment was incorrect. In the case of confidence information, another possibility is that users did not think to question the results of the tool and therefore ignored the contradictory signal of low confidence. This can happen when the user considers the system to be reliable (Wang et al., 2016). Figure 4 shows that the median perceived trustworthiness is higher than the scale midpoint for all conditions, which lends support to this hypothesis.

In general, the results of this study suggest that adding explanatory information in the form of justification can benefit automated privacy policy summarization tools. However, post-hoc power analysis indicates that the sample is large enough to detect small differences, so it is possible that the statistically significant improvements in perceived usefulness and trustworthiness are not relevant in practice. Moreover, the finding that not all explanatory information had a significant positive effect suggests that it is important to evaluate whether such additional information truly benefits users before adding it to the result interface of an automated privacy policy summarization tool. Although our results do not indicate that showing explanatory information has negative effects, future research should evaluate possible tradeoffs in terms of usability. More research is needed to identify what information to present to users in order to improve both the efficacy and the perception of these automated privacy tools.
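This section does not describe how the post-hoc power analysis was carried out. As a generic illustration only, the Monte Carlo sketch below estimates the power of a Kruskal-Wallis test with six groups (df = 5, as in Table 4): it repeatedly simulates responses on a hypothetical six-point agreement scale, shifts one group upward by a whole number of scale points, and counts how often H exceeds the 0.95 quantile of the chi-squared distribution with 5 degrees of freedom (about 11.07). The response distribution `BASE_WEIGHTS`, the shift, and the group sizes are all assumptions, not the study's data or procedure.

```python
import random

# Hypothetical response distribution over a six-point agreement scale (1..6).
BASE_WEIGHTS = [0.05, 0.10, 0.20, 0.30, 0.20, 0.15]
CHI2_CRIT_DF5 = 11.0705  # 0.95 quantile of chi-squared with df = 5 (six groups)


def average_ranks(values):
    """Rank values 1..N, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kruskal_h(groups):
    """Tie-corrected Kruskal-Wallis H statistic (returns 0.0 if all values tie)."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        total += sum(ranks[start:start + len(g)]) ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in counts.values()) / (n ** 3 - n)
    return h / correction if correction > 0 else 0.0


def simulate_power(n_per_group, shift, n_sims=500, seed=0):
    """Share of simulated six-group studies in which Kruskal-Wallis rejects at 0.05.

    Five groups follow BASE_WEIGHTS; the sixth is shifted up by `shift`
    points (capped at 6). shift=0 estimates the type I error rate instead.
    """
    rng = random.Random(seed)
    scale = range(1, 7)
    rejections = 0
    for _ in range(n_sims):
        groups = [rng.choices(scale, weights=BASE_WEIGHTS, k=n_per_group) for _ in range(5)]
        shifted = rng.choices(scale, weights=BASE_WEIGHTS, k=n_per_group)
        groups.append([min(6, v + shift) for v in shifted])
        if kruskal_h(groups) > CHI2_CRIT_DF5:
            rejections += 1
    return rejections / n_sims


# Usage: estimated power for a one-point shift at several hypothetical group sizes.
for n in (20, 50, 100):
    print(n, simulate_power(n, shift=1, n_sims=200, seed=1))
```

Raising `n_per_group` for a fixed `shift` shows how power grows with sample size; a dedicated tool or an analytic approximation would be the usual choice for a real study.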

6 CONCLUSIONS

In this paper, we conducted an experimental study to evaluate whether showing the justification and confidence of the results of an automated privacy policy summarization tool influences user perception of the tool, and whether this information can help users correctly interpret those results. The findings suggest that showing a privacy policy fragment as justification for the result can improve perceived usefulness and trustworthiness of the tool, as well as intention to use it. On the other hand, information about the confidence of results did not appear to have much influence on user perception. Moreover, even when the confidence information indicated higher uncertainty of the results, users did not rely on this information to interpret the results of the tool.

Altogether, the findings indicate that it may be worth adding explanatory information to help improve the perception of an automated privacy policy summarization tool, but that the type of explanation should be carefully chosen and evaluated, since explanatory information by itself may not be enough to help users understand the limitations of the tool's results. Future research should investigate the type of explanatory information that these automated privacy tools should provide to users, as well as how to present that information in a usable way.

REFERENCES

Benjamini, Y. and Hochberg, Y. (1995). Controlling the False Discovery Rate: A Practical and Powerful Approach to Multiple Testing. Journal of the Royal Statistical Society. Series B (Methodological), 57(1):289–300.

Biran, O. and McKeown, K. (2017). Human-centric Justification of Machine Learning Predictions. In Proceedings of the 26th International Joint Conference on Artificial Intelligence, IJCAI’17, pages 1461–1467. AAAI Press.

ICISSP 2020 - 6th International Conference on Information Systems Security and Privacy

Bracamonte, V., Hidano, S., Tesfay, W. B., and Kiyomoto, S. (2019). Evaluating Privacy Policy Summarization: An Experimental Study among Japanese Users. In Proceedings of the 5th International Conference on Information Systems Security and Privacy - Volume 1: ICISSP, pages 370–377. INSTICC, SciTePress.

Diakopoulos, N. (2016). Accountability in Algorithmic Decision Making. Commun. ACM, 59(2):56–62.

European Commission (2019). European Commission - PRESS RELEASES - Press release - European Commission adopts adequacy decision on Japan, creating the world’s largest area of safe data flows. http://europa.eu/rapid/press-release_IP-19-421_en.htm.

European Parliament (2016). Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46.

Gluck, J., Schaub, F., Friedman, A., Habib, H., Sadeh, N., Cranor, L. F., and Agarwal, Y. (2016). How Short Is Too Short? Implications of Length and Framing on the Effectiveness of Privacy Notices. In Twelfth Symposium on Usable Privacy and Security (SOUPS 2016), pages 321–340. USENIX Association.

Gregor, S. and Benbasat, I. (1999). Explanations from Intelligent Systems: Theoretical Foundations and Implications for Practice. MIS Quarterly, 23(4):497–530.

Harkous, H., Fawaz, K., Lebret, R., Schaub, F., Shin, K. G., and Aberer, K. (2018). Polisis: Automated Analysis and Presentation of Privacy Policies Using Deep Learning. In 27th USENIX Security Symposium (USENIX Security 18), pages 531–548. USENIX Association.

Kay, M., Patel, S. N., and Kientz, J. A. (2015). How Good is 85%?: A Survey Tool to Connect Classifier Evaluation to Acceptability of Accuracy. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, CHI ’15, pages 347–356. ACM.

Kelley, P. G., Bresee, J., Cranor, L. F., and Reeder, R. W. (2009). A "Nutrition Label" for Privacy. In Proceedings of the 5th Symposium on Usable Privacy and Security, SOUPS ’09, pages 4:1–4:12. ACM.

Kelley, P. G., Cesca, L., Bresee, J., and Cranor, L. F. (2010). Standardizing Privacy Notices: An Online Study of the Nutrition Label Approach. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’10, pages 1573–1582. ACM.

Kizilcec, R. F. (2016). How Much Information?: Effects of Transparency on Trust in an Algorithmic Interface. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, CHI ’16, pages 2390–2395. ACM.

Lai, V. and Tan, C. (2019). On Human Predictions with Explanations and Predictions of Machine Learning Models: A Case Study on Deception Detection. In Proceedings of the Conference on Fairness, Accountability, and Transparency, FAT* ’19, pages 29–38. ACM.

Meyer, D., Zeileis, A., and Hornik, K. (2006). The Strucplot Framework: Visualizing Multi-way Contingency Tables with vcd. Journal of Statistical Software, 17(3).

Schaub, F., Balebako, R., Durity, A. L., and Cranor, L. F. (2015). A design space for effective privacy notices. In Eleventh Symposium On Usable Privacy and Security (SOUPS 2015), pages 1–17.

Statistics Bureau, Ministry of Internal Affairs and Communications (2010). Population and Households of Japan 2010.

Tesfay, W. B., Hofmann, P., Nakamura, T., Kiyomoto, S., and Serna, J. (2018a). I Read but Don’t Agree: Privacy Policy Benchmarking Using Machine Learning and the EU GDPR. In Companion Proceedings of The Web Conference 2018, WWW ’18, pages 163–166. International World Wide Web Conferences Steering Committee.

Tesfay, W. B., Hofmann, P., Nakamura, T., Kiyomoto, S., and Serna, J. (2018b). PrivacyGuide: Towards an Implementation of the EU GDPR on Internet Privacy Policy Evaluation. In Proceedings of the Fourth ACM International Workshop on Security and Privacy Analytics, IWSPA ’18, pages 15–21. ACM.

ToSDR (2019). Terms of Service; Didn’t Read. https://tosdr.org/.

Wang, N., Pynadath, D. V., and Hill, S. G. (2016). Trust Calibration Within a Human-Robot Team: Comparing Automatically Generated Explanations. In The Eleventh ACM/IEEE International Conference on Human Robot Interaction, HRI ’16, pages 109–116. IEEE Press.

Zaeem, R. N., German, R. L., and Barber, K. S. (2018). PrivacyCheck: Automatic Summarization of Privacy Policies Using Data Mining. ACM Trans. Internet Technol., 18(4):53:1–53:18.

Zimmeck, S. and Bellovin, S. M. (2014). Privee: An Architecture for Automatically Analyzing Web Privacy Policies. In 23rd USENIX Security Symposium (USENIX Security 2014), pages 1–16. USENIX Association.
