CNN Hyperparameter Tuning Applied to Iris Liveness Detection

Gabriela Yukari Kimura¹, Diego Rafael Lucio¹ᵃ, Alceu S. Britto Jr.²ᵇ and David Menotti¹ᶜ

¹ Vision, Robotics and Imaging Laboratory, Universidade Federal do Paraná, Curitiba, Brazil

² PPGIA, Pontifícia Universidade Católica do Paraná, Curitiba, Brazil

Keywords: Iris Biometrics, Deep Learning, Convolutional Networks.

Abstract: The iris pattern has significantly improved the biometric recognition field due to its high level of stability and uniqueness. This physical feature has played an important role in security and other related areas. However, presentation attacks, also known as spoofing techniques, can be used to bypass the biometric system with artifacts such as printed images, artificial eyes, and textured contact lenses. To improve the security of these systems, many liveness detection methods have been proposed, and the first International Iris Liveness Detection competition was launched in 2013 to evaluate their effectiveness. In this paper, we propose a hyperparameter tuning of the CASIA algorithm, submitted by the Chinese Academy of Sciences to the third Iris Liveness Detection competition, in 2017. The proposed modifications yielded an overall improvement, with 8.48% Attack Presentation Classification Error Rate (APCER) and 0.18% Bona Fide Presentation Classification Error Rate (BPCER) for the evaluation of the combined datasets. Other threshold values were evaluated in an attempt to reduce the trade-off between the APCER and the BPCER on the evaluated datasets, and this attempt was successful.

1 INTRODUCTION

Biometric recognition offers a natural and reliable alternative for the automatic identification of individuals based on their physiological or behavioral characteristics (e.g., fingerprint, iris, gait, voice, hand geometry) (Jain et al., 2011). The iris pattern is regarded as one of the most accurate biometrics owing to its high level of stability and uniqueness. It has played an important role in security and other associated fields (Tisse et al., 2002).

However, despite the many advantages, iris bio-

metric systems are highly susceptible to presentation

attacks, usually referred to as spoofing techniques,

that attempt to conceal or impersonate other identities

(Kohli et al., 2016; Menotti et al., 2015; Toosi et al.,

2017; Pala and Bhanu, 2017; Czajka and Bowyer,

2018; Sajjad et al., 2019; Tolosana et al., 2020). Ex-

amples of typical iris spoofing attacks include printed

iris images, video playbacks, artificial eyes, and tex-

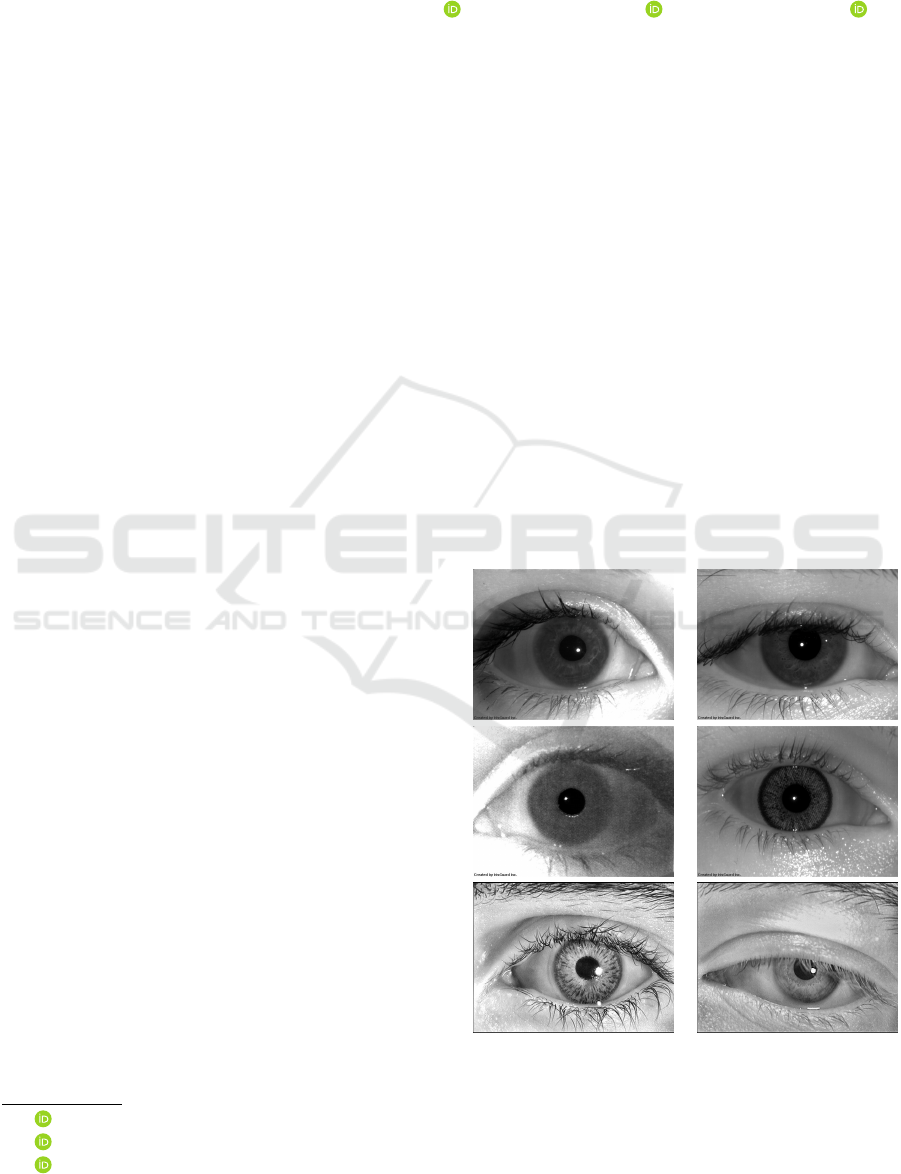

tured contact lenses. Figure 1 presents some exam-

ples. We observe how difficult is to a human being

make the right judgment.

ᵃ https://orcid.org/0000-0003-2012-3676

ᵇ https://orcid.org/0000-0002-3064-3563

ᶜ https://orcid.org/0000-0003-2430-2030

Figure 1: Spoofing problem. Which images are real and which are fake? Numbering from left to right and from top to bottom, images 1, 4 and 6 are the real ones.

Kimura, G., Lucio, D., Britto Jr., A. and Menotti, D. CNN Hyperparameter Tuning Applied to Iris Liveness Detection. DOI: 10.5220/0008983904280434. In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2020) - Volume 5: VISAPP, pages 428-434. ISBN: 978-989-758-402-2; ISSN: 2184-4321. Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

Therefore, in order to enhance the security of iris recognition systems, liveness detection methods have been suggested and can be categorized into software-based and hardware-based techniques. In software-based techniques, spoof attacks are detected on a sample that has already been acquired with a standard sensor. These techniques also have the advantage of being less expensive and less intrusive to the user.

In hardware-based techniques, physical characteristics of the iris are measured, such as the behavior of the pupil under different light conditions. Although this approach usually presents a higher detection rate, it has the disadvantage of being expensive and requiring intervention at the device level (Galbally et al., 2012).

To evaluate the efficiency of liveness detection algorithms, the first international iris liveness contest was introduced in 2013 by Clarkson University, Notre Dame University and Warsaw University of Technology. The third contest was launched in 2017 and included the combined dataset from West Virginia University and IIIT-Delhi¹.

The competition was open to all industrial and academic institutions, and three software-based algorithms were submitted for evaluation. They were evaluated on four datasets of live and spoof iris images, the latter represented by printed iris images, patterned contact lenses, and printouts of patterned contact lenses. The CASIA algorithm, from the Chinese Academy of Sciences, used a Cascade SpoofNet for iris liveness detection and obtained the second-best results, with a combined error rate of 10.68%, an APCER of 11.88% and a BPCER of 9.47%, while the winning team, an anonymous submission, presented an APCER of 14.71% and a BPCER of 3.36% (Yambay et al., 2017).
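The combined error rate quoted for CASIA is consistent with the arithmetic mean of its APCER and BPCER. The short check below assumes that definition, which is not spelled out in the text above; the helper name `combined_error_rate` is hypothetical.

```python
def combined_error_rate(apcer: float, bpcer: float) -> float:
    """Mean of the two error rates, in percent (assumed definition)."""
    return (apcer + bpcer) / 2.0


# APCER and BPCER figures quoted above for the 2017 competition.
casia = combined_error_rate(11.88, 9.47)
winner = combined_error_rate(14.71, 3.36)
print(f"CASIA: {casia:.3f}%  winner: {winner:.3f}%")
```

Under this assumption CASIA comes out at about 10.675%, in line with the reported 10.68%, and the winning submission at about 9.035%.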

In this paper, a parameter tuning of the CASIA SpoofNet is proposed. Through experiments, the optimal values of batch size, number of epochs, loss function, learning rate, and weight decay were selected. The suggested changes achieved better results overall, with the exception of the Notre Dame dataset evaluation, where the modified algorithm presented a higher APCER.

The rest of the paper is structured as follows. In Section 2, other iris spoofing techniques are reviewed. In Section 3, the databases used in our experiments are described. In Section 4, the proposed methodology is detailed. Experimental results are described and discussed in Section 5. Conclusions are finally drawn in Section 6.

¹ However, we could not reach the authors to obtain this last dataset.

2 RELATED WORK

Over the past few years, the vulnerability of iris authentication systems to spoofing techniques has been widely researched. In 2016, Kohli et al. (Kohli et al., 2016) proposed a spoofing detection technique based on the textural analysis of iris images. The method presents a unified framework with feature-level fusion that combines multi-order Zernike moments, which encode variations in the structure of the image, with the Local Binary Pattern Variance (LBPV), used for representing textural changes. In (Pacut and Czajka, 2006), the authors introduced three solutions for detecting printed eye images, based on the analysis of the image frequency spectrum, controlled light reflection from the cornea, and the behavior of the pupil under varying light conditions. In (Lee et al., 2006), one may find a method using a collimated IR-LED (Infra-Red Light Emitting Diode), where the theoretical positions and distances between the Purkinje images are calculated based on a human eye model. Both techniques depend on the resolution of the hardware device, and the one in (Pacut and Czajka, 2006) also requires the cooperation of the subject.

Recent works include Silva et al. (Silva et al., 2015), who introduced a deep learning technique. Deep learning has been evolving and showing promising results in several computer vision problems, such as pedestrian detection (Ouyang and Wang, 2013), digit recognition (Laroca et al., 2018; Laroca et al., 2019a; Laroca et al., 2019b) and face recognition (O. M. Parkhi and Zisserman, 2015).


The approach of Silva et al. (2015) addresses a three-class image detection problem (textured contact lenses, soft contact lenses, and no lenses), and uses a convolutional network to build a deep image representation, followed by a single fully-connected layer with softmax regression for classification.

3 DATASETS

In this section, the databases used in the experiments are described. All of them are publicly available upon request and were used in the Iris Liveness Detection competition of 2017. The images in the databases are grayscale, with dimensions of 640 × 480 pixels. Additional details are presented in the following subsections.

3.1 Clarkson Dataset

The Clarkson dataset consists of three sections of images captured by an LG IrisAccess EOU2200 camera. The first and second sections are live and patterned contact lens iris images, and the third is composed of printouts of live NIR iris images as well as printouts created from visible-light images of the eye captured with an iPhone 5.

The training set comprises a total of 4937 images: 2469 live images from 25 subjects, 1346 printed images from 13 subjects, and 1122 patterned contact lens images from 5 subjects. The testing set includes additional unknown data and consists of 4066 images: 908 printed images, 765 patterned contact lens images, and 1485 live iris images. In Figure 2, we present some examples of live and fake images.

Figure 2: Clarkson Dataset: In the first and second columns we present real and fake images, respectively.

3.2 Warsaw Dataset

The Warsaw dataset consists of authentic iris images acquired by the IrisGuard AD 100 sensor and their corresponding paper printouts. The training set includes 4513 images: 1844 live images from 322 distinct eyes and 2669 images of the corresponding paper printouts. The testing set is composed of two subsets: known and unknown spoof images.

Figure 3: Warsaw Dataset: In the first and second rows we present real and fake images, respectively.

The known spoofs subset includes 974 live iris images acquired from 50 distinct eyes and 2016 images of the corresponding printouts. The unknown spoofs subset includes 2350 live iris images acquired from 98 distinct eyes and 2160 images of the corresponding printouts. In Figure 3, we present some examples of live and fake images.

3.3 IIITD-WVU Dataset

The IIITD-WVU dataset consists of samples acquired using the IriShield MK2120U mobile iris sensor in two different acquisition environments: indoors (controlled illumination) and outdoors (varying environmental situations).

The training subset comprises 6250 images, combining 2250 images of authentic irises and 4000 attack iris images, including textured contact lens iris images, printouts of live iris images, and printouts of contact lens iris images. The testing subset includes 4209 iris images: 702 live iris images and 3507 attack iris images, combining textured contact lens iris images, printouts of live iris images, and printouts of contact lens iris images. In Figure 5, we present some examples of live and fake images.

3.4 Notre Dame Dataset

The Notre Dame dataset consists of samples acquired

by LG 4000 and AD 100 sensors. The training subset

VISAPP 2020 - 15th International Conference on Computer Vision Theory and Applications

430

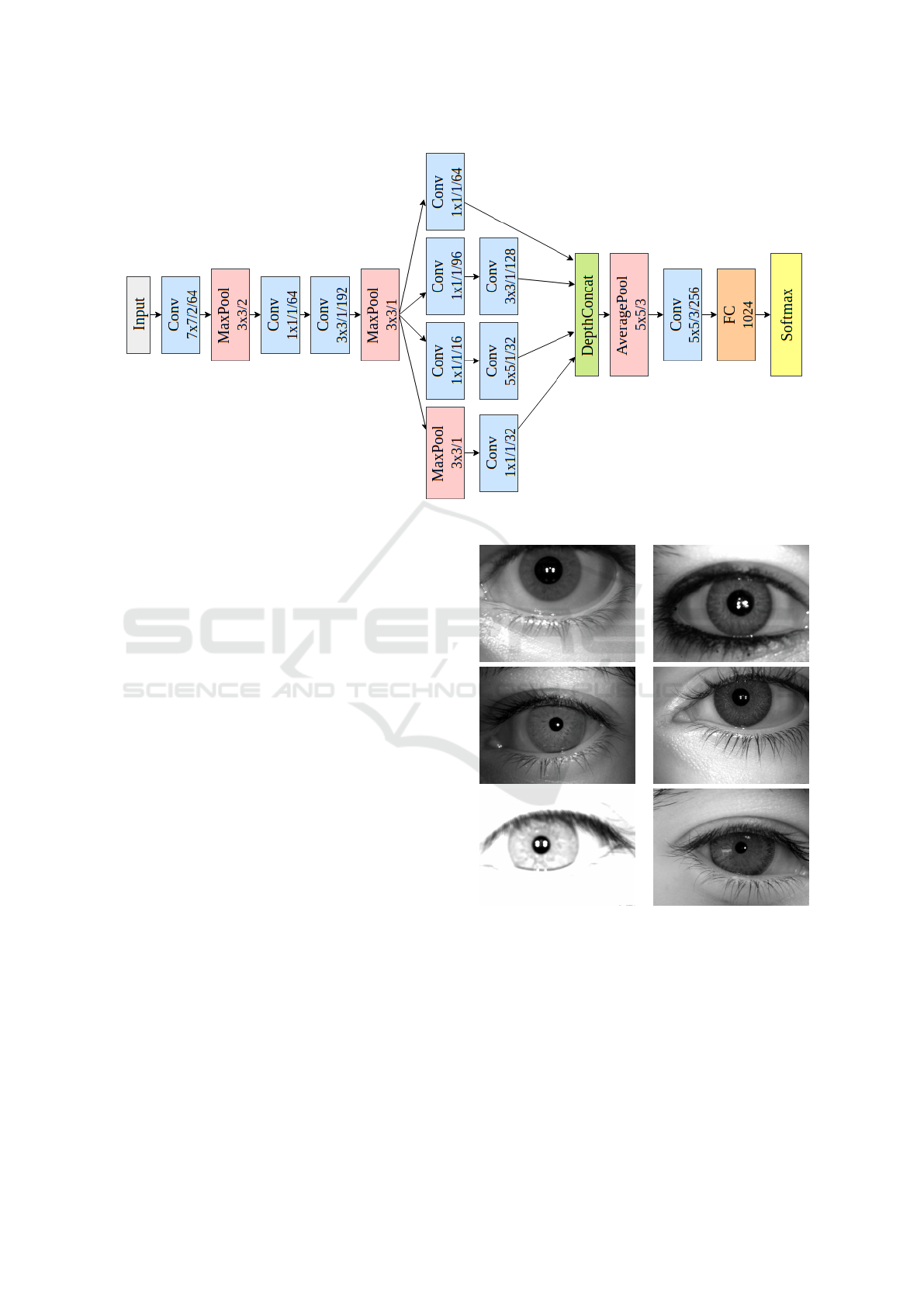

Figure 4: CASIA SpoofNet architecture.

consists of 600 images of authentic irises and 600 im-

ages of textured contact lenses manufactured by Ciba,

UCL, and ClearLab. The testing subset is split into

known spoofs and unknown spoofs.

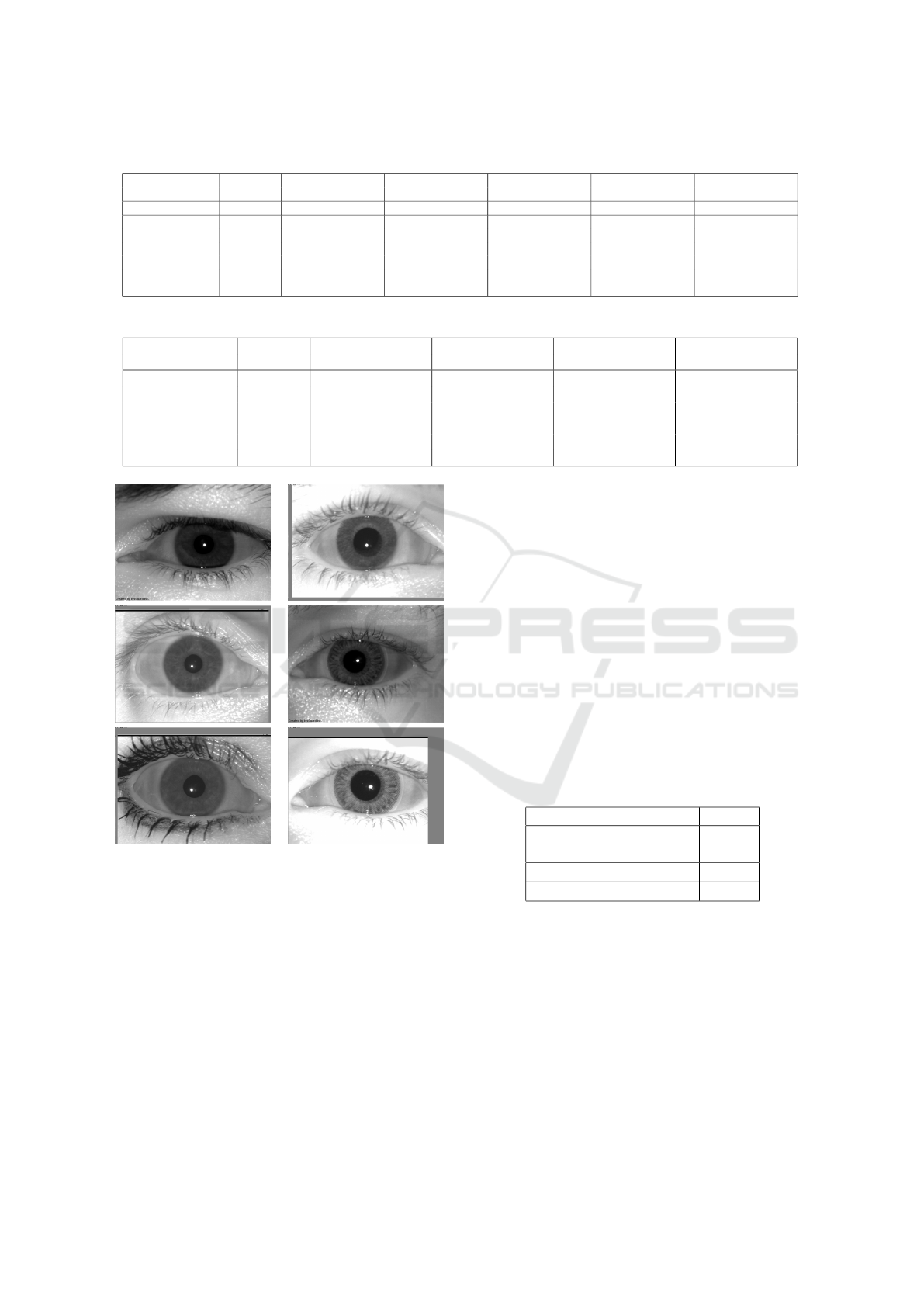

The known spoofs subset includes 900 images of textured contact lenses and 900 images of authentic irises. The unknown spoofs subset includes 900 images of textured contact lenses and 900 images of authentic irises. In Figure 6, we present some examples of live and fake images.

4 PROPOSED ALGORITHM

The CASIA algorithm submitted to the competition considers two types of iris spoofing: a printed iris and an iris with a printed contact lens. Since it is difficult to identify printed irises of poor quality, printed irises and irises with contact lenses are handled separately. To this end, the CASIA algorithm uses a Cascade SpoofNet that combines two convolutional neural networks in sequence, SpoofNet-1 and SpoofNet-2. SpoofNet-1 aims to distinguish printed from non-printed iris images. If the input iris image is classified as a live sample, the iris is located and the re-scaled iris image is passed to SpoofNet-2, which decides whether the sample is a live iris or a contact lens.
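The two-stage decision described above can be sketched as plain control flow. This is only an illustration: the three callables are hypothetical stand-ins for SpoofNet-1, the iris localization step, and SpoofNet-2, and the label strings are assumptions rather than anything defined by the CASIA submission.

```python
from typing import Any, Callable

Image = Any  # placeholder type for an image array


def cascade_predict(
    image: Image,
    is_printed: Callable[[Image], bool],       # stands in for SpoofNet-1
    locate_iris: Callable[[Image], Image],     # returns the re-scaled iris crop
    is_contact_lens: Callable[[Image], bool],  # stands in for SpoofNet-2
) -> str:
    """Classify an image as 'printed', 'contact_lens' or 'live'."""
    # Stage 1: printed images are rejected before any localization happens.
    if is_printed(image):
        return "printed"
    # Stage 2: only non-printed images reach the second network.
    iris = locate_iris(image)
    if is_contact_lens(iris):
        return "contact_lens"
    return "live"


# Usage with trivial stubs in place of the trained networks.
label = cascade_predict("eye.png", lambda im: False, lambda im: im, lambda im: False)
print(label)
```

The point of the cascade is that the second network only ever sees images that the first one did not flag as printed.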

Figure 5: IIITD-WVU Dataset: In the first and second rows we present real and fake images, respectively.

Both SpoofNets are based on GoogLeNet (Szegedy et al., 2015) and consist of four convolutional layers and one inception module. The inception module is composed of layers of convolutional filters of dimension 1 × 1, 3 × 3 and 5 × 5, executed in parallel. It has the advantage of reducing the complexity and improving the efficiency of the architecture, since the 1 × 1 filters help reduce the number of features before the convolution layers with larger filters are executed. The proposed architecture and its parameters (patch size/stride/filter dimension) are presented in Figure 4.
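To make the effect of the 1 × 1 filters concrete, the sketch below counts the weights of a 5 × 5 convolution applied directly versus one preceded by a 1 × 1 reduction. The channel counts are hypothetical and chosen only for illustration; they are not the values used in SpoofNet.

```python
def conv_params(kernel: int, in_ch: int, out_ch: int) -> int:
    """Weights plus biases of a kernel x kernel convolution layer."""
    return kernel * kernel * in_ch * out_ch + out_ch


# Hypothetical channel counts, not taken from the SpoofNet architecture.
IN_CH, MID_CH, OUT_CH = 192, 16, 32

# 5x5 convolution applied directly to all input channels.
direct = conv_params(5, IN_CH, OUT_CH)
# 1x1 reduction to MID_CH channels, followed by the 5x5 convolution.
bottleneck = conv_params(1, IN_CH, MID_CH) + conv_params(5, MID_CH, OUT_CH)

print(direct, bottleneck)
```

With these numbers the direct layer needs 153632 parameters and the reduced path 15920, roughly a tenfold saving.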

Table 1: Competition comparison - Error rates by dataset.

| Algorithm | Threshold | Clarkson APCER | Clarkson BPCER | Warsaw APCER | Warsaw BPCER | IIITD-WVU APCER | IIITD-WVU BPCER | Notre Dame APCER | Notre Dame BPCER | Combined APCER | Combined BPCER |
|---|---|---|---|---|---|---|---|---|---|---|---|
| CASIA | 50 | 9.61% | 5.65% | 3.40% | 8.60% | 23.16% | 16.10% | 11.30% | 7.56% | 11.88% | 9.47% |
| Method Proposed | 30 | 5.49% | 0.00% | 0.43% | 2.94% | 0.62% | 31.9% | 23.33% | 0.27% | 9.63% | 1.49% |
| Method Proposed | 40 | 4.96% | 0.00% | 0.23% | 3.82% | 0.51% | 34.61% | 20.44% | 0.72% | 8.71% | 1.90% |
| Method Proposed | 50 | 4.18% | 0.00% | 0.14% | 4.60% | 0.34% | 36.89% | 18.05% | 0.94% | 7.93% | 2.32% |
| Method Proposed | 70 | 3.16% | 0.06% | 0.02% | 6.70% | 0.11% | 41.31% | 13.30% | 1.40% | 6.56% | 3.37% |
| Method Proposed | 80 | 2.57% | 0.20% | 0.02% | 8.40% | 0.02% | 43.58% | 10.20% | 2.20% | 5.74% | 4.15% |
| Method Proposed | 90 | 1.79% | 0.47% | 0.00% | 9.05% | 0.0% | 49.85% | 6.83% | 3.20% | 4.80% | 5.64% |

Table 2: Cross-dataset experiment - Error rates by dataset.

| Algorithm | Threshold | Clarkson APCER | Clarkson BPCER | Warsaw APCER | Warsaw BPCER | IIITD-WVU APCER | IIITD-WVU BPCER | Notre Dame APCER | Notre Dame BPCER |
|---|---|---|---|---|---|---|---|---|---|
| Method Proposed | 30 | 37.0% | 0.0% | 0.0% | 66.6% | 41.3% | 0.7% | 30.2% | 1.6% |
| Method Proposed | 40 | 35.2% | 0.0% | 0.0% | 67.9% | 37.2% | 0.9% | 27.7% | 2.4% |
| Method Proposed | 50 | 33.0% | 0.0% | 0.0% | 70.0% | 34.1% | 1.1% | 25.8% | 2.6% |
| Method Proposed | 70 | 28.6% | 0.0% | 0.0% | 75.0% | 26.9% | 2.3% | 20.9% | 3.4% |
| Method Proposed | 80 | 25.8% | 0.1% | 0.0% | 77.8% | 22.4% | 3.1% | 18.2% | 3.6% |
| Method Proposed | 90 | 22.5% | 0.1% | 0.1% | 81.4% | 16.1% | 4.8% | 14.6% | 4.6% |

Figure 6: Notre Dame Dataset: In the first and second rows we present real and fake images, respectively.

In this paper, the iris in each image was located manually with bounding boxes, using the graphical image annotation tool LabelImg. The model was trained on 80% of the training images and their corresponding iris images. The remaining 20% were used as the validation subset. In addition, the model was parameterized based on the analysis of empirical experiments.
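An 80/20 split of this kind can be sketched as follows. The text does not say whether the split was random, stratified, or grouped by subject, so the seeded random shuffle and the helper name `split_train_val` are assumptions made purely for illustration.

```python
import random


def split_train_val(items, val_fraction=0.2, seed=0):
    """Shuffle a copy of items and return (train, validation) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    n_val = round(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]


# Example: image indices for a training set of 4937 images (the Clarkson size).
train, val = split_train_val(range(4937))
print(len(train), len(val))
```

A per-subject or per-class split would be a reasonable alternative when the same eye appears in many images.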

During training, many hyperparameters can influence the effectiveness of the resulting model. Too many epochs might overfit the training data, while too few may not give the network enough time to learn good parameters. To monitor the model's performance, the EarlyStopping callback from the Keras API was used, with binary cross-entropy as the loss function. The patience parameter was set to 5, and the model was trained for a maximum of 20 epochs with a batch size of 8.
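The role of the patience parameter can be illustrated with a small pure-Python sketch. It is a simplified model of early stopping, not the Keras implementation, and it assumes the monitored quantity is the validation loss, which the text does not state explicitly.

```python
def stopping_epoch(val_losses, patience=5):
    """Return the 1-based epoch at which training would stop.

    Training stops once the loss has failed to improve on its best value
    for `patience` consecutive epochs; otherwise every epoch is run.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses)


# Made-up losses: the best value is at epoch 3, then five epochs without improvement.
losses = [0.90, 0.70, 0.60, 0.61, 0.62, 0.63, 0.64, 0.65, 0.50]
print(stopping_epoch(losses, patience=5))
```

In this example training halts at epoch 8, so the lower loss that would have appeared at epoch 9 is never reached; a larger patience trades training time for the chance to recover from such plateaus.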

The learning rate hyperparameter controls the speed at which the model learns. Specifically, it controls how much of the estimated error is used to update the weights of the model at each step. The lower the value, the more slowly the model learns. Typical values of the learning rate are less than 1 and greater than $10^{-6}$ (Goodfellow et al., 2016). In this work, training performed best with a learning rate of $10^{-5}$.

Finally, to reduce overfitting and improve the performance of the model on new data, the default value of weight decay was changed to $10^{-4}$ and a dropout of 0.2 was employed for regularization. The main optimal parameters are summarized in Table 3.

Table 3: SpoofNet Hyperparameters Tuning.

| Parameter | Value |
|---|---|
| Number of epochs (max) | 20 |
| Batch size | 8 |
| Learning rate | $10^{-5}$ |
| Weight decay | $10^{-4}$ |
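To show how the learning rate and weight decay values in Table 3 enter a weight update, the sketch below applies one step of plain stochastic gradient descent with L2 weight decay. The paper does not name the optimizer, so plain SGD is an assumption here, and the weights and gradients are made-up numbers.

```python
def sgd_step(weights, grads, learning_rate=1e-5, weight_decay=1e-4):
    """One plain SGD update with L2 weight decay: w <- w - lr * (g + wd * w)."""
    return [
        w - learning_rate * (g + weight_decay * w)
        for w, g in zip(weights, grads)
    ]


# Made-up weights and gradients, for illustration only.
weights = [1.0, -2.0]
grads = [0.5, 0.0]
updated = sgd_step(weights, grads)
print(updated)
```

With a learning rate this small each step changes the weights only slightly, and the decay term pulls a weight toward zero even when its gradient is zero, as for the second weight above.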

5 PERFORMANCE EVALUATION

The performance of the algorithm was evaluated based on the APCER, i.e., the rate of misclassified attack images, and the BPCER, i.e., the rate of misclassified live images. Both APCER and BPCER were calculated for each dataset individually, as well as for the combination of all datasets.
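The two rates can be computed from per-image liveness scores as sketched below. The sketch assumes scores in the range 0 to 100 where higher means more likely live, with a sample accepted as live when its score reaches the threshold; this convention is an assumption, and the scores in the example are made up.

```python
def apcer_bpcer(scores, is_attack, threshold=50):
    """Return (APCER, BPCER) in percent.

    scores    : liveness scores in [0, 100]; higher means more likely live
    is_attack : True for attack presentations, False for bona fide ones
    A sample is accepted as live when score >= threshold (assumed convention).
    Both classes must contain at least one sample.
    """
    attacks = [s for s, a in zip(scores, is_attack) if a]
    bona_fide = [s for s, a in zip(scores, is_attack) if not a]
    # APCER: attack samples wrongly accepted as live.
    apcer = 100.0 * sum(s >= threshold for s in attacks) / len(attacks)
    # BPCER: live samples wrongly rejected as attacks.
    bpcer = 100.0 * sum(s < threshold for s in bona_fide) / len(bona_fide)
    return apcer, bpcer


# Made-up scores: three attack images followed by three live images.
scores = [10, 60, 30, 80, 95, 65]
is_attack = [True, True, True, False, False, False]
print(apcer_bpcer(scores, is_attack, threshold=50))
print(apcer_bpcer(scores, is_attack, threshold=70))
```

Raising the threshold from 50 to 70 in this toy example removes the one accepted attack but rejects one live image, which is the same direction of trade-off discussed for the real datasets.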

Table 1 shows the error rates obtained by both the CASIA algorithm and the proposed modified CASIA algorithm, in the evaluation of the datasets individually and combined.

In the competition, the threshold value for determining liveness was set at 50. Comparing the error rates of the two algorithms, the proposed method performed better overall, with the only exception being the evaluation of the Notre Dame dataset, where the proposed method presented a higher APCER. In addition, the Clarkson dataset showed the lowest error rates, with 4.18% APCER and 0% BPCER.

The presence of unknown spoofing attacks in the testing subsets was the main difficulty during the evaluation. Examining the error rates of the proposed method in Table 1, a significant trade-off between APCER and BPCER is noticeable, since the model is not able to accurately classify real and unknown attack variations.

Aiming to mitigate this trade-off, different threshold values were evaluated. Table 1 shows the error rates of the proposed model for thresholds of 30, 40, 70, 80 and 90.

Thresholds from 70 to 90 gradually reduced the gap between the error rates, lowering the APCER and raising the BPCER, for the Notre Dame and Clarkson datasets and for all the datasets combined. They did not have the same effect on the Warsaw dataset, where they increased both the BPCER and the gap between the error rates. For this dataset, thresholds of 30 and 40 had a better effect, increasing the APCER while reducing both the BPCER and its gap to the APCER. Note that the IIITD-WVU dataset yielded the worst BPCER among the compared datasets.
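One way to read the threshold sweep is to pick, from the combined columns of Table 1, the threshold with the smallest gap between APCER and BPCER, or the one with the lowest mean of the two. Both criteria are illustrative choices made here, not selection rules stated in the paper.

```python
# Combined (APCER, BPCER) of the proposed method in percent, copied from Table 1.
combined = {
    30: (9.63, 1.49),
    40: (8.71, 1.90),
    50: (7.93, 2.32),
    70: (6.56, 3.37),
    80: (5.74, 4.15),
    90: (4.80, 5.64),
}

# Threshold whose two error rates are closest to each other.
smallest_gap = min(combined, key=lambda t: abs(combined[t][0] - combined[t][1]))
# Threshold with the lowest average of the two error rates.
lowest_mean = min(combined, key=lambda t: sum(combined[t]) / 2)

print(smallest_gap, lowest_mean)
```

On these figures the gap is smallest at threshold 90 and the mean error is lowest at threshold 80, which agrees with the observation that higher thresholds balance the two rates on the combined data.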

We also performed a cross-dataset experiment, that is, we trained the model using the images of all datasets except the one on which it is evaluated. The goal here is to observe the generalization power of deep learning models and to verify how useful these models are in realistic scenarios where there is no data for a fine-tuning process. The results of this experiment are summarized in Table 2. As can be observed by comparing the figures in Table 2 and Table 1, the models learned using this cross-dataset scheme performed worse than the ones learned on their own data. The smallest loss of performance is observed on the Notre Dame dataset; even so, the APCER figures are not suitable for some real-world applications.
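The cross-dataset protocol amounts to a leave-one-dataset-out loop. The sketch below only enumerates the train/test pairings over dataset names; the function name is hypothetical, and loading the images and training the model are left out.

```python
DATASETS = ["Clarkson", "Warsaw", "IIITD-WVU", "Notre Dame"]


def leave_one_dataset_out(names):
    """Yield (training_names, test_name) pairs, holding out one dataset at a time."""
    for held_out in names:
        yield [n for n in names if n != held_out], held_out


for train_names, test_name in leave_one_dataset_out(DATASETS):
    print(f"train on {train_names}, test on {test_name}")
```

Each of the four folds therefore evaluates on sensors and attack types that the model has never seen during training.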

6 CONCLUSION

In this paper, we proposed a hyperparameter tuning of the neural network presented by the CASIA algorithm, submitted to the Iris Liveness Detection competition of 2017. Most of the evaluated databases included unknown presentation attacks, which were the main difficulty for our model. The suggested modifications significantly reduced the APCER and BPCER for the combined datasets.

The Clarkson dataset showed the lowest error rates, with 4.18% APCER and 0% BPCER at a threshold of 50. In an attempt to reduce the trade-off between APCER and BPCER, different threshold values were evaluated. Thresholds from 70 to 90 worked well for the Clarkson and Notre Dame datasets and for all the datasets combined, gradually reducing the APCER. On the other hand, thresholds of 30 and 40 had a better effect on the Warsaw dataset, reducing the BPCER and its gap to the dataset's APCER.

ACKNOWLEDGMENTS

The authors would like to thank the Brazilian National Research Council – CNPq (Grants #313423/2017-2 and #428333/2016-8); the Foundation for Research of the State of Paraná (Fundação Araucária); the Coordination for the Improvement of Higher Education Personnel (CAPES) (Social Demand Program); and also acknowledge the support of NVIDIA Corporation with the donation of the Titan Xp GPU used for this research.

REFERENCES

Czajka, A. and Bowyer, K. W. (2018). Presentation attack detection for iris recognition: An assessment of the state-of-the-art. ACM Comput. Surv., 51(4):86:1–86:35.

Galbally, J., Ortiz-Lopez, J., Fierrez, J., and Ortega-Garcia, J. (2012). Iris liveness detection based on quality related features. In 2012 5th IAPR International Conference on Biometrics (ICB), pages 271–276.

Goodfellow, I., Bengio, Y., and Courville, A. (2016). Deep learning. MIT press.

Jain, A. K., Ross, A. A., and Nandakumar, K. (2011). Introduction to Biometrics 2011. Springer Science & Bus. Media.

Kohli, N., Yadav, D., Vatsa, M., Singh, R., and Noore, A. (2016). Detecting medley of iris spoofing attacks using DESIST. In 2016 IEEE 8th International Conference on Biometrics Theory, Applications and Systems (BTAS), pages 1–6.

Laroca, R., Barroso, V., Diniz, M. A., Gonçalves, G. R., Schwartz, W. R., and Menotti, D. (2019a). Convolutional neural networks for automatic meter reading. Journal of Electronic Imaging, 28(1):1–14. DOI: 10.1117/1.JEI.28.1.013023.

Laroca, R., Severo, E., Zanlorensi, L. A., Oliveira, L. S., Gonçalves, G. R., Schwartz, W. R., and Menotti, D. (2018). A robust real-time automatic license plate recognition based on the YOLO detector. In 2018 International Joint Conference on Neural Networks (IJCNN), pages 1–10. DOI: 10.1109/IJCNN.2018.8489629.

Laroca, R., Zanlorensi, L. A., Gonçalves, G. R., Todt, E., Robson Schwartz, W., and Menotti, D. (2019b). An efficient and layout-independent automatic license plate recognition system based on the YOLO detector. arXiv:1909.01754.

Lee, E. C., Park, K. R., and Kim, J. (2006). Fake iris detection by using Purkinje image. In Proceedings of the 2006 International Conference on Advances in Biometrics, ICB'06, pages 397–403, Berlin, Heidelberg. Springer-Verlag.

Menotti, D., Chiachia, G., Pinto, A., Schwartz, W. R., Pedrini, H., Falcão, A. X., and Rocha, A. (2015). Deep representations for iris, face, and fingerprint spoofing detection. IEEE Transactions on Information Forensics and Security, 10(4):864–879.

O. M. Parkhi, A. V. and Zisserman, A. (2015). Deep face recognition. In British Machine Vision Conference (BMVC), volume 1.

Ouyang, W. and Wang, X. (2013). Joint deep learning for pedestrian detection. In 2013 IEEE International Conference on Computer Vision (ICCV), pages 2056–2063.

Pacut, A. and Czajka, A. (2006). Aliveness detection for iris biometrics. In Proceedings 40th Annual 2006 International Carnahan Conference on Security Technology, pages 122–129.

Pala, F. and Bhanu, B. (2017). Iris liveness detection by relative distance comparisons. In 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pages 664–671.

Sajjad, M., Khan, S., Hussain, T., Muhammad, K., Sangaiah, A. K., Castiglione, A., Esposito, C., and Baik, S. W. (2019). CNN-based anti-spoofing two-tier multi-factor authentication system. Pattern Recognition Letters, 126:123–131. Robustness, Security and Regulation Aspects in Current Biometric Systems.

Silva, P., Luz, E., Baeta, R., Pedrini, H., Falcão, A. X., and Menotti, D. (2015). An approach to iris contact lens detection based on deep image representations. In 2015 28th SIBGRAPI Conference on Graphics, Patterns and Images, pages 157–164.

Szegedy, C., Wei Liu, Yangqing Jia, Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V., and Rabinovich, A. (2015). Going deeper with convolutions. In 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 1–9.

Tisse, C.-L., Martin, L., Torres, L., and Robert, M. (2002). Person identification technique using human iris recognition. In 15th International Conference on Vision Interface (VI2002), Canadian Image Processing and Pattern Recognition Society (CIPPRS), volume 294, pages 294–299.

Tolosana, R., Gomez-Barrero, M., Busch, C., and Ortega-Garcia, J. (2020). Biometric presentation attack detection: Beyond the visible spectrum. IEEE Transactions on Information Forensics and Security, 15:1261–1275.

Toosi, A., Cumani, S., and Bottino, A. (2017). CNN patch-based voting for fingerprint liveness detection. In Proceedings of the 9th International Joint Conference on Computational Intelligence (IJCCI), pages 158–165.

Yambay, D., Becker, B., Kohli, N., Yadav, D., Czajka, A., Bowyer, K. W., Schuckers, S., Singh, R., Vatsa, M., Noore, A., Gragnaniello, D., Sansone, C., Verdoliva, L., He, L., Ru, Y., Li, H., Liu, N., Sun, Z., and Tan, T. (2017). LivDet Iris 2017 - iris liveness detection competition 2017. In 2017 IEEE International Joint Conference on Biometrics (IJCB), pages 733–741.
