Risk Identification: From Requirements to Threat Models

Roman Wirtz and Maritta Heisel

University of Duisburg-Essen, Germany

Keywords:

Risk Management, Security, Risk Identification, Threats, Requirements Engineering.

Abstract:

Security is a key factor for providing high-quality software. In the last few years, a significant number of security incidents have been reported. Considering scenarios that may lead to such incidents right from the beginning

of software development, i.e. during requirements engineering, reduces the likelihood of such incidents signif-

icantly. Furthermore, the early consideration of security reduces development effort since identified scenarios

do not need to be fixed in later stages of the development lifecycle. Currently, the identification of possible

incident scenarios requires high expertise from security engineers and is often performed in brainstorming

sessions. Those sessions often lack a systematic process which can lead to overlooking relevant aspects. Our

aim is to bring together security engineers and requirements engineers. In this paper, we propose a system-

atic, tool-based and model-based method to identify incident scenarios based on functional requirements by

following the principle of security-by-design. Our method consists of two parts: First, we enhance the initial

requirements model with necessary domain knowledge, and second we systematically collect relevant scenar-

ios and further refine them. For all steps, we provide validation conditions to detect errors as early as possible

when carrying out the method. The final outcome of our method is a CORAS threat model that contains the

identified scenarios in relation with the requirements model.

1 INTRODUCTION

Security is a key factor for providing high-quality

software. In the last few years, a significant number

of security incidents have been reported. Such incidents can lead to severe consequences for software

providers not only financially, but also in terms of rep-

utation loss. By following the principle of security-

by-design and considering security right from the be-

ginning of a software development lifecycle, the con-

sequences for software providers can be reduced sig-

nificantly. Besides, the early consideration of security

reduces development effort since identified security

aspects do not need to be fixed in the later stages of

the development lifecycle.

A central aspect for providing secure software is

the identification of scenarios that may lead to harm

for a stakeholder’s asset, i.e. a piece of value for that

stakeholder. The identification is an essential part of

risk management activities and requires high exper-

tise from security engineers. Risk management deals

with the identification and treatment of incidents in

an effective manner by prioritizing them according to

their risk level. The level can be defined by an inci-

dent’s likelihood and its consequence for an asset (In-

ternational Organization for Standardization, 2018).

Currently, development teams often perform brain-

storming sessions for that purpose, in which software

engineers and security engineers come together and

share their specific expertise. Those sessions often

lack a systematic process which can lead to overlook-

ing relevant aspects.

We aim to bring together security engineers and

requirements engineers by providing systematic guid-

ance in identifying relevant incident scenarios as early

as possible, i.e. during requirements engineering. In

previous work (Wirtz et al., 2018), we described a

risk management process which combines problem

frames with CORAS. In this method, the identification of threats relies on external knowledge and lacks systematization. To address this issue, we further proposed a template to specify threats based on

the Common Vulnerability Scoring System (Wirtz and

Heisel, 2018; FIRST.org, 2015). The template uses

problem diagrams to relate specified threats to functional requirements. The method we introduced to exemplify the usage of the template lacks a process to identify relations between different threats.

Wirtz, R. and Heisel, M.
Risk Identification: From Requirements to Threat Models.
DOI: 10.5220/0008935803850396
In Proceedings of the 6th International Conference on Information Systems Security and Privacy (ICISSP 2020), pages 385-396
ISBN: 978-989-758-399-5; ISSN: 2184-4356
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In the present paper, we present a method for systematically deriving CORAS threat diagrams (Lund et al., 2011) from the functional requirements of software to be developed. With our method, we focus on information security, and therefore we define a

piece of information as an asset to be protected with

regard to confidentiality, integrity, or availability. A

CORAS threat diagram describes scenarios that might

lead to harm for an asset concerning one of these

security properties. Our method follows a problem-

oriented approach. Therefore, we consider a problem

frames model as required input (Jackson, 2000). First,

we define security goals and elicit additional domain

knowledge, which we both document in a model. We

proceed with systematically identifying relevant sce-

narios based on the requirements model. The doc-

umented security goals help to focus on the relevant

aspects of the model. For the documentation, we use a

security model that is based on the CORAS language

(Lund et al., 2011). The last step of our method is

the refinement of the model, for which we inspect the

identified scenarios in more detail.

To support the application of our method, we provide a graphical tool. It allows users to document the results of the method in a model and provides different views

on the model instances. The model-based approach

ensures consistency and traceability. The tool guides

through the different steps of our method and supports

their execution, thus limiting the manual effort to ap-

ply the method. Furthermore, we define validation

conditions for each step which help to detect errors in

the application of the method as early as possible.

The remainder of the paper is structured as fol-

lows: In Section 2, we briefly introduce the underly-

ing concepts of this paper, i.e. problem frames and

CORAS, followed by a description of the underlying

models in Section 3. Section 4 contains our identifi-

cation method which we exemplify in Section 5. We

discuss related work in Section 6 and conclude our

paper with an outlook on future research directions in

Section 7.

2 BACKGROUND

For our method, we mainly consider two fundamental

concepts which we introduce in the following.

2.1 Problem Frames & Domain

Knowledge

To model functional requirements, we make use of the

problem frames approach as introduced by Michael

Jackson (Jackson, 2000). For modeling requirements,

we make use of problem diagrams which consist of

domains, phenomena, and interfaces. In previous

work, we proposed a notation based on Google Material Design¹, which provides a user-friendly way to illustrate the diagrams (Wirtz and Heisel, 2019).

Machine domains represent the piece of software to be developed.

Problem domains represent entities of the real world. There are different types: biddable domains with an unpredictable behavior, e.g. persons, causal domains with a predictable behavior, e.g. technical equipment, and lexical domains for data representation. A domain can take the role of a connection domain, connecting two other domains, e.g. user interfaces.

Interfaces between domains consist of phenom-

ena. There are symbolic phenomena, representing

some kind of information or a state, and causal phe-

nomena, representing events, actions, and commands.

Each phenomenon is controlled by exactly one do-

main and can be observed by other domains. A phe-

nomenon controlled by one domain and observed by

another is called a shared phenomenon between these

two domains. Interfaces (solid lines) contain sets of

shared phenomena. Such a set contains phenomena

controlled by one domain indicated by X!{...}, where

X stands for an abbreviation of the name of the con-

trolling domain.

A problem diagram contains a statement in the form of a functional requirement describing a specific functionality to be developed. A requirement is an optative statement which

describes how the environment should behave when

the software is installed.

Some phenomena are referred to by a requirement

(dashed line to controlling domain), and at least one

phenomenon is constrained by a requirement (dashed

line with arrowhead and italics). The domains and

their phenomena that are referred to by a requirement

are not influenced by the machine, whereas we build

the machine to influence the constrained domain’s

phenomena in such a way that the requirement is ful-

filled.

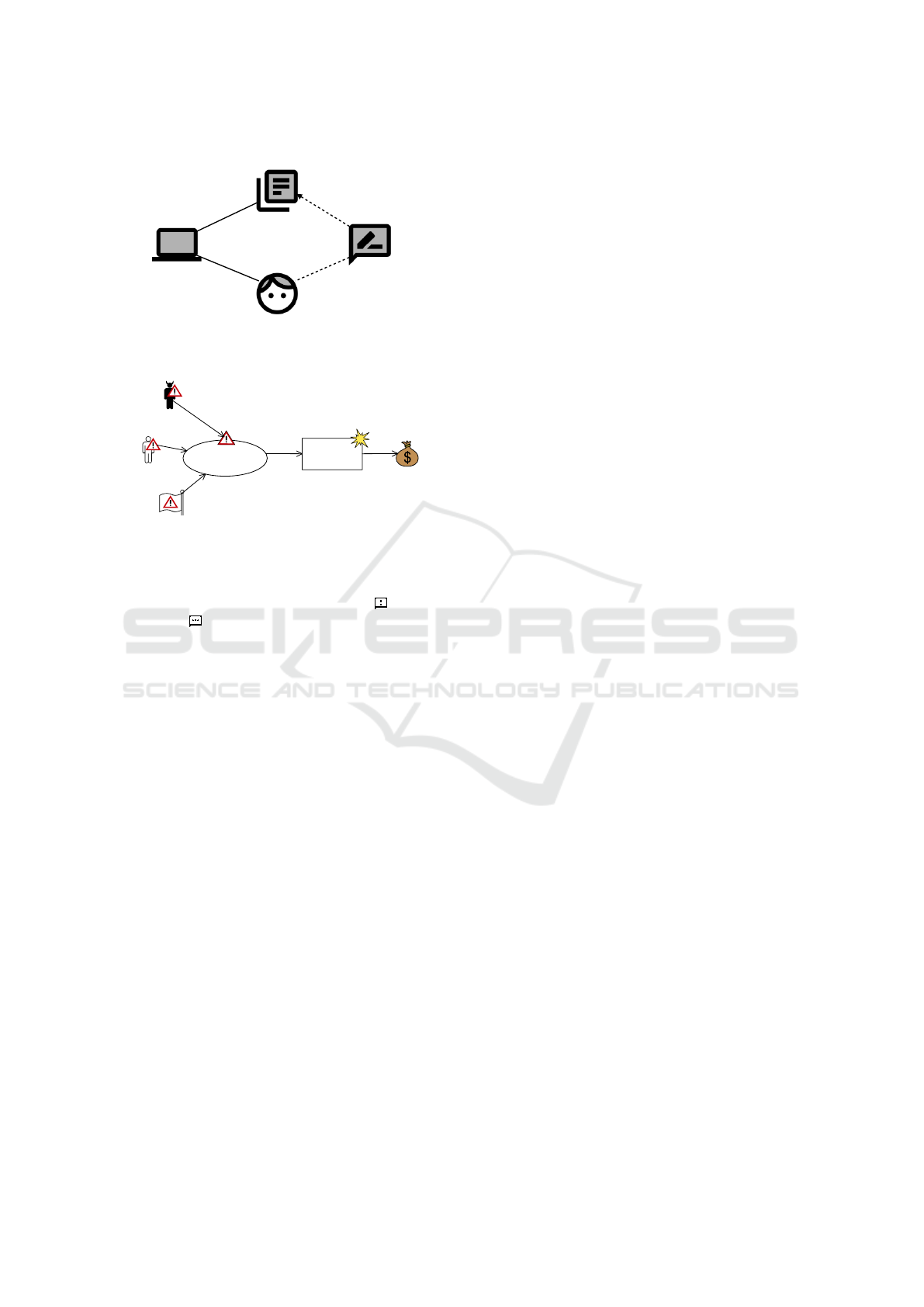

In Figure 1, we show a small example describing

a functional requirement for updating some informa-

tion. A Person provides information to Software

to be updated. We make use of a lexical domain In-

formation to illustrate a database. The functional

requirement Update refers to the phenomenon up-

dateInformation and constrains the phenomenon in-

formation.
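To make the vocabulary of problem diagrams more tangible, the example of Figure 1 could be encoded as a small data structure. The following is a minimal sketch in Python, not the metamodel of our tool: all class and attribute names are hypothetical, and we assume that the lexical domain Information controls the symbolic phenomenon information.

```python
from dataclasses import dataclass, field
from enum import Enum


class DomainType(Enum):
    MACHINE = "machine"
    BIDDABLE = "biddable"
    CAUSAL = "causal"
    LEXICAL = "lexical"


@dataclass(frozen=True)
class Domain:
    name: str
    kind: DomainType


@dataclass(frozen=True)
class Phenomenon:
    name: str
    symbolic: bool         # True: information or state; False: event, action, command
    controlled_by: Domain  # each phenomenon has exactly one controlling domain


@dataclass
class Requirement:
    name: str
    refers_to: list = field(default_factory=list)
    constrains: list = field(default_factory=list)

    def is_well_formed(self) -> bool:
        # A requirement has to constrain at least one phenomenon.
        return len(self.constrains) > 0


person = Domain("Person", DomainType.BIDDABLE)
software = Domain("Software", DomainType.MACHINE)
information_db = Domain("Information", DomainType.LEXICAL)

# Interface labels of Figure 1: P!{provideInformation} and S!{updateInformation}
provide_information = Phenomenon("provideInformation", False, person)
update_information = Phenomenon("updateInformation", False, software)
# Assumption: the lexical domain controls its own content.
information = Phenomenon("information", True, information_db)

update = Requirement(
    "Update", refers_to=[update_information], constrains=[information]
)
print(update.is_well_formed())
```

The check in `is_well_formed` mirrors the rule that at least one phenomenon is constrained by a requirement; a requirement without any constrained phenomenon would be rejected.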

In addition to problem diagrams, we make use

of domain knowledge diagrams which have a similar

syntax as problem diagrams. Such diagrams do not

contain any requirements, but indicative statements

1

Google Material - https://material.io (last access:

September 30, 2019)

ICISSP 2020 - 6th International Conference on Information Systems Security and Privacy

386

Update

Person

Software

Information

updateInformation

information

P!{provideInformation}

S!{updateInformation}

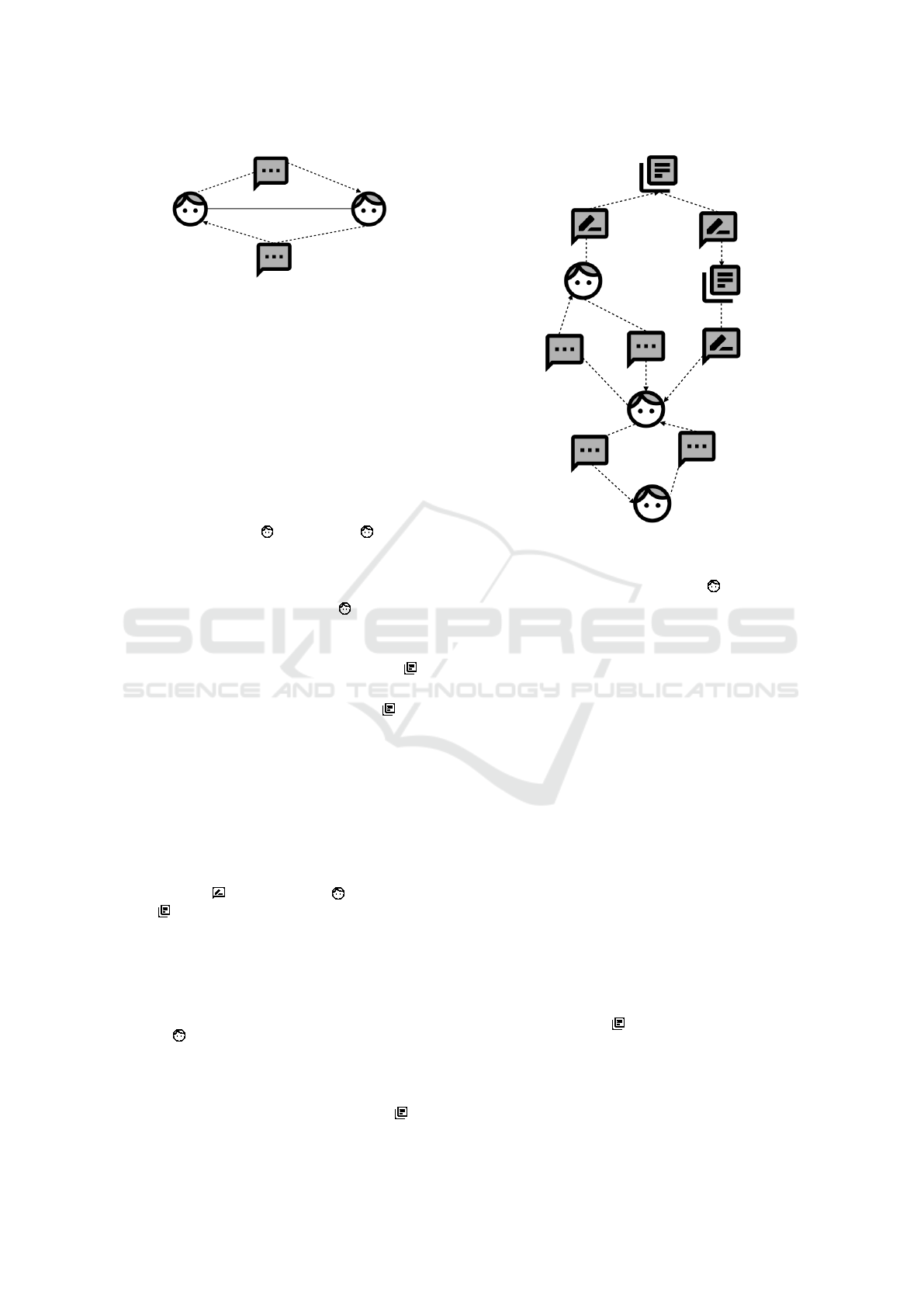

Figure 1: Example for Problem Diagram.

Human

Deliberate

Non-

Human

Unwanted

Incident

Human

Accidental

Asset

Threat

Scenario

ini�ates

leads

to

harms

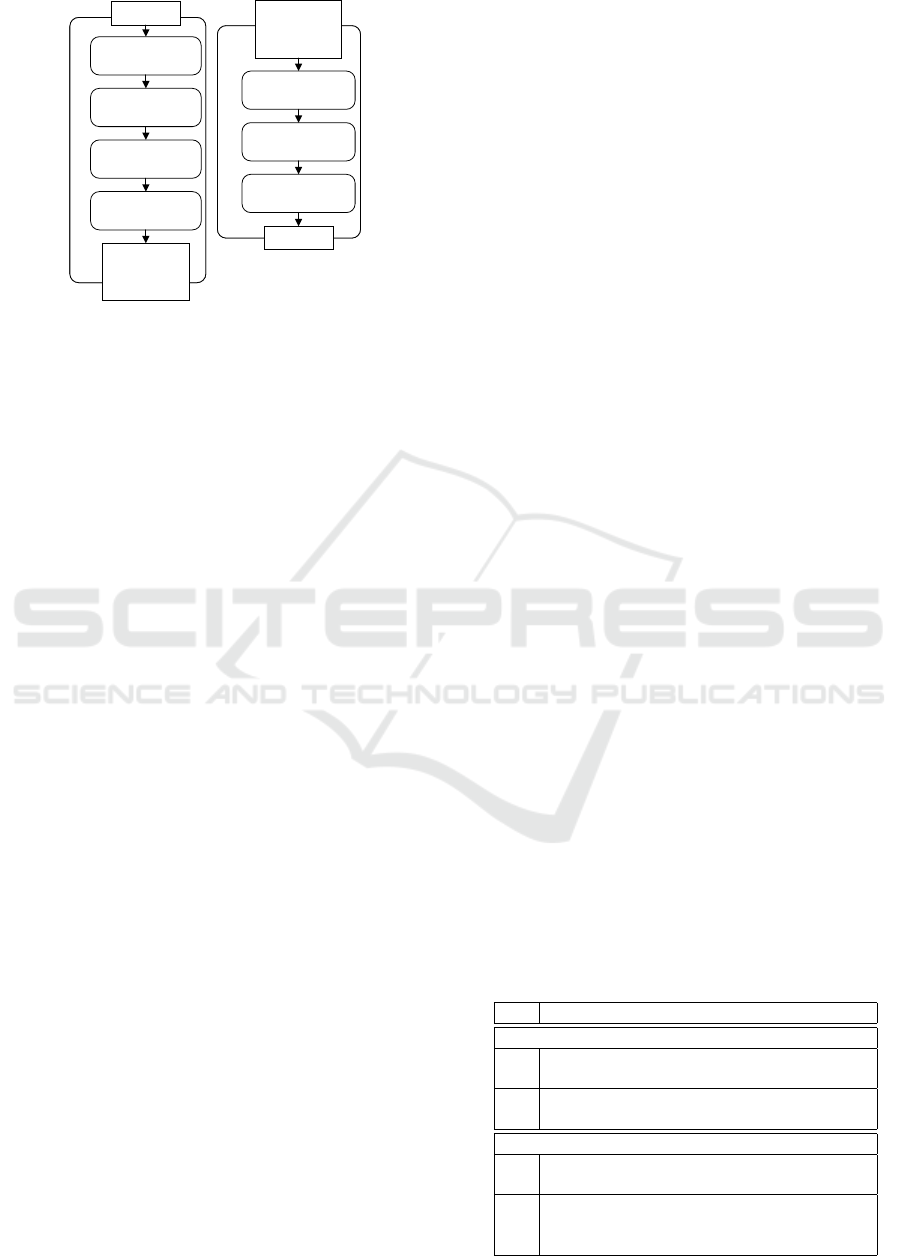

Figure 2: CORAS Threat Diagram.

which describe the environment. We distinguish two

types of those statements, namely facts ( ) and as-

sumptions ( ) (van Lamsweerde, 2009). As facts,

we consider fixed properties of the environment irre-

spective of how the machine is built. Assumptions

are conditions we have to rely on to be able to sat-

isfy the requirements. Similar to requirements, those

statements can refer to or constrain phenomena de-

scribing the expected behavior of domains according

to the domain knowledge.

2.2 CORAS

CORAS (Lund et al., 2011) is a model-based method

for risk management. It consists of a step-wise pro-

cess and different kinds of diagrams. The method fol-

lows the ISO 31000 risk-management standard (In-

ternational Organization for Standardization, 2018).

Each step provides guidelines for the interaction with

the customer on whose behalf the risk management

activities are carried out. The results are documented

in a model using the CORAS language. The method

starts with the establishment of the context and ends

up with the suggestion of treatments to address the

risk.

In our method, we use the CORAS language to

document incident scenarios that may lead to harm for an asset. The symbols we make use of

are shown in Figure 2. Direct Assets are items of

value. There are Human-threats deliberate, e.g. a

network attacker, as well as Human-threats acciden-

tal, e.g. an employee pressing a wrong button acci-

dentally. To describe technical issues there are Non-

human threats, e.g. malfunction of software. A threat

initiates a Threat scenario. A threat scenario de-

scribes a state, which may possibly lead to an un-

wanted incident, and an Unwanted incident describes

the action that actually harms an asset.
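The relations between the CORAS elements can be illustrated with a small graph structure. The following Python sketch is an assumption-laden illustration and not part of the CORAS tooling: the class `ThreatDiagram`, its attributes, and the example scenario are hypothetical. It stores the initiates, leads to, and harms relations and walks backwards from an asset to the threats that may harm it.

```python
from dataclasses import dataclass, field

THREAT_KINDS = ("human-deliberate", "human-accidental", "non-human")


@dataclass
class ThreatDiagram:
    threats: dict = field(default_factory=dict)  # threat name -> kind
    initiates: set = field(default_factory=set)  # (threat, threat scenario)
    leads_to: set = field(default_factory=set)   # (scenario, scenario or incident)
    harms: set = field(default_factory=set)      # (unwanted incident, asset)

    def add_threat(self, name: str, kind: str) -> None:
        if kind not in THREAT_KINDS:
            raise ValueError(f"unknown threat kind: {kind}")
        self.threats[name] = kind

    def threats_harming(self, asset: str) -> set:
        """Walk backwards from the asset to all threats that may harm it."""
        reached = {incident for incident, a in self.harms if a == asset}
        frontier = set(reached)
        while frontier:
            # Predecessors of the current frontier that were not visited yet.
            frontier = {s for s, t in self.leads_to if t in frontier} - reached
            reached |= frontier
        return {t for t, scenario in self.initiates if scenario in reached}


# Hypothetical example: a network attacker intercepts traffic.
diagram = ThreatDiagram()
diagram.add_threat("Network attacker", "human-deliberate")
diagram.initiates.add(("Network attacker", "Network traffic is intercepted"))
diagram.leads_to.add(
    ("Network traffic is intercepted", "Data is disclosed to attacker")
)
diagram.harms.add(("Data is disclosed to attacker", "Data"))
print(diagram.threats_harming("Data"))
```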

3 UNDERLYING MODELS

To document the results of our method, we follow

a model-based approach. We distinguish between a

problem frames model and a CORAS threat model.

For both models, we provide different views that show only parts of the model to help users of our method focus on relevant aspects, thus ensuring scalability.

Problem Frames Model. The problem frames

model contains domains, phenomena, and in-

terfaces to document and analyze statements,

i.e. requirements and domain knowledge (cf.

Section 2.1). With regard to our method, there are

three different views on the model: (i) Statement

diagrams to show selected requirements and do-

main knowledge; (ii) an information flow graph

(IFG) to analyze the information flow between

domains; and (iii) a statement graph to show the

relations between domains and statements.

Security Model. The security model holds a refer-

ence to the problem frames model to ensure trace-

ability and consistency. In the security model, we

document security goals for software under devel-

opment and identified security incidents using the

CORAS approach. For this reason, we provide

two views on the model: (i) an overview of the

defined security goals; and (ii) CORAS threat di-

agrams describing the derived scenarios.

The tool that we provide to support the application of our method makes use of these models, helps to maintain them, and helps to create graphical instances of the views.

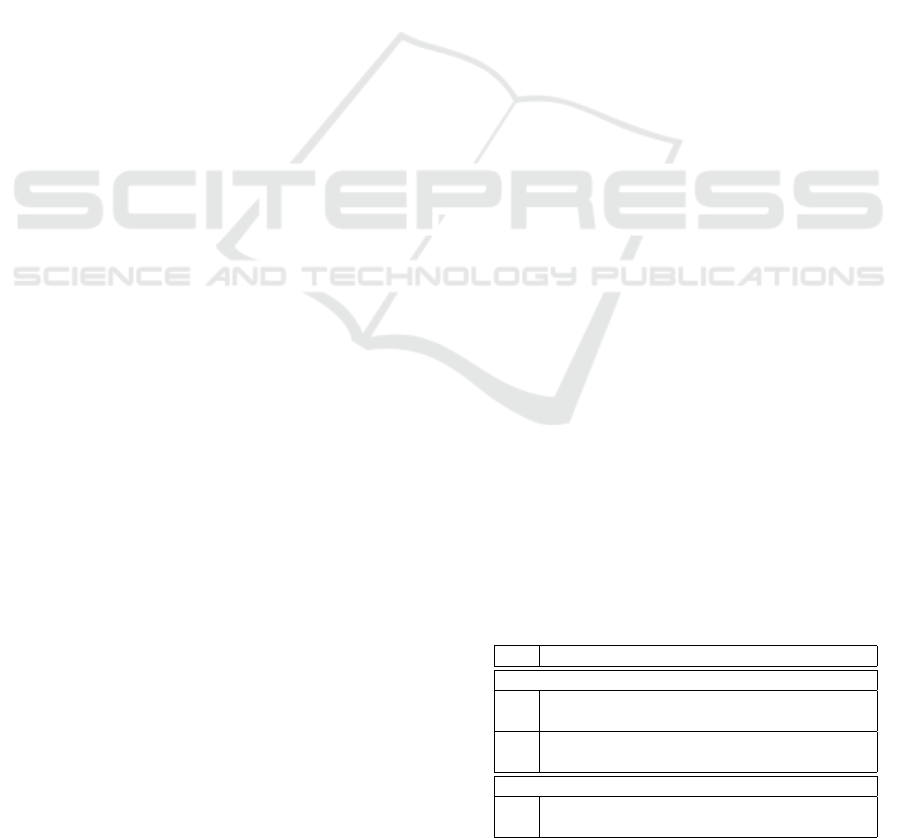

4 METHOD

Our method allows to systematically derive security

incidents from functional requirements. It consists of

two parts with different sub-steps, for which we pro-

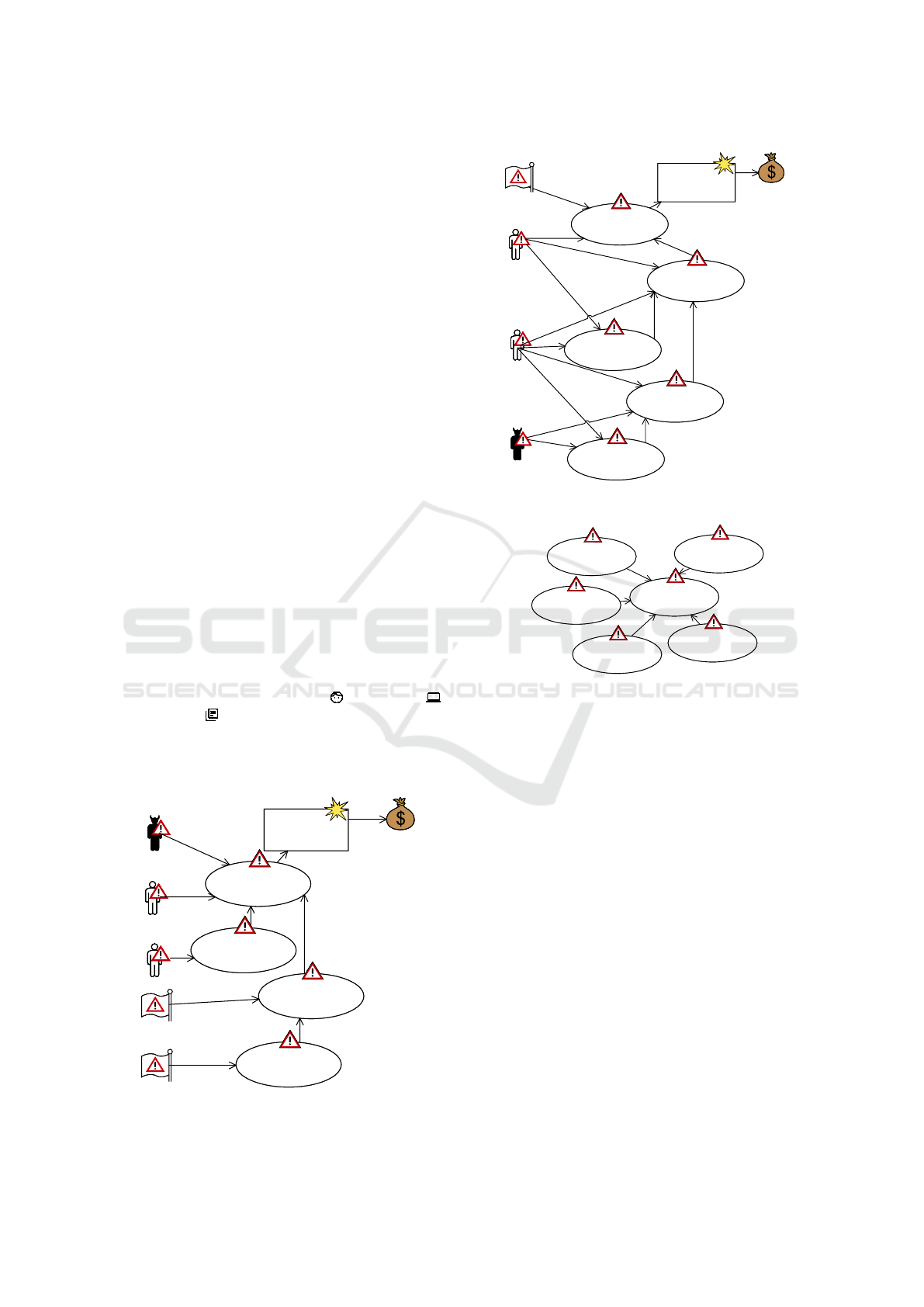

vide an overview in Figure 3. In the following, we

describe the steps in detail and state examples from a

growing set of validation conditions. Note, that some

conditions can be checked automatically using our

Risk Identification: From Requirements to Threat Models

387

(B) Incident Identification

(B.1) Define

Unwanted Incidents

(B.3) Refinement

(B.2) Identify

Relevant Incidents

Extended Problem

Frame Model

& Initial Security

Model

(A) Preparation

(A.1) Define

Security Goals

(A.4) Identify

Possible Attackers

(A.3) Derive

Information Flow

(A.2) Elicit Domain

Knowledge

Problem

Frame Model

Extended Problem

Frame Model

& Initial Security

Model

Security

Model

(B) Incident Identification

(B.1) Define

Unwanted Incidents

(B.3) Refinement

(B.2) Identify

Relevant Incidents

Extended Problem

Frame Model

& Initial Security

Model

(A) Preparation

(A.1) Define

Security Goals

(A.4) Identify

Possible Attackers

(A.3) Derive

Information Flow

(A.2) Elicit Domain

Knowledge

Problem

Frame Model

Extended Problem

Frame Model

& Initial Security

Model

Security

Model

Figure 3: Method overview.

tool. The ones to be checked manually help security

analysts in focusing on specific parts while reviewing

the documented results.

4.1 (A) Preparation

As input for our method, we require a problem frame

model describing the functional requirements of soft-

ware to be developed. Before identifying threats,

it is necessary to elicit domain knowledge and to

make that knowledge explicit. Domain knowledge

describes specific characteristics of the environment,

which complements the requirements model. With re-

gard to security, domain knowledge is an important

aspect since it may include additional threats, e.g. so-

cial engineering. Within the preparation part of the

method, we define four sub-activities for which we

document the results in the model, thus providing an

extended problem frame model as the outcome.

Step A.1: Define Security Goals

An asset is something of value for a stakeholder.

Since our method concentrates on information secu-

rity, we consider a piece of information which is of

value for someone as an asset. A security goal de-

notes that an asset shall be protected with regard to

confidentiality, integrity or availability.

As input for the step, we consider the initial prob-

lem frame model describing the functional require-

ments. In a problem frame model, information is rep-

resented by symbolic phenomena. Therefore, we take

the list of all symbolic phenomena as input for which

domain experts, e.g. the software provider, have to

define necessary security goals which are documented

in the security model (cf. Section 3). Each secu-

rity goal holds a reference to the corresponding phe-

nomenon in the problem frame model.

There are two validation conditions for the first

step that concern the completeness of security goal

definition.

VC1. All symbolic phenomena of the initial require-

ments model have been considered as possibly be-

ing an asset.

VC2. All desired security goals have been specified

and have been documented in the security model.
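Step A.1 and the check behind VC1 can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the implementation of our tool: the names `SecurityGoal`, `candidate_assets`, and `unreviewed_phenomena` are hypothetical, and the classification of the phenomena from the example in Section 5 as symbolic or causal is assumed for illustration.

```python
from dataclasses import dataclass

SECURITY_PROPERTIES = ("confidentiality", "integrity", "availability")


@dataclass(frozen=True)
class SecurityGoal:
    phenomenon: str  # reference to the symbolic phenomenon in the problem frame model
    prop: str        # one of SECURITY_PROPERTIES

    def __post_init__(self):
        if self.prop not in SECURITY_PROPERTIES:
            raise ValueError(f"unknown security property: {self.prop}")


def candidate_assets(phenomena: dict) -> list:
    """Information is represented by symbolic phenomena, so only those qualify."""
    return sorted(name for name, kind in phenomena.items() if kind == "symbolic")


def unreviewed_phenomena(phenomena: dict, reviewed: set) -> list:
    """Support for VC1: symbolic phenomena nobody has looked at yet."""
    return [p for p in candidate_assets(phenomena) if p not in reviewed]


# Assumed classification of phenomena taken from the example in Section 5.
phenomena = {
    "data": "symbolic",
    "secret": "symbolic",
    "informationE": "symbolic",
    "requestData": "causal",
    "updateSecret": "causal",
}

# Hypothetical decisions of a domain expert.
goals = [SecurityGoal("secret", "confidentiality"), SecurityGoal("data", "integrity")]
reviewed = {"secret", "data"}

print(candidate_assets(phenomena))
print(unreviewed_phenomena(phenomena, reviewed))
```

An empty result of `unreviewed_phenomena` would indicate that VC1 holds; VC2 still has to be confirmed by the domain experts, since only they know which goals are desired.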

Step A.2: Elicit Domain Knowledge

The second sub-activity deals with eliciting additional

domain knowledge. The initial problem frame model

only covers functional requirements, and therefore

only captures those domains and interfaces that be-

long to those requirements. For identifying threats, it

is essential to elicit domain knowledge. We consider

the identification of additional stakeholders, as well as

additional information flows. To document the results

of the elicitation along with functional requirements,

we make use of domain knowledge diagrams as described in Section 2.1.

Meis proposes questionnaires to elicit domain

knowledge based on problem frame models in the

context of privacy (Meis, 2013). There are different

questionnaires per domain type, i.e. one for causal

and lexical domains and one for biddable domains.

We adapt those questionnaires to identify stakehold-

ers and information flows, which we show in Table 1.

Table 1: Questionnaire for indirect stakeholders.

| No | Domain type | Question |
|----|-------------|----------|
| 1 | Biddable | Does the domain share any information with other biddable domains? |
| 2 | Biddable | Does the domain receive any information from other biddable domains? |
| 3 | Causal & Lexical | Are there stakeholders in the environment that have direct access to the domain? |
| 4 | Causal & Lexical | Are there other systems that contain the domain? What stakeholders have access to the domain? |

We define three validation conditions for the present step, which concern the completeness of domain knowledge elicitation. Another important aspect is that we only capture the desired characteristics of the environment at this stage, not, for example, attackers.

VC3. All domains have been inspected using the provided questionnaires.

VC4. The identified domain knowledge only captures the expected and desired characteristics of the environment with regard to the software to be developed.

VC5. The identified domain knowledge has been documented in the problem frame model.

Step A.3: Derive Information Flow

To identify threats for assets, it is necessary to fur-

ther analyze the intended information flow for the sys-

tem and to identify those domains at which an asset

is available. On the one hand, with regard to confi-

dentiality, it is necessary to not disclose the asset to

other domains than intended. On the other hand, in-

tegrity and availability of assets shall be preserved at

the identified domains.

We make use of an information flow graph to de-

rive the intended information flow (Wirtz et al., 2018).

Such a graph is directed and depicts which infor-

mation represented as a phenomenon is available at

which domain. The graph can be created by inspect-

ing the interfaces between the domains of a problem

frame model. When both domains share an asset-

related phenomenon, it means that the information is

available at both domains.

We limit the information flow graph to those phe-

nomena that are an asset, i.e. to those phenomena for

which a security goal has been defined. We do not

consider attackers we identify in the following step

for the information flow graph since assets shall not

be available for them.

For the third step, we define the following valida-

tion conditions:

VC6. All domains and interfaces of the problem

frame model have been considered for the deriva-

tion of the information flow graph.

VC7. The graph only depicts domains at which an

asset is available.
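The derivation of the information flow graph from interfaces can be sketched in a few lines of Python. The sketch below is an illustration under assumptions and not the algorithm of our tool: the function names, the mapping `carries` from phenomena to the asset they relate to, and the choice of directing each edge from the controlling to the observing domain are all hypothetical. The interfaces are taken from the problem diagrams of the example in Section 5; the observing domains are assumed.

```python
from collections import defaultdict


def derive_ifg(interfaces, carries):
    """Build one set of directed edges per asset.

    interfaces: iterable of (controlling domain, observing domain, phenomenon)
    carries:    maps asset-related phenomena to the asset they relate to
    """
    ifg = defaultdict(set)
    for controller, observer, phenomenon in interfaces:
        asset = carries.get(phenomenon)
        if asset is not None:  # phenomena without a security goal are skipped
            ifg[asset].add((controller, observer))
    return dict(ifg)


def available_at(ifg, asset):
    """All domains that appear on an edge for the given asset (cf. VC7)."""
    return {domain for edge in ifg.get(asset, set()) for domain in edge}


interfaces = [
    ("Boss", "Update", "updateSecret"),    # B!{updateSecret}
    ("Update", "Secret", "storeSecret"),   # U!{storeSecret}
    ("Employee", "Query", "requestData"),  # E!{requestData}
]
# Assumption: both phenomena transport the asset "secret".
carries = {"updateSecret": "secret", "storeSecret": "secret"}

ifg = derive_ifg(interfaces, carries)
print(available_at(ifg, "secret"))
```

Since `requestData` is not related to an asset in this sketch, neither Employee nor Query appears in the graph for secret, which corresponds to VC7.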

Step A.4: Identify Possible Attackers

In Step A.2, we focused on intended and authorized

stakeholders and information flows in the environ-

ment. In the present step, we identify possible attack-

ers by inspecting each domain of the problem frame

model with regard to its vulnerability. Again, we

make use of questionnaires with respect to the type

of domain under investigation, which we show in Ta-

ble 2. For a biddable domain, we investigate if the

domain is vulnerable for social engineering attacks or

if it may act as an attacker itself, e.g. as a malicious

insider. For causal and lexical domains, we elicit at-

tackers that may attack that domain.

Using domain knowledge diagrams, we document

the attackers in the problem frame model. Such a dia-

gram consists of a statement, attacker domain, vulner-

able domain, and the interface between both domains.

Note that the identification of possible attackers

is an approximation since we neither define the objec-

tives of an attacker nor the conditions under which the

attack may succeed. The detailed analysis of threats

follows in the second phase of the method.

There are three validation conditions for this step

with regard to completeness and documentation of

identified attackers.

VC8. Each domain has been considered for being

possibly vulnerable.

VC9. All attackers have been documented indepen-

dently of whether an attack may be successful or

not.

VC10. The domain knowledge diagrams describe the

identified attackers in a meaningful manner.

4.2 (B) Incident Identification

The extended problem frame model and initial secu-

rity model with security goals serve as the input for

the threat identification. For identifying threats, we

consider the system as a whole, which means that we

do not consider each requirement or domain knowl-

edge in isolation, but also consider relations between

them. Considering a combination of statements ensures that we capture a complete path of actions throughout

different domains that are necessary to successfully

realize a threat. As mentioned in Section 2, we make

use of the CORAS notation (Lund et al., 2011) to doc-

ument threats. The threat identification consists of

three sub-activities which we describe in the follow-

ing. We document the results in the form of CORAS

threat diagrams in the security model.

Step B.1: Define Unwanted Incidents

We create a CORAS threat diagram backwards, start-

ing from an asset, for which we first define unwanted

incidents. An unwanted incident denotes the action in

which an asset is actually harmed with regard to its

defined security goal. For each security property, we

consider a specific type of unwanted incident, and for

each type, we provide a textual pattern (TP), where “<...>” denotes a variable part of the pattern.

Table 2: Questionnaire for attackers.

| No | Domain type | Question |
|----|-------------|----------|
| 1 | Biddable | Is the domain vulnerable to social engineering attacks? |
| 2 | Biddable | Is it possible that the domain may act as an attacker, e.g. as a malicious insider? |
| 3 | Causal & Lexical | Could the domain be attacked, by whom and how? |

We define unwanted incidents for each security

goal in the following way:

Confidentiality. In the context of confidentiality,

an unwanted incident denotes an undesired disclosure

of an asset to unauthorized third parties represented

as a biddable domain in the model. We identify unau-

thorized third parties with the help of the information

flow graph which shows at which domains an asset is

available. As relevant third parties, we consider those

biddable domains at which an asset is not available,

i.e. which are not contained in the information flow

graph with regard to this asset. Security analysts now

decide whether the disclosure to this domain shall be

considered for further analysis. In case of consider-

ation, we create an unwanted incident with the fol-

lowing textual pattern, where third party describes the

domain to which the asset is disclosed:

TP: < asset > is disclosed to < third party >.

Integrity. An unwanted incident with regard to

integrity is the unauthorized alteration of an asset at

a specific domain. The information flow graph de-

picts all domains at which an asset is available. For

each identified domain, security analysts have to de-

cide whether the harm of integrity at this domain shall

be considered for further analysis. Using the follow-

ing textual pattern, we document the corresponding

unwanted incident, where domain stands for the do-

main at which the integrity may be harmed.

TP: < asset >’s integrity is violated at

< domain >.

Availability. For availability, an unwanted inci-

dent denotes that an asset is not available for autho-

rized entities. As authorized entities, we consider all

domains of the information flow graph at which the

asset is available. Again, security analysts have to de-

cide whether the unavailability at this domain shall be

further considered. The following textual pattern de-

scribes the resulting unwanted incident:

TP: < asset > is unavailable at < domain >.

We define the following three validation condi-

tions for the present step:

VC11. All security goals have been considered for

the definition of unwanted incidents.

VC12. For confidentiality, all biddable domains at

which the asset shall not be available have been

considered for identifying relevant unwanted in-

cidents.

VC13. For integrity and availability, all domains of

the information flow graph have been considered

at which the corresponding asset is available.
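The three textual patterns lend themselves to a simple generator of candidate unwanted incidents. The following Python sketch is hypothetical and only illustrates the selection rules of this step: the function `candidate_incidents` and the example values are assumptions. It proposes candidates only, since the decision whether a candidate is considered for further analysis remains with the security analysts.

```python
def candidate_incidents(asset, properties, available_at, biddable_domains):
    """Instantiate the textual patterns for one asset.

    properties:       security properties for which a goal has been defined
    available_at:     domains of the information flow graph for this asset
    biddable_domains: all biddable domains of the problem frame model
    """
    candidates = []
    if "confidentiality" in properties:
        # Third parties: biddable domains at which the asset is not available.
        for domain in sorted(biddable_domains - available_at):
            candidates.append(f"{asset} is disclosed to {domain}.")
    if "integrity" in properties:
        for domain in sorted(available_at):
            candidates.append(f"{asset}'s integrity is violated at {domain}.")
    if "availability" in properties:
        for domain in sorted(available_at):
            candidates.append(f"{asset} is unavailable at {domain}.")
    return candidates


# Hypothetical input, loosely based on the example in Section 5.
incidents = candidate_incidents(
    asset="secret",
    properties={"confidentiality", "integrity"},
    available_at={"Boss", "Update", "Secret"},
    biddable_domains={"Boss", "Employee"},
)
for incident in incidents:
    print(incident)
```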

Step B.2: Identify Relevant Incidents

After we defined unwanted incidents that shall be con-

sidered, we identify threat scenarios and threats that

may lead to such unwanted incidents. To do so, we do

not only focus on specific statements but consider the

system as a whole, i.e. all requirements and domain

knowledge. We identify sequences of statements that

may lead to an unwanted incident. A statement that

refers to a domain and constrains another indicates a

possible information flow from the referred domain

to the constrained one. The statement graph of the

requirements model provides an overview of all state-

ments and their references to domains. A path in the

graph (sequence of statements) denotes an informa-

tion flow via several domains. In the following, we

identify relevant paths depending on the type of se-

curity property of an unwanted incident and translate

those sequences of statements systematically into a

CORAS threat diagram.

“$\langle \ldots \rangle$” represents a sequence of statements.

Confidentiality. With regard to confidentiality, we collect all non-cyclic paths $\langle s_1, \ldots, s_n \rangle$ for which the following conditions hold:

• $s_1$ constrains the domain that is given in the unwanted incident, i.e. the stakeholder to which the asset shall not be disclosed.

• $s_n$ refers to a domain at which the asset is available.

• For all $1 \le i < n$: $s_i$ refers to a domain that $s_{i+1}$ constrains.

Such a path denotes a sequence of statements that

may lead to a disclosure of the asset to the unautho-

rized stakeholder. The path can be translated into a

CORAS threat diagram in the following way:

• Each statement $s_i$ is translated to a threat scenario $ts_i$.

• $ts_1$ leads to the unwanted incident.

• For all $1 \le i < n$: $ts_{i+1}$ leads to $ts_i$.

Translating a statement into a threat scenario means

to identify a situation with regard to the statement

that may lead to information flow from the referred

domain to the constrained domain, assuming that

the asset is available at the referred domain. The

resulting path denotes a sequence of threat sce-

narios that may lead to an information flow of the

asset from a domain at which it is available to the

unauthorized stakeholder. For each threat scenario,

we also identify the domain that initiates it. For

example, an attacker is a human-threat deliberate

who realizes a social engineering attack. We add the

threat to the threat diagram, as well. In case that a


statement of a path cannot be translated into a threat

scenario, e.g. because involved domains are con-

sidered as secure, we do not add the path to the model.
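The path conditions for confidentiality and the subsequent translation can be sketched as a depth-first search over the statements. The Python code below is a minimal sketch under assumptions, not the implementation of our tool: the names are hypothetical, "non-cyclic" is interpreted as "no statement occurs twice", and the example statements are only loosely modelled on Section 5 (the references of FR3 and the attacker statement A1 are assumed).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Statement:
    name: str
    refers_to: frozenset   # domains the statement refers to
    constrains: frozenset  # domains the statement constrains


def confidentiality_paths(statements, target, available_at):
    """Collect paths <s_1, ..., s_n>: s_1 constrains the target domain, s_i refers
    to a domain that s_(i+1) constrains, and s_n refers to a domain at which the
    asset is available."""
    paths = []

    def extend(path):
        last = path[-1]
        if last.refers_to & available_at:
            paths.append([s.name for s in path])
        for nxt in statements:
            if nxt not in path and last.refers_to & nxt.constrains:
                extend(path + [nxt])

    for s in statements:
        if target in s.constrains:
            extend([s])
    return paths


def to_threat_scenarios(path, incident):
    """ts_1 leads to the unwanted incident, ts_(i+1) leads to ts_i."""
    ts = [f"ts({name})" for name in path]
    edges = [(ts[0], incident)]
    edges += [(ts[i + 1], ts[i]) for i in range(len(ts) - 1)]
    return edges


statements = [
    Statement("FR1", frozenset({"Database"}), frozenset({"Employee"})),
    Statement("FR2", frozenset({"Boss"}), frozenset({"Secret"})),
    Statement("FR3", frozenset({"Secret"}), frozenset({"Database"})),   # assumed
    Statement("A1", frozenset({"Employee"}), frozenset({"Attacker"})),  # assumed
]
paths = confidentiality_paths(statements, "Attacker", {"Boss", "Secret"})
print(paths)
print(to_threat_scenarios(paths[0], "secret is disclosed to Attacker."))
```

Whether a statement on such a path can actually be translated into a threat scenario is a decision of the security analysts; the search only proposes candidate paths.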

Integrity. In the following, we identify sequences

of statements that could lead to harm of integrity

for a given asset. We consider paths of statements $\langle (d_1, s_1), \ldots, (d_n, s_n) \rangle$ for which the following conditions hold:

• $d_1$ is a domain at which the integrity shall be preserved.

• For all $1 \le i \le n$: $s_i$ constrains $d_i$.

• For all $1 \le i < n$: $s_i$ refers to $d_{i+1}$.

• $\forall i, j : 1..n \mid i \neq j \bullet d_i \neq d_j$

We do not consider a specific domain $d_n$ as an attacker that harms the integrity of an asset at $d_1$. Instead, we consider all non-cyclic paths of statements

that may lead to harm of integrity. Those paths can

be translated into a CORAS threat diagram in the fol-

lowing way:

• Each statement $s_i$ is translated to a threat scenario $ts_i$.

• $ts_1$ leads to the unwanted incident for $d_1$.

• For all $1 \le i < n$: $ts_{i+1}$ leads to $ts_i$.

For each threat scenario, we also document the

corresponding threat that initiates the threat scenario.

Each path of threat scenarios denotes a sequence that

may lead to harm of integrity for an asset at a given

domain.
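The conditions for integrity paths can be sketched in the same spirit. The following Python code is a hypothetical illustration, not the implementation of our tool: statements are given as plain tuples, and the references of FR3 are assumed. Every prefix of a path satisfies the conditions as well, so the sketch reports each of them.

```python
def integrity_paths(statements, d1):
    """Collect paths <(d_1, s_1), ..., (d_n, s_n)> with pairwise distinct domains.

    statements: list of (name, refers_to, constrains), the latter two being sets
                of domain names
    d1:         domain at which the integrity shall be preserved
    """
    paths = []

    def extend(path, domain):
        for name, refers_to, constrains in statements:
            if domain in constrains:              # s_i constrains d_i
                step = path + [(domain, name)]
                paths.append(step)
                used = {d for d, _ in step}
                for nxt in sorted(refers_to - used):  # s_i refers to d_(i+1)
                    extend(step, nxt)

    extend([], d1)
    return paths


statements = [
    ("FR1", {"Database"}, {"Employee"}),
    ("FR2", {"Boss"}, {"Secret"}),
    ("FR3", {"Secret"}, {"Database"}),  # assumed references of FR3
]
for path in integrity_paths(statements, "Database"):
    print(path)
```

Because the set of used domains grows with every step, the search terminates, and the distinctness condition on the domains holds by construction.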

Availability. The creation of threat scenarios for

unwanted incidents with regard to availability is simi-

lar to the consideration of integrity. We identify paths

in the same way but consider threat scenarios that may

lead to unavailability of the asset at a given domain.

For the present step, we identify the following val-

idation conditions:

VC14. To identify statement paths, all statements

contained in the model have been considered.

VC15. Each identified path has been translated to a

corresponding path in the threat diagram. If not

possible, justifications have been given.

VC16. Only non-cyclic paths have been considered.

VC17. The domains that realize a threat scenario

have been documented as threats.

Step B.3: Refinement

To create the CORAS threat diagram in the previous

step, we only consider one threat scenario per state-

ment. To further refine the threat scenario, we inspect

the corresponding statement in detail by analyzing its

problem diagram or domain knowledge diagram, re-

spectively. For each domain or interface of the dia-

gram, we identify threat scenarios that may cause the

initial scenario and add them to the model. Each of

them may lead to the initial one, thus pointing to it.

The step of refinement is optional. A challenge for

security analysts is to find the right abstraction level.

They should only refine threat scenarios where neces-

sary to not provide an overhead of information.

For the last step of our method, we define the fol-

lowing validation conditions:

VC18. Only those domains have been considered to

refine a threat scenario that are related to the cor-

responding statement, i.e. contained in its prob-

lem diagram or domain knowledge diagram.

VC19. The identified threat scenarios point to the ini-

tial scenario, thus refining it.

VC20. Refinements have only been made where nec-

essary.

The resulting threat diagram can now be used as a

starting point to evaluate risks and to later select ap-

propriate controls that treat the identified threats.

5 EXAMPLE

For reasons of simplicity, we consider a small example representing typical company software. We also applied our method to more complex examples, e.g., a smart home scenario, to validate our approach and the results.

All outcomes we show in the following can be cre-

ated with our tool. The items are contained in the un-

derlying models, and the diagrams provide different

views (cf. Section 3).

5.1 Initial Input

In the example, there are employees and a boss. For

the software to be developed, we consider the follow-

ing functional requirements (FR):

FR1. An employee can request data from a database.

FR2. A boss maintains a secret which he/she can store and update in a database.

FR3. Data in the database shall be signed with the secret provided by the boss.

In Figure 4, we show the corresponding problem diagrams for the functional requirements. The requirement FR1: Query database refers to the data at the Database, where the data to be retrieved is stored. The requirement constrains the phenomenon informationE of the Employee since, to satisfy the requirement, the employee has to receive the requested data. The machine Query describes the piece of software to be built to satisfy the requirement.

Figure 4: Example: Problem diagrams. [Panels: (a) FR1: Query database, with the domains Employee, Query, and Database and the phenomena E!{requestData}, Q!{provideData}, D!{data}, informationE, and data; (b) FR2: Update secret, with the domains Boss, Update, and Secret and the phenomena B!{updateSecret}, U!{storeSecret}, updateSecret, and secret; (c) FR3: Sign data, with the domains Secret, Signature, and Database and the phenomena S!{secret}, Sig!{signedData}, D!{data}, secret, and data.]

For the requirement FR2: Update secret , we

consider three domains: Boss , Update , and Se-

cret which represents the specific database where the

secret is stored. The requirement refers to the event

updateSecret of the boss and constrains the secret.

The requirement FR3: Sign data refers to the

secret and constrains the data to be signed at the

database. The machine Signature is responsible

for signing the data.

5.2 Preparation

For the preparation, we consider the previously men-

tioned functional requirements as input.

Define Security Goals. We concentrate on the

following security goals which are documented in the

security model: (1) The integrity of the secret shall

be preserved, and (2) the confidentiality of the secret

shall be preserved. We do not show how to identify

threats with regard to availability since the procedure

is similar to integrity.

Elicit Domain Knowledge. Using our proposed

questionnaires, we identified two assumptions (A) as

domain knowledge to be considered:

A1. An employee provides information to the boss, e.g. about his progress in projects.

A2. A boss provides some information to the employee, e.g. tasks to be performed.

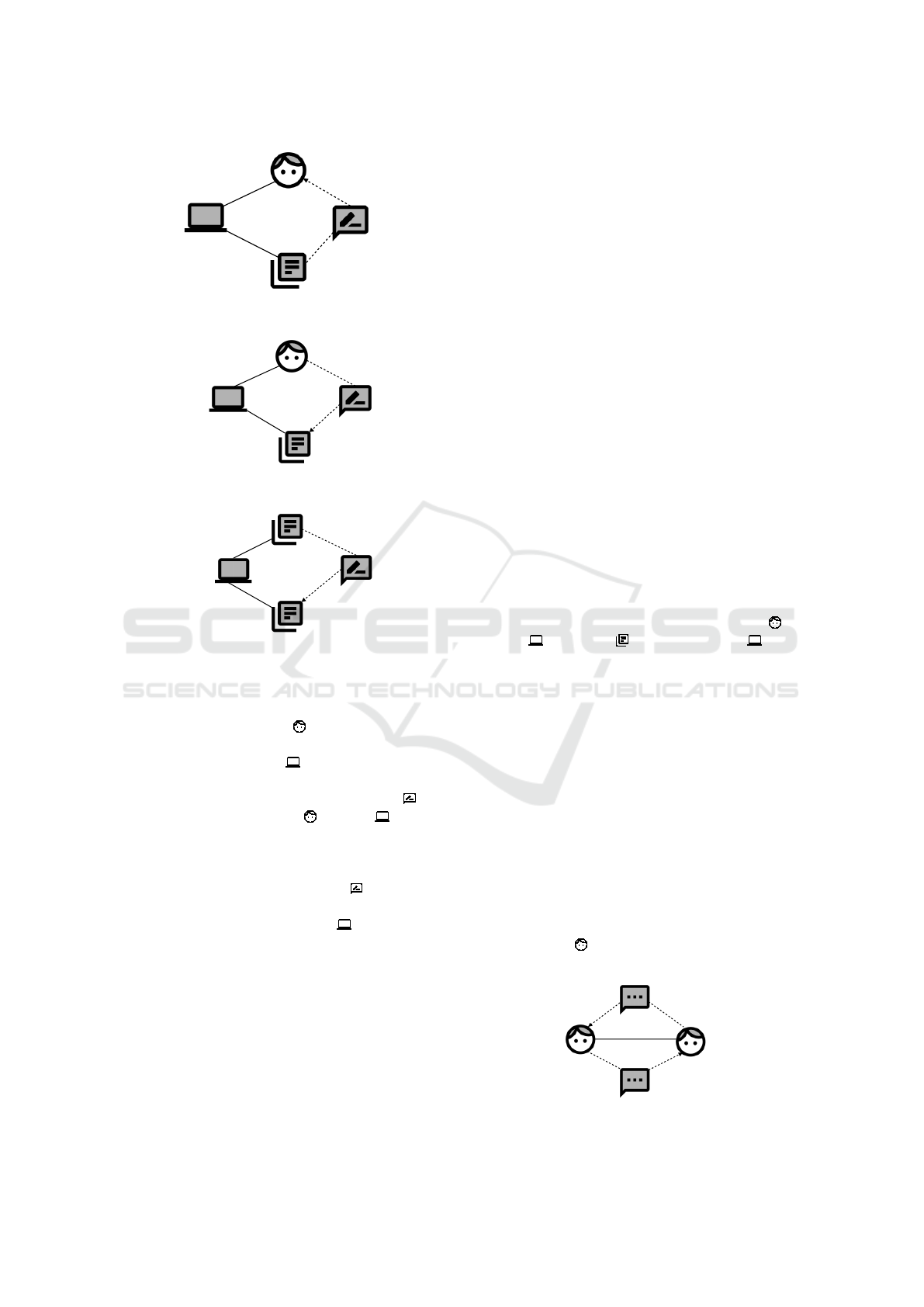

Figure 5 shows the domain knowledge diagram

for the stated assumptions which we document in the

problem frame model. For example, the assumption

A1: Talk to boss refers to the phenomenon talkTo-

Boss and constrains the phenomenon informationB,

which means that there is a communication from the

employee to the boss. The assumption A2: Receive

information from boss can be expressed in the same

way.

Derive Information Flow. Using the information

flow graph (Wirtz et al., 2018), we identify domains

at which the asset secret is available. There are

four domains we have to consider: (1) Boss , (2)

Update , (3) Secret , and (4) Signature .

Identify Possible Attackers. Using our question-

naire, we identified an employee as a vulnerable do-

main for social engineering. This leads to the follow-

ing additional assumptions to be considered for which

we show the corresponding domain knowledge dia-

grams in Figure 6:

A3. An attacker may influence an employee’s behavior, e.g. by performing a social engineering attack.

A4. An attacker may receive secret information from an employee, e.g. by performing a social engineering attack.

In the diagram, we consider a new biddable do-

main Attacker which represents the identified at-

tacker.

Figure 5: Example: Domain knowledge. [Diagram with the domains Boss and Employee, the assumptions A1: Talk to boss and A2: Receive information from boss, and the phenomena talkToBoss (E!{talkToBoss}), informationB, provideInformation (B!{provideInformation}), and informationE.]

ICISSP 2020 - 6th International Conference on Information Systems Security and Privacy

Figure 6: Example: Attackers. [Diagram with the domains Employee and Attacker, the assumptions A3: Influence employee and A4: Receive information, and the phenomena influence (A!{influence}), informationE, provideInformation (E!{provideInformation}), and informationA.]

5.3 Incident Identification

Using the extended problem frame model, we pro-

ceed with identifying threats.

Define Unwanted Incidents. With regard to the as-

set secret, we defined security goals for integrity and

confidentiality. Those unwanted incidents are docu-

mented in the security model and serve as the starting

point for creating a threat diagram. From the infor-

mation flow graph we know that the asset shall not be

available for Employee and Attacker . In the fol-

lowing, we will focus on confidentiality with regard

to the attacker and define the following unwanted in-

cident (UI):

UI1. secret is disclosed to Attacker.

For defining unwanted incidents with regard to in-

tegrity, we consider those domains at which the asset

is available. We focus on the domain Secret and

define the following unwanted incident:

UI2. secret’s integrity is violated at Secret.

We identify relevant scenarios for both unwanted

incidents in the following.

Identify Relevant Incidents. To identify relevant

statement paths, we focus on the statement graph,

which is another view on the model. It only contains

the statements and references to the domains. For our

example, we show the graph in Figure 7. The notation

of the graph is similar to problem diagrams: FR2:

Update secret refers to Boss and constrains

Secret , thus indicating a possible information flow

from boss to secret. In the following, we will use

the graph to identify relevant threat scenarios. By

systematically traversing the graph as described in

Section 4, we identify relevant paths.
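
To make the structure of the statement graph concrete, the following Python sketch encodes each statement of the example as a pair of referred-to domain and constrained domain, as read from the problem diagrams and domain knowledge diagrams above, and derives the possible information flows. Reducing each statement to exactly one such pair, omitting the machines as in Figure 7, and the names `STATEMENTS` and `possible_flows` are simplifying assumptions of this sketch.

```python
from collections import defaultdict

# statement -> (referred-to domain, constrained domain), read off the example.
STATEMENTS = {
    "FR1": ("Database", "Employee"),
    "FR2": ("Boss", "Secret"),
    "FR3": ("Secret", "Database"),
    "A1": ("Employee", "Boss"),
    "A2": ("Boss", "Employee"),
    "A3": ("Attacker", "Employee"),
    "A4": ("Employee", "Attacker"),
}


def possible_flows(statements):
    """Map each domain to the (statement, target domain) flows leaving it."""
    flows = defaultdict(list)
    for name, (referred, constrained) in statements.items():
        flows[referred].append((name, constrained))
    return dict(flows)


flows = possible_flows(STATEMENTS)
for source in sorted(flows):
    for statement, target in flows[source]:
        print(f"{source} --{statement}--> {target}")
```

Each printed line corresponds to one possible information flow, e.g. from Boss to Secret via FR2.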

Confidentiality. The asset shall not be disclosed to

Attacker . Therefore, we start at this domain and

identify paths to domains at which the asset is avail-

able. For our example, this leads to the following

paths (P):

P1: ⟨A4, FR1, FR3⟩ (asset available at Secret)

P2: ⟨A4, A2⟩ (asset available at Boss)

Figure 7: Example: Statement Graph. [Graph relating the statements FR1: Query database, FR2: Update secret, FR3: Sign data, A1: Talk to boss, A2: Receive information from boss, A3: Influence employee, and A4: Receive information to the domains Boss, Employee, Attacker, Secret, and Database.]
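
The search for these paths can be sketched as a backward traversal of the statement graph. The following Python code is a minimal illustration, not the implementation of the tool. It assumes that each statement is reduced to one referred-to and one constrained domain, that a path is complete as soon as it reaches a domain at which the asset is available, and that domains already on the path are not revisited. The function name `confidentiality_paths` is hypothetical.

```python
# statement -> (referred-to domain, constrained domain), read off the example.
STATEMENTS = {
    "FR1": ("Database", "Employee"),
    "FR2": ("Boss", "Secret"),
    "FR3": ("Secret", "Database"),
    "A1": ("Employee", "Boss"),
    "A2": ("Boss", "Employee"),
    "A3": ("Attacker", "Employee"),
    "A4": ("Employee", "Attacker"),
}
# Domains at which the asset secret is available (information flow graph).
ASSET_DOMAINS = {"Boss", "Update", "Secret", "Signature"}


def confidentiality_paths(statements, start, asset_domains):
    """Walk backwards from `start`, against the direction of information flow."""
    results = []

    def walk(domain, visited, path):
        for name, (referred, constrained) in statements.items():
            # Follow only statements that constrain the current domain and
            # skip domains already on the path (non-cyclic paths only).
            if constrained != domain or referred in visited:
                continue
            extended = path + [name]
            if referred in asset_domains:
                results.append((extended, referred))
            else:
                walk(referred, visited | {referred}, extended)

    walk(start, {start}, [])
    return results


for path, source in confidentiality_paths(STATEMENTS, "Attacker", ASSET_DOMAINS):
    print(path, "asset available at", source)
```

Under these assumptions, the traversal yields the two paths listed above. The statement A3 is not followed because it leads back to Attacker.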

We translate both paths into a CORAS threat diagram, which we show in Figure 8. It consists of the unwanted incident that harms the confidentiality of the asset secret. For each statement that is contained in a path, we add a threat scenario to the diagram. Both paths contain the statement A4, which can be translated into a threat scenario concerning a social engineering attack. The attacker is a deliberate human threat and the employee is an accidental human threat. P1 contains two additional statements. With regard to FR1, the machine Query may have a malfunction and is, therefore, a non-human threat. The malfunction may lead to an undesired information flow from the database to the employee. The same holds for the statement FR3, where Signature is a non-human threat that may lead to an unintended flow of the secret to the database. P2 contains the statement A2, where the boss provides some information to an employee. As an accidental human threat, the boss may provide the secret to the employee. Both paths show how the unwanted incident may be realized by following the path of threat scenarios.
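
The translation of paths into a threat diagram can be sketched as follows. The Python code below is an illustration under stated assumptions: the scenario labels and threats are taken from Figure 8 and the description above, a diagram is reduced to two sets of edges named after the CORAS relations "leads to" and "initiates", and the function name `to_threat_diagram` is hypothetical.

```python
# statement -> (threat scenario label, threats that realize it)
SCENARIOS = {
    "A4": ("Social Engineering on Employee (A4)", ["Attacker", "Employee"]),
    "FR1": ("Error provides information to Employee (FR1)", ["Query"]),
    "FR3": ("Error stores secret in database (FR3)", ["Signature"]),
    "A2": ("Boss provides secret to employee (A2)", ["Boss"]),
}


def to_threat_diagram(paths, unwanted_incident):
    """Return (leads_to, initiates) edge sets for the given statement paths."""
    leads_to, initiates = set(), set()
    for path in paths:
        target = unwanted_incident
        for statement in path:
            label, threats = SCENARIOS[statement]
            # The scenario points to its predecessor on the path; the first
            # scenario of a path points to the unwanted incident.
            leads_to.add((label, target))
            initiates.update((threat, label) for threat in threats)
            target = label
    return leads_to, initiates


leads_to, initiates = to_threat_diagram(
    [["A4", "FR1", "FR3"], ["A4", "A2"]], "secret is disclosed to Attacker")
for source, target in sorted(leads_to):
    print(f"{source} -> {target}")
```

Because edges are collected in sets, the scenario for A4, which occurs in both paths, appears only once in the resulting diagram.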

Integrity. The integrity of the asset shall be preserved

at the domain Secret , which leads to the following

paths starting at the domain:

P3: ⟨(Secret, FR2), (Boss, A1), (Employee, A2)⟩

P4: ⟨(Secret, FR2), (Boss, A1), (Employee, A3), (Attacker, A4)⟩

P5: ⟨(Secret, FR2), (Boss, A1), (Employee, FR1), (Database, FR3)⟩

In Figure 9, we show the CORAS threat diagram including the paths P3 and P4. The path P5 can be translated in the same way. For example, considering path P4, the threat scenario derived from FR2 leads to the unwanted incident. It describes that an update performed by the boss may harm the integrity due to a malfunction of software. The machine Update is a non-human threat and the boss an accidental human threat. Due to assumption A1, an employee may provide incorrect information to the boss, which may influence the update of the secret. A social engineering attack on an employee (A3) may lead to this provision of wrong information, and an attacker may use information collected from the employee to perform the attack (A4).
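
The integrity paths can be reproduced with a similar backward traversal. The following Python sketch is one reading of the example and not the tool's implementation. It assumes that each statement is reduced to one referred-to and one constrained domain, that a path consists of (domain, statement) pairs, and that a path ends with the first statement whose referred-to domain is already on the path. The function name `integrity_paths` is hypothetical.

```python
# statement -> (referred-to domain, constrained domain), read off the example.
STATEMENTS = {
    "FR1": ("Database", "Employee"),
    "FR2": ("Boss", "Secret"),
    "FR3": ("Secret", "Database"),
    "A1": ("Employee", "Boss"),
    "A2": ("Boss", "Employee"),
    "A3": ("Attacker", "Employee"),
    "A4": ("Employee", "Attacker"),
}


def integrity_paths(statements, start):
    """Enumerate maximal backward paths of (domain, statement) pairs."""
    results = []

    def walk(domain, visited, path):
        incoming = [(name, referred)
                    for name, (referred, constrained) in statements.items()
                    if constrained == domain]
        if not incoming and path:
            results.append(path)  # dead end: no statement constrains the domain
        for name, referred in incoming:
            extended = path + [(domain, name)]
            if referred in visited:
                results.append(extended)  # would close a cycle, so stop here
            else:
                walk(referred, visited | {referred}, extended)

    walk(start, {start}, [])
    return results


for path in integrity_paths(STATEMENTS, "Secret"):
    print(path)
```

Under these assumptions, starting at Secret yields exactly three paths, which correspond to P3, P4, and P5.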

Refinement. Traversing the requirements model in

the proposed way leads to a detailed analysis of de-

pendencies between threat scenarios. We now show

how such a threat scenario can be further refined. As

an example, we consider the threat scenario Update

harms the integrity of secret which belongs to the

requirement FR2: A boss maintains a secret which

he/she can store and update in a database. In Figure 4(b), we show the corresponding problem diagram.

To refine the threat scenario, we consider the domains

and interfaces of the diagram. There are three do-

mains to be considered: (1) Boss , (2) Update ,

and (3) Secret .

Figure 8: Example: Threats to confidentiality. [Threat diagram: the unwanted incident "secret is disclosed to Attacker" harms the asset Secret; threat scenarios: Social Engineering on Employee (A4), Error provides information to Employee (FR1), Error stores secret in database (FR3), Boss provides secret to employee (A2); threats: Attacker, Employee, Query, Signature, Boss.]

Figure 9: Example: Threats to integrity. [Threat diagram: the unwanted incident "Secret’s integrity is harmed at Secret" harms the asset Secret; threat scenarios: Update harms integrity of secret (FR2), Employee provides incorrect information to Boss (A1), Employee receives incorrect information from Boss (A2), Social Engineering on Employee (A3), Attacker receives information from Employee (A4); threats: Boss, Employee, Update, Attacker.]

In Figure 10, we show the resulting threat scenarios. For each domain and for each interface between domains, there is one threat scenario. For example, the boss may enter the wrong value for the new secret.

Figure 10: Example: Refinement. [Threat scenarios refining "Update harms integrity of secret (FR2)": Boss enters wrong value; Incorrect transmission between boss and machine; Machine violates integrity; Incorrect transmission between machine and lexical domain; Database stores incorrect value.]
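
The refinement for FR2 can be sketched in a few lines of Python. The scenario labels are those of Figure 10. Assigning each label to a domain or to an interface of the problem diagram (for instance, "Machine violates integrity" to Update) is a reading of the figure, and the names `REFINEMENTS` and `refine` are hypothetical.

```python
# Initial threat scenario and refining scenarios with the diagram elements
# (a single domain or the two ends of an interface) they are attached to.
INITIAL = "Update harms integrity of secret (FR2)"
REFINEMENTS = [
    (("Boss",), "Boss enters wrong value"),
    (("Boss", "Update"), "Incorrect transmission between boss and machine"),
    (("Update",), "Machine violates integrity"),
    (("Update", "Secret"),
     "Incorrect transmission between machine and lexical domain"),
    (("Secret",), "Database stores incorrect value"),
]


def refine(initial, refinements, diagram_domains):
    """Return leads-to edges from each refining scenario to the initial one."""
    edges = []
    for elements, label in refinements:
        # Only elements of the statement's own diagram may be used (cf. VC18).
        if not set(elements) <= diagram_domains:
            raise ValueError(f"{label}: {elements} not in the diagram")
        edges.append((label, initial))
    return edges


edges = refine(INITIAL, REFINEMENTS, {"Boss", "Update", "Secret"})
for source, target in edges:
    print(f"{source} -> {target}")
```

With three domains and two interfaces, the sketch produces five refining scenarios, each pointing to the initial one.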

The results of the threat identification can now be further analyzed, e.g. to identify appropriate controls to limit the harm to assets.

6 RELATED WORK

There is a recent case study on threat modeling that re-

vealed the effectiveness and efficiency of threat mod-

eling in large scale applications (Stevens et al., 2018).

The participants reported that threat modeling sup-

ports the communication in teams and the develop-

ment of mitigation strategies. Since we provide a tool

and graphical representations of the model, we im-

prove the scalability for larger applications, and the

diagrams can be used as a starting point in discus-

sions.

Lund et al. propose the CORAS risk management

process from which we adapted our notation (Lund

et al., 2011). The authors describe the process of

identifying threats as an interactive brainstorming ses-

sion. By considering our method, the brainstorming

sessions may be structured in a systematic way.

Abuse frames describe problem-oriented patterns

to analyze security requirements from an attacker’s

point of view (Lin et al., 2003). An anti-requirement

is fulfilled when a threat initiated by an attacker is

realized. Domains are considered as assets. Abuse

frames consider problems in isolation, whereas we

consider the system as a whole.

Haley et al. provide a problem-oriented framework to analyze security requirements (Haley et al., 2008). Using so-called satisfaction arguments, the authors analyze a system with regard to security, based on its functional requirements. There is no specific way to identify threats. Instead, the framework allows analyzing the system as a whole, i.e. analyzing functional requirements, assets, threats, and countermeasures in combination.

In the context of goal-oriented software engineering, Secure Tropos allows modeling and analyzing security requirements along with

functional requirements (Mouratidis and Giorgini,

2007). Another goal-oriented approach is the usage

of anti-models (van Lamsweerde, 2004). Currently,

we do not consider goals in detail and do not provide

any goal refinement process. Instead, we put a special

focus on requirements and the corresponding data

flow which we analyze with regard to information

security. By combining problem frames with goal-

oriented methods, it is possible to document the goals

of the system and its context (Mohammadi et al.,

2013).

Sindre and Opdahl introduce misuse cases as an extension of use cases. A misuse case describes how software shall not be used, i.e. how potential attackers may harm the software (Sindre and Opdahl, 2005). Misuse cases are high-level descriptions that do not provide details about information flows, whereas our approach does.

Microsoft developed a method called STRIDE

(Shostack, 2014). It is a popular security framework

which is used to identify security threats. Using data

flow diagrams for modeling the system and its be-

havior, threats are elicited based on threat categories.

Each of these categories is a negative counterpart to a

security goal. Those categories may be considered as

guide words to improve the derivation of threat sce-

narios for specific statements in our approach.
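
As an illustration of how such guide words could be looked up, the following Python sketch maps each STRIDE category to the security property it violates, following the common presentation of STRIDE. The function `guide_words` is hypothetical and not part of the method.

```python
# STRIDE category -> security property that the category violates.
STRIDE = {
    "Spoofing": "Authentication",
    "Tampering": "Integrity",
    "Repudiation": "Non-repudiation",
    "Information disclosure": "Confidentiality",
    "Denial of service": "Availability",
    "Elevation of privilege": "Authorization",
}


def guide_words(security_goal):
    """Return the STRIDE categories that threaten the given security goal."""
    wanted = security_goal.lower()
    return [category for category, goal in STRIDE.items()
            if goal.lower() == wanted]


for goal in ("confidentiality", "integrity", "availability"):
    print(goal, "->", guide_words(goal))
```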

The STORE method allows eliciting security requirements in the earliest phases of a software development process (Ansari et al., 2019). The authors follow a similar approach to first identify domain knowledge such as external entities and vulnerabilities. To identify threats, the authors propose a threat dictionary which contains a collection of previously identified threats. Currently, we do not consider any catalogs of threats as an input for our method. Conversely, the authors do not consider dependencies between requirements or any information flow. Thus, both approaches may benefit from each other.

Beckers proposes attacker templates to elicit possible attackers of a system (Beckers, 2015). Those

templates help to systematically identify and specify

the behavior and capabilities of potential attackers.

By embedding those templates in our process, we can

improve the identification of attackers, and therefore

the identification of security incidents.

7 CONCLUSION

Finally, we summarize our contributions and provide

an outlook on future research directions.

Summary. In this paper, we proposed a model-

based method to systematically derive relevant secu-

rity incidents from functional requirements. As re-

quired input, we consider a problem-oriented require-

ments model which we extend with additional domain

knowledge, i.e. additional stakeholders and possible

attackers. By traversing the requirements model and

identifying sequences of statements, our method de-

rives security incidents that may lead to harm for an

asset. Since we do not inspect each requirement or

assumption in isolation, we consider the software to

be developed and its environment as a whole. To fur-

ther refine the identified incidents, we inspect each re-

quirement and elicited domain knowledge in more de-

tail. Our model-based approach ensures consistency

and traceability through all the steps of the method.

By providing validation conditions for each step, we

assist security engineers in detecting errors in the ap-

plication of our method as early as possible.

To limit the effort to apply our method and to en-

sure scalability, we provide a tool for our method.

The provided wizards guide through the method, and

with our notation, we present the diagrams in a user-

friendly way. We formalized several validation con-

ditions to validate the models automatically.

Outlook. As future work, we plan to evaluate the ef-

fectiveness of our method and our tool. To do so, we

cooperate with an industrial company. In an experi-

ment, we will compare the results achieved with our

method with the results manually achieved by their

security experts.

As mentioned in Section 1, we proposed a tem-

plate to specify threats. We will embed this template

into our method to further assist security engineers in

identifying relevant threats.

To enhance the user experience with our tool, we

plan to add quick fixes that help users in correcting

errors in their models. Those quick fixes will be based

on the not yet completed set of validation conditions.

REFERENCES

Ansari, M. T. J., Pandey, D., and Alenezi, M. (2019).

STORE: security threat oriented requirements engi-

neering methodology. CoRR, abs/1901.01500.

Beckers, K. (2015). Pattern and Security Requirements

- Engineering-Based Establishment of Security Stan-

dards. Springer.

FIRST.org (2015). Common Vulnerability Scor-

ing System v3.0: Specification Document.

https://www.first.org/cvss/cvss-v30-specification-

v1.8.pdf.

Haley, C. B., Laney, R. C., Moffett, J. D., and Nuseibeh, B.

(2008). Security requirements engineering: A frame-

work for representation and analysis. IEEE Trans.

Software Eng., 34(1):133–153.

International Organization for Standardization (2018). ISO

31000:2018 Risk management – Principles and guide-

lines. Standard.

Jackson, M. A. (2000). Problem Frames - Analysing and

Structuring Software Development Problems. Pearson

Education.

Lin, L., Nuseibeh, B., Ince, D. C., Jackson, M., and Moffett,

J. D. (2003). Analysing security threats and vulnera-

bilities using abuse frames.

Lund, M. S., Solhaug, B., and Stølen, K. (2011).

Model-Driven Risk Analysis - The CORAS Approach.

Springer.

Meis, R. (2013). Problem-based consideration of privacy-

relevant domain knowledge. In Hansen, M., Hoep-

man, J., Leenes, R. E., and Whitehouse, D., editors,

Privacy and Identity Management for Emerging Ser-

vices and Technologies - 8th IFIP International Sum-

mer School, Nijmegen, The Netherlands, June 17-21,

2013, Revised Selected Papers, volume 421 of IFIP

Advances in Information and Communication Tech-

nology, pages 150–164. Springer.

Mohammadi, N. G., Alebrahim, A., Weyer, T., Heisel, M.,

and Pohl, K. (2013). A framework for combining

problem frames and goal models to support context

analysis during requirements engineering. In Cuz-

zocrea, A., Kittl, C., Simos, D. E., Weippl, E. R.,

and Xu, L., editors, 5th International Cross-Domain

Conference, CD-ARES 2013, Regensburg, Germany,

September 2-6, 2013. Proceedings, volume 8127 of

LNCS, pages 272–288. Springer.

Mouratidis, H. and Giorgini, P. (2007). Secure Tropos: a security-oriented extension of the Tropos methodology. International Journal of Software Engineering and Knowledge Engineering, 17(2):285–309.

Shostack, A. (2014). Threat Modeling: Designing for Se-

curity. Wiley.

Sindre, G. and Opdahl, A. L. (2005). Eliciting security re-

quirements with misuse cases. Requir. Eng., 10(1).

Stevens, R., Votipka, D., Redmiles, E. M., Ahern, C., Sweeney, P., and Mazurek, M. L. (2018). The battle for New York: A case study of applied digital threat modeling at the enterprise level. In Enck, W. and Felt, A. P., editors, 27th USENIX Security Symposium, USENIX Security 2018, Baltimore, MD, USA, August 15-17, 2018, pages 621–637. USENIX Association.

van Lamsweerde, A. (2004). Elaborating security require-

ments by construction of intentional anti-models. In

Finkelstein, A., Estublier, J., and Rosenblum, D. S.,

editors, 26th International Conference on Software

Engineering (ICSE 2004), 23-28 May 2004, Edin-

burgh, United Kingdom, pages 148–157. IEEE Com-

puter Society.

van Lamsweerde, A. (2009). Requirements Engineering -

From System Goals to UML Models to Software Spec-

ifications. Wiley.

Wirtz, R. and Heisel, M. (2018). A systematic method to

describe and identify security threats based on func-

tional requirements. In Zemmari, A., Mosbah, M.,

Cuppens-Boulahia, N., and Cuppens, F., editors, Risks

and Security of Internet and Systems - 13th Interna-

tional Conference, CRiSIS 2018, Arcachon, France,

October 16-18, 2018, Revised Selected Papers, vol-

ume 11391 of LNCS, pages 205–221. Springer.

Wirtz, R. and Heisel, M. (2019). RE4DIST: model-

based elicitation of functional requirements for dis-

tributed systems. In van Sinderen, M. and Maciaszek,

L. A., editors, Proceedings of the 14th International

Conference on Software Technologies, ICSOFT 2019,

Prague, Czech Republic, July 26-28, 2019, pages 71–81. SciTePress.

Wirtz, R., Heisel, M., Borchert, A., Meis, R., Omerovic, A.,

and Stølen, K. (2018). Risk-based elicitation of se-

curity requirements according to the ISO 27005 stan-

dard. In Damiani, E., Spanoudakis, G., and Maci-

aszek, L. A., editors, Evaluation of Novel Approaches

to Software Engineering - 13th International Con-

ference, ENASE 2018, Funchal, Madeira, Portugal,

March 23-24, 2018, Revised Selected Papers, volume

1023 of Communications in Computer and Informa-

tion Science, pages 71–97. Springer.
