Assessing the Impact of Idle State Type on the Identification of RGB Color Exposure for BCI

Alejandro A. Torres-García (a), Luis Alfredo Moctezuma (b) and Marta Molinas (c)

Department of Engineering Cybernetics, Norwegian University of Science and Technology (NTNU), Gløshaugen,

O. S. Bragstads plass 2, Trondheim, Norway

Keywords:

EEG Signals, Brain-Computer Interfaces (BCI), Classification, Color Exposure, Idle States, SVM, Random

Forest, Discrete Wavelet Transform (DWT), Empirical Mode Decomposition (EMD).

Abstract:

Self-paced Brain-Computer Interfaces (BCIs) are desirable because they allow the user to control the BCI without a cue indicating when to send a command or message. As a first step towards a self-paced color-based BCI, we assessed whether a machine learning algorithm can learn to distinguish between primary color exposure and idle state. In this paper, we record and analyze the EEG signals from 18 subjects to assess the feasibility of distinguishing between color exposure and idle states. Specifically, we separately compare the performances obtained in the classification of color exposure against two different types of idle states (one relaxation-related and another attention-related). We characterize the signals in two different ways, based on the discrete wavelet transform (DWT) and Empirical Mode Decomposition (EMD), respectively. We trained and tested two different classifiers, support vector machine (SVM) and random forest. The outcomes provide experimental evidence that a machine learning algorithm can distinguish between the two classes (exposure to primary colors and idle states), regardless of the kind of idle state analyzed. The most consistent outcomes were obtained using EMD-based features, with accuracies of 92.3% and 91.6% (considering a break and an attention-related task as the idle states, respectively). Also, when we discarded the epochs’ onset, the performances were 91.8% and 94.6%, respectively.

1 INTRODUCTION

EEG-based brain-computer interfaces (BCIs) can be seen as pattern recognition systems that learn from the users’ brain signals to help them send messages and commands to the external world. In the beginning, these systems focused only on disabled people, but now there are applications (such as game control) for other users too. In particular, EEG-based BCIs use one of the following four neuro-paradigms for sending messages and commands: motor imagery (MI), slow cortical potentials (SCP), P300 signals, and steady-state visual evoked potentials (SSVEP). The last two are visual BCIs that require an additional system for flickering the stimuli, which allows the generation of the specific signal for interacting with the BCI. In P300 BCIs, this flickering system elicits a P300 peak about 300 ms after the desired output is flashed, whereas in SSVEP BCIs, this system flickers all the commands but at different frequencies.

(a) https://orcid.org/0000-0001-5091-0764

(b) https://orcid.org/0000-0002-6632-8784

(c) https://orcid.org/0000-0002-8791-0917

Aiming to dispense with this flickering stimulator, the use of the EEG responses to either color exposure (Åsly, 2019; Rasheed, 2011; Torres-García and Molinas, 2019; Åsly et al., 2019; Soler-Guevara et al., 2019) or the imagination of colors (Yu and Sim, 2016; Rasheed, 2011; Torres-García and Molinas, 2019) has been analyzed, with varying degrees of success, with the aim of implementing a color-based BCI. Also, these works could take advantage of the presence of color-based cues in our daily life.

Unlike the other visual BCIs, an online color-based BCI will need a method for identifying the segments in which the subjects are seeing the corresponding colors (control commands) and those in which they are not (idle state). In this case, the active state of a color-based BCI occurs when the subjects see the target colors, and the idle state occurs when the subjects are at rest or doing a different activity. Consequently, this kind of BCI can also be classified as a self-paced BCI.

Torres-García, A., Moctezuma, L. and Molinas, M.

Assessing the Impact of Idle State Type on the Identification of RGB Color Exposure for BCI.

DOI: 10.5220/0008923101870194

In Proceedings of the 13th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2020) - Volume 4: BIOSIGNALS, pages 187-194

ISBN: 978-989-758-398-8; ISSN: 2184-4305

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


For that reason, and to provide more evidence of the feasibility of a self-paced color-based BCI, we analyzed whether a machine learning algorithm can distinguish between the EEG segments of target colors (red, green and blue) and idle state using the dataset recorded in (Åsly, 2019). In particular, the main contribution of this paper is the assessment of the impact of two different types of idle states on the recognition performance of color exposure and idle states. The first one (fixation cross) corresponds to the epochs in which the subjects had to pay attention to the screen, whereas the second one corresponds to the indication of a short break. The relevance of this contribution is to examine whether the method can recognize the activity of interest (EEG responses to color exposure) regardless of the kind of idle state analyzed. Finally, two different sets of features (EMD-based and DWT-based) along with two classifiers (random forest and SVM) are studied to examine whether any difference can be found between the classification of each idle state (separately) and color exposure. The most similar works are presented below.

2 RELATED WORKS

Distinguishing activities of interest from the idle state is an important problem to be solved. In that sense, there are some works using the motor imagery neuro-paradigm (Dyson, 2010; Dyson et al., 2010) and also using imagined speech (AlSaleh et al., 2018; Song and Sepulveda, 2014; Moctezuma et al., 2017). However, there is not enough evidence about whether this can be achieved in color-exposure-based BCIs.

The work presented by (Moctezuma et al., 2017) evaluated the classification of linguistic activity vs linguistic inactivity (idle state). They used two different datasets: the first one consists of EEG signals from 27 subjects and 5 imagined words (internal pronunciation), and the second one of 20 subjects and 4 imagined words. The feature extraction was based on the discrete wavelet transform (DWT) with four levels of decomposition using the biorthogonal 2.2 mother wavelet; then, for each extracted sub-band, the Teager, instantaneous, hierarchical and relative energy distributions were computed. They presented another characterization based on 15 statistical values. The obtained vectors were used as input for the random forest (RF) classifier, obtaining accuracies up to 78% and 92%, respectively, for each dataset using DWT-based features, and 83% and 91% when using statistical values.

The discrimination between imagined speech and two different non-speech tasks from EEG signals was analyzed by (AlSaleh et al., 2018). They applied high-pass and low-pass zero-phase filters in the range of 1–30 Hz to remove power-line noise and noise corresponding to body movements. Spatio-spectral and temporal features were extracted from each EEG channel and used as input to the linear discriminant analysis (LDA) algorithm and a linear support vector machine (SVM). The reported results were obtained using a dataset from nine subjects and 14 EEG channels.

They used 528 trials for each class and different trial lengths (1, 1.5 and 2 seconds). In their best case, they reported an accuracy of 67% for the classification between imagined speech and visual attention (non-speech) using SVM and 1 second of the signal. Since the classification accuracy is close to the chance level, more work on feature extraction is needed. Additionally, the paradigms compared are not directly comparable with our approach.

The work described by (Åsly, 2019) presented a preliminary set of experiments for classifying the EEG signals corresponding to RGB colors and idle state, using a pre-processed version of the dataset recorded in (Rasheed, 2011). The dataset consisted of seven subjects, and the configuration pooled all the instances of all the subjects (a single dataset), with all the RGB color exposures as a single class and the idle state as the other. Then, for each instance of RGB color or idle state, the feature extraction was performed using empirical mode decomposition (EMD), choosing only the first 3 intrinsic mode functions (IMFs). After that, for each IMF, the instantaneous and Teager energy, Petrosian and Higuchi fractal dimensions, minimum, maximum, mean, median, variance, standard deviation, kurtosis, and skewness were computed. The work reported accuracies up to 99% and 87% using the random forest classifier when using the whole RGB-color segment or a limited window (eliminating the first 500 ms to exclude a possible P300 peak), respectively.

The onset of speech-related activity vs idle state was analyzed by (Song and Sepulveda, 2014). The dataset used consisted of EEG signals of linguistic activity from four subjects. They applied a band-pass filter from 4 to 20 Hz, and then an autoregressive (AR) model was used for feature extraction. The classification was performed using LDA, obtaining an accuracy of 79.88% on average for the four subjects.

The work presented by (Torres-García et al., 2019) showed accuracies up to 98.7% for the classification of RGB colors vs idle state using an SVM classifier. These results were obtained using a dataset of 7 subjects and 52 instances for each RGB color. The feature extraction consisted of sub-band extraction using EMD; then, for each sub-band, 2 energy and 2 fractal features were computed.

BIOSIGNALS 2020 - 13th International Conference on Bio-inspired Systems and Signal Processing

Finally, the most closely related works (described in (Torres-García et al., 2019; Åsly, 2019)) have analyzed the classification of color exposure and only one kind of idle state. Therefore, it is not clear whether the performances can be maintained when another kind of idle state is analyzed. Also, aiming at a wearable design based on dry electrodes, we assessed whether the method can achieve performances similar to those obtained in previous works.

3 EXPERIMENTAL SET-UP

We recorded the EEG signals from 20 healthy subjects aged between 21 and 27 years. Their EEG signals were recorded from eight electrodes using a g.Tec Nautilus device (with g.Sahara electrodes). The analyzed channel locations were FP1, FP2, AF3, AF4, PO3, PO4, O1 and O2, according to the 10-20 international system. These locations were selected based on related works (Yu and Sim, 2016; Rasheed and Marini, 2015; Liu and Hong, 2017).

All subjects signed an informed consent letter in which we clearly explained the research purpose, the experiment-related risks and the management of the privacy of their personal data. Furthermore, they reported their BCI experience, their handedness and conditions such as color blindness and epilepsy. They responded to a simple questionnaire (more details in (Åsly, 2019)) regarding their mental and physical health before and after the experiment.

Before arriving at the experimental session, the subjects had to avoid both applying gel or any other substance to their hair and consuming legal (coffee, tea, alcohol, medicines) or illegal stimulants for at least a day beforehand, and they were asked to have a good rest during the previous night. Before starting the experiment, the subjects’ ears were cleaned using medical alcohol wipes (85%) for better conductivity from the skin to ground and reference. Also, static electricity was discharged from them and the experimenter by touching a grounded metal object. Finally, the EEG cap was put on the subjects’ heads while verifying the correct electrode locations using a plastic measuring tape.

During the experiment, subjects were sitting in a

comfortable chair and were indicated to follow the

designed experimental protocol (described in subsec-

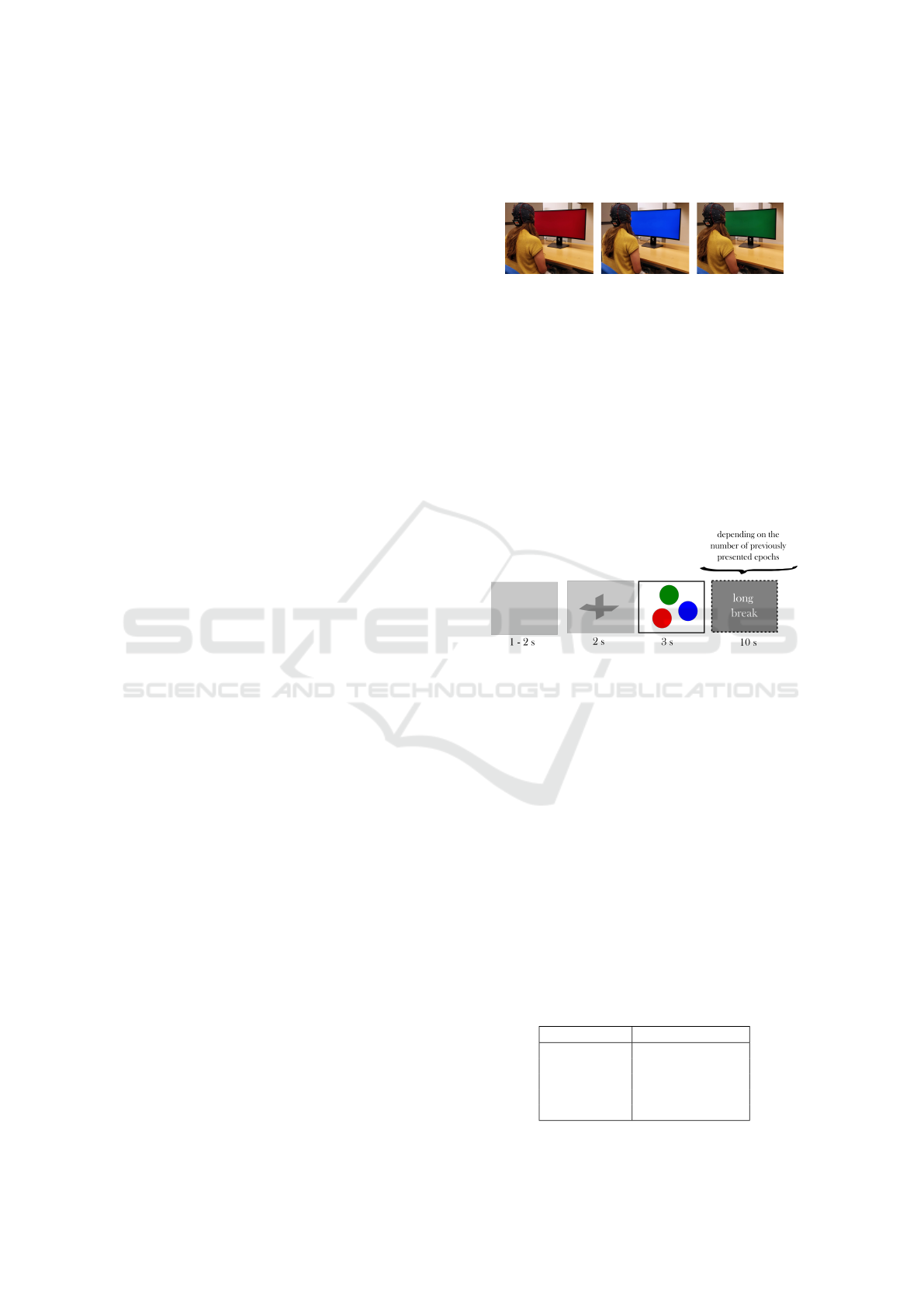

tion 3.1). Figure 1 shows the EEG signals record-

ing following this protocol during the exposure to pri-

mary colors. Finally, subjects’ were during the exper-

iment in a dark room, which was free from audible

and visible distractions. Also, an anti-static spray was

applied to its floor and furniture, looking for getting

high-quality recordings.

Figure 1: Subject in front of screen displaying RGB colors

during the experiment.

3.1 Experimental Protocol

We designed an experimental protocol for recording the subjects’ EEG signals during color exposure (see Figure 2). Specifically, we focused on the three primary colors (red, green and blue). Also, we recorded the responses to simple mathematical operations as a fourth event. However, those trials will not be discussed, as they are outside the aim of this description (see (Åsly, 2019) for more details).

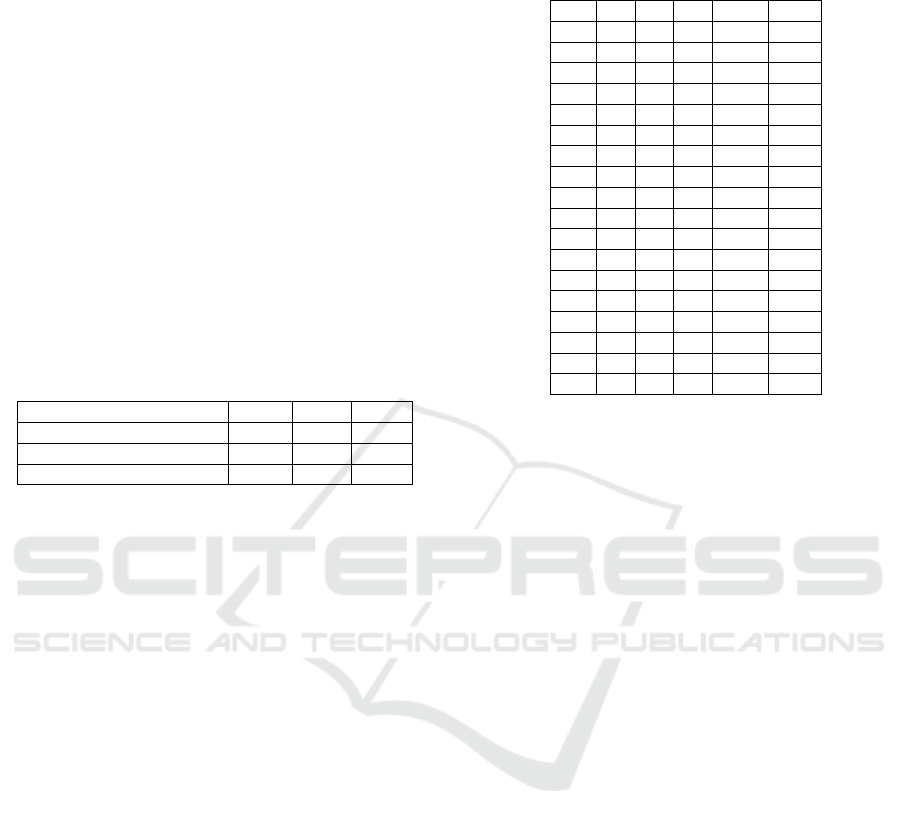

Figure 2: Protocol’s timing for recording EEG signals dur-

ing color exposure along with the duration for each shown

screen.

The protocol’s timing was chosen to find a good trade-off between the dynamics of the eye, color perception and subjects’ comfort. First, a gray screen was shown for a random time of 1-2 s. During this period, the subjects were allowed to blink. Then, this gray screen was kept but a fixation cross appeared in the screen’s center to warn the subjects that a primary color would be shown 2 s later. Next, one of the three primary colors was randomly presented for 3 s, and we asked the subjects to avoid blinking during this period as much as possible. The hexadecimal values of the colors used are shown in Table 1. Finally, a long pause of 10 s was shown whenever the number of presented epochs for each color reached the same multiple of five.

Table 1: Used colors in hexadecimal format.

| Color | Hex value (RGB) |
|---|---|
| red | FF 00 00 |
| green | 00 80 00 |
| blue | 00 00 FF |
| light gray | C9 C9 C9 |
| medium gray | 80 80 80 |


3.2 Dataset Summary

At the end of the recording process, we had obtained the EEG signals from 20 subjects. We recorded at least one run from all the subjects, but we also got two runs for 13 subjects (S4-S11, S13-S16 and S19) and three runs for S20. In each run, we recorded 15 instances for each color, 60 cross-fixation-related instances and 60 break-related instances. The number of cross-fixation-related and break-related instances is higher because we recorded an additional class (mathematical operation), which is not analyzed in this paper.

After visual inspection, we rejected all the sessions of S4 and S8 because they had channels that either contained no information or were contaminated by artifacts. Also, the second session of S5 was rejected for the same reason.

Table 2: Available instances of the NTNU color exposure dataset.

| Subjects | Colors | Cross | Break |
|---|---|---|---|
| S1-S3, S5, S12 and S17-S18 | 45 | 60 | 60 |
| S6-S7, S9-S11, S13-S16 and S19 | 90 | 120 | 120 |
| S20 | 135 | 180 | 180 |

4 METHOD

4.1 Pre-processing

We applied the following preprocessing to the signals

looking for both improving the signal-to-noise ratio

of the EEG signals and rejecting artifacts related to

any artificial trend in the signals, muscle movements,

and blinks. First, we removed the mean of each

channel. Later on, the signals were detrended and

bandpass-filtered (2-30 Hz), then, these were epoched

for extracting the interest segments of color exposure,

pause-related and cross-fixation-related. This could

be done due to the signals were a priori marked dur-

ing their recording, so that an epoch is a repetition

of the EEG signals recorded during the presentation

of the interest colors, pause, and cross-fixation in this

work. Then, those epochs with at least one sample

with an amplitude higher/lower than ±100 µ V were

rejected. The final distribution of the instances for the

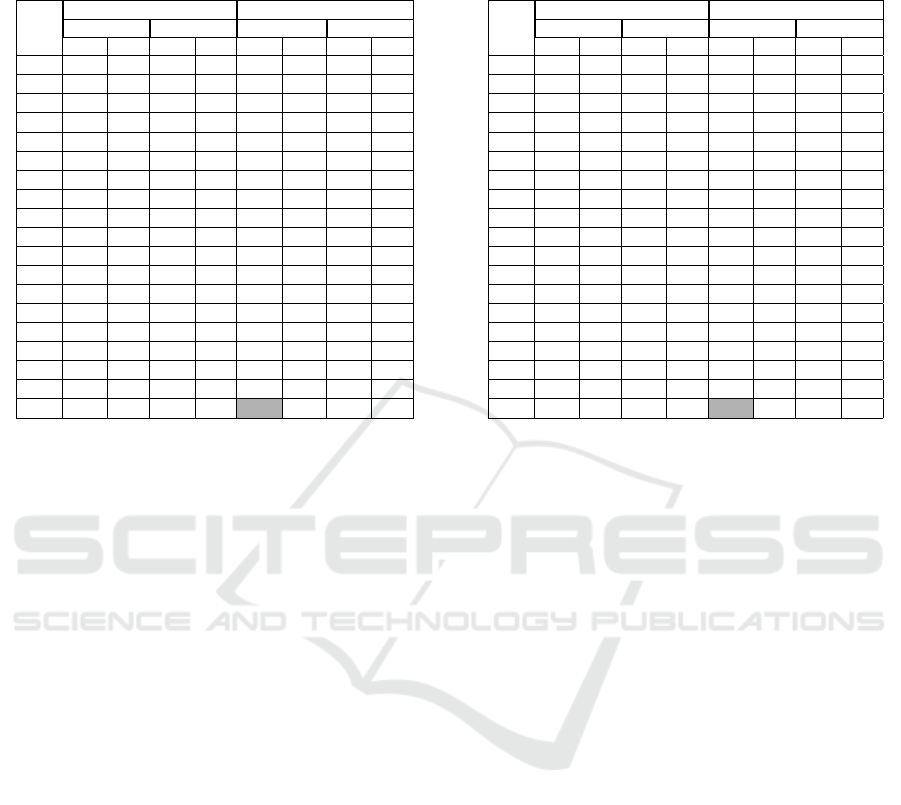

experiments is shown in Table 3.
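The mean removal and the amplitude-based epoch rejection described above can be sketched as follows. This is only an illustrative sketch: the function names and the nested-list data layout are assumptions, and the detrending and band-pass filtering steps are omitted.

```python
from statistics import fmean


def remove_mean(channel):
    """Subtract the channel mean from every sample."""
    m = fmean(channel)
    return [x - m for x in channel]


def reject_epochs(epochs, threshold_uv=100.0):
    """Keep only the epochs whose samples all lie within +/- threshold_uv.

    Assumed layout: `epochs` is a list of epochs, each epoch is a list of
    channels, and each channel is a list of samples in microvolts.
    """
    kept = []
    for epoch in epochs:
        peak = max(abs(x) for channel in epoch for x in channel)
        if peak <= threshold_uv:
            kept.append(epoch)
    return kept


# Toy data: two 2-channel epochs; the second one contains a 150 uV spike.
clean = [[10.0, -20.0, 5.0], [3.0, 4.0, -8.0]]
noisy = [[10.0, 150.0, 5.0], [3.0, 4.0, -8.0]]
kept = reject_epochs([clean, noisy])
print(len(kept))  # only the clean epoch survives
```

A single out-of-range sample in any channel is enough to discard the whole epoch, which matches the rejection rule stated in the text.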

4.2 Feature Extraction

The EEG signals are non-stationary, which means that their frequency components vary in time. Therefore, the most suitable techniques are those that allow simultaneous analysis in both frequency and time to detect changes in both domains. In this paper, we employed DWT and EMD. The first one decomposes the signals without the a priori definition of a constant window size, which could prevent the detection of changes in some frequencies and which the Short-Time Fourier transform (STFT) requires. The second one is a data-driven method that, unlike DWT and STFT, does not depend on a dictionary of functions to decompose the original signals. Also, DWT-based and EMD-based features have been previously explored in RGB color exposure classification. The wavelet features are based on the method described in (Torres-García and Molinas, 2019), whereas the computation of the EMD-based features is based on the method described in (Torres-García et al., 2019). Although we analyzed a different dataset, the use of these methods also provides a benchmark for comparison with these previous works.

Table 3: Instance distribution after pre-processing and amplitude-based epoch removal.

| sub | R | G | B | break | cross |
|---|---|---|---|---|---|
| S1 | 13 | 14 | 12 | 46 | 51 |
| S2 | 10 | 11 | 12 | 45 | 42 |
| S3 | 8 | 6 | 6 | 44 | 39 |
| S5 | 14 | 13 | 12 | 20 | 21 |
| S6 | 22 | 26 | 26 | 34 | 21 |
| S7 | 30 | 30 | 29 | 113 | 112 |
| S9 | 27 | 26 | 27 | 50 | 109 |
| S10 | 23 | 20 | 24 | 80 | 68 |
| S11 | 28 | 29 | 27 | 73 | 80 |
| S12 | 14 | 11 | 11 | 56 | 55 |
| S13 | 25 | 24 | 23 | 98 | 95 |
| S14 | 19 | 17 | 18 | 46 | 38 |
| S15 | 29 | 30 | 30 | 108 | 103 |
| S16 | 30 | 28 | 26 | 103 | 109 |
| S17 | 13 | 15 | 13 | 10 | 36 |
| S18 | 13 | 11 | 10 | 38 | 33 |
| S19 | 27 | 27 | 29 | 114 | 115 |
| S20 | 41 | 39 | 39 | 129 | 131 |

For DWT-based features, we used the biorthogonal 2.2 mother wavelet with 4 levels of decomposition. This number of decomposition levels was chosen because each resulting level is related to a given brain rhythm. Then, for each extracted sub-band, four features were computed: instantaneous and Teager energy distribution, and Higuchi and Petrosian fractal dimension. After applying this process, each instance is represented by a feature vector with 8 × 5 × 4 = 160 values (8 channels, 5 sub-bands and 4 features). These features were chosen because of the results obtained previously and because they can represent variations in both amplitude and frequency (Didiot et al., 2010).
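As an illustration of the per-sub-band features, the sketch below computes three of the four features for one sub-band. The formulas are assumptions, since the text does not spell them out: the energies follow the commonly used log-energy definitions, the Petrosian fractal dimension uses the usual sign-change formulation, and the Higuchi fractal dimension is omitted for brevity. The wavelet decomposition itself is not shown.

```python
import math


def instantaneous_energy(x):
    """Assumed definition: log10 of the mean squared amplitude."""
    return math.log10(sum(v * v for v in x) / len(x))


def teager_energy(x):
    """Assumed definition: log10 of the averaged |x[n]^2 - x[n-1]*x[n+1]|."""
    n = len(x)
    total = sum(abs(x[i] ** 2 - x[i - 1] * x[i + 1]) for i in range(1, n - 1))
    return math.log10(total / n)


def petrosian_fd(x):
    """Petrosian fractal dimension based on sign changes of the first difference."""
    n = len(x)
    diff = [b - a for a, b in zip(x, x[1:])]
    sign_changes = sum(1 for a, b in zip(diff, diff[1:]) if a * b < 0)
    return math.log10(n) / (math.log10(n) + math.log10(n / (n + 0.4 * sign_changes)))


# Toy sub-band (not real EEG data).
subband = [0.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0]
features = [instantaneous_energy(subband), teager_energy(subband), petrosian_fd(subband)]
print(features)

# Feature-vector length for the DWT-based representation:
# 8 channels x 5 sub-bands (4 detail levels + 1 approximation) x 4 features.
n_dwt_features = 8 * 5 * 4
print(n_dwt_features)
```

Note that both energy functions take a logarithm, so a sub-band that is identically zero would need special handling in practice.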

EMD was also used for sub-bands extraction, con-

sidering only the first three IMFs, in case only two

BIOSIGNALS 2020 - 13th International Conference on Bio-inspired Systems and Signal Processing

190

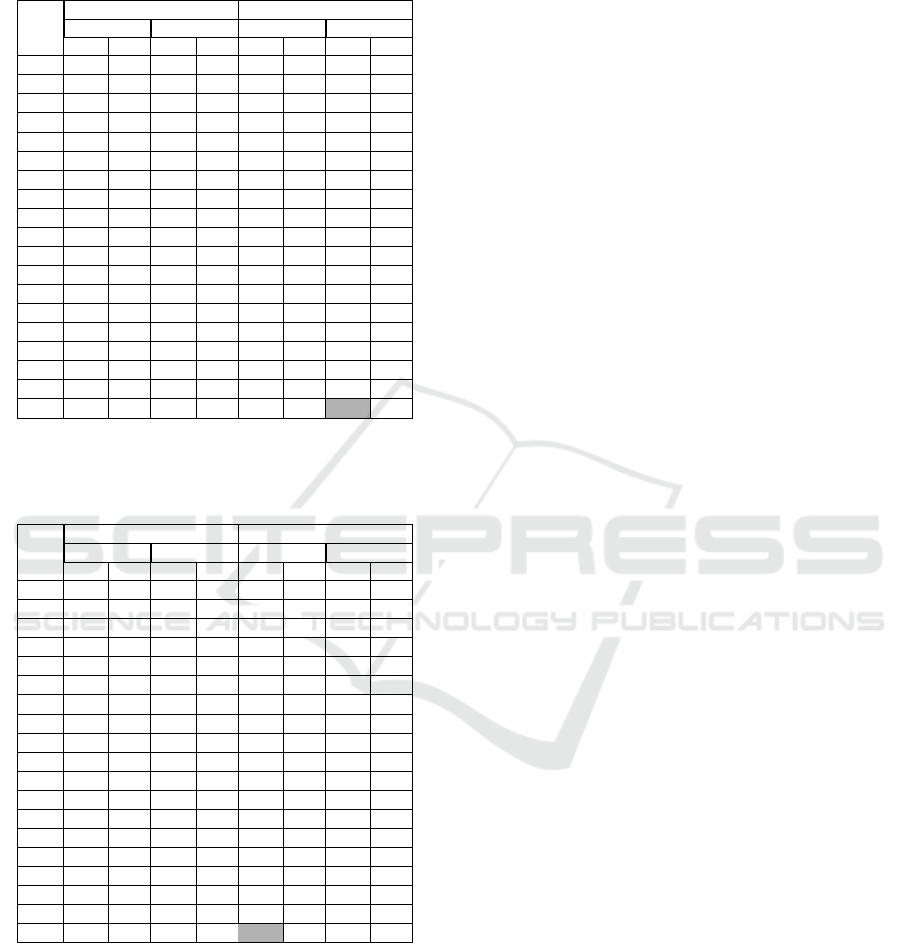

Figure 3: Method used for feature extraction from EEG sig-

nals recorded during exposure to colors and the idle states.

IMF were computed, we also used the residual. For

each IMF we computed the same four features, with

this process we obtained 8 ∗ 3 ∗ 4 = 96 features per

instance, as it is illustrated in Fig. 3.
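The sub-band selection rule for EMD (first three IMFs, falling back to the residual when only two IMFs exist) can be sketched as below. The function name and the list-based representation are hypothetical, and the EMD sifting itself is assumed to have been computed elsewhere.

```python
def select_subbands(imfs, residual, n_subbands=3):
    """Return the first `n_subbands` IMFs, using the residual as the last
    sub-band when exactly one IMF is missing."""
    selected = list(imfs[:n_subbands])
    if len(selected) == n_subbands - 1:
        selected.append(residual)
    if len(selected) != n_subbands:
        raise ValueError("not enough components to build the sub-bands")
    return selected


# Toy components standing in for the output of an EMD routine.
imf1, imf2, imf3, imf4 = [1.0, -1.0], [0.5, -0.5], [0.2, -0.2], [0.1, -0.1]
res = [0.05, 0.06]

print(select_subbands([imf1, imf2, imf3, imf4], res) == [imf1, imf2, imf3])
print(select_subbands([imf1, imf2], res) == [imf1, imf2, res])

# Feature-vector length: 8 channels x 3 sub-bands x 4 features.
n_emd_features = 8 * 3 * 4
print(n_emd_features)
```

Keeping the number of sub-bands fixed at three guarantees that every instance yields a feature vector of the same length, which the classifiers require.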

The obtained vectors with their corresponding

tags were used as input for two different machine

learning classifiers, which are briefly described below.

4.3 Classification

From previous experiments (Torres-García et al., 2019; Torres-García and Molinas, 2019) in color exposure classification and in the discrimination of idle state and color recognition, we identified SVM and RF as the most suitable classifiers for this task, outperforming Naive Bayes and K-nearest neighbors. These classifiers aim to infer a function from the dataset, characterized using either of the two kinds of features, for assigning each instance of the dataset to one of the two available classes (color exposure and idle state). Specifically, RF is an ensemble of decision trees with good properties in terms of speed and the capability to handle instances with a large number of features, whereas SVM looks for the hyperplane that maximizes the separation between classes, relying on the kernel trick. Finally, we used the versions of both classifiers implemented in the Weka toolbox (Witten et al., 2016) with their default hyperparameter values. It is important to mention that, since the number of instances is not large, deep learning methods were not analyzed, because their performance is reported to depend on a large amount of data, which is not common in BCI applications (Lotte et al., 2018).

5 EXPERIMENTS AND RESULTS

In this section, we investigated whether a machine learning algorithm could discriminate between all the RGB colors seen as a single class and the idle state (analyzing two different types of idle state separately). All the experiments were carried out as binary classification problems, using balanced arrangements of the dataset that depend on the number of instances of the minority class. This aims to clearly evaluate whether a given kind of idle state has an impact on the method’s performance, while discarding possible differences related to a different number of instances for each class.

All the classifiers’ performances were obtained by applying 10-fold cross-validation. This means that each arrangement of the dataset is divided into 10 partitions, 9 of which are used for training each classifier and the remaining one for testing it. For each classifier, this process is repeated until each of the 10 partitions has been used once for testing. The final accuracy for each classifier is the average of the 10 accuracies obtained on the testing partitions.
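The cross-validation procedure described above can be sketched in a few lines. This is a generic illustration rather than the Weka implementation used in the paper: the shuffling, the seed, and the `evaluate_fold` callback (which would train a classifier on the training indices and return its accuracy on the test indices) are assumptions, and stratification by class is not modelled.

```python
import random
from statistics import fmean


def k_fold_indices(n_instances, k=10, seed=0):
    """Shuffle the instance indices and split them into k disjoint partitions."""
    indices = list(range(n_instances))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validate(n_instances, evaluate_fold, k=10, seed=0):
    """Average the accuracy returned by `evaluate_fold(train_idx, test_idx)`
    when each partition is used once for testing."""
    folds = k_fold_indices(n_instances, k, seed)
    accuracies = []
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        accuracies.append(evaluate_fold(train_idx, test_idx))
    return fmean(accuracies)


# Dummy evaluator: returns 1.0 when training and testing indices are disjoint.
def dummy_evaluate(train_idx, test_idx):
    return 1.0 if not set(train_idx) & set(test_idx) else 0.0


folds = k_fold_indices(66)  # e.g. 33 + 33 balanced instances
mean_accuracy = cross_validate(66, dummy_evaluate)
print([len(f) for f in folds], mean_accuracy)
```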

5.1 Classification of Idle State and Color Exposure

In the first experiment, the epochs recorded during the break were assumed as the idle state. We then ran a binary classification scheme for each subject, using the same number of instances for each class. The number of instances for each subject was determined by the minority class. For example, S2 has 33 instances of all colors and 45 of the break. Therefore, the experiment was carried out using 33 instances of each class.
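The balancing step can be sketched as follows. The text does not state how the instances of the majority class were chosen, so the random subsampling (and the seed) used here is an assumption made for illustration only.

```python
import random


def balance(color_epochs, idle_epochs, seed=0):
    """Subsample both classes to the size of the minority class.

    Random subsampling is an assumption; the selection strategy is not
    specified in the text.
    """
    n = min(len(color_epochs), len(idle_epochs))
    rng = random.Random(seed)
    return rng.sample(color_epochs, n), rng.sample(idle_epochs, n)


# Instance counts of S2 after pre-processing: 33 color epochs and 45 break epochs.
color_ids = [f"color_{i}" for i in range(33)]
break_ids = [f"break_{i}" for i in range(45)]
colors_bal, idle_bal = balance(color_ids, break_ids)
print(len(colors_bal), len(idle_bal))
```

With the counts of S2, both classes end up with 33 instances, as in the example given in the text.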

The performances obtained for all the subjects are shown in Table 4. For all of them, the performances were above the chance level for two classes. Besides, after applying sign tests¹ (Z = 0.485, p = 0.628, α = .05 for DWT-based features and Z = 1.033, p = 0.302, α = .05 for EMD-based features), we observed no significant difference between classifiers (SVM and RF) when the same type of features is analyzed. Nonetheless, there is a significant difference between EMD-based and DWT-based features when each classifier is analyzed separately (Z = −3.535, p < .001, α = .05 for SVM and Z = −4.007, p < .001, α = .05 for RF).
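The sign test behind these statistics can be sketched as below, assuming the normal approximation with a continuity correction and with ties discarded (an assumption, but one that reproduces the Z value reported for SVM). The input data are the per-subject SVM accuracies listed in Table 4.

```python
import math


def sign_test(a, b):
    """Two-sided sign test for paired samples using the normal approximation
    with continuity correction; ties are discarded."""
    pos = sum(1 for x, y in zip(a, b) if x > y)
    neg = sum(1 for x, y in zip(a, b) if x < y)
    n = pos + neg
    if n == 0:
        return 0.0, 1.0
    diff = pos - n / 2
    if diff > 0:
        diff -= 0.5  # continuity correction towards zero
    elif diff < 0:
        diff += 0.5
    z = diff / (math.sqrt(n) / 2)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return z, p


# SVM accuracies per subject from Table 4 (break as idle state).
dwt_svm = [79.5, 81.2, 75.0, 81.7, 83.4, 91.0, 94.6, 85.9, 83.5,
           77.9, 87.3, 95.0, 90.5, 88.6, 86.3, 94.3, 89.7, 84.8]
emd_svm = [88.6, 90.7, 87.5, 83.0, 94.4, 94.3, 98.5, 94.8, 91.8,
           80.7, 92.2, 98.0, 97.2, 95.9, 92.0, 93.8, 92.7, 95.3]

z, p = sign_test(dwt_svm, emd_svm)
print(round(z, 3), p < 0.001)
```

Only one of the 18 subjects (S18) obtained a higher accuracy with DWT-based features, which gives Z ≈ −3.536 and p < .001, in line with the reported comparison for SVM.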

In the second experiment, the epochs recorded during the cross-presentation screen were assumed as the idle state. We also ran a binary classification scheme for each subject, using the same number of instances for each class (based on the minority class). For example, this number was set to 36 for S17.

¹ This test was chosen after the analysis of the outcomes’ boxplots, which did not have a normal distribution and were not symmetric with respect to the median.

Table 4: Percent accuracy and SDs obtained for the classifiers for the recognition of all the RGB colors and idle state (break).

| subj | DWT SVM acc | DWT SVM std | DWT RF acc | DWT RF std | EMD SVM acc | EMD SVM std | EMD RF acc | EMD RF std |
|---|---|---|---|---|---|---|---|---|
| S01 | 79.5 | 6.6 | 82.1 | 13.7 | 88.6 | 12.5 | 85.7 | 9.7 |
| S02 | 81.2 | 14.6 | 76.7 | 14.1 | 90.7 | 10.5 | 89.5 | 7.3 |
| S03 | 75.0 | 26.4 | 75.0 | 23.6 | 87.5 | 17.7 | 90.0 | 17.5 |
| S05 | 81.7 | 12.3 | 74.3 | 12.5 | 83.0 | 11.2 | 83.0 | 11.2 |
| S06 | 83.4 | 8.3 | 87.9 | 4.7 | 94.4 | 8.0 | 91.6 | 7.0 |
| S07 | 91.0 | 5.4 | 92.2 | 6.0 | 94.3 | 5.4 | 96.6 | 2.9 |
| S09 | 94.6 | 5.2 | 91.5 | 4.4 | 98.5 | 3.2 | 96.9 | 4.0 |
| S10 | 85.9 | 10.7 | 83.6 | 9.0 | 94.8 | 6.8 | 90.2 | 6.3 |
| S11 | 83.5 | 5.3 | 85.4 | 8.4 | 91.8 | 5.2 | 92.4 | 7.7 |
| S12 | 77.9 | 18.8 | 86.1 | 9.6 | 80.7 | 9.4 | 87.1 | 12.5 |
| S13 | 87.3 | 11.1 | 83.2 | 11.2 | 92.2 | 8.6 | 91.6 | 8.7 |
| S14 | 95.0 | 5.3 | 94.0 | 7.0 | 98.0 | 4.2 | 98.0 | 4.2 |
| S15 | 90.5 | 4.5 | 89.9 | 6.3 | 97.2 | 3.9 | 94.4 | 6.5 |
| S16 | 88.6 | 6.8 | 88.7 | 8.2 | 95.9 | 4.8 | 94.7 | 5.2 |
| S17 | 86.3 | 9.5 | 82.3 | 6.3 | 92.0 | 10.3 | 84.3 | 8.3 |
| S18 | 94.3 | 7.4 | 91.4 | 12.1 | 93.8 | 11.2 | 95.5 | 9.9 |
| S19 | 89.7 | 6.6 | 90.2 | 6.8 | 92.7 | 6.3 | 92.7 | 5.8 |
| S20 | 84.8 | 6.7 | 84.0 | 7.0 | 95.3 | 4.3 | 91.6 | 7.0 |
| Avg | 86.1 | 9.5 | 85.5 | 9.5 | 92.3 | 8.0 | 91.4 | 7.9 |

The performances obtained for all the subjects are shown in Table 5. For all of them, the performances were also above the chance level for two classes. There is no significant difference between classifiers (SVM and RF) when the performances for DWT-based features are analyzed (sign test: Z = 0.485, p = 0.628, α = .05). However, when the performances for EMD-based features are analyzed, there is a significant difference between both classifiers (sign test: Z = 4.007, p < .001, α = .05), with SVM performing better (average accuracy of 91.6% vs 82.0% for RF in Table 5). Also, there is a significant difference between EMD-based and DWT-based features when each classifier is analyzed separately. After applying sign tests, we obtained Z = −4.007, p < .001, α = .05 for SVM and Z = −3.395, p < .001, α = .05 for RF, with EMD-based features performing better for both classifiers. Finally, EMD-based features reduced the average of the standard deviations across all the subjects.

5.1.1 Classification of Idle State and Color Exposure Removing the Epochs’ Onset

Since the performances may be influenced by additional information related to the exposure to infrequent stimuli, we discarded the initial half-second of each epoch, aiming to assess its impact on the experiments.

Table 5: Percent accuracy and SDs obtained for the classifiers for the recognition of all the RGB colors and idle state (fixation cross).

| subj | DWT SVM acc | DWT SVM std | DWT RF acc | DWT RF std | EMD SVM acc | EMD SVM std | EMD RF acc | EMD RF std |
|---|---|---|---|---|---|---|---|---|
| S01 | 78.0 | 8.9 | 71.8 | 16.4 | 85.7 | 11.4 | 79.3 | 11.0 |
| S02 | 71.0 | 13.6 | 75.2 | 19.3 | 80.5 | 11.4 | 77.4 | 14.4 |
| S03 | 75.0 | 11.8 | 77.5 | 14.2 | 82.5 | 16.9 | 77.5 | 18.5 |
| S05 | 81.7 | 12.3 | 65.0 | 9.5 | 90.0 | 14.1 | 70.0 | 7.0 |
| S06 | 76.8 | 4.6 | 77.9 | 3.0 | 87.1 | 11.4 | 78.9 | 1.2 |
| S07 | 78.7 | 8.7 | 78.7 | 8.6 | 97.2 | 3.0 | 88.2 | 4.2 |
| S09 | 76.9 | 9.3 | 70.6 | 9.8 | 98.8 | 2.6 | 88.1 | 7.5 |
| S10 | 82.1 | 7.9 | 75.5 | 8.3 | 91.1 | 4.6 | 86.6 | 9.1 |
| S11 | 70.7 | 11.7 | 77.4 | 12.6 | 95.0 | 5.7 | 84.0 | 8.9 |
| S12 | 69.5 | 10.9 | 69.3 | 21.3 | 85.7 | 15.1 | 76.4 | 16.1 |
| S13 | 79.3 | 7.5 | 72.9 | 10.2 | 91.6 | 4.4 | 80.0 | 9.5 |
| S14 | 73.8 | 12.0 | 78.0 | 14.8 | 91.2 | 11.5 | 83.6 | 8.0 |
| S15 | 88.7 | 8.1 | 83.7 | 8.3 | 96.1 | 4.7 | 89.4 | 7.7 |
| S16 | 79.2 | 9.3 | 75.1 | 10.2 | 97.7 | 3.0 | 89.4 | 8.2 |
| S17 | 81.6 | 11.1 | 73.4 | 15.4 | 91.3 | 10.3 | 71.4 | 14.1 |
| S18 | 71.7 | 18.8 | 74.5 | 12.1 | 96.9 | 6.6 | 79.3 | 15.6 |
| S19 | 82.5 | 7.5 | 85.6 | 8.9 | 92.1 | 4.1 | 86.8 | 7.7 |
| S20 | 82.4 | 10.5 | 79.4 | 7.0 | 98.7 | 2.0 | 89.0 | 5.9 |
| Avg | 77.8 | 10.3 | 75.6 | 11.7 | 91.6 | 7.9 | 82.0 | 9.7 |

When we considered the break epochs as idle states, we obtained the performances shown in Table 6. All the performances were above the chance level for two classes. In addition, after applying sign tests, we observed no significant difference between classifiers when either DWT-based or EMD-based features are used (Z = 0.250, p = 0.803, α = .05 for DWT-based features and Z = 0, p = 1, α = .05 for EMD-based features). However, the best average performance was obtained using EMD-based features and RF. When we compared both types of features using the same classifier, significant differences were found after applying sign tests (Z = −2.593, p ≈ .009, α = .05 for SVM and Z = −2.750, p ≈ .006, α = .05 for RF).

On the other hand, when we considered the epochs of the fixation cross as idle states, we obtained the performances shown in Table 7. All the performances were above the chance level for two classes. Unlike the above-mentioned comparisons between classifiers using the same kind of features, there is a significant difference between classifiers after applying sign tests (Z = 2.1213, p ≈ 0.033, α = .05 for DWT-based features and Z = 3.0641, p ≈ 0.002, α = .05 for EMD-based features). For both types of features, the best performances were obtained using SVM.

Also, when each classifier is analyzed separately, there is a significant difference between both kinds of features after applying sign tests (Z = −4.007, p < 0.001, α = .05 for SVM and Z = −3.395, p < 0.001, α = .05 for RF). This means that EMD-based features also outperformed DWT-based features for this kind of idle state. Furthermore, the method obtained lower standard deviations when EMD-based features are analyzed.

Table 6: Percent accuracy and SDs obtained for the classifiers for the recognition of all the RGB colors and idle state (break) removing the epochs’ onset.

| subj | DWT SVM acc | DWT SVM std | DWT RF acc | DWT RF std | EMD SVM acc | EMD SVM std | EMD RF acc | EMD RF std |
|---|---|---|---|---|---|---|---|---|
| S01 | 85.9 | 12.8 | 86.1 | 7.1 | 89.6 | 10.5 | 93.6 | 6.8 |
| S02 | 79.8 | 16.7 | 83.6 | 24.9 | 87.9 | 12.2 | 92.1 | 8.3 |
| S03 | 72.5 | 21.9 | 80.0 | 19.7 | 87.5 | 13.2 | 87.5 | 17.7 |
| S05 | 86.7 | 10.5 | 83.0 | 13.7 | 83.0 | 11.2 | 83.0 | 13.7 |
| S06 | 91.6 | 8.0 | 89.8 | 6.7 | 92.6 | 5.8 | 89.8 | 7.0 |
| S07 | 91.6 | 7.2 | 89.4 | 8.8 | 94.3 | 4.7 | 95.5 | 4.5 |
| S09 | 93.1 | 4.4 | 93.1 | 4.4 | 94.6 | 5.2 | 95.4 | 4.0 |
| S10 | 86.6 | 7.6 | 83.9 | 14.2 | 97.1 | 6.9 | 94.0 | 4.8 |
| S11 | 89.1 | 6.8 | 89.8 | 7.8 | 91.7 | 4.3 | 94.2 | 5.7 |
| S12 | 81.8 | 13.3 | 87.5 | 10.0 | 83.2 | 10.9 | 90.2 | 15.1 |
| S13 | 86.8 | 6.2 | 85.9 | 10.2 | 91.6 | 9.8 | 91.5 | 6.6 |
| S14 | 92.0 | 6.3 | 95.0 | 7.1 | 98.0 | 4.2 | 94.0 | 8.4 |
| S15 | 95.6 | 4.4 | 92.1 | 6.0 | 97.2 | 4.7 | 94.9 | 4.2 |
| S16 | 89.8 | 7.5 | 89.2 | 7.5 | 94.6 | 3.5 | 94.0 | 4.1 |
| S17 | 88.0 | 14.0 | 90.0 | 10.5 | 92.0 | 10.3 | 92.0 | 10.3 |
| S18 | 95.7 | 6.9 | 95.7 | 6.9 | 92.4 | 11.3 | 95.5 | 7.3 |
| S19 | 92.8 | 5.4 | 90.9 | 5.3 | 90.3 | 5.1 | 95.1 | 4.9 |
| S20 | 91.6 | 8.2 | 86.5 | 9.9 | 95.3 | 5.9 | 92.4 | 8.5 |
| Avg | 88.4 | 9.3 | 88.4 | 10.0 | 91.8 | 7.8 | 92.5 | 7.9 |

Table 7: Percent accuracy and SDs obtained for the classifiers for the recognition of all the RGB colors and idle state (fixation cross) removing the epochs’ onset.

| subj | DWT SVM acc | DWT SVM std | DWT RF acc | DWT RF std | EMD SVM acc | EMD SVM std | EMD RF acc | EMD RF std |
|---|---|---|---|---|---|---|---|---|
| S01 | 78.6 | 15.5 | 79.3 | 12.9 | 87.1 | 8.4 | 87.3 | 10.2 |
| S02 | 65.2 | 17.7 | 80.7 | 15.5 | 86.4 | 10.7 | 79.1 | 15.0 |
| S03 | 82.5 | 16.9 | 67.5 | 20.6 | 95.0 | 10.5 | 80.0 | 19.7 |
| S05 | 80.0 | 15.3 | 73.3 | 8.6 | 88.3 | 13.7 | 78.3 | 11.3 |
| S06 | 80.9 | 7.2 | 77.9 | 3.0 | 91.7 | 8.3 | 77.9 | 3.0 |
| S07 | 90.5 | 8.7 | 84.2 | 7.1 | 98.9 | 2.3 | 94.4 | 3.7 |
| S09 | 87.5 | 8.8 | 79.4 | 7.3 | 98.8 | 2.6 | 95.0 | 4.9 |
| S10 | 88.1 | 7.8 | 73.3 | 14.5 | 98.5 | 3.1 | 93.2 | 7.5 |
| S11 | 87.8 | 9.6 | 82.3 | 11.1 | 97.5 | 4.3 | 92.7 | 7.6 |
| S12 | 76.6 | 16.6 | 74.6 | 13.5 | 97.1 | 6.0 | 85.2 | 12.8 |
| S13 | 87.6 | 9.1 | 82.7 | 5.9 | 96.6 | 3.6 | 88.3 | 8.9 |
| S14 | 71.7 | 17.3 | 78.3 | 12.6 | 87.8 | 13.3 | 90.1 | 11.0 |
| S15 | 88.3 | 9.2 | 82.5 | 11.8 | 96.7 | 3.9 | 83.8 | 8.0 |
| S16 | 84.6 | 10.6 | 82.1 | 7.4 | 97.6 | 4.2 | 94.0 | 4.9 |
| S17 | 81.8 | 14.3 | 73.8 | 16.8 | 96.3 | 6.0 | 85.9 | 16.1 |
| S18 | 78.1 | 13.7 | 75.0 | 19.2 | 94.3 | 13.8 | 91.4 | 10.0 |
| S19 | 79.0 | 13.2 | 84.9 | 10.4 | 95.8 | 4.2 | 86.7 | 10.0 |
| S20 | 84.0 | 8.8 | 83.2 | 10.1 | 98.8 | 2.8 | 92.8 | 6.1 |
| Avg | 81.8 | 12.2 | 78.6 | 11.6 | 94.6 | 6.8 | 87.6 | 9.5 |

On the other hand, since SVM with EMD-based features obtained the best accuracies, we applied an additional sign test to the accuracies obtained for both kinds of idle states (relaxation-related and attention-related), showing that there is no significant difference between them (Z = 1.65, p = 0.099, α = .05). This suggests that exposure to primary colors is different from the two idle states analyzed, and that a method could be designed to take advantage of this fact for implementing a self-paced BCI.

Finally, although all the averaged accuracies obtained after removing the epochs’ initial half-second were better than those obtained when the whole epochs were used, the differences were not significant when we separately analyzed the best outcomes for each kind of idle state (using SVM and EMD-based features). Sign tests were applied for both kinds of idle states with and without the initial half-second, giving (Z = 1.206, p = 0.228, α = .05) for the break-related idle state and (Z = −1.940, p = 0.052, α = .05) for the cross-fixation-related idle state. This suggests that any noise added by the screen transitions did not prevent discrimination between the two classes (idle and color exposure).
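Removing the epochs’ onset amounts to discarding the first half-second of samples in every channel before feature extraction. The sketch below illustrates this under stated assumptions: the function name `drop_onset`, the list-of-channels epoch layout, and the 4 Hz sampling rate of the toy example are hypothetical and chosen only to keep the example small.

```python
def drop_onset(epoch, fs_hz, onset_s=0.5):
    """Return a copy of `epoch` without its first `onset_s` seconds.

    epoch  : list of channels, each a list of samples (hypothetical layout)
    fs_hz  : sampling rate in Hz (must be supplied by the caller)
    onset_s: duration to discard, 0.5 s by default
    """
    n_drop = int(round(onset_s * fs_hz))
    if any(len(channel) <= n_drop for channel in epoch):
        raise ValueError("epoch is too short to remove the requested onset")
    return [channel[n_drop:] for channel in epoch]


# Toy epoch: 2 channels, 2 s at a hypothetical 4 Hz sampling rate (8 samples).
toy_epoch = [list(range(8)), list(range(10, 18))]
trimmed = drop_onset(toy_epoch, fs_hz=4)
print(trimmed)
```

The same trimming would be applied to both classes (idle and color exposure) so that the compared epochs keep equal length.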

6 DISCUSSION AND CONCLUSIONS

In this work, we assessed the feasibility of distinguishing EEG signals recorded during color exposure from those recorded during idle states, and we evaluated two different types of idle states. For this assessment, we extracted two different types of features and classified them using SVM and RF. We also analyzed the impact of the epochs’ onset on performance, assuming a difference related to the exposure to infrequent stimuli. The results provide experimental evidence that distinguishing RGB color exposure from idle states is possible (averaged accuracies higher than 75% in all cases), regardless of the kind of idle state analyzed. However, the method showed larger differences between feature extraction techniques than between the kinds of idle state or the classifiers studied, which suggests the suitability of EMD-based features for this task.

It is important to highlight that when we took the break-related epochs as the idle state, these implied exposure to an additional color (gray). Even though this did not seem to affect the method’s performance, further analysis would be desirable to determine whether using another color for the idle state has an impact on the experiment.

On the other hand, when the cross-fixation-related epochs were used as the idle state, the accuracies were lower (except for EMD-based features using SVM) than when break-related epochs were used. This could suggest some common underlying activity related to attention in both color exposure and cross-fixation-related epochs. Despite this common activity, the average accuracies were higher than 75% for all the classifiers and features analyzed (even when removing the epochs’ onset). This suggests a clear difference between this kind of idle state and color exposure too. Finally, the obtained outcomes confirm those obtained in (Torres-García et al., 2019) using a different dataset and analyzing a single type of idle state.

As future work, we will assess the methods in other related neuro-paradigms. Besides, the outcomes could be improved by adding a stage for feature selection or feature reduction, such as Principal Component Analysis (PCA), and by optimizing the classifiers’ hyperparameters. Also, an assessment is needed to identify the minimum epoch size required to distinguish between color exposure and idle state. This would be the second step towards an online color-based BCI implementation. Last, the non-stationary nature of EEG signals will require incremental learning in that implementation, to tune the method’s hyperparameters over the time a specific subject uses the system.

ACKNOWLEDGEMENTS

We thank the European Research Consortium for Informatics and Mathematics (ERCIM) for supporting this research with a postdoctoral fellowship.

REFERENCES

AlSaleh, M., Moore, R., Christensen, H., and Arvaneh, M. (2018). Discriminating between imagined speech and non-speech tasks using EEG. In 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pages 1952–1955. IEEE.

Åsly, S., Moctezuma, L. A., Gilde, M., and Molinas, M. (2019). Towards EEG-based signals classification of RGB color-based stimuli. In 8th Graz Brain-Computer Interface Conference, page In press.

Didiot, E., Illina, I., Fohr, D., and Mella, O. (2010). A wavelet-based parameterization for speech/music discrimination. Computer Speech & Language, 24(2):341–357.

Dyson, M. (2010). Selecting optimal cognitive tasks for control of a brain computer interface. PhD thesis, The University of Essex.

Dyson, M., Sepulveda, F., and Gan, J. Q. (2010). Localisation of cognitive tasks used in EEG-based BCIs. Clinical Neurophysiology, 121(9):1481–1493.

Liu, X. and Hong, K.-S. (2017). Detection of primary RGB colors projected on a screen using fNIRS. Journal of Innovative Optical Health Sciences, 10(03):1750006.

Lotte, F., Bougrain, L., Cichocki, A., Clerc, M., Congedo, M., Rakotomamonjy, A., and Yger, F. (2018). A review of classification algorithms for EEG-based brain–computer interfaces: a 10 year update. Journal of Neural Engineering, 15(3):031005.

Moctezuma, L. A., Carrillo, M., Villaseñor-Pineda, L., and Torres-García, A. A. (2017). Hacia la clasificación de actividad e inactividad lingüística a partir de señales de electroencefalogramas (EEG). Research in Computing Science, 140:135–149.

Rasheed, S. (2011). Recognition of primary colours in electroencephalograph signals using support vector machines. PhD thesis, Università degli Studi di Milano.

Rasheed, S. and Marini, D. (2015). Classification of EEG signals produced by RGB colour stimuli. Journal of Biomedical Engineering and Medical Imaging, 2(5):56.

Åsly, S. (2019). Supervised learning for classification of EEG signals evoked by visual exposure to RGB colors. Master’s thesis, Norwegian University of Science and Technology.

Soler-Guevara, A., Giraldo, E., and Molinas, M. (2019). Partial brain model for real-time classification of RGB visual stimuli: a brain mapping approach to BCI. In 8th Graz Brain-Computer Interface Conference, page In press.

Song, Y. and Sepulveda, F. (2014). Classifying speech related vs. idle state towards onset detection in brain-computer interfaces overt, inhibited overt, and covert speech sound production vs. idle state. In 2014 IEEE Biomedical Circuits and Systems Conference (BioCAS) Proceedings, pages 568–571. IEEE.

Torres-García, A. A., Moctezuma, L. A., Åsly, S., and Molinas, M. (2019). Discriminating between color exposure and idle state using EEG signals for BCI application. In International Conference on e-Health and Bioengineering (EHB) 2019, page In press. IEEE.

Torres-García, A. A. and Molinas, M. (2019). Analyzing the Recognition of Color Exposure and Imagined Color from EEG signals. In International Conference on BioInformatics and BioEngineering (BIBE) 2019, page In press. IEEE.

Witten, I. H., Frank, E., Hall, M. A., and Pal, C. J. (2016). Data Mining: Practical machine learning tools and techniques. Morgan Kaufmann.

Yu, J.-H. and Sim, K.-B. (2016). Classification of color imagination using Emotiv EPOC and event-related potential in electroencephalogram. Optik, 127(20):9711–9718.

BIOSIGNALS 2020 - 13th International Conference on Bio-inspired Systems and Signal Processing
