An Evaluation Framework for Future Privacy Protection Systems: A

Dynamic Identity Ecosystem Approach

David Liau, Razieh Nokhbeh Zaeem and K. Suzanne Barber

The Center For Identity, The University of Texas at Austin. U.S.A.

Keywords:

Privacy Protection, Personal Identity Information, Stochastic Game, Policy Evaluation, Identity Ecosystem.

Abstract:

Today, more than ever, everyday authentication processes involve combinations of Personally Identifiable In-

formation (PII) to verify a person’s identity. Meanwhile the number of identity thefts is increasing dramatically

compared to the past decades. As a response to this phenomenon, numerous privacy protection regulations,

and identity management frameworks and companies thrive luxuriantly.

In this paper, we leverage previous work in the Identity Ecosystem, a Bayesian network mathematical repre-

sentation of a person’s identity, to create a framework to evaluate identity protection systems. After reviewing

the Identity Ecosystem, we populate a dynamic version of it and propose a protection game for a person’s PII

given that the owner and the attacker both gain some level of control over the status of other PII within the

dynamic Identity Ecosystem. Next, We present a game concept on the Identity Ecosystem as a single round

game with complete information. We then formulate a stochastic shortest path game between the owner and

the attacker on the dynamic Identity Ecosystem. The attacker is trying to expose the target PII as soon as

possible while the owner is trying to protect the target PII from being exposed. We present a policy iteration

algorithm to solve the optimal policy for the game and discuss its convergence. Finally, an evaluation and

comparison of identity protection strategies is provided given that an optimal policy is used against different

protection policies. This study is aimed to understand the evolutionary process of identity theft and provide a

framework for evaluating different identity protection strategies and in future privacy protection system.

1 INTRODUCTION

In the year of 2018, General Data Protection Reg-

ulation(GDPR) had been enforced by the European

Union(EU) for regulation of data protection and pri-

vacy security for individuals. The discussion on pri-

vacy protection had once again become one of the

emerging topics in the society. Personally Identifiable

Information, known as PII, often refers to all the in-

formation in real world that relates to a person. Mul-

tiple chosen PII attributes are used in a group for au-

thentication or authorization. As the number of ways

that PII is used for authentication and authorization

increases, so does the number of thefts against iden-

tity. Identity theft can generally be defined as any

unauthorized use of a person’s identity. In the lat-

est government-published statistics [Harrell, 2017],

three general types of incidents of Identity theft are

included: unauthorized use or attempted use of an ex-

isting account, unauthorized use or attempted use of

personal information to open a new account, and mis-

use of personal information for a fraudulent purpose.

According to the document, identity theft had already

affected 16.7 million people in the U.S. in the year of

2017.

The study of identity theft [Berghel, 2012] with

the concept of PII, including protection/prevention

[Shah and Okeke, 2011], management [Yuan Cao

and Lin Yang, 2010] [Khattak et al., 2010], recov-

ery [Goode and Lacey, 2017], or risk of exposure [De-

laitre, 2006], had been raised to focus throughout the

past decade. Companies like Discover, LifeLock and

IdentityForce nowadays start providing identity pro-

tection services to both individual and business as

counter measures against identity theft incidents. It

is of our interest to develop a universal framework

to compare different identity protection policies with

the presence of malicious attackers. In this work, we

proposed an identity protection evaluation benchmark

that serve as an analytic tool to provide benchmark for

different identity protection policies.

From previous analysis of the Identity Threat As-

sessment and Prediction (ITAP) model [Lacey et al.,

2016], which was built from real world identity theft

data by the Center for Identity at the University of

Texas at Austin, the project established an evidence-

136

Liau, D., Zaeem, R. and Barber, K.

An Evaluation Framework for Future Privacy Protection Systems: A Dynamic Identity Ecosystem Approach.

DOI: 10.5220/0008913501360143

In Proceedings of the 12th International Conference on Agents and Artificial Intelligence (ICAART 2020) - Volume 1, pages 136-143

ISBN: 978-989-758-395-7; ISSN: 2184-433X

Copyright

c

2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

based understanding of how one personal identity

information(PII) attribute is used to access another.

This particular evolutionary process within owner’s

PII collective results in identity theft incidents that

directly causes identity owner’s loss of properties.

To formally establish understanding within a person’s

PII collective, previous work from Zaeem et al. [Za-

eem et al., 2016] has establish the Identity Ecosys-

tem, a Bayesian network representation of a person’s

identity, to study the identity theft as well as other

personal identity related issues. For instance, one

could analyze the security level to an authentication

method utilizing the power of the Identity Ecosys-

tem [Chang et al., 2018]. The Ecosystem features dif-

ferent relationships between PII attributes to answer

three main queries of the real world: The risk of ex-

posure of a certain PII, the cause of exposure, and the

cost/liability issue.

The Identity Ecosystem grants us the ability to

calculate the probability of exposure of PII given the

status of the Bayesian network. On the other hand,

stochastic games, introduced by Shapley [Shapley,

1953], have been studied for more than half a cen-

tury since the original paper. In the original paper, the

game was constructed with two players, some finite

set of game states and finite actions to both players as-

sociated with every state of the game. The transition

from a state of the game to another follows a distribu-

tion that is controlled by both players through their

actions played in the previous state. It also brings

in the concept of a ”terminal state” which the game

would transit into with some positive probability at

each state. The game is proved to be in equivalent

form to a infinite-horizon game with discounted cost.

Later on, many extensions and variants of the original

work have been developed and studied [Kumar and

Shiau, 1981].

In this paper, we apply our novel dynamic ap-

proach to the Identity Ecosystem to create an evalua-

tion platform for future privacy protection system. In

addition to that, as part of the evaluation framework,

we provided the stochastic game based approach with

the dynamic Identity Ecosystem to generate the min-

imax response to general attacker strategies. More-

over, the method is also used to provided survival

evaluation to existing privacy protection systems by

generating effective attack strategies against a per-

son’s identity. We provide a policy iteration algorithm

as well as a running example of the algorithm for the

minimax strategy. In Section 2, we cover related aca-

demic work. Then we formally explain the idea be-

hind our dynamic Identity Ecosystem and the gaming

setup for the stochastic game in Section 3. We pro-

vide the basic policy iteration algorithm setup for the

specific problem and the running example for the al-

gorithm in Section 4. The result from the algorithm is

also illustrated so that readers with minimal under-

standing of general Markov decision processes can

follow the paper without difficulty. Finally, conclu-

sions are given in Section 5.

2 RELATED WORK

In this section, we briefly review the several important

concepts and background knowledge behind the eval-

uation tool we are proposing in the paper. In addition,

it is worth mention that this work is an extension of

previous work [Liau et al., 2019].

2.1 Bayesian Networks

In order to capture the inter-relationship within a per-

son’s PII collective, the Identity Ecosystem treats a

person’s identity as a Bayesian network. Previous

work Zaeem et al. [Zaeem et al., 2016] established an

example of such probabilistic graphical model with

real world security breach data. The work consider

each PII as a node in the Bayesian network—a ran-

dom variable with some distribution given the status

information from other related PII. The directed edges

in the Bayesian network model represents the casual

dependencies between random variables.

2.2 Game Theory in Network Security

Game theory has been widely used to study network

security and related topics [Roy et al., 2010] in the

past decades. Certain literature can be found [Roy

et al., 2010] that apply game theory in general. For

static game setup, [Carin et al., 2008] provides a good

example about how to apply basic game theory to in-

formation warfare. For dynamic games, [Manshaei

et al., 2013] surveyed several different concepts of

applying game theory to network security problems.

Game theory is often used to prove that a protocol is

optimized considering all the participants involved in

the system. In our work, we study the case where the

malicious users, having some partial control over the

network, are trying to acquire a specific PII attribute.

The problem of protecting PII that we tackle is

very similar to the problems like detection of credit

card fraud. In this type of problem, the owner of the

credit card is the one that actually has some partial

observation of the current status of PII. The owner

would like to detect the credit card fraud and take

counter measures even before the credit card fraud in-

cident takes place. A concrete example can be found

An Evaluation Framework for Future Privacy Protection Systems: A Dynamic Identity Ecosystem Approach

137

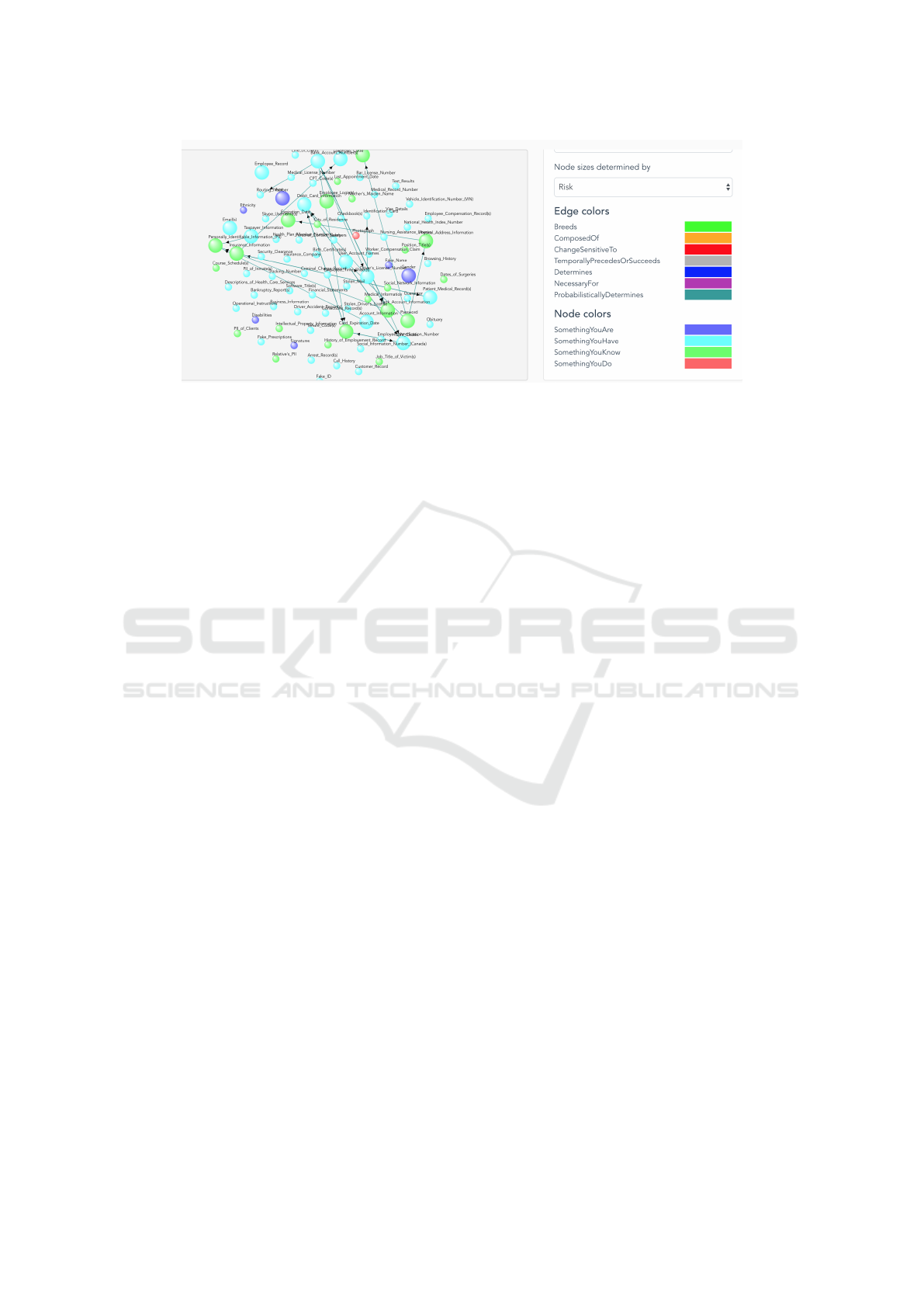

Figure 1: A snapshot of the Identity Ecosystem. In this particular example, the size of the node is determined by the risk of

exposure and different colors are used to distinguish the types of PII. It also has the ability to filter and display only related

nodes and edges to a specific PII.

in many articles like [Panigrahi et al., 2009] in which

a hybrid method to the detection of credit card fraud

was studied.

3 MATHEMATICAL

REPRESENTATION

In this section, we go through the mathematical rep-

resentation used throughout the paper including the

setup for a dynamic Identity Ecosystem. Then, we

provide a single round game example for demonstra-

tion of the idea behind our work. Finally, we intro-

duce the concept of treating identity protection as a

stochastic game.

3.1 Background: The Identity

Ecosystem

The original Identity Ecosystem in [Zaeem et al.,

2016] is a Bayesian model of PII attributes and their

relationships. Our version of the Identity Ecosystem,

as shown in Fig. 1, model is populated with real-

world data from approximately 6,000 reported iden-

tity theft and fraud cases. We leverage this populated

model to provide unique, research-based insights into

the variety of PII, their properties, and how they in-

teract. Informed by the real-world data, it enables the

investigation of the ecosystem of identifiable informa-

tion in which criminals compromise PII and misuse

them.

As an example query, the identity ecosystem im-

plementation is used to predict future risk and losses

of losing a given set of PII and the liability associ-

ated with its fraudulent use. In the Bayesian model,

each PII (e.g., Social Security Number) is modeled

as a graph node. Probabilistic relationships between

these attributes are modeled as graph edges. We lever-

age this Bayesian Belief Network with Gibb’s Sam-

pling to approximate the posterior probabilities of the

model, assuming the given set of PII attributes is com-

promised. In addition, once more information about

the victim or the incident is available, the Ecosystem

is able to refine the predicted risk and value to re-

flect the new information and converge to the risk and

value in the real world. Note that in general discus-

sion, the number for PII attributes that a person could

have is a finite number.

Utilizing a probabilistic graphical model to repre-

sent the instances of these complicated relationships

fits the purpose of understanding different aspects of

a person’s identity. The effect of PII exposure, for ex-

ample, is different depending on the status of other

PII of a person. For instance, the exposure or theft

of a person’s Social Security Number (SSN) could re-

sult in credit card fraud, identity fraud in mobile de-

vices or even unauthorized access to a person’s bank

accounts. The count on PII attributes that are at risk

of exposure if a person’s SSN is leaked may also be

different depending on the status of other PII. When

the SSN is leaked due to some incident, the impact on

personal identity for persons who share their birthday

publicly are more severe than those who do not.

3.2 PII Dynamics within Identity

Ecosystem

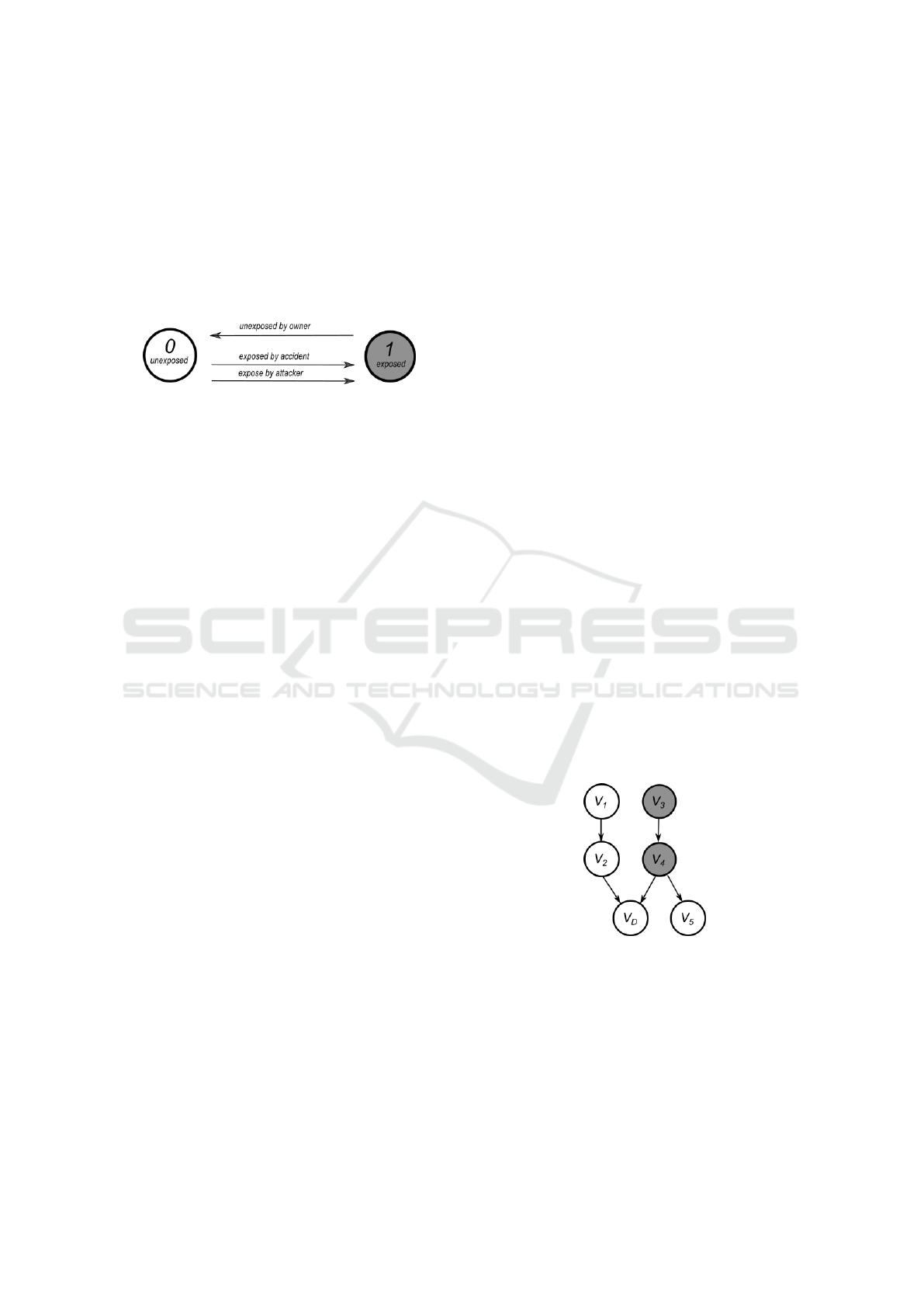

We establish our system dynamic over the Ecosystem

to construct our identity protection system evaluation

tool. Suppose there is an attacker who has some abil-

ity to expose/acquire some of the unexposed PII as

he/she wishes. The random variable turns from 0 to

1 when a person’s PII is exposed by the attacker. PII

ICAART 2020 - 12th International Conference on Agents and Artificial Intelligence

138

can also become exposed accidentally rather then be-

ing intentionally breached by the attacker. On the

other hand, the owner of the PII can also actively

make some of the exposed PII unexposed if it is al-

lowed to do so (e.g., changing a phone number). The

random variable then turns from 1 to 0 when the

owner takes action to protect the PII. Now we for-

mally define the state of the network as a N ×1 vector

S

V

:= [V

1

,V

2

,··· ,V

N

].

Figure 2: Node Model of PII. The status of the PII can

change due to owner protective action (e.g. changing pass-

port) or attacker exposure (e.g. phishing). Another case is

by exposure by accident (e.g. subscription).

This setup forms a basic game if we associate a

proper cost function to it. Consider two players, one

is the owner, who tries to minimize the probability

of a certain PII being exposed while the other is the

attacker, who tries to maximize the probability of a

certain PII being exposed. Without the lose of gen-

erality, we consider the basic case where each player

can only choose one PII to change.

3.3 Single Round Game with Complete

Information

To illustrate our idea of the basic game setup, let us

consider a similar but different example where as-

suming the game will be played only once. In this

scenario, we consider a single round, complete in-

formation setup, meaning that both the attacker (de-

noted as malicious playerM) and the owner (denoted

as player U) have the same amount of knowledge as

the other one. Let S

U

and S

M

be the collections of PII

that the owner and the attacker can change, respec-

tively. Moreover, the value of each random variable

in S

U

has to be 1 while the value of each random vari-

able in S

M

has to be 0. V

D

is the target PII which

the owner wants to protect while the attacker wishes

to expose. Suppose there is a strictly positive cost

function f : V

1

× V

2

× ··· × V

N

→ R when someone

wants to change the value of the node from 0 to 1

and also another strictly positive bounded cost func-

tion g : V

1

×V

2

× · ·· ×V

N

→ R if someone wants to

change the value of the node from 1 to 0.

Recall that in the original Identity Ecosystem, a

total of N PII attributes are deployed on the graph to

form a Bayesian Network. Link e

i j

in the Bayesian

network is directional, representing a direct influence

of V

i

to V

j

. A graph G is defined as a collection of

nodes and directional links. Define also a path from

V

i

to V

j

as a sequence of nodes and directed links that

starts at i and ends at j following the orientation of the

graph, i.e.,

(V

i

→ V

j

) = {V

i

,(V

i

,V

k

),V

k

,· ·· ,(V

m

,V

j

),V

j

}

where k, m ∈ [0,N]. An action of player i on iden-

tity j is defined as A

i

j

meaning that player i is trying

to change the value of V

j

. There exists two sets S

U

and S

M

denoting the set of PII that the owner and the

attacker can change. The following is common prop-

erties of the sets

1. S

U

∩ S

M

=

/

0

2. ∀V

j

∈ S

U

, V

j

= 1

3. ∀V

j

∈ S

M

, V

j

= 0

4. V

D

/∈ S

M

5. A action A

i

j

is valid if and only if V

j

∈ S

i

Now recall the function P

D

: V

1

× V

2

× ···V

j

·· · ×

V

N

→ [0, 1],V

j

∈ V \ V

D

from our ecosystem which

gives us the probability that V

D

is exposed under the

state S

V

. Denote the value of PII as V

0

j

after an action

had been taken by both of the players, then the utility

function of player i with action A

i

j

in the single round

game is given as

(

r

U

(A

U

j

,A

−U

) = P

D

(V

1

,· ·· ,V

N

) − P

D

(V

0

1

,· ·· ,V

0

N

)

r

M

(A

M

j

,A

−M

) = P

D

(V

0

1

,· ·· ,V

0

N

) − P

D

(V

1

,· ·· ,V

N

)

where A

−i

is the action taken by another player in the

game.

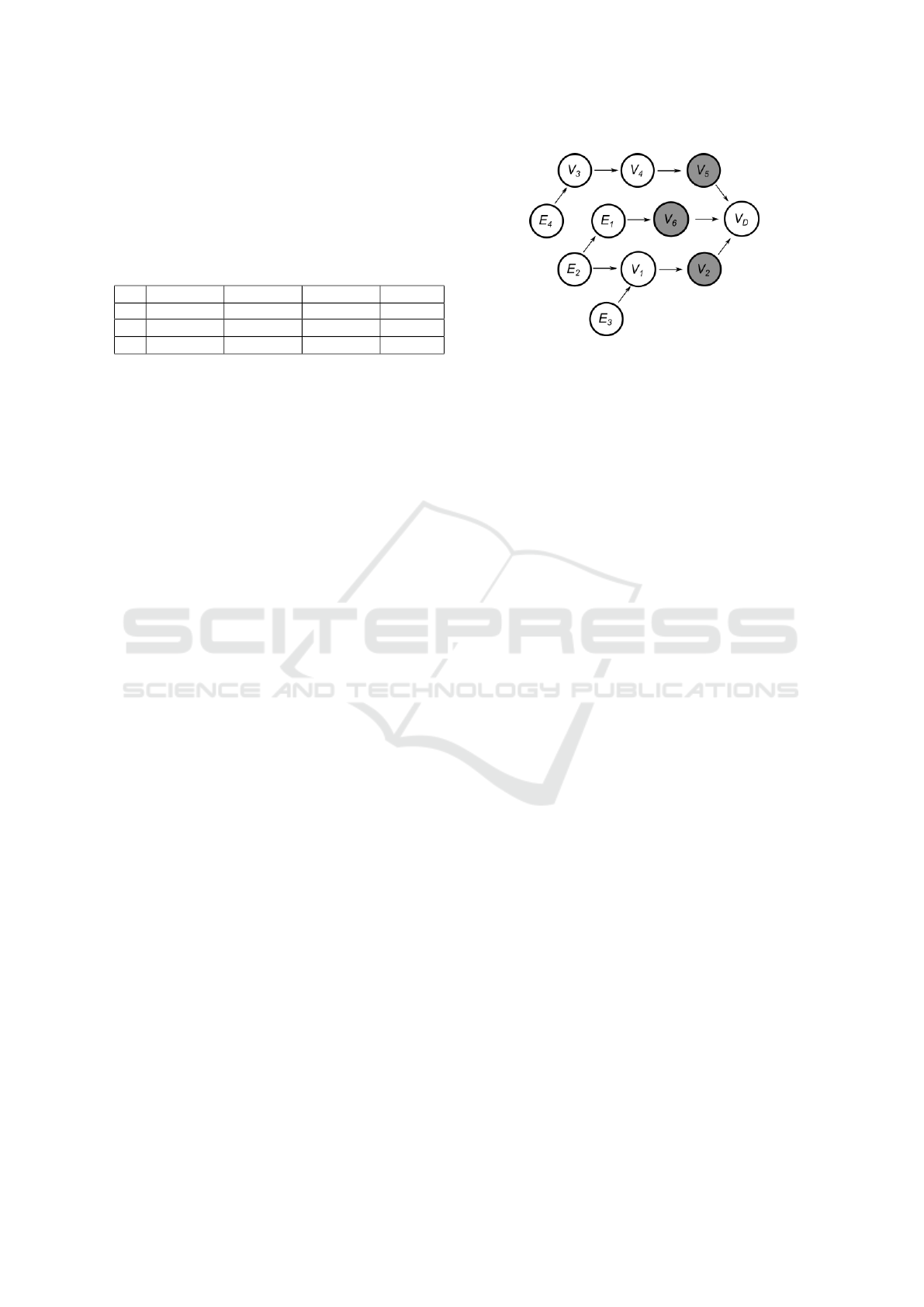

Next we provide a basic example of the single

round game calculation.

Figure 3: Basic Example for a single round game with com-

plete information. The color indicates whether the specific

PII is exposed (grey) or not. The increase or decrease in

exposure probability is calculated with the action players

choose and the Bayesian inference from the graph.

Consider a smaller instance of the ecosystem in

Figure 3. In the example, white vertices are the PII

attributes that are unexposed and the grey ones are

exposed. Now we can give the game with the fol-

lowing strategic form in Table 1. In this example, the

An Evaluation Framework for Future Privacy Protection Systems: A Dynamic Identity Ecosystem Approach

139

probability of V

D

being exposed is 0.5 for the initial

setup S

V

= [0,0, 1,1,0]. For instance, to calculate the

difference in probability for action pair (A

4

U

,A

1

M

), we

uses Bayesian inference to calculate the probability of

exposure for state S

0

V

= [1,0, 1,0,0] which is 0.4.

Table 1: The strategic form of the basic example in Fig 3.

None A

M

1

A

M

2

A

M

5

None(0,0) (−0.41, 0.41)(−0.42,0.42) (0,0)

A

U

3

(0.15,−0.15)(−0.4, 0.4) (0.25,−0.25)(0.1, −0.1)

A

U

4

(0.25,−0.25)(0.1, −0.1) (−0.3,0.3) (0.2,−0.2)

The calculation of the example should give us one

unique mixed strategy Nash equilibrium with player

M playing A

M

1

with probability

8

/21 and A

M

2

with

13

/21 while A

M

5

strictly dominated by the two strategy.

Player U should be playing A

U

3

for

11

/21 and A

U

4

for

10

/21.

Note that in this case, since both of the parties are

only considering the probability difference of the ac-

tions, it is a zero sum game in any case under the

setup.

3.4 Stochastic Game

Given the discussion in the previous section, we now

present the proposed dynamic Identity Ecosystem as

follows. The whole dynamic Ecosystem can be con-

sidered as a stochastic game, which conducted with

many rounds of modified single round version in the

previous section. In this the dynamic Ecosystem, each

round consists of three phases: decision, exposure and

resolve. In the decision phase, we play the game as a

single round game where the owner and the attacker

each choose certain PII to protect and expose. How-

ever, the payoff function here is replaced with a con-

stant for both attacker and owner for every round they

played, which we shall explain after setting up the rest

of the game. In the exposure phase, each unexposed

PII attributes in the network V

j

is going to be exposed

with a probability given by a strictly positive function

P

j

: V

1

×V

2

× ·· ·V

k

·· · ×V

N

→ (0, 1],V

k

∈ V \V

j

rep-

resenting accidental exposure. We setup this phase

to capture the real world phenomenon that personal

PII often get exposed unintentionally by neither the

owner nor the attacker. In the last phase, all PII in

the Bayesian network are updated with the new sta-

tus simultaneously and if the target PII is exposed,

the game ends in that round. Otherwise the game

continues until the target PII (e.g., password, credit

card information) is exposed. Different from the sin-

gle round game, the utility of the owner is now to pro-

long the game while attacker is trying to end the game

as fast as possible. Note that since the objective for

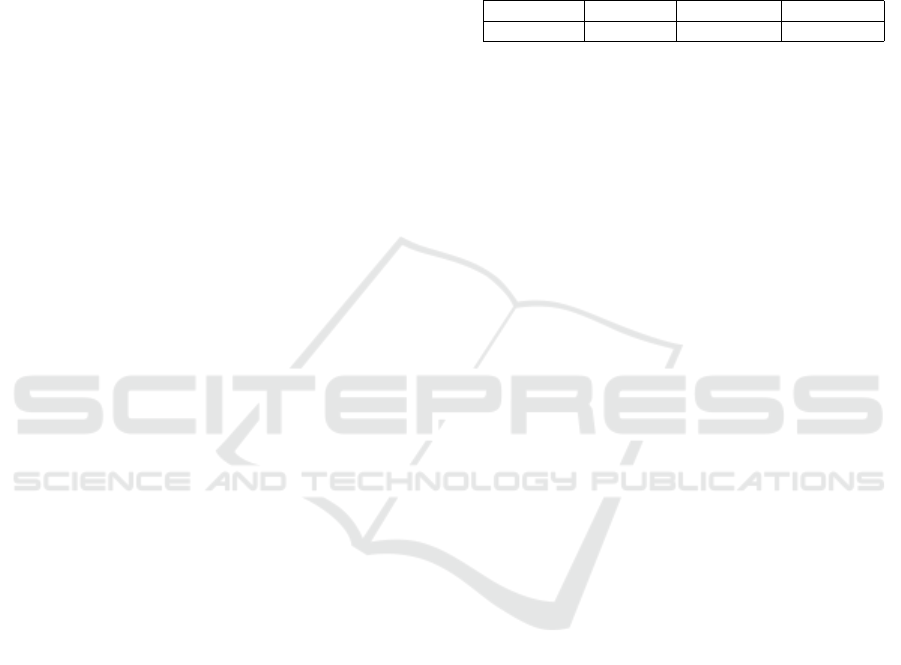

Figure 4: Partial Network of the identity Ecosystem. Note

that in this example, the attacker cannot directly expose PII

V

4

before PII V

3

is exposed. The figure also illustrates the

initial status of the PII in our example.

both player now is directly connected to the number

of rounds that the games is being played, it is conve-

nient for our discussion to setup the payoff function

as a constant that both player would receive at each

round.

4 ALGORITHM RESULT

Here we utilize the result from Patek and Bert-

sekas [Patek and Bertsekas, 1999]. From our setting

for the game and above lemmas, assumption SSP and

assumption R is satisfied thus suggests that there is a

unique fix point for the game and the convergence of

policy iteration to the fixed point.

Since the system is constructed on real world iden-

tity stories, it suggests that the game always ends with

the target PII being exposed which is a direct result

from the PII exposure probability function P

j

which

we stated as a strictly positive function if we look

at our setup of the game. The interpretation of this

corollary is consistent with the construction of the

Identity Ecosystem. The Identity Ecosystem is con-

structed from two main sources, one from manually

listing PII relationships and the other from the Iden-

tity Threat Assessment and Prediction (ITAP) project

at the Center for Identity at the University of Texas at

Austin. All of the PII being studied from these two

data sources are guaranteed to have some level of ex-

posure since the ITAP project generates the Identity

Ecosystem data from fraud cases that took place in the

real world. Thus we take the assumption that PII ex-

posure probability function P

j

is strictly positive. De-

fine the action chosen by attacker and owner in state

i as u

i

and v

i

respectively. Then we define the policy

as the set of actions that specify the player action in

every single stage and state the game is being played.

ICAART 2020 - 12th International Conference on Agents and Artificial Intelligence

140

Algorithm 1: Policy Iteration.

State SetX := V

1

×V

2

× ·· · ×V

N

State x,y ∈ X

Actions A

U

(x) = {V

i

,.. .,V

j

},A

U

(x) : X ⇒ A

U

(x)

Actions A

M

(x) = {V

l

,.. .,V

k

},A

M

(x) : X ⇒ A

M

(x)

Policy ν(x) : X ⇒

S

x∈X

A

U

(x)

Policy µ(x) : X ⇒

S

x∈X

A

M

(x)

Cost function g : X × A

U

(x) ⇒ R

+

Transition probability p

xy

(A

U

i

,A

M

l

) = P(y|x,A

U

i

,A

M

l

)

1: procedure POLICY ITERATION(X , A

U

, A

M

, g)

2: Initialize J, J

0

: X → R

+

0

arbitrarily

3: while J is not converged do

4: for x ∈ X do

5: J(x, ν(x),µ(x)) ←

∑

y∈X

p

xy

(ν(x),µ(x))[J(y) + g(y,ν(x), µ(x))]

6: end for

7: end whilereturn J

8: Improve Policy Set (ν(x),µ(x))

9: for x ∈ X do

10: ν(x)

0

,µ(x)

0

←

min

µ(x)

max

ν(x)

[E(g(x,ν(x),µ(x))) + J(x, ν(x),µ(x))]

11: end for

12: ν(x),µ(x) ← ν

0

(x),µ

0

(x)

13: if Policy Set ν(x), µ(x)stable then return

(ν(x),µ(x))

14: else return to line 3

15: end if

16: end procedure

4.1 Policy Iteration Algorithm

Consider a partial Identity Ecosystem which we run

our algorithm on as in Figure 4. The network struc-

ture is referenced from the original Identity Ecosys-

tem but modified and trimmed to better demonstrate

how a miniaturized system work. There are 11 nodes

including the target PII, 6 controllable PII and 4 evi-

dent PII. Note that in this case, we have information

about the evident PII which either they are exposed or

not in the initial condition. In our experience, when

we try to estimate the exposure risk on a person’s

Identity system, we can actually gather information

about the status of some portion of the PII. The ev-

ident PIIs {E

1

,E

2

,E

3

,E

4

} represent the PII that we

have the status information. We then assign an initial

action to play for the owner along with assigning an

initial value to all of the states. We choose the cost

function for both the attacker and the owner to be a

constant function 1 to simulate the situation in which

both the attacker and the owner shall try they best to

end or prolong the game without the consideration of

costs. Then the attacker calculates its best strategy

in the sense of minimizing the payoff of owner. Af-

ter that the ecosystem runs for one step to determine

the accidental exposure of PII for each of the states.

Combining the action choice of the attacker, the ac-

tion choice of the owner and the result from accidental

exposure, the Identity Ecosystem generates the transi-

tion distribution to all the other states connected under

A

U

and A

M

. The expected cost value is then calcu-

lated accordingly before the algorithm enters another

iteration.

Note that the algorithm is a greedy algorithm in

which the convergence to the unique fixed point is

guaranteed while the complexity is high. For each

step the algorithm runs, it evaluates the current poli-

cies with respect to all the states that the Identity

Ecosystem has. While properties of different PII pre-

vent the system from visiting all the possible states,

there are still O(2

N

) states in general for each evalua-

tion. Within the scope of this paper methods like gen-

eral Stationary Iterative Method (SIM) [Bertsekas and

Tsitsiklis, 1996] or Krylov Subspace Method [Senda

et al., 2014] can be applied to the problem to improve

the performance of the algorithm.

As our example in Fig. 4, eleven PII attributes

are presented including the target PII. We use a uni-

form cost setup for all the actions that are available

to both the attacker and owner, which means that the

attacker and owner have no concern about the cost of

achieving their goal while trying their best to expose

or defend the target PII.

Nodes V

1

·· ·V

6

are representing different PII that

the owner has. We use another practical setup here to

demonstrate the flexibility of the Identity Ecosystem

and the algorithm: for PII V

3

and PII V

4

, the attacker

cannot directly expose V

4

if V

3

was not exposed pre-

viously either accidentally or by the attacker.

Table 2: Strategy profile for Identity Ecosystem.

V

1

V

2

V

3

V

4

V

5

V

6

status unexposed exposed unexposed unexposed exposed exposed

Defend — 0 — — 1 0

Attack 0 — 1 0 — —

For example, at the initial state, the best strategy

for the owner is to unexpose V

5

with probability 1

while for the attacker the best strategy is to expose

V

3

with probability 1.

The game then enters the next state where the tran-

sition is the result of combining actions of the owner

and the attacker as well as accidental exposure. When

Table 3: Strategy profile for Identity Ecosystem for another

state

V

1

V

2

V

3

V

4

V

5

V

6

status unexposed exposed exposed exposed exposed unexposed

Defend — 0 0 1 0 —

Attack 0 — — — — 1

An Evaluation Framework for Future Privacy Protection Systems: A Dynamic Identity Ecosystem Approach

141

the algorithm converges to its optimal, for every state

such strategy profile is provided. The strategy profile

in this example is deterministic because of the setup

of the exposure probability function. The direct re-

sult from our setup is that there exist strong dominant

strategies in most of the states. If non-linearity was

included in the setup, a general probability distribu-

tion among the actions is expected.

4.2 The Evaluation Framework

What can these strategy profiles tell us? The strategy

profile from the algorithm result is the minimax so-

lution to the stochastic game problem. The solution

solves the Bellman equation which it does not solve

for the best strategy against some specific predefined

attack strategies. It is actually a conservative solution

that tries to maximize the individual payoff among all

of the possible strategies that the opponent might play.

We utilize this property to generate a minimax strat-

egy profile for both the PII owner and the malicious

attacker. While the game is being played, the payoff

that both player receive is the same in absolute value,

which directly create the incentive for the thief to end

the game as soon as possible. This generate a mini-

max strategy profile that incline with that goal.

On the other hand, given that we have the strat-

egy profile from the algorithm, we can interpret ex-

isting privacy protection policies to the system as

the owner’s strategy to complete against the minimax

strategy attacker strategy we have. Based on the num-

ber of rounds that the target PII or owner had survive,

it is possible to benchmark different identity protec-

tion strategies in a numeric way.

Take the partial dynamic Identity Ecosystem in

Fig. 4 as an running example, nowadays credit card

companies like Discover monitor several crucial PII

like Social Security Number in the internet to deter-

mine whether the PII is exposed or not instead of ac-

tively providing instructions to protect person iden-

tity. This type of identity management framework

was mentioned in [Mashima and Ahamad, 2008]. We

can translate this type of strategies as ”passive” strate-

gies into the dynamic Identity Ecosystem. This type

of strategies hand-pick PII from past experience and

monitor the status of them. For instance, if we create

a strategy with similar idea and run the partial system

with owner strategy as:

1. Create a watchlist consist of V

5

and V

6

.

2. Monitor any of the PII within the watchlist, if any

of them get exposed, try to unexposed them as

soon as possible.

3. If multiple PII within the list is exposed, randomly

choose one of them to unexpose at that round.

Then run the system against the minimax strategy. As

the experiment result, we compare the ”passive moni-

tor” strategy against randomly selection and minimax

strategy. The result is shown in table 4. We run the

system 50 times for the sample mean of how many

rounds specific strategy survive.

Table 4: Rounds of Survival(Mean) for different Owner

Strategies.

Passive Monitor Random Selection Minimax Strategy

number of rounds 5.32 8.72 9.34

We can see from the result that the passive monitor

strategy does not perform well in the evaluation. One

of the reason is that it only monitor 2 PII attributes

out of the 10 within our system. In the ITAP data

we have, the Ecosystem utilize 627 PII attributes to

define a person’s Identity status. In our survey pro-

cess, most of the protection system does not monitor

PII in this scale, as the passive monitor strategy in our

experiment. The random selection performs close to

the minimax strategy since the system we have is a

miniaturized Identity Ecosystem and the probability

of exposure assigned to the example does not fluctu-

ate much among the actions that the attacker can take

in many of the rounds.

5 CONCLUSION

Revisiting the idea of studying personal identity in

the Identity Ecosystem, we introduced our dynamic

mechanism for capturing the evolutionary process of

identity theft. We also applied game theory to un-

derstand the strategies to effectively protect/attack the

system. In addition, we presented a complete algo-

rithm by treating the interaction between the owner of

identity and the attacker as a stochastic shortest path

game and applied it to a partial Identity Ecosystem

to demonstrate how strategy profiles work. Finally,

we interpreted one of the common identity protection

mechanisms from the real world and utilized our gen-

erated strategy profile against it. We also provided

a new methodology for comparison among different

identity protection strategies.

In future work, we wish to evaluate our framework

on a full-size Identity Ecosystem. Furthermore, it is

particularly an interesting topic to evaluate and com-

pare more existing identity protection strategies with

the system we present. Meanwhile, the time complex-

ity of the proposed algorithm can be optimized for

certain network topology once more mature results

about the dynamic Identity Ecosystem are studied. Fi-

nally, we hope that results from this paper can provide

ICAART 2020 - 12th International Conference on Agents and Artificial Intelligence

142

some insight about applicable strategies of protecting

the integrity of one’s personal identity.

REFERENCES

Berghel, H. (2012). Identity theft and financial fraud: Some

strangeness in the proportions. Computer, 45(1):86–

89.

Bertsekas, D. P. and Tsitsiklis, J. N. (1996). Neuro-Dynamic

Programming. Athena Scientific, 1st edition.

Carin, L., Cybenko, G., and Hughes, J. (2008). Cybersecu-

rity strategies: The queries methodology. Computer,

41(8):20–26.

Chang, K. C., Zaeem, R. N., and Barber, K. S. (2018). En-

hancing and evaluating identity privacy and authenti-

cation strength by utilizing the identity ecosystem. In

Proceedings of the 2018 Workshop on Privacy in the

Electronic Society, pages 114–120. ACM.

Delaitre, S. (2006). Risk management approach on identity

theft in biometric systems context. In First Interna-

tional Conference on Availability, Reliability and Se-

curity (ARES’06), pages 4 pp.–982.

Goode, S. and Lacey, D. (2017). Designing and evaluating

two interventions to improve identity theft recovery

outcomes. In 2017 IEEE International Symposium on

Technology and Society (ISTAS), pages 1–6.

Harrell, E. (2017). Victims of identity theft. Bureau of

Justice Statistics.

Khattak, Z. A., Sulaiman, S., and Manan, J. A. (2010). A

study on threat model for federated identities in fed-

erated identity management system. In 2010 Inter-

national Symposium on Information Technology, vol-

ume 2, pages 618–623.

Kumar, P. and Shiau, T. (1981). Zero-sum dynamic games.

In LEONDES, C., editor, Advances in Theory and Ap-

plications, volume 17 of Control and Dynamic Sys-

tems, pages 345 – 378. Academic Press.

Lacey, D., Zaiss, J., and Barber, K. S. (2016). Understand-

ing victim-enabled identity theft. In 2016 14th An-

nual Conference on Privacy, Security and Trust (PST),

pages 196–202.

Liau, D., Zaeem, R. N., and Barber, K. S. (2019). Evalua-

tion framework for future privacy protection systems.

In International Conference on Privacy, Security and

Trust (PST), pages 339–341.

Manshaei, M. H., Zhu, Q., Alpcan, T., Bacs¸ar, T., and

Hubaux, J.-P. (2013). Game theory meets network se-

curity and privacy. ACM Comput. Surv., 45(3):25:1–

25:39.

Mashima, D. and Ahamad, M. (2008). Towards a user-

centric identity-usage monitoring system. In 2008 The

Third International Conference on Internet Monitor-

ing and Protection, pages 47–52.

Panigrahi, S., Kundu, A., Sural, S., and Majumdar, A.

(2009). Credit card fraud detection: A fusion ap-

proach using dempstershafer theory and bayesian

learning. Information Fusion, 10(4):354 – 363. Spe-

cial Issue on Information Fusion in Computer Secu-

rity.

Patek, S. and Bertsekas, D. (1999). Stochastic shortest path

games. SIAM Journal on Control and Optimization,

37(3):804–824.

Roy, S., Ellis, C., Shiva, S., Dasgupta, D., Shandilya, V.,

and Wu, Q. (2010). A survey of game theory as ap-

plied to network security. In 2010 43rd Hawaii Inter-

national Conference on System Sciences, pages 1–10.

Senda, K., Hattori, S., Hishinuma, T., and Kohda, T. (2014).

Acceleration of reinforcement learning by policy eval-

uation using nonstationary iterative method. IEEE

Transactions on Cybernetics, 44(12):2696–2705.

Shah, M. and Okeke, R. I. (2011). A framework for inter-

nal identity theft prevention in retail industry. In 2011

European Intelligence and Security Informatics Con-

ference, pages 366–371.

Shapley, L. S. (1953). Stochastic games. Proceedings of the

National Academy of Sciences, 39(10):1095–1100.

Yuan Cao and Lin Yang (2010). A survey of identity man-

agement technology. In 2010 IEEE International Con-

ference on Information Theory and Information Secu-

rity, pages 287–293.

Zaeem, R. N., Budalakoti, S., Barber, K. S., Rasheed, M.,

and Bajaj, C. (2016). Predicting and explaining iden-

tity risk, exposure and cost using the ecosystem of

identity attributes. In 2016 IEEE International Car-

nahan Conference on Security Technology (ICCST),

pages 1–8.

An Evaluation Framework for Future Privacy Protection Systems: A Dynamic Identity Ecosystem Approach

143