Robust Method for Detecting Defect in Images Printed on 3D Micro-textured Surfaces: Modified Multiple Paired Pixel Consistency

Sheng Xiang¹, Shun’ichi Kaneko¹ and Dong Liang²

¹ Graduate School of Information Science and Technology, Hokkaido University, Sapporo, Japan

² College of Computer Science and Technology, Nanjing University of Aeronautics and Astronautics, Nanjing, P. R. China

Keywords:

Defect Inspection, Multiple Paired Pixel Consistency, Orientation Code, Qualification of Fitting, Data Filtering, 3D Micro-textured Surface.

Abstract:

When three-dimensional micro-textured surfaces are inspected under fluctuating illumination, many conventional visual inspection methods suffer from problems such as shadowing. Thus, we propose a modified method, based on the consistency of multiple pixel pairs in orientation codes, to inspect defects in logotypes printed on three-dimensional micro-textured surfaces. The algorithm comprises a training stage and a detection stage. The training stage locates and pairs supporting pixels that show change trends similar to those of a target pixel, and creates a statistical model for each pixel pair. Here, we introduce a modification that uses the chi-square test and skewness to increase the precision of the statistical model. The detection stage tests whether the target pixel matches its model and judges whether or not it is defective. The results show the effectiveness of the proposed method for detecting defects in real product images.

1 INTRODUCTION

Defect inspection has always played a key role in manufacturing quality control (QC). Therefore, many studies have focused on automatic quality inspection based on computer vision. In this paper, our interest is in assessing how currently available visual inspection systems perform various QC checks on printed products. We mainly consider the inspection of printed characters, text, and logotypes for defects such as holes, dents, and foreign objects (Mehle et al., 2016). Currently, QC in the printing industry is often carried out manually, which is a labor-intensive and time-consuming process. Additionally, results can vary according to the inspectors’ mood, experience, and individual skill level, so manual QC checks can be unreliable. Furthermore, no operator-independent quality standard has been established. To overcome these problems, manual inspection is beginning to be replaced by automatic visual inspection systems (Ngan et al., 2011).

Texture is one of the most important features for detecting defects, and the issues that arise in defect detection are generally regarded as problems of texture analysis. As reviewed by Xie (Xie, 2008), texture analysis techniques can be categorized as statistical techniques (Karimi and Asemani, 2013), structural techniques (Kasi et al., 2014), filter-based techniques (Jing et al., 2015), and model-based techniques (Li et al., 2015).

In this paper, we analyze the surface of a printed product embossed with randomly distributed three-dimensional (3D) micro-textures. Such surfaces are produced by embossing, the process of making tiny raised and recessed patterns on the surface of metal, plastic, or other materials. Embossed surfaces have an attractive appearance, a pleasant feel to the touch, and excellent slip resistance, so they are widely used in many products worldwide. These 3D micro-structures, which are uniformly embossed on the surface, cast slight shadows under illumination and appear as a random texture in images. Fluctuations in illumination therefore strongly affect how the surface is imaged. To overcome this problem, we use our previously proposed orientation code matching (Ullah and Kaneko, 2004), a robust matching or registration method based on orientation codes. The printed characters we analyze lie on 3D micro-textured surfaces, which cause statistical fluctuations and thus make matching and defect registration difficult. From past studies, we have found that points at the same locations in printed characters can show statistical similarity, provided that the difference between them is specifically defined. The method we propose in the present paper is a modified version of the Multiple Paired Pixel Consistency (MPPC) model in orientation codes, whose effectiveness has already been demonstrated through many experiments (Xiang et al., 2018). Based on the MPPC model of defect-free images, we propose an algorithm for defect inspection.

Xiang, S., Kaneko, S. and Liang, D. Robust Method for Detecting Defect in Images Printed on 3D Micro-textured Surfaces: Modified Multiple Paired Pixel Consistency. DOI: 10.5220/0008910002600267. In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2020) - Volume 5: VISAPP, pages 260-267. ISBN: 978-989-758-402-2; ISSN: 2184-4321. Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

This paper is organized as follows. Section 2 introduces in detail how the MPPC model works. Section 3 introduces the modification of the MPPC. Section 4 reports the experimental results and compares the performance of the modified MPPC with that of other advanced approaches. Section 5 concludes the paper and discusses future work.

2 MPPC DEFECT-FREE MODEL

In this section, we first introduce the original version of orientation codes and propose a signed difference between any two codes, in preparation for building a more precise statistical model of their difference. We then describe how to build the MPPC model for a defect-free logotype.

2.1 Orientation Codes

Orientation codes were proposed as a filtering technique for extracting robust features from any general type of image, based only on the orientation information contained in gradient vectors. They can be used to design robust matching schemes, as in the original reference (Ullah and Kaneko, 2004).

Let I(i, j) be the brightness of pixel (i, j). Its partial derivatives in the horizontal and vertical directions are written as $\nabla I_x = \partial I/\partial x$ and $\nabla I_y = \partial I/\partial y$, respectively. The orientation angle θ can be computed as $\theta = \tan^{-1}(\nabla I_y / \nabla I_x)$, where the actual orientation is determined by checking the signs of the derivatives, so that θ lies in the range [0, 2π). The orientation code (OC) is obtained by quantizing the orientation angle θ into N levels of constant width Δθ (= 2π/N), as follows:

$$C_{i,j} = \begin{cases} \left\lfloor \dfrac{\theta_{i,j}}{\Delta\theta} \right\rfloor & |\nabla I_x| + |\nabla I_y| \ge \Gamma \\ N & \text{otherwise} \end{cases} \qquad (1)$$

where Γ is a threshold for ignoring pixels with low-contrast neighborhoods. That is, pixels whose neighborhoods have sufficient contrast are assigned an OC from the set {0, 1, ..., N − 1}, while ignored pixels are assigned the code N. In this paper, Γ = 10 and N = 16. An example of a set of OCs is shown in Fig. 1.

Figure 1: The sixteen orientation codes (N = 16).
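To make Eq. (1) concrete, here is a minimal Python/NumPy sketch of orientation coding. It is not the authors' implementation: it assumes central-difference derivatives from `np.gradient` (a Sobel operator would be an equally plausible choice), resolves the quadrant of θ with `np.arctan2`, and uses this paper's parameter values (N = 16, Γ = 10). The function name `orientation_codes` is hypothetical.

```python
import numpy as np


def orientation_codes(image, n_codes=16, gamma=10.0):
    """Orientation codes of Eq. (1) for a 2-D grayscale image.

    Valid pixels get a code in {0, ..., n_codes - 1}; pixels whose
    gradient contrast |dI/dx| + |dI/dy| is below gamma get n_codes.
    """
    img = np.asarray(image, dtype=float)
    # np.gradient returns the derivative along axis 0 (rows, y) first,
    # then along axis 1 (columns, x); central differences are an assumption.
    d_y, d_x = np.gradient(img)
    # arctan2 checks the signs of both derivatives; wrap the angle into [0, 2*pi).
    theta = np.mod(np.arctan2(d_y, d_x), 2.0 * np.pi)
    delta_theta = 2.0 * np.pi / n_codes
    codes = np.floor(theta / delta_theta).astype(int)
    # Floating-point rounding can map an angle just below 2*pi onto n_codes.
    codes = np.minimum(codes, n_codes - 1)
    # Low-contrast neighborhoods receive the invalid code N.
    codes[np.abs(d_x) + np.abs(d_y) < gamma] = n_codes
    return codes
```

A horizontal brightness ramp yields code 0 everywhere, a vertical ramp (brightness increasing with the row index) yields code 4, and a flat patch yields the invalid code 16.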

2.2 Signed Difference in OC

Here we propose a somewhat new definition of the difference in OC space, which is better suited to detailed statistical modeling than the original one. In contrast with the previous definition (Ullah and Kaneko, 2004), the definition used here yields negative as well as positive differences. We expect it to give a more complete and precise distribution of OC differences, which facilitates statistical handling. The expression is

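$$\Delta(a, b) = \begin{cases} (a - b) - N & b \le \frac{N}{2} - 1 \ \text{and}\ (a - b) \ge \frac{N}{2} \\ (a - b) + N & b > \frac{N}{2} - 1 \ \text{and}\ (a - b) < -\frac{N}{2} \\ a - b & \text{otherwise} \end{cases} \qquad (2)$$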

where a and b are the orientation codes to be compared (subtracted), taken, for instance, from a target image and a reference image, respectively, and N is the number of orientation levels, which is also the code assigned to invalid pixels.
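The signed difference of Eq. (2) translates directly into a small function. The sketch below (hypothetical name `signed_oc_difference`) assumes an even N and valid codes only; how pairs involving the invalid code N should be handled is not specified here, so they are left out.

```python
def signed_oc_difference(a: int, b: int, n: int = 16) -> int:
    """Signed orientation-code difference Delta(a, b) of Eq. (2).

    a, b: valid codes in {0, ..., n - 1}; n: number of orientation levels (even).
    """
    d = a - b
    half = n // 2
    if b <= half - 1 and d >= half:
        # a is far "ahead" of b: wrap around to a negative difference
        return d - n
    if b > half - 1 and d < -half:
        # a is far "behind" b: wrap around to a positive difference
        return d + n
    return d


# Enumerate every pair of valid codes for N = 16.
values = {signed_oc_difference(a, b) for a in range(16) for b in range(16)}
print(min(values), max(values))  # prints: -8 7
```

Enumerating all pairs confirms that, for N = 16, the signed difference takes every integer value from −8 to 7, which matches the horizontal axis of the OC-difference histograms used below.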

2.3 MPPC Modeling

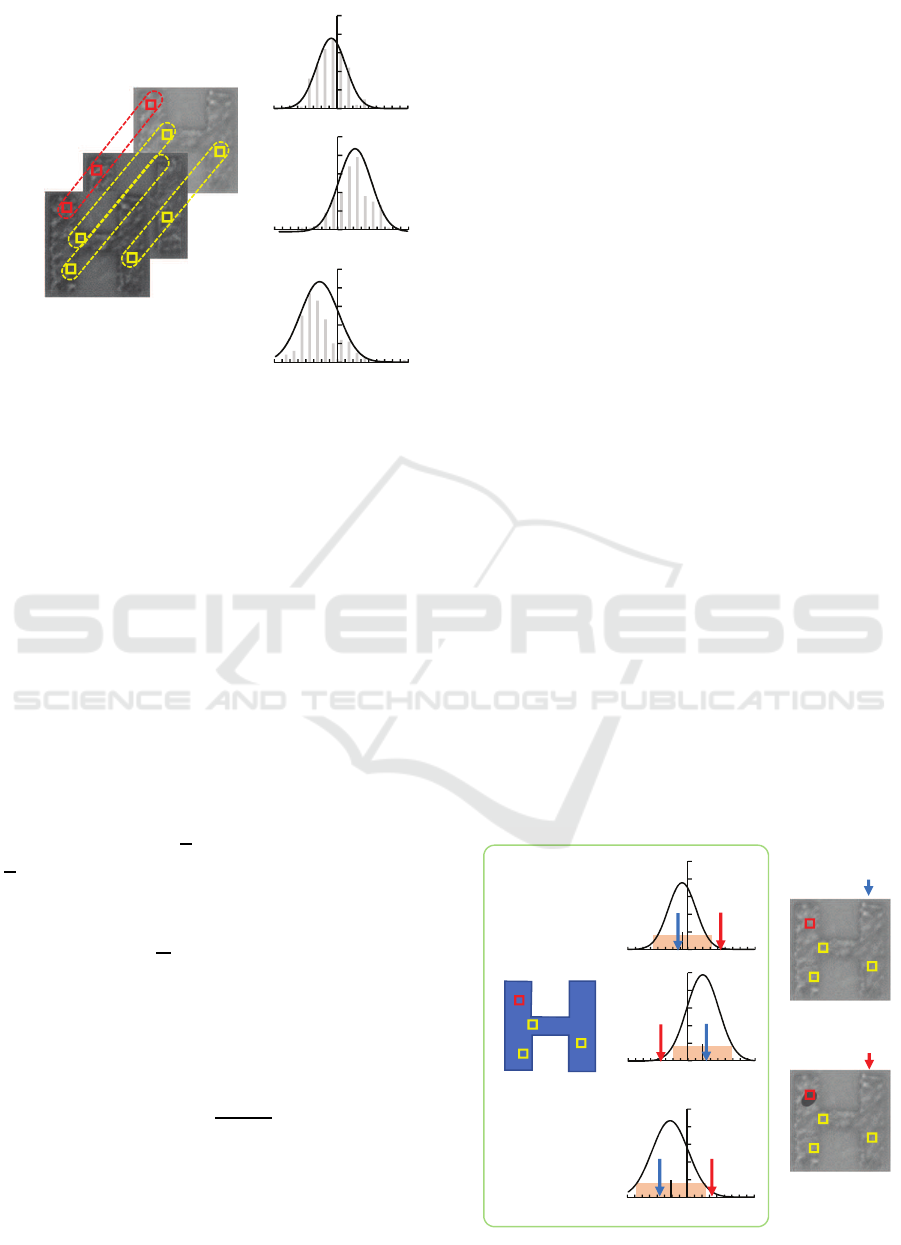

Fig. 2 shows the schematic structure of the proposed Multiple Paired Pixel Consistency (MPPC) model, which represents a statistical characteristic of the orientation code difference between the two pixels of each pair. The main idea of this statistical modeling of images comes from ‘CP3’, previously proposed by Liang et al. (Liang et al., 2015) for robust background subtraction. We extend this scheme by introducing cohesive relationships of orientation codes in logo-logo pairs, defined between each target pixel P on a logotype and a set of supporting pixels on the same logotype that are selected for their high consistency, or correlation, with the target pixel. In other words, all supporting pixels show trends of change similar to those of the target pixel, so for each of these highly consistent pairs we can build a statistical model by fitting a single Gaussian distribution to the histogram of their orientation code differences.


[Figure 2 panels: the target pixel P and its supporting pixels Q1, Q2, ..., QN over the training images k = 1, 2, ..., K; for each pair (Pair #1, Pair #2, ..., Pair #N), a histogram of frequency and density against OC difference, from −8 to 8.]

Figure 2: Scheme of MPPC modeling.

We now consider how to select the supporting pixels for a target pixel from all the candidate pixels. For an arbitrary logo-logo pixel pair (P, Q), we have two OC sequences taken at the same positions in all K training images:

$$\mathbf{P} = \{p_1, p_2, \cdots, p_K\} \qquad (3)$$

and

$$\mathbf{Q} = \{q_1, q_2, \cdots, q_K\} \qquad (4)$$

where K is the total number of training images, as shown in Fig. 2.

Throughout this paper, boldface capital letters such as $\mathbf{Q}$ denote sets, plain capital letters denote pixels, and lowercase letters denote the orientation codes of pixels.

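The mean values and variances over $\mathbf{P}$ and $\mathbf{Q}$ are defined as

$$\bar{p} = \frac{1}{K}\sum_{k=1}^{K} p_k, \quad \bar{q} = \frac{1}{K}\sum_{k=1}^{K} q_k, \quad \sigma_P^2 = \frac{1}{K}\sum_{k=1}^{K} (p_k - \bar{p})^2, \quad \sigma_Q^2 = \frac{1}{K}\sum_{k=1}^{K} (q_k - \bar{q})^2,$$

respectively.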

The covariance between $\mathbf{P}$ and $\mathbf{Q}$ is defined as

$$C_{P,Q} = \frac{1}{K}\sum_{k=1}^{K} (p_k - \bar{p})(q_k - \bar{q}) \qquad (5)$$

If $C_{P,Q} > 0$, the two code sequences tend to change together, that is, they show consistency or co-occurrence. To measure this consistency quantitatively, we use the Pearson product-moment correlation coefficient:

$$\gamma_{P,Q} = \frac{C_{P,Q}}{\sigma_P \cdot \sigma_Q} \qquad (6)$$

where $\sigma_P$ and $\sigma_Q$ are the standard deviations of $\mathbf{P}$ and $\mathbf{Q}$, respectively.
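Eqs. (5) and (6) amount to the Pearson correlation with 1/K (population) normalization. A minimal NumPy sketch follows; as in the equations, the codes are treated as ordinary numbers rather than circular quantities, and returning 0 for a constant sequence is our own assumption to avoid division by zero. The function name `pair_correlation` is hypothetical.

```python
import numpy as np


def pair_correlation(p_codes, q_codes):
    """Pearson correlation gamma_{P,Q} of two OC sequences over K images (Eqs. 5-6)."""
    p = np.asarray(p_codes, dtype=float)
    q = np.asarray(q_codes, dtype=float)
    p_dev = p - p.mean()                        # p_k - p_bar
    q_dev = q - q.mean()                        # q_k - q_bar
    cov = np.mean(p_dev * q_dev)                # Eq. (5), 1/K normalization
    sigma_p = np.sqrt(np.mean(p_dev ** 2))      # population standard deviations
    sigma_q = np.sqrt(np.mean(q_dev ** 2))
    if sigma_p == 0.0 or sigma_q == 0.0:
        return 0.0  # assumption: a constant sequence shows no measurable consistency
    return float(cov / (sigma_p * sigma_q))     # Eq. (6)
```

Because the 1/K factors cancel in Eq. (6), the result coincides with `np.corrcoef` whenever both sequences vary.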

For each target pixel P(u, v) at position (u, v), there are M − 1 candidate pixels in the same logotype, where M is the total size of the logotype in pixels. From these candidates, we select N (< M) supporting pixels in descending order of $\gamma_{P,Q}$. The set of N supporting pixels is

$$\mathbf{Q} = \left\{ Q_i(u_i, v_i) \,\middle|\, \gamma_{P,Q_i} \ge \gamma_{P,Q_{i+1}} \right\}_{i=1,2,\cdots,N} \qquad (7)$$
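Selecting the supporting pixels of Eq. (7) is a sort by correlation. In this hypothetical sketch (`select_supporting_pixels` is not a name from the paper), the training codes of one logotype are assumed to be stored as a K × M array whose columns are the logotype pixels in raster order; the target pixel itself is excluded, and `np.unravel_index` can map the returned column indices back to positions (u_i, v_i).

```python
import numpy as np


def select_supporting_pixels(codes, target, n_support):
    """Indices of the n_support candidates most correlated with the target (Eq. 7).

    codes: array of shape (K, M); column m holds the OCs of logotype pixel m
           in the K training images (raster order is an assumption).
    target: column index of the target pixel P.
    Returns (indices, gammas), sorted by descending correlation.
    """
    codes = np.asarray(codes, dtype=float)
    dev = codes - codes.mean(axis=0)                 # per-pixel deviations from the mean
    std = np.sqrt(np.mean(dev ** 2, axis=0))         # per-pixel population std
    cov = np.mean(dev * dev[:, [target]], axis=0)    # Eq. (5) for every candidate Q
    with np.errstate(divide="ignore", invalid="ignore"):
        gamma = cov / (std * std[target])            # Eq. (6)
    # Constant sequences give 0/0; treat them as uncorrelated.
    gamma = np.nan_to_num(gamma, nan=0.0, posinf=0.0, neginf=0.0)
    gamma[target] = -np.inf                          # P cannot support itself
    order = np.argsort(-gamma, kind="stable")[:n_support]
    return order, gamma[order]
```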

We assume that the OC difference between the target pixel P and each supporting pixel $Q_i$ follows a Gaussian distribution:

$$\Delta(p, q_i) \sim \mathcal{N}(\mu_i, \sigma_i^2) \qquad (8)$$

where $\mathcal{N}(\mu_i, \sigma_i^2)$ is the Gaussian distribution with mean $\mu_i$ and variance $\sigma_i^2$, both calculated from the corresponding code sequences $\mathbf{P}$ and $\mathbf{Q}_i$.

After one target pixel P has been modeled, the N sets of four parameters, namely the position $(u_i, v_i)$ and the two statistical parameters $\mu_i$ and $\sigma_i$, are recorded in one row of a look-up table (LUT). By repeating this modeling for every target pixel, the LUT is filled with the complete set of MPPC models for all the pixels of an elemental logotype.
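The fitting of Eq. (8) and the filling of one LUT row can be sketched as follows. For a single Gaussian, the maximum-likelihood estimates are simply the sample mean and the 1/K standard deviation of the K signed differences. The array layout (a K × M array of codes, pixels in raster order) and the function names `signed_diff` and `build_lut_row` are assumptions made for illustration.

```python
import numpy as np


def signed_diff(a, b, n=16):
    """Vectorized signed OC difference Delta(a, b) of Eq. (2); n assumed even."""
    a, b = np.asarray(a), np.asarray(b)
    d = a - b
    half = n // 2
    wrap_down = (b <= half - 1) & (d >= half)   # first case of Eq. (2)
    wrap_up = (b > half - 1) & (d < -half)      # second case of Eq. (2)
    return np.where(wrap_down, d - n, np.where(wrap_up, d + n, d))


def build_lut_row(codes, target, support_idx, shape, n=16):
    """One LUT row: (u_i, v_i, mu_i, sigma_i) for each supporting pixel Q_i (Eq. 8).

    codes: (K, M) integer array of training OCs, pixels in raster order;
    shape: (height, width) of the region the M pixels were flattened from.
    """
    codes = np.asarray(codes)
    p = codes[:, target]                          # OC of P in each training image
    row = []
    for idx in support_idx:
        diffs = signed_diff(p, codes[:, idx], n)  # Delta(p_k, q_k), k = 1..K
        u, v = np.unravel_index(idx, shape)       # 2-D position of Q_i
        # Maximum-likelihood Gaussian fit: sample mean and 1/K standard deviation.
        row.append((int(u), int(v), float(diffs.mean()), float(diffs.std())))
    return row
```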

2.4 Defect Detection by MPPC

We now discuss how to use the proposed MPPC model of the relationships between pixels in a defect-free logotype to detect many sorts of logotype defects. Since the MPPC model represents the statistical behavior of the relation between an individual target pixel and its supporting pixels, we use it to test statistically whether a target pixel is a plausible sample from the distributions registered in the LUT. The scheme of the defect detection algorithm is shown in Fig. 3.

[Figure 3 panels: for the logotype ‘H’, the MPPC model pairs the target pixel P with supporting pixels Q1, Q2, ..., QN; the OC-difference histogram of each pair (Pair #1, Pair #2, ..., Pair #N; OC difference from −8 to 8) is used to label the pair as defect free (DF) or defective (D).]

Figure 3: Scheme of defect detection by MPPC model.

VISAPP 2020 - 15th International Conference on Computer Vision Theory and Applications


Defects come in several types, such as dust or particles, scratches, misprints, hairs, and spits, whose alignment, texture, shape, and size are random. Because production lines already operate under high-performance quality control, the remaining defects may be very small, and we should also keep in mind their very low probability of occurrence. In addition, in this paper we must handle the randomness of 3D micro-textured surfaces. These fundamental characteristics lead us to approaches based on pixel-wise evaluation for detection. Afterwards, we may proceed to further steps that recognize the detected pixels as aggregated regions sharing common characteristics. From these considerations and the investigation in Section 2.3, two features of our MPPC model, spatial sparseness and high consistency in correlative relation, can be used to handle defects. The former makes it unlikely that a defect occupies P and some of its supporting pixels simultaneously, and the latter allows us to design an evaluation scheme for recognizing whether P is occupied by a defect.

The next task is to design a measure for judging whether pixels are defective or defect-free by using the MPPC model. We first test each OC difference between the target pixel P and a supporting pixel $Q_i$ in the test image, using the supporting pixel positions registered for P in the MPPC model (the LUT). We define the following measure:

$$\beta_i = \begin{cases} 1 & \left|\Delta(p, q_i) - \mu_i\right| \ge C \cdot \sigma_i \\ 0 & \text{otherwise} \end{cases} \qquad (9)$$

for identifying the normal ($\beta_i = 0$) or abnormal ($\beta_i = 1$) state at the corresponding position defined by the elemental MPPC model, where C is a constant that can be set from 1 to 3, corresponding to acceptance probabilities from 68% to 99.7% under the Gaussian assumption. Finally, we use the normalized sum

$$\xi = \frac{1}{N}\sum_{i=1}^{N} \beta_i \qquad (10)$$

to construct a decision rule for the occupation of P by a defect: P is judged defective if ξ ≥ T, where $T = \frac{1}{N}\left\lfloor \frac{N}{2} + 1 \right\rfloor$ is the threshold for the general majority rule and N is the total number of supporting pixels.
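Eqs. (9) and (10) and the majority-rule threshold can be combined into one test per target pixel. The sketch below is an illustration under assumptions: the LUT parameters of one row are passed in as arrays, the signed difference of Eq. (2) is re-implemented as `signed_diff`, and the optional argument `t` lets a fixed threshold such as T = 0.5 (the value used in Section 4.1) replace the majority rule. Both function names are hypothetical.

```python
import numpy as np


def signed_diff(a, b, n=16):
    """Vectorized signed OC difference Delta(a, b) of Eq. (2); n assumed even."""
    a, b = np.asarray(a), np.asarray(b)
    d = a - b
    half = n // 2
    wrap_down = (b <= half - 1) & (d >= half)
    wrap_up = (b > half - 1) & (d < -half)
    return np.where(wrap_down, d - n, np.where(wrap_up, d + n, d))


def classify_target_pixel(p_code, q_codes, mu, sigma, c=2.0, t=None, n=16):
    """Return (xi, is_defective) for target pixel P in a test image (Eqs. 9-10).

    p_code: OC of P in the test image; q_codes: OCs of its N supporting pixels
    in the test image; mu, sigma: the per-pair parameters stored in the LUT.
    """
    mu = np.asarray(mu, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    delta = signed_diff(p_code, q_codes, n)
    beta = (np.abs(delta - mu) >= c * sigma).astype(int)   # Eq. (9): 1 = abnormal pair
    n_support = beta.size
    xi = beta.sum() / n_support                             # Eq. (10)
    if t is None:
        t = np.floor(n_support / 2 + 1) / n_support         # majority-rule threshold T
    return float(xi), bool(xi >= t)
```

With four supporting pixels, one abnormal pair gives ξ = 0.25, below the majority threshold T = 0.75, whereas three abnormal pairs give ξ = 0.75 and P is flagged as defective.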

3 MODIFICATION OF THE MPPC

There are two representative approaches to modifying or improving the performance of the MPPC model for defect detection: the first modifies the structure, and the second modifies the parameters. In this paper, we take the first approach, excluding or filtering inappropriate data from the process of building the elemental Gaussian model for each pixel pair consisting of a target pixel and a supporting pixel. This requires two schemes: a judgment scheme that decides whether a fitted model is appropriate, and a localization scheme that locates inappropriate or outlying data.

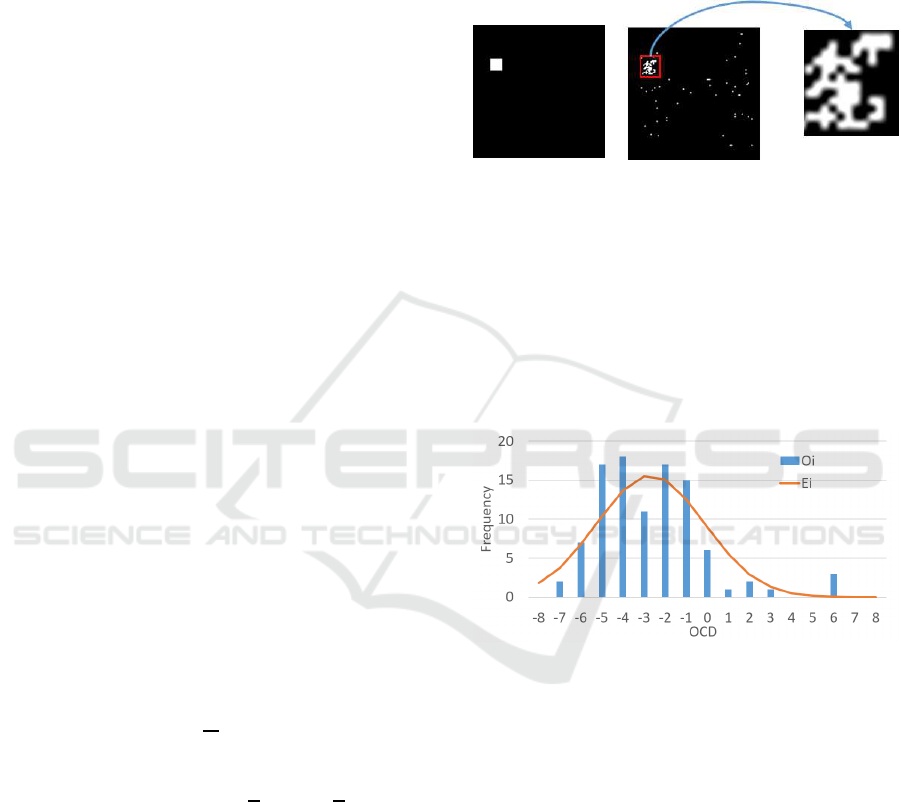

Figure 4: An example of a detection result. From left to right: ground truth image, detection result, and magnified view of the defect.

Fig. 4 shows an example of a simulated detection result. The detected region contains some holes within the square defective area. We suspected that these were caused by problems with the trained model, so we analyzed it: we randomly selected a pixel in a hole and examined the statistical relationship between this target pixel and its supporting pixels.

Figure 5: A pixel-pair histogram showing the frequency of orientation code differences between the target pixel and its supporting pixel.

Fig. 5 shows a histogram for one pixel pair, giving the frequency of orientation code (OC) differences between the target pixel and its supporting pixel, where $O_i$ denotes the measured data and $E_i$ the fitted data. From this analysis, we identified gaps between the fitted distribution and the actual distribution of the measured data. The gaps may have been caused by noise (possibly including defects) in the training data, although such noise is probably quite rare. Detecting defects in the materials examined in this article is not easy, even for professional inspectors trained to find small or obscure defects. Furthermore, judgments may vary between inspectors. Another problem is that the number of training samples is generally not large. If this training set contains inaccurate data in the form of noise, it may have a non-negligible effect on the resulting model structure. Therefore, we propose filtering out these outlying data as a modification of the MPPC model, with the aim of detecting defects more accurately.

The modification process comprises two steps. First, we test the appropriateness of each trained element of the MPPC model; in other words, we determine whether its fit is good. If the fit is poor, we proceed to the second step, which filters out inconsistent image data. From the filtered data, we then fit a single elementary Gaussian model to the pair of target and supporting pixels again.

3.1 Chi-square Qualification Test

The MPPC model requires many elementary Gaussian distributions, one for each pixel pair, each fitted to a set of sample OC differences. In this section, we describe how to judge the quality of each fit. For every elementary model, we have two histograms. One shows the OC differences of a pixel pair and is called the observation histogram $H_O$. The other is the expected histogram $H_E$ generated by the MPPC modeling procedure. Here, we introduce Pearson’s chi-square test (McHugh, 2013) to measure goodness of fit. The expression is as follows:

$$\chi^2 = \sum_{i=1}^{N} \frac{(O_i - E_i)^2}{E_i} \qquad (11)$$

where N is the number of bins in $H_O$, $O_i$ is the observed value in the i-th bin of $H_O$, and $E_i$ is the corresponding expected value in $H_E$.

If $\chi^2$ is smaller than the critical value of the chi-square distribution at the chosen significance level, the fit is considered good; otherwise, the fit is considered poor, in which case we proceed to the next step of filtering noisy data and attempt the fit again until $\chi^2$ falls below the critical value. The critical value can be found in a chi-square table.
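A sketch of the qualification test using SciPy. The paper does not specify how $H_E$ is discretized or which significance level and degrees of freedom are used, so the following are assumptions: integer bins from −8 to 7, expected counts obtained by integrating the fitted Gaussian over ±0.5 around each bin, a significance level α = 0.05, and (bins − 1 − 2) degrees of freedom for the two fitted parameters. `scipy.stats.chi2.ppf` returns the critical value that would otherwise be read from a chi-square table. The common rule of thumb that every expected count should be at least 5 is not enforced here, and the function name is hypothetical.

```python
import numpy as np
from scipy.stats import chi2, norm

BINS = np.arange(-8, 8)  # possible signed OC differences for N = 16


def chi_square_qualification(diffs, alpha=0.05, n_fitted_params=2):
    """Return (chi2_stat, critical_value, fit_is_good) for one pixel pair (Eq. 11)."""
    diffs = np.asarray(diffs, dtype=float)
    mu = diffs.mean()
    sigma = max(diffs.std(), 1e-6)   # guard: a constant sample has zero spread
    # Observation histogram H_O: counts of each integer difference.
    observed = np.array([np.sum(diffs == b) for b in BINS], dtype=float)
    # Expected histogram H_E: fitted Gaussian mass over [b - 0.5, b + 0.5].
    probs = (norm.cdf(BINS + 0.5, loc=mu, scale=sigma)
             - norm.cdf(BINS - 0.5, loc=mu, scale=sigma))
    expected = np.maximum(diffs.size * probs, 1e-12)  # avoid division by zero
    stat = float(np.sum((observed - expected) ** 2 / expected))
    dof = BINS.size - 1 - n_fitted_params
    critical = float(chi2.ppf(1.0 - alpha, dof))      # value a chi-square table would give
    return stat, critical, stat < critical
```

A roughly bell-shaped sample passes the test, while adding a single isolated sample at +7 (as in the rightmost bin of Fig. 5) makes the fit fail, because the expected count in that bin is tiny.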

3.2 Skewness-based Data Filtering

Fig. 5 shows an example of outlying noisy data in the rightmost bin. The next problem is how to locate these noisy data. Our MPPC model is based on a single Gaussian approximation, which is symmetric by nature, so it is difficult to distinguish such biased data in the histogram. To estimate their locations, we introduce a statistical feature, skewness (Mardia, 1970), which measures the asymmetry of the probability distribution of a real-valued random variable about its mean. Here, we use Pearson’s moment coefficient of skewness $\gamma_1$, defined as

$$\gamma_1 = E\left[\left(\frac{X - \mu}{\sigma}\right)^3\right] \qquad (12)$$

where μ is the mean, σ is the standard deviation, X is the observed value, and E is the expectation operator.

$\gamma_1 < 0$ indicates negative skew, which means that the left tail of the histogram is longer than the right tail; that is, the mass of the distribution is concentrated on the right of the histogram.
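Eq. (12) is the population third standardized moment. A NumPy sketch (hypothetical name `pearson_skewness`) follows; it should agree with `scipy.stats.skew` at its default `bias=True`. Treating a constant sample as unskewed is our own convention.

```python
import numpy as np


def pearson_skewness(samples):
    """Pearson's moment coefficient of skewness, gamma_1 = E[((X - mu) / sigma)^3]."""
    x = np.asarray(samples, dtype=float)
    mu, sigma = x.mean(), x.std()   # population moments, as in Eq. (12)
    if sigma == 0.0:
        return 0.0  # assumption: a constant sample is treated as unskewed
    return float(np.mean(((x - mu) / sigma) ** 3))
```

A histogram with an isolated sample far to the right (a longer right tail) gives a positive value, and its mirror image gives a negative one.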

For negative skew ($\gamma_1 < 0$), we may assume that the leftmost columns of histogram $H_O$ include noisy data. Thus, we remove the data in the leftmost column of $H_O$, recording their identification numbers, to create a new histogram $H'_O$. We then perform a single Gaussian fit based on $H'_O$ to obtain a new MPPC model, and create a new expected histogram $H'_E$ from it. Conversely, for positive skew ($\gamma_1 > 0$), the right tail is longer than the left tail; that is, the mass of the distribution is concentrated on the left of the histogram. In this case, we assume that the rightmost columns of $H_O$ may contain noisy data, and we remove the image data belonging to the rightmost column of $H_O$, resulting in a new histogram $H'_O$, which is refitted in the same way. Finally, we apply the chi-square qualification test to check the fitting quality of the new MPPC model. This loop is repeated until the fit passes the test.
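The filtering loop of this section can be sketched as below, under several assumptions that the text does not fix: the “leftmost” or “rightmost column” is taken to be the leftmost or rightmost occupied bin; the chi-square test uses integer bins from −8 to 7, α = 0.05, and (bins − 1 − 2) degrees of freedom; and a cap of `max_iter` rounds prevents endless removal. The returned `kept` array plays the role of the recorded identification numbers of the training images. All names are hypothetical.

```python
import numpy as np
from scipy.stats import chi2, norm

BINS = np.arange(-8, 8)  # possible signed OC differences for N = 16


def fit_is_good(diffs, alpha=0.05):
    """Chi-square qualification test of a single-Gaussian fit (Eq. 11)."""
    mu, sigma = diffs.mean(), max(diffs.std(), 1e-6)
    observed = np.array([np.sum(diffs == b) for b in BINS], dtype=float)
    probs = (norm.cdf(BINS + 0.5, loc=mu, scale=sigma)
             - norm.cdf(BINS - 0.5, loc=mu, scale=sigma))
    expected = np.maximum(diffs.size * probs, 1e-12)
    stat = np.sum((observed - expected) ** 2 / expected)
    return stat < chi2.ppf(1.0 - alpha, BINS.size - 3)


def filter_pair(diffs, alpha=0.05, max_iter=10):
    """Skewness-guided removal of extreme histogram columns, refitting each time.

    Returns (mu, sigma, kept): the refitted Gaussian parameters and the indices
    (image identification numbers) of the training samples that were kept.
    """
    diffs = np.asarray(diffs, dtype=float)
    kept = np.arange(diffs.size)
    for _ in range(max_iter):
        d = diffs[kept]
        if fit_is_good(d, alpha) or d.std() == 0.0:
            break
        skew = np.mean(((d - d.mean()) / d.std()) ** 3)   # Eq. (12)
        if skew < 0:
            drop = d == d.min()    # longer left tail: leftmost occupied column
        elif skew > 0:
            drop = d == d.max()    # longer right tail: rightmost occupied column
        else:
            break
        if drop.all():
            break
        kept = kept[~drop]
    d = diffs[kept]
    return float(d.mean()), float(d.std()), kept
```

For a bell-shaped sample of 56 differences plus one outlier at +7, the first test fails, the skewness is positive, the outlier is removed, and the refitted model passes.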

4 EXPERIMENTS

4.1 Experimental Setup

We used six sets of real images from a factory, showing the characters ‘H’, ‘U’, ‘A’, ‘W’, ‘E’, and ‘I’, each of which includes 160 defect-free images taken under three different illumination conditions: dark, normal, and bright. Of these 160 images, we chose 60 to train the MPPC model. In our experiments, we set the two thresholds of the detection stage to C = 2.0 and T = 0.5.

We used two defect types: synthesized or artificial

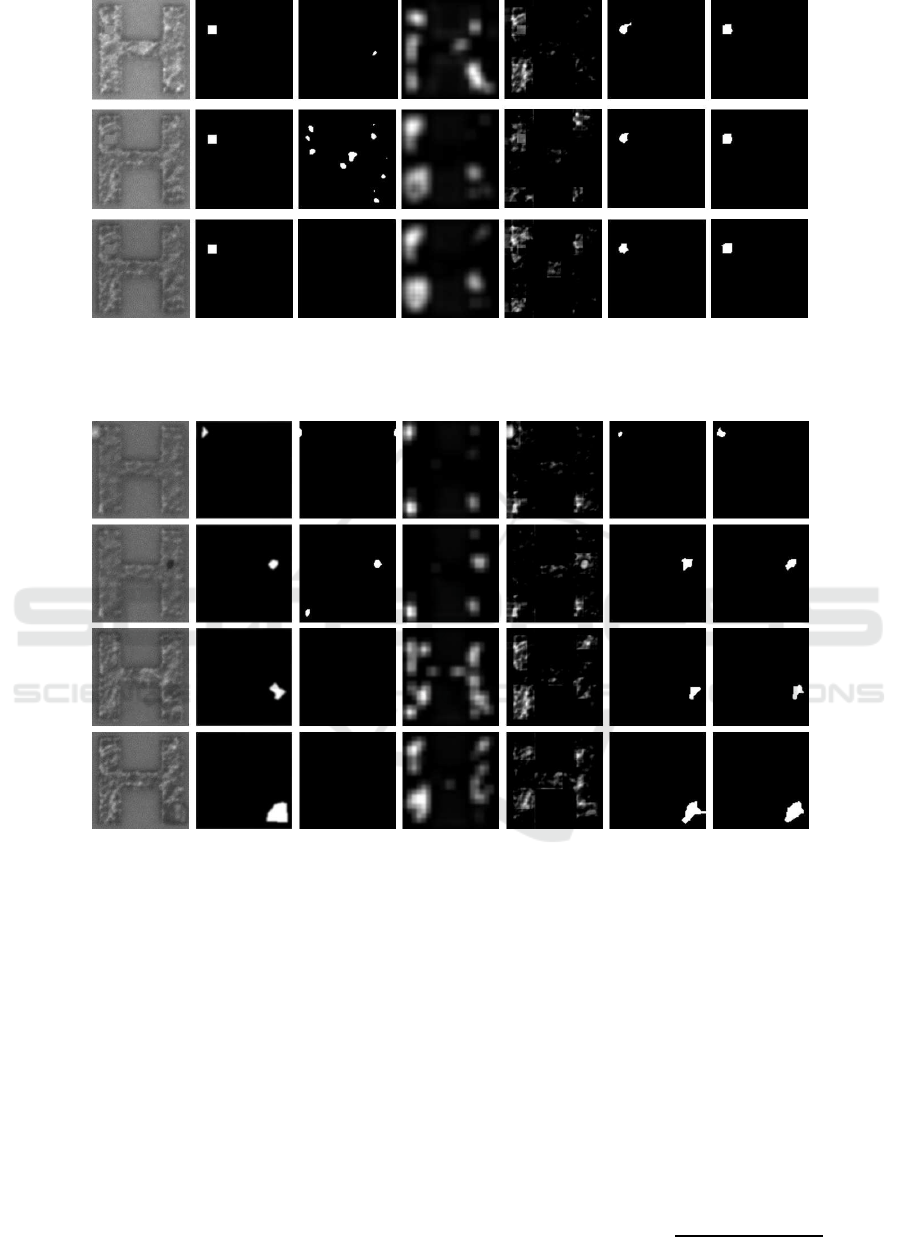

defects and real defects, as shown in Fig. 6 and Fig. 7,

respectively.

Because it was difficult to collect real defects from

factories, we instead used synthesized defects in our

experiments, as shown in Fig. 7. Here, we first ex-

tracted a small square area from the logotype back-

ground (unprinted portion) and pasted it onto the lo-

gotype (printed portion). These artificial defects rep-

resent misprinted logotypes. Fig. 6 demonstrates that

VISAPP 2020 - 15th International Conference on Computer Vision Theory and Applications

264

Figure 6: Examples of defect detection results for synthesized defects. From the leftmost to rightmost column: test images,

corresponding ground truth images, detected results by phase only transform, prior knowledge guided least squares regression,

modified robust principal component analysis with noise term and defect prior, multiple paired pixel consistency (MPPC),

and MPPC with modification, respectively.

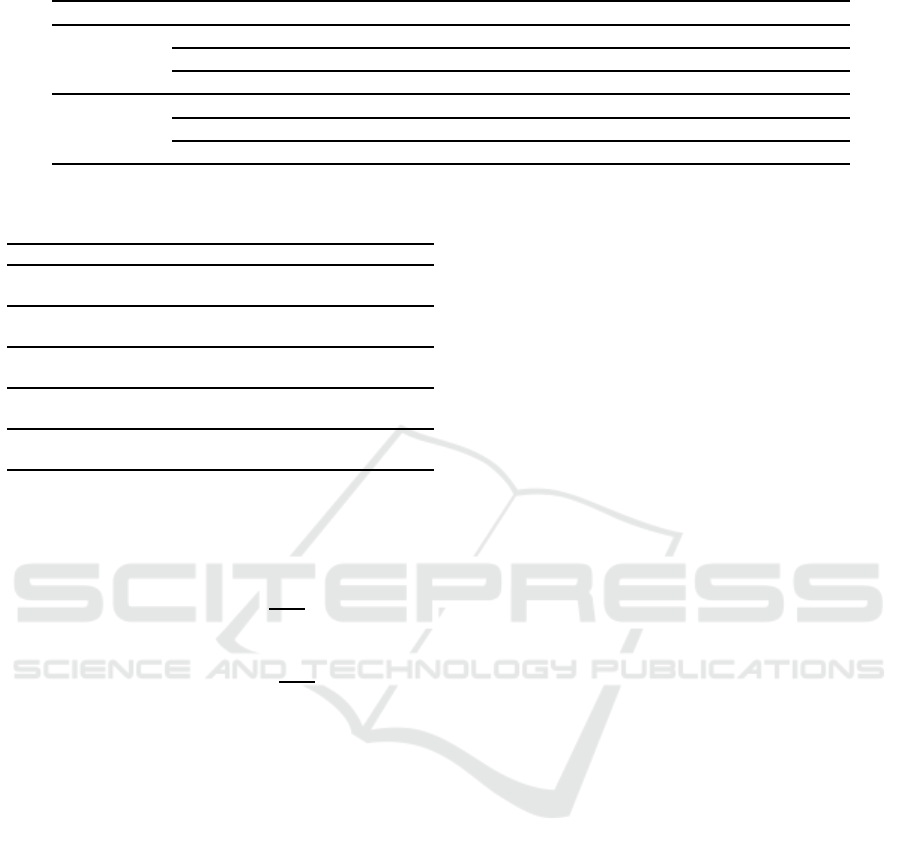

Figure 7: Detected defects in real defect images.

the ground truth can not easily be defined for real de-

fects because the boundary is unclear between the lo-

gotype and its background, even with magnification.

Therefore, we used the synthesized defects to quan-

titatively evaluate the performance of the proposed

MPPC-based detection method.
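The defect synthesis described above can be sketched as follows. The patch size, the source and destination positions, and taking the pasted square itself as the ground-truth mask are our assumptions; the paper does not state how its ground-truth images were produced. The name `synthesize_defect` is hypothetical.

```python
import numpy as np


def synthesize_defect(image, src, dst, size):
    """Paste a size x size background patch onto the logotype (a simulated misprint).

    image: 2-D grayscale array; src, dst: (row, col) top-left corners of the
    background patch and of the paste location on the printed logotype.
    Returns the defective image and its ground-truth mask.
    """
    img = np.asarray(image)
    h, w = img.shape[:2]
    for r, c in (src, dst):
        if not (0 <= r <= h - size and 0 <= c <= w - size):
            raise ValueError("patch does not fit inside the image")
    out = img.copy()
    # Read from the untouched original so overlapping regions are copied correctly.
    patch = img[src[0]:src[0] + size, src[1]:src[1] + size]
    out[dst[0]:dst[0] + size, dst[1]:dst[1] + size] = patch
    mask = np.zeros((h, w), dtype=bool)
    mask[dst[0]:dst[0] + size, dst[1]:dst[1] + size] = True  # ground truth = pasted square
    return out, mask
```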

4.2 Evaluation Metrics

There are several ways to evaluate the performance of defect detection. First, we use pixel-level precision, recall, and F-measure to test the proposed MPPC models and the detection algorithm built on them, treating detection as a two-class (binary) classification problem in which each pixel is assigned to either the defective class or the defect-free class. Accordingly, we use three evaluation metrics: Precision (also known as positive predictive value), Recall (also known as sensitivity), and F-measure. These measures are widely applied in pattern recognition, information retrieval, and binary classification, and since pixel-level defect detection is a typical binary classification problem, they can also be used for its quantitative analysis. Precision can be regarded as a measure of accuracy, while Recall can be regarded as a measure of how completely the defects are detected.

F-measure is the harmonic mean of Precision and Recall:

$$\text{F-measure} = \frac{2\,\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \qquad (13)$$
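For reference, a NumPy sketch of the three pixel-level metrics computed from a predicted defect mask and a ground-truth mask. Returning 0 when a denominator is zero is our own convention, not one stated in the paper, and `pixel_scores` is a hypothetical name.

```python
import numpy as np


def pixel_scores(pred_mask, gt_mask):
    """Pixel-level (Precision, Recall, F-measure) for binary defect masks (Eq. 13)."""
    pred = np.asarray(pred_mask, dtype=bool)
    gt = np.asarray(gt_mask, dtype=bool)
    tp = np.sum(pred & gt)     # defective pixels correctly detected
    fp = np.sum(pred & ~gt)    # defect-free pixels flagged as defective
    fn = np.sum(~pred & gt)    # defective pixels that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f_measure = (2 * precision * recall / (precision + recall)
                 if precision + recall else 0.0)
    return float(precision), float(recall), float(f_measure)
```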


Table 1: Pixel-level performance of defect detection: comparison of 5 methods.

| Defect type | Measurement | PHOT | PG-LSR | PN-RPCA | MPPC | MPPC+Modification |
|---|---|---|---|---|---|---|
| Synthesized | Recall | 0.35 | 0.49 | 0.74 | 0.89 | 0.94 |
| Synthesized | Precision | 0.34 | 0.49 | 0.51 | 0.96 | 0.96 |
| Synthesized | F-measure | 0.35 | 0.49 | 0.61 | 0.91 | 0.95 |
| Real | Recall | 0.46 | 0.53 | 0.51 | 0.83 | 0.88 |
| Real | Precision | 0.44 | 0.87 | 0.51 | 0.97 | 0.97 |
| Real | F-measure | 0.45 | 0.66 | 0.51 | 0.90 | 0.93 |

Table 2: Image-level performance of defect detection (detection rate, %): comparison of 5 methods.

| Method | Synthesized | Real |
|---|---|---|
| PG-LSR | 48 | 65 |
| PHOT | 66 | 50 |
| PN-RPCA | 66 | 80 |
| MPPC | 98 | 100 |
| MPPC+Modification | 98 | 100 |

For image-level performance evaluation (Ngan et al., 2011), we used the detection rate and the false alarm rate:

$$\text{Detection Rate} = \frac{N_T}{N_{TD}} \qquad (14)$$

$$\text{False Alarm Rate} = \frac{N_F}{N_{TF}} \qquad (15)$$

where $N_T$, $N_F$, $N_{TD}$, and $N_{TF}$ are the number of defective logotypes (images) correctly detected as defective, the number of defect-free logotypes detected as defective, the total number of defective samples, and the total number of defect-free logotypes, respectively.
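A plain-Python sketch of Eqs. (14) and (15), assuming each image (or logotype) is summarized by a predicted label and a true label; the function name `image_level_rates` and the zero-denominator convention are hypothetical.

```python
def image_level_rates(predicted_defective, actually_defective):
    """Return (detection rate N_T / N_TD, false alarm rate N_F / N_TF), Eqs. (14)-(15)."""
    pairs = list(zip(predicted_defective, actually_defective))
    n_t = sum(1 for pred, truth in pairs if pred and truth)        # defective, detected
    n_f = sum(1 for pred, truth in pairs if pred and not truth)    # defect-free, flagged
    n_td = sum(1 for _, truth in pairs if truth)                   # all defective samples
    n_tf = sum(1 for _, truth in pairs if not truth)               # all defect-free samples
    detection_rate = n_t / n_td if n_td else 0.0
    false_alarm_rate = n_f / n_tf if n_tf else 0.0
    return detection_rate, false_alarm_rate
```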

4.3 Experimental Results

Many researchers carrying out defect detection for surface inspection have examined steel (Selvi and Jenefa, 2014; Zhou et al., 2017), textiles (Li et al., 2017; Arnia and Munadi, 2015), and wood (Zhang et al., 2015). For printing inspection, examinations have generally been performed on paper materials (Fucheng et al., 2009) and pharmaceutical capsules (Mehle et al., 2016). However, to our knowledge, no studies have investigated defect detection for logotypes on 3D micro-textured surfaces, as examined in the present study. To verify the effectiveness of the proposed method, we compared it and the original MPPC method with three methods: prior knowledge guided least squares regression (PG-LSR) for fabric defect inspection (Cao et al., 2017), phase only transform (PHOT) for surface defect detection (Aiger and Talbot, 2012), and modified robust principal component analysis with noise term and defect prior (PN-RPCA) for fabric inspection (Cao et al., 2016). All three methods are unsupervised and very effective for detecting defects in textured materials, whose textures are somewhat similar to that of the material studied in this paper. Furthermore, the source code and recommended parameter settings of all three methods are publicly available.

Here, we used 60 synthesized defect images taken under the three illumination conditions mentioned above and 20 real defect images of each character for comparison. Each method was run with its publicly released source code and the parameters recommended by its authors. Fig. 6 and Fig. 7 show representative results for the synthesized and real defects, respectively. Although the fluctuations in illumination were large, the proposed method detected defects whose size and shape closely matched the ground truth, which indicates that the OCs in the MPPC models are highly robust. Furthermore, approximately half of the defects detected by all 4 methods were roughly consistent with the ground truth. For high-contrast defects, all the methods showed good detection performance. However, for low-contrast defects, PG-LSR, PHOT, and PN-RPCA performed worse than the proposed method. This is because the target materials used in our experiments differ substantially from those these methods were originally designed for, which have relatively consistent textures, whereas the textures of our materials are generally random with irregular patterns. Table 1 shows the pixel-level performance evaluation, and Table 2 shows the image-level performance evaluation for each method. These tables show that PG-LSR successfully detected about half of all defects, and that PHOT and PN-RPCA successfully detected about 70% of all defects. The proposed method performed much better, with image-level detection rates of 98% for synthesized defects and 100% for real defects. Furthermore, the MPPC with modification showed a clear improvement over the original MPPC at the pixel level; for example, its F-measure was 0.95 versus 0.91 for synthesized defects and 0.93 versus 0.90 for real defects.


5 CONCLUSIONS

We have proposed a novel statistical structure model, the MPPC model, built from the orientation codes of defect-free logotypes printed on a 3D micro-textured surface. Based on the MPPC models of defect-free images, we proposed a new defect localization algorithm, which was effective for detecting defects in real images. Building on this, we also proposed a modified version of the MPPC. Our experimental results showed that the modified MPPC clearly improved on the original MPPC.

In future work, we hope to design schemes to identify and classify different defect types, which may help improve the effectiveness of QC on manufacturing production lines.

REFERENCES

Aiger, D. and Talbot, H. (2012). The phase only transform for unsupervised surface defect detection. In Emerging Topics in Computer Vision and Its Applications, pages 215–232. World Scientific.

Arnia, F. and Munadi, K. (2015). Real time textile defect detection using GLCM in DCT-based compressed images. In 2015 6th International Conference on Modeling, Simulation, and Applied Optimization (ICMSAO), pages 1–6. IEEE.

Cao, J., Wang, N., Zhang, J., Wen, Z., Li, B., and Liu, X. (2016). Detection of varied defects in diverse fabric images via modified RPCA with noise term and defect prior. International Journal of Clothing Science and Technology, 28(4):516–529.

Cao, J., Zhang, J., Wen, Z., Wang, N., and Liu, X. (2017). Fabric defect inspection using prior knowledge guided least squares regression. Multimedia Tools and Applications, 76(3):4141–4157.

Fucheng, Y., Lifan, Z., and Yongbin, Z. (2009). The research of printing’s image defect inspection based on machine vision. In 2009 International Conference on Mechatronics and Automation (ICMA 2009). IEEE.

Jing, J., Chen, S., and Li, P. (2015). Automatic defect detection of patterned fabric via combining the optimal Gabor filter and golden image subtraction. Journal of Fiber Bioengineering and Informatics, 8(2):229–239.

Karimi, M. H. and Asemani, D. (2013). A novel histogram thresholding method for surface defect detection. In 2013 8th Iranian Conference on Machine Vision and Image Processing (MVIP), pages 95–99. IEEE.

Kasi, M. K., Rao, J. B., and Sahu, V. K. (2014). Identification of leather defects using an autoadaptive edge detection image processing algorithm. In 2014 International Conference on High Performance Computing and Applications (ICHPCA), pages 1–4. IEEE.

Li, M., Cui, S., Xie, Z., et al. (2015). Application of Gaussian mixture model on defect detection of print fabric. Journal of Textile Research, 36(8):94–98.

Li, P., Liang, J., Shen, X., Zhao, M., and Sui, L. (2017). Textile fabric defect detection based on low-rank representation. Multimedia Tools and Applications, pages 1–26.

Liang, D., Hashimoto, M., Iwata, K., Zhao, X., et al. (2015). Co-occurrence probability-based pixel pairs background model for robust object detection in dynamic scenes. Pattern Recognition, 48(4):1374–1390.

Mardia, K. V. (1970). Measures of multivariate skewness and kurtosis with applications. Biometrika, 57(3):519–530.

McHugh, M. L. (2013). The chi-square test of independence. Biochemia Medica, 23(2):143–149.

Mehle, A., Bukovec, M., Likar, B., and Tomažević, D. (2016). Print registration for automated visual inspection of transparent pharmaceutical capsules. Machine Vision and Applications, 27(7):1087–1102.

Ngan, H. Y., Pang, G. K., and Yung, N. H. (2011). Automated fabric defect detection: A review. Image and Vision Computing, 29(7):442–458.

Selvi, M. and Jenefa, D. (2014). Automated defect detection of steel surface using neural network classifier with co-occurrence features. International Journal, 4(3).

Ullah, F. and Kaneko, S. (2004). Using orientation codes for rotation-invariant template matching. Pattern Recognition, 37(2):201–209.

Xiang, S., Yan, Y., Asano, H., and Kaneko, S. (2018). Robust printing defect detection on 3D textured surfaces by multiple paired pixel consistency of orientation codes. In 2018 12th France-Japan and 10th Europe-Asia Congress on Mechatronics, pages 373–378. IEEE.

Xie, X. (2008). A review of recent advances in surface defect detection using texture analysis techniques. ELCVIA Electronic Letters on Computer Vision and Image Analysis, 7(3).

Zhang, Y., Xu, C., Li, C., Yu, H., and Cao, J. (2015). Wood defect detection method with PCA feature fusion and compressed sensing. Journal of Forestry Research, 26(3):745–751.

Zhou, S., Chen, Y., Zhang, D., Xie, J., and Zhou, Y. (2017). Classification of surface defects on steel sheet using convolutional neural networks. Mater. Technol., 51(1):123–131.
