Hand Gesture Recognition based on Near-infrared Sensing Wristband

Andualem T. Maereg¹ᵃ, Yang Lou²ᵇ, Emanuele L. Secco¹ᶜ and Raymond King²

¹ Robotics Lab, Liverpool Hope University, Liverpool, U.K.

² Facebook Reality Labs, Redmond, WA, U.S.A.

Keywords:

Hand Gesture, NIR, Human-machine Interaction (HCI), Bio-sensing, Virtual-reality, Wearable Sensing.

Abstract:

Wrist-worn gesture sensing systems can be used as a seamless interface for AR/VR interactions and control of various devices. In this paper, we present a low-cost gesture sensing system that utilizes near-infrared emitters (600 - 1100 nm) and photo-receivers encompassing the wrist to infer hand gestures. The proposed system consists of a wristband comprising infrared emitters and receivers, data acquisition hardware, data post-processing software, and gesture classification algorithms. During the data acquisition process, 24 near-infrared emitters are sequentially switched on around the wrist, and 12 photodiodes measure the light reflected, refracted, and scattered by the tissues inside the wrist. The acquired data corresponding to different gestures are labeled and input into a machine learning algorithm for gesture classification. To demonstrate the accuracy and speed of the proposed system, real-time gesture sensing user studies were conducted. We obtained an average accuracy of 98.06% with a standard deviation of 1.82%. In addition, the system can classify six to eight gestures per second in real time on a desktop computer with a Core i7-7800X CPU at 3.5 GHz and 32 GB RAM.

1 INTRODUCTION

Hand gesture recognition refers to the problem of identifying the hand gesture executed by a user at a specific time. Humans naturally gesticulate with their hands, forming both static hand poses and dynamic gestures to convey information. For this reason, hand gesture sensing and recognition have long been studied for intuitive control of interactive systems, as well as in many other engineering and medical applications (Fukui et al., 2014)(Rekimoto, 2001)(Kerber et al., 2016). Typical applications include human-machine interaction interfaces, control of hand prostheses and rehabilitation devices, and sign language interpretation (Peck, 2003)(Colaço et al., 2013)(Freeman and Weissman, 1997).

In order to design all-day-wearable gesture sensing devices, the following requirements are usually considered: the devices should be unobtrusive, they should not cause physical discomfort or encumber natural hand movement, and they should be intuitive and easily accessible. A wrist-worn device is a strong candidate for meeting all of these criteria. Consequently, several bio-sensing studies have sought to infer gestures by tracking anatomical changes within the wrist.

ᵃ https://orcid.org/0000-0001-6389-0694

ᵇ https://orcid.org/0000-0002-9216-5296

ᶜ https://orcid.org/0000-0002-3269-6749

Several wrist-worn devices have been proposed for hand gesture recognition. The sensing modalities include camera-based systems (Kim et al., 2012), inertial motion sensing (Wen et al., 2016)(Laput et al., 2016), electromyography (EMG) (Benalcázar et al., 2017)(McIntosh et al., 2016)(Boyali and Hashimoto, 2016)(Huang et al., 2015), electrical impedance tomography (EIT) (Zhang et al., 2016)(Zhang and Harrison, 2015), and capacitive and resistive pressure sensing systems (Dementyev and Paradiso, 2014)(McIntosh et al., 2016)(Truong et al., 2017). Each modality has its own merits and limits. Wearable camera systems attach small cameras near the wrist to recognize different hand shapes. For example, Digits (Kim et al., 2012) uses a 3D infrared camera to identify gestures using machine vision. However, significant limitations of this type of sensing include line-of-sight occlusions, ambient light noise, and the higher computational cost associated with more complicated image processing algorithms. Inertial motion sensing systems employ inertial measurement units (IMUs), which consist of accelerometers, gyroscopes, and magnetometers, to measure arm and finger orientations (Wen et al., 2016). Accelerometer data has also been used to recognize different activities by sensing dynamic features related to hand motions (Laput et al., 2016). However, inertial motion sensors are very limited at detecting static hand gestures. Electromyography (EMG) estimates the myoelectric potential generated during hand and finger movements by attaching electrodes to the upper part of the forearm. It has been extensively explored for static and dynamic gesture detection (Benalcázar et al., 2017)(Boyali and Hashimoto, 2016). Compared to other sensing techniques, the limitations of EMG systems include the need for massive datasets and the heavy computational burden associated with extensive signal processing. In addition, they require careful initial configuration and calibration for adequate performance. Electrical impedance tomography (EIT) is another well-studied method for hand gesture recognition (Zhang and Harrison, 2015). It measures the impedance changes between pairs of electrodes to track changes in the wrist tissue. However, this type of method is susceptible to resistance coupling between the electrode and the skin, and sometimes requires electrically conductive gel for stable coupling. Other sensing modalities include force sensing resistor (FSR) systems, which measure the pressure distribution around the wrist to identify different static gestures (Dementyev and Paradiso, 2014), and capacitive pressure sensors, such as GestureWrist (Rekimoto, 2001)(Truong et al., 2017), which measure capacitance changes around the wrist. Most of the gesture sensing methods mentioned above are unsuitable for practical use because of low accuracy, high cost, poor ergonomics, limited portability, or poor ease of use.

Maereg, A., Lou, Y., Secco, E. and King, R. Hand Gesture Recognition based on Near-infrared Sensing Wristband. DOI: 10.5220/0008909401100117. In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2020) - Volume 2: HUCAPP, pages 110-117. ISBN: 978-989-758-402-2; ISSN: 2184-4321. Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

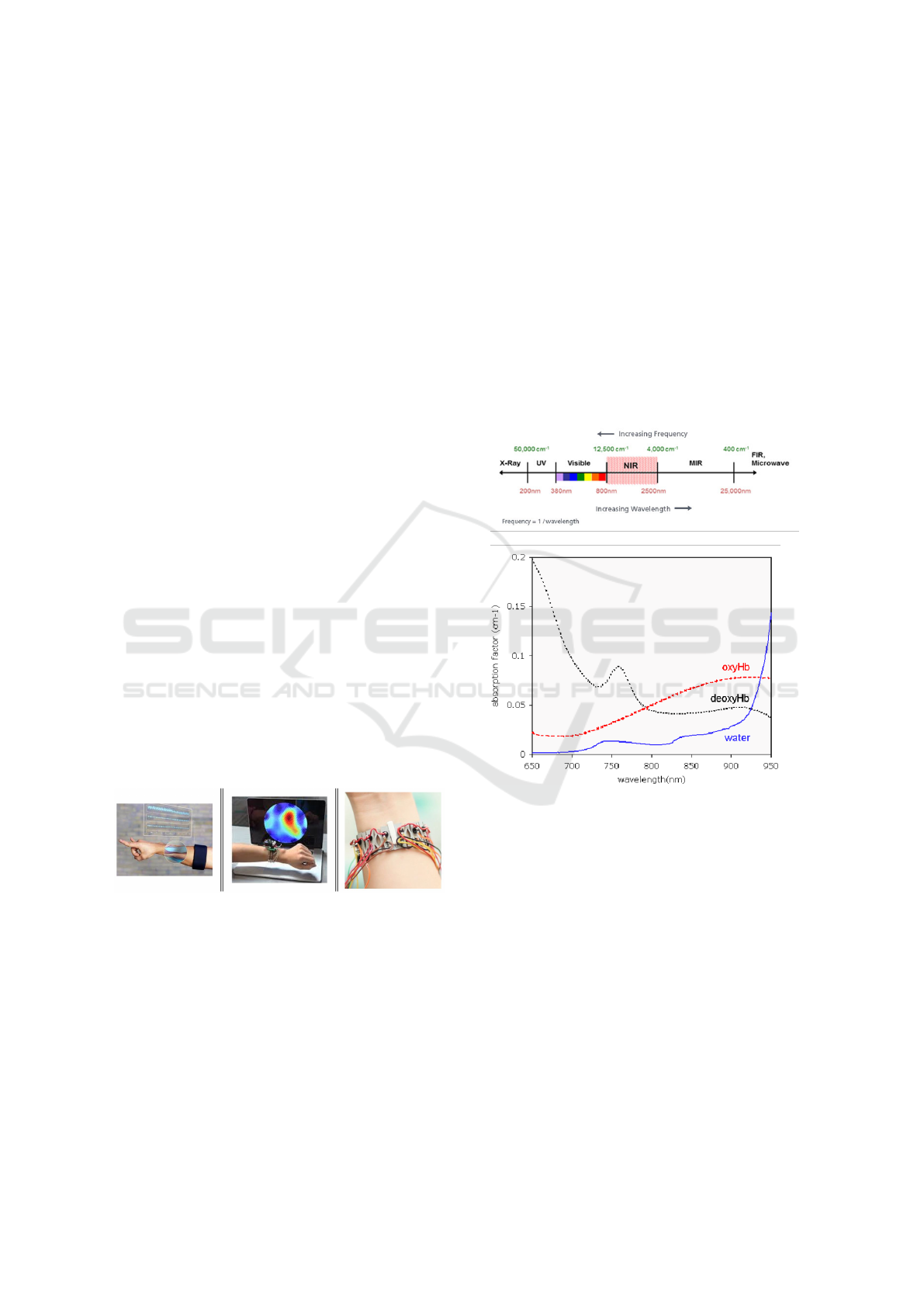

Figure 1: Gesture sensing technologies, from left to right: Electromyography (EMG), Electrical Impedance Tomography (EIT) (Zhang and Harrison, 2015), and Near-Infrared Sensing (NIR) (McIntosh et al., 2017).

2 NEAR INFRARED (NIR) SYSTEM

Near-infrared (NIR) systems have long been investigated for medical applications because of their ability to image changes in tissues (Nielsen et al., 2008)(Chaiken et al., 2011). The near-infrared window is the part of the light spectrum with wavelengths between 600 nm and 1100 nm. As shown in Figure 2, light penetrates tissue most deeply in the near-infrared window, where the absorption coefficients of water, melanin, and de-oxygenated and oxygenated blood are low. Photons that enter human tissue typically undergo absorption, scattering, and reflection. Changes in the anatomical structures of the wrist while performing different gestures change how injected light interacts with the tissues through these three mechanisms. These changes can be used to detect hand gestures by measuring the transmission and diffraction of light through the wrist.

Figure 2: Absorption rate of near-infrared light by different tissues: water, oxygenated and de-oxygenated blood at different light wavelengths (Izzetoglu et al., 2007).

This project uses a wristband consisting of pairs of infrared emitters and receivers to measure the light reflected off or scattered through the wrist. Previous work (McIntosh et al., 2017) demonstrated the potential of NIR for non-invasive and accurate gesture recognition. In our study, we aim to further improve the accuracy of NIR gesture detection by developing a system that is robust against variations in skin-sensor coupling. This study also explores the impact of the number and configuration of the sensors on the overall accuracy of the system. Two different wavelengths were also tested to see how accuracy varies with the light absorption rates of tissues.


3 NIR WRISTBAND IMPLEMENTATION

3.1 Wristband Hardware

The NIR wristband (shown in Figure 4) is composed of 24 near-infrared emitters and 12 photodiodes, each with an on-chip trans-impedance amplifier. A controller switches the IR LEDs and samples the photodiode outputs sequentially. The sampled data is then used by a machine learning algorithm to classify features into different gestures.

Figure 3: Block diagram of the NIR wristband system, consisting of the wristband gesture sensing system, data acquisition, and processing.

Figure 4: The full NIR gesture sensing wristband hardware setup: the data acquisition and IR LED control hardware.

The selection of emitters considered factors including power consumption, optical power, and emitter-detector spacing on the wristband. After testing different IR emitters to see the effect of radiant intensity (12 - 1100 mW/sr), beam angle (6° - 130°), and emission wavelength on the received signal, we chose MTMD7885NN24 multi-chip IR emitters, which have peak emission wavelengths at 770 nm, 810 nm, and 850 nm. For the photodiodes we chose TI's OPT101, which has an on-chip trans-impedance amplifier with a 1 MΩ feedback resistor, giving a bandwidth of 14 kHz. Compared to an external amplifier, the built-in trans-impedance amplifier reduces noise pick-up and space requirements.

To generate a timed and synchronized PWM control signal sequence, an NI DAQ (USB-6353) device was employed. The PWM sequence was generated at a frequency of 20 Hz, with a 4% duty cycle and a 15-degree phase shift between consecutive channels. This switches all emitters on sequentially within a 50 ms period. A Darlington array IC, the ULN2803A (capable of sinking up to 500 mA at up to 50 V), was used to amplify the control signal. The cascade connection of two transistors in a Darlington pair behaves like a single transistor with a very high current gain β, which allows a high output current to be driven from a very low input current, so the array can be driven directly from low-voltage GPIO outputs. Current-limiting resistors were used to set the current drawn by the emitters. The analog signal from each photodiode amplifier was sampled at 16-bit resolution and 9600 samples per second.
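
The timing figures quoted above can be cross-checked with a few lines of arithmetic. The following is a minimal sketch, not the authors' control code: the function name `scan_schedule` is hypothetical, and the 2 ms on-time and roughly 2.08 ms slot length are derived here from the stated 20 Hz frequency, 4% duty cycle, and 15-degree phase step rather than quoted from the paper.

```python
# Timing of one wrist scan, derived from the figures quoted in the text.
PWM_FREQ_HZ = 20          # one full scan per PWM period
DUTY_CYCLE = 0.04         # each emitter is on for 4% of the period
PHASE_STEP_DEG = 15       # phase shift between consecutive channels
SAMPLE_RATE_HZ = 9600     # analog sampling rate per photodiode channel


def scan_schedule(n_emitters=24):
    """Return (period_ms, on_time_ms, slot_ms, on-windows) for one scan."""
    period_ms = 1000.0 / PWM_FREQ_HZ
    on_time_ms = DUTY_CYCLE * period_ms
    slot_ms = PHASE_STEP_DEG / 360.0 * period_ms
    # Emitter i switches on at i * slot_ms and stays on for on_time_ms.
    windows = [(i * slot_ms, i * slot_ms + on_time_ms) for i in range(n_emitters)]
    return period_ms, on_time_ms, slot_ms, windows


period_ms, on_time_ms, slot_ms, windows = scan_schedule()
samples_per_scan = round(SAMPLE_RATE_HZ * period_ms / 1000.0)   # 480
samples_per_slot = round(SAMPLE_RATE_HZ * slot_ms / 1000.0)     # 20
```

Under these numbers, 24 slots of 15 degrees fill the full 360-degree period, and each emitter's on-time is slightly shorter than its slot, so consecutive emitters do not overlap.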

Compared to SensIR (McIntosh et al., 2017), this wristband hardware has more IR emitters (24) and a better mechanical wristband design. This improves prediction accuracy because the rigidity of the wristband makes the system more robust to misalignment errors. The optical power was also carefully analyzed with respect to emitter-receiver spacing and IR beam angle. The wristband hardware also features modular and durable PCB connectors, which reduce both the cumbersome cabling and noise pickup.

3.2 Machine Learning Software

The software of this study consists of four major

components: data collection, data processing, model

training, and both offline and real-time evaluation.

3.2.1 Data Collection

During this phase, the PWM waveform generation and emitter control session runs continuously in the background and scans the full 360 degrees of the wrist at an effective frequency of 20 Hz. During each 360-degree scan, the 24 emitters are pulsed in sequence, each generating a finite-width rectangular waveform.

The rising edge of emitter 1 triggers the analog data acquisition, which lasts for exactly 50 ms. Since the analog signal is sampled at 9600 samples/s, 480 samples are acquired per channel in every full wrist scan, which is referred to as one 'frame'. An example of such a frame of data is shown in Figure 5.

HUCAPP 2020 - 4th International Conference on Human Computer Interaction Theory and Applications


Figure 5: Matrix of emitter-receiver measurements of a single frame.

3.2.2 Data Processing

The data collected from each photodiode (channel) are first filtered using a median filter of width five to suppress narrow noise spikes from external near-infrared interference. The resulting 480 samples for each channel are then divided into 24 segments of 20 samples, each corresponding to the time during which a specific emitter was switched on. Because each 20-sample segment appeared almost flat in our experiments, we used its mean value to represent each segment, reducing the size of the data by a factor of 20. In this way, the 480 samples per channel are reduced to 24 values, and the 12 channels together give 288 values per frame. These processed data were used in both the model training and real-time evaluation steps of this study.
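
The processing chain described above (median filter, segmentation, per-segment mean) can be sketched in NumPy as follows. This is an illustrative reconstruction under stated assumptions, not the authors' implementation: the function names are hypothetical, the frame is assumed to be stored as a 12 x 480 array (channels by samples), and the edge handling of the median filter (padding by repeating the end samples) is a choice made here because the paper does not specify it.

```python
import numpy as np

N_EMITTERS, N_CHANNELS, SAMPLES_PER_FRAME = 24, 12, 480


def median_filter(x, width=5):
    """Sliding median along the last axis; edges padded by repetition (assumption)."""
    half = width // 2
    pad = [(0, 0)] * (x.ndim - 1) + [(half, half)]
    padded = np.pad(x, pad, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, width, axis=-1)
    return np.median(windows, axis=-1)


def frame_to_features(frame):
    """Reduce a (12, 480) raw frame to a 288-element feature vector."""
    if frame.shape != (N_CHANNELS, SAMPLES_PER_FRAME):
        raise ValueError("expected a frame of shape (12, 480)")
    filtered = median_filter(frame, width=5)
    # Split each channel into 24 emitter slots of 20 samples, then average each slot.
    segments = filtered.reshape(N_CHANNELS, N_EMITTERS, -1)
    return segments.mean(axis=-1).reshape(-1)


# Synthetic frame: each emitter slot is flat, with one narrow spike on channel 0.
frame = np.tile(np.repeat(np.arange(24.0), 20), (12, 1))
frame[0, 70] = 1000.0
features = frame_to_features(frame)
```

In this synthetic example the single-sample spike is removed by the median filter before averaging, so it does not bias the mean of its segment.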

3.2.3 Model Training

For several applications, wearable gesture recognition systems are required to function in real time with accuracy comparable to that of offline operation. For a gesture recognition system to operate in real time, it has to recognize a gesture in less than 300 ms, equivalent to an update rate of roughly 3 Hz (Benalcázar et al., 2017). Since wearable systems run on low-power hardware with limited computational resources, the primary challenge is choosing a classifier that performs well with a relatively simple recognition model.

The labeled and processed data from the Data Processing step were used to train various machine learning models. We explored the following supervised learning classifiers: k-Nearest Neighbors (kNN), Support Vector Machine (SVM), Linear Discriminant Analysis (LDA), and Neural Networks (NN). After comparing the accuracy of the different models, we found that a shallow neural network worked best in our context, so we focus on describing this model. The shallow neural network was a fully connected network with a single hidden layer of 56 nodes. The activation function was the rectified linear unit (ReLU), and the cost function was the negative log-likelihood. During training, each epoch of data was divided into batches of 600, and a total of 1000 epochs were trained, after which the changes in both accuracy and cost-function value fell below a small threshold. No dropout or batch normalization was applied during training, because the model is relatively simple and already generalized well without them.
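
To make the model size concrete, the forward pass and cost function of such a network can be sketched in plain NumPy. This is a sketch under assumptions rather than the authors' code: the 288 inputs and 13 outputs are taken from the feature count and gesture set described elsewhere in the paper, while the weight initialisation, the log-softmax output layer, and all names (`init_params`, `log_probs`, `nll_loss`) are illustrative choices. The optimiser is not shown because the paper does not name one.

```python
import numpy as np

N_FEATURES, N_HIDDEN, N_GESTURES = 288, 56, 13


def init_params(rng):
    """Small random weights; the initialisation scheme is an assumption."""
    return {
        "W1": rng.normal(0.0, 0.05, (N_FEATURES, N_HIDDEN)),
        "b1": np.zeros(N_HIDDEN),
        "W2": rng.normal(0.0, 0.05, (N_HIDDEN, N_GESTURES)),
        "b2": np.zeros(N_GESTURES),
    }


def log_probs(params, x):
    """Forward pass: linear -> ReLU -> linear -> log-softmax."""
    hidden = np.maximum(x @ params["W1"] + params["b1"], 0.0)
    logits = hidden @ params["W2"] + params["b2"]
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    return logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))


def nll_loss(params, x, labels):
    """Mean negative log-likelihood of the true labels."""
    lp = log_probs(params, x)
    return float(-lp[np.arange(len(labels)), labels].mean())


rng = np.random.default_rng(0)
params = init_params(rng)
batch = rng.normal(size=(600, N_FEATURES))        # synthetic stand-in for one batch
labels = rng.integers(0, N_GESTURES, size=600)
loss = nll_loss(params, batch, labels)
n_params = sum(p.size for p in params.values())   # 16925 trainable parameters
```

With these layer sizes the network has fewer than 17,000 parameters, which is consistent with the paper's emphasis on low computational complexity.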

3.2.4 Model Evaluation

We evaluated the trained model both offline, using the collected data, and in real time, by applying it to streamed sensor data.

1. For offline evaluation, a collected dataset typically consists of 10 trials. 10-fold cross-validation was performed by training on nine trials and validating on the remaining one. Both validation accuracy and confusion matrices were averaged over all ten folds and served as the metric of gesture classification performance.

2. For real-time evaluation, the software streamed frames of raw sensor data from the wristband hardware, applied the same data-processing procedure to each frame, and ran inference with the trained model to predict the gesture of the current frame. The real-time evaluation software can update the predicted gesture at 6-8 Hz.
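
The offline procedure in item 1 amounts to leave-one-trial-out cross-validation. A minimal sketch of the fold generation is given below; the names `trial_folds` and `cross_validate` are hypothetical, and `fit` and `score` stand for whatever training and scoring routines are used, supplied by the caller.

```python
import numpy as np


def trial_folds(trial_ids):
    """Yield (train_idx, val_idx) pairs, holding out one trial per fold."""
    trial_ids = np.asarray(trial_ids)
    for held_out in np.unique(trial_ids):
        val_mask = trial_ids == held_out
        yield np.flatnonzero(~val_mask), np.flatnonzero(val_mask)


def cross_validate(features, labels, trial_ids, fit, score):
    """Average a caller-supplied score over all leave-one-trial-out folds."""
    scores = []
    for train_idx, val_idx in trial_folds(trial_ids):
        model = fit(features[train_idx], labels[train_idx])
        scores.append(score(model, features[val_idx], labels[val_idx]))
    return float(np.mean(scores))
```

Splitting by trial rather than by individual frame keeps frames recorded within the same trial out of both the training and validation sets at once.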

4 USER STUDY DESIGN

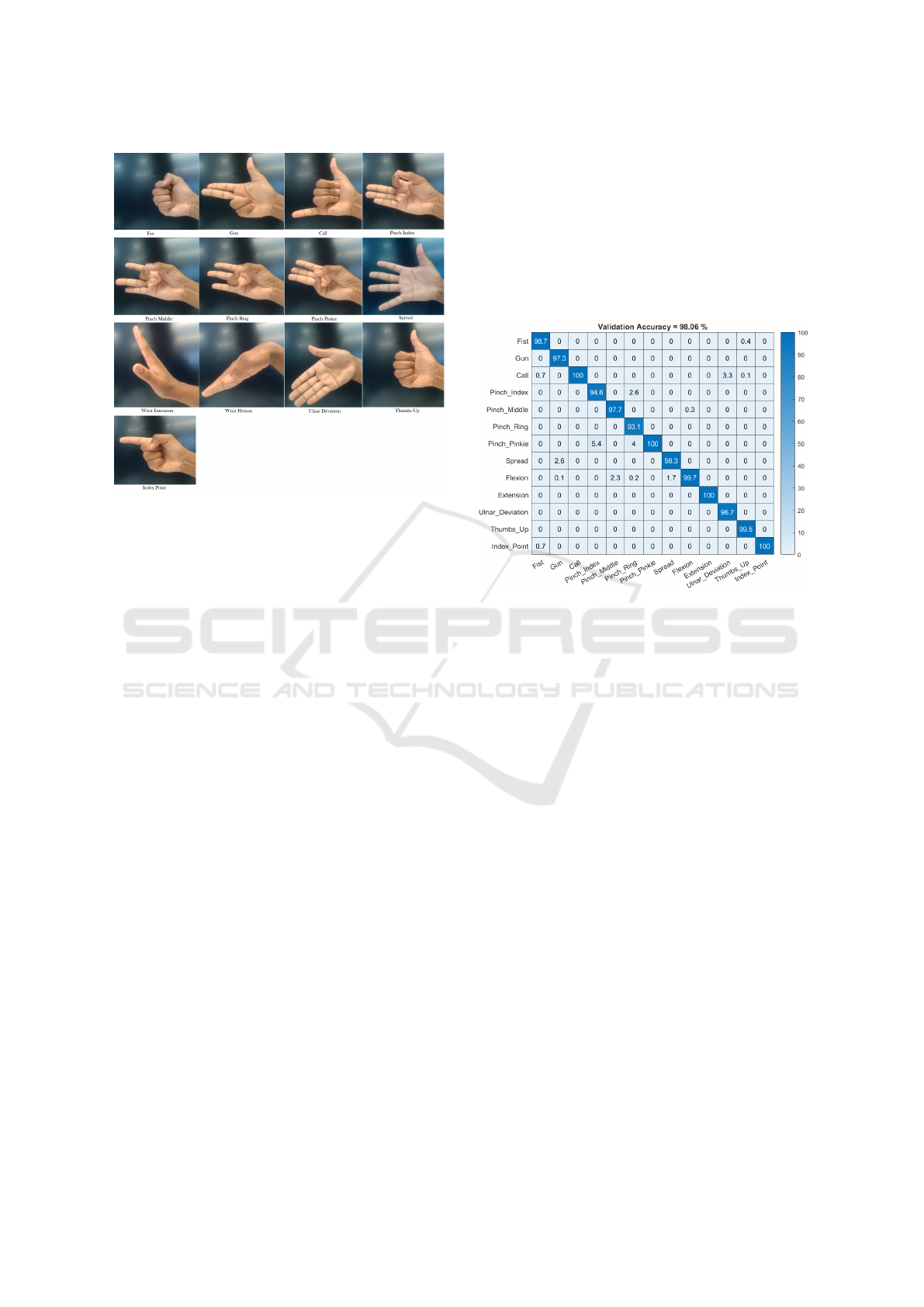

To assess the performance of the wristband, hand gesture sensing studies were conducted across multiple subjects. To better compare our results with the previous SensIR study (McIntosh et al., 2017), we designed the studies to replicate the SensIR set-up as closely as possible. Specifically, a total of four pinch gestures (Index Pinch, Middle Pinch, Ring Pinch, and Little Pinch), six common gestures (Fist, Spread, Call, Gun, Index Point, and Thumbs up), and three wrist gestures (Wrist Flexion, Wrist Extension, and Wrist Abduction) were chosen, as shown in Figure 6.


Figure 6: Set of gestures used in the user study. The 13 gestures consist of four pinch gestures (Index Pinch, Middle Pinch, Ring Pinch, and Little Pinch), six common gestures (Fist, Spread, Call, Gun, Index Point, and Thumbs up), and three wrist gestures (Wrist Flexion, Wrist Extension, and Wrist Abduction).

All participants wore the wristband on their right arm. Before the test, each subject was given some time to practice the gestures. During a single trial, the subject was prompted to perform all 13 gestures in a random sequence specified by the software, holding each gesture for 5 seconds. Randomizing the gesture sequence can improve the generalization of the machine learning model.

A total of 10 trials of data were collected for each subject. Data corresponding to the first 2 seconds of each new gesture were discarded, because we observed that the raw sensor signals fluctuate to different extents during this transition time; the truncation provides more stable signals for each gesture. The data labels were created and time-stamped at the same time as the data were recorded.

5 RESULTS

In our study, the confusion matrix was computed to serve as the metric of performance. The confusion matrix is an $N_{gesture} \times N_{gesture}$ matrix in which each entry lies between 0 and 100; the entry in the i-th column and j-th row gives the percentage of instances of the i-th (true) gesture that were classified as the j-th gesture during validation. Its diagonal elements give the percentage of each gesture classified correctly, and their average is a quantitative measure of the performance of the trained model, referred to as "validation accuracy" in the rest of the study. The confusion matrix is always averaged over all folds of the 10-fold cross-validation. The confusion matrix shown in Figure 7 is averaged over all ten subjects and all ten folds for each subject. The validation accuracy over all subjects is 98.06% with a standard deviation of 1.82%.
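
The definition above can be written out directly. The sketch below follows the stated convention (columns index the true gesture, rows index the predicted gesture, entries in percent) and computes the validation accuracy as the mean of the diagonal; the function names and the small three-gesture example are illustrative only and are not data from the study.

```python
import numpy as np


def confusion_percent(true_labels, predicted_labels, n_gestures):
    """Column i = true gesture, row j = predicted gesture, entries in percent."""
    counts = np.zeros((n_gestures, n_gestures))
    np.add.at(counts, (np.asarray(predicted_labels), np.asarray(true_labels)), 1)
    column_totals = counts.sum(axis=0, keepdims=True)
    # Avoid dividing by zero for gestures absent from the validation data.
    return 100.0 * counts / np.where(column_totals == 0, 1, column_totals)


def validation_accuracy(confusion):
    """Mean of the diagonal, i.e. the average per-gesture accuracy."""
    return float(np.mean(np.diag(confusion)))


true = [0, 0, 1, 1, 2, 2, 2, 2]
pred = [0, 1, 1, 1, 2, 2, 2, 0]
cm = confusion_percent(true, pred, 3)
print(validation_accuracy(cm))   # → 75.0
```

Because each column is normalised separately, every column of the matrix sums to 100 and gestures with more validation frames do not dominate the average.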

Figure 7: Confusion Matrix - The mean validation accuracy

across 10 participants for each gesture.

The prediction accuracy is observed to be lower for less-pronounced gestures, i.e., pinch gestures and other gestures that involve the movement of only a single finger. This is because the pinch gestures use a common muscle group. In contrast, wrist gestures, which rotate the whole hand around the wrist joint, are easily recognized by the ML algorithms. In addition, other experiments were designed to investigate the impact of the number of sensors, sensor coverage area and density, peak emission wavelength of the IR emitters, and motion artifacts (particularly arm rotations) on the sensing performance:

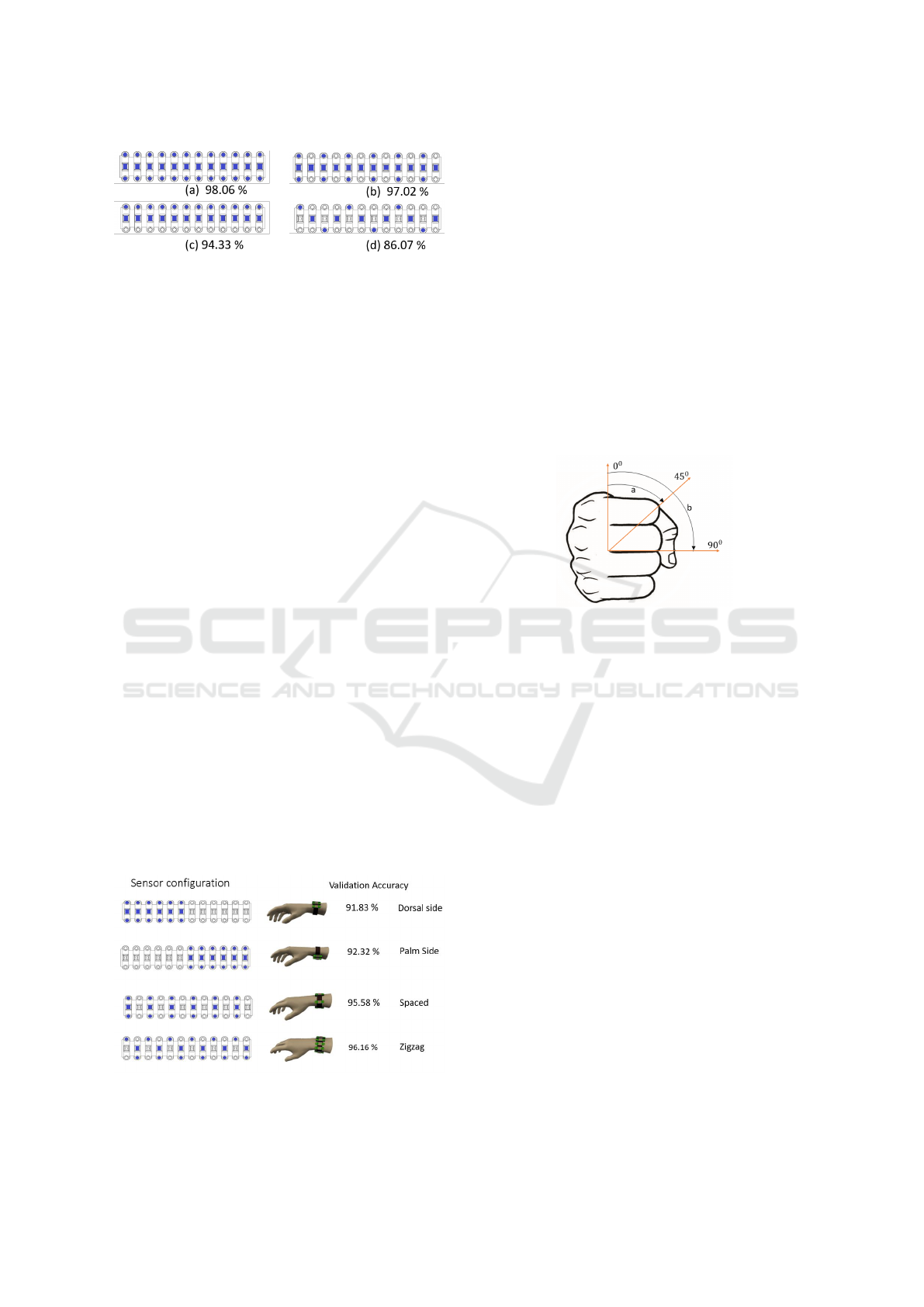

1. Number of sensors: From a dataset collected on the full setup (24 emitters and 12 detectors), we removed some data to create setups with different numbers of emitters (6, 12, 24) and detectors (6, 12), as shown in Figure 8, where the blue circles indicate the active IR emitters and the blue rectangles indicate the active IR receivers. Only the numbers of emitters and detectors are reduced in the new setups; both remain equally spaced around the wrist. The results show a small decrease in accuracy with fewer sensors. However, the small variation in accuracy across emitter and receiver combinations shows that reasonably high accuracy is still achievable with fewer sensors.


Figure 8: Number of sensors used in the test versus their corresponding validation accuracy: (a) 24 emitters and 12 receivers (b) 12 emitters and 12 receivers (c) 6 emitters and 6 receivers.

2. Peak emission wavelengths: Two wavelengths, 770 nm and 850 nm, were tested in an experiment with a 12-emitter-12-detector configuration. The resulting validation accuracies for the two wavelengths were 94.33% and 93.37%, respectively. The difference in accuracy between the two chosen wavelengths is not significant.

3. Sensor coverage area: For hand gestures, the most useful signals may be concentrated in specific regions of the wrist. The sensor configuration, particularly the sensor density in some regions of the wrist and the overall coverage area of the wristband, should therefore be designed carefully to balance the accuracy of the system against wristband complexity and cost. Figure 9 shows the variation in accuracy for different sensor configurations of a wristband with 12 emitters and 6 receivers. This result shows that the sensors can be concentrated on a particular side of the wrist without a significant change in accuracy. The sensors can also be arranged in a low-density configuration to make room for complementary sensor types, such as EMG, inertial, and pressure sensors, which can be used to mitigate the practical issues of the NIR wristband. However, the results also show that covering more of the wrist with sensors gives slightly better results than concentrating the sensors in a particular area.

Figure 9: Different sensor configurations vs. validation accuracy for 12 emitters and 6 receivers.

4. Arm rotation: We found that rotation of the arm introduces a relatively significant change in the near-infrared signals. A significant error may therefore be observed if the arm rotation angles during training and validation differ. This problem can be addressed by including various arm orientations during training, or by including orientation information from additional sensors during both training and validation. We performed a preliminary study in which data corresponding to three different arm rotations (0°, 45°, 90°) were collected (as shown in Fig. 10). Validation accuracies were computed by training on the 0° data and validating on the 45° and 90° data. The accuracy of the system drops to 74% and 68% for arm rotations of 45° and 90°, respectively.

Figure 10: Hand or forearm rotation test: data is collected by rotating the hand from 0 degrees to 45 and 90 degrees.
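
The reduced setups of the number-of-sensors experiment were obtained by removing data from the full 24-emitter, 12-detector recording. One way this subsetting could be done is sketched below. It assumes the processed frames are stored as an array of shape (frames, 12 detectors, 24 emitters) and that the retained sensors start at index 0; the paper states only that the remaining sensors are equally spaced, so the starting index and the name `subsample_sensors` are assumptions.

```python
import numpy as np


def subsample_sensors(frames, n_emitters, n_detectors):
    """Keep equally spaced emitters/detectors from (n_frames, 12, 24) data."""
    n_frames, total_detectors, total_emitters = frames.shape
    if total_emitters % n_emitters or total_detectors % n_detectors:
        raise ValueError("subset sizes must divide the full sensor counts")
    detector_idx = np.arange(0, total_detectors, total_detectors // n_detectors)
    emitter_idx = np.arange(0, total_emitters, total_emitters // n_emitters)
    subset = frames[:, detector_idx][:, :, emitter_idx]
    # Flatten back into one feature vector per frame for the classifier.
    return subset.reshape(n_frames, -1)


frames = np.arange(2 * 12 * 24, dtype=float).reshape(2, 12, 24)
reduced = subsample_sensors(frames, n_emitters=6, n_detectors=6)   # 36 features
```

A classifier trained on the reduced features needs an input layer sized to match, for example 36 inputs for the 6-emitter, 6-detector setup.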

6 DISCUSSION

From the experiments conducted and our observations during them, we identified several factors that affect model training and gesture prediction.

• Misalignment of the IR sensors: This problem mainly occurs in multiple-session tests, when the user takes off the wristband and puts it on again. However, the shift can also happen while the user wears the wristband in single-session tests. The misalignment can be a rotational or a longitudinal displacement on the wrist. The sensors are then displaced from their training positions, which shifts the order of the features. Since the wristband is sensitive to shifts of a few millimeters, manual calibration is very difficult. Every time the wristband shifts, its position and orientation with respect to a reference position should be calculated. The reference position can be recognized using a specific gesture that shows strong features or a significant change in the signal. After the position is detected, the channels can be reassigned to maintain the order of the features without rotating the wristband.

• Arm movement: Motion artifacts are the other major problem in NIR systems. Motion of the hand and arm (arm rotation, elongation, elevation) causes blood movement and muscle/tendon movement, which produce unwanted changes in the signal. It is difficult to solve this problem entirely by including arm movement information in the study or in the machine learning training procedure. However, it can be reduced by training on different arm rotations and elevations for the same gesture. The output of an additional sensor, such as IMU orientation data, can also be used to compensate for the arm rotation problem.

• Skin-sensor coupling: Although NIR wristbands, unlike EMG and EIT techniques, do not suffer from electrical sensor-skin coupling, they still suffer from problems caused by the mechanical coupling between the sensor and the skin. This is mainly because light can be reflected directly from the skin without entering the tissue, thereby saturating the photodiodes. This problem can be reduced by an appropriate mechanical wristband design that keeps the coupling constant.

• IR interference: IR light from external sources, e.g., the sun or IR-illuminating cameras, adds noise to the main signal. Enclosing the outer part of the wristband with IR-blocking film is a potential way to remove this noise. Another is a differential measurement, in which the external IR is measured and subtracted from the main signal.
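
The channel reassignment suggested for rotational misalignment can be illustrated with a circular shift of the emitter-detector matrix. This is a hypothetical sketch, not a method evaluated in the paper: it assumes the slip is a whole number of detector positions, that a frame is stored as a 12 x 24 array (detectors by emitters), and that one detector pitch equals two emitter pitches because both sensor types are equally spaced. Estimating the shift from a reference gesture is not shown.

```python
import numpy as np


def reassign_channels(frame, detector_shift):
    """Undo a rotational slip of `detector_shift` detector positions.

    `frame` has shape (n_detectors, n_emitters). The emitter axis is rolled
    by a proportional amount, assuming equally spaced sensors (assumption).
    """
    n_detectors, n_emitters = frame.shape
    emitter_shift = detector_shift * (n_emitters // n_detectors)
    return np.roll(frame, shift=(-detector_shift, -emitter_shift), axis=(0, 1))


reference = np.arange(12 * 24, dtype=float).reshape(12, 24)
# Simulate the band slipping by one detector position around the wrist.
slipped = np.roll(reference, shift=(1, 2), axis=(0, 1))
restored = reassign_channels(slipped, detector_shift=1)
```

Slips smaller than one sensor pitch, and longitudinal displacement along the arm, cannot be corrected by reindexing alone and would need a different compensation strategy.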

7 CONCLUSION

This paper presents a wrist-worn gesture sensing system consisting of an array of infrared emitters and photo-receivers that detect gestures by measuring light reflected and refracted from tissues in or under the skin. In this study, we have demonstrated that near-infrared wristbands can offer low-cost and high-accuracy gesture sensing. With the advancement of ultra-miniature SMD IR emitters and receivers, these techniques can be easily integrated into wrist-worn devices such as smartwatches and Fitbit monitors. The system's software consists of data acquisition, preprocessing, and classification stages. Thirteen classes of gestures were analyzed to validate the accuracy of the classification algorithm. Future work includes data collection and processing for cross-session and cross-user performance. Integration with orientation sensors and pressure sensors should be investigated as a potential way to recognize arm rotation and the pressure distribution around the wrist. Embedded data acquisition and wireless data transfer can also be developed to obtain an entirely wearable and wireless wristband gesture sensing system.

REFERENCES

Benalcázar, M. E., Jaramillo, A. G., Zea, A., Páez, A., Andaluz, V. H., et al. (2017). Hand gesture recognition using machine learning and the myo armband. In Signal Processing Conference (EUSIPCO), 2017 25th European, pages 1040–1044. IEEE.

Boyali, A. and Hashimoto, N. (2016). Spectral collaborative

representation based classification for hand gestures

recognition on electromyography signals. Biomedical

Signal Processing and Control, 24:11–18.

Chaiken, J., Deng, B., Goodisman, J., Shaheen, G.,

and Bussjaeger, R. J. (2011). Analyzing near-

infrared scattering from human skin to monitor

changes in hematocrit. Journal of biomedical optics,

16(9):097005.

Colaço, A., Kirmani, A., Yang, H. S., Gong, N.-W., Schmandt, C., and Goyal, V. K. (2013). Mime: compact, low power 3d gesture sensing for interaction with head mounted displays. In Proceedings of the 26th annual ACM symposium on User interface software and technology, pages 227–236. ACM.

Dementyev, A. and Paradiso, J. A. (2014). Wristflex: low-

power gesture input with wrist-worn pressure sensors.

In Proceedings of the 27th annual ACM symposium on

User interface software and technology, pages 161–

166. ACM.

Freeman, W. T. and Weissman, C. D. (1997). Hand gesture

machine control system. US Patent 5,594,469.

Fukui, R., Watanabe, M., Shimosaka, M., and Sato, T.

(2014). Hand-shape classification with a wrist contour

sensor: Analyses of feature types, resemblance be-

tween subjects, and data variation with pronation an-

gle. The International Journal of Robotics Research,

33(4):658–671.

Huang, Y., Guo, W., Liu, J., He, J., Xia, H., Sheng, X.,

Wang, H., Feng, X., and Shull, P. B. (2015). Prelim-

inary testing of a hand gesture recognition wristband

based on emg and inertial sensor fusion. In Interna-

tional Conference on Intelligent Robotics and Appli-

cations, pages 359–367. Springer.

Izzetoglu, M., Bunce, S. C., Izzetoglu, K., Onaral, B., and

Pourrezaei, K. (2007). Functional brain imaging us-

ing near-infrared technology. IEEE Engineering in

Medicine and Biology Magazine, 26(4):38.

Kerber, F., Löchtefeld, M., Krüger, A., McIntosh, J., McNeill, C., and Fraser, M. (2016). Understanding same-side interactions with wrist-worn devices. In Proceedings of the 9th Nordic Conference on Human-Computer Interaction, page 28. ACM.

Kim, D., Hilliges, O., Izadi, S., Butler, A. D., Chen, J.,

Oikonomidis, I., and Olivier, P. (2012). Digits: free-

hand 3d interactions anywhere using a wrist-worn

gloveless sensor. In Proceedings of the 25th annual

ACM symposium on User interface software and tech-

nology, pages 167–176. ACM.

Laput, G., Xiao, R., and Harrison, C. (2016). Viband: High-

fidelity bio-acoustic sensing using commodity smart-

watch accelerometers. In Proceedings of the 29th An-

nual Symposium on User Interface Software and Tech-

nology, pages 321–333. ACM.

McIntosh, J., Marzo, A., and Fraser, M. (2017). Sensir: De-

tecting hand gestures with a wearable bracelet using

infrared transmission and reflection. In Proceedings

of the 30th Annual ACM Symposium on User Inter-

face Software and Technology, pages 593–597. ACM.

McIntosh, J., McNeill, C., Fraser, M., Kerber, F., Löchtefeld, M., and Krüger, A. (2016). Empress: Practical hand gesture classification with wrist-mounted emg and pressure sensing. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, pages 2332–2342. ACM.

Nielsen, K. P., Zhao, L., Stamnes, J. J., Stamnes, K., and

Moan, J. (2008). The optics of human skin: Aspects

important for human health. Solar radiation and hu-

man health, 1:35–46.

Peck, C. C. (2003). Gesture sensing split keyboard and ap-

proach for capturing keystrokes. US Patent 6,630,924.

Rekimoto, J. (2001). Gesturewrist and gesturepad: Un-

obtrusive wearable interaction devices. In Wearable

Computers, 2001. Proceedings. Fifth International

Symposium on, pages 21–27. IEEE.

Truong, H., Nguyen, P., Bui, N., Nguyen, A., and Vu, T.

(2017). Low-power capacitive sensing wristband for

hand gesture recognition. In Proceedings of the 9th

ACM Workshop on Wireless of the Students, by the

Students, and for the Students, pages 21–21. ACM.

Wen, H., Ramos Rojas, J., and Dey, A. K. (2016). Serendip-

ity: Finger gesture recognition using an off-the-shelf

smartwatch. In Proceedings of the 2016 CHI Confer-

ence on Human Factors in Computing Systems, pages

3847–3851. ACM.

Zhang, Y. and Harrison, C. (2015). Tomo: Wearable, low-

cost electrical impedance tomography for hand ges-

ture recognition. In Proceedings of the 28th Annual

ACM Symposium on User Interface Software & Tech-

nology, pages 167–173. ACM.

Zhang, Y., Xiao, R., and Harrison, C. (2016). Advancing

hand gesture recognition with high resolution elec-

trical impedance tomography. In Proceedings of the

29th Annual Symposium on User Interface Software

and Technology, pages 843–850. ACM.
