Phishing URL Detection Through Top-level Domain Analysis:

A Descriptive Approach

Orestis Christou, Nikolaos Pitropakis, Pavlos Papadopoulos, Sean McKeown

and William J. Buchanan

School of Computing, Edinburgh Napier University, Edinburgh, U.K.

Keywords:

Phishing Detection, Machine Learning, Domain Names, URL.

Abstract:

Phishing is considered to be one of the most prevalent cyber-attacks because of its immense flexibility and

alarmingly high success rate. Even with adequate training and high situational awareness, it can still be hard

for users to continually be aware of the URL of the website they are visiting. Traditional detection methods

rely on blocklists and content analysis, both of which require time-consuming human verification. Thus, there

have been attempts focusing on the predictive filtering of such URLs. This study aims to develop a machine-

learning model to detect fraudulent URLs which can be used within the Splunk platform. Inspired from similar

approaches in the literature, we trained the SVM and Random Forests algorithms using malicious and benign

datasets found in the literature and one dataset that we created. We evaluated the algorithms’ performance

with precision and recall, reaching up to 85% precision and 87% recall in the case of Random Forests while

SVM achieved up to 90% precision and 88% recall using only descriptive features.

1 INTRODUCTION

The past few years have seen an outburst of high-

impact breaches and issues, showing that sole reliance

on traditional mitigation and prevention approaches

is not ideal for providing adequate protection against

such fluctuant environments. The Domain Name Sys-

tem (DNS), being one of the principal elements of

the Web, is not only a prime target for attacks in-

volving system downtime but is also used as a means

for the execution of further and more complex so-

cial engineering and botnet attacks. In the report by

Fouchereau and Rychkov (2019), 82% of the compa-

nies undertaking their survey reported suffering from

at least one DNS-related attack. The average num-

ber of attacks experienced per company was 9.45,

placing the average cost of damages at $1,000,000.

From the dangerously diverse and ever-growing threat

landscape, the most ubiquitous DNS-related threat

was phishing, with Malware, DDoS and Tunnelling

following closely behind (Fouchereau and Rychkov,

2019). FireEye’s recent report (Hirani et al., 2019) on

a grand-scale DNS hijacking attack by alleged Iran-

based actors, for record manipulation purposes, rein-

forces this notion.

Adversaries do not have to be networking experts,

nor possess knowledge of the underlying operation of

the DNS to misuse it. To execute a successful phish-

ing attack, the only thing an adversary needs to do

is to select the right domain name to host their mali-

cious website. Instead of merely choosing a generic

and innocent-appearing name, the process of select-

ing the domain name may include techniques such as

homograph spoofing or squatting (Kintis et al., 2017;

Moubayed et al., 2018; Nikiforakis et al., 2014).

One of the most popular approaches for dealing

with such websites is using blocklists (OpenDNS,

2016). It is simple and accurate as each entry in the

blocklist is usually manually verified as malicious.

The problem with the latter approach is that it re-

quires frequent updating of the blocklist through con-

stant scanning for new entries. Moreover, the systems

creating these blocklists tend to have high operational

costs, which lead to the companies requiring payment

to access them. Usually, adversaries that utilise these

malicious URLs do not keep them active for very long

as they risk being detected and blocked.

Machine Learning techniques use features ex-

tracted from the URLs and their DNS data to anal-

yse and detect whether they are malicious or benign.

Usually, methods which rely on the analysis of the

content of such URLs come at a high computational

cost. Blum et al. (2010) compute MD5 hashes of the

main index of their webpages and compare them with

Christou, O., Pitropakis, N., Papadopoulos, P., McKeown, S. and Buchanan, W.

Phishing URL Detection Through Top-level Domain Analysis: A Descriptive Approach.

DOI: 10.5220/0008902202890298

In Proceedings of the 6th International Conference on Information Systems Security and Privacy (ICISSP 2020), pages 289-298

ISBN: 978-989-758-399-5; ISSN: 2184-4356

Copyright

c

2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

289

the hashes of known phishing sites. In their work,

they mention that this technique is easily bypassed

just by obfuscating the malicious contents. This limi-

tation constrains approaches to exclusively analysing

the URL strings to classify the URLs (Blum et al.,

2010). More recently, L

´

opez (2019), attempted to de-

tect phishing by using Splunk and taking into consid-

eration only the use of typosquatting (L

´

opez S

´

anchez,

2019; Nikiforakis et al., 2014) and homograph squat-

ting.

To the best of our knowledge, our work is the first

attempt that takes into consideration all the forms of

domain squatting and produces an automated mecha-

nism to detect malicious phishing URLs, thus increas-

ing the situational awareness of the user against them.

The contributions of our work can be summarised as

follows:

• The Machine Learning system is trained using de-

scriptive features extracted from the URL strings

without utilising host-based or bag-of-words fea-

tures. The domain names come from real-world,

known phishing domains (blocklist) and benign

domain names (allowlist).

• The popular classification algorithms SVM and

Random Forests are used and compared empiri-

cally based on their performance.

• The process is heavily automated as it relies on

the Splunk software, thus it can easily be trained

against new datasets to generate alerts when new

malicious entries are detected.

The rest of the paper is organised as follows: Sec-

tion 2 briefly describes the related literature with re-

gards to phishing; Section 3 introduces our method-

ology while, Section 4 describes the results of our

experimentation along with their evaluation. Finally,

Section 5 draws the conclusions, giving some pointers

for future work.

2 BACKGROUND AND RELATED

WORK

2.1 Phishing

Phishing as a term did not exist until 1996 when it was

first mentioned by 2600 a popular hacker newsletter

after an attack on AOL (Ollmann, 2004). Since then,

there has been an exponential increase in phishing

attacks, with it becoming one of the most prevalent

methods of cybercrime. According to Verizon (2019),

phishing played a part in 78% of all Cyber-Espionage

incidents and 87% of all installations of C2 malware

in the first quarter of 2019 (Verizon, 2019). In the

earlier report by Widup et al. (2018), it is reported

that 78% of people didn’t click a single phish all year,

meaning that 22% of people did click one. Moreover,

only 17% of these phishing campaigns were reported

by users. It is also emphasised that even though train-

ing can reduce the number of incidents, phish hap-

pens (Widup et al., 2018). Since only a single e-mail

is needed to compromise an entire organisation, pro-

tection against it should be taken seriously.

Cyber-criminals use phishing attacks to either har-

vest information or steal money from their victims

through deceiving them with a reflection of what

would seem like a regular e-mail or website. By redi-

recting the victim to their disguised website, they can

see everything the victim inserts in any forms, lo-

gin pages or payment sites. Cyber-criminals either

copy the techniques used by digital marketing ex-

perts or take advantage of the fuss created by viral

events to guarantee a high click rate. Vergelis and

Shcherbakova (2019), reported a spike in phishing

redirects to Apple’s sites before each new product an-

nouncement (Vergelis and Shcherbakova, 2019).

Regular phishing attacks are usually deployed

widely and are very generic, such that they can be de-

ployed to target as many people as possible. A Spear-

Phishing attack, instead, targets a specific individual,

but requires that information be gathered about the

victim prior to crafting a successful spear-phishing

email. A more advanced version of this attack is a

Whaling attack, which specifically targets a com-

pany’s senior executives to obtain higher-level access

to the organisation’s system. Targeted phishing at-

tacks are increasingly gaining popularity because of

their high success rates (Krebs, 2018).

2.1.1 Attacks

If the end-goal of a phishing attack is to ensure that

the victim is ultimately redirected to the phishing

website without being aware of it, then the adversary

needs to use several techniques to guarantee that. One

of those techniques is URL hiding , where the at-

tacker obfuscates a malicious URL in a way that does

not raise any suspicions and ultimately gets clicked

on by the victim. One way to execute this would be to

replace a valid URL link with a malicious one. Short-

ened links from services such as Bitly can be used

to obfuscate malicious links easily. There is no way

to know the actual destination of an obfuscated link

without visiting it.

Homograph spoofing is a method which depends

on the replacement of characters in a domain name

with other visually similar characters. An example of

that would be to replace 0 with o, or I with 1 (Rouse

ICISSP 2020 - 6th International Conference on Information Systems Security and Privacy

290

et al., 2019). So, for a URL bingo.com the spoofed

URL would be b1ng0.com. Characters from other al-

phabets such as Greek have also been used in the past

for such attacks. The Greek o character is visually in-

distinguishable from the English o even though their

ASCII codes are different and would redirect to differ-

ent websites. Content polymorphism is addressed, as

well, using visual similarity analysis of the contents

(Lam et al., 2009).

Typosquatting targets common typographic er-

rors in domain names. For example, an attacker could

use the domain gooogle.com to target users who in-

correctly type google.com or to trick them into click-

ing on a regular link. Moubayed et al. (2018), combat

this issue using the K-Means Clustering Algorithm

to observe the lexical differences between benign and

malicious domains to extract features, and propose a

majority voting system that takes into consideration

the outputs of five different classification algorithms

(Moubayed et al., 2018).

Soundsquatting leverages on the use of words

that sound alike (homophones). Nikiforakis et al.

(2014), show that for a domain www.test.com, an ad-

versary may use dot-omission typos (wwwtest.com),

missing-character typos (www.tst.com), character-

permutation typos (www.tset.com), character-

replacement typos (www.rest.com) and character-

insertion typos (www.testt.com). They illustrate how

they used Alexa’s top one million domain list to

create and register their soundsquatting domains,

measuring the traffic from users accidentally visiting

them. Through their research, they have proven

the significance of taking into account homophone

confusion through abuse of text-to-speech software

when tackling the issue of squatting (Nikiforakis

et al., 2014).

Combosquatting is different from other ap-

proaches as it depends on altering the target domain

by adding familiar terms inside the URLs. An ex-

ample of this technique would be bankofscotland-

live.com or facebook-support.com. Research per-

formed by Kintis et al. (2017), shows a steady

increase in the use of combosquatting domains for

phishing as well as other malicious activities over

time. It is also reported that combosquatting domains

are more resilient to detection than typosquatting and

that the majority of the combosquatting domains they

were monitoring remained active for extended peri-

ods, sometimes exceeding three years, thus suggest-

ing that the measures set in place to counter these are

inadequate (Kintis et al., 2017).

2.1.2 Suggested Defences

The term Passive DNS (pDNS) refers to the indirect

collection and archiving of DNS data locally for fur-

ther analysis. In the early days of pDNS URL analy-

sis (Spring and Huth, 2012), where privacy was still

not considered to be an issue, the pioneer system for

malicious domain detection through pDNS was Notos

(Antonakakis et al., 2010) with its reputation-based

classification of domains. Notos extracts a variety of

features from the DNS queries and creates a score for

each entry to represent the likelihood of it being ma-

licious. A similar approach is taken for EXPOSURE

(Bilge et al., 2011), which is a large-scale pDNS anal-

ysis system developed using a gathered dataset of 100

billion entries. Bilge et al. (2011), differ in their ap-

proach by operating with fewer data compared to No-

tos. Khali et al. (2016) focus on the global associa-

tions between domains and IPs instead of looking at

their local features, thus addressing any privacy issues

as they only extract information relevant to their re-

search from the gathered dataset (Khalil et al., 2016).

Okayasu and Sasaki (2015) compare the performance

of SVM and quantification theory in a similar setting.

SVM was proved to be superior in their comparison

(Okayasu and Sasaki, 2015).

Since the lexical contents of malicious URLs play

a significant role in their victim’s susceptibility, squat-

ting detection should play a vital role in the identifi-

cation of malicious URLs. Kintis et al. (2017) use a

joint DNS dataset comprised of 6 years of collected

DNS records from both passive and active datasets

that amount to a total of over 450 billion records.

They found that the majority of combosquatting do-

mains involve the addition of just a single token to the

original domain (Kintis et al., 2017).

A novel approach is taken by Blum et al. (2010),

where URLs are classified without the need for host-

based features. They found that lexical classification

of malicious URLs can rival other conventional meth-

ods in accuracy levels (Blum et al., 2010). Lin et

al. (2013) propose a similar Machine Learning (ML)

approach, which can detect malicious URLs by focus-

ing on the URL strings. They use two sets of features

to train their online learning algorithm: i) Lexical fea-

tures, which are extracted by taking the name of the

domain, path, and argument of each entry, then using

a dictionary to remove less useful words from them,

and ii) Descriptive features are static characteristics

derived from the URLs such as total length or number

of symbols (Lin et al., 2013).

Da Luz and Marques (2014), build upon the work

of Notos (Antonakakis et al., 2011) and EXPOSURE

(Bilge et al., 2011) to detect botnet activity using both

Phishing URL Detection Through Top-level Domain Analysis: A Descriptive Approach

291

host-based and lexical features. They give a com-

parison of the performance of the K-Nearest Neigh-

bours, Decision Trees and Random Forests algo-

rithms, showing that Random Forests performed sig-

nificantly better (da Luz, 2014). Lin et al. (2013)

make the distinction between descriptive and lexical

features by separating the features derived directly

from the domain names strings and the features de-

rived using their bag-of-words model. They use a

combination of the Passive-Aggressive algorithm, to

classify their dataset, and the Confidence Weighted

algorithm, to alter the characteristic’s weight, thus

achieving more efficiency than other content-based

models (Lin et al., 2013).

Our approach differentiates from these as it only

emphasises the descriptive characteristics of URLs in

order to observe and attempt to improve the perfor-

mance of a model without taking into consideration

host-based or lexical (bag-of-words) features. More-

over, none of the approaches in the literature explored

the creation and usage of a model in a widely used

platform such as Splunk, to provide alerting capabili-

ties to automate the detection of malicious URLs.

3 METHODOLOGY

As malicious parties continue to abuse DNS to

achieve their goals, the means of stopping them

should also be constantly developed. When observing

the literature historically, it can be deduced that mod-

ern approaches are becoming more focused on the de-

tection of specific problems. Therefore, following the

same trend, our work is assisted by the Splunk plat-

form to train and use a classifier to detect phishing

domains through their extracted descriptive features.

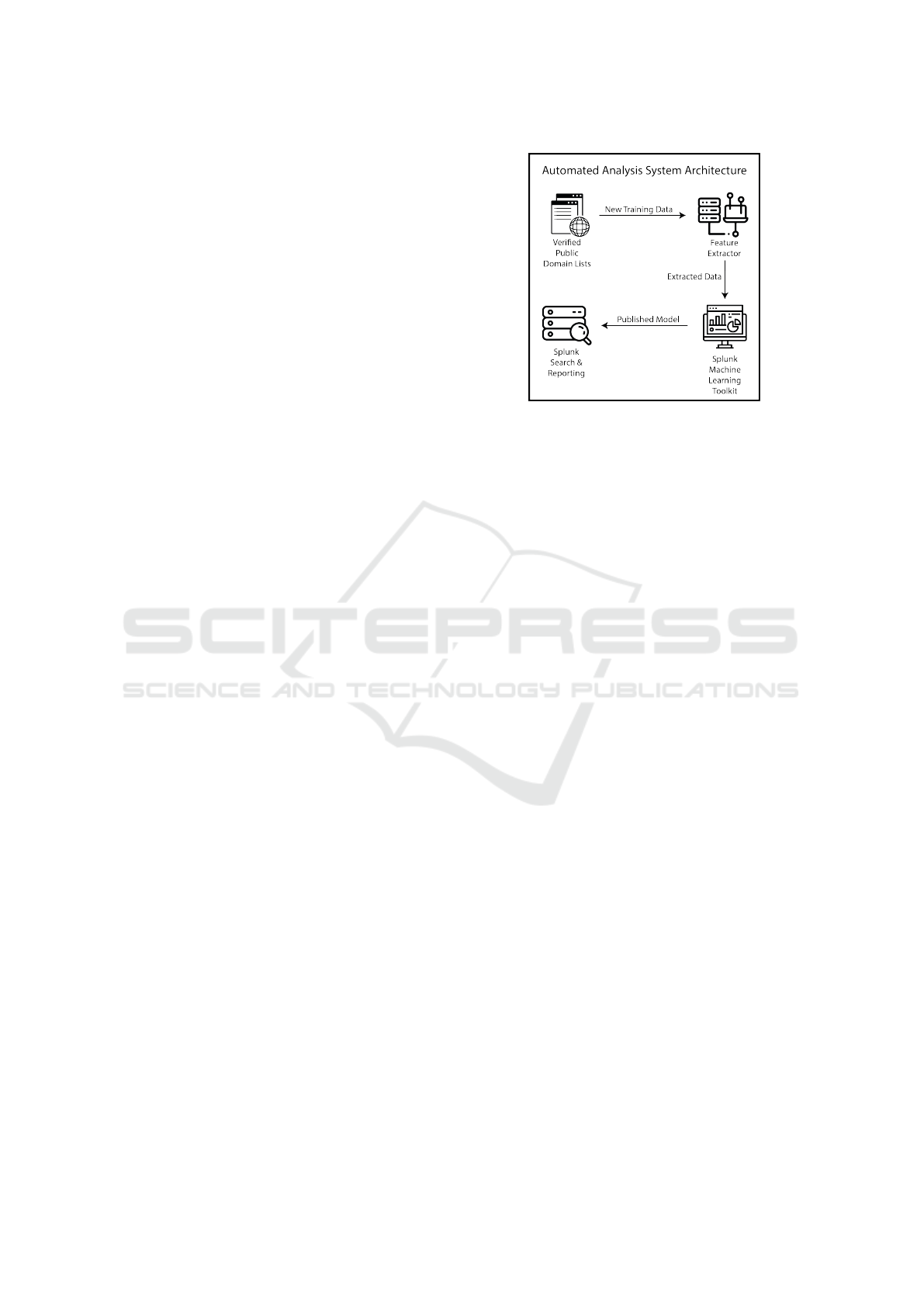

Figure 1 illustrates the architecture of the proposed

system. The technical specifications of our test envi-

ronment are an Intel Core-i5 CPU with clock speed of

1.70GHz, and 6GiB RAM.

3.1 Dataset Selection

The quality of the prediction of a ML algorithm is

strongly related to the quality of its training set. The

Machine Learning approach requires a supervised

learning algorithm, and therefore, the samples will

need to be labelled as either benign or malicious. To

reduce bias in our results, three tests were conducted

using a total of six lists, three allowlists and three

blocklists.

The first benign list was derived from TLDs in the

Alexa’s top one million domain list as of September

2019. This database contains one million entries of

Figure 1: System Architecture Diagram.

the most popular websites worldwide. As this list

contains domains ranked by popularity, we verified

their authenticity and content for the first five thou-

sand domains from this list. We therefore populated

our allowlist using the five thousand most popular

Alexa domains.

The first malicious list was created using Phish-

tank’s active blocklist (OpenDNS, 2016). The block-

list consists of more than four hundred thousand

phishing domain entries and is continuously updated

with active domains. Five thousand of those domains

were selected to populate the blocklist. The two lists

are joined, and overlapping domain names are re-

moved to avoid creating any noise. More entries from

the blocklists are included in the following tests.

As the Alexa’s database is not validated for poten-

tially malicious entries like combosquatting domains,

as proved in the related literature (Kintis et al., 2017),

we turned our attention to established datasets used

by the cyber security community. Therefore, the le-

gitimate and malicious lists provided by Sahingoz et

al. (2019) were used together in the second test. The

legitimate list is reported to have been cross-validated

and thus can be used at full scale (Sahingoz et al.,

2019). The phishing/legitimate URL set published

in Phishtorm (Marchal et al., 2014) is used in the

third test. The comparison of the performance of our

feature selection using each of the different sets will

be crucial in determining their relevance through the

elimination of dataset bias from the algorithm’s per-

spective.

3.2 Analysis

The previous sections have illustrated how miscreants

can misuse DNS in their attempts to perform phishing

attacks. From the knowledge extracted from the liter-

ature, a set of features was selected and extracted from

ICISSP 2020 - 6th International Conference on Information Systems Security and Privacy

292

the gathered pDNS data. The set of features allow for

the classifier to divide the domain names into either

benign or malicious.

3.2.1 Feature Extraction

The Splunk ML Toolkit has no functionality for ex-

tracting features from strings, and therefore, the fea-

tures were extracted using Python libraries. The Pan-

das Python library was used to import the two datasets

into Python for the extraction of the features. We ex-

tracted a total of eighteen features from each domain

name in the DNS dataset. Since the benign dataset

did not include other details such as the TTL, cer-

tain types of features could not be derived. Therefore,

more weight is given to analysing the lexical charac-

teristics of our domains. The features are split into

two groups: descriptive features and statistical fea-

tures. The rationale behind the division of the features

into these two categories is that descriptive features

are variables derived directly from the domains while

statistical features are derived from applying mathe-

matical statistic operations on either the strings them-

selves or the descriptive features.

3.2.2 Descriptive Features

The descriptive features that were extracted from each

domain name string are distinguished in the length,

number of unique characters, numbers and symbols

in the URL, in the domain, and in domain suffix. Ma-

licious domain names tend to have a higher number

of symbols or numbers than benign ones, either be-

cause of squatting or because they are randomly gen-

erated. Therefore, we extracted features to represent

the number of symbols and numbers found in the dif-

ferent parts of the domain.

Malicious domains also tend to be longer than be-

nign ones (Moubayed et al., 2018). However, after

observing the entries in the phishing dataset, it was

noticed that many entries would have longer subdo-

mains but short domains. This would mean that even

though they would look disproportionate, they would

still be flagged benign as their length is similar to be-

nign entries. To counter this issue, features were set

to contain the length of each domain part.

The number of unique characters also differs in

malicious URLs because legitimate website owners

tend to choose simpler and easier to remember words

for their URLs. Using this reasoning, the number of

unique characters in the domain and suffix of each

URL was used to populate the features. Moreover,

since those unique characters are often numbers, fea-

tures were selected to represent the number of nu-

meric characters present in each URL.

3.2.3 Statistical Features

Features to constitute the character continuity rate of

the domain and suffix were selected. In general, as

mentioned earlier, website owners tend to choose sim-

pler names for memorisation purposes. Because sim-

pler domain names are usually more expensive to buy,

it is unlikely that the attackers will pay large sums for

a domain that will most likely serve them for a short

period. Lin et al. (2013), use this idea to design the

character continuity rate feature. To create this fea-

ture, the domain name is split into tokens of sequen-

tial characters based on their type (letter, number or

symbol). Once the domain is split, the length of each

token is measured and compared to the other tokens

in its respective category. Then, the longest token for

each character type is selected, and their total length

is added together and divided by the total length of

the token (Lin et al., 2013).

To detect randomised domain strings or at least

detect randomisations within them, the features con-

taining the Shanon entropy of the domain and suffix

strings were selected (Lin et al., 2013). Shannon en-

tropy H is calculated using the formula seen in Equa-

tion 4, where p

i

is the chance for a character i to ap-

pear in a given string (Marchal et al., 2012).

H =

∑

i

p

i

log

b

p

i

(1)

Equation 1: Shannon Entropy.

In our scenario, p

i

is replaced with the count of

different characters divided by the length of the string.

Standard deviation and mean of the entropy of the two

domain levels’ entropy were extracted to test if using

a more median number would produce better results

than using the initially extracted values of entropy.

3.3 Training, Application and Alerting

To train the algorithms, the training set containing all

the features and the malicious and benign labels was

exported. Splunk was set to monitor that file so that

if any changes needed to be made to it, they could

be updated in Splunk instantly. This experiment was

split into Tests 1, 2 & 3. Test 1 was performed using

a set of ten thousand data entries from the Alexa and

Phishtank datasets (Alexa, 2019; OpenDNS, 2016).

A 50/50 split was performed on the datasets for the

training phase using Splunk to provide a better out-

look of the algorithms’ efficiency by using one half

for training and the other for testing.

The chosen algorithms for this experiment are

SVM and Random Forests because of their reported

performance. Support Vector Machine or SVM was

Phishing URL Detection Through Top-level Domain Analysis: A Descriptive Approach

293

developed based on Vapnik Chervonenkis’ statistical

learning theory in 1995 (Vapnik, 1995). SVM is a lin-

ear model used to solve classification and regression

problems. It can be applied to both linear and non-

linear problems. Put simply; the algorithm separates

data into classes using a line called the hyperplane.

Random Forests algorithm takes the same parameters

as Decision Trees. It works by utilising multiple deci-

sion trees in order to improve the algorithm’s robust-

ness as well as its ability to generalise. The core idea

of it is to use a multitude of trees that will output class

predictions (Breiman, 2001).

Test 1.1 will examine the performance of the Ran-

dom Forests algorithm with minimal changes to its

default configuration of the parameters: infinite max-

imum depth, features and maximum leaf nodes, ten N

estimators and two minimum samples per split. Test

1.2 will take a similar approach against the SVM al-

gorithm, with slight alterations to the C and Gamma

parameters which are set by default to 1 and 1/500

respectively.

Test 2 was separated into Test 2.1 and Test 2.2

to evaluate the SVM and Random Forests algorithms

using a larger dataset of 70,000 data entries (Sahin-

goz et al., 2019). Likewise, Test 3 was divided into

Test 3.1 and Test 3.2 to compare SVM and Random

Forests with the Phishstorm dataset (Marchal et al.,

2014). Test 4 serves as a what if scenario that allows

the comparison of all the available algorithms in the

Splunk ML toolkit to see if there could have been al-

ternatives not mentioned in the literature.

After training the algorithm, Splunk will be con-

figured to periodically fit the algorithm on a con-

tinuously monitored file so that any new entries are

checked immediately for their maliciousness. If the

algorithm is accurate enough, it will be configured

for scheduled re-training to ensure that it is up to date

with recently found phishing domain entries.

4 RESULTS & EVALUATION

4.1 Results

The first algorithm to be tested was Random Forests

in Test 1.1. In Table 1, the performance of the al-

gorithm is evaluated. Moreover, the fine-tuning of the

algorithm’s parameters to achieve its full potential can

also be seen.

Test 1.2 implemented the SVM algorithm against

the same dataset as Test 1.1, with an initial precision

& recall of 0.89 and 0.87 respectively as shown in

Table 1. The algorithm’s performance increased im-

mensely by simply using a larger C value to increase

the hyperplane’s flexibility. However, reducing the in-

fluence of the points placed far from the hyperplane

resulted in a drop in accuracy. As shown in Table 1,

a precision of 0.90 and a recall of 0.88 was achieved

after the tweaking.

Test 2.1 evaluated the performance of the Random

Forests algorithm using a larger dataset containing

70,000 entries. Once again, the features were tweaked

until the perfect combination was found. As shown in

Table 1, the performance of Random Forests peaked

with a 0.84 precision and recall when 10 Estimators

(Decision Trees) and an infinite max depth of nested

statements were set. The alteration of the minimum

samples per split had no impact on the overall perfor-

mance, and therefore, it was kept to its default value.

The decrease of the maximum number of features to

consider per split negatively impacted the overall ac-

curacy and thus was kept as the default value.

The same procedure as earlier is repeated using

the SVM algorithm in Test 2.2. Using the default set-

tings with a C of 1 and a Gamma of 1/18, the algo-

rithm achieved a 0.76 precision and recall. In Table 1,

it can be observed that this time, the peak performance

was achieved using a C value of 100 and a Gamma of

1/500, with 0.79 precision and 0.77 recall achieved.

Table 1 shows the performance of the Ran-

dom Forests algorithm against the Phishstorm dataset

(Marchal et al., 2014) with 96,000 data entries in Test

3.1. Tweaking the algorithm parameters did not yield

better results and thus the default configuration of the

algorithm was kept.

Once more, the process is repeated using SVM in

Test 3.2 (Table 1). When a C value of 100 was used

the algorithm reached its peak precision & recall rates

of 0.81 respectively. Altering the Gamma value only

reduced our rates and thus was kept to its default of

1/18.

After completing the experiments, the experimen-

tal setup of Test 3.1 was chosen to create a model in

Splunk. The model allows for the fitting of the now

trained algorithm into other datasets which are im-

ported in Splunk. Splunk was configured to contin-

uously monitor the input file to update any further ad-

ditions or removals. An alert was created, which runs

the query against the input file every hour and notifies

the user if a new entry is flagged as malicious. The

alert is then added to the triggered alerts. With this,

the automated detection system is complete. Finally,

the model is set to re-train itself using any new data

added to the initial dataset.

ICISSP 2020 - 6th International Conference on Information Systems Security and Privacy

294

Table 1: Accuracy of Tests.

Random Forests SVM

Tests N Estimators Max Depth Max Features Precision Recall C Gamma Precision Recall

1 10 ∞ ∞ 0.87 0.86 1 1/18 0.89 0.87

10 10 ∞ 0.89 0.86 1 1/50 0.83 0.83

10 10 2 0.89 0.87 10 1/18 0.90 0.88

2 10 ∞ ∞ 0.84 0.84 1 1/18 0.76 0.76

1 ∞ ∞ 0.81 0.81 10 1/18 0.77 0.77

10 10 ∞ 0.80 0.80 100 1/18 0.78 0.77

10 ∞ 2 0.84 0.84 100 1/500 0.79 0.77

3 10 ∞ ∞ 0.85 0.85 1 1/18 0.79 0.79

1 ∞ ∞ 0.83 0.83 100 1/18 0.81 0.81

10 10 ∞ 0.83 0.83 100 1/100 0.81 0.80

10 ∞ 2 0.85 0.85 100 1/500 0.80 0.79

4.2 Evaluation

The outcomes of Tests 1.1 & 1.2 established that

SVM performed slightly better than Random Forests.

With Random Forests, the precision & recall rates did

not vary significantly when changing the algorithm’s

parameters. Decreasing the Gamma value in SVM

however proved to have great significance on its re-

sults, indicating that even the furthest points from the

hyperplane were of great importance. The evaluation

of Tests 1.1 & 1.2 should yield better results than the

other tests due to the reduced number of entries.

The full dataset containing 70,000 entries was

used in Tests 2.1 & 2.2 as the benign entries were

already validated (Sahingoz et al., 2019). Test 2.1

hit its peak performance without any tweaking, while

Test 2.2 required a less straight hyperplane to do so.

The evaluation results of SVM and Random Forests

were not ideal for an automated filtration system but

are still usable in our experiment. This time Random

Forests was the predominant algorithm with a signifi-

cant difference in precision & recall. Overall both al-

gorithms achieved lower rates, the sudden drop in ac-

curacy of SVM indicates that Random Forests could

be more ideal for a large-scale application.

Using the Phishstorm dataset (Marchal et al.,

2014), of 90,000 entries in Tests 3.1 & 3.2 achieved

very similar but slightly better results than Tests 2.1

& 2.2. This means that the selected feature set is not

dataset-biased and is robust when handling new data.

Even though the achieved rates are not perfect, the

model can still be used in the passive detection of ma-

licious URLs.

While SVM performed better than Random

Forests in Test 1, Tests 2 & 3 showed that Random

Forests does not perform much differently when us-

ing a larger dataset, contrary to SVM’s performance

drop. Finally, Random Forests was selected for the fi-

nal model simply because of the stability in the out-

come it provides by utilising results from multiple de-

cision trees.

After the model was created, it could be used as

a standard search parameter in the original search

and reporting app by Splunk and not just in the ML

toolkit. This allowed for the easy creation of cus-

tomised periodic checks for new malicious entries in

the original dataset. In combination with a pDNS col-

lector, this finalises the automated phishing URL de-

tector. The feature for scheduled training could also

be very easily implemented.

4.2.1 Feature Comparison

After the publishing of the model, the summary com-

mand in Splunk was used to evaluate the individ-

ual importance of the features used using the Ran-

dom Forests algorithm. Test 1 indicates that longer

URLs (with more unique characters) tend to be ma-

licious. These however may also be the differences

between popular-expensive domains and unpopular-

cheaper ones. The results derived from Test 2 were

much more close, with the number of symbols in a

domain name being the most important one. For the

Test 3, the most important features are related with

the total length, the number of the symbols and the

character continuity rate in a domain name. An inter-

esting observation can be made about the similarity

in feature importance in Tests 2 & 3 and their extreme

contrast in performance with some features with Test

1 such as the number of symbols or character conti-

nuity rate. Another interesting observation is that the

character continuity rate feature presented by Lin et

al. (2013), had the third least importance of all the

other features in Test 1, while in their experiment it

ranked first. The count of numbers in the domain suf-

fix was of no importance as there were no numbers in

any of our URL suffixes (Lin et al., 2013).

Phishing URL Detection Through Top-level Domain Analysis: A Descriptive Approach

295

5 DISCUSSION

The precision and recall rates achieved here are good

enough to indicate that the approach may be useful

in real-life scenarios. They may not be ideal for an

automated filtration system but can still provide a list

of possibly malicious URLs, narrowing the list down

and reducing the human input required to spot them.

The sole use of descriptive features in a single clas-

sification may not be the correct approach if the goal

is to achieve the efficiency levels required for an au-

tomated detection system to work. If the system had

reached higher efficiency levels, then that would mean

that the multi-lingual domain classification difficul-

ties mentioned by Nikiforakis et al. (2014), would be

avoided. The purpose of selecting this specific ap-

proach was to further the trend of separate classifi-

cation of features seen in the literature by not con-

sidering lexical features. It is an essential step to-

wards understanding which groups of features work

best together so that future developed multi-classifier

systems are built knowing those relationships (Niki-

forakis et al., 2014).

The detection of phishing URLs is more challeng-

ing than the detection of botnet C&C URLs as by

their nature, phishing domain names attempt to mir-

ror the appearance of benign domains. In retrospect,

this model could have been a better fit for detecting

randomly generated C&C domains as their randomi-

sation would lead to higher entropy values, longer

URLs and would have used a multitude of unique

characters and symbols. This would yield higher pre-

cision and recall rates which would, in turn, produce

more accurate alerts once the model was published in

the Splunk search and reporting app. For the detection

of phishing domains, more focus should have been

given towards features explicitly targeting the detec-

tion of squatting domains (Moubayed et al., 2018).

This difference in feature importance between the

three tests as well as from other approaches in the lit-

erature suggests that the quality of the datasets used

can entirely change which features will be more crit-

ical for the classification. It is concerning how a fea-

ture such as the character continuity Rate, had so little

importance in the first experiment while in Tests 2 &

3 and in the literature (Mamun et al., 2016), it was one

of the most important. In the second approach taken

by (da Luz, 2014), their Shannon entropy features are

of similar importance to Shannon entropy of the do-

main and suffix strings in Test 1; high in the third level

domain and low in the second level domain. However,

in their first approach, which used a different dataset,

they differed completely, with both features holding

no importance. The positive evaluation of the Unique

Characters features by (Moubayed et al., 2018), mir-

rored their performance in the first test. The do-

mains’ length and symbol count features proved to

be the most important in Tests 2 & 3 but not the

count of unique characters; homograph spoofed and

typosquatted domains are the likely culprits responsi-

ble for this.

As demonstrated, it is possible to use the trained

model to make predictions against new data within the

Splunk Search and Reporting app. Though with the

current model, it would be ill-advised to do so as the

surge of false positive and false negative alerts gener-

ated would cause more harm than good. It would be

best, however, if a classifier with higher accuracy was

used.

5.1 Attacks against Machine Learning

The best method of evading detection from a ML al-

gorithm is to just use an expensive domain name,

meaning that a wealthy assailant can operate rela-

tively unhindered. For this reason, a lexical analysis

system should never be relied upon exclusively, but

should be incorporated into a larger system.

The ML system is at its most vulnerable during

training (Pitropakis et al., 2019), as that is where hu-

man error can thrive. If a malicious entry is included

in the benign training dataset then not only will that

particular entry not be detected later, but other mali-

cious URLs with similar characteristics may also es-

cape detection. A malicious entry in a allowlist (poi-

soning) has much more potential to cause damage

than a benign entry in a blocklist.

A creative adversarial approach to invalidate a ML

system and avoid detection would be to buy a swarm

of malicious names with similar features and asso-

ciate them with malicious activity. In time, after they

are included in blocklists because their similarities are

known to the adversary, it would be easier to select

domain names which would bypass detection as they

would have a hand in the training. Although in a

smaller scale, this method would not have much ac-

curacy, it is always a possibility in a grandiose cyber-

warfare scenario. However, with so many resources,

it would be much easier to simply buy an expensive

domain.

6 CONCLUSIONS AND FUTURE

WORK

As adversaries keep inventing different means of

abusing the DNS, the only certainty is that Machine

ICISSP 2020 - 6th International Conference on Information Systems Security and Privacy

296

Learning will continue to play a vital role in the fu-

ture of malicious URL filtering. In this work, the de-

scriptive features derived from benign and malicious

domain name datasets were used to make predictions

on their nature using the Random Forests and SVM

algorithms. The final precision and recall rates pro-

duced when only using descriptive features and not

considering host-based features were up to 85% and

87% for Random Forests and up to 90% and 88% for

SVM respectively. Those results play a vital role in

the understanding of the operational relationship be-

tween features and thus contribute knowledge into the

correct grouping of features and the creation of multi-

model classifiers. The features were found to have

significantly different impact factors than some other

cases in the literature, proving the importance of plac-

ing great care in the selection of training datasets. Af-

ter the model was finalised and fine-tuned, it was pub-

lished in the Splunk Search and Reporting app where

it was used against new data to generate alerts. This

was the final step towards the automation of the detec-

tion process. Scheduled training was also configured

using Splunk, furthering the system’s autonomy.

There are several research pathways which can be

undertaken to improve the performance of this sys-

tem, some being parallel to and some being stem-

ming from the existing literature. A great addition to

our methodology would be the use of a dataset com-

posed of real-world passive DNS data for the training

phase that would allow for the generation of more fea-

tures, thus leading towards the elimination of noise.

As we have a passive DNS infrastructure under devel-

opment, we plan in the near future to make use of a

higher volume of real-world data as training datasets,

which would lead to the further improvement of our

model.

REFERENCES

Alexa (2019). The top 1.000.000 sites on the web.

Antonakakis, M., Perdisci, R., Dagon, D., Lee, W., and

Feamster, N. (2010). Building a dynamic reputation

system for dns. In USENIX security symposium, pages

273–290.

Antonakakis, M., Perdisci, R., Lee, W., Vasiloglou, N., and

Dagon, D. (2011). Detecting malware domains at the

upper dns hierarchy. In USENIX security symposium,

volume 11, pages 1–16.

Bilge, L., Kirda, E., Kruegel, C., and Balduzzi, M. (2011).

Exposure: Finding malicious domains using passive

dns analysis. In Ndss, pages 1–17.

Blum, A., Wardman, B., Solorio, T., and Warner, G. (2010).

Lexical feature based phishing url detection using on-

line learning. In Proceedings of the 3rd ACM Work-

shop on Artificial Intelligence and Security, pages 54–

60. ACM.

Breiman, L. (2001). Random forests. Machine learning,

45(1):5–32.

da Luz, P. M. (2014). Botnet detection using passive dns.

Radboud University: Nijmegen, The Netherlands.

Fouchereau, R. and Rychkov, K. (2019). Global DNS

Threat Report Understanding the Critical Role of

DNS in Network Security.

Hirani, M., Jones, S., and Read, B. (2019). Global dns hi-

jacking campaign: Dns record manipulation at scale.

blog, Jan.

Khalil, I., Yu, T., and Guan, B. (2016). Discovering ma-

licious domains through passive dns data graph anal-

ysis. In Proceedings of the 11th ACM on Asia Con-

ference on Computer and Communications Security,

pages 663–674. ACM.

Kintis, P., Miramirkhani, N., Lever, C., Chen, Y., Romero-

G

´

omez, R., Pitropakis, N., Nikiforakis, N., and An-

tonakakis, M. (2017). Hiding in plain sight: A longitu-

dinal study of combosquatting abuse. In Proceedings

of the 2017 ACM SIGSAC Conference on Computer

and Communications Security, pages 569–586. ACM.

Krebs, B. (2018). The Year Targeted Phishing Went Main-

stream.

Lam, I.-F., Xiao, W.-C., Wang, S.-C., and Chen, K.-T.

(2009). Counteracting phishing page polymorphism:

An image layout analysis approach. In International

Conference on Information Security and Assurance,

pages 270–279. Springer.

Lin, M.-S., Chiu, C.-Y., Lee, Y.-J., and Pao, H.-K. (2013).

Malicious url filtering—a big data application. In

2013 IEEE international conference on big data,

pages 589–596. IEEE.

L

´

opez S

´

anchez, J. (2019). M

´

etodos y t

´

ecnicas de detecci

´

on

temprana de casos de phishing. Universitat Oberta de

Catalunya (UOC).

Mamun, M. S. I., Rathore, M. A., Lashkari, A. H.,

Stakhanova, N., and Ghorbani, A. A. (2016). Detect-

ing malicious urls using lexical analysis. In Interna-

tional Conference on Network and System Security,

pages 467–482. Springer.

Marchal, S., Franc¸ois, J., State, R., and Engel, T. (2014).

Phishstorm: Detecting phishing with streaming ana-

lytics. IEEE Transactions on Network and Service

Management, 11(4):458–471.

Marchal, S., Franc¸ois, J., Wagner, C., State, R., Dulaunoy,

A., Engel, T., and Festor, O. (2012). Dnssm: A

large scale passive dns security monitoring frame-

work. In 2012 IEEE Network Operations and Man-

agement Symposium, pages 988–993. IEEE.

Moubayed, A., Injadat, M., Shami, A., and Lutfiyya, H.

(2018). Dns typo-squatting domain detection: A data

analytics & machine learning based approach. In 2018

IEEE Global Communications Conference (GLOBE-

COM), pages 1–7. IEEE.

Nikiforakis, N., Balduzzi, M., Desmet, L., Piessens, F., and

Joosen, W. (2014). Soundsquatting: Uncovering the

use of homophones in domain squatting. In Inter-

Phishing URL Detection Through Top-level Domain Analysis: A Descriptive Approach

297

national Conference on Information Security, pages

291–308. Springer.

Okayasu, S. and Sasaki, R. (2015). Proposal and evalu-

ation of methods using the quantification theory and

machine learning for detecting c&c server used in a

botnet. In 2015 IEEE 39th Annual Computer Software

and Applications Conference, volume 3, pages 24–29.

IEEE.

Ollmann, G. (2004). The phishing guide–understanding &

preventing phishing attacks. NGS Software Insight Se-

curity Research.

OpenDNS, L. (2016). Phishtank: An anti-phishing site. On-

line: https://www. phishtank. com.

Pitropakis, N., Panaousis, E., Giannetsos, T., Anastasiadis,

E., and Loukas, G. (2019). A taxonomy and survey of

attacks against machine learning. Computer Science

Review.

Rouse, M., Bedell, C., and Cobb, M. (2019). DEFINITION

phishing.

Sahingoz, O. K., Buber, E., Demir, O., and Diri, B. (2019).

Machine learning based phishing detection from urls.

Expert Systems with Applications, 117:345–357.

Spring, J. M. and Huth, C. L. (2012). The impact of pas-

sive dns collection on end-user privacy. Securing and

Trusting Internet Names.

Vapnik, V. N. (1995). The nature of statistical learning. The-

ory.

Vergelis, M. and Shcherbakova, T. (2019). Spam and phish-

ing in Q1 2019.

Verizon (2019). Verizon: 2019 data breach investigations

report. Computer Fraud & Security, 2019(6):4.

Widup, S., Spitler, M., Hylender, D., and Bassett, G. (2018).

2018 verizon data breach investigations report. Tech-

nical report.

ICISSP 2020 - 6th International Conference on Information Systems Security and Privacy

298