Context-aware Patch-based Method for Fac¸ade Inpainting

Benedikt Kottler

1

, Dimitri Bulatov

1

and Zhang Xingzi

2

1

Department of Scene Analysis, Fraunhofer Institute of Optronics,

System Technologies and Image Exploitation (IOSB), 76275 Ettlingen, Germany

2

Fraunhofer Singapore, Singapore

Keywords:

City Modeling, Inpainting, Patch-based Inpainting, Textures, Texture Image, Fac¸ade Elements.

Abstract:

Realistic representations of 3D urban scenes is an important aspect of scene understanding and has many

applications. Given untextured polyhedral Level-of-Detail 2 (LoD2) models of building and imaging con-

taining fac¸ade textures, occlusions caused by foreground objects are an essential disturbing factor of fac¸ade

textures. We developed a modification of a well-known patch-based inpainting method and used the knowl-

edge about fac¸ade details in order to improve the fac¸ade inpainting of occlusions. Our modification focuses

on suppression of undesired, superfluous repetitions of textures. To achieve this, a coarse inpainting result

by a structural-based method is used to influence the choice of the best patch so that homogeneous regions

are preferred. The coarse inpainting is calculated using the context knowledge and average color instead of

traditionally applied arbitrary structural inpainting. Our modification furthermore introduces a parameter that

allows to weight the influence of the coarse inpainting. A parameter study shows that this parameter can be

chosen intuitively and does not require any parameter choice method. The cleaned fac¸ade textures could be

successfully integrated into the accordingly adjusted building models thus upgrading them to LoD3.

1 INTRODUCTION

Realistic representations of 3D urban scenes, in par-

ticular, buildings walls from openly available images

is an important aspect of scene understanding and has

many applications in rapid response missions (Bula-

tov et al., 2014a; Bulatov et al., 2014b) and virtual

tourism (Shalunts et al., 2011), because buildings are

becoming easier to identify. For other applications,

such as computation of energy balance and, more

in general, simulation of buildings in spectral ranges

other than visual (Guo et al., 2018), it is important

to know the materials of fac¸ade parts and not only

textural compound. The ability to open, close and

breach windows may be useful in applications con-

nected with virtual reality (Bulatov et al., 2014b).

Summarizing, plausible decomposition of fac¸ade

models into classes e.g. door, window, background

using only a minimum of information, namely, tex-

ture images from data-bases are meaningful require-

ments. Such images are available through Internet-

based services (Google Maps, Bing Maps, and oth-

ers) providing public access to geographic informa-

tion system (GIS) data as well. Hence, there are many

related works (Vanegas et al., 2010; Zhang et al.,

2019) striving for adding automatically the 3D geom-

etry of the observed buildings to the building mod-

els. In particular, in the simple and robust approach

of (Zhang et al., 2019), dedicated to extending tex-

tured LoD2 models to LoD3 models, images were

rectified, the fac¸ade elements like doors and windows

detected, and the 3D model updated. However, in the

Berlin CityGML dataset employed for this work, the

quality of the texture images was rather poor. Com-

mon problems in low-quality texture images, such

as lens distortions, overexposure/underexposure, illu-

mination inconsistencies, shadows/occlusions, reflec-

tion/refraction, etc., can cause a significant reduction

in the rendering quality of a 3D city scene. Further-

more, since the texture images of building facades

were the only input of the procedure of (Zhang et al.,

2019), proper occlusion analysis could not be car-

ried out. This had a disadvantage that the fac¸ade

images were often contaminated, among others and

in particular, by trees standing in front of the build-

ings. Especially when it comes to simulation of win-

ter scenes, unpleasant and unrealistic green stains at

building walls as in Figure 1 are clearly visible.

In order to prevent this from happening, we wish

to extend the approach of (Zhang et al., 2019) into

the direction of cleaning building walls from textures

of trees using inpainting methods. The texture-based

210

Kottler, B., Bulatov, D. and Xingzi, Z.

Context-aware Patch-based Method for Façade Inpainting.

DOI: 10.5220/0008874802100218

In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2020) - Volume 1: GRAPP, pages

210-218

ISBN: 978-989-758-402-2; ISSN: 2184-4321

Copyright

c

2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

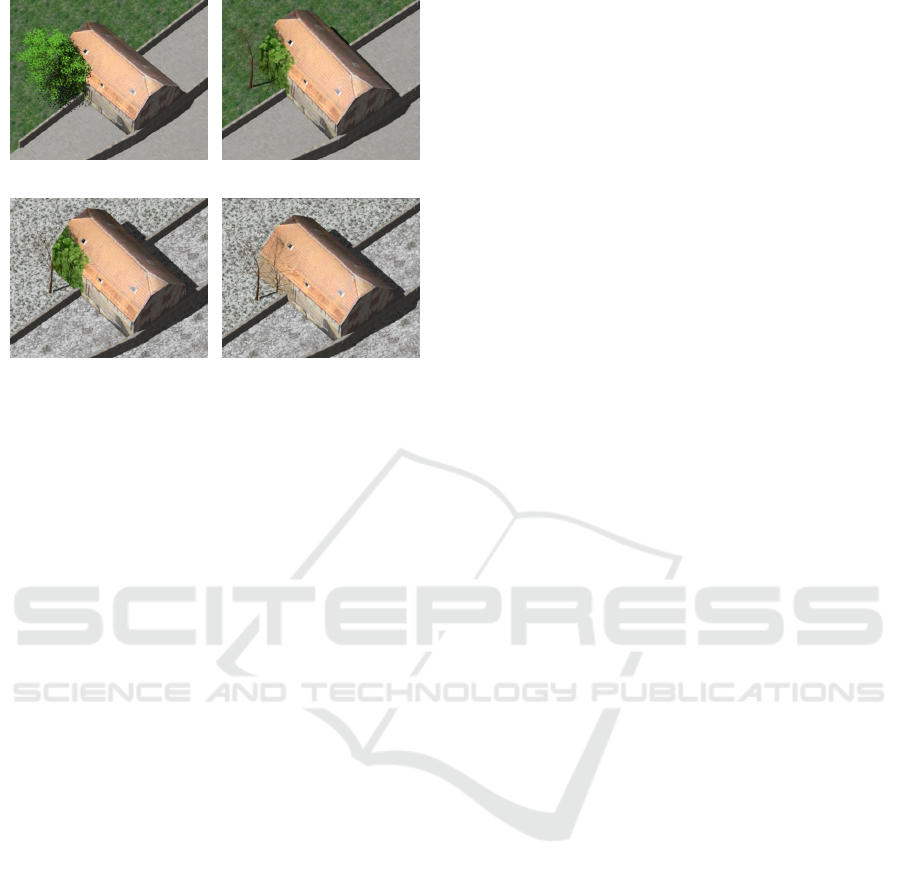

(a)

(b)

(c)

(d)

Figure 1: In summer captured textures need adaptation for

winter environments. (a) Summer scene with modeled tree,

(b) Summer scene without modeled tree, (c) Winter scene

with distracting texture, (d) Winter scene with cleaned tex-

ture.

inpainting is identified as a state-of-the-art tool to fill

large holes in the images. Our main contribution is

that the context, retrieved at the stage of fac¸ade detec-

tion of (Zhang et al., 2019), is extensively taken into

account. Note that we assume here that the masks for

trees and possibly other foreground objects are given

because otherwise they can be extracted using deep

learning techniques for semantic segmentation, such

as (Chen et al., 2018).

2 PREVIOUS APPROACHES

This section will consist of two parts, dedicated re-

spectively to particularly representative approaches

for creation of LoD3 models, or, more specifically,

detection of fac¸ade elements, and those for image in-

painting.

2.1 Creation of LoD3 Models

We start our selection of representative methods on

fac¸ade analysis by the method of (Xiao et al., 2008),

who use actual sensor data given as image sequences

from ground-based vehicles. A fac¸ade is recursively

decomposed into a set of rectangular patches based on

the horizontal and vertical lines detected in the tex-

ture image. The next step is constituted by a bottom-

up merging of patches, whereby the authors make

use of the architectural bilateral symmetry and repet-

itive patterns automatically detected. Finally, a ver-

tical plane with independent depth offset is assigned

to each patch, however, without assigning a seman-

tic label. The authors of (Loch-Dehbi and Pl

¨

umer,

2015) rely on a statistical approach. They automat-

ically generate a small number of most likely hy-

potheses and provide the corresponding probabili-

ties. The model parameters can be retrieved from

Gaussian mixture distributions, whereby constraints

between these parameters are simultaneously taken

into account. Probably, the main risk of this method

could ending up in the combinatoric chaos. An in-

teresting approach is presented by (Guo et al., 2018).

The fac¸ade is subdivided into facets. To determine

whether a facet contains a window, R-CNNs (Ren

et al., 2015) are employed. Even though this ap-

proach is not evaluated, the application field is in-

teresting: Representation of the digital twin in ther-

mal infrared spectrum. Yet another approach (Wen-

zel and F

¨

orstner, 2016) contains an optimization pro-

cedure incorporated into monte-carlo-like simulation

with multiple births and deaths of objects (doors, win-

dows, and balconies). The regularization prior is

given by symmetry constraints of fac¸ade objects and

the data term is constituted by descriptors allowing

detecting corners and other salient structures in im-

ages. Despite impressive results, monto-carlo simula-

tion and optimization by a procedure reminding sim-

ulated annealing is costly. To prove how the proposed

context-aware inpainting works in challenging situa-

tions, we decided to assess a method of (Zhang et al.,

2019), which provides good results without too many

complex computations. In Section 3, we will provide

a short overview of this work.

2.2 Image Inpainting Techniques

Concerning inpainting techniques, which represents

the focus of this paper, most of them can be denoted

as either structure-based or texture-based. To the

first subgroup, we assign the methods based on krig-

ing (Rossi et al., 1994), marching method of (Telea,

2004), or on finding a stationary solution of a par-

tial differential equation. The variational compo-

nent is added to enforce a model assumption, such

as piecewise smoothness. Thus, the relevant meth-

ods (Bertalmio et al., 2001; Sch

¨

onlieb and Bertozzi,

2011) are usually employed to tackle simultaneous

the denoising and inpainting task. The structure-

based methods have been intensively studied during

many years because of their inter-disciplinary appli-

cability. However, it has been established that they

neither perform well in case of richly textured back-

ground nor for too large holes, since this provokes

as a consequence unnatural blurring of the inpaint-

ing domain. Therefore, starting at epochal publica-

Context-aware Patch-based Method for Façade Inpainting

211

tions of (Efros and Leung, 1999; Criminisi et al.,

2004), texture-based methods have become increas-

ingly popular in the recent years. The basic idea is

to search for every pixel to be inpainted a window,

which partly appeared somewhere else and there con-

text is filled. Countless modifications have been im-

plemented since then, including manipulations of pri-

ority terms for pixels to be inpainted, e.g. (Le Meur

et al., 2011), convex combinations of patches in or-

der to suppress noise and artifacts between pasted

patches, and narrowing search spaces (Buyssens et al.,

2015). The crucial leverage, is hidden in this ‘’some-

where else”. In remote sensing, the didactically

excellent paper of (Shen et al., 2015), differentiate

between spatial, spectral, and temporal techniques.

They search for the missing information in other parts

of the same image, in another channel, or, respec-

tively, even in some completely different images. This

last group obviously opens the door to CNN-based

method which now are being increasingly employed

for inpainting tasks as well. From the pioneering

work of (Yeh et al., 2017), who were able to com-

plete images with only a very sparse number of pixels

covered using deep learning, only a few years have

passed until the NVidia solution (Liu et al., 2018) be-

came available online.

Typical for most solutions based on deep learning

is that the context information is sacrificed in favor

of millions of training examples. The authors, how-

ever, decided to make use of context and to process

not only the raw images from (Zhang et al., 2019),

but also the classification results of fac¸ade elements.

In case of structure-based inpainting, (Kottler et al.,

2016) demonstrated that even a simple method allows

to preserve sharp edges between classes, once context

information is taken into account. This paper will de-

scribe a suitable modification of the approach of (Cri-

minisi et al., 2004).

3 PRELIMINARIES

In this section, we will first briefly describe the pro-

cedure of (Zhang et al., 2019), in which applying in-

painting techniques are required. In the second sub-

section, we introduce the original method of Criminisi

et al. In the third subsection, a CNN-based inpainting

as a state-of-the-art tool will be outlined for compari-

son.

3.1 Texture Analysis and Creating

LoD3 Models of Zhang et al.

In what follows, we describe the approach of (Zhang

et al., 2019) which starts at LoD2 CityGML and tex-

ture images and aims at obtaining 3D models of the

fac¸ade, which are enriched with semantics about im-

portant fac¸ade elements like doors and windows. This

is a data-driven approach which allows to generate

plausible LoD3 models from textured LoD2 models.

This method starts by the rectification of a texture im-

age to make the facade elements axis-parallel. Then,

automatic detection of doors and windows in the tex-

ture images is based on the Mask R-CNN method

within ResNet. There are two successive phases in

Mask R-CNN. A Region Proposal Network proposes

candidate object bounding boxes and an Object De-

tection Network and a Mask Segmentation Network

predict the class and box offset. Thus, the second

phase has the output of the first one phase as in-

put and the combined loss function takes both losses

into account. After the detection, rule-based cluster-

ing is applied to align the detected fac¸ade elements

and regularize their sizes. The features for clustering

are the coordinates of object centroids. The sizes of

the fac¸ade elements are taken into account as well.

For example, if the size of a fac¸ade element differs

from other fac¸ade elements, it is marked as outlier and

would not be regularized. After interactively select-

ing matched models from a 3D facade element model

database, deforming and stitching them with the input

LoD2 model takes place, as Figure 2a shows.

In this way, building models can be extended

from LoD2 to LoD3 based on its texture images.

However, the texture images of the LoD2 CityGML

models are usually aerial images taken from top or

side views. As shown in Figures 2a and 2b, their

quality is sometimes very poor. Common problems

in low-quality texture images include distortions,

overexposure/underexposure, illumination inconsis-

tencies, shadows/occlusions, reflection/refraction, re-

dundancy and etc. These problems can cause a sig-

nificant reduction in the rendering quality of a 3D

city scene. The present work aims at overpainting the

foreground objects, like trees, from the images before

adding them to the model.

3.2 Texture-based Inpainting of

Criminisi et al.

In this section, we briefly describe the algorithm of

(Criminisi et al., 2004) and introduce the relevant

modifications later.

Given is an image with image region I , region to

GRAPP 2020 - 15th International Conference on Computer Graphics Theory and Applications

212

(a)

(b)

Figure 2: (a) Pipeline of the approach of (Zhang et al.,

2019), (b)s examples of extending LoD2 model to LoD3.

As we can see, the texture images in both (a) and (b) are

negatively affected by foreground objects (trees).

be filled D ⊂ I and a source region S ⊂ I −D. Straight

forward, S may be defined as the entire image minus

the target region (S = I − D), or it may be manually

specified by the user. The inpainting evolves inward

as the algorithm progresses, and so we also denote to

∂D as the fill front. Moreover, as with all exemplar-

based texture synthesis (Efros and Leung, 1999), the

size of the template window S must be specified. The

authors of (Criminisi et al., 2004) suggest a 9×9 win-

dow as default.

Each pixel maintains a confidence value C(p),

which reflects reliability of the pixel information, and

which is frozen once a pixel has been filled. Dur-

ing the algorithm, patches along the fill front are also

given a temporary priority value, which determines

the order in which they are filled.

The algorithm fills the pixels around the filling

front one after the other in order of prioritization by

copying image content from the best patch. The patch

of th

´

e current pixel of the filling front is compared

with all patches in the source area S. Using a cost

function evaluated on the pixels outside the inpaint-

ing area, the patch yielding the minimum distance is

then copied to the target area. The core of the algo-

rithm is an isophote-driven prioritization process that

combines structure and texture inpainting.

The method of (Criminisi et al., 2004) has the

restriction that, applied on images with complicated

contents and repetitive structures may be copied into

the inpainting area. Our proposed method is supposed

to smooth the result and how to use context knowl-

edge to restore the known objects.

3.3 Inpainting using Partial

Convolution Neuronal Network of

Liu et al.

Deep learning methods are currently very popular and

are successfully employed in many areas of computer

vision. Therefore, we use an inpainting method (Liu

et al., 2018) as a comparison to our proposed method.

This deep neural network has a U-Net-like structure

with deconvolutions based on nearest-neighbor prin-

ciple. The inpainting problematic is reflected by re-

placing all convolution layers by the so-called par-

tial convolution layers, that is, only pixels within the

inpainting mask are affected by convolutions. We

used the implementation available on Github

1

which

included a pre-trained model with images of size

256 × 256, trained for about 1 day on a small part

of ImageNet. For comparison, the original paper has

image sizes 512 × 512 and ten days training for Ima-

geNet and Places2 and three days for CelebA-HQ. In-

put images are resized on the shorter side to 256 pixels

with maintaining the original aspect ratio. The selec-

tion of a square with 256 × 256 took place, whereby

cropping is performed the way the door is preserved

in order not to lose important fac¸ade details.

4 METHOD

4.1 Inpainting with Classes

The two critical parts of the algorithm (Criminisi

et al., 2004) are determining the priorities, which

should be made as smart as possible, and the find-

ing of the best copy patch, which should be done as

efficiently as possible.

The aforementioned prioritization prefers pixels

which (i) are a continuation of edges and (ii) are sur-

rounded by many pixels containing information. We

search for the maximum of the priority term which is

given as a product

Pr(p) = Co(p)Da(p), (1)

where

Co(p) =

∑

q∈N

p

∩(I −D)

Co(q)

|N

p

|

, Da(p) =

∇I

⊥

p

· n

p

β

and |N

p

| denotes the number of neighboring pixels.

Initially, all pixels in the inpainting area bear no in-

formation content, so C o(p) = 0 for all p ∈ D and all

1

https://github.com/ja3067/

pytorch-inpainting-partial-conv

Context-aware Patch-based Method for Façade Inpainting

213

pixels outside have the value 1, so Co(p) = 1 for all

p ∈ (I − D). In the following, pixels are preferred

which have as many already filled neighbors as possi-

ble, whereas pixels, which were filled earlier, are pre-

ferred. The data term Da(p) describes the strength of

isophotes hitting the front of D. As filling proceeds,

this term boosts the priority of edges entering the in-

painting domain and gives the algorithm its structural

inpainting component.

After the prioritization step, the pixel q with the

best copy patch is determined for the patch of the

pixel p with the highest priority. The aim is to find

the patch which is closest to the given patch. The dis-

tances between the target patch and the patches of the

pixels in the source region are calculated and a mini-

mum argument is determined, so

q

∗

= arg min

q∈S

d(p,q),

where the distance measure is denoted with d : I ×

I → R.

It is defined as follows:

d(q, p) =

∑

x

q

∈N

q

{[ψ(x

q

) − I(y

p

(x

q

)] · M(x

q

)}

2

where in the general case

ψ(x) =

I(x) if x /∈ D

˜

I(x) if x ∈ D

(2)

or in the case with classes

ψ(x) =

I(x) if x /∈ D

Col(i) if x ∈ {y ∈ D|C (y) = i}

, (3)

where Col(i) denotes the average color of class i, and

M(x) =

1 if x /∈ D

α else

(4)

denotes the function ψ corresponds to the source im-

age I outside of the inpainting domain. Inside this

domain, it corresponds to an approximation of the

image content

˜

I. To preserve structural changes of

color information,

˜

I can be a structural inpainting re-

sult. Since elements of different classes are present,

we decided to break the procedure down to the level

of classes. Thus, an approximation is calculated with

the classification by setting the color value of a pixel

to the average color of the corresponding class (see

section 5.2). This approximation is based on addi-

tional information, for example the result of structure

inpainting, or on a classification result. The parameter

α ∈ [0,1] in M describes the weighting of the approx-

imation introduced in

˜

I. It can set intuitively as shown

in section 5.1.

The algorithm can be seen as a function I

Inp

=

Cr(I ,D,S [,C]), where the classification result is op-

tional and only used to calculate the approximation.

4.2 Fac¸ade Reconstruction

The first input in this section is a rectified fac¸ade tex-

ture image I in which the foreground objectes are de-

tected. After morphological dilation we obtain the

inpainting area D. The second, optional input is the

classification result C of the fac¸ade images described

in Section 3.1. One can obtain a mask for a given

class i by

C

i

(p) =

1 if C (p) = i /∈ D

0 otherwise

For the concrete problem, pixels belonging to fac¸ade

background and foreground object should be in-

painted using only regions of visible fac¸ade back-

ground, and pixels belonging to fac¸ade details should

be inpainted using only corresponding pixels of the

according class. We denote this set of ”valid pixels”

by the source region S = D

c

∩C

f. b.

and will apply our

method to the problem.

For the fac¸ade images, the algorithm was executed

twice. The first time, it is used to reconstruct the

fac¸ade background while the second time it is used

to add the fac¸ade details to the first result. This leads

to a two-step process for fac¸ade reconstruction.

Fac¸ade Background. For the fac¸ade background,

the inpainting area is restricted to the area belong-

ing to the fac¸ade background class. Accordingly, the

source area is restricted to the visible area, which be-

longs to the class fac¸ade background. This results in

the rule:

I

InpBack

= Cr(I ,D ∩C = f. b.,S = D

c

∩ C

f. b.

,C)

Fac¸ade Details. After that, the fac¸ade details can be

added. The painting area is restricted to the area be-

longing to the fac¸ade background class. Accordingly,

the source area is also restricted to the visible area that

belongs to the class fac¸ade details. This results in the

analogical rule:

I

InpFac¸ade

= Cr(I ,D ∩C = f. d.,S = D

c

∩ C

f. b.

,C).

Summarizing, in the first step, the facade back-

ground was reconstructed. In the second step we add

the fac¸ade details by repeating the first step, but using

only the locations of covered windows as the inpaint-

ing area and the areas where doors and windows as

source areas lied.

5 RESULTS AND DISCUSSION

In this section, we will first make a parameter study

for α in equation (4) and will show that its consid-

GRAPP 2020 - 15th International Conference on Computer Graphics Theory and Applications

214

eration allows preventing unwanted repetition of pat-

terns while its choice is quite straightforward and in-

tuitive. Then, we present the results of the modified

method of (Criminisi et al., 2004) applied on a data

set already analyzed by (Zhang et al., 2019). We con-

sidered rectified fac¸ade texture images, which were

part of a LoD3 city model of Berlin and correspond-

ing classification results for windows and doors. For

those images, where a foreground object – a tree, for

example – was occluded the fac¸ade. We selected a

binary mask accordingly. Otherwise, a polygon of

interest was selected that may best show the differ-

ences in performance of all algorithms. Following,

a detailed comparison of the results of the proposed

method with those produced using the deep learning

technique will take place. The section is concluded

by a description on a LoD3 model creation.

5.1 Choice of α

In this subsection, we make a visual evaluation of the

influence of the parameter α in equation (4) on the in-

painting result. For the complex wall texture in the

example image, we created a coarse approximation of

the structure using Navier-Stokes inpainting, shown

in Fig 3a. Then, we run our method and varied α be-

tween 0 and 1. In Figure 3, the result for different α

is shown. It becomes clear that for α values close but

not equal to 0, the dominant colors in the inpaining

domain are similar to those around it and that the un-

desired repetitions of patterns are much more rarely

present. The advantage of our method is that texture

content is searched for in the regions with similar col-

ors. The blurring is typical for structural inpainting

and thus, small values of α, but it disappears gradu-

ally with its growing value.

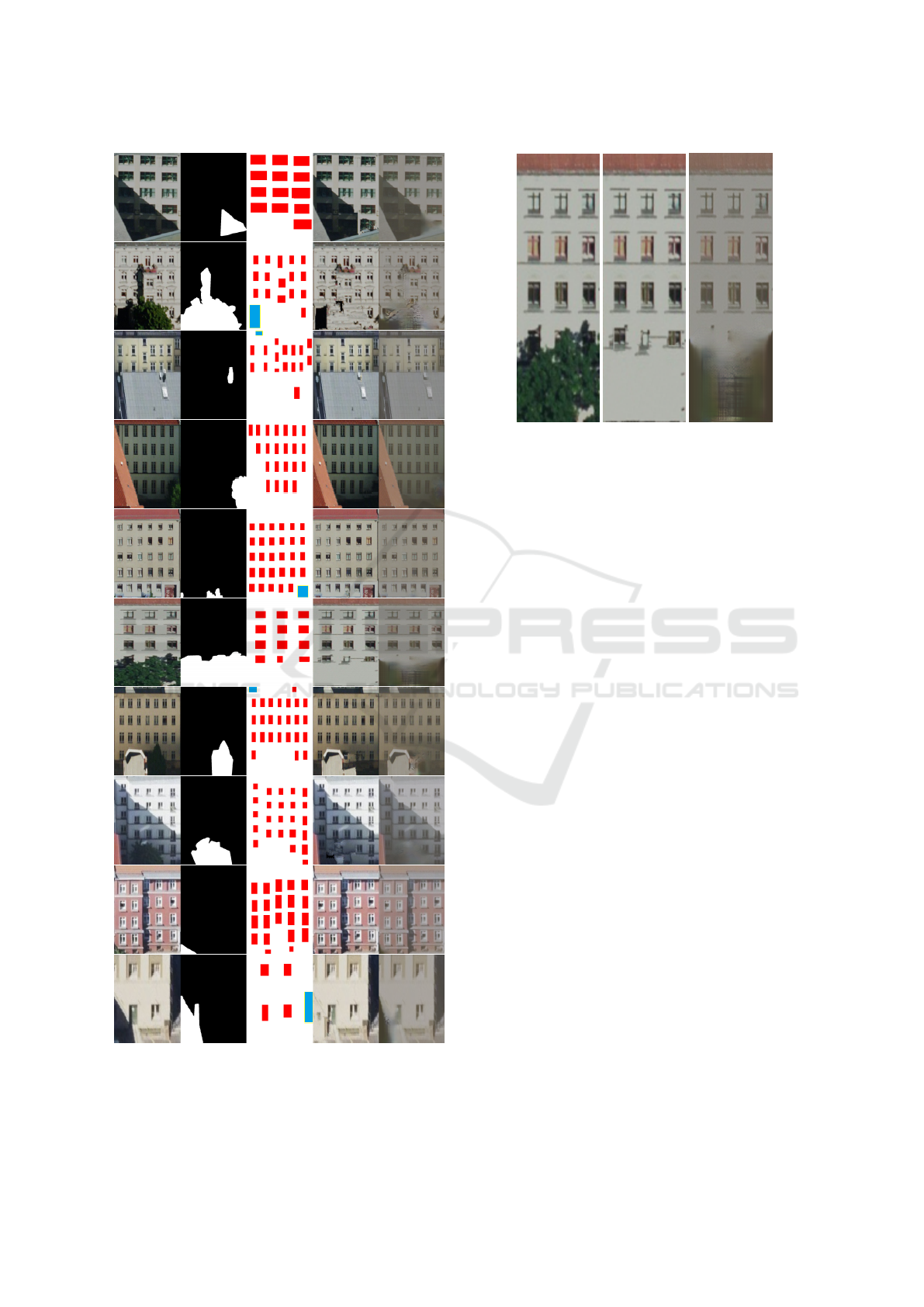

5.2 Fac¸ade Reconstruction

The results of the fac¸ade inpainting are shown by ten

characteristic examples of fac¸ade images and classifi-

cation results due to (Zhang et al., 2019) in Figure 4.

The classes for doors and windows are represented by

blue and red colors, respectively. The patch size for

the proposed texture-based method was 21 × 21 pix-

els and α = 0.98 for all images. As inpainting area,

we used the detected foreground object enlarged by

applying a morphological dilation.

We believe that in the majority of cases the in-

painted – either using the proposed or CNN-based

method – images (in the two right columns) have

a more realistic appearance than the corresponding

original images on the left. We can see that the struc-

tural inpainting can preserve the dominant colors of

(a) Structure (b) α = 0 (c) α = 0.2

(d) α = 0.4 (e) α = 0.6 (f) α = 0.8

(g) α = 0.9 (h) α = 0.92 (i) α = 0.94

(j) α = 0.96

(k) α = 0.98 (l) α = 1

Figure 3: Influence of parameter α. The choice α = 1 cor-

responds to the original method.

the fac¸ade and its elements. In the majority of cases,

the course of shadow is dropped (a desirable effect)

and in the few cases the shadows are preserved, it

happens because of progressive propagation of colors

during inpainting of texture. The backlashes of our

method are those single dark spots which stem from

the inaccuracies of the classification result: The part

of the fac¸ade close to a window is sometimes propa-

gated by pixels stemming from a window, but spuri-

ously classified as wall.

5.3 Comparison with CNN-based

Approach

In this section, we compare the result of our algorithm

with the state-of the-art CNN based method presented

in section 3.3. It is important to note that the net

not only fills the hole but affects the whole image,

which happens because before application, the (ten)

test images must be radiometrically and geometrically

corrected. By comparing both right-most columns

of Figure 4 we see, that our method better reflects

the facade elements. The CNN-based method often

produced wrongly reconstructed shapes of doors and

windows. The undesired effect of course of shadows,

which are clearly not present in original wall texture,

is more visible here. Also the blurring artifacts are ev-

Context-aware Patch-based Method for Façade Inpainting

215

Figure 4: Overview of the data set and results. From left

to right: original images, occlusion masks, inpainting using

our method, and result of CNN-based inpainting method.

Please note that some images were rescaled in order to bet-

ter fit the page (among other image 5, shown in page 7).

Figure 5: Detail view of image 5. From left to right: original

image, result of modified Criminisi et al., result of CNN

based method.

ident. In Figure 5, we show more detailed comparison

of both methods by exemplifying the fifth image. We

feel confirmed in our observations about blurring and

shadow course. While the proposed method nicely re-

constructed the bottom row of windows, in the CNN-

based result, a door seems to have been hallucinated

despite no blue box was detected in the fifth row of

Figure 4. But apparently, it was enough that a frontal

door was present in many training images. Moreover,

the grid structure of the door is caused by the decon-

volution based on nearest neighbor. In the middle im-

age, one could argue that the homogeneous texture of

the bottom part of the fac¸ade combined with some ar-

tifacts resulting from the inaccurate classification may

appear fake, but we believe that the interactive user

could better interpret this result than that on the right.

5.4 Textured 3D Model

Figure 6 shows the result included in the LoD3 model

output in the pipeline of (Zhang et al., 2019). The

fac¸ade models in the database may contain concavi-

ties (window inserts) and convexities (balconies). The

presented inpainting is a suitable method to enhance

the result and to improve the visual impression. In

contrast to the CNN-based method, it is also suitable

for closing very large occlusions, e.g. a tree that cov-

ers about one third of the wall. A further advantage

in connection with the training of rapid response mis-

sions is that only identified fac¸ade details are added.

Within virtual training and rehearsal of such missions,

the participants are supposed to find doors and win-

dows only on the spot where they actually are.

GRAPP 2020 - 15th International Conference on Computer Graphics Theory and Applications

216

(a)

(b)

Figure 6: Example of an inpainted LoD3 model: (a) in-

painted model, (b) original model (down).

6 CONCLUSIONS

In quick response and virtual reality applications, it is

often important for the user to be able to orient him or

herself in the unknown terrain. In many applications,

not only a visually appealing model is important, but

also the trustworthiness of observed information. We

have shown that considering classification results im-

proves the inpainting result. In addition to the orig-

inal procedure of (Criminisi et al., 2004), we use an

approximated inpainting result that includes classes

straightforward; in the second step, we control the re-

sult by weighting them. Cleaned textures are partic-

ularly useful to adjust the scenario for different sea-

sons. An example is shown in Figure 1, a model was

adapted for a winter scenario, where the uncleaned

textures are particularly disturbing. Another ques-

tion is whether inpainted texture images help to im-

prove the detection results and clustering results in

turn. We compared the detection results of two pairs

of original and inpainted texture images. The compar-

ison shows that inpainting does not necessarily im-

prove the detection or clustering results. The paper

shows that patch-based methods are in no way infe-

rior to a CNN-based method and thereby, we believe

to have proved the methodological principle. Addi-

tionally, the method still have potential to exploit. For

example, we have concentrated on RGB-color space

and have performed inpainting channel-by-channel.

However, there are sources in the literature, such as

(Cao et al., 2011) that pointed out that there are color

spaces better suitable for inpainting tasks. It is also

clear that if the instances of the same class have dif-

ferent colors (for example, green, blue, and red win-

dow), then color averaging would produce colors not

present so far. Thus color averaging can be replaced

by identification of accumulation points. With respect

to CNN-based generation, it would make sense to use

the results obtained by our method as training data

in order to improve the results of Sec 5.3. Other

CNN-based workflows, including generative adver-

sarial networks, seem to be a promising tool as well.

Finally, the mask for occluding object was created

manually. This is clearly not affordable in applica-

tions and therefore, a unified concept incorporating

this step into context-aware segmentation is highly

desirable.

ACKNOWLEDGMENTS

Many thanks to the student assistant Ludwig List from

Fraunhofer IOSB for providing the NVIDIA neural

network inpainting result.

REFERENCES

Bertalmio, M., Bertozzi, A. L., and Sapiro, G. (2001).

Navier-stokes, fluid dynamics, and image and video

inpainting. In IEEE Computer Society Conference on

Computer Vision and Pattern Recognition, 2001, vol-

ume 1, pages I–355.

Bulatov, D., H

¨

aufel, G., Meidow, J., Pohl, M., Solbrig, P.,

and Wernerus, P. (2014a). Context-based automatic

reconstruction and texturing of 3D urban terrain for

quick-response tasks. ISPRS Journal of Photogram-

metry and Remote Sensing, 93:157–170.

Bulatov, D., H

¨

aufel, G., Solbrig, P., and Wernerus, P.

(2014b). Automatic representation of urban terrain

models for simulations on the example of VBS2. In

SPIE Security+ Defence, pages 925012–925012. In-

ternational Society for Optics and Photonics.

Buyssens, P., Daisy, M., Tschumperl

´

e, D., and L

´

ezoray,

O. (2015). Exemplar-based inpainting: Technical re-

view and new heuristics for better geometric recon-

structions. IEEE Transactions on image processing,

24(6):1809–1824.

Cao, F., Gousseau, Y., Masnou, S., and P

´

erez, P. (2011). Ge-

ometrically guided exemplar-based inpainting. SIAM

Journal on Imaging Sciences, 4(4):1143–1179.

Chen, L.-C., Zhu, Y., Papandreou, G., Schroff, F., and

Adam, H. (2018). Encoder-decoder with atrous sep-

arable convolution for semantic image segmentation.

In Proceedings of the European Conference on Com-

puter Vision (ECCV), pages 801–818.

Criminisi, A., P

´

erez, P., and Toyama, K. (2004). Region

filling and object removal by exemplar-based image

inpainting. IEEE Transactions on Image Processing,

13(9):1200–1212.

Efros, A. A. and Leung, T. K. (1999). Texture synthesis by

non-parametric sampling. In Proceedings of the 7th

IEEE International Conference on Computer Vision,

volume 2, pages 1033–1038.

Guo, S., Xiong, X., Liu, Z., Bai, X., and Zhou, F. (2018).

Infrared simulation of large-scale urban scene through

LOD. Optics express, 26(18):23980–24002.

Context-aware Patch-based Method for Façade Inpainting

217

Kottler, B., Bulatov, D., and Schilling, H. (2016). Im-

proving semantic orthophotos by a fast method based

on harmonic inpainting. In 9th IAPR Workshop on

Pattern Recogniton in Remote Sensing (PRRS), 2016,

pages 1–5.

Le Meur, O., Gautier, J., and Guillemot, C. (2011).

Examplar-based inpainting based on local geometry.

In 2011 18th IEEE International Conference on Im-

age Processing, pages 3401–3404.

Liu, G., Reda, F. A., Shih, K. J., Wang, T.-C., Tao, A., and

Catanzaro, B. (2018). Image inpainting for irregular

holes using partial convolutions. In Proceedings of the

European Conference on Computer Vision (ECCV),

pages 85–100.

Loch-Dehbi, S. and Pl

¨

umer, L. (2015). Predicting building

fac¸ade structures with multilinear Gaussian graphical

models based on few observations. Computers, Envi-

ronment and Urban Systems, 54:68–81.

Ren, S., He, K., Girshick, R., and Sun, J. (2015). Faster

r-cnn: Towards real-time object detection with region

proposal networks. In Advances in neural information

processing systems, pages 91–99.

Rossi, R. E., Dungan, J. L., and Beck, L. R. (1994). Kriging

in the shadows: geostatistical interpolation for remote

sensing. Remote Sensing of Environment, 49(1):32–

40.

Sch

¨

onlieb, C.-B. and Bertozzi, A. (2011). Unconditionally

stable schemes for higher order inpainting. Commun.

Math. Sci, 9(2):413–457.

Shalunts, G., Haxhimusa, Y., and Sablatnig, R. (2011). Ar-

chitectural style classification of building fac¸ade win-

dows. In International Symposium on Visual Comput-

ing, pages 280–289.

Shen, H., Li, X., Cheng, Q., Zeng, C., Yang, G., Li, H., and

Zhang, L. (2015). Missing information reconstruc-

tion of remote sensing data: A technical review. IEEE

Geoscience and Remote Sensing Magazine, 3(3):61–

85.

Telea, A. (2004). An image inpainting technique based on

the fast marching method. Journal of graphics tools,

9(1):23–34.

Vanegas, C. A., Aliaga, D. G., and Bene

ˇ

s, B. (2010). Build-

ing reconstruction using manhattan-world grammars.

In 2010 IEEE Computer Society Conference on Com-

puter Vision and Pattern Recognition, pages 358–365.

Wenzel, S. and F

¨

orstner, W. (2016). Fac¸ade interpretation

using a marked point process. ISPRS Annals of the

Photogrammetry, Remote Sensing and Spatial Infor-

mation Sciences, 3:363–370.

Xiao, J., Fang, T., Tan, P., Zhao, P., Ofek, E., and Quan, L.

(2008). Image-based fac¸ade modeling. ACM Trans-

actions on Graphics (TOG), 27(5):161.

Yeh, R. A., Chen, C., Yian Lim, T., Schwing, A. G.,

Hasegawa-Johnson, M., and Do, M. N. (2017). Se-

mantic image inpainting with deep generative models.

In Proceedings of the IEEE Conference on Computer

Vision and Pattern Recognition, pages 5485–5493.

Zhang, X., Lippoldt, F., Chen, K., Johan, H., and Erdt,

M. (2019). A data-driven approach for adding fac¸ade

details to textured LoD2 CityGML models. In Pro-

ceedings of the 14th International Joint Conference

on Computer Vision, Imaging and Computer Graph-

ics Theory and Applications, VISIGRAPP, pages 294–

301.

GRAPP 2020 - 15th International Conference on Computer Graphics Theory and Applications

218