Human Climbing and Bouldering Motion Analysis: A Survey on Sensors, Motion Capture, Analysis Algorithms, Recent Advances and Applications

Julia Richter (1, a), Raul Beltrán Beltrán (1, b), Guido Köstermeyer (2, c) and Ulrich Heinkel (1, d)

(1) Professorship Circuit and System Design, Chemnitz University of Technology, Reichenhainer Straße 70, Chemnitz, Germany

(2) Department Sportwissenschaft und Sport, Friedrich-Alexander-Universität Erlangen-Nürnberg, Schlossplatz 4, 91054 Erlangen, Germany

Keywords:

Climbing Analysis, Human Motion Analysis, Motion Capture, Performance Evaluation, Computer Vision.

Abstract:

Bouldering and climbing motion analysis are attracting increasing interest in scientific research. Although a number of studies deal with climbing motion analysis, there is no comprehensive survey that covers sensor technologies, approaches for motion capture and algorithms for the analysis of climbing motions. To promote further advances in this field of research, there is an urgent need to unite available information from different perspectives, such as from a sensory, analytical and application-specific point of view. Therefore, this survey conveys a general understanding of available technologies, algorithms and open questions in the field of climbing motion analysis. The survey is not only aimed at researchers with a technical background, but also addresses sports scientists and emphasises the use and advantages of vision-based approaches for climbing motion analysis.

1 INTRODUCTION

Bouldering and climbing are increasingly attracting

interest across all age groups and have become trend

sports all over the world.

While various studies demonstrated that climbing improves coordination, flexibility and the cardiovascular system and has positive effects on both physiological and psychological health conditions (Bernstädt et al., 2007), (Steimer and Weissert, 2017), (Luttenberger et al., 2015), (Weber, 2014), other researchers call the provided evidence into question: Due to the small number of trials, they regard the evidence for the effectiveness of therapeutic climbing as limited (Buechter and Fechtelpeter, 2011), (Siegel and Fryer, 2017). As a consequence, the effects of climbing on health conditions are still unclear and there is an urgent need for further investigations, including climbing motion analysis.

a https://orcid.org/0000-0001-7313-3013

b https://orcid.org/0000-0001-6612-3212

c https://orcid.org/0000-0002-2681-5801

d https://orcid.org/0000-0002-0729-6030

From the very beginning, especially in case of

competitive sports, climbing motions were analysed

to assess and optimise climbing techniques. In view

of therapeutic applications, climbing motion analysis

has gained importance to avoid movements that are

prone to cause injuries. At this point, the present sur-

vey provides a profound and exhaustive review of ex-

tant work with the focus on camera-based approaches

as well as recent advances in analysis techniques, in-

cluding sensors, human motion capture, analysis al-

gorithms and applications as a highly topical knowl-

edge base for future research.

The survey is structured as follows: Section 2 re-

views sensor technologies that were used in previous

work, provides an overview about available RGB-D

cameras on the market and compares parameters that

are relevant for motion analysis in climbing applica-

tions. This is followed by a review and discussion of

motion capture approaches in Section 3 as well as al-

gorithms for climbing motion analysis in Section 4.

The findings are summarised in Section 5 and finally,

an outlook at future work emphasises the potential

of using latest technologies and highlights open chal-

lenges that should be addressed in future research on

climbing motion analysis.

Richter, J., Beltrán, R., Köstermeyer, G. and Heinkel, U.

Human Climbing and Bouldering Motion Analysis: A Survey on Sensors, Motion Capture, Analysis Algorithms, Recent Advances and Applications.

DOI: 10.5220/0008867307510758

In Proceedings of the 15th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2020) - Volume 5: VISAPP, pages 751-758

ISBN: 978-989-758-402-2; ISSN: 2184-4321

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 SENSORS

2.1 Sensor Overview

Generally, we can distinguish between instrumented climbing walls equipped with any kind of sensors, wearables, and camera-based systems that are used for climbing motion analysis. An overview of the applied sensors and the obtained sensor data is presented in Table 1. Besides the use of a single type of sensor, there are studies that apply sensor combinations, such as (Pandurevic et al., 2018), who use both an RGB-D camera and force sensors.

The advantages of optical sensors compared to the other presented technologies are as follows: They work in a contact-less mode, so that the climber does not have to wear any device that could be inconvenient while climbing in terms of injuries or physical discomfort. Moreover, they provide direct and comparatively accurate information about the human body: RGB-D sensors in particular allow the determination of points of interest such as the centre of mass or even the position of skeleton joints in 3-D coordinates. Besides, there is no need to adapt the wall by installing sensor equipment, which makes a final application more convenient for operators and users.

Since camera technology with depth sensing plays an increasingly important role in recent research on motion analysis, the following section reviews the latest RGB-D sensors available on the market that can be employed for climbing motion analysis.

2.2 Camera Review

The application of RGB-D cameras for climbing motion analysis involves several set-up considerations: the distance to the wall, which is constrained by the sensor's range; the camera field of view, which must capture the complete wall; the image resolution of both the depth and the RGB image, which must provide sufficient information about the climber's body; the depth resolution; and the availability of suitable skeleton extraction SDKs. Table 2 provides an overview of current sensors with relevant parameters and information.

The comparison presented here has been made from a metrological point of view, to evaluate the suitability of an optical sensor for tracking a climber on a climbing wall. Among the cameras reviewed, the Orbbec, Asus and Intel D400 series (see example point cloud in Figure 1) show state-of-the-art features in structured light and active stereoscopy technologies, which use a triangulation process to estimate the depth and are not exposed to the multipath effect, as happens with those based on time of flight (ToF). Microsoft presents the smallest uncertainty in the depth measurement, followed by Intel. However, because structured light technology is affected by the environmental light conditions, these cameras are unfavourable for outdoor applications, where ToF technology offers better results.

Figure 1: 3-D point cloud of a climbing scene captured by an Intel RealSense D435 RGB-D camera.
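The relation between camera distance, field of view and wall size mentioned in the set-up considerations can be sketched with simple pinhole geometry: at distance d, an opening angle θ covers an extent of 2·d·tan(θ/2). The following is a minimal sketch, assuming a flat wall, a camera centred in front of it and an optical axis perpendicular to the wall. The function names and the 4 m × 3 m wall are hypothetical; the field-of-view values are the depth FOV listed for the Intel RealSense D435 in Table 2.

```python
import math


def covered_extent(distance_m: float, fov_deg: float) -> float:
    """Extent (in metres) seen on a flat wall perpendicular to the optical axis."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def min_distance(wall_w_m: float, wall_h_m: float,
                 fov_h_deg: float, fov_v_deg: float) -> float:
    """Smallest camera distance at which both wall width and height fit the FOV."""
    d_w = wall_w_m / (2.0 * math.tan(math.radians(fov_h_deg) / 2.0))
    d_h = wall_h_m / (2.0 * math.tan(math.radians(fov_v_deg) / 2.0))
    return max(d_w, d_h)


# Hypothetical 4 m x 3 m wall, depth FOV of 87 deg (H) and 58 deg (V) from Table 2.
required = min_distance(4.0, 3.0, 87.0, 58.0)
print(f"required distance: {required:.2f} m")
```

The resulting distance would still have to be checked against the range column of Table 2, since a sensor whose range ends before that distance cannot cover the whole wall from a single position.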

3 MOTION CAPTURE

The analysis of a climbing motion always encom-

passes motion capture and human pose estimation

(HPE). In other words, analysis algorithms require in-

put data, such as locations of defined points of inter-

est on the body that can be tracked and analysed. In

existing work, a variety of methods were used to cap-

ture human motion. The captured data ranges from

a coarse body description, such as the centre of mass

(CoM), to very fine-granular models, such as skele-

ton models describing the poses of articulated joints

of a human body. Moreover, input data can be distin-

guished between 2-D and 3-D representations.

3.1 Centre of Mass

As already mentioned, the CoM is a very coarse de-

scription of the human body, which is analysed in

several studies (Sibella et al., 2007), (Reveret et al.,

2018). Even though the motion is represented by

only one single point, it provides relevant informa-

tion in case of climbing motions. Sibella et al. for

example, analysed the trajectory of the CoM to ob-

tain parameters, such as entropy, velocity and accel-

eration to draw conclusions about fluency and force of

VISAPP 2020 - 15th International Conference on Computer Vision Theory and Applications


the motion (Sibella et al., 2007). They calculated the

CoM as the weighted average of nine body segments.

These body segments were obtained by detecting vi-

sual markers attached to the climber’s body using

cameras distributed in a calibrated volume. Reveret et

al. approximated the CoM by means of a marker at-

tached to a harness worn around the waist (Reveret

et al., 2018). Wiehr et al. calculated the CoM from

the 3-D skeleton provided by the Kinect v2 in order

to determine whether the climber reached the top of

the route (Wiehr et al., 2016). Next to marker detec-

tion, the CoM can be derived from 3-D point clouds

defining a climber’s body, e. g. by means of functions

provided by the open-source Point Cloud Library.
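Both ways of obtaining the CoM described above can be sketched in a few lines. The example below is an illustration under stated assumptions, not the procedure of any cited study: `centre_of_mass` computes a mass-weighted average of body segment centres, and `point_cloud_centroid` takes the plain mean of a climber's point cloud, which only approximates the CoM because it weights every surface point equally. The segment positions and mass fractions are hypothetical placeholders.

```python
import numpy as np


def centre_of_mass(segment_centres, mass_fractions):
    """Mass-weighted average of segment centres, given as an (n, 3) array."""
    centres = np.asarray(segment_centres, dtype=float)
    weights = np.asarray(mass_fractions, dtype=float)
    if centres.shape[0] != weights.shape[0]:
        raise ValueError("one mass fraction per segment is required")
    return (weights[:, None] * centres).sum(axis=0) / weights.sum()


def point_cloud_centroid(points):
    """Unweighted mean of all 3-D points; a coarse stand-in for the CoM."""
    return np.asarray(points, dtype=float).mean(axis=0)


# Hypothetical example: trunk, both legs (merged) and both arms (merged).
centres = [[0.0, 1.2, 0.3], [0.0, 0.6, 0.3], [0.1, 1.5, 0.2]]
fractions = [0.55, 0.35, 0.10]  # illustrative values, not anthropometric data
com = centre_of_mass(centres, fractions)
print(com)
```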

3.2 Pose Estimation for Climbing Analysis

Several analysis techniques rely on a fine-granular

skeleton model describing the human pose by means

of several joints.

Aladdin et al. constructed an instrumented boul-

dering wall where each hold was connected to a force

torque sensor (Aladdin and Kry, 2012). Based on the

force signals and a synchronised skeleton output of a

motion capture system, they were able to derive phys-

ically valid poses from several plausibility constraints

and forces alone.

A very popular Czech competitive climber, Adam Ondra, was "hung with sensors" to analyse what makes him such an outstanding climber (iROZHLAS, 2019). For this purpose, a marker-based motion capture system was used to analyse the movements and positions of his back, elbows, head and also his CoM. Besides motion analysis, the pure analysis of stature by means of his "measured" skeleton indicated that he has some advantages compared to other climbers: In addition to his long neck, his comparatively slim shoulders result in less force on his fingers.

Kim et al. recognised climbing motions by pars-

ing a climber’s body area and the skeleton provided

by the Kinect (Kim et al., 2017). This body area was

determined by a foreground segmentation on a depth

image. The determined body parts were then used

to correct the feet and hands positions of the Kinect

skeleton, which are unreliable for climbing poses.

3.3 Machine Learning-Based Pose Estimation

In addition to the approaches described above, the literature offers various machine learning techniques that localise joint positions both in 2-D images and in 3-D coordinates. The following list provides an overview of the latest and most popular image-based skeleton

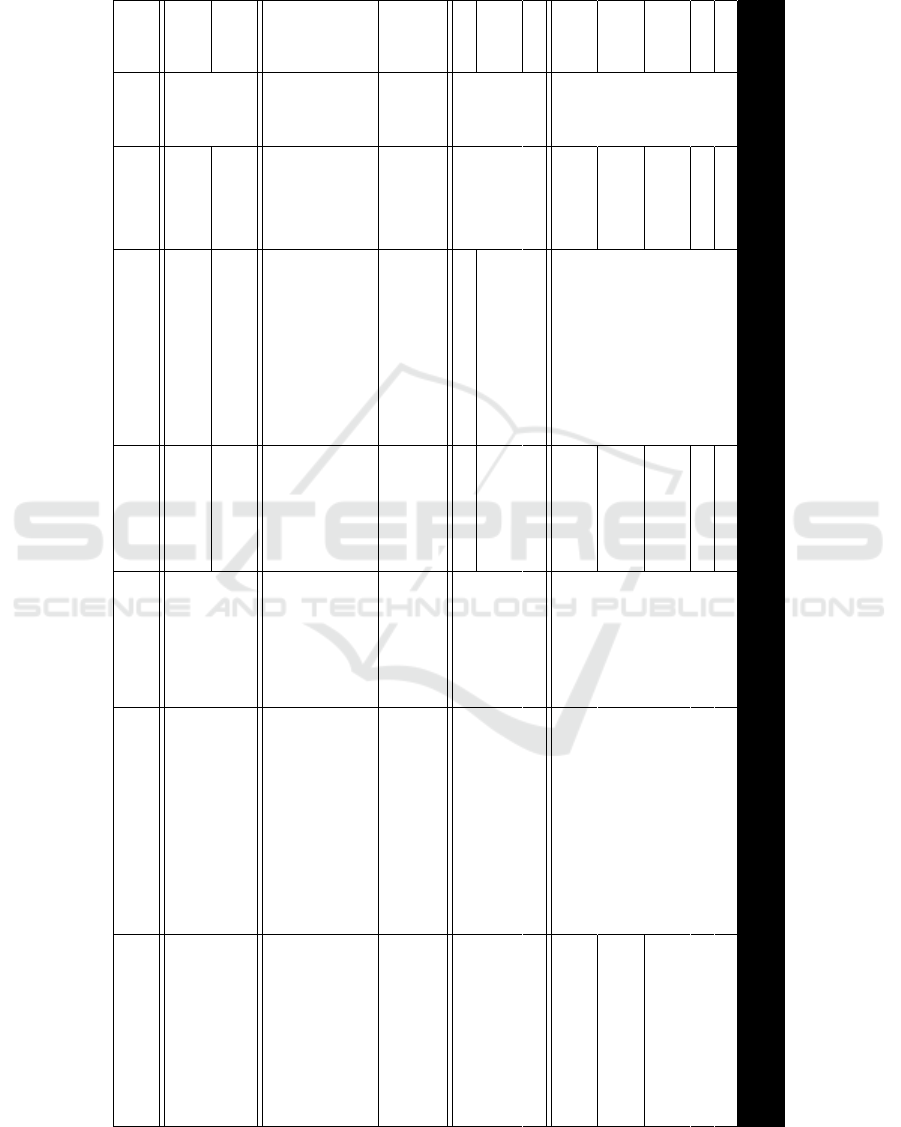

Table 1: Overview: Sensors used for climbing motion analysis.

| Sensor | Obtained data | Examples |
|---|---|---|
| Strain gauges | Sensors are attached to the holds of the wall. The obtained forces were used to draw conclusions about equilibrium, leg movement and body position. | (Quaine et al., 1997a), (Quaine et al., 1997b), (Quaine and Martin, 1999) |
| Force torque sensors | Sensors are attached to holds of a wall. 3-axis force measures are obtained. Human body poses can be derived from the measured forces, for example. | (Aladdin and Kry, 2012), (Pandurevic et al., 2018) |
| Capacitive sensors | Capacitive sensors are integrated into holds. A climber's presence is measured by means of a change in capacitance. | (Parsons et al., 2014) |
| Wearables | Inertial sensors track rotation, acceleration, and temporal information about body limbs while climbing. | (Ebert et al., 2017), (Kosmalla et al., 2016), (Kosmalla et al., 2015) |
| Commercial MoCap system | A skeleton is derived from reflective markers attached to the body. Cameras with active lighting capture marker positions to derive a human skeleton model. The joint positions were used for the analysis. | (iROZHLAS, 2019) |
| Gray-scale camera | Light-emitting diodes (LEDs) were attached as markers to a climber's waist. The position of the LED was determined to obtain the climber's trajectory. | (Cordier et al., 1994a) |
| RGB-D camera | A skeleton extraction algorithm (OpenPose) is executed on the RGB video stream provided by the camera. Based on the skeleton, climbing technique can be analysed. | (Pandurevic et al., 2018) |


Table 2: Overview about cameras available on the market.

| 3-D Sensor | Principle | Range | FOV | RGB Resolution | Depth Size | Depth Resolution | Skeleton API |
|---|---|---|---|---|---|---|---|
| Orbbec Astra S | Structured Light | 0.4 m - 2 m | 60° H, 49.5° V, 73° D | 640×480 @30fps | 640×480 @30fps | at 1 m: uncertainty ≈ 1 mm, bias ≈ 8 mm; at 2.5 m: uncertainty ≈ 5 mm, bias ≈ 96 mm | Body Tracking SDK; BodySkeletonTracker (OpenNI2) |
| Orbbec Astra Pro | | 0.6 m - 8 m | | 1280×720 @30fps | | | |
| Orbbec Astra (Mini) | | 0.6 m - 8 m | | 640×480 @30fps | | | |
| Orbbec Persee | | 0.6 m - 8 m | | 1280×720 @30fps | | | Orbbec Body Tracking (requires licence after 2018) |
| Orbbec TVico | | 0.6 m - 5 m | | 1280×720 @30fps | | | Nuitrack SDK (lic. included); Orbbec Persee SDK (lic. included) |
| Asus Xtion Pro | Structured Light | 0.8 m - 3.5 m | 58° H, 45° V, 70° D | 1280×1024 | 640×480 @30fps; 320×240 @60fps | Within 2 % for each distance | Gesture: 8 predefined poses; Body: multiple player recognition |
| Asus Xtion Pro Live | | | | | | | |
| Asus Xtion 2 | | | 74° H, 52° V, 90° D | 2592×1944 | | | |
| Microsoft Kinect v2 | Time of Flight | 0.5 m - 4.5 m | 70° H, 60° V | 1080×1920 @30Hz | 512×424 @30Hz | at 1 m: uncertainty ≈ 1.5 mm, bias ≈ 5 mm; at 2.5 m: uncertainty ≈ 2 mm, bias ≈ 10 mm | BodyFrame; BodyIndexFrame; FaceFrame |
| Azure Kinect | Time of Flight | 0.25 m - 2.88 m; 0.50 m - 5.46 m | RGB: 90° H, 74.3° V; Depth: 120° H, 120° V | 3840×2160 (16:9); 4096×3072 (4:3); 12 MP, rolling shutter | 1024×1024 @5-15fps; 640×576 @5-30fps; 1 MP, wide and narrow views individually | 15 % to 95 % reflectivity: random error std. dev. ≤ 17 mm; typical error < 11 mm + 0.1 % of distance | Body Tracking SDK; Cognitive Services: Face |
| Intel RealSense D415 | Structured Light | 0.16 m - 10 m | RGB: 69.4° H, 42.5° V, 77° D (±3°); Depth: 65° H, 40° V, 72° D (±2°) | 1920×1080 @30Hz, rolling shutter | 1280×720 @30fps; 848×480 @60fps | at 1 m: uncertainty ≈ 1.5 mm, bias ≈ 2 mm; at 2.5 m: uncertainty ≈ 15 mm, bias ≈ 25 mm | Nuitrack SDK |
| Intel RealSense D435 | | 0.20 m - 10 m | RGB: 69.4° H, 42.5° V, 77° D (±3°); Depth: 87° H, 58° V, 95° D (±3°) | 1920×1080 @30Hz, global shutter | | | |
| LIPSedge DL | Time of Flight | 0.2 m - 1.2 m; 1.0 m - 4.0 m | RGB: 74.2° H, 58.1° V, 88° D; Depth: 74.1° H, 57.5° V, 92° D | 1920×1080 @30fps | 320×240 @30fps | Up to 0.5 % of distance | LIPS Software |


detection methods. More information can be obtained

from the provided references:

• ConvNet (Marín et al., 2018)

• Nuitrack (Nuitrack, 2019)

• OpenPose (Cao et al., 2018), (OpenPose, 2019)

• ITOP (Haque et al., 2016), (ITOP, 2019)

• UBC3V (Shafaei and Little, 2016), (UBC3V, 2019)

• Microsoft HPE (Xiao et al., 2018), (Microsoft HPE, 2019)

• 3D Human Pose Estimation in RGBD Images for Robotic Task Learning (Zimmermann et al., 2018), (RGB-D pose 3-D, 2019)

Machine-learning-based HPE for climbing poses has received little research attention so far. One difficulty lies in finding specific datasets of climbing poses, since the available open datasets are optimised to detect humans in upright frontal poses. Vähämäki et al. presented an HPE method for climbing that uses a set of computer-generated synthetic data to train the model (Vähämäki, 2016). The dataset was generated by building a rendering pipeline that produces a 3-D mesh of a virtual climber and renders depth images from typical camera angles. Ground truth joint positions and poses of body parts were generated in an indoor climbing scenario. The classification algorithm uses a random decision forest to estimate skeletal joints directly from depth images. The method achieved good results on synthetic data and generalised reasonably to real-world data. Although the training data does not capture all the variations observed in real scenarios, for which much more information with human annotations is required, the proposed method is a valid reference to enrich datasets related to sport climbing. Unfortunately, Vähämäki et al. provide neither the training data nor the resulting model.

3.4 Conclusions

The estimation of the position and orientation of human body limbs from individual images or video sequences has been studied in 2-D and 3-D spaces, by detecting the joints of the body using RGB and RGB-D images. Although, as Marín et al. indicate, a great effort has been made to solve the problem, it is still far from being solved (Marín et al., 2018). Beyond dealing with the high degrees of freedom of the human body, there are further challenges posed by clothing, camera views and self-occlusions. In this sense, climbing is even more challenging for pose estimation algorithms than other activities performed in an upright pose, because the position the climber adopts is non-conventional and requires the construction of specialised datasets to train successful models.

With the popularisation of 3-D cameras and the increasing precision they offer, more and more research is being carried out with these types of sensors to determine and measure a climber's pose. The results show that techniques based on optical sensors are promising, although much computing power is still required to offer results in real time.

4 ALGORITHMS FOR MOTION ANALYSIS

While climbing behaviour has been a matter of in-

terest in recent years motivated by the popularisa-

tion of bouldering and its inclusion in sport compe-

titions, early studies such as (Cordier et al., 1994b)

are still a benchmark to carry out new research. The

experiment in that study was conducted using a light-

emitting diode connected to the climber at waist

level, and a set of aligned photographic cameras. The

trajectory of the light drew the climber’s route on a

plane; the ratio between the length of such trajectory

and the convex hull that enclosed it, defined the en-

tropy of the climber’s route. As a result, Cordier et al.

demonstrated the inverse relationship between the ex-

perience of the climber and the entropy of a climbed

route.
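The entropy measure described above can be sketched as follows. The text only states that the entropy is defined by the ratio between the trajectory length and the enclosing convex hull; the concrete form H = ln(2L/c), with path length L and convex hull perimeter c, is the form commonly given for this geometric index and should be read as an assumption here. The convex hull is computed with Andrew's monotone chain algorithm, and all function names are illustrative.

```python
import math


def path_length(points):
    """Sum of the straight-line distances between consecutive 2-D points."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(map(tuple, points)))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def hull_perimeter(points):
    """Perimeter of the convex hull (a straight segment counts there and back)."""
    hull = convex_hull(points)
    return sum(math.dist(hull[i], hull[(i + 1) % len(hull)])
               for i in range(len(hull)))


def geometric_entropy(points):
    """H = ln(2 L / c); needs at least two distinct trajectory points."""
    return math.log(2.0 * path_length(points) / hull_perimeter(points))


straight = [(0.0, 0.0), (0.0, 1.0), (0.0, 3.0)]          # direct ascent
zigzag = [(0.0, 0.0), (1.0, 1.0), (0.0, 2.0), (1.0, 3.0)]  # detours
print(geometric_entropy(straight), geometric_entropy(zigzag))
```

Under this definition a perfectly straight trajectory has an entropy of zero, and any detour increases the value, which matches the inverse relationship between experience and entropy reported above.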

Mermier et al. contributed to the formulation of

an appropriate model for sport climbing behaviour by

introducing new parameters to measure climbing ath-

letes and by proposing the use of Principal Compo-

nent Analysis (PCA) to treat the parameters as uncor-

related variables (Mermier et al., 2000). They demon-

strated that a climber’s performance is more suscepti-

ble to trainable variables such as strength, endurance,

and flexibility than physical attributes such as height,

arm length, and body weight.
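To illustrate how PCA turns correlated measurements into uncorrelated variables, the following minimal sketch performs PCA via a singular value decomposition of the standardised data matrix. It is not the analysis of Mermier et al.: the data are random placeholder values, and the function name `pca` and the number of measured variables are assumptions made for the example.

```python
import numpy as np


def pca(X):
    """PCA of an (n_samples, n_variables) matrix via SVD of the z-scored data.

    Returns the principal axes (rows), the explained variance ratios and the
    scores, i.e. the samples expressed in the uncorrelated component space.
    """
    X = np.asarray(X, dtype=float)
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    _, s, Vt = np.linalg.svd(Z, full_matrices=False)
    explained = s**2 / np.sum(s**2)
    scores = Z @ Vt.T
    return Vt, explained, scores


# Synthetic placeholder data: 20 hypothetical climbers, 4 measured variables.
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 4))
axes, explained, scores = pca(X)
print(explained)
```

The covariance matrix of the returned scores is diagonal, which is the sense in which the transformed parameters can be treated as uncorrelated.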

Following the work of Cordier et al. (1994), Sibella et al. carried out measurements in groups of recreational climbers using a MoCap system with passive sensors (Sibella et al., 2007). The aim was to compare climbing strategies based on the route described by the climber's CoM. They extended the prior technique by recording the CoM in 3-D space, which allowed them to measure the entropy, velocity and acceleration in the frontal, sagittal and transverse planes of the climbing space. They defined fluency as "the effectiveness of the movement", measured by means of the entropy of the climbing route, and introduced the concept of agility as a combination of the speed and acceleration of the CoM.
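Velocity and acceleration of a sampled CoM trajectory can be approximated with finite differences. The sketch below is one possible way to do this, assuming a constant frame rate and a trajectory given as an (n, 3) array; the function name and the axis convention (second column pointing upwards) are assumptions, and real recordings would usually be smoothed first because differentiation amplifies noise.

```python
import numpy as np


def com_kinematics(com_xyz, fps):
    """Per-axis velocity and acceleration of a CoM trajectory by finite differences."""
    positions = np.asarray(com_xyz, dtype=float)
    dt = 1.0 / fps
    velocity = np.gradient(positions, dt, axis=0)      # m/s for positions in m
    acceleration = np.gradient(velocity, dt, axis=0)   # m/s^2
    return velocity, acceleration


# Hypothetical trajectory: steady ascent at 0.5 m/s, sampled at 30 frames per second.
t = np.arange(10) / 30.0
trajectory = np.stack([np.zeros_like(t), 0.5 * t, np.zeros_like(t)], axis=1)
velocity, acceleration = com_kinematics(trajectory, fps=30)
speed = np.linalg.norm(velocity, axis=1)
print(speed)
```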


Pandurevic et al. added quantitative methods of force and endurance evaluation, employing a wireless instrumented climbing wall in conjunction with a 3-D camera (Pandurevic et al., 2018). They measured the 3-axis force applied on the holds by hands and feet, and determined the route of the centre of gravity using OpenPose to construct a climber's skeletal model. The system allowed the climber's position and force to be measured wirelessly within an energy budget, and the pose of the climber's limbs to be analysed through a skeletal model.

Speed was analysed by Reveret et al. In their study, they worked with high-level climbers on an internationally accredited wall, which is used to validate records, and attached a motion sensor to the hip of the climber (Reveret et al., 2018). They identified dynos, which are dynamic moves, by means of a relation between the vertical and absolute velocities of the hip.
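One simple way to relate vertical and absolute hip velocity is their ratio, combined with a minimum speed. The following sketch flags frames in which the hip moves fast and almost purely upwards. It is an illustration only: the thresholds `ratio_min` and `speed_min`, the choice of the up axis and the function name are hypothetical and are not taken from Reveret et al.

```python
import numpy as np


def dyno_candidates(hip_xyz, fps, ratio_min=0.9, speed_min=1.5, up_axis=1):
    """Boolean mask of frames with fast, predominantly vertical hip motion."""
    positions = np.asarray(hip_xyz, dtype=float)
    velocity = np.gradient(positions, 1.0 / fps, axis=0)
    speed = np.linalg.norm(velocity, axis=1)
    # Ratio of vertical to absolute velocity; defined as 0 where the hip is at rest.
    ratio = np.divide(velocity[:, up_axis], speed,
                      out=np.zeros_like(speed), where=speed > 0)
    return (ratio >= ratio_min) & (speed >= speed_min)


t = np.arange(15) / 30.0
fast_up = np.stack([np.zeros_like(t), 2.0 * t, np.zeros_like(t)], axis=1)  # 2 m/s upwards
traverse = np.stack([1.0 * t, np.zeros_like(t), np.zeros_like(t)], axis=1)  # 1 m/s sideways
print(dyno_candidates(fast_up, 30).all(), dyno_candidates(traverse, 30).any())
```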

4.1 Motion Planning

Motion planning includes the prediction and simula-

tion of climbing motion behaviour to plan or create

new climbing problems and to obtain a set of anatom-

ically possible movements that can be proposed to a

climber for his or her next move.

Among the first studies, Ouchi et al. created a model for the prediction of climbing behaviour based on a data-driven analysis of a group of children climbing a prepared wall with a series of uniform holds with embedded sensors (Ouchi et al., 2010). Pfeil et al. proposed a system to guide the design of routes by simulating climbing behaviour, inspired by the background software used to run physical simulations in the design of climbing clothes and equipment (Pfeil et al., 2011). They developed a tool that enables experienced and novice climbers to design quality routes by placing holds on a virtual climbing wall, which is later tested by a simulated virtual climber.

Naderi et al. addressed the problem of offline route planning for wall climbing by simulating bouldering with a graph-based application, optimised through a k-shortest path finding algorithm (Naderi et al., 2017). Their solution proposes alternative paths depending on the anatomic characteristics of the climber, e. g. strength, flexibility, or reach. In contrast to previous works, they considered limbs hanging free for balancing and the use of wall friction. Additionally, the simulated agent can move more than one limb at a time, restricted to using at least two holds simultaneously. The simulations showed plausible solutions on short bouldering routes.
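The idea of proposing several alternative paths through a graph can be illustrated with a small k-shortest-path search. The sketch below is not the planner of Naderi et al.: it is a plain best-first enumeration of loop-free paths on a toy graph, in which nodes stand for stances and edge weights for hypothetical move costs. All names and numbers are assumptions made for the example.

```python
import heapq


def k_shortest_paths(graph, start, goal, k):
    """Return up to k cheapest loop-free paths as (cost, path) tuples.

    graph maps each node to a dict {neighbour: non-negative cost}. Partial
    paths are expanded in order of increasing cost, so complete paths are
    found from cheapest to most expensive.
    """
    heap = [(0.0, [start])]
    found = []
    while heap and len(found) < k:
        cost, path = heapq.heappop(heap)
        node = path[-1]
        if node == goal:
            found.append((cost, path))
            continue
        for neighbour, weight in graph.get(node, {}).items():
            if neighbour not in path:  # keep paths loop-free
                heapq.heappush(heap, (cost + weight, path + [neighbour]))
    return found


# Toy stance graph with hypothetical move costs.
stances = {
    "start": {"A": 1.0, "B": 2.0},
    "A": {"top": 3.0, "B": 0.5},
    "B": {"top": 1.0},
}
alternatives = k_shortest_paths(stances, "start", "top", k=2)
for cost, path in alternatives:
    print(cost, " -> ".join(path))
```

Climber-specific characteristics such as reach or strength could enter such a sketch through the edge costs, for example by removing or penalising moves that exceed the climber's reach. The exhaustive enumeration is only practical for small graphs.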

Augmented Reality (AR) enables users to create climbing routes for the bouldering board MoonBoard with their smartphone. The MoonBoard is a special climbing wall that has a standardised layout and hold sets, where each hold is equipped with an LED to show a configured route (Daiber et al., 2013a). Besides AR, Virtual Reality (VR) makes it possible to share the experiences professional climbers made on extreme routes, allowing other climbers to experience these demanding routes as well, at least virtually (Adidas, 2019).

4.2 Teaching and Training

Teaching bouldering requires multiple demonstrations of postures and movements that a novice climber must imitate. Cha et al. analysed the movement of limbs from a biomechanical point of view, employing two Microsoft Kinect V2 cameras to construct realistic 3-D animations that novice climbers can follow on a computer monitor (Cha et al., 2015). For the simulation, the study divided the movement from an initial posture to a final posture into phases and considered the velocities of hip, forearms, upper arms, thighs and shins individually. The joint flexion angles were also estimated within each phase. Difficulties in the detection of limbs close to the wall were pointed out, which required the use of a transparent acrylic wall specially made for this study. The research presented acceptable results for training beginners, but deficiencies were observed with faster and more experienced climbers, due to problems with the correct reproduction of realistic speeds and angles in the animations.
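A joint angle such as elbow or knee flexion can be estimated from three 3-D joint positions of a skeleton model. The following is a minimal sketch under the assumption that the joints are given as 3-D coordinates; the function name and the example coordinates are hypothetical. It returns the included angle between the two adjacent segments, so a fully extended limb gives 180 degrees; whether flexion is reported as this angle or as its difference from 180 degrees is a matter of convention.

```python
import numpy as np


def joint_angle_deg(proximal, joint, distal):
    """Included angle (degrees) at `joint` between the two adjacent segments."""
    a = np.asarray(proximal, dtype=float) - np.asarray(joint, dtype=float)
    b = np.asarray(distal, dtype=float) - np.asarray(joint, dtype=float)
    cos_angle = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    # Clip to guard against rounding slightly outside [-1, 1].
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))


# Hypothetical shoulder, elbow and wrist positions in metres.
shoulder, elbow, wrist = (0.0, 1.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
print(joint_angle_deg(shoulder, elbow, wrist))
```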

Kosmalla et al. presented a system for visualising reference motions on a bouldering wall (Kosmalla et al., 2017a). They addressed the difficulty of teaching and learning simultaneously, since it is hard to remember all the movements exactly while ascending. To solve this, they proposed to present an augmented real-time video projected on the wall while the climber performs the training. There is a variety of further publications examining the application of augmented or mixed reality for teaching purposes in climbing, such as (Wiehr et al., 2016), (Kajastila and Hämäläinen, 2014), (Kajastila et al., 2016), (Daiber et al., 2013b), (Kosmalla et al., 2017b).

4.3 Conclusions

Due to the unavailability of reliable skeleton data,

the majority of motion analysis approaches relied on

body representations, such as the hip centre or the


CoM, or on combinations of existing HPE algorithms

and external sensors such as body-worn motion sen-

sors or force sensors integrated into the wall. Mea-

sured parameters focus on entropy, velocity, acceler-

ation and the distance to the wall to draw conclusions

about fluency, agility, force, energy and the detection

of dynos. Other approaches are related to teaching

and the simulation of climbing behaviour for climb-

ing prediction and route creation.

5 SUMMARY AND OUTLOOK

Taken together, real-time marker-less, vision-based motion capture for climbing motions is far from being solved and requires further research activities. The availability of joint positions would be a great benefit for a more detailed and precise climbing analysis. So far, there are still open questions related to the comparison of a novice's climbing style with the technique of an experienced climber in order to provide feedback for effective climbing. Moreover, none of the available studies was dedicated to the detection of typical motion errors in terms of technique. Future climbing applications could work completely by means of a camera and without additional information, such as marker positions or wearable sensors. The applicability to the improvement of teaching, the analysis of athletic performance and the contributions to the health sector and the entertainment industry suggest an even greater growth in bouldering research.

ACKNOWLEDGEMENTS

This publication is funded by the European Social

Fund (ESF).

REFERENCES

Adidas (2019). Climbing with VR. https://www.youtube.com/watch?v=-1yhQF-rwi4. Accessed: 2019-09-10.

Aladdin, R. and Kry, P. (2012). Static pose reconstruction with an instrumented bouldering wall. In Proceedings of the 18th ACM symposium on Virtual reality software and technology, pages 177–184. ACM.

Bernstädt, W., Kittel, R., and Luther, S. (2007). Therapeutisches Klettern. Georg Thieme Verlag.

Buechter, R. B. and Fechtelpeter, D. (2011). Climbing for preventing and treating health problems: a systematic review of randomized controlled trials. GMS German Medical Science, 9.

Cao, Z., Hidalgo, G., Simon, T., Wei, S.-E., and Sheikh, Y. (2018). OpenPose: realtime multi-person 2D pose estimation using Part Affinity Fields. In arXiv preprint arXiv:1812.08008.

Cha, K., Lee, E.-Y., Heo, M.-H., Shin, K.-C., Son, J., and Kim, D. (2015). Analysis of Climbing Postures and Movements in Sport Climbing for Realistic 3D Climbing Animations. Procedia Engineering, 112:52–57.

Cordier, P., France, M. M., Bolon, P., and Pailhous, J. (1994a). Thermodynamic study of motor behaviour optimization. Acta Biotheoretica, 42(2-3):187–201.

Cordier, P., Mendès F., M., Bolon, P., and Pailhous, J. (1994b). Thermodynamic Study of Motor Behaviour Optimization. In Acta Biotheoretica, volume 42, pages 187–201, Netherlands. Kluwer Academic Publishers.

Daiber, F., Kosmalla, F., and Krüger, A. (2013a). BouldAR – Using Augmented Reality to Support Collaborative Boulder Training. In CHI '13 Extended Abstracts on Human Factors in Computing Systems, pages 949–954, New York, NY, USA.

Daiber, F., Kosmalla, F., and Krüger, A. (2013b). Bouldar: using augmented reality to support collaborative boulder training. In CHI'13 Extended Abstracts on Human Factors in Computing Systems, pages 949–954. ACM.

Ebert, A., Schmid, K., Marouane, C., and Linnhoff-Popien, C. (2017). Automated recognition and difficulty assessment of boulder routes. In International Conference on IoT Technologies for HealthCare, pages 62–68. Springer.

Haque, A., Peng, B., Luo, Z., Alahi, A., Yeung, S., and Fei-Fei, L. (2016). Towards viewpoint invariant 3d human pose estimation. In European Conference on Computer Vision (ECCV).

iROZHLAS (2019). Adam Ondra hung with sensors. https://www.irozhlas.cz/sport/ostatni-sporty/czech-climber-adam-ondra-climbing-data-sensors_1809140930_jab. Accessed: 2019-09-10.

ITOP (2019). ITOP homepage. https://www.alberthaque.com/projects/viewpoint_3d_pose/. Accessed: 2019-09-10.

Kajastila, R. and Hämäläinen, P. (2014). Augmented climbing: interacting with projected graphics on a climbing wall. In Proceedings of the extended abstracts of the 32nd annual ACM conference on Human factors in computing systems, pages 1279–1284. ACM.

Kajastila, R., Holsti, L., and Hämäläinen, P. (2016). The augmented climbing wall: high-exertion proximity interaction on a wall-sized interactive surface. In Proceedings of the 2016 CHI conference on human factors in computing systems, pages 758–769. ACM.

Kim, J., Chung, D., and Ko, I. (2017). A climbing motion recognition method using anatomical information for screen climbing games. Human-centric Computing and Information Sciences, 7(1):25.

Kosmalla, F., Daiber, F., and Krüger, A. (2015). Climbsense: Automatic climbing route recognition using wrist-worn inertia measurement units. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, pages 2033–2042. ACM.


Kosmalla, F., Daiber, F., Wiehr, F., and Kr

¨

uger, A. (2017a).

ClimbVis - Investigating In-situ Visualizations for Un-

derstanding Climbing Movements by Demonstration.

In Interactive Surfaces and Spaces - ISS ’17, pages

270–279, Brighton, United Kingdom. ACM Press.

Kosmalla, F., Wiehr, F., Daiber, F., Kr

¨

uger, A., and

L

¨

ochtefeld, M. (2016). Climbaware: Investigating

perception and acceptance of wearables in rock climb-

ing. In Proceedings of the 2016 CHI Conference on

Human Factors in Computing Systems, pages 1097–

1108. ACM.

Kosmalla, F., Zenner, A., Speicher, M., Daiber, F., Herbig,

N., and Kr

¨

uger, A. (2017b). Exploring rock climb-

ing in mixed reality environments. In Proceedings

of the 2017 CHI Conference Extended Abstracts on

Human Factors in Computing Systems, pages 1787–

1793. ACM.

Luttenberger, K., Stelzer, E.-M., Först, S., Schopper, M., Kornhuber, J., and Book, S. (2015). Indoor rock climbing (bouldering) as a new treatment for depression: study design of a waitlist-controlled randomized group pilot study and the first results. BMC psychiatry, 15(1):201.

Marín, M., Romero, F., Muñoz, R., and Medina, R. (2018). 3D human pose estimation from depth maps using a deep combination of poses. Journal of Visual Communication and Image Representation, 55:627–639.

Mermier, C. M., Janot, J. M., Parker, D. L., and Swan, J. G. (2000). Physiological and anthropometric determinants of sport climbing performance. British Journal of Sports Medicine, 34(5):359–366.

Microsoft HPE (2019). Microsoft HPE homepage. https://github.com/microsoft/human-pose-estimation.pytorch. Accessed: 2019-09-10.

Naderi, K., Rajamäki, J., and Hämäläinen, P. (2017). Discovering and synthesizing humanoid climbing movements. ACM Transactions on Graphics, 36(4):43:1–11.

Nuitrack (2019). Nuitrack homepage. https://nuitrack.com/. Accessed: 2019-09-10.

OpenPose (2019). OpenPose homepage. https://github.com/CMU-Perceptual-Computing-Lab/openpose. Accessed: 2019-09-10.

Ouchi, H., Nishida, Y., Kim, I., Motomura, Y., and Mizoguchi, H. (2010). Detecting and modeling play behavior using sensor-embedded rock-climbing equipment. In 9th International Conference on Interaction Design and Children - IDC ’10, page 118, New York, NY, USA. ACM Press.

Pandurevic, D., Sutor, A., and Hochradel, K. (2018). Methods for quantitative evaluation of force and technique in competitive sport climbing.

Parsons, C. P., Parsons, I. C., and Parsons, N. H. (2014). Interactive climbing wall system using touch sensitive, illuminating, climbing hold bolts and controller. US Patent 8,808,145.

Pfeil, J., Mitani, J., and Igarashi, T. (2011). Interactive climbing route design using a simulated virtual climber. In SIGGRAPH Asia 2011 Sketches on - SA ’11, page 1, New York, NY, USA. ACM Press.

Quaine, F. and Martin, L. (1999). A biomechanical study of equilibrium in sport rock climbing. Gait & Posture, 10(3):233–239.

Quaine, F., Martin, L., and Blanchi, J. (1997a). Effect of a leg movement on the organisation of the forces at the holds in a climbing position 3-d kinetic analysis. Human Movement Science, 16(2-3):337–346.

Quaine, F., Martin, L., and Blanchi, J.-P. (1997b). The effect of body position and number of supports on wall reaction forces in rock climbing. Journal of Applied Biomechanics, 13(1):14–23.

Reveret, L., Chapelle, S., Quaine, F., and Legreneur, P. (2018). 3D Motion Analysis of Speed Climbing Performance. 4th International Rock Climbing Research Association (IRCRA) Congress, pages 1–5.

RGB-D pose 3-D (2019). RGB-D pose 3-D homepage. https://github.com/lmb-freiburg/rgbd-pose3d. Accessed: 2019-09-10.

Shafaei, A. and Little, J. J. (2016). Real-time human motion capture with multiple depth cameras. In Proceedings of the 13th Conference on Computer and Robot Vision. Canadian Image Processing and Pattern Recognition Society (CIPPRS).

Sibella, F., Frosio, I., Schena, F., and Borghese, N. (2007). 3D analysis of the body center of mass in rock climbing. Human Movement Science, 26(6):841–852.

Siegel, S. R. and Fryer, S. M. (2017). Rock climbing for promoting physical activity in youth. American journal of lifestyle medicine, 11(3):243–251.

Steimer, J. and Weissert, R. (2017). Effects of sport climbing on multiple sclerosis. Frontiers in physiology, 8:1021.

UBC3V (2019). UBC3V homepage. https://github.com/ashafaei/ubc3v. Accessed: 2019-09-10.

Vähämäki, J. (2016). Real-time climbing pose estimation using a depth sensor. Master’s thesis, Degree Programme in Computer Science and Engineering, Aalto University, Finland.

Weber, F. (2014). Therapeutisches Klettern für Kinder mit ADHS: visuelle Wahrnehmung und sensorische Integration. Diplomica Verlag.

Wiehr, F., Kosmalla, F., Daiber, F., and Krüger, A. (2016). betacube: Enhancing training for climbing by a self-calibrating camera-projection unit. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, pages 1998–2004. ACM.

Xiao, B., Wu, H., and Wei, Y. (2018). Simple baselines for human pose estimation and tracking. In European Conference on Computer Vision (ECCV).

Zimmermann, C., Welschehold, T., Dornhege, C., Burgard, W., and Brox, T. (2018). 3d human pose estimation in rgbd images for robotic task learning. In IEEE International Conference on Robotics and Automation (ICRA).

VISAPP 2020 - 15th International Conference on Computer Vision Theory and Applications
