Face Smile Detection and Cavernous Biometric Prediction using Perceptual User Interfaces (PUIs)

Hayder Ansaf^{1,a}, Sajjad Hussain^2, Hayder Najm^{1,b} and Oday A. Hassen^3

^1 Imam Al-Kadhum College (IKC), Wasit, Iraq

^2 Xi’an Jiaotong University, School of Software Engineering, Xi’an, China

^3 University Technical Malaysia Melaka, Hang Tuah Jaya, Melaka 76100, Malaysia

Keywords: Perceptual User Interface, PUI, Smile Detection, Smile Analytics, Face Recognition.

Abstract: Face identification and biometric analytics form a modern field of study with an enormous number of algorithms. Perceptual interfaces can be described as highly immersive, multimodal interfaces modeled on natural human-to-human interaction, with the purpose of allowing users to communicate with software in a way similar to how they communicate with one another and with the physical environment. Such interfaces are nowadays quite effective in face smile detection. Smile detection is a two-stage process: first a face is detected, and then the system waits for a smile. A motion detector splits the clip into thousands of zones, analyzing criteria such as autofocus and facial flash level. When a person smiles, the camera identifies the change in the face by measuring various parameters, such as narrowing of the eyes, visible teeth, a curved mouth and raised lips. The camera parameters can be adjusted to increase the sensitivity of the automatic smile feature. Smile recognition is more successful when subjects do not cover their faces, especially their eyes (for example with bangs); helmets, masks or sunglasses can also obstruct detection. Subjects should give a wide, open-mouthed smile: when the teeth are visible, the camera detects the smile even better. The presented work focuses on face smile analytics through Perceptual User Interfaces in biometric analytics for cumulative results.

1 INTRODUCTION

Graphical user interfaces (GUIs) have long been the dominant medium for human-computer interaction. The GUI style has refined the usage of devices and promoted the use of computers, particularly for business software in which machines are used to perform specific tasks. However, as the way we use machines changes and computing becomes more widespread, GUIs alone do not directly provide the interfaces needed to fulfil our users' needs.

Distinguishing real from false emotion on the human face appears to be one of the toughest tasks for the brain. The human visual system has a remarkable capacity to distinguish a person's genuine smile from a false one; nevertheless, the brain still fails to tell them apart clearly in many cases. How, then, can a computer vision system distinguish between real and false feelings? To date, there is no satisfactory answer to such questions. Nevertheless, quite a few computational techniques have been proposed that solve such difficult problems to some degree. To make the distinction tractable, the well-known 19th-century French physician Guillaume Duchenne framed the primary challenge of differentiating genuine and false smiles based on the muscles involved in producing facial expressions (BRASOV, 2018). This research focuses on face smile analysis for biometric analytics with PUIs, aiming at cumulative results.

a https://orcid.org/0000-0002-1339-1616

b https://orcid.org/0000-0001-9722-4542

2 PROPOSED SYSTEM

2.1 Perceptual User Interface (PUI) and Biometric Traits

A perceptual interface enables a user to communicate with a device without relying on the usual desktop computer setup. Such an interface is realized by allowing the device to recognize user gestures or voice commands.

Table 1: The PUI has many components and modules in which a number of segments are analyzed.

| | Conceptual User Interface | Perceptual User Interface |
|---|---|---|
| System key functions for interaction and response | Manipulation by the user | Response of the system similar to a human; human-to-human-like intelligent interface |

2.2 Head Orientation

For many individuals with impairments, computers are an important tool for communication, environmental control, schooling, and entertainment. Even so, a person's impairment can make using the computer harder. Our aim is to provide full cursor control by tracking three non-collinear facial features, e.g., the two eyes and the nose. Since we know the relative positions of these features when the user looks straight at the screen, we can estimate changes in head orientation from their current locations. This change in direction can then be used to guide the cursor.
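To illustrate how three non-collinear points can yield an orientation estimate, the Python sketch below measures the nose offset from the eye midpoint along and perpendicular to the eye line, normalized by the inter-ocular distance, and maps the change from a straight-ahead reference pose to a cursor step. This is a minimal sketch under stated assumptions, not the method used in this work: the function names `head_pose_proxy` and `cursor_delta`, the (x, y) pixel point format and the gain of 400 are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in image pixels, y pointing down


def head_pose_proxy(left_eye: Point, right_eye: Point, nose: Point) -> Tuple[float, float, float]:
    """Return (roll_deg, horizontal, vertical) from three non-collinear facial points.

    horizontal/vertical are the nose offset from the eye midpoint, measured along
    and perpendicular to the eye line and divided by the inter-ocular distance,
    so they do not change when the user moves closer to or further from the camera.
    """
    ex, ey = right_eye[0] - left_eye[0], right_eye[1] - left_eye[1]
    iod = math.hypot(ex, ey)  # inter-ocular distance
    if iod == 0:
        raise ValueError("eye points must be distinct")
    ux, uy = ex / iod, ey / iod  # unit vector along the eye line
    mx, my = (left_eye[0] + right_eye[0]) / 2, (left_eye[1] + right_eye[1]) / 2
    dx, dy = nose[0] - mx, nose[1] - my
    roll = math.degrees(math.atan2(ey, ex))
    horizontal = (dx * ux + dy * uy) / iod  # component along the eye line (turning left/right)
    vertical = (-dx * uy + dy * ux) / iod   # component perpendicular to it (looking up/down)
    return roll, horizontal, vertical


def cursor_delta(reference, current, gain: float = 400.0) -> Tuple[float, float]:
    """Map the change from the straight-ahead reference pose to a cursor step in pixels."""
    _, h0, v0 = head_pose_proxy(*reference)
    _, h1, v1 = head_pose_proxy(*current)
    return gain * (h1 - h0), gain * (v1 - v0)
```

For example, `cursor_delta(((100, 100), (140, 100), (120, 130)), ((110, 100), (150, 100), (134, 130)))` corresponds to the nose shifting 4 pixels to the right of the eye midpoint and moves the cursor about 40 pixels to the right. Normalizing by the eye distance means that a pure sideways translation of the head does not move the cursor; only a change in orientation does.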

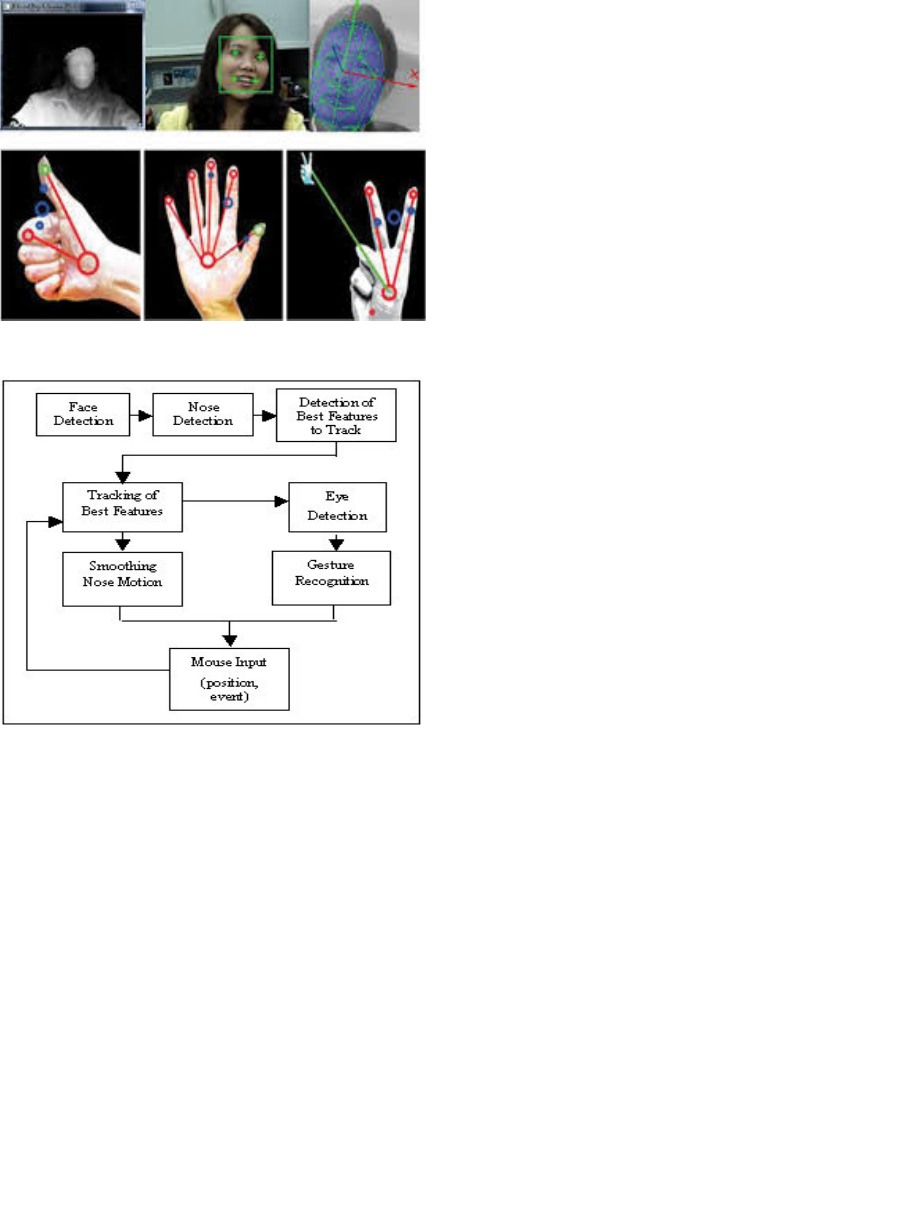

2.3 Gesture Input

In this section, we examine ways of steering the cursor through head motion, primarily by tracking the nose: as the user looks upwards, the nose moves upwards and the cursor follows.

To do this, we must find the nostrils. This is made possible by first finding the face region and then two nearby dark areas within it. The face itself is found by locating skin-colored pixels.
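The "two nearby dark areas" step can be sketched as thresholding dark pixels in the lower-middle part of a grayscale face crop and keeping the two largest connected dark regions. The Python sketch below is illustrative only: the function name `find_nostrils`, the search window (middle third horizontally, 50 to 85 percent of the face height), the darkness threshold of 50 and the minimum blob area are assumptions, and the flood fill is written out by hand so that only NumPy is required.

```python
from collections import deque
from typing import List, Optional, Tuple

import numpy as np


def find_nostrils(face_gray: np.ndarray, dark_thresh: int = 50,
                  min_area: int = 4) -> Optional[List[Tuple[float, float]]]:
    """Return the (x, y) centroids of the two largest dark blobs, left to right, or None."""
    h, w = face_gray.shape
    # Assumed search window: middle third horizontally, 50-85% of the face height.
    y0, y1, x0, x1 = int(0.5 * h), int(0.85 * h), w // 3, 2 * w // 3
    dark = face_gray[y0:y1, x0:x1] < dark_thresh
    seen = np.zeros(dark.shape, dtype=bool)
    blobs = []  # (area, cx, cy) in full-face coordinates
    for sy, sx in zip(*np.nonzero(dark)):
        if seen[sy, sx]:
            continue
        # Breadth-first flood fill collects one 4-connected dark region.
        queue, pts = deque([(sy, sx)]), []
        seen[sy, sx] = True
        while queue:
            y, x = queue.popleft()
            pts.append((y, x))
            for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                if (0 <= ny < dark.shape[0] and 0 <= nx < dark.shape[1]
                        and dark[ny, nx] and not seen[ny, nx]):
                    seen[ny, nx] = True
                    queue.append((ny, nx))
        if len(pts) >= min_area:
            cy = y0 + sum(p[0] for p in pts) / len(pts)
            cx = x0 + sum(p[1] for p in pts) / len(pts)
            blobs.append((len(pts), float(cx), float(cy)))
    if len(blobs) < 2:
        return None
    blobs.sort(reverse=True)  # largest area first
    return sorted((cx, cy) for _, cx, cy in blobs[:2])
```

The average of the two returned centroids gives a single nose point that can be tracked from frame to frame.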

Face color is determined by the ambient illumination and by skin pigmentation. Fortunately, the variation in skin color caused by differences in pigmentation is relatively small, so if we can compensate for the changes caused by differences in lighting, we should be able to identify candidate skin pixels.

Several color normalization approaches have been proposed. The simplest is to normalize the red, green, and blue components by their sum. A much more complicated approach, but one that may provide better results, is to use the log-opponent representation described by Fleck and Forsyth. We preferred normalized red and green because this is computationally cheaper.
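A minimal sketch of normalized red/green chromaticity, r = R/(R+G+B) and g = G/(R+G+B), followed by a box test for candidate skin pixels, is shown below. The box limits are illustrative placeholders rather than calibrated values, and the input is assumed to be in RGB order (an OpenCV frame is BGR, so it would first be reversed with `frame[..., ::-1]`). Note that this normalization removes changes in overall brightness but not changes in the color of the light.

```python
import numpy as np


def normalized_rg(rgb: np.ndarray) -> np.ndarray:
    """Map an HxWx3 RGB image to chromaticity (r, g) = (R, G) / (R + G + B)."""
    rgb = rgb.astype(np.float64)
    total = rgb.sum(axis=2, keepdims=True)
    total[total == 0] = 1.0  # black pixels: avoid dividing by zero
    return (rgb / total)[..., :2]


def skin_mask(rgb: np.ndarray, r_range=(0.36, 0.47), g_range=(0.28, 0.35)) -> np.ndarray:
    """Boolean mask of candidate skin pixels; the (r, g) box limits are illustrative, not calibrated."""
    rg = normalized_rg(rgb)
    r, g = rg[..., 0], rg[..., 1]
    return (r >= r_range[0]) & (r <= r_range[1]) & (g >= g_range[0]) & (g <= g_range[1])
```

A skin-toned pixel such as (200, 150, 120) and the same pixel at half brightness, (100, 75, 60), map to the same (r, g) point, which is why only two channels need to be compared.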

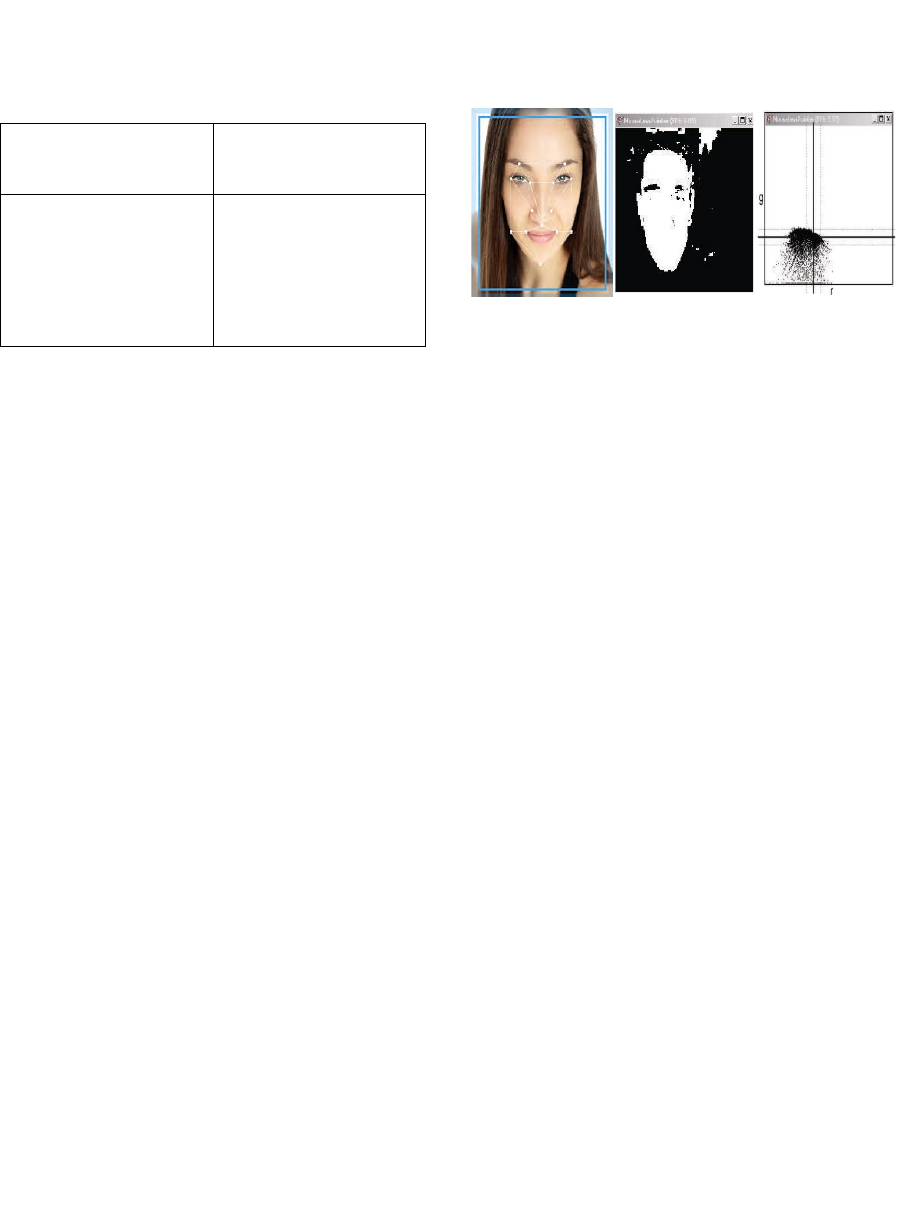

The following images show intermediate results of the analysis.

Figure 1: Analytics of Face Smile for Evaluations.

The final image shows a bounding box drawn on the input image, together with two crosses that indicate where the nostrils are located. The average of the two nostril positions is tracked and used to drive the cursor. With modest hardware, we were able to achieve acceptable frame rates. In the next stages of this research, this tracker will be integrated into a fully functional system.
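Driving the cursor from the averaged nostril position could look like the sketch below: the first frame is taken as the neutral pose, the midpoint is exponentially smoothed to suppress jitter, and the displacement from the neutral pose is scaled and clamped to the screen. The class name `NoseCursor`, the gain and the smoothing weight are hypothetical choices for illustration; whether a rightward nose shift should move the cursor right also depends on whether the camera image is mirrored.

```python
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


class NoseCursor:
    """Hypothetical helper: drive a screen cursor from the midpoint of two nostril detections."""

    def __init__(self, screen_w: int, screen_h: int, gain: float = 8.0, alpha: float = 0.5):
        self.screen_w, self.screen_h = screen_w, screen_h
        self.gain = gain    # screen pixels per image pixel of nose motion
        self.alpha = alpha  # exponential smoothing weight of the newest frame
        self.ref: Optional[Point] = None     # neutral midpoint (user looking straight ahead)
        self.smooth: Optional[Point] = None  # smoothed midpoint

    def update(self, nostrils: Sequence[Point]) -> Point:
        (x1, y1), (x2, y2) = nostrils
        mid = ((x1 + x2) / 2, (y1 + y2) / 2)  # average nostril position
        if self.ref is None:
            self.ref = self.smooth = mid  # first frame calibrates the neutral pose
        else:
            a = self.alpha
            self.smooth = (a * mid[0] + (1 - a) * self.smooth[0],
                           a * mid[1] + (1 - a) * self.smooth[1])
        cx = self.screen_w / 2 + self.gain * (self.smooth[0] - self.ref[0])
        cy = self.screen_h / 2 + self.gain * (self.smooth[1] - self.ref[1])
        # Keep the cursor on screen.
        return (min(max(cx, 0.0), self.screen_w - 1.0), min(max(cy, 0.0), self.screen_h - 1.0))
```

Because image y grows downwards, raising the head moves the nose up in the image and the cursor up on the screen, matching the behavior described above.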

2.4 Gesture Analytics

We want a set of gestures that can replace the mouse. To work with applications without a mouse, we specifically want to support pointing, clicking, and point-and-drag operations. This requires finding and tracking the fingertip across a sequence of images and then inferring what the user intends. Intermediate results are displayed while the details are analyzed. This task requires evaluating the gestures the user performs.
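One simple way to infer the user's intent from a fingertip track is a dwell rule: holding the fingertip still for a few frames counts as a click, and moving away after such a dwell counts as a drag. The Python sketch below implements this rule; it is an illustrative assumption, not the gesture recognizer of this work, and the dwell length of 5 frames and radius of 3 pixels are arbitrary.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def classify_gesture(track: List[Point], dwell_frames: int = 5, radius: float = 3.0) -> str:
    """Label a fingertip trajectory as 'point', 'click' or 'drag' with a simple dwell rule.

    A dwell is `dwell_frames` consecutive positions that stay within `radius` pixels
    of the first one. Dwell then rest -> 'click'; dwell then move -> 'drag';
    no dwell at all -> 'point' (the fingertip is only steering the cursor).
    """
    def within(p: Point, q: Point) -> bool:
        return math.hypot(p[0] - q[0], p[1] - q[1]) <= radius

    for i in range(len(track) - dwell_frames + 1):
        anchor = track[i]
        if all(within(p, anchor) for p in track[i:i + dwell_frames]):
            rest = track[i + dwell_frames:]
            return "drag" if any(not within(p, anchor) for p in rest) else "click"
    return "point"
```

For instance, a fingertip that stays at one spot for five frames and then moves 45 pixels is labeled `"drag"`, while a track that never pauses is labeled `"point"`.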

2.5 Analysis Patterns for Face Smile and Biometric using PUI

We must move to natural, automatic, responsive and unobtrusive interfaces that can respond to a wider range of situations, tasks, users and preferences. A recent HCI focus, Perceptual User Interfaces (PUIs), aims to make interactions between people and devices more like the interactions between people and with their environment (El Haddad, 2016).

This section discusses the evolving PUI field and concentrates on the PUI segment of computer-vision-based techniques that perceive relevant user information visually (Vyshagh and Vishnu).

Figure 2: Key Segments in PUIs.

Figure 3: Integration of Face Detection with Assorted Segments.

Graphical user interfaces (GUIs) have been the main tool for human-computer interaction. The GUI-based interaction style streamlined and simplified computer use, especially for business software in which computers are used for particular tasks (Phung, 2017). However, as computation advances and computing becomes more ubiquitous, GUIs cannot comfortably support the diversity of interactions needed to meet users' needs. We need to move to natural, automated, versatile and unobtrusive interfaces that accommodate a greater range of situations, tasks, users and interests (Au, 2020). The goal of perceptual user interfaces (PUIs), a direction within HCI, is to make human-computer interaction more analogous to the way humans communicate with one another and with the world. This article describes the emerging PUI field and focuses on three main PUI tasks, in particular computer-vision-based strategies for user awareness (Song, 2018).

There is no Moore's law for user interfaces. Communication between humans and computers has not changed dramatically for nearly two decades: most users still interact with their computers by typing, pointing, and clicking. Most HCI work in previous decades aimed to build interfaces that users can directly observe and control (Rizzo, 2016). These properties give users a basic model of which commands and actions are possible and what their effects may be; they allow users to stay aware of, and in control of, their interaction with software.

Although these efforts were widespread and the WIMP (windows, icons, menus, pointer) paradigm provided a reliable, universal interface, this paradigm clearly will not suit the different machine form factors and uses of the future. Computers are becoming smaller and more common, and their presence in our daily lives is becoming increasingly significant. At the same time, large displays are becoming more popular, and we are beginning to see a convergence of computers and television (El Haddad). It is very important to be able to interact with technology in more natural ways in all situations. Soon, most people will interact with many computing devices in ways other than pointing, selecting and typing, although these remain useful for many computer applications (Najm, 2019).

What we need are interaction approaches that are well matched to how humans use computers, across everything from lightweight, portable appliances to powerful machines installed in homes, factories and automobiles. Is there a common basis for such complex future HCI requirements? We assume that there is, and that it lies in the way people interact with one another and with the natural world. PUIs are defined by interaction techniques that incorporate an awareness of natural human abilities (communication, motor, cognitive and perceptual skills). By building on the ways in which people communicate with each other and with the environment, the user interface becomes more natural and compelling. Sensors should be transparent and passive, and computers have to interpret the relevant human communication channels and produce naturally understood output. This requires integrating technologies at various levels, including speech and sound recognition and generation, computer vision, graphics, simulation, language understanding, touch-based sensing and feedback (haptics), device modelling, and dialogue (De Oliveira, 2018; Oday A., 2017).

The figure below illustrates how PUI draws on research in various fields. Although the figure shows the flow of information within a traditional computer form factor, PUI is also intended for new form factors.

A perceptive user interface gives the device human-like sensing skills, such as making the computer aware of the user's speech or of the user's face, body and hands. Some interfaces use the computer as an input channel during communication between people (Taskirar, 2019).

Multimodal user interfaces (MUIs) are closely linked to human communication skills. When we engage in face-to-face interaction, we use several modalities, which results in better communication. Much of the MUI work has concentrated on device inputs (for instance, speech combined with pen-based gestures). Multimodal output uses multiple channels, such as visual presentation, audio and tactile feedback, to convey information that people perceive through their sensory, cognitive and communication abilities. In multimodal user interfaces, the various modalities are sometimes used separately, sometimes concurrently, and sometimes in a closely coupled way (Oday A., 2017; Ryu, 2017).
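One common way to combine modalities that may be available separately or concurrently is weighted late fusion: each modality produces its own class scores, and the scores of whichever modalities are present are averaged with fixed weights. The Python sketch below shows this generic scheme; it is not taken from the cited works, and the modality names, labels and weights are hypothetical.

```python
from typing import Dict, Optional


def late_fusion(scores: Dict[str, Optional[Dict[str, float]]],
                weights: Dict[str, float]) -> Dict[str, float]:
    """Weighted average of per-modality class scores; unavailable modalities (None) are skipped."""
    fused: Dict[str, float] = {}
    total = 0.0
    for modality, probs in scores.items():
        if probs is None:  # e.g. the sensor for this modality produced nothing this frame
            continue
        w = weights.get(modality, 0.0)
        total += w
        for label, p in probs.items():
            fused[label] = fused.get(label, 0.0) + w * p
    if total == 0:
        raise ValueError("no usable modality")
    # Renormalize by the weight of the modalities that actually contributed.
    return {label: v / total for label, v in fused.items()}
```

With vision scores {smile: 0.8, neutral: 0.2} weighted 0.75, audio scores {smile: 0.4, neutral: 0.6} weighted 0.25 and a missing touch channel, the fused result is roughly {smile: 0.7, neutral: 0.3}.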

Multimedia UI, which has been studied extensively over the past two decades, relies on human perceptual abilities to convey information to the user. Typical media are text, graphics, audio and video. Multimedia research focuses on the media, whereas multimodal research focuses on the human sensory channels. From that angle, multimedia research is a branch of multimodal output research.

PUI brings perceptive, multimodal and multimedia interfaces together to develop more natural, responsive interfaces. PUIs can improve the use of computers as tools or appliances, directly enhancing GUI-based software, for example by taking gestures, speech and eye gaze into account ('No, that'). Perhaps more significantly, these emerging developments would allow computers to be widely used as assistants or agents that communicate in more human-like ways. Perceptual interfaces would enable various input modes, such as speech alone, speech and gesture, text and touch, or vision and synthetic speech, any of which could be suitable in different situations, be it web applications, hands-free phones or embedded household systems (Ugail, 2019; Ansaf, 2019; Azez, 2018).

Pentland advocates perceptual intelligence as essential to interfacing with future generations of machines; he identifies two classes of responsive, sensor-based environments and the technologies expected to support them. Recent research addresses computer-based sensing and interpretation of human behavior, in particular in vision. This work offers a broad view of the field and describes two initiatives that use visual perception to improve graphical interfaces. Reeves and Nass discuss the need for a deeper understanding of human cognition and psychology in technology interaction, and their studies concentrate on human beings. Further work addresses specific Perceptual User Interface domains, namely haptics and computational effects (Hassen, 2019).

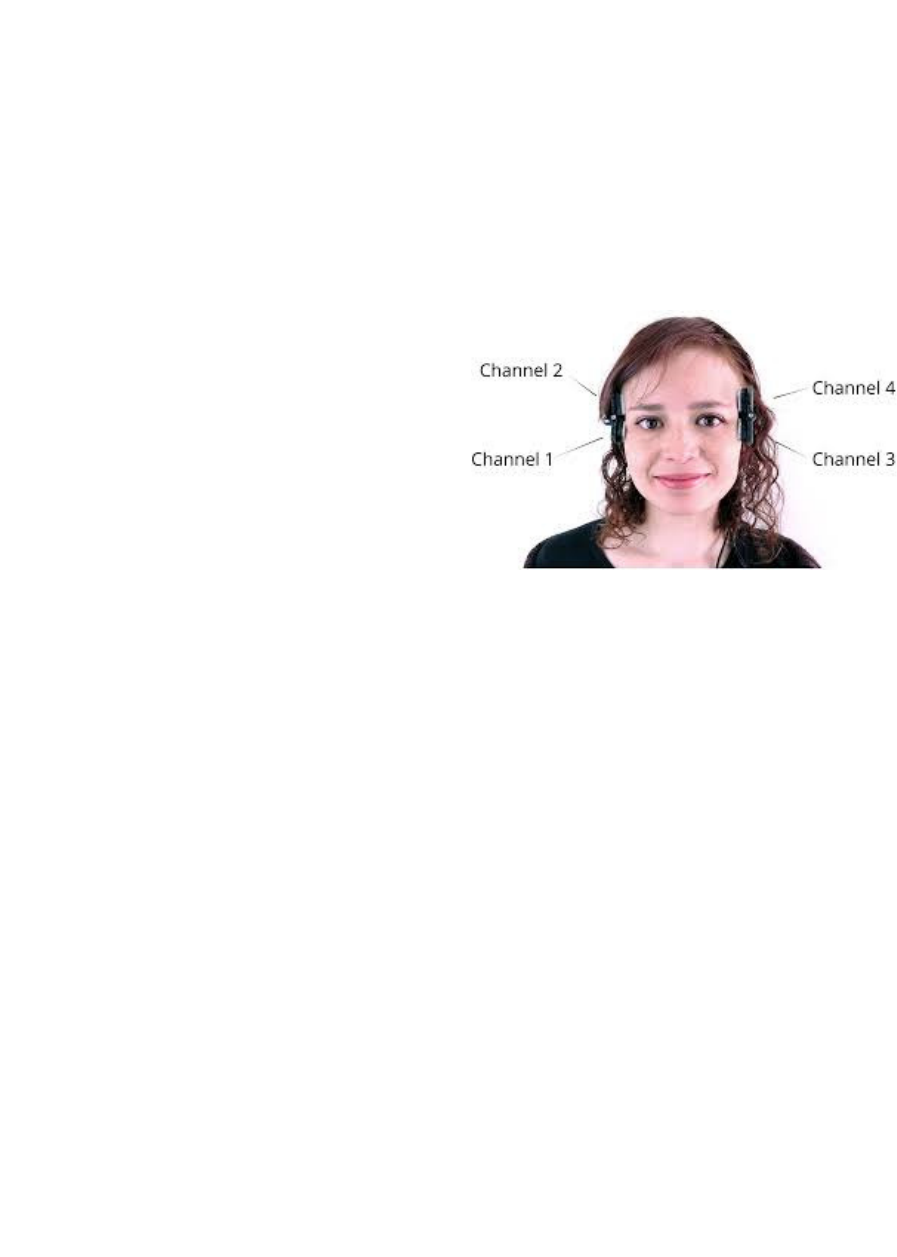

Figure 4: Channel based Face Analytics.

A facial recognition system is a technology able to recognize or verify an individual from a digital image or a video source. Although such systems work in different ways, in general they compare selected facial features from a given image with faces stored in a database. Different facial recognition technologies exist. Such a program, which can recognize an individual by analyzing patterns based on facial structure and form, is also described as biometric artificial intelligence.
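The database comparison described above can be sketched as nearest-neighbor matching of feature vectors with cosine similarity: identification (1:N) returns the closest enrolled identity if it is similar enough, and verification (1:1) checks a claimed identity against a single template. How the feature vectors are extracted is not specified here; the vectors, the names `identify` and `verify`, and the threshold of 0.6 are assumptions for illustration.

```python
from typing import Dict, Optional, Tuple

import numpy as np


def _unit(v: np.ndarray) -> np.ndarray:
    return v / np.linalg.norm(v)


def identify(query: np.ndarray, gallery: Dict[str, np.ndarray],
             threshold: float = 0.6) -> Tuple[Optional[str], float]:
    """1:N identification: return the best-matching enrolled name, or None if below threshold."""
    q = _unit(query)
    best_name, best_sim = None, -1.0
    for name, feat in gallery.items():
        sim = float(np.dot(q, _unit(feat)))  # cosine similarity
        if sim > best_sim:
            best_name, best_sim = name, sim
    return (best_name, best_sim) if best_sim >= threshold else (None, best_sim)


def verify(query: np.ndarray, enrolled: np.ndarray, threshold: float = 0.6) -> bool:
    """1:1 verification: accept the claimed identity if the similarity reaches the threshold."""
    return float(np.dot(_unit(query), _unit(enrolled))) >= threshold
```

Raising the threshold trades more false rejections for fewer false acceptances, which is the usual tuning decision in access-control settings.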

Although it began as a computer program, facial recognition has recently seen broader use on mobile platforms and in other technologies, including robotics. It is currently used in access-control authentication systems and can be compared with other biometrics, such as fingerprint or iris recognition systems (Ugail, 2019; Hassen, 2017).

3 RESULTS AND DISCUSSION

Expression detectors are used in several industries, such as news media and advertising; in advertising, it is essential for businesses to evaluate the market response to their products. Here we create an OpenCV smile detector that processes a webcam feed. Our smile/happiness detector can be built in a few simple steps.

Step 1: First, we import the OpenCV library.

import cv2

Step 2: Load the Haar cascades.

Haar cascades are classifiers used to detect features (in this case faces) by superimposing predefined patterns over facial regions; the trained classifiers are stored as XML files. In our code, we use the frontal-face, eye and smile Haar cascades, which must be placed in the working directory after installation.

The required Haar cascades were found here.

face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')
eye_cascade = cv2.CascadeClassifier('haarcascade_eye.xml')
smile_cascade = cv2.CascadeClassifier('haarcascade_smile.xml')

Step 3: Detect the smile.

At this point, we write the main function that detects the smile. The live stream from the webcam or video device is analyzed frame by frame. Because Haar cascades work more effectively on grayscale images, each frame is converted to grayscale.
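For reference, OpenCV's BGR-to-gray conversion is a weighted sum of the three channels, Y = 0.299 R + 0.587 G + 0.114 B. The NumPy sketch below reproduces this formula for an OpenCV-style BGR frame so the step is explicit; in practice `cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)` is used, and because OpenCV computes in fixed-point arithmetic its result may occasionally differ from this floating-point version by one gray level. The function name `bgr_to_gray` is hypothetical.

```python
import numpy as np


def bgr_to_gray(frame_bgr: np.ndarray) -> np.ndarray:
    """Luma conversion Y = 0.299 R + 0.587 G + 0.114 B for an HxWx3 BGR uint8 image."""
    b, g, r = (frame_bgr[..., i].astype(np.float64) for i in range(3))  # OpenCV stores B, G, R
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return np.clip(np.rint(y), 0, 255).astype(np.uint8)
```

Green contributes most to perceived brightness, so a pure green pixel becomes much lighter (150) than a pure blue one (29).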

We use the following call to detect faces (scale factor 1.3, minimum neighbors 5):

faces = face_cascade.detectMultiScale(gray, 1.3, 5)
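The two numeric arguments control the multi-scale search: the scale factor sets how much the image is shrunk between pyramid levels (equivalently, how much the detection window grows), and the minimum-neighbors value sets how many overlapping candidate detections are required before a face is kept. The sketch below approximates the window sizes visited for a given scale factor, assuming a 24 by 24 base window; it ignores OpenCV's optional `minSize`/`maxSize` limits, and `pyramid_window_sizes` is a hypothetical helper, not an OpenCV function.

```python
def pyramid_window_sizes(img_w: int, img_h: int, win: int = 24, scale_factor: float = 1.3):
    """Approximate detector window sizes (pixels) tried by a multi-scale sliding-window search."""
    sizes = []
    factor = 1.0
    # Keep growing the (equivalent) window until it no longer fits in the image.
    while win * factor <= min(img_w, img_h):
        sizes.append(round(win * factor))
        factor *= scale_factor
    return sizes
```

For a 100 by 100 image this gives windows of 24, 31, 41, 53, 69 and 89 pixels. A larger factor, such as the 1.8 used for smiles below, visits fewer scales and runs faster but can miss objects whose size falls between levels; the high minimum-neighbors value of 20 for smiles compensates by suppressing spurious detections.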

def detect(gray, frame):
    # Detect faces in the grayscale frame (scaleFactor=1.3, minNeighbors=5)
    faces = face_cascade.detectMultiScale(gray, 1.3, 5)
    for (x, y, w, h) in faces:
        # Blue box around each face (OpenCV colors are BGR)
        cv2.rectangle(frame, (x, y), (x + w, y + h), (255, 0, 0), 2)
        # Regions of interest restricted to the face
        roi_gray = gray[y:y + h, x:x + w]
        roi_color = frame[y:y + h, x:x + w]
        # Search for smiles only inside the face (scaleFactor=1.8, minNeighbors=20)
        smiles = smile_cascade.detectMultiScale(roi_gray, 1.8, 20)
        for (sx, sy, sw, sh) in smiles:
            # Red box around each smile; roi_color is a view, so this draws on frame
            cv2.rectangle(roi_color, (sx, sy), (sx + sw, sy + sh), (0, 0, 255), 2)
    return frame


video_capture = cv2.VideoCapture(0)
while True:
    # Capture one frame from the webcam
    ret, frame = video_capture.read()
    if not ret:
        break
    # Convert to a grayscale image for the Haar cascades
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    # Run face and smile detection
    canvas = detect(gray, frame)
    # Show the annotated camera feed
    cv2.imshow('Video', canvas)
    # Stop when 'q' is pressed
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release the capture once testing is finished
video_capture.release()
cv2.destroyAllWindows()
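Smile cascades are known to fire on non-mouth regions such as the eyes, so a simple geometric check can be layered on top of the detector: a smile box (whose coordinates are relative to the face region, as in `detect` above) is accepted only if its center lies in the lower half of the face. The sketch below is an illustrative post-filter that is not part of the listing above; the helper names and the lower-half rule are assumptions.

```python
from typing import Iterable, Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h), as returned by detectMultiScale


def smile_in_lower_face(face: Box, smile: Box) -> bool:
    """True if the smile box (face-ROI coordinates) has its center in the lower half of the face."""
    _, _, fw, fh = face
    sx, sy, sw, sh = smile
    cx, cy = sx + sw / 2, sy + sh / 2
    return 0 <= cx <= fw and fh / 2 <= cy <= fh


def frame_label(face: Box, smiles: Iterable[Box]) -> str:
    """Label a face as smiling only if at least one smile detection passes the geometric check."""
    return "smiling" if any(smile_in_lower_face(face, s) for s in smiles) else "not smiling"
```

Inside the face loop of `detect`, `frame_label((x, y, w, h), smiles)` could be drawn on the frame as text, for example with `cv2.putText`.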

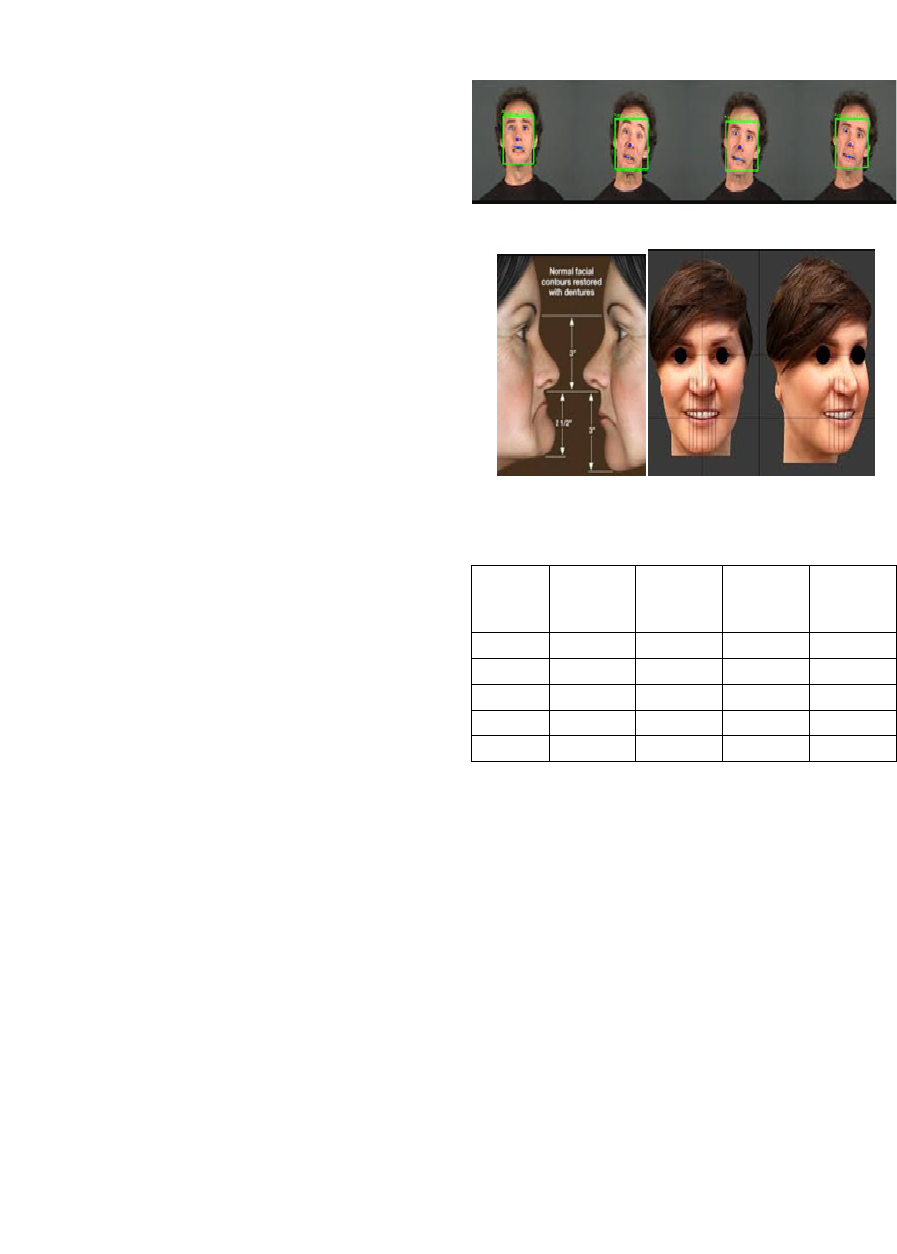

Figure 5: PUI with Face Smile Predictions.

Table 2: Evaluation Analytics.

| Category (Class) Label | Accuracy (%) | Specificity (%) | Precision (%) | Sensitivity (%) |
|---|---|---|---|---|
| 0 | 95 | 69 | 77 | 84 |
| 1 | 93 | 66 | 79 | 85 |
| 2 | 95 | 65 | 81 | 87 |
| 3 | 93 | 69 | 81 | 81 |
| 4 | 97 | 64 | 94 | 79 |

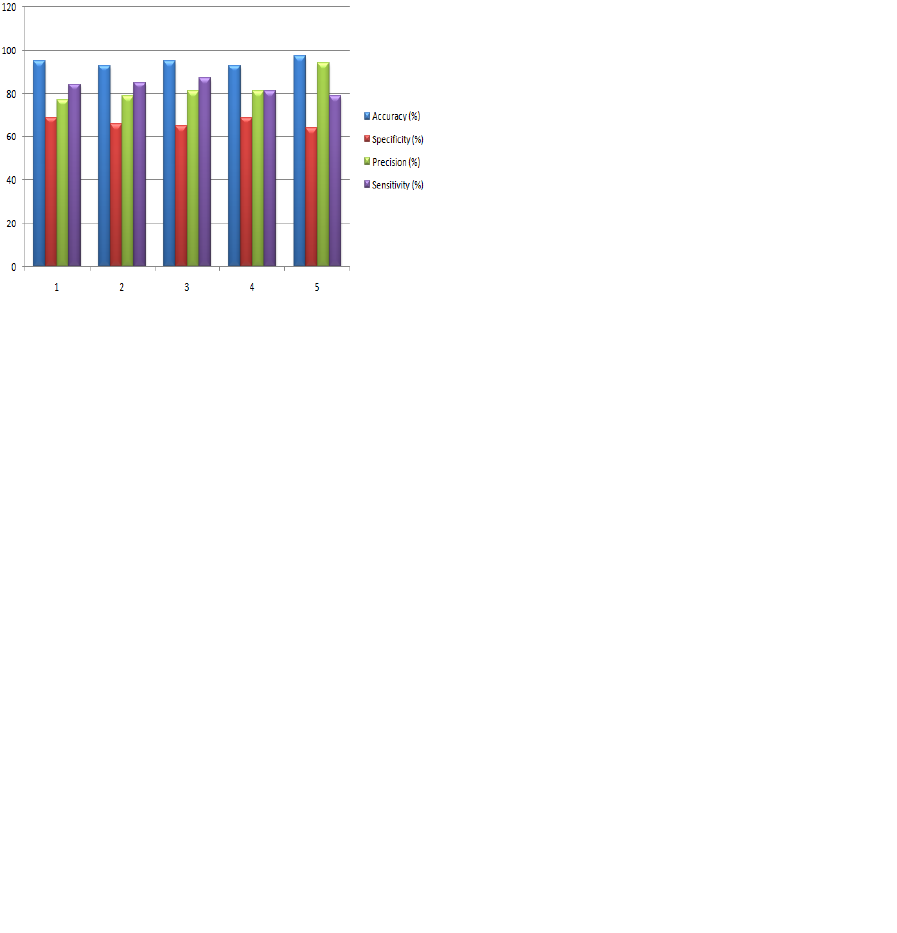

Figure 6 shows the specificity, sensitivity, precision and accuracy for the different categories.

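PP = positive data points correctly identified as positive (true positives)

NF = negative data points falsely identified as positive (false positives)

PF = positive data points falsely identified as negative (false negatives)

NP = negative data points correctly identified as negative (true negatives)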

$$\text{Sensitivity} = \frac{PP}{PP + PF} \tag{1}$$

$$\text{Specificity} = \frac{NP}{NP + NF} \tag{2}$$

$$\text{Precision} = \frac{PP}{PP + NF} \tag{3}$$

$$\text{Accuracy} = \frac{PP + NP}{PP + NP + NF + PF} \tag{4}$$
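For a multi-class problem such as the five categories in Table 2, one common way to obtain per-class values is to treat each class in turn as the positive class (one-vs-rest) and read PP, PF, NF and NP from the confusion matrix. The sketch below applies Equations (1) to (4) in this way. The paper does not state how its per-class values were computed, so this is an illustration of the formulas rather than a reproduction of Table 2; the function name is hypothetical.

```python
import numpy as np


def one_vs_rest_metrics(cm, k: int) -> dict:
    """Sensitivity, specificity, precision and accuracy for class k from a confusion matrix.

    cm[i, j] counts samples of true class i predicted as class j. Names follow Eqs. (1)-(4):
    PP true positives, NP true negatives, PF positives rejected (false negatives),
    NF negatives accepted (false positives).
    """
    cm = np.asarray(cm, dtype=np.float64)
    total = cm.sum()
    PP = cm[k, k]
    PF = cm[k, :].sum() - PP  # true class k predicted as another class
    NF = cm[:, k].sum() - PP  # another class predicted as class k
    NP = total - PP - PF - NF
    return {
        "sensitivity": PP / (PP + PF),
        "specificity": NP / (NP + NF),
        "precision": PP / (PP + NF),
        "accuracy": (PP + NP) / total,
    }
```

For the two-class matrix [[8, 2], [1, 9]] with class 0 as positive, PP = 8, PF = 2, NF = 1 and NP = 9, giving a sensitivity of 0.8, a specificity of 0.9, a precision of 8/9 and an accuracy of 0.85.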

The new HCI focus known as PUIs aims to make the interaction between people and computers more like people's interaction with one another and with the environment. Accordingly, we concentrate on PUI and on PUI-motivated projects in this emerging field: computer-vision techniques that give the system visual awareness of the individual user.

Figure 6: Assorted Patterns on Specificity, Sensitivity, Precision and Accuracy.

4 CONCLUSION AND FUTURE DIRECTION

Although face recognition is less accurate as a biometric than iris or fingerprint recognition, it is widely adopted because of its non-invasive, contactless operation. It has also recently become popular as a method for commercial identification and promotion.

Integrating Perceptual User Interfaces and their associated dimensions with meta-heuristics will give biometric analytics higher precision and efficiency in several respects. Our experiment has provided the best results so far, and accuracy could be improved further by training networks on databases of real and fake smiles. In future work, we plan to present an effective approach for detecting smiles in the wild with deep learning. Unlike previous work, which extracted hand-crafted features from face images and then trained a classifier to recognize smiles in a two-step approach, deep learning can integrate feature learning and classification into a single model.

REFERENCES

El Haddad, K., Cakmak, H., Dupont, S., & Dutoit, T. (2016). Laughter and smile processing for human-computer interactions. Just Talking: Casual Talk among Humans and Machines, Portoroz, Slovenia, 23-28.

Vyshagh, A., & Vishnu, K. S. Study on different approaches for head movement deduction.

Phung, H., Hoang, P. T., Nguyen, C. T., Nguyen, T. D., Jung, H., Kim, U., & Choi, H. R. (2017, September). Interactive haptic display based on soft actuator and soft sensor. In 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (pp. 886-891). IEEE.

Au, P. K. (2020). An application of FEATS scoring system in Draw-A-Person-in-the-Rain (DAPR): Distinguishing depression, anxiety, and stress by projective drawing (Doctoral dissertation, Hong Kong Shue Yan University, Hong Kong).

Song, B., & Zhang, L. (2018, July). Research on interactive perceptual wearable equipment based on conditional random field mining algorithm. In 2018 International Conference on Information Systems and Computer Aided Education (ICISCAE) (pp. 164-168). IEEE.

Rizzo, A., et al. (2016). Detection and computational analysis of psychological signals using a virtual human interviewing agent. Journal of Pain Management, 9(3), 311-321.

Najm, H., Ansaf, H., & Hassen, O. A. (2019). An effective implementation of face recognition using deep convolutional network. Journal of Southwest Jiaotong University, 54(5).

El Haddad, K., Cakmak, H., Doumit, M., Pironkov, G., & Ayvaz, U. Social communicative events in human computer interactions.

De Oliveira, C. C. (2018). Experience programming: An exploration of hybrid tangible-virtual block-based programming interaction.

Oday A. Hassen (2017). Face smile and related dimension analysis using deep learning. International Journal of Enterprise Computing and Business Systems (IJECBS), 7(2), 1-13.

Taskirar, M., Killioglu, M., Kahraman, N., & Erdem, C. E. (2019, July). Face recognition using dynamic features extracted from smile videos. In 2019 IEEE International Symposium on INnovations in Intelligent SysTems and Applications (INISTA) (pp. 1-6). IEEE.

Ryu, H. J., Mitchell, M., & Adam, H. (2017). Improving smiling detection with race and gender diversity. arXiv preprint arXiv:1712.00193.

Ugail, H., & Aldahoud, A. A. A. (2019). The biometric characteristics of a smile. In Computational Techniques for Human Smile Analysis (pp. 47-56). Springer, Cham.

Ansaf, H., Najm, H., Atiyah, J. M., & Hassen, O. A. (2019). Improved approach for identification of real and fake smile using chaos theory and principal component analysis. Journal of Southwest Jiaotong University, 54(5).

Hassen, O. A., Abu, N. A., & Abidin, Z. Z. (2019, September). Human identification system: A review. International Journal of Computing and Business Research (IJCBR), 9(3), 1-26.

Hassen, O. A., & Abu, N. A. (2017). HAAR: An effectual approach for evaluation and predictions of face smile detection. International Journal of Computing and Business Research (IJCBR), 7(2), 1-8.

Najm, H., Hoomod, H. K., & Hassan, R. (2020). Intelligent Internet of Everything (IOE) data collection for health care monitor system. International Journal of Advanced Science and Technology, 29(4).

Najm, H., Hoomod, H. K., & Hassan, R. (2020). A proposed hybrid cryptography algorithm based on GOST and Salsa20. Periodicals of Engineering and Natural Sciences, 8(3).

Mahdi, M. S., & Hassan, N. F. (2017). A proposed lossy image compression based on multiplication table. Kurdistan Journal of Applied Research, 2(3), 98-102.

Azez, H. H., Ansaf, H. S. H., & Abdul-Hassan, H. A. (2018). Secured energy aware projected 5G network architecture for cumulative performance in advance wireless technologies. International Journal of Engineering & Technology, 7(4.17), 53-56.

Brasov, O. F. Proceeding of the IVth Balkan Congress of History of Medicine. Bulletin of the Transilvania University of Brasov, 2009, 6.

Khalaf, M., et al. (2020). Schema matching using word-level clustering for integrating universities' courses. In 2020 2nd Al-Noor International Conference for Science and Technology (NICST). IEEE.

Najm, H., Hoomod, H., & Hassan, R. (2021). A new WoT cryptography algorithm based on GOST and novel 5D chaotic system, 184-199.