Can I Just Pass by? Testing Design Principles for Industrial Transport Robots

Marijke Bergman, Sandra Bedaf, Goscha van Heel and Janienke Sturm

School of HRM and Psychology, Fontys University of Applied Sciences, Eindhoven, The Netherlands

Keywords: Human-Robot Interaction, Transport Robot, AGV, Design Principles, Intent-expressive Behaviour, Legibility.

Abstract: Currently, two types of industrial collaborative robots are emerging: collaborative robot arms and transport

robots. For such robots to cooperate with humans, intuitive interaction is required. They have to display

behaviour that is predictable and legible and elicits positive emotions. In this paper we examine the application

of two general design principles to the design of transport robots: (1) use analogies from nature, and (2) adhere

to social rules. Both are expected to result in better user-experience and understanding of the behaviour and

intentions of a transport robot. The current study tests the effects of using 1a) a curved path and 1b) average

walking speed in combination with deceleration upon nearing the human, and 2a) swerving to the right and

2b) respecting personal space. The principles tested in this study show positive effects for user experience

and legibility. However, predictability is not improved. Options for additional adjustments, such as the use of

communicative lights, are discussed.

1 INTRODUCTION

In industry robots and humans cooperate increasingly

closely. So-called collaborative robots are no longer

working in isolation, separated from their users by

fences or safety screens. The environment in which

collaborative robots operate will be less structured and

it is to be expected that more and more users will be

less experienced than traditional operators and will

have had less formal training to work with these

robots (Freese et al., 2018). In particular mobile

transport robots may encounter humans who are

casually passing by. Consequently, when

collaborative robots are implemented the human-

robot interaction changes as well. Collaborative

robots and humans form a team, as it were. In human

teams there is ample and timely exchange of

information (McNeese et al., 2018). Not all

information is exchanged verbally; there is a fair

amount of non-verbal communication as well. Such

sharing of information is necessary in human-robot

teams as well.

Cooperation and collaboration between robots

and humans require natural and intuitive interaction

(Korondi et al., 2015). The fluency of the interaction

can be improved if humans can predict or anticipate

the actions of the robot (Hoffman & Breazeal, 2007;

2010). Predictable robot motion, motion that is

expected, supposedly helps humans to trust and

understand the robot (Dragan et al., 2013).

Furthermore, to obtain a fluent and intuitive

interaction, legible and intent-expressive behaviour is

required, helping humans to understand the robot’s

intentions (Dragan et al., 2013; Lichtenthäler &

Kirsch, 2016).

Two types of robots are becoming common co-

workers in factories: 1) collaborative robotic arms

used for pick-and-place tasks or that may help

humans by handing over objects or mounting parts,

and 2) transport robots and autonomous guided

vehicles (AGVs) that fetch and deliver parts and

products. Both types may encounter challenges where

intuitive, natural interaction and legible behaviour are

concerned. Interaction that is intuitive and natural

will not only improve user experience but will also

reduce cognitive load for the user.

The concept of cognitive load is used in

interaction design and the field of UX (user

experience) as the amount of mental resources needed

to use a product or its interface. Cognitive psychology

and engineering psychology use similar concepts

such as information load, task load and workload. The

amount of information humans can process is limited

by, amongst others, the capacity of working memory,

the complexity of the information, and the amount


Bergman, M., Bedaf, S., van Heel, G. and Sturm, J.

Can I Just Pass by? Testing Design Principles for Industrial Transport Robots.

DOI: 10.5220/0010144301780187

In Proceedings of the 4th International Conference on Computer-Human Interaction Research and Applications (CHIRA 2020), pages 178-187

ISBN: 978-989-758-480-0

Copyright © 2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

and diversity of attention the interaction and other

events in the environment demand (see, e.g., Wickens

et al., 2015). In cases where more information has to

be processed than the available processing capacity

accommodates, humans will miss information, get

stressed and experience cognitive failures (Broadbent

et al., 1982; Simpson et al., 2005; Wadsworth et al.,

2003). Moreover, limiting cognitive load in human-

robot interaction is interrelated with increasing trust

in the robot (Ahmad et al., 2019; Novitsky et al.,

2018) and acceptance (Palinko & Sciutti, 2014).

In line with this, research shows that the more

predictable the motions of a robot are, the better

human task performance (Koppenborg et al., 2017)

and the higher experienced comfort or safety will be

(Butler & Agah, 2001; Tan et al., 2009). The use of

well thought out design principles could thus be

beneficial for interaction and teamwork (Petruck et

al., 2016). Many principles may be discerned;

however, for this study we will limit ourselves to two

general principles that are applicable in many

contexts and for most types of robots.

One principle is to make use of metaphors and

analogies from the natural world, i.e. from nature.

We interpret the looks and actions of objects and

creatures we do not know within a frame of reference

based on things and situations that are familiar to us.

We create mental models using analogies and

metaphors of similar objects and situations from the,

mostly natural, world around us. Employing natural

cues utilizes existing, well-calibrated mental models

and improves the quality and efficiency of the

interaction (Goodrich & Olsen, 2003). Furthermore,

we are subject to animism and tend to project

characteristics of lifeforms onto non-living objects

(Korondi et al., 2015). In designing the behaviour of,

mostly social, robots this is used by modelling it after

human behaviour and interactions between humans

(Kittmann et al., 2015; Takayama et al., 2011). Such

modelling is claimed to help interpret, understand and

predict the motion behaviour of robots (Goodrich &

Olsen, 2003; Lichtenthäler & Kirsch, 2016). For

instance: “The industrial robot is like an extra arm to

work on the product”.

Using lifelike appearances or behaviour does not

necessarily mean that a robot should specifically look

and behave as a human (de Graaf et al., 2015). In

agreement with Kruse et al. (2013), we define

naturalness as the similarity of (low level) behaviour

between robots and living creatures. Bergman et al.

(2019) suggest that, in addition to or instead of

modelling after humans, emulating animalistic

behaviour and using animal metaphors can be used to

make the interaction with collaborative robots more

intuitive and to support building useful mental

models. For instance: “The transport robot is like a

dog fetching things”. Similar claims are made by

others (Koay et al., 2013; Philips et al., 2012; Sharp

et al., 2019). Humans often have an intuitive

understanding of what an animal is communicating

and how to interpret their signalling behaviour. Thus,

mimicking or emulating relevant aspects of such

behaviour may serve well to improve the legibility of

robot behaviour (Lichtenthäler & Kirsch, 2016).

However, care should be taken to assure that such

analogies and metaphors are suitable in the context in

which they are used. Also, the looks and behaviour

should be consistent with the actual capabilities of the

robot (Rose et al., 2010), thus providing relevant cues

and interaction affordances (Hoffman & Ju, 2014).

Another principle is to adhere to social rules.

This principle partly overlaps with using analogies

from the natural world. Behaviour displayed by

humans as well as some animals conforms to social

rules. Various studies in human-robot interaction

show that similar social rules displayed by the robot

result in positive user experience and more intuitive

interaction. Consequently, the robot is seen as

sociable, where sociability can be defined as adhering

to (high-level) cultural conventions (Kruse et al.,

2013).

Social skills are considered vital for robots that

function as companions or assistants (Ogden &

Dautenhahn, 2000), but smoothen interaction with

other types of robots as well. Among such skills are

not interrupting humans unnecessarily, moving out of

the way and slowing down when getting close,

not approaching a human from behind, and

showing awareness or attention (Dautenhahn, 2007).

For instance, respecting someone’s personal space

makes a user feel safer and more comfortable around

a robot (Bortot et al., 2012; Rios-Martinez et al.,

2015; Tan et al., 2009). Being polite through

approaching and turning toward a user helps to

initiate interaction (Kato et al., 2015).

Acknowledging a user by a social gesture like

nodding, increases the social acceptance of an

industrial, non-humanoid, robot (Elprama et al.,

2016).

In this study we focus on applying these two

interrelated design principles, as to how they help

users to understand the behaviour of and interact

intuitively with transport robots. The expectation is

that using design principles that a) use analogies from

nature and b) adhere to social rules will result in a

more positive experience and in a better

understanding of the behaviour and intentions of a

transport robot. That is, the application of such


principles will result in positive emotions or affect as

opposed to negative emotions or affect, and it will

result in higher legibility and predictability of the

behaviour. We aim at a parsimonious approach,

looking for maximal effects of minimal adjustments

in existing collaborative transport robots. The

movements and behaviour in robots currently

available, are limited mainly by technical constraints

and safety guidelines. Redesigning the behaviour of

such robots is considered challenging (Dautenhahn,

2007; Liu et al., 2019). In addition to being

parsimonious, adjustments should contribute to

reducing the cognitive load put on the user.

2 DESIGN PRINCIPLES FOR

TRANSPORT ROBOTS

Assuming that such general principles as mentioned

above may improve user experience, it is useful to

determine how these principles can be made more

specific for transport robots in an industrial work

environment. Here AGVs and transport robots are no

longer confined to warehouses where they work in

isolation. Autonomous transport robots now emerge

in settings where they, for instance, bring and fetch

parts for assembly workers. They thus move around

in factory halls together with humans. So, the

question arises what natural behaviour would be

suitable to use. Which social rules are important for

an industrial transport robot?

2.1 Analogies from Nature

Implementing natural motion in a transport robot

aims at increasing its acceptability through making it

behave more like a human or an animal. To emulate

natural behaviour and to make a transport robot more

lifelike some animation techniques may be useful.

For instance, in nature living creatures usually follow

arched trajectories, in contrast to mechanical objects

that more often follow straight paths. Thus, making a

transport robot move through a curved path can be

used to make it more predictable (Kruse et al., 2013;

Olivera & Simmons, 2002) or to deduce its intentions

more easily (Mavrogiannis & Knepper, 2019).

A second animation technique, slow in and slow

out, can suggest the natural acceleration and

deceleration of living creatures. Also, it is known that

creatures or objects approaching at high speed elicit

fear and often result in flight reactions (Stankowich

& Blumstein, 2005). In line with this, Kirby et al.

(2009) suggest limiting the velocity of transport

robots to human walking speed, between 1 and 2 m/s,

so as to increase perceived safety. Butler and Agah

(2001), and Pacchierotti et al. (2005) also showed that

the relative speed of a mobile robot is an important

factor in user experience. Additionally, Kruse et al.

(2013) showed that reducing velocity when

approaching a person improves user experience with

mobile robots. Existing mobile robots mostly

conform to walking speed because of safety

regulations.
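The combination of a walking-speed cap with deceleration near a person can be sketched as a simple speed profile. The snippet below is an illustration only, not the controller of any robot discussed here. The smoothstep easing, the function names and the 4 m slow-down distance are assumptions made for this sketch; the 1.4 m/s cap lies within the walking-speed range cited above, and the 1.25 m stopping distance matches the one reported for condition 1 in Section 4.3.

```python
# Hypothetical speed profile: cruise at walking speed, ease out near a human.
WALKING_SPEED = 1.4    # m/s, cap within the cited walking-speed range
SLOWDOWN_START = 4.0   # m, assumed distance at which deceleration begins
STOP_DISTANCE = 1.25   # m, distance at which the robot is at rest


def ease(u: float) -> float:
    """Smoothstep easing: 0 at u = 0, 1 at u = 1, zero slope at both ends."""
    u = min(max(u, 0.0), 1.0)
    return u * u * (3.0 - 2.0 * u)


def target_speed(distance_to_human: float) -> float:
    """Return the commanded speed (m/s) for a given distance to the human (m)."""
    if distance_to_human >= SLOWDOWN_START:
        return WALKING_SPEED
    if distance_to_human <= STOP_DISTANCE:
        return 0.0
    # Fraction of the deceleration zone that still lies ahead of the robot.
    u = (distance_to_human - STOP_DISTANCE) / (SLOWDOWN_START - STOP_DISTANCE)
    return WALKING_SPEED * ease(u)
```

Because the easing curve has zero slope at both ends, the speed changes gently when deceleration starts and again just before the robot comes to rest, which is the effect the slow in and slow out technique aims for.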

In addition, it may be helpful to make internal

robot states, like being on standby or in error mode,

visible in an intuitive manner. This may be achieved

by mimicking being at rest or sleeping, or being

confused, and draws on a familiar frame of reference.

Animated lights (Baraka et al., 2016) or a pulsing

light mapped to the rhythm of a human heartbeat

(Wessolek in Harrison et al., 2012), for example, may

be used to communicate state or intentions. In this

case light is used to

It is regularly suggested that adding a face or a

snout to a robot will help to generate a focal point for

interaction, referring to objects or locations and

helping to infer the intentions of a robot. However,

adding a head or snout and gazing behaviour to a

transport robot would require quite extensive

adjustments and hardly be parsimonious (Admoni &

Scassellati, 2017). Also gazing behaviour may

increase the cognitive load if it distracts the user from

the task at hand.

In the current research a) a curved path or arc, and

b) average walking speed in combination with slow in

and slow out will be used deliberately to make the

communicative behaviour of the robot more intuitive

and to improve human-robot interaction. Some

existing AGVs and transport robots make use of a curved

path to avoid obstacles or average walking speed in

combination with slow in and slow out for safety

reasons. However, these movements are often

unintentional, i.e. not the result of consistently

implemented natural motions derived from analogies

from nature.

2.2 Social Rules

According to Kruse et al. (2013) applying social rules

deals with modelling and respecting cultural norms.

This helps to prevent discomfort and to improve the

interactions. Typical rules in the context of mobile

robots are that the robot should keep an adequate

distance so as to respect personal space, should move to

its right when it approaches from the front or should

slow down when encountering humans. Currently,

many transport robots approach humans as obstacles,


moving in a straight line towards the obstacle before

stopping or going around it at a short distance.

In general, humans prefer to stay out of each

other’s personal or intimate space, when close contact

is not essential. Several studies show that humans

feel more comfortable with mobile robots that respect

personal space by remaining at a distance of 1.22 m

up to 2.44 m (Khambhaita & Alami, 2020; Kirby,

2010; Kruse et al., 2013; Rios-Martinez et al., 2015;

Torta et al., 2013; Walters et al., 2009). Shorter

distances may sometimes be acceptable though. This

may depend on the specific context and spatial layout

or cultural and personal differences (Kirby, 2010),

and on the size of the robot (Butler & Agah, 2001).

Yet overall, humans prefer a robot to stay out of their

personal and intimate space when they pass each

other (Pacchierotti et al., 2006).

Moving to one’s right when walking

towards another person is a common social rule in

many countries. When a mobile social robot is

approaching a person in a hallway it is also preferred

that the robot moves to its right side of the hallway

(Kirby et al., 2009; Pacchierotti et al., 2005;

Rios-Martinez et al., 2015). In contrast, Neggers et al. (2018)

claim that there is no difference between left or right

passage. Some studies indicate that this

evasive movement should start in time (Fernandez et

al., 2018; Pacchierotti et al., 2006) or at a distance of

6 meters (Pacchierotti et al., 2005). The evidence on the

optimal lateral distance between human and robot is

inconclusive. Some studies state that the robot should

move as far to its right as the layout of the hallway

allows (Pacchierotti et al., 2005). However, this

lateral distance may also be influenced by many

different factors such as the form, size and speed of

the robot (Rios-Martinez et al., 2015).
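To make the passing rules concrete, the following sketch computes a curved swerve to the robot's right that starts at the 6 m onset distance mentioned above and reaches its full lateral offset when the robot is alongside the person. It is a hypothetical illustration, not a planner from any of the cited studies. The cosine easing, the function names and the choice of 1.22 m as target offset (the lower bound of the comfortable range cited above) are assumptions, and only the approach phase is modelled, not the return to the original path.

```python
import math

ONSET_DISTANCE = 6.0   # m, onset of the evasive movement (Pacchierotti et al., 2005)
MAX_LATERAL = 1.22     # m, assumed target offset: lower bound of the cited range


def lateral_offset(distance_ahead: float) -> float:
    """Offset to the robot's right (m) for a longitudinal distance to the human (m)."""
    if distance_ahead >= ONSET_DISTANCE:
        return 0.0  # still on the straight path
    progress = 1.0 - max(distance_ahead, 0.0) / ONSET_DISTANCE
    # Cosine easing gives an arched path without abrupt changes in heading.
    return MAX_LATERAL * 0.5 * (1.0 - math.cos(math.pi * progress))


def clearance(distance_ahead: float) -> float:
    """Straight-line distance (m) between robot and human at this point of the path."""
    return math.hypot(distance_ahead, lateral_offset(distance_ahead))
```

With these assumed values the clearance never drops below the target offset during the approach, so the swerve starts early and keeps the robot outside the assumed personal-space boundary.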

It is to be expected that the aforementioned social

rules will apply to social robots and transport robots

or AGVs alike. The current study will include a)

swerving to the robot’s right, and b) respecting

personal space by keeping an adequate distance from

humans.

2.3 Current Study

The current study explores a) the effect of the

movements of a transport robot on the experienced

emotions or affect by humans, and b) the effect of the

movements of the robot on its legibility and

predictability. We examine how using the design

principles, as explained above, influences the user

experience, as well as the legibility and predictability

of the movement behaviour of the robot. We follow

the definitions given by Dragan et al. (2013) and

Lichtenthäler and Kirsch (2016), where legible

behaviour is behaviour that ensures the intentions of

the robot can be understood, and predictable motion

is motion that is expected and can be foretold, helping

humans to understand the robot’s intentions (Dragan

et al., 2013). It is to be expected that the application

of the principles used here will result in positive

emotions or affect, as opposed to negative emotions

or affect, and it will result in higher legibility and

predictability of the behaviour.

The user tests consist of two experimental

conditions where a mock-up transport robot, inspired

by the MiR100, is used. The transport robot

approaches a human from the front before passing, in

a constrained area like a factory hall: 1) the robot

moves along a straight path and, using the animation

technique slow in and slow out, stops in front of the

participant, and 2) the robot uses a curved path, based

on the animation technique arched trajectories, to

move around the participant using social rules such as

swerving to its right and respecting personal space.

Both conditions will use an average walking speed.

All dependent variables, affect, legibility and

predictability, will be measured by asking

participants to rate their experiences on a 5-point

rating scale.

3 METHOD

3.1 Participants

The individual user tests with two conditions were

conducted at Fontys University of Applied Sciences

in Eindhoven, the Netherlands. A total of 30 adults

(13 male and 17 female) participated in the test.

Among them were members of the

general public, as well as students and lecturers of the

Fontys School of HRM and Psychology. Most

participants had little or no experience with transport

robots or AGVs. All participants were aged 18+.

Further background information was not registered

for privacy reasons. Participants were selected based

on their availability at the test location and randomly

assigned to one of the two conditions. This resulted in

8 male and 7 female participants for condition 1, and

5 male and 10 female participants for condition 2.

3.2 Measures

The questionnaire consisted of a total of 15 items that

were rated on a 5-point answering scale (1 = totally

disagree, through 5 = totally agree) as a subjective

measure of the user experience. The first part of this


questionnaire was loosely based on the item scales

perceived safety and likeability from the Godspeed

questionnaire (Bartneck et al., 2009), translated from

English into Dutch. These items were used to measure

positive and negative affect. A statement “I felt …”

was used, followed by one of 13 adjectives, for

example safe, agitated, relaxed, anxious, pleasant or

unpleasant. All adjectives were placed in random

order. Additionally, two statements to assess the

legibility and predictability of the robot behaviour

were included (Lichtenthäler & Kirsch, 2016).

Furthermore, a short semi-structured interview

was conducted afterwards to gather additional

information on how participants experienced the

specific movements of the transport robot, as well as

its speed. These interviews will help to understand

how and why the design principles may work for the

participants.

3.3 Procedure

The user tests were conducted in a public space at the

university. A radio-controlled car was given a casing

inspired by the looks of the MiR100 (Mobile

Industrial Robots A/S; see Figure 1). The MiR100 is

an autonomous transport robot that measures 890 mm

x 580 mm x 352 mm. Its maximum speed is 1.5 m/s,

which is comparable to an average walking speed. A

Wizard of Oz method was applied in this study, as the

test leader operated the mock-robot without the

participants knowing. All user tests were filmed with

permission of the participants. After a short

introduction and signing of the informed consent,

participants were assigned to one of the two

conditions.

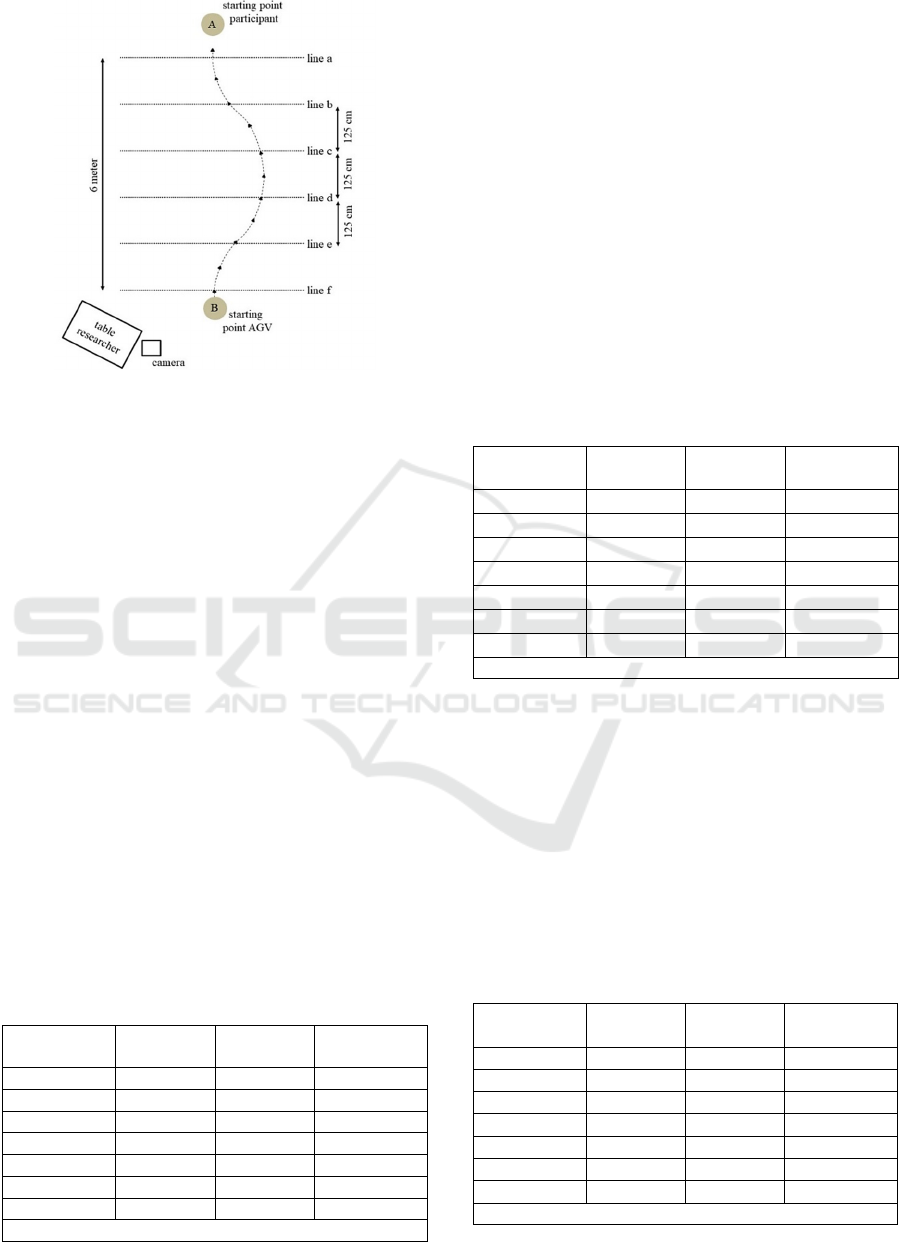

In condition 1 the robot started to move once the

participants passed line a, at an average walking

speed of approximately 1.1 m/s to 1.4 m/s, following

a straight path from point B towards point A (see

Figure 2). The robot started to slow down after

passing line e and when the participant reached line c

the robot stopped at line d.

In condition 2 the robot also started to move at

average walking speed in a straight path from point B

towards point A once the participants passed line a

(see Figure 3). After passing line f the robot moved to

the right using a curved path. The distance between

the participants and the robot, between line c and line

d, was approximately 30 cm. After passing line c the

robot returned to its original path, again through a

curved path, and continued to move towards point B

in a straight path.

Figure 1: Scaled radio-controlled car with the casing

inspired by the looks of a MiR100.

For both conditions, points A and B were the same

and marked on the floor using tape, as were lines a

through f (see Figure 2 and Figure 3). Participants

were instructed to stand on point A and to start

walking, at normal speed, towards point B once the

researcher said “go”.

Figure 2: Straight path of the robot in condition 1.

Participants were informed that when they

started to walk, the robot would start to move towards

them. However, no information was given on how the

robot would move. This situation was closely

observed to see if and when the participant would

walk around the robot to reach point B. After

performing the test, participants were asked to fill out

the questionnaire and to participate in the interview.

All interviews were audio recorded.

For the quantitative data from the questionnaire,

statistical tests were performed to determine

differences between the two conditions and to

examine to what extent the scores for affect deviate

from the middle value of the rating scale. The

qualitative data from the interviews were transcribed

and coded following the grounded theory method of

open, axial, and selective coding.


Figure 3: Curved path of the robot in condition 2.

4 RESULTS

4.1 User Experience and Affect

To determine the effect of the movements of the robot

on the experienced emotions or affect, the scores on

the items measuring affect were tested against the

scale middle value, i.e. 3 on a 5-point scale (One

Sample t Test). Twelve of the 13 items measuring

affect were used in the analyses. One item, surprising,

was excluded, since it was deemed ambiguous.

For condition 1, straight path, the mean scores for

items indicating negative affect are, in general,

significantly lower than the scale middle value (see

Table 1). Of the scores for items indicating positive

affect, only the items calm (t(28) = 2.358, p = .033,

M = 3.6, SD = .99) and relaxed (t(28) = 3.761, p =

.002, M = 3.9, SD = .96) are significantly higher

than the middle value. These results indicate that, in

general, the straight path of the robot is not

experienced as distinctly negative by the participants.

Table 1: Mean scores for negative affect in condition 1

tested against the middle value of the 5-point rating scale.

| Affect | Mean | SD | t-value (df = 28) |
|---|---|---|---|
| intimidated | 2.3 | 1.23 | -2.092* |
| suspicious | 3.0 | 1.36 | 0 |
| uneasy | 2.2 | 1.27 | -2.449** |
| tensed | 2.2 | 1.15 | -2.703** |
| unpleasant | 2.0 | 1.00 | -3.873** |
| scared | 1.6 | 0.74 | -7.359** |
| overall | 2.22 | | |

* p-value ≤ .10; ** p-value ≤ .05

Moreover, the scores are rather neutral for this

condition.
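The test against the scale middle value can be recomputed from the summary statistics in Table 1. The sketch below is a hedged illustration: the function name is hypothetical, and the group size of 15 is an assumption based on the 15 participants per condition reported in Section 3.1. Under that assumption the function returns n − 1 = 14 degrees of freedom, which differs from the df = 28 printed in the table, and values recomputed from rounded means and standard deviations may deviate slightly from the reported ones.

```python
import math


def one_sample_t(mean: float, sd: float, n: int, mu0: float = 3.0):
    """Return (t, df) for a one-sample t test computed from summary statistics."""
    standard_error = sd / math.sqrt(n)
    return (mean - mu0) / standard_error, n - 1


# Item "unpleasant" in condition 1: M = 2.0, SD = 1.00, assumed n = 15.
t, df = one_sample_t(mean=2.0, sd=1.00, n=15)
print(round(t, 3), df)  # -3.873 14
```

A negative t-value means that the mean rating lies below the middle value of 3, which for a negative-affect item indicates that the feeling was largely absent.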

For condition 2, curved path, the scores for

negative affect are, similar to condition 1, lower than

the middle value of the 5-point answering scale (see

Table 2), implying that the robot using a curved path

is not experienced as negative either. Though the

scores for negative affect in condition 2 seem to be

even lower than in condition 1, the difference

between the two conditions is not significant (using

an Independent Samples t Test). However, when

comparing individual items, it became apparent that

the curved path used in condition 2 was experienced

as less intimidating by the participants (M = 1.6, SD

= .91), than the straight path of the robot in condition

1 (M = 2.3, SD = 1.23), t(28) = 1.852, p = .075.
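The comparison between the two conditions can likewise be sketched from the reported means and standard deviations. This is an illustration under stated assumptions, not the authors' analysis script: the function name is hypothetical, equal variances are pooled, and 15 participants per condition are assumed as in Section 3.1. Because the inputs are rounded, the result is close to, but not identical with, the reported t-value for the item intimidated.

```python
import math


def independent_t(m1: float, sd1: float, n1: int,
                  m2: float, sd2: float, n2: int):
    """Return (t, df) for a pooled-variance independent-samples t test."""
    df = n1 + n2 - 2
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return (m1 - m2) / standard_error, df


# Item "intimidated": condition 1 (straight path) versus condition 2 (curved path).
t, df = independent_t(2.3, 1.23, 15, 1.6, 0.91, 15)
print(round(t, 2), df)
```

With two groups of 15 the degrees of freedom are 28, in line with the t(28) reported for this comparison.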

Table 2: Mean scores for negative affect in condition 2

tested against the middle value of the 5-point rating scale.

| Affect | Mean | SD | t-value (df = 28) |
|---|---|---|---|
| intimidated | 1.6 | 0.91 | -5.957** |
| suspicious | 2.9 | 1.25 | -0.414 |
| uneasy | 2.2 | 1.08 | -2.863** |
| tensed | 2.1 | 1.10 | -3.287** |
| unpleasant | 1.9 | 0.96 | -4.298** |
| scared | 1.6 | 1.11 | -4.641** |
| overall | 2.06 | | |

** p-value ≤ .05

The scores for positive affect in condition 2 were,

in general, above the middle value of the 5-point

answering scale, though not all significantly so (see

Table 3). This implies that the robot using a curved

path is experienced as fairly positive. Overall, the

scores for positive affect do not show significant

differences between condition 1 (M = 3.4, SD =

0.18) and condition 2 (M = 3.6, SD = 1.09), using an

Independent Samples t Test. It seems that neither a

curved nor a straight path elicits strong emotions.

Table 3: Mean scores for positive affect in condition 2

tested against the middle value of the 5-point rating scale.

| Affect | Mean | SD | t-value (df = 28) |
|---|---|---|---|
| safe | 3.8 | 1.42 | 2.175** |
| comfortable | 3.4 | 1.24 | 1.247 |
| at ease | 3.5 | 1.19 | 1.522 |
| tranquil | 3.8 | 1.08 | 2.863** |
| relaxed | 3.3 | 1.05 | 1.234 |
| calm | 3.9 | 1.19 | 2.827** |
| overall | 3.6 | | |

** p-value ≤ .05


4.2 Legibility and Predictability

To determine the effect of the movement of the robot

on its legibility and predictability, the scores on these

items were compared for the two conditions, again

using an Independent Samples t Test. The scores for

these items indicate that participants rated the curved

path of the robot in condition 2 (M = 4.1, SD = 1.35)

as significantly more legible than the straight path

used in condition 1 (M = 3.1, SD = 0.99), t(28) =

-2.783, p = .011. The scores regarding the

predictability of the robot of condition 1 (M = 2.4, SD

= 0.9) and condition 2 (M = 2.7, SD = 1.34) showed

no significant difference. Also, both conditions score

below the middle value of 3 on the 5-point answering

scale, suggesting both the straight and the curved path

were not seen as very predictable.

In addition to the quantitative analyses, the results

from the semi-structured interviews clearly show

overlap with the results from the questionnaires. For

both conditions, participants stated they failed to

predict the robot’s next move. They explained that

they, unsuccessfully, searched for contact with the

robot, hoping to be able to predict its next move.

Several participants compared this need for contact to

the situation where two people, who walk in the

opposite direction towards each other, can

communicate their path and their next move using

non-verbal behaviour, such as eye contact in

combination with body language. In order to improve

the legibility, participants suggested adding turn

signals and brake lights to the robot, or projecting its

path on the floor.

4.3 Qualitative Data

Where the design principles are concerned, the

qualitative analyses give some interesting additional

insights. First, the use of analogies from nature,

through moving along an arched trajectory, resulted in

fewer negative experiences being reported

than by the participants who

experienced the straight path condition. Participants

of condition 2 mentioned that using the curved path

resulted in the robot displaying more natural

movement or behaviour. Such an experience was not

mentioned by the participants of condition 1.

The participants of condition 1 appreciated the

deceleration of the robot, which was based on the

animation technique slow in and slow out, as it gave them

the time to anticipate the actions of the robot.

However, the majority of them did not understand

why the robot slowed down and stopped in front of

them. Multiple participants also wished that the robot

would have moved to the side instead of moving

straight towards them.

The majority of the participants thought that limiting

the velocity of the robot to natural walking speed

was appropriate. Some participants, however,

preferred the robot to move slower.

As for adhering to social rules, in condition 1 the

vast majority of the participants thought the stopping

(social) distance of 125 cm was fine. In condition 2

the robot passed the participant at a distance of 30 cm.

None of the participants of condition 2 experienced

this as an anxiety-inducing or threatening situation.

5 DISCUSSION

Overall, the use of the two design principles tested

here shows positive effects for the user experience.

Analogies from nature, operationalized through the

curved path and the deceleration of the robot, show

positive effects on the user experience, in particular

where affective experiences are concerned. This

coincides with adhering to social rules such as

swerving to the right. Also, these design principles

appear to improve the legibility of the transport robot.

However, as far as predictability is concerned, these

principles do not seem to contribute much. This result

is in contrast with previous research (Kruse et al.,

2013; Olivera & Simmons, 2002). Yet, other earlier

studies indicate that path adaptation may be more

confusing and more uncomfortable than velocity

adaptation (Kruse, 2014). Furthermore, the concepts

legibility and predictability may be confounded

(Lichtenthäler & Kirsch, 2016). In the current

experiment, the participants in condition 1 experience

the adaptation in velocity as positive, as it gives them

time to anticipate the behaviour of the robot. This is

in line with the findings of Kruse.

Though the design principles used did appear to

improve the legibility of the robot, there is still room

for further improvement. In order to improve the

legibility further, it is important to provide useful cues

regarding the internal state of the robot, such as its

intentions. However, care should be taken to avoid

accidentally creating misleading cues (Kruse et al.,

2014). In line with the goal of the project, minimal

adjustments to the robot, while creating a maximum

impact, are preferred.

Add-ons such as light or sound fit these criteria.

As the transport robot operates in noisy industrial

settings, add-ons using sound are not an obvious choice.

Adding light signals, on the other hand, does not

require extensive adjustments to current transport

robots and AGVs. Light signals are rather easy to

CHIRA 2020 - 4th International Conference on Computer-Human Interaction Research and Applications

detect for humans and in addition they attract

attention. Furthermore, they are readily interpreted as

an attempt to communicate (Fernandez et al., 2018).

Participants in the current study indicated that the

legibility or the predictability of the robot could be

improved by adding light signals. They suggested

turn signals or brake lights, similar to those used on

cars, or a projection showing the robot's direction and

purpose. Several studies have already focussed on adding

light interfaces to, for example, cars or drones

(Habibovic et al., 2018; Szafir et al., 2015) or on using

projection to communicate directions (Chadalavada,

2016; Chadalavada et al., 2020). Therefore, adding

communicative light signals to a transport robot is an

interesting option to explore further. However, it

should be taken into account that communicating the

intention of a transport robot is more complex than

adding simple turn signals, since these are difficult

to interpret when detached from the context of cars

(Fernandez et al., 2018).

The user tests were performed in a public

space at the university, which is not a very realistic

working environment for a transport robot. Using a

scaled radio-controlled car with a chassis inspired

by the looks of a MiR100 in combination with the

Wizard of Oz method is a relatively simple way to

test complex robot behaviour with users (Dahlbäck et

al., 1993; Walters et al., 2005). However, the chassis

and scaled size of the mock-up transport robot used

may have influenced the results. The slightly smaller

scale may, for instance, appear friendlier or less

threatening than the actual MiR100. Additionally,

simulating consistent robot behaviour is difficult for

a human operator, even in similar situations (Walters

et al., 2005). Using the Wizard of Oz method may

have led to inconsistencies between the sessions.

Additional experiments should take place in a more

realistic setting, using actual transport robots or

AGVs. Further, it is advisable to explore the possible

confounding of the concepts of legibility and

predictability.

6 CONCLUSION

To make the behaviour of transport robots more

legible and predictable, one needs carefully thought

out design principles. Two general design principles

were examined here: (1) use analogies from nature

and (2) adhere to social rules. Using

natural walking speed, timely deceleration (slow in

and slow out), and a curved path (arched trajectory), in

combination with swerving to the right, shows positive

effects on the user experience and improves the

legibility of the transport robot. Future research may

explore the separate effects of these variables.

In order to further improve the legibility of

transport robots and AGVs, communicative light

signals could be used to convey intentions. However,

more research is needed to determine which signals

are most appropriate to ensure intuitive interaction in

an industrial setting. Applying proven principles will

contribute to intuitive interaction between humans

and collaborative robots, and promote effective

teamwork.

ACKNOWLEDGEMENTS

This research is funded by the Dutch Ministry of

Economic Affairs through the SIA-RAAK program,

project “Close encounters with co-bots”

RAAK.MKB.08.018.

REFERENCES

Admoni, H. & Scassellati, B. (2017). Social eye gaze in

human-robot interaction: a review. Journal of Human-

Robot Interaction, 6(1), 25-63.

Ahmad, M.I., Bernotat, J., Lohan, K., & Eyssel, F. (2019).

Trust and Cognitive Load During Human-Robot

Interaction. arXiv preprint arXiv:1909.05160.

Baraka, K., Paiva, A., & Veloso, M. (2016). Expressive

lights for revealing mobile service robot state. In Robot

2015: Second Iberian Robotics Conference (pp. 107-

119). Springer, Cham.

Bartneck, C., Kulić, D., Croft, E., & Zoghbi, S. (2009).

Measurement instruments for the anthropomorphism,

animacy, likeability, perceived intelligence, and

perceived safety of robots. International journal of

social robotics, 1(1), 71-81.

Bergman, M., de Joode, E., de Geus, M., & Sturm, J.

(2019). Human-cobot Teams: Exploring Design

Principles and Behaviour Models to Facilitate the

Understanding of Non-verbal Communication from

Cobots. In Proceedings of the 3rd International

Conference on Computer-Human Interaction Research

and Applications (CHIRA) (pp. 191-198).

Bortot, D., Ding, H., Antonopolous, A., & Bengler, K.

(2012). Human motion behavior while interacting with

an industrial robot. Work, 41(Supplement 1), 1699-

1707.

Broadbent, D.E., Cooper, P.F., FitzGerald, P., & Parkes,

K.R. (1982). The cognitive failures questionnaire

(CFQ) and its correlates. British journal of clinical

psychology, 21(1), 1-16.

Butler, J.T. & Agah, A. (2001). Psychological effects of

behavior patterns of a mobile personal robot.

Autonomous Robots, 10(2), 185-202.

Chadalavada, R. T. (2016). Human robot interaction for

autonomous systems in industrial environments

[Unpublished master's thesis] Chalmers University.

Chadalavada, R.T., Andreasson, H., Schindler, M., Palm,

R., & Lilienthal, A.J. (2020). Bi-directional navigation

intent communication using spatial augmented reality

and eye-tracking glasses for improved safety in human–

robot interaction. Robotics and Computer-Integrated

Manufacturing, 61, 101830.

Dahlbäck, N., Jönsson, A., & Ahrenberg, L. (1993,

February). Wizard of Oz studies: why and how. In

Proceedings of the 1st international conference on

Intelligent user interfaces (pp. 193-200).

Dautenhahn, K. (2007). Socially intelligent robots:

dimensions of human–robot interaction. Philosophical

Transactions of the Royal Society B: Biological

Sciences, 362(1480), 679-704.

de Graaf, M.M., Allouch, S.B., & Van Dijk, J.A.G.M.

(2015). What makes robots social?: A user’s

perspective on characteristics for social human-robot

interaction. In International Conference on Social

Robotics (pp. 184-193). Springer, Cham.

Dragan, A.D., Lee, K.C., & Srinivasa, S.S. (2013).

Legibility and predictability of robot motion. In 2013

8th ACM/IEEE International Conference on Human-

Robot Interaction (HRI) (pp. 301-308). IEEE.

Elprama, B.V.S.A., El Makrini, I., & Jacobs, A. (2016).

Acceptance of collaborative robots by factory workers:

a pilot study on the importance of social cues of

anthropomorphic robots. In International Symposium

on Robot and Human Interactive Communication.

Fernandez, R., John, N., Kirmani, S., Hart, J., Sinapov, J.,

& Stone, P. (2018). Passive demonstrations of light-

based robot signals for improved human

interpretability. In 2018 27th IEEE International

Symposium on Robot and Human Interactive

Communication (RO-MAN) (pp. 234-239). IEEE.

Freese, C., Dekker, R., Kool, L., Dekker, F., & van Est, R.

(2018). Robotisering en automatisering op de

werkvloer – bedrijfskeuzes bij technologische

innovaties. Den Haag: Rathenau Instituut.

Goodrich, M.A. & Olsen, D.R. (2003). Seven principles of

efficient human robot interaction. In SMC'03

Conference Proceedings. 2003 IEEE International

Conference on Systems, Man and Cybernetics.

Conference Theme-System Security and Assurance

(Cat. No. 03CH37483) (Vol. 4, pp. 3942-3948). IEEE.

Habibovic, A., Lundgren, V.M., Andersson, J., Klingegård,

M., Lagström, T., Sirkka, A., Fagerlönn, J., Edgren, C.,

Fredriksson, R., Krupenia, S., & Saluäär, D. (2018).

Communicating intent of automated vehicles to

pedestrians. Frontiers in psychology, 9, 1336.

Harrison, C., Horstman, J., Hsieh, G., & Hudson, S. (2012).

Unlocking the expressivity of point lights. In

Proceedings of the SIGCHI Conference on Human

Factors in Computing Systems (pp. 1683-1692).

Hoffman, G. & Breazeal, C. (2007). Effects of anticipatory

action on human-robot teamwork efficiency, fluency,

and perception of team. In Proceedings of the

ACM/IEEE international conference on Human-robot

interaction (pp. 1-8). ACM.

Hoffman, G. & Breazeal, C. (2010). Effects of anticipatory

perceptual simulation on practiced human-robot tasks.

Autonomous Robots, 28(4), 403-423.

Hoffman, G. & Ju, W. (2014). Designing robots with

movement in mind. Journal of Human-Robot

Interaction, 3(1), 91-122.

Kato, Y., Kanda, T., & Ishiguro, H. (2015). May I help

you?-Design of human-like polite approaching

behavior. In 2015 10th ACM/IEEE International

Conference on Human-Robot Interaction (HRI) (pp.

35-42). IEEE.

Khambhaita, H. & Alami, R. (2020). Viewing robot

navigation in human environment as a cooperative

activity. In Robotics Research (pp. 285-300). Springer,

Cham.

Kirby, R., Simmons, R., & Forlizzi, J. (2009). Companion:

A constraint-optimizing method for person-acceptable

navigation. In RO-MAN 2009-The 18th IEEE

International Symposium on Robot and Human

Interactive Communication (pp. 607-612). IEEE.

Kirby, R. (2010). Social Robot Navigation [Unpublished

Doctoral dissertation] Carnegie Mellon University.

Kittmann, R., Frolich, T., Schäfer, J., Reiser, U.,

Weisshardt, F., & Haug, A. (2015). Let me Introduce

Myself: I am Care-O-bot 4, a Gentleman Robot. In:

Mensch und Computer 2015 Tagungsband.

Koay, K.L., Lakatos, G., Syrdal, D.S., Gácsi, M., Bereczky,

B., Dautenhahn, K., Miklósi, A., & Walters, M.L.

(2013). Hey! There is someone at your door. A hearing

robot using visual communication signals of hearing

dogs to communicate intent. In 2013 IEEE Symposium

on Artificial Life (ALife) (pp. 90-97). IEEE.

Koppenborg, M., Nickel, P., Naber, B., Lungfiel, A., &

Huelke, M. (2017). Effects of movement speed and

predictability in human–robot collaboration. Human

Factors and Ergonomics in Manufacturing & Service

Industries, 27(4), 197-209.

Korondi, P., Korcsok, B., Kovács, S., & Niitsuma, M.

(2015). Etho-robotics: What kind of behaviour can we

learn from the animals? IFAC-PapersOnLine, 48(19),

244-255.

Kruse, T., Kirsch, A., Khambhaita, H., & Alami, R. (2014).

Evaluating directional cost models in navigation. In

Proceedings of the 2014 ACM/IEEE international

conference on Human-robot interaction (pp. 350-357).

Kruse, T., Pandey, A.K., Alami, R., & Kirsch, A. (2013).

Human-aware robot navigation: A survey. Robotics and

Autonomous Systems, 61(12), 1726-1743.

Lichtenthäler, C. & Kirsch, A. (2016). Legibility of robot

behavior: a literature review. Available from:

https://hal.archives-ouvertes.fr/hal-01306977

Liu, C., Tang, T., Lin, H.C., & Tomizuka, M. (2019).

Designing robot behavior in human-robot interactions.

CRC Press.

Mavrogiannis, C.I. & Knepper, R.A. (2019). Multi-agent

path topology in support of socially competent

navigation planning. The International Journal of

Robotics Research, 38(2-3), 338-356.

McNeese, N.J., Demir, M., Cooke, N.J., & Myers, C.

(2018). Teaming with a synthetic teammate: Insights

into human-autonomy teaming. Human factors, 60(2),

262-273.

Neggers, M.M., Cuijpers, R.H., & Ruijten, P.A. (2018).

Comfortable passing distances for robots. In

International conference on social robotics (pp. 431-

440). Springer, Cham.

Ogden, B. & Dautenhahn, K. (2000). Robotic etiquette:

Structured interaction in humans and robots. In Procs

SIRS 2000, 8th Symp on Intelligent Robotic Systems.

University of Reading.

Olivera, V.M. & Simmons, R. (2002). Implementing

human-acceptable navigational behavior and a fuzzy

controller for an autonomous robot. In Proceedings

WAF: 3rd workshop on physical agents (pp. 113-120).

Pacchierotti, E., Christensen, H.I., & Jensfelt, P. (2005).

Human-robot embodied interaction in hallway settings:

a pilot user study. In ROMAN 2005. IEEE International

Workshop on Robot and Human Interactive

Communication, 2005. (pp. 164-171). IEEE.

Pacchierotti, E., Christensen, H.I., & Jensfelt, P. (2006).

Evaluation of passing distance for social robots. In

RO-MAN 2006 - The 15th IEEE International Symposium on

Robot and Human Interactive Communication (pp. 315-

320). IEEE.

Palinko, O. & Sciutti, A. (2014). Exploring the estimation

of cognitive load in human robot interaction. In

Workshop HRI: A Bridge between Robotics and

Neuroscience at 9th ACM/IEEE International

Conference on Human-Robot Interaction (pp. 3-6).

Petruck, H., Kuz, S., Mertens, A., & Schlick, C. (2016).

Increasing safety in human-robot collaboration by

using anthropomorphic speed profiles of robot

movements. In Advances in Ergonomics of

Manufacturing: Managing the Enterprise of the Future

(pp. 135-145). Springer, Cham.

Rios-Martinez, J., Spalanzani, A., & Laugier, C. (2015).

From proxemics theory to socially-aware navigation: A

survey. International Journal of Social Robotics, 7(2),

137-153.

Rose, R., Scheutz, M., & Schermerhorn, P. (2010).

Towards a conceptual and methodological framework

for determining robot believability. Interaction Studies,

11(2), 314-335.

Sharp, H., Preece, J., & Rogers, Y. (2019). Interaction

design: Beyond human-computer interaction. John

Wiley & Sons.

Simpson, S.A., Wadsworth, E.J., Moss, S.C., & Smith, A.P.

(2005). Minor injuries, cognitive failures and accidents

at work: incidence and associated features.

Occupational Medicine, 55(2), 99-108.

Stankowich, T. & Blumstein, D.T. (2005). Fear in animals:

a meta-analysis and review of risk assessment.

Proceedings of the Royal Society B: Biological

Sciences, 272(1581), 2627-2634.

Szafir, D., Mutlu, B., & Fong, T. (2015). Communicating

directionality in flying robots. In 2015 10th ACM/IEEE

International Conference on Human-Robot Interaction

(HRI) (pp. 19-26). IEEE.

Takayama, L., Dooley, D., & Ju, W. (2011). Expressing

thought: improving robot readability with animation

principles. In 2011 6th ACM/IEEE International

Conference on Human-Robot Interaction (HRI) (pp.

69-76). IEEE.

Tan, J.T.C., Duan, F., Zhang, Y., Watanabe, K., Kato, R.,

& Arai, T. (2009). Human-robot collaboration in

cellular manufacturing: Design and development. In

2009 IEEE/RSJ International Conference on Intelligent

Robots and Systems (pp. 29-34). IEEE.

Torta, E., Cuijpers, R.H., & Juola, J.F. (2013). Design of a

parametric model of personal space for robotic social

navigation. International Journal of Social Robotics,

5(3), 357-365.

Wadsworth, E.J.K., Simpson, S.A., Moss, S.C., & Smith,

A.P. (2003). The Bristol Stress and Health Study:

accidents, minor injuries and cognitive failures at work.

Occupational Medicine, 53(6), 392-397.

Walters, M.L., Woods, S., Koay, K.L., & Dautenhahn, K.

(2005). Practical and methodological challenges in

designing and conducting human-robot interaction

studies. In Procs of the AISB 05 Symposium on Robot

Companions. AISB.

Walters, M.L., Dautenhahn, K., Te Boekhorst, R., Koay,

K.L., Syrdal, D.S., & Nehaniv, C.L. (2009). An

empirical framework for human-robot proxemics.

Procs of new frontiers in human-robot interaction.

Wickens, C.D., Hollands, J.G., Banbury, S., &

Parasuraman, R. (2015). Engineering psychology and

human performance. Psychology Press.
