Design of Syllabic Vibration Pattern for Incoming Notification on a Smartphone

Masaki Omata¹ and Misa Kuramoto²

¹ Graduate Faculty of Interdisciplinary Research, University of Yamanashi, Kofu, Yamanashi, Japan

² Department of Computer Science and Engineering, University of Yamanashi, Kofu, Yamanashi, Japan

Keywords: Vibration Pattern, Incoming Notification, Syllable, Smartphone.

Abstract: We designed vibration patterns that notify a smartphone user of the degree of urgency of an incoming message, the communication tool that delivered it, and the sender’s name while the message is being received. The design assigns segmentations of the vibration to the syllables of words such as “Twitter” or “LINE” and of the sender’s surname. We expect the patterns to be easy to memorize and easy to discriminate because the vibration syllables imitate the syllabic sounds of the words. Therefore, a user who senses and hears the vibrations can discriminate the information without looking at the smartphone screen. The discrimination correctness of the vibration patterns was tested in a usability study with the smartphone in the user’s hand or trouser pocket. For two degrees of urgency, six types of communication tools, and six senders’ names, the average correct answer rate was 78% in the hand and 34% in the pocket.

1 INTRODUCTION

Smartphone users receive many notifications, such as phone calls, messages, news, and application updates, in their daily lives (Gallud et al., 2015, Okeke et al., 2018, Yoon et al., 2014). A survey conducted by Gallud et al. revealed that 69.3% of participants received fewer than 50 notifications a day and 9.7% received more than 100 notifications a day (Gallud et al., 2015). The survey also reported that 44.7% of participants checked their smartphones immediately each time a new notification was received.

Smartphone notifications use sound, vibration, visual cues, or a combination of these modalities. However, visual and sound notifications force a user to look at or listen to an incoming notification, and the sound notification is disabled when a phone is put in silent mode. Additionally, when a smartphone is in a bag or a trouser pocket, a user needs to pull it out to look at the visual notification. Moreover, a visual or sound notification can disturb the user’s concentration while the user is performing a more important task with the smartphone.

In this study, we focus on smartphone vibration notifications because a vibration alert can signal an incoming event without any visual or sound alert, as long as the user touches the phone directly or indirectly through a bag’s handle or harness. Haptic information involves kinematic control and evokes a subjective sense (Uchikawa, 2008). However, current vibration notifications convey only the timing of an event and almost nothing about its details.

We therefore designed vibration patterns that convey information about an incoming message while the message is being received. A pattern comprises three parts: the urgency of the message, the type of message, and the name of the sender. The degree of urgency helps a user decide whether to check the details of the message immediately or later. The type tells the user which communication tool delivered the message, and the sender’s name tells the user who sent it.

Unlike previous patterns based on bit sequences (Yonezawa et al., 2013), Morse code (Ohta et al., 2010), or six-point Braille characters (Al-Qudah et al., 2014), our novel design approach assigns vibration durations and intervals between vibrations to the syllables of the names of communication tools and senders. The approach lets a user perceive a vibration in much the same way as the sound of a name, and it makes it easy to create short patterns. Therefore, users should be able to memorize the patterns easily and discriminate each one.

Omata, M. and Kuramoto, M.

Design of Syllabic Vibration Pattern for Incoming Notification on a Smartphone.

DOI: 10.5220/0010064100270036

In Proceedings of the 4th International Conference on Computer-Human Interaction Research and Applications (CHIRA 2020), pages 27-36

ISBN: 978-989-758-480-0

Copyright © 2020 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

We also evaluated the discrimination correctness and usability of the patterns. This paper describes the design, the evaluation experiments, and the results, and makes the following contributions:

• The average correct answer rate for the vibration pattern of each of the six communication tools is 59%–86%.

• Users can discriminate the patterns not only through direct contact with their hand but also through indirect contact, when the smartphone is in a bag held in the user’s hand.

• Discriminating the vibration pattern of a sender’s name from that of a similar name is difficult: the highest correct answer rate among the names is over 70%, but the lowest is 30%.

• Participants reported that knowing the urgency and the order of the vibration patterns was useful.

2 BACKGROUND

2.1 Related Work

Jayant et al. developed V-Braille, a way to haptically represent Braille characters on a smartphone using the touch screen and vibration (Jayant et al., 2010). The screen is divided into six parts to mimic the six dots of a single Braille cell. Experimental results show that nine users (six deaf-blind, three blind) achieved a 90% accuracy rate, and five of them were able to read a character in less than 10 seconds.

Azenkot et al. developed feedback methods called Wand, ScreenEdge, and Pattern that use vibration on a smartphone to provide turn-by-turn walking instructions to people with visual impairments (Azenkot et al., 2011). Wand vibrates when the top of the phone points roughly in the target direction. ScreenEdge vibrates when the user touches near the screen edge corresponding to the target direction. Pattern vibrates 1 to 4 pulses to indicate a direction: one pulse means “go forward,” two pulses mean “turn right,” three pulses mean “turn back,” and four pulses mean “turn left.”

Ohta et al. proposed a method of text communication by tactile sensation, called Tachifon (Ohta et al., 2010). It conveys text information in the form of Morse or modified Braille signals using vibration, for users with visual and hearing impairments. They assessed the communication efficiency of Tachifon and found a high rate of correct message recognition, over 96%.

Yonezawa et al. proposed Vineteraction, which combines a vibrator and an accelerometer to send information from one smart device to another (Yonezawa et al., 2013). The system encodes each character of a string as a sequence of “0”s, meaning no vibration, and “1”s, meaning vibration. An accuracy evaluation showed that all of the smartphones achieved almost 100% accuracy in information transfer.

Exler et al. hypothesized that the perceptibility of a notification depends on its notification type (ringtone, vibration, and LED) and on the smartphone position (on a table, in a front trouser pocket, and in a backpack) (Exler et al., 2017). The ringtone was the standard sound “Tejat,” lasting about 250 ms. The vibration was a pattern of 300 ms off, 400 ms on, 300 ms off, and again 400 ms on. The LED blinked green for 500 ms and stayed off for 500 ms. The results show that vibration and ringtone are perceived best at all positions, and that users found vibration more pleasant than the ringtone because of habit, lower obtrusiveness, lower disturbance, and lower distraction.

Kokkonis et al. explored how visually impaired people can use vibro-tactile interaction to distinguish colours, objects, or specific areas on a touch screen (Kokkonis et al., 2019). Different vibration patterns were proposed and assigned to each of eight colours. The evaluation tests revealed that the vibration time should be shorter than 300 ms, the idle time should be shorter than 400 ms, and the period of the vibration pattern should be shorter than 600 ms.

Al-Qudah et al. developed a method for presenting six-point Braille characters on mobile devices that feature tactile feedback (Al-Qudah et al., 2014). Each of the eight combinations of raised and lowered points in a three-point column is encoded as a single vibration pattern. The proposed method significantly reduces the average reading time and the average power consumption.

2.2 Motivation

Simple combinations of vibrations and pauses, such as those described above, cannot represent meaningful patterns. Vibration patterns based on ASCII or Morse code can represent meaningful information, but it is difficult for a user to memorize and recognize their meanings because there is no direct relationship between the patterns and the characters or words they express, and the patterns can become very long.

CHIRA 2020 - 4th International Conference on Computer-Human Interaction Research and Applications


We propose a method that assigns vibration segmentations to the syllables of a word. The method creates a direct syllabic relationship between the vibrations and the words, and a pattern made by the method can be shorter than one made with ASCII or Morse code. For example, when “mail” in Japanese is represented in Morse code, the pattern is “– . . . – . – – . – – . – – .” (each dot (.) is a short vibration and each dash (–) is a long vibration). With our method, the word is represented as “– .” (described in detail in the next section), which is much shorter and simpler.
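As a rough check of the length comparison above, the sketch below counts the vibration elements in the two encodings of “mail.” Splitting the quoted Morse sequence into the Wabun (Japanese Morse) codes for メ, ー, and ル is our reading of that sequence; the variable names are illustrative only.

```python
# Wabun (Japanese Morse) codes for the kana of メール ("mail"); concatenated,
# they reproduce the sequence quoted in the text. "." = short, "-" = long.
WABUN_MAIL = {"メ": "-...-", "ー": ".--.-", "ル": "-.--."}

# Syllabic pattern for "mail": one long vibration, then one short vibration.
SYLLABIC_MAIL = "-."

morse_mail = "".join(WABUN_MAIL[kana] for kana in "メール")

print(morse_mail)                           # -...-.--.--.--.
print(len(morse_mail), len(SYLLABIC_MAIL))  # 15 2
```

The count ignores element durations; it only shows that the syllabic pattern needs far fewer vibrations for the same word.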

3 DESIGN OF VIBRATION

PATTERNS

This section describes our proposed design of vibration patterns that notify a smartphone user of the degree of urgency of an incoming message (or telephone call), the communication type (including telephone), and the message sender (or telephone caller). The vibrations of a pattern are segmented and presented in the order of urgency, communication type, and sender because we consider that users who sense and hear the vibrations may want to receive this information in that order. In our design, the interval between consecutive segmentations is 800 ms, the three segmentations together last 5–9 s, and a pattern contains fewer than 18 vibrations. We believe that these numbers ensure that a user has no problem perceiving the information in a notification, because humans can perceive as many as eight vibrotactile stimuli at a single site at a rate of five per second (Lederman, 1991). However, we cannot control the frequency of the vibration because it depends on the specifications of the receiving phone’s vibration motor. Additionally, our current design excludes the simultaneous presentation of multiple vibration notifications.
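The sketch below shows one way the three segmentations could be concatenated and checked against these limits. Each vibration is a (vibration, following pause) pair in milliseconds; the example segmentations are neutral urgency from Table 1, “mail” from Table 2, and the “ToYoTa” example from Section 3.3. Letting the 800-ms gap replace the last pause of each segmentation is our assumption, and the function names are hypothetical.

```python
from typing import List, Tuple

Pulse = Tuple[int, int]  # (vibration_ms, pause_after_ms)
SEGMENT_GAP_MS = 800     # interval between segmentations (Section 3)
MAX_VIBRATIONS = 18      # a pattern contains fewer than 18 vibrations

def compose(segments: List[List[Pulse]]) -> List[Pulse]:
    """Concatenate segmentations in the order urgency, type, sender.

    Assumption: the 800-ms gap replaces the pause after the last vibration
    of every segmentation except the final one.
    """
    pattern: List[Pulse] = []
    for index, segment in enumerate(segments):
        pulses = list(segment)
        if index < len(segments) - 1:
            last_on, _ = pulses[-1]
            pulses[-1] = (last_on, SEGMENT_GAP_MS)
        pattern.extend(pulses)
    return pattern

def total_ms(pattern: List[Pulse]) -> int:
    """Time from the first vibration onset to the end of the last vibration."""
    return sum(on + off for on, off in pattern) - pattern[-1][1]

urgency_neutral = [(80, 100), (80, 100), (80, 100)]  # Table 1: ". . ."
mail = [(500, 100), (200, 100)]                      # Table 2: "– ."
toyota = [(400, 500), (800, 500), (400, 100)]        # "ToYoTa" example

pattern = compose([urgency_neutral, mail, toyota])
assert len(pattern) < MAX_VIBRATIONS
print(len(pattern), total_ms(pattern))  # 8 5440
```

Under these assumptions the example notification has 8 vibrations and lasts about 5.4 s, inside the 5–9 s range stated above.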

3.1 Patterns for Degrees of Urgency

Because Gallud et al. reported that, for 43.9% of participants, whether they responded to a notification after receiving it depended on its urgency (Gallud et al., 2015), we assigned the first segmentation of the pattern to the urgency of the notification.

Because information on the degree of urgency is nonverbal, the degrees are distinguished by the number of vibrations in a pattern. We set the number of degrees of urgency to two: high and neutral. Table 1 shows the number of vibrations for each degree; each dot represents a vibration lasting 80 ms, and the interval between vibrations is 100 ms. In our design, the number of vibrations for high urgency is double that for neutral urgency because the more vibrations a pattern contains, the more urgency a user feels.

Table 1: Vibration pattern for notifying urgency.

Degree of urgency | Pattern of vibrations | Duration of each dot (.) [ms]
Neutral | . . . | 80
High | . . . . . . | 80
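A minimal sketch of Table 1 as (vibration, pause) pairs in milliseconds follows; the names are hypothetical and not taken from the authors’ application.

```python
# Table 1: number of 80-ms vibrations per urgency level, 100 ms apart.
URGENCY_VIBRATIONS = {"neutral": 3, "high": 6}
DOT_MS = 80
INTERVAL_MS = 100

def urgency_pulses(level: str) -> list[tuple[int, int]]:
    """Return (vibration_ms, pause_after_ms) pairs; no pause after the last one."""
    n = URGENCY_VIBRATIONS[level]
    return [(DOT_MS, INTERVAL_MS if i < n - 1 else 0) for i in range(n)]

def duration_ms(pulses: list[tuple[int, int]]) -> int:
    return sum(on + off for on, off in pulses)

for level in ("neutral", "high"):
    print(level, len(urgency_pulses(level)), duration_ms(urgency_pulses(level)))
# neutral 3 440
# high 6 980
```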

3.2 Patterns for Communication Type

After the urgency, the system notifies the user of the communication type. Knowing which type of communication produced the message helps a user decide how to prioritize a response to a message or phone call; for instance, many people give phone calls higher priority than emails.

Our design distinguishes six types of communication tools that are widely used by smartphone users in Japan: telephone, email, the LINE app (LINE, 2020), Facebook, Twitter, and others. The white paper on information and communications in Japan describes them as widely used tools (Ministry of internal affairs and communications, 2016). Table 2 lists the five named tools (plus “Others”) and their vibration patterns. These patterns are made up of syllables, which are sequenced units of speech sounds typically consisting of a vowel and a consonant (Kubozono, 1998). For instance, because “Twitter” is pronounced “Tu-Wi-Tter” in Japanese, its vibration pattern is “. . –,” in which a dot (.) means a short vibration and a dash (–) means a long vibration. However, the syllable pattern of “LINE (La-I-N),” “. . .,” is the same as those of “telephone (De-N-Wa in Japanese)” and “others (So-No-Ta in Japanese).” Therefore, we assigned “– –” to De-N-Wa and “–” to So-No-Ta as easy-to-memorize and distinguishable patterns, although they do not follow the syllables. Note that the vibration durations are adjusted to the durations of the syllables as pronounced in Japanese. The interval between vibrations is 100 ms.

Table 2: Vibration patterns for different tools.

Communication tool | Pattern of vibrations | Duration of each dot (.) [ms] | Duration of each dash (–) [ms]
Telephone (De-N-Wa) | – – | NA | 1000
Mail | – . | 200 | 500
LINE | . . . | 200 | NA
Facebook | – . . . | 200 | 400
Twitter | . . – | 100 | 500
Others (So-No-Ta) | – | NA | 500
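The sketch below encodes Table 2 so that each tool’s symbols expand into (vibration, pause) pairs with the 100-ms interval. It is an illustrative assumption of how the table could be stored, not the authors’ code; ASCII “-” stands in for the dash.

```python
# Table 2: symbols ("." short, "-" long) with dot and dash durations in ms;
# None stands for "NA" (that symbol is not used by the tool).
TOOL_PATTERNS = {
    "telephone": ("--", None, 1000),  # De-N-Wa
    "mail": ("-.", 200, 500),
    "LINE": ("...", 200, None),
    "Facebook": ("-...", 200, 400),
    "Twitter": ("..-", 100, 500),
    "others": ("-", None, 500),       # So-No-Ta
}
INTERVAL_MS = 100  # time between vibrations within the segmentation

def tool_pulses(tool: str) -> list[tuple[int, int]]:
    """Expand a tool's pattern into (vibration_ms, pause_after_ms) pairs."""
    symbols, dot_ms, dash_ms = TOOL_PATTERNS[tool]
    durations = [dot_ms if s == "." else dash_ms for s in symbols]
    last = len(durations) - 1
    return [(d, INTERVAL_MS if i < last else 0) for i, d in enumerate(durations)]

print(tool_pulses("Twitter"))  # [(100, 100), (100, 100), (500, 0)]
print(tool_pulses("mail"))     # [(500, 100), (200, 0)]
```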

3.3 Patterns for Surname of Sender

Lastly, the system notifies the user of the name of the caller when the communication is a telephone call, or of the sender when another communication tool is used.

Because a name is verbal information, vibrations are assigned according to the syllables in the name. However, the number of syllables alone cannot distinguish individual names, because many Japanese surnames have the same number of syllables (two to four). For example, “Suzuki,” “Tanaka,” and “Satou” all have three syllables in Japanese pronunciation. Therefore, we propose a method that assigns a vibration duration to the consonant and an interval between vibrations to the vowel of each syllable of the Japanese syllabary. Otherwise, it would be impractical to assign individual vibration patterns to the 46 characters of the Japanese syllabary.

The vibration durations and intervals between vibrations assigned by our method are shown in Tables 3 and 4. Each consonant is assigned a distinct vibration duration in multiples of 100 ms, increasing from ‘A’ to ‘W’ (see Table 3). Each vowel is assigned a distinct interval (after the vibration that represents the consonant) in multiples of 100 ms, increasing from ‘a’ to ‘o’ (see Table 4).

For instance, “ToYoTa” is converted to a pattern representing three syllables: a 400-ms vibration (T) followed by a 500-ms interval (o), then an 800-ms vibration (Y) followed by a 500-ms interval (o), and then a 400-ms vibration (T) followed by a 100-ms interval (a).

Table 3: Vibration duration for surname consonant (in Japanese alphabetical order).

Japanese consonant | Duration of vibration [ms]
A (vowel only) | 100
K | 200
S | 300
T | 400
N | 500
H | 600
M | 700
Y | 800
R | 900
W | 1000

Table 4: Interval between vibrations for surname vowel (in Japanese alphabetical order).

Japanese vowel | Time interval [ms]
a | 100
i | 200
u | 300
e | 400
o | 500
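The lookup described above can be sketched as follows for romanized syllables. Tables 3 and 4 list only the basic rows, so folding other romanizations (shi, chi, tsu, fu) and voiced consonants (z, d, g, b, p, j) into the row of their kana is our hypothetical assumption, as are the function and table names; the moraic “n” and palatalized syllables are not handled.

```python
# Table 3: vibration duration per consonant row ("" = vowel-only "A" row).
CONSONANT_MS = {"": 100, "k": 200, "s": 300, "t": 400, "n": 500,
                "h": 600, "m": 700, "y": 800, "r": 900, "w": 1000}
# Table 4: interval after the vibration, per vowel.
VOWEL_MS = {"a": 100, "i": 200, "u": 300, "e": 400, "o": 500}
# Hypothetical: romanizations not listed in Table 3 are folded into the kana
# row they belong to (shi -> s, chi/tsu -> t, fu -> h) or, for voiced
# consonants, into the row of their unvoiced counterpart.
ROW_ALIASES = {"sh": "s", "ch": "t", "ts": "t", "f": "h", "j": "s",
               "g": "k", "z": "s", "d": "t", "b": "h", "p": "h"}

def name_pulses(syllables: list[str]) -> list[tuple[int, int]]:
    """Encode romanized syllables such as ["To", "Yo", "Ta"] as
    (vibration_ms, interval_ms) pairs. Only simple (consonant +) vowel
    syllables are supported; the moraic "n" is not handled."""
    pulses = []
    for syllable in syllables:
        syllable = syllable.lower()
        consonant, vowel = syllable[:-1], syllable[-1]
        consonant = ROW_ALIASES.get(consonant, consonant)
        pulses.append((CONSONANT_MS[consonant], VOWEL_MS[vowel]))
    return pulses

print(name_pulses(["To", "Yo", "Ta"]))  # [(400, 500), (800, 500), (400, 100)]
```

The printed result matches the “ToYoTa” walk-through in the text.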

3.4 Implementation

We used Android Studio (Android Studio, 2020) to develop an application for an Android smartphone (Google Nexus 4) that generates vibration patterns for urgency, communication type, and sender’s name. We used the Vibrator class of the Android Application Programming Interface to implement the vibration patterns. We also used an application to collect participants’ answers in the experiments (described in the next section).
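The paper does not show how the patterns were passed to the Vibrator class. One plausible route, sketched here as an assumption, is the waveform form of the API, which takes a timing array whose first element is the delay before the first vibration, followed by alternating on and off durations in milliseconds; on a Nexus 4 this would be the legacy `Vibrator.vibrate(long[] pattern, int repeat)`, since `VibrationEffect.createWaveform` requires API level 26. The helper below (hypothetical name) only builds that array.

```python
def to_android_timings(pulses: list[tuple[int, int]],
                       initial_delay_ms: int = 0) -> list[int]:
    """Flatten (vibration_ms, pause_ms) pairs into the alternating off/on
    timing array used by Android waveforms: element 0 is the delay before
    the first vibration, then on, off, on, off, ..."""
    timings = [initial_delay_ms]
    for on_ms, off_ms in pulses:
        timings.extend([on_ms, off_ms])
    return timings

print(to_android_timings([(500, 100), (200, 0)]))  # [0, 500, 100, 200, 0]
```

On the phone, the resulting values would be passed as a `long[]` with a repeat index of -1, which plays the waveform once without repeating.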

4 EXPERIMENTS

We ran two experiments to assess how correctly participants discriminate the vibration patterns for communication type and sender surname (described above) when they sense and hear a smartphone’s vibration without seeing its screen. Because the urgency patterns are simple and have only two levels, we expected their correct answer rate to be higher than those of the communication-type and surname patterns, so we did not run an experiment on urgency.

4.1 Communication Type

We conducted an experiment to assess how the communication type (six types, Table 2) and the smartphone location (in a hand, in a bag, or in a trouser pocket; Figure 1) affect the correctness of a user’s discrimination among the vibration patterns. The dependent variables were the percentage of types identified correctly and the response time to a pattern. We used a within-subject experimental design. Six right-handed participants aged 21–23 years took part in this experiment.
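The two dependent variables could be computed from per-trial records as sketched below. The record layout and function name are hypothetical, and the three records are toy values for illustration, not data from this experiment.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Trial:
    location: str      # "hand", "bag" or "pocket"
    presented: str     # communication type that was vibrated
    answered: str      # communication type the participant selected
    response_s: float  # response time in seconds

def summarize(trials: list[Trial]) -> dict[str, tuple[float, float]]:
    """Per location: (correct answer rate in %, mean response time in s)."""
    by_location: dict[str, list[Trial]] = defaultdict(list)
    for trial in trials:
        by_location[trial.location].append(trial)
    summary = {}
    for location, group in by_location.items():
        correct = sum(t.presented == t.answered for t in group)
        mean_time = sum(t.response_s for t in group) / len(group)
        summary[location] = (100.0 * correct / len(group), mean_time)
    return summary

# Toy records for illustration only (not results from the experiment).
toy = [Trial("hand", "mail", "mail", 2.0),
       Trial("hand", "LINE", "mail", 3.0),
       Trial("pocket", "Twitter", "Twitter", 5.0)]
print(summarize(toy))  # {'hand': (50.0, 2.5), 'pocket': (100.0, 5.0)}
```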


4.1.1 Environment

We used an Android smartphone (Google Nexus 4) to generate the vibrations and a laptop computer on which participants selected one of the six communication types as their answer. The phone was connected to the computer via Wi-Fi. A participant sat on a chair about 20 cm from the table on which the computer was placed, sensed a vibration pattern, and selected the communication type that he or she perceived. The experiment was conducted in a quiet laboratory room.

The smartphone location was one of the experimental factors. For the in-hand condition, a participant held the smartphone in his or her preferred hand with the elbow resting on the table (Figure 1a). After sensing a vibration in the hand, the participant selected the communication type from a list by tapping a button on the phone’s screen with the other hand. For the in-bag condition, a participant sitting in the chair used his or her non-preferred hand to hold the handles of a bag that contained the phone and was placed on the floor (Figure 1b). After sensing a vibration through the handles, the participant selected the communication type from the list by clicking a button on the laptop screen with the preferred hand. For the in-pocket condition (Figure 1c), a standing participant had the phone in his or her right rear trouser pocket (we had asked participants to wear tight trousers, such as skinny jeans). After sensing a vibration, the participant selected a type on the computer screen.

Figure 1: Smartphone locations: (a) in the preferred hand, (b) in a bag, and (c) in a trouser pocket.

4.1.2 Procedure

The experiment consisted of two blocks. The design approach of the patterns was not explained before the first block but was explained before the second. In the first block, the participants memorized the vibration patterns for the communication types, but the experimenter did not explain the design approach, which assigns short or long vibrations to the syllables of a name. Therefore, participants had to memorize the patterns without knowing the reasoning behind them. In contrast, in the second block, participants memorized the patterns again after the experimenter had explained the approach to them. We hypothesized that the correct answer rate in the second block would be higher than that in the first block because we expected the patterns to be easier to memorize with an explanation of the design than without it.

The procedures of the two blocks were the same except for the prior explanation of the design. In the training phase, a participant first held the smartphone and memorized each vibration pattern by sensing it five times while wearing acoustic earmuffs to block the sound of the vibrations. The experimenter then asked the participant whether he or she had memorized all the patterns. If not, the experimenter had the participant sense and memorize the patterns five more times to ensure sufficient training. Then, as practice of the experimental task, the participant sensed each pattern twice in random order (i.e., 12 trials) and selected its type from the list while holding the phone in his or her hand.

In the performing phase, the participant performed the experimental task (sensing a vibration pattern and selecting its type) five times for each communication type, in random order, at each of the three phone locations. Each participant therefore performed 90 trials (30 trials × 3 locations). Finally, participants filled out a questionnaire about how easy the vibrations were to sense and to memorize.


In the second block, the experimenter explained the design approach of the patterns to the same participant. The rest of the procedure was the same as in the first block.
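The trial structure of one block can be sketched as below: 12 practice trials (each pattern twice) and 90 performing trials (each type five times per location). The function names are hypothetical, and the location order is kept fixed here because the paper does not describe how it was ordered.

```python
import random

TYPES = ["telephone", "mail", "LINE", "Facebook", "Twitter", "others"]
LOCATIONS = ["hand", "bag", "pocket"]

def practice_trials(rng: random.Random) -> list[str]:
    """Training: every pattern twice in random order (12 trials)."""
    trials = TYPES * 2
    rng.shuffle(trials)
    return trials

def performing_trials(rng: random.Random,
                      repetitions: int = 5) -> list[tuple[str, str]]:
    """Performing phase: each type five times in random order per location.

    The location order is fixed here; the paper does not describe it.
    """
    schedule = []
    for location in LOCATIONS:
        block = TYPES * repetitions
        rng.shuffle(block)
        schedule.extend((location, tool) for tool in block)
    return schedule

rng = random.Random(1)  # fixed seed so a session can be regenerated
print(len(practice_trials(rng)), len(performing_trials(rng)))  # 12 90
```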

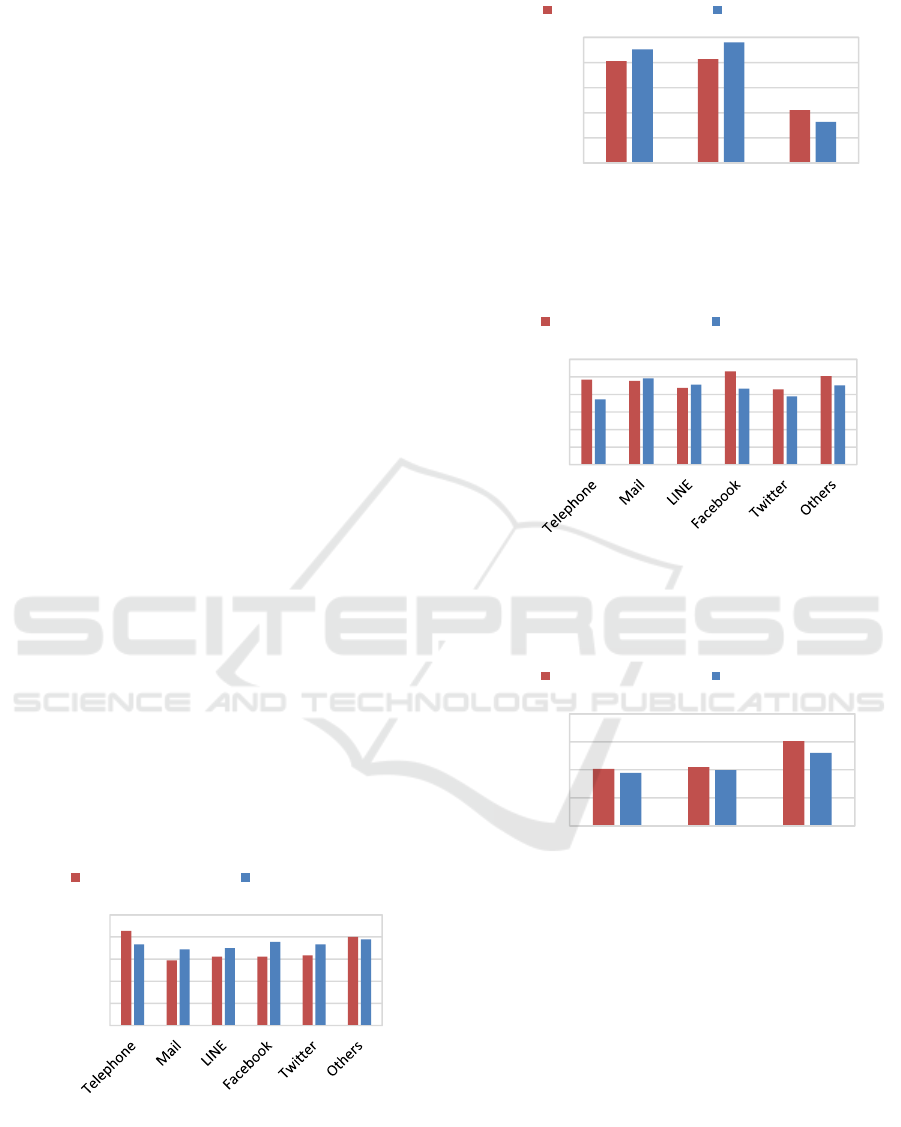

4.1.3 Result

Figures 2 and 3 show the average correct answer rates of all participants in the two blocks by communication type and by phone location, respectively. As shown in Figure 2, the rates ranged from 59% to 86% without the explanation and from 69% to 78% with the explanation. A two-way ANOVA (p < 0.05) showed a significant difference across the phone locations but not among the communication types, and no interaction between the communication-type and phone-location factors. Multiple comparisons (p < 0.05) showed significant differences between the in-hand and in-pocket locations and between the in-bag and in-pocket locations: the correct answer rate in the in-pocket condition was significantly lower than those in the in-hand and in-bag conditions.

Figures 4 and 5 show the average response times of all participants in the two blocks by communication type and by phone location, respectively. A two-way ANOVA (p < 0.05) showed significant differences among the communication types and across the phone locations, but no interaction between type and location. Multiple comparisons (p < 0.05) showed significant differences between the in-hand and in-pocket locations and between the in-bag and in-pocket locations; overall, the response time in the in-pocket condition was longer than those in the other locations. There were also significant differences between “mail” and “Twitter” and between “mail” and “telephone (De-N-Wa).”

Figure 2: Average correct answer rate of each of the communication types in presence or absence of the explanation.

Figure 3: Average correct answer rate of each of the locations in presence or absence of the explanation.

Figure 4: Average response time of each of the communication types in presence or absence of the explanation.

Figure 5: Average response time of each of the locations in presence or absence of the explanation.

A summary of the participants’ responses to the questionnaire about the vibration patterns is shown in Table 5. Most participants answered that the vibration patterns were easy to sense in the in-hand and in-bag conditions but hard to sense in the in-pocket condition.


Table 5: Number of answers about sensitivity of the vibrations.

Question | Explanation | Very hard | Hard | Neither | Easy | Very easy
Ease of memorization of vibration patterns | without | 0 | 1 | 1 | 4 | 0
 | with | 0 | 2 | 0 | 1 | 3
Sensitivity of in-hand vibration | without | 0 | 0 | 0 | 2 | 4
 | with | 0 | 0 | 0 | 1 | 5
Sensitivity of in-bag vibration | without | 0 | 0 | 1 | 2 | 3
 | with | 0 | 1 | 0 | 2 | 3
Sensitivity of in-pocket vibration | without | 5 | 1 | 0 | 0 | 0
 | with | 6 | 0 | 0 | 0 | 0

4.1.4 Discussions

We conclude that users can sense the syllabic vibration patterns in the in-hand and in-bag conditions and correctly discriminate the six patterns, because people usually touch a smartphone or hold a bag with a hand, and mechanoreceptors for vibration perception, such as Meissner’s corpuscles, are densely distributed in the hand (Uchikawa, 2008). In contrast, users can hardly sense in-pocket vibrations, because the trouser fabric separates the phone from the skin and the density of Meissner’s corpuscles on the hip is lower than that on the hand.

Our hypothesis that users could memorize the syllabic vibration patterns more easily after having the design explained to them was not supported. We found that users had difficulty discriminating similar patterns, such as LINE (. . .) and mail (– .), regardless of whether they knew the design approach. On the other hand, their response times were shorter after the explanation.

4.2 Names of Senders

We conducted a validation experiment to assess the correctness of users’ discrimination among the vibration patterns for six senders’ surnames when the vibrating smartphone is in a hand or in a trouser pocket. The factors in this experiment were vibration pattern, smartphone location, and sound insulation. The vibration-pattern factor had six levels: the typical Japanese three-syllable names “I-to-u,” “Su-zu-ki,” “Ta-na-ka,” “Sa-to-u,” “Ka-to-u,” and “Yo-shi-da.” The phone-location factor had two levels: in-hand and in-pocket. The in-bag condition was not included because its correct answer rate was the same as or greater than that of the in-hand condition, as discussed above. The sound-insulation factor, added to assess differences in correctness between vibration-only and vibration-with-sound conditions, had two levels: using or not using acoustic earmuffs. We therefore assessed four experimental conditions (two location and two sound conditions), and the dependent variables were the percentage of names answered correctly and the response time to a pattern.

We used a within-subject experimental design. Five right-handed participants aged 21–22 years took part in this experiment. The experimental environment was the same as in the previous experiment.

4.2.1 Procedure

First, the experimenter explained the design method (Tables 3 and 4) to a participant and demonstrated the vibration patterns. The participant then checked the vibrations by holding the smartphone in his or her hand without wearing the acoustic earmuffs. In the training phase, the participant sensed the vibration pattern for each surname twice in random order. In the performing phase, the participant sensed each pattern five times in random order in each condition and answered which sender’s name the pattern represented. Finally, the participant was interviewed about the understandability of the patterns.

4.2.2 Result

Figure 6 shows the average correct answer rates of all participants for each of the six surnames across all conditions, and Figures 7a and 7b show the average correct answer rates for the sound-insulation and phone-location factors. As shown in Figure 6, the highest correct answer rate was over 70% (Suzuki and Yoshida), but the lowest rate was 30% (Satou). A three-way ANOVA (p < 0.05) showed significant differences among the surnames, between using and not using acoustic earmuffs, and between the in-hand and in-pocket conditions.

Multiple comparisons (p < 0.05) showed significant differences between “Katou” and “Yoshida,” between “Satou” and “Suzuki,” and between “Satou” and “Yoshida.” “Suzuki” and “Yoshida” were answered more correctly (Figure 6) and more quickly (Figure 8a) than the four other surnames. Notably, most participants correctly answered “Yoshida” the first time its pattern was presented, because the vibration pattern for “Yoshida” is very different from those for the other surnames. Additionally, some participants said that it was difficult to discriminate between “Itou,” “Satou,” and “Katou” because their spoken forms are similar and so are their vibration patterns.

Figure 6: Average correct answer rate of each of the surnames.

Figure 7: Average correct answer rate (a) with or without the acoustic earmuffs and (b) by each of the locations.

The response times of all participants for each of the six surnames across all conditions are shown in Figure 8a, and those for the sound-insulation factor in Figure 8b. We found a significant difference between using and not using acoustic earmuffs: participants identified a name’s vibration pattern more quickly without the acoustic earmuffs.

Figure 8: Average response time of (a) each of the surnames

and (b) with or without use of the acoustic earmuffs.

4.2.3 Discussions

We conclude that it is difficult for users to identify a particular name from its syllabic vibration pattern in our approach. Discriminating between similar name patterns was especially difficult, although a distinctive pattern was not difficult to identify. We also found that participants relied not only on the vibration but also on its sound to discriminate the patterns.
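To illustrate why “Itou,” “Satou,” and “Katou” are easily confused while “Yoshida” stands out, the sketch below encodes the six surnames with Tables 3 and 4 and ranks name pairs by a simple distance between their timing vectors. The L1 distance is a hypothetical stand-in for perceptual similarity, not a measure used in this study, and mapping “shi” and “zu” to the S row and “da” to the T row is our assumption.

```python
from itertools import combinations

# Tables 3 and 4 ("" = vowel-only "A" row).
CONSONANT_MS = {"": 100, "k": 200, "s": 300, "t": 400, "n": 500,
                "h": 600, "m": 700, "y": 800, "r": 900, "w": 1000}
VOWEL_MS = {"a": 100, "i": 200, "u": 300, "e": 400, "o": 500}
# Assumption: shi/zu follow the S row and da follows the T row.
ROW_ALIASES = {"sh": "s", "z": "s", "d": "t"}

NAMES = {
    "Itou": ["i", "to", "u"], "Suzuki": ["su", "zu", "ki"],
    "Tanaka": ["ta", "na", "ka"], "Satou": ["sa", "to", "u"],
    "Katou": ["ka", "to", "u"], "Yoshida": ["yo", "shi", "da"],
}

def encode(syllables: list[str]) -> list[int]:
    """Flatten a name into [vibration_ms, interval_ms, vibration_ms, ...]."""
    timings = []
    for syllable in syllables:
        consonant, vowel = syllable[:-1], syllable[-1]
        consonant = ROW_ALIASES.get(consonant, consonant)
        timings += [CONSONANT_MS[consonant], VOWEL_MS[vowel]]
    return timings

def distance(a: list[int], b: list[int]) -> int:
    """Hypothetical dissimilarity: L1 distance between equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))

codes = {name: encode(syl) for name, syl in NAMES.items()}
pairs = sorted(combinations(codes, 2),
               key=lambda p: distance(codes[p[0]], codes[p[1]]))
for a, b in pairs[:3]:
    print(a, b, distance(codes[a], codes[b]))
# Satou Katou 100
# Itou Katou 200
# Itou Satou 300
```

In this toy metric the three names that participants confused form the three closest pairs, and “Yoshida” is at least 1100 ms away from every other name; the metric ignores how vibration durations are actually perceived, so it only illustrates the observation rather than explaining it.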

5 EVALUATION

We conducted an experiment to evaluate the summative usability of our proposed syllabic vibration patterns, which combine three kinds of notification: the degree of urgency, the communication type, and the sender’s name. The factors in this experiment were phone location and sound insulation. The phone-location factor had two levels: in the hand and in a trouser pocket while standing. The sound-insulation factor had two levels: wearing acoustic earmuffs and not wearing them. The dependent variables were the percentage of patterns answered correctly and the response time to a pattern.

We used a within-subject experimental design. Five right-handed participants aged 21–22 years took part in this experiment. The experimental environment was the same as described in the previous sections.

[Plot panels: correct answer rate (%) by surname of sender; (a) with (presence) and without (absence) acoustic earmuffs; (b) by phone’s location (hand, pocket).]

[Figure 8 plot panels: (a) response time (s) by surname of sender; (b) response time (s) with (presence) and without (absence) acoustic earmuffs.]

CHIRA 2020 - 4th International Conference on Computer-Human Interaction Research and Applications


5.1 Procedure

First, the experimenter explained the design method described above to the participant and demonstrated the vibration patterns. Then, in a training phase, the participant experienced the vibration patterns five times while holding the smartphone in his or her hand without wearing the acoustic earmuffs. Each pattern combined three segmentations (urgency, communication type, and sender’s name) chosen at random, and the patterns were presented in random order. After sensing the three sequential segmentations, the participant reported the content of each segmentation as quickly as possible.
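The random composition of a trial pattern can be sketched in Python. This is a minimal sketch, assuming each of the three segmentations is drawn independently and uniformly; the labels below (`urgent`, `type_1`, `sender_1`, and so on) are hypothetical placeholders rather than the words and surnames used in the study, and the helper names `random_pattern` and `training_block` are ours.

```python
import random
from typing import List, NamedTuple, Optional

# Hypothetical placeholder labels: two urgency levels, six communication
# types and six senders' surnames, as in the study, but not the actual words.
URGENCY = ["urgent", "non-urgent"]
TYPES = [f"type_{i}" for i in range(1, 7)]
SENDERS = [f"sender_{i}" for i in range(1, 7)]


class Pattern(NamedTuple):
    """One notification: urgency, then communication type, then sender."""
    urgency: str
    comm_type: str
    sender: str


def random_pattern(rng: random.Random) -> Pattern:
    # Each segmentation is drawn independently and uniformly (assumption).
    return Pattern(rng.choice(URGENCY), rng.choice(TYPES), rng.choice(SENDERS))


def training_block(n_trials: int = 5, seed: Optional[int] = None) -> List[Pattern]:
    """Return n_trials randomly combined three-segmentation patterns."""
    rng = random.Random(seed)
    return [random_pattern(rng) for _ in range(n_trials)]


for pattern in training_block(seed=42):
    print(pattern.urgency, pattern.comm_type, pattern.sender)
```

With 2 × 6 × 6 = 72 possible combinations, five training trials cover only a small sample, which is why the sketch draws them at random rather than enumerating them.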

Next, in a performance phase, the participant performed the task 10 times in each of four conditions, that is, the combinations of the two phone locations and with or without the earmuffs. After completing all trials, the participant was interviewed about the understandability of the patterns.
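The performance phase amounts to 4 conditions × 10 trials = 40 trials per participant. The sketch below enumerates the 2 × 2 conditions and expands them into a trial schedule. Shuffling the condition order per participant and running each condition as one block are our assumptions, since the text does not say how conditions were ordered, and `build_schedule` is a hypothetical helper.

```python
import itertools
import random
from collections import Counter
from typing import List, Optional, Tuple

# Factors and levels from the Evaluation section.
LOCATIONS = ["hand", "pocket"]
EARMUFFS = ["with earmuffs", "without earmuffs"]
TRIALS_PER_CONDITION = 10


def build_schedule(seed: Optional[int] = None) -> List[Tuple[str, str, int]]:
    """Expand the 2 x 2 conditions into (location, earmuffs, trial) tuples.

    Assumption: the order of the four conditions is shuffled per participant,
    and the trials of one condition are run as a single block.
    """
    rng = random.Random(seed)
    conditions = list(itertools.product(LOCATIONS, EARMUFFS))
    rng.shuffle(conditions)
    return [
        (loc, muffs, trial)
        for loc, muffs in conditions
        for trial in range(TRIALS_PER_CONDITION)
    ]


schedule = build_schedule(seed=7)
print(len(schedule))  # 40 (4 conditions x 10 trials)
print(Counter((loc, muffs) for loc, muffs, _ in schedule))
```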

5.2 Result

5.2.1 Correct Answer Rate

Figure 9 shows the average correct answer rates across all participants in each condition, both from urgency to communication type (two segmentations) and from urgency to sender (three segmentations). There were significant differences between the phone locations and between using and not using acoustic earmuffs (two-way ANOVA, p < 0.05). As Figure 9 shows, the correct answer rate for three segmentations in the in-pocket, with-earmuffs condition was 0%; discriminating the patterns by vibration alone in a pocket was therefore almost impossible. In contrast, in the in-hand, without-earmuffs condition, the correct answer rate was 78% for three segmentations and 98% for two segmentations. Therefore, notifying one of two degrees of urgency and one of six communication types is sufficiently usable when the user can feel the vibration patterns, and it is important to present the vibration together with its sound.
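We read the two reported rates as cumulative: a trial counts as correct "to type" only if both the urgency and the communication type are answered correctly, and "to sender" only if all three segmentations are. This reading is an assumption, and the helpers below are illustrative. The sketch also computes pure-guessing chance levels for 2 urgency levels, 6 types and 6 senders, namely 1/12 (about 8.3%) and 1/72 (about 1.4%), which put the 98% and 78% in-hand rates in context.

```python
from fractions import Fraction
from typing import List, Tuple

Answer = Tuple[str, str, str]  # (urgency, communication type, sender)


def score_trial(presented: Answer, answered: Answer) -> Tuple[bool, bool]:
    """Cumulative scoring: 'to type' needs urgency and type correct,
    'to sender' additionally needs the sender correct."""
    to_type = presented[:2] == answered[:2]
    to_sender = to_type and presented[2] == answered[2]
    return to_type, to_sender


def correct_rates(trials: List[Tuple[Answer, Answer]]) -> Tuple[float, float]:
    """Percentage of trials correct up to the type and up to the sender."""
    scores = [score_trial(p, a) for p, a in trials]
    n = len(scores)
    to_type = 100 * sum(t for t, _ in scores) / n
    to_sender = 100 * sum(s for _, s in scores) / n
    return to_type, to_sender


# Chance of guessing correctly with 2 urgency levels, 6 types and 6 senders.
CHANCE_TO_TYPE = Fraction(1, 2 * 6)        # 1/12, about 8.3%
CHANCE_TO_SENDER = Fraction(1, 2 * 6 * 6)  # 1/72, about 1.4%

print(f"{float(CHANCE_TO_TYPE):.1%} {float(CHANCE_TO_SENDER):.1%}")
```

Under this scoring, a trial with the wrong urgency but the right sender still counts as wrong for both rates, so the three-segmentation rate can never exceed the two-segmentation rate.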

5.2.2 Response Time

Figure 10 shows the average response times across all participants in each condition, from urgency to communication type (two segmentations) and from urgency to sender (three segmentations). There was a significant difference between the in-hand and in-pocket conditions (two-way ANOVA, p < 0.05), but no significant difference between using and not using earmuffs. Thus, the presence or absence of the vibration sound had no significant effect on response time.

Figure 9: Average correct answer rate of sequential vibration patterns in each condition.

Figure 10: Average response time of sequential vibration patterns in each condition.
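The paper reports a two-way ANOVA without naming the variant. Because both factors were varied within subjects, one standard choice is a two-way repeated-measures ANOVA, in which each effect is tested against its own interaction with participants. The pure-Python sketch below assumes one mean value per participant per condition, stored as `y[s][i][j]` (participant s, location level i, earmuff level j); the function names and the example numbers are hypothetical, not the study's data. With five participants and two-level factors, each F has (1, 4) degrees of freedom, so p < 0.05 requires F above about 7.71; `scipy.stats.f.sf(F, 1, 4)` would give the exact p-value.

```python
from statistics import fmean


def sums_of_squares(y):
    """Partition the total sum of squares of y[s][i][j] (subject s, level i of A, level j of B)."""
    n, a, b = len(y), len(y[0]), len(y[0][0])
    S, A, B = range(n), range(a), range(b)
    g = fmean(y[s][i][j] for s in S for i in A for j in B)
    m_s = [fmean(y[s][i][j] for i in A for j in B) for s in S]
    m_a = [fmean(y[s][i][j] for s in S for j in B) for i in A]
    m_b = [fmean(y[s][i][j] for s in S for i in A) for j in B]
    m_ab = [[fmean(y[s][i][j] for s in S) for j in B] for i in A]
    m_sa = [[fmean(y[s][i][j] for j in B) for i in A] for s in S]
    m_sb = [[fmean(y[s][i][j] for i in A) for j in B] for s in S]
    return {
        "S": a * b * sum((m_s[s] - g) ** 2 for s in S),
        "A": n * b * sum((m_a[i] - g) ** 2 for i in A),
        "B": n * a * sum((m_b[j] - g) ** 2 for j in B),
        "AxB": n * sum((m_ab[i][j] - m_a[i] - m_b[j] + g) ** 2 for i in A for j in B),
        "AxS": b * sum((m_sa[s][i] - m_s[s] - m_a[i] + g) ** 2 for s in S for i in A),
        "BxS": a * sum((m_sb[s][j] - m_s[s] - m_b[j] + g) ** 2 for s in S for j in B),
        "AxBxS": sum(
            (y[s][i][j] - m_sa[s][i] - m_sb[s][j] - m_ab[i][j]
             + m_s[s] + m_a[i] + m_b[j] - g) ** 2
            for s in S for i in A for j in B
        ),
        "total": sum((y[s][i][j] - g) ** 2 for s in S for i in A for j in B),
    }


def rm_anova_2way(y):
    """Return {effect: (F, df_effect, df_error)} for A, B and A x B.

    Each effect is tested against its effect-by-subject interaction.
    """
    n, a, b = len(y), len(y[0]), len(y[0][0])
    ss = sums_of_squares(y)
    out = {}
    for effect, error, df_eff in (("A", "AxS", a - 1),
                                  ("B", "BxS", b - 1),
                                  ("AxB", "AxBxS", (a - 1) * (b - 1))):
        df_err = df_eff * (n - 1)
        out[effect] = ((ss[effect] / df_eff) / (ss[error] / df_err), df_eff, df_err)
    return out


# Made-up response times (s): 5 participants x (hand, pocket) x (with, without earmuffs).
example = [
    [[3.1, 2.8], [5.0, 4.6]],
    [[2.9, 2.5], [5.4, 5.1]],
    [[3.6, 3.0], [4.8, 4.9]],
    [[3.3, 3.2], [5.9, 5.0]],
    [[2.7, 2.9], [5.2, 4.4]],
]
for effect, (f, df1, df2) in rm_anova_2way(example).items():
    print(f"{effect}: F({df1}, {df2}) = {f:.2f}")
```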

5.3 Discussions

We found that, within the sequential vibration patterns, the two degrees of urgency were easy to discriminate, each communication type could be discriminated, and each sender’s name was difficult to discriminate. Therefore, we argue that vibration patterns for urgency and communication type can give a smartphone user enough information to decide whether to check the message immediately or later without looking at the smartphone screen. However, the vibration patterns for senders’ names need further improvement in discrimination correctness.

[Figure 9 plot: correct answer rate (%) for each combination of phone’s location (hand, pocket) and earmuffs (with, without), to type (two segmentations) and to sender (three segmentations).]

[Figure 10 plot: response time (s) for each combination of phone’s location (hand, pocket) and earmuffs (with, without), to type (two segmentations) and to sender (three segmentations).]


The interviews also revealed that the 800 ms interval was too short for participants to perceive the boundaries between segmentations and to discriminate the content of a vibration pattern. We will lengthen the interval to 1000 ms and evaluate it. Moreover, we plan to redesign the interval so that it changes dynamically as the user becomes habituated to the vibration patterns.
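Lengthening the interval from 800 ms to 1000 ms has a simple cost: if the interval only separates the three segmentations, a complete notification becomes 2 × 200 = 400 ms longer. The sketch below flattens segmentations into an off/on timing list in the layout accepted by Android’s `VibrationEffect.createWaveform(long[] timings, int repeat)`, and adds a simple staircase rule as one possible reading of a habituation-dependent interval. The pulse durations, step size and bounds are hypothetical values rather than the parameters of the proposed patterns, and `compose_timings` and `next_interval` are our own names.

```python
from typing import List, Tuple

Pulse = Tuple[int, int]  # (on_ms, off_ms) for one syllable-like pulse


def compose_timings(segments: List[List[Pulse]], interval_ms: int = 800) -> List[int]:
    """Flatten segmentations into alternating off/on durations in ms.

    The list starts with an off duration, as Android's
    VibrationEffect.createWaveform(long[] timings, int repeat) expects.
    The off time after the last pulse of each segmentation is replaced
    by interval_ms; nothing follows the final pulse.
    """
    timings = [0]  # no initial delay
    for k, segment in enumerate(segments):
        for p, (on_ms, off_ms) in enumerate(segment):
            timings.append(on_ms)
            last_pulse = p == len(segment) - 1
            if not last_pulse:
                timings.append(off_ms)
            elif k < len(segments) - 1:
                timings.append(interval_ms)  # gap before the next segmentation
    return timings


def next_interval(interval_ms: int, correct: bool, step_ms: int = 50,
                  lo_ms: int = 600, hi_ms: int = 1200) -> int:
    """Hypothetical staircase: shorten the gap after a correct answer,
    lengthen it after an error, and keep it within [lo_ms, hi_ms]."""
    proposed = interval_ms - step_ms if correct else interval_ms + step_ms
    return max(lo_ms, min(hi_ms, proposed))


# Hypothetical pulses for the urgency, type and sender segmentations.
segments = [[(300, 100)], [(150, 100), (150, 100)], [(200, 100), (100, 100)]]
extra = sum(compose_timings(segments, 1000)) - sum(compose_timings(segments, 800))
print(extra)  # 400: two gaps, each 200 ms longer
```

A staircase is only one way to adapt the interval; a rule based on running accuracy over several trials would react less to single errors.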

6 CONCLUSIONS

We designed syllabic vibration patterns that notify, on a smartphone, the urgency of an incoming message while it is being received, the communication type of the message, and the sender’s name, in order to reduce how often users look directly at the smartphone screen. We then conducted experiments to validate the discrimination correctness of the patterns and to evaluate their usability. We conclude that using syllabic vibration makes the patterns easy to memorize and recall, and makes each pattern discriminable through the hand, both directly and indirectly, because the vibrations resemble the sounds of the words they represent. However, we also conclude that assigning syllabic vibrations and intervals to senders’ names makes it difficult for users to discriminate between names, because of the number of names and the similarity of their vibration patterns.

We propose that, by feeling and/or hearing our proposed vibration patterns, smartphone users can look at the screen less often to check notifications and can decide whether to read the content of a message immediately or later. Additionally, we suggest that the design can deter smartphone use while walking.

REFERENCES

Al-Qudah, Z., Doush, I. A., Alkhateeb, F., Maghayreh, E. A., Al-Khaleel, O., 2014. Utilizing Mobile Devices' Tactile Feedback for Presenting Braille Characters: An Optimized Approach for Fast Reading and Long Battery Life. In Interacting with Computers, 26(1), 63–74. https://doi.org/10.1093/iwc/iwt017.

Android Studio. Retrieved June 14, 2020, from https://developer.android.com/studio/.

Azenkot, S., Ladner, R. E., Wobbrock, J. O., 2011. Smartphone haptic feedback for nonvisual wayfinding. In Proceedings of the 13th International ACM Conference on Computers and Accessibility, 281–282. https://doi.org/10.1145/2049536.2049607.

Exler, A., Dinse, C., Günes, Z., Hammoud, N., Mattes, S., Beigl, M., 2017. Investigating the perceptibility different notification types on smartphones depending on the smartphone position. In Proceedings of the 2017 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2017 ACM International Symposium on Wearable Computers, 970–976. https://doi.org/10.1145/3123024.3124560.

Gallud, J. A., Tesoriero, R., 2015. Smartphone Notifications: A Study on the Sound to Soundless Tendency. In Proceedings of the 17th International Conference on Human-Computer Interaction with Mobile Devices and Services Adjunct, 819–824. https://doi.org/10.1145/2786567.2793706.

Jayant, C., Acuario, C., Johnson, W., Hollier, J., Ladner, R., 2010. V-braille: haptic braille perception using a touch-screen and vibration on mobile phones. In Proceedings of the 12th International ACM Conference on Computers and Accessibility, 295–296. https://doi.org/10.1145/1878803.1878878.

Kokkonis, G., Minopoulos, G., Psannis, K. E., Ishibashi, Y., 2019. Evaluating Vibration Patterns in HTML5 for Smartphone Haptic Applications. In 2nd World Symposium on Communication Engineering, 122–126. https://doi.org/10.1109/WSCE49000.2019.9040998.

Kubozono, H., 1998. On the Universality of Mora and Syllable (Features on Theories of Syllable and Mora). In Journal of the Phonetic Society of Japan, 2(1), 5–15. https://doi.org/10.24467/onseikenkyu.2.1_5.

Lederman, S. J., 1991. Skin and touch. In Encyclopedia of Human Biology, 7, 51–63. Academic Press, San Diego.

LINE. Retrieved June 14, 2020, from https://line.me/en/.

Ministry of Internal Affairs and Communications, 2016. White paper: information and communications in Japan, Section 2. Tokyo.

Ohta, S., Kishimoto, T., Horiuchi, K., Saeki, N., Uchiyama, M., Kohno, T., Kawata, M., Nkamoto, H., 2010. Development of Tachifon, Mobile Phone Vibration Communication System and Server for Users with Visual and Hearing Impairment. In Kawasaki Medical Welfare Journal, 19(2), 329–338.

Okeke, F., Sobolev, M., Dell, N., Estrin, D., 2018. Good vibrations: can a digital nudge reduce digital overload? In Proceedings of the 20th International Conference on Human-Computer Interaction with Mobile Devices and Services, 1–12. https://doi.org/10.1145/3229434.3229463.

Oyama, T., Imai, S., Wake, T., 1994. Feeling/consciousness mental handbook. New edition. 1741. Seishin Shobo, Tokyo.

Uchikawa, K. (ed.), 2008. Auditory Sensation, Tactual Sensation and Vestibular Sensation, 213. Asakura Publishing, Tokyo.

Yonezawa, T., Nakazawa, J., Nagata, T., Tokuda, H., 2013. Vinteraction: Vibration-based Interaction for Smart Devices. In Journal of Information Processing, 54(4), 1498–1506.

Yoon, S., Lee, S., Lee, J., Lee, K., 2014. Understanding notification stress of smartphone messenger app. In CHI ’14 Extended Abstracts on Human Factors in Computing Systems, 1735–1740. https://doi.org/10.1145/2559206.2581167.
