Peer Tutoring Orchestration

Streamlined Technology-driven Orchestration for Peer Tutoring

Lighton Phiri¹, Christoph Meinel² and Hussein Suleman¹

¹ Department of Computer Science, University of Cape Town, Rondebosch 7701, Cape Town, South Africa

² Hasso Plattner Institute, University of Potsdam, Prof.-Dr.-Helmert-Str. 2-3, D-14482 Potsdam, Germany

Keywords:

Computer Supported Instruction, Orchestration, Peer Tutoring, Technology-enhanced Learning.

Abstract:

Peer tutoring models that involve senior students teaching junior students are a well established practice in most

large universities. While there is a range of teaching activities performed by tutors, these are often done in an

ad hoc manner. We propose to leverage organised orchestration in order to make peer tutoring more effective.

A prototype tutoring platform, aimed at facilitating face-to-face tutoring sessions, was implemented in order

to facilitate orchestration of activities in peer tutoring sessions. The tool was evaluated by 24 tutors for first

year Computer Science courses at a large university. The NASA Task Load Index (NASA-TLX) and Perceived

Usefulness and Ease of Use (PUEU) instruments were used to measure the orchestration load and usability

of the tool, respectively. The overall workload falls within acceptable limits. This initial result confirms the

feasibility of the early stage tools to implement organised orchestration for peer tutoring.

1 INTRODUCTION

Peer tutoring involves students learning with and from one another (Falchikov, 2001). The learning broadly involves individuals from similar social groupings helping one another to learn. The individuals who take on the role of teaching are tutors, while those being taught are tutees (Topping, 1996). In higher education, tutors are typically senior students in higher levels with little or no teaching qualification. The advantages of peer tutoring in higher education, such as small group learning and cost savings, are well documented (Bowman-Perrott et al., 2013; Topping, 1996; Beasley, 1997). With the widespread availability of general purpose technology and specialised educational technology, peer tutoring is becoming increasingly effective (Evans and Moore, 2013).

A technique commonly employed in large undergraduate courses involves forming smaller, manageable tutorial groups, which are administered by tutors. However, in the majority of these cases, the tutorial sessions are conducted in an informal manner. This is, in part, due to the fact that tutors usually do not have the formal training required to teach. In this work, we investigate the potential of technology-driven organised orchestration on peer-led tutoring, with a particular focus on pre-session management of learning activities.

Orchestration involves the management of processes and procedures that are performed by educators in formal learning environments (Dillenbourg, 2013). Roschelle et al. further state that orchestration is a Technology Enhanced Learning approach that focuses on challenges faced by educators when using technology in formal learning environments (Roschelle et al., 2013). Our previous work has highlighted flaws and shortcomings of contemporary orchestration of learning activities, primarily due to its ad hoc nature. We argue that the ad hoc nature of orchestration is a direct result of the lack of a standardised way of orchestrating learning activities (Phiri et al., 2016a). Furthermore, we propose a more streamlined approach for orchestration of learning activities: organised orchestration (Phiri et al., 2016b).

This work is a further attempt at exploring the potential applicability of our proposed approach in a different educational setting: peer tutoring sessions. We argue that due to its focus on curriculum content and, additionally, the lack of formal teaching training of tutors, peer tutoring could potentially be made more effective by leveraging organised orchestration.

We propose the design and implementation of a peer tutoring teaching platform aimed at facilitating the orchestration of tutor-led learning activities. A proof of concept pre-session management tool was developed based on an existing standard: IMS Global Simple Sequencing Specification (IMS Global Learning Consortium, 2003). We also present preliminary results gathered after evaluating the implementation of this tool.

Phiri, L., Meinel, C. and Suleman, H.

Peer Tutoring Orchestration - Streamlined Technology-driven Orchestration for Peer Tutoring.

DOI: 10.5220/0006339104340441

In Proceedings of the 9th International Conference on Computer Supported Education (CSEDU 2017) - Volume 1, pages 434-441

ISBN: 978-989-758-239-4

Copyright © 2017 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The main contributions of this paper are as follows:

1. A new, potentially viable approach to facilitate technology-driven orchestration of peer-led learning activities.

2. A use of the IMS Global Simple Sequencing Specification to facilitate organised orchestration of learning activities.

3. Experimental results to demonstrate the viability of tools for pre-session management of peer-led tutorial sessions.

The remainder of this paper is organised as follows: Section 2 is a synthesis of related work, and Section 3 presents design and implementation details of the prototype Web-based tool. In Section 4, the experimental design and results are outlined, while Section 5 discusses the implications of the results. Finally, Section 6 presents concluding remarks and future directions.

2 RELATED WORK

Peer Assisted Learning (PAL) has historically been employed in higher education, particularly in difficult courses and those with significantly large enrolments. While there exist many different models of PAL, Topping emphasises that Peer Tutoring and Cooperative Learning are the most common models (Topping, 2005). Peer tutoring typically focuses on the curriculum content, with clearly outlined procedures. In addition, participants will generally receive some form of training (Topping, 2005). In contrast, cooperative learning involves collaboration among students in order to achieve a shared goal (Johnson et al., 2000).

2.1 Technology for Peer-led Learning

There is a wide range of tools that have been employed to facilitate peer tutoring. However, most of these tools are aimed at facilitating interaction between peers and, additionally, enabling teachers to monitor interactions between peers.

Classwide Peer Tutoring Learning Management System (CWPT-LMS) provides tools and services required by teachers to implement CWPT (Greenwood et al., 2001). The software enables teachers to plan and measure progress. Unlike CWPT-LMS, our work focuses more on facilitating the activities performed by the tutors.

G-math Peer-Tutoring System is a Web-based application developed as a Massively Multiplayer Online Game, in order to facilitate interactions among connected users (Tsuei, 2009). The system is composed of two modules, which are operated by teachers and students. The core focus of the system is to improve mathematics outcomes of learners by facilitating interactions amongst the learners.

Due to the size of most Massive Open Online Courses (MOOCs), peer feedback has become an integral part of the assessment process. PeerStudio is an assessment platform that was implemented to take advantage of large MOOC enrolment numbers in order to facilitate rapid assessment feedback (Kulkarni et al., 2015).

2.2 Technology for Supporting Orchestration of Learning Activities

There have been numerous studies that have proposed techniques aimed at supporting the orchestration of learning activities. Niramitranon et al. proposed a toolset, consisting of a scenario designer, SceDer, in order to support one-on-one collaborative learning (Niramitranon et al., 2007). GLUEPS-AR is a system aimed at facilitating orchestration of learning scenarios in ubiquitous environments (Muñoz-Cristóbal et al., 2013). Some approaches have been more focused on computer-supported collaborative learning; for instance, GLUE!-PS facilitates deployment and management of learning designs in distributed learning environments (Prieto et al., 2014).

This paper is explicitly aimed at exploring the implications of facilitating the orchestration of learning activities by peer tutors during formal face-to-face interaction with learners.

3 A PEER-LED TUTORING ORCHESTRATION TOOL

3.1 Design Goals

The premise of our work is that peer-led tutorial sessions can be made more effective by the use of organised orchestration tools. A proof-of-concept toolkit was developed to serve as the basis for experiments to test this premise, and an initial evaluation was then conducted to assess the usability of the toolkit by tutors in the context of actual tutorial/course content.


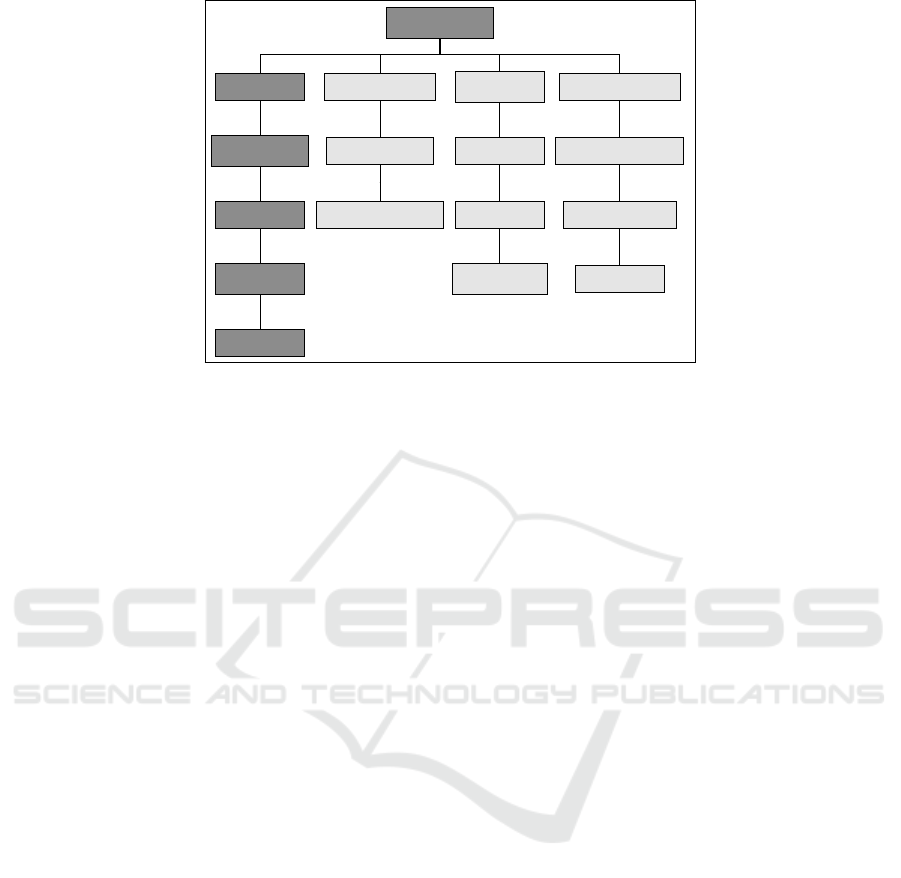

[Activity tree node labels: Sequencing; Directed; Branching; Linear; Looping; Random; Self-Guided; Full Choice; Partial Choice; Adaptive; Limited; Full; Intelligent; Collaborative; Instructor-Led; One-on-One; Cohorts]

Figure 1: IMS Global Simple Sequencing activity tree.

The toolkit has two major functions: pre-session management and in-session orchestration of activities. The pre-session management involves three specific tasks:

• Activity management, which is the specification of metadata associated with the activity;

• Resource management, which is the uploading and organising of resources; and

• Activity sequencing, which is the ordering of resources within the activity.

After an activity has been designed using the tool, it can be viewed or played back by a tutor in a tutorial session. There are two viewers for this purpose: a built-in viewer that uses HTML, and a PowerPoint export feature.

3.2 Key Components

As described above, there are four key components that implement the major functions of pre-session and in-session management of the tool. These are described further in the following sections.

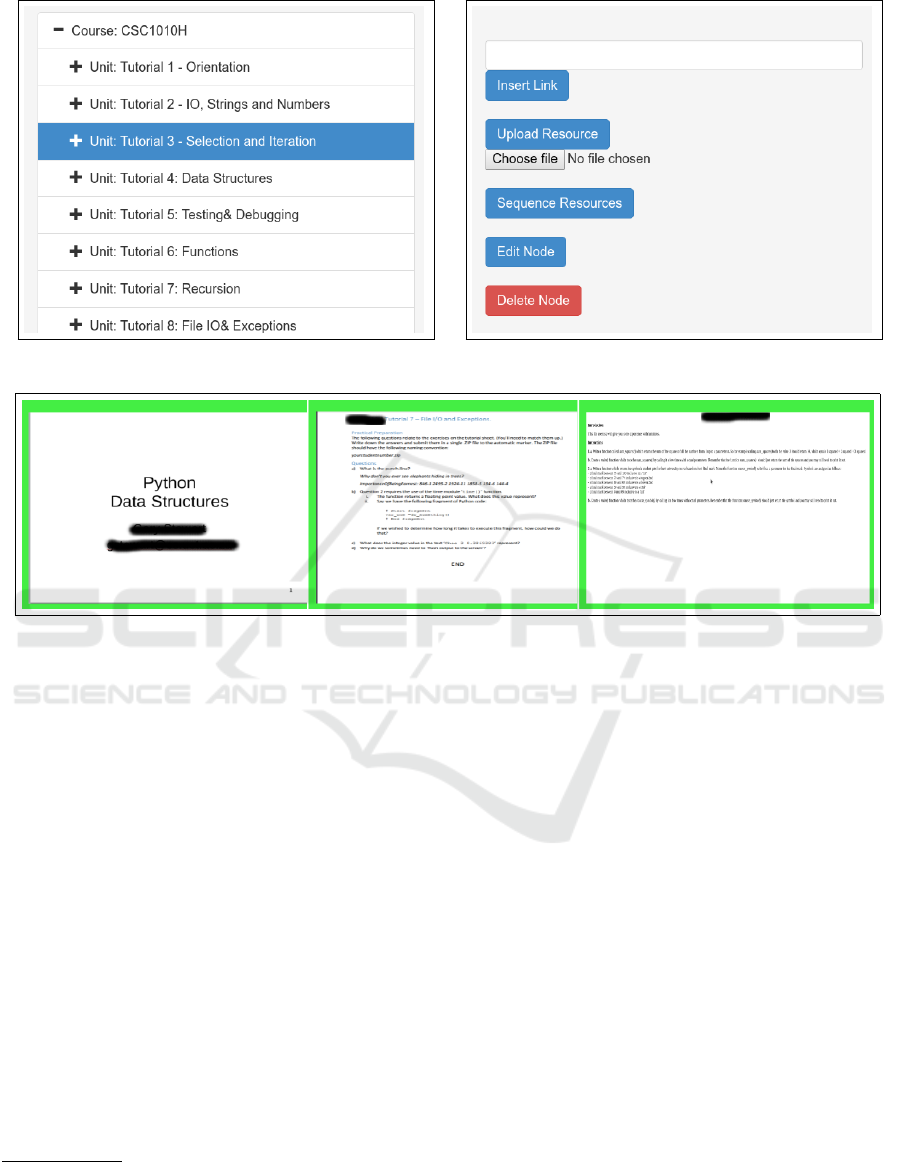

3.2.1 Activity Manager

The Activity Manager module makes it possible for session activities to be properly structured and organised. A two-level hierarchical node structuring technique allows for courses or modules to act as top-level container structures and for session activities to be presented as level two node structures. Teaching resources are then associated with the level two nodes, as described in Section 3.2.2. Figure 2 shows a screenshot of the structuring.
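The two-level structure described above can be sketched as a small data model. This is only an illustrative sketch, not the tool's actual schema: the class names, field names and the example file name are assumptions introduced here.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    """A teaching resource attached to a session activity (hypothetical fields)."""
    filename: str
    media_type: str  # e.g. "application/pdf"


@dataclass
class ActivityNode:
    """Level-two node: one session activity and its associated resources."""
    title: str
    resources: list[Resource] = field(default_factory=list)


@dataclass
class CourseNode:
    """Level-one node: a course or module acting as a top-level container."""
    code: str
    activities: list[ActivityNode] = field(default_factory=list)

    def add_activity(self, title: str) -> ActivityNode:
        """Create a level-two node under this course and return it."""
        node = ActivityNode(title)
        self.activities.append(node)
        return node


# One level-one node with two level-two nodes.
course = CourseNode("CSC1010H")
functions = course.add_activity("Tutorial 6: Python Functions")
recursion = course.add_activity("Tutorial 7: Recursion")
functions.resources.append(Resource("lecture-slides.pdf", "application/pdf"))
```

Keeping resources on the level-two nodes only, rather than on the course, mirrors the description that teaching resources are associated with session activities.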

3.2.2 Resource Manager

The Resource Manager module allows for resources such as PDF documents, video and audio files to be uploaded and associated with level two nodes. As shown in Figure 3, this is accomplished by selecting a specific level two node and subsequently uploading the desired resources. In addition, associated resources can later be downloaded.

3.2.3 Activity Sequencer

The Activity Sequencer module enables the user to construct a sequence chain that explicitly specifies the order in which the associated resources should be orchestrated.
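A sequence chain of this kind is essentially an ordered list that can be rearranged and then played back in order. The following minimal sketch assumes that reading; the `SequenceChain` class, its methods and the file names are hypothetical and are not taken from the tool.

```python
from dataclasses import dataclass, field


@dataclass
class SequenceChain:
    """Ordered chain of resource names for one session activity (illustrative)."""
    steps: list[str] = field(default_factory=list)

    def append(self, resource: str) -> None:
        """Add a resource to the end of the chain."""
        self.steps.append(resource)

    def move(self, old_index: int, new_index: int) -> None:
        """Reposition one step, keeping the relative order of the others."""
        self.steps.insert(new_index, self.steps.pop(old_index))

    def play(self):
        """Yield (position, resource) pairs in strictly linear order."""
        yield from enumerate(self.steps, start=1)


chain = SequenceChain()
for name in ["assignment.pdf", "lecture-slides.pdf", "lab-exercises.pdf"]:
    chain.append(name)
chain.move(1, 0)  # present the lecture slides first
order = [name for _, name in chain.play()]
```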

3.2.4 Activity Viewers

A basic HTML viewer can then be used to play back the sequence chain, as shown in Figure 4. In addition, another proof of concept viewer allows for the sequence chain to be downloaded as a PowerPoint document with the specified order. Furthermore, the sequence chain is accessible through the RESTful API, described in Section 3.3.3.

3.3 Implementation

3.3.1 Data Storage Standard

The IMS Global Simple Sequencing Specification (IMS Global Learning Consortium, 2003) was used as the underlying standard representation for data storage. The standard can be used to represent many different types of sequenced activities, as shown in Figure 1. In this proof-of-concept implementation, only the Directed path was used, as tutorial sessions are typically linear-structured directed activities.

Figure 2: Activity management.

Figure 3: Resource management.

Figure 4: Activity sequencer.

3.3.2 Scripting Platform

The scripting platform was implemented as a Web-based system¹. The front-end was implemented using HTML/CSS and JavaScript, together with Twitter Bootstrap². Node.js³ was used to implement core backend module services, as described below.

3.3.3 Scripting API

A RESTful Web service API (Fielding, 2000) enables access to specific activities and resources. This would effectively make it possible for tailored viewing user interfaces to be implemented. The API is currently implemented to facilitate access to sequenced activities and resources and, as such, only GET requests are allowed.
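The read-only behaviour can be illustrated with a small request dispatcher. This is a sketch under stated assumptions rather than the tool's Node.js implementation: the `/activities` paths, the JSON shapes and the in-memory `ACTIVITIES` store are all hypothetical.

```python
import json

# Hypothetical in-memory store: activity id -> ordered resource names.
ACTIVITIES = {
    "tutorial-6": ["lecture-slides.pdf", "lab-exercises.pdf"],
}


def handle(method: str, path: str) -> tuple[int, str]:
    """Return (HTTP status, JSON body) for a request to the sketch API."""
    if method != "GET":
        # The API is read-only, so every other verb is refused.
        return 405, json.dumps({"error": "method not allowed"})
    parts = [p for p in path.split("/") if p]
    if parts == ["activities"]:
        # List the identifiers of all sequenced activities.
        return 200, json.dumps(sorted(ACTIVITIES))
    if len(parts) == 2 and parts[0] == "activities" and parts[1] in ACTIVITIES:
        # Return one activity together with its sequence chain.
        return 200, json.dumps({"id": parts[1], "sequence": ACTIVITIES[parts[1]]})
    return 404, json.dumps({"error": "not found"})
```

A tailored viewer would only need the second route: it fetches one activity and renders the resources in the order given by `sequence`.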

¹ http://simba.cs.uct.ac.za/indefero/index.php/p/simplescripting

² http://getbootstrap.com

³ https://nodejs.org/en

4 EVALUATION

A user study was performed to better understand the orchestration load imposed by the described tool during scripting of learning activities and, additionally, to assess its potential usefulness to tutors. The emphasis of this initial study was on the reaction of tutors to the tool in a controlled environment, rather than an assessment of the tool in tutorial sessions.

4.1 Context Selection

The experiment was conducted in a Computer Science department at a large university. The context provides an ideal environment in which peer-led learning is essential. In order to complement the formal traditional lectures, the department hires senior undergraduate students to act as peer tutors.

Students enrolled for a typical course are split into smaller, more manageable tutorial groups that are administered by tutors, as shown in Table 1. In some of the courses, the tutors’ role involves facilitating tutorial sessions aimed at revising lecture material and responding to ad hoc student queries. Tutorial sessions are held once a week and topics addressed are those from the previous week.

Table 1: Tutorial groups in study environment.

| Course | Students | Tutors | Tutorial Groups |
|---|---|---|---|
| CSC1015F | 754 | 38 | 12 |
| CSC1017F | 165 | 9 | 3 |
| CSC1010H | 80 | 6 | 5 |
| CSC1011H | 26 | 2 | 2 |

4.2 Experimental Design

4.2.1 Instrumentation

The orchestration load was measured to determine the amount of effort needed to use the tool, or the degree of complexity of the tool. If the load is low, this indicates that the tutors are able to use the tool effectively to achieve the necessary orchestration of activities.

Measuring the orchestration load was accomplished through the use of the NASA Task Load Index (NASA-TLX) (Hart and Staveland, 1988) pencil and paper version⁴. The NASA-TLX instrument measures the subjective workload score using a weighted average rating of six subscales, outlined below.

Mental Demand. How much mental and perceptual activity was required (e.g. thinking, deciding, calculating, remembering, looking, searching, etc)? Was the task easy or demanding, simple or complex, exacting or forgiving?

Physical Demand. How much physical activity was required (e.g. pushing, pulling, turning, controlling, activating, etc)? Was the task easy or demanding, slow or brisk, slack or strenuous, restful or laborious?

Temporal Demand. How much time pressure did you feel due to the rate or pace at which the tasks or task elements occurred? Was the pace slow and leisurely or rapid and frantic?

Performance. How successful do you think you were in accomplishing the goals of the task set by the experimenter (or yourself)? How satisfied were you with your performance in accomplishing these goals?

Effort. How hard did you have to work (mentally and physically) to accomplish your level of performance?

Frustration. How insecure, discouraged, irritated, stressed and annoyed versus secure, gratified, content, relaxed and complacent did you feel during the task?

⁴ https://humansystems.arc.nasa.gov/groups/tlx/tlxpaperpencil.php

Measuring the subjective workload is a two-step process, outlined in Section 4.2.4, that involves pair-wise comparisons among the six subscales and individual ratings on each of the subscales.
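The two steps combine into a single score as follows: the six subscales form 15 pairs, each subscale's weight is the number of pairs in which it was chosen as the more important contributor, and the weighted score is the sum of weight times raw rating divided by 15. The sketch below follows this standard NASA-TLX procedure; the function name and the example responses are made up for illustration and are not study data.

```python
from itertools import combinations

SUBSCALES = [
    "Mental Demand", "Physical Demand", "Temporal Demand",
    "Performance", "Effort", "Frustration",
]


def weighted_workload(choices, ratings):
    """Compute the weighted NASA-TLX score.

    choices: for each of the 15 subscale pairs (in combinations() order),
             the subscale judged to contribute more to workload.
    ratings: raw rating (0-100) for each subscale.
    """
    pairs = list(combinations(SUBSCALES, 2))
    if len(choices) != len(pairs):
        raise ValueError("expected one choice per pair (15 in total)")
    weights = {name: 0 for name in SUBSCALES}
    for (a, b), winner in zip(pairs, choices):
        if winner not in (a, b):
            raise ValueError(f"{winner!r} is not in pair {(a, b)}")
        weights[winner] += 1  # tally how often each subscale was preferred
    # The weights always sum to 15, so this is a weighted average of the ratings.
    return sum(weights[s] * ratings[s] for s in SUBSCALES) / len(pairs)


# Made-up responses: always prefer the first subscale of each pair,
# and rate every subscale 30.
choices = [first for first, _ in combinations(SUBSCALES, 2)]
ratings = dict.fromkeys(SUBSCALES, 30)
score = weighted_workload(choices, ratings)
```

Because the weights sum to 15, a participant who gives every subscale the same raw rating gets that rating back as the weighted score, whatever the pair-wise choices were.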

In order to measure the usability and usefulness of

the tool, the technology acceptance model (TAM) was

used to evaluate the Perceived Usefulness (PU) and

Perceived Ease of Use (PEU) (Davis, 1989). TAM

facilitates the prediction of user attitudes and actual

usage by using participants’ subjective perceptions of

usefulness and ease of use of a system. The TAM

questionnaire was used in its entirety. Table 2 outlines

the PUEU questions used in the questionnaire.

4.2.2 Selection of Subjects

The study participants were chosen based on convenience, from a sample pool of all tutors who had tutored first year courses. A total of 24 participants were recruited, via email, after ethical clearance was granted. Each participant received ZAR 50.00 as compensation for their time.

4.2.3 Experimental Tasks

The experiment used official teaching materials for CSC1010H (outlined in Table 1) that are normally used and/or referenced by tutors during tutorial sessions in order to respond to student queries and concerns. The teaching materials used during the experiment sessions are described below.

Lecture Slides. Archived lecture slide notes used by lecturers in formal lecture sessions.

Laboratory Exercises. Practical laboratory exercise questions used in practical programming sessions.

Pre-practical Tutorials. Assessment questions, similar to assignment questions, meant to orient students to the assignment questions.

Assignment Tutorials. Assignment questions that are required to be handed in by students.

The three experiment tasks performed by the participants are outlined below. For each of the three tasks, participants repeated the procedures for two tutorial session scenarios: “Tutorial 6: Python Functions” and “Tutorial 7: Recursion”.

Task 1: Activity Management. This task involved activity management by creating two-level hierarchically structured orchestration activity nodes.

Task 2: Resource Management. This task involved resource management of all teaching materials required to orchestrate a typical learning session. This involved uploading teaching materials and subsequently associating them with their respective nodes.

Task 3: Sequencing Activities. This task involved the creation of a learning session sequence chain using specified teaching resources.

4.2.4 Procedure

One-on-one hour-long sessions were held with each of the 24 participants. Participants were briefed about the study; they were then requested to read and sign an informed consent form, explaining the purpose and procedures of the experiment.

Thereafter, participants performed the experiment tasks outlined in Section 4.2.3, using the tool described in Section 3.3.2. After completing each of the three tasks described in Section 4.2.3, participants were asked to fill out a NASA-TLX questionnaire in order to assess their subjective workload for each of the individual tasks. Specifically, this process was conducted as follows for each of the three tasks:

1. Participants executed the experiment task

2. Participants then filled out a NASA-TLX questionnaire

(a) Participants performed pair-wise comparisons for the six NASA-TLX subscales

(b) Participants provided raw ratings for the six NASA-TLX subscales

Finally, after performing the activities specific to each of the three tasks, participants filled out a PUEU questionnaire.

4.3 Results

4.3.1 NASA-TLX Ratings

The Shapiro-Wilk test was used to test the normality of the participant results for each of the three tasks. A one-sample median test was performed on Task 1 (p < 0.001), and one-sample t-tests were performed on Task 2 (p > 0.05) and Task 3 (p < 0.01). Task 1 and Task 3 scores were significantly lower than the 50% mark; however, Task 2 scores were not significantly lower than 50%.

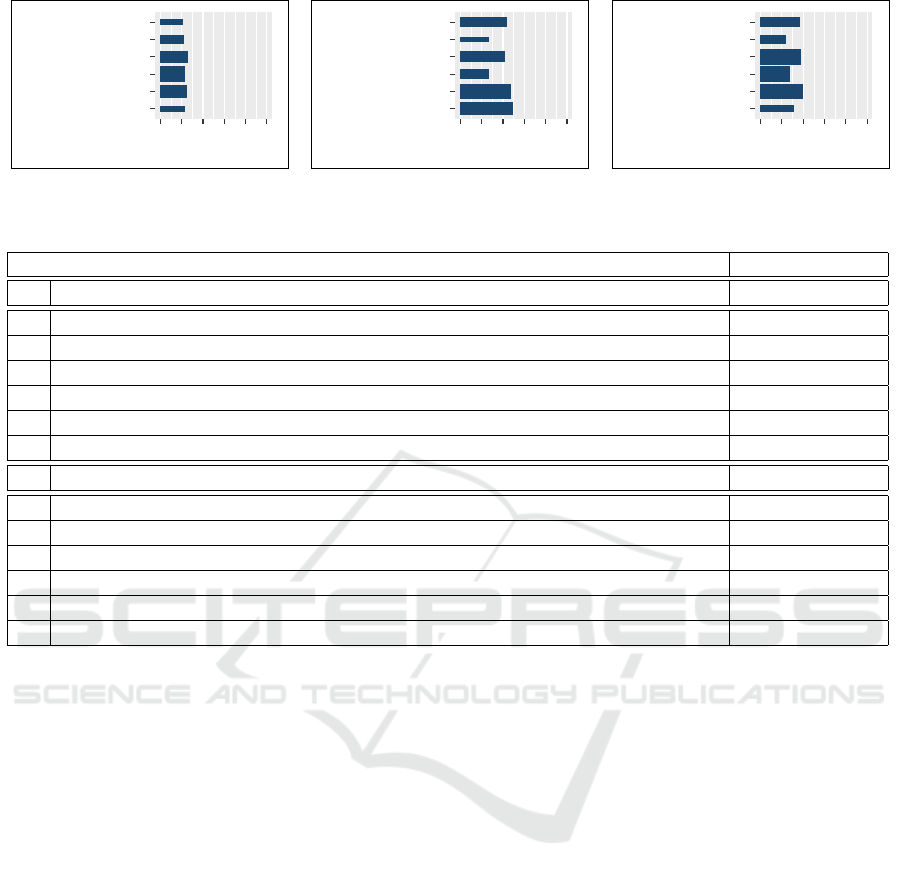

Figure 5 shows the weighted workload scores for all three tasks. The overall weighted scores for all three tasks are below the 50 mark, with Task 1 (Activity Management) requiring the least workload and Task 2 (Resource Management) requiring the most workload.

Figures 6 to 8 show subscale ratings for each of the three tasks. The width of each subscale bar indicates the importance of that factor, while the length represents the raw rating score for the subscale.

Figure 5: Overall weighted workload score. [Bar chart: Mean Weighted Rating (0 to 100) for each experiment task: Activity Management, Resource Management, Sequencing Activities]

In Task 1, the Performance subscale contributed the most towards the overall workload, while the Physical Demand subscale contributed the least. For Task 2, the Effort subscale was the highest contributor to the overall workload, while the Mental Demand subscale contributed the least. For Task 3, the Performance subscale contributed the most to the workload and the Frustration subscale contributed the least.

In terms of the raw ratings, all subscales were rated below the 50 mark; however, the Frustration subscale for Task 2 and the Effort subscale for Task 3 were closer to the 50 mark.

4.3.2 PUEU Scores

Table 2 shows the PU and PEU mean scores and their associated standard deviations. The Shapiro-Wilk test was used to test the normality of the individual question scores and the aggregate PU and PEU scores. One-sample t-tests (†) and Wilcoxon signed rank tests (‡) were conducted as shown in Table 2, with significance levels indicated by asterisks.

The aggregate PU and PEU scores were both significantly greater than 4, where 4 is the mid-point of the scale of responses. In addition, the scores for all 12 individual questions were also significantly greater than 4. The implication is that all results were statistically better than the neutral mid-point of the scale.

The results indicate the potential usefulness and

ease of use of the tool.
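Table 2 marks its comparisons as Wilcoxon signed rank tests, which require the raw responses. As a rough cross-check that uses only the reported summary statistics, a one-sample t statistic against the mid-point of 4 can be computed as t = (mean − 4) / (sd / √n). The sketch below is this swapped-in approximation, not the test the study ran, and the function name is an assumption.

```python
import math


def one_sample_t(mean: float, sd: float, n: int, mu0: float) -> float:
    """t statistic for H0: population mean equals mu0, from summary statistics."""
    return (mean - mu0) / (sd / math.sqrt(n))


# Aggregate scores from Table 2 (n = 24), compared with the scale mid-point 4.
t_pu = one_sample_t(5.12, 1.14, 24, 4.0)   # Perceived Usefulness
t_peu = one_sample_t(5.80, 0.85, 24, 4.0)  # Perceived Ease of Use
```

Both statistics come out well above zero, which is consistent in direction with the significance markers in Table 2, although a t statistic from rounded summary values is only indicative for ordinal questionnaire data.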

5 DISCUSSION

The purpose of this paper was to investigate the effect of technology-driven organised orchestration when applied to a specific educational setting: peer tutoring sessions. The NASA-TLX workload and PUEU scores provided an avenue for measuring the orchestration load and usability of the tool, respectively.

As shown in Figure 5, the results indicate that Resource Management requires the most workload. The high workload is a result of four subscales (Physical Demand, Temporal Demand, Effort and Frustration) having raw rating scores above 40, and also of these subscales contributing significantly to the weighted score. This can be attributed to the fact that this is the most involved of the three tasks, as all teaching resources have to be individually associated with specific activity nodes. Incidentally, some participants expressed a desire for a bulk upload feature in order to cut down on the amount of time required to associate resources with activity nodes. Another potential workaround would be to create templates that would only require a user to edit important fields.

Figure 6: Activity management. [Bar chart: Mean Raw Rating (0 to 100) for each NASA-TLX subscale]

Figure 7: Resource management. [Bar chart: Mean Raw Rating (0 to 100) for each NASA-TLX subscale]

Figure 8: Sequencing activities. [Bar chart: Mean Raw Rating (0 to 100) for each NASA-TLX subscale]

Table 2: Descriptive statistics for PUEU responses. * p < 0.05, ** p < 0.01, *** p < 0.001.

| Perceived Usefulness and Ease of Use (n=24) | Mean (sd) |
|---|---|
| A. Perceived Usefulness | 5.12 (1.14) *** ‡ |
| 1. Using the system in my job would enable me to accomplish tasks more quickly | 4.50 (1.67) * ‡ |
| 2. Using the system would improve my job performance | 5.42 (1.18) *** ‡ |
| 3. Using the system in my job would increase my productivity | 5.25 (1.15) *** ‡ |
| 4. Using the system would enhance my effectiveness on the job | 5.38 (1.41) *** ‡ |
| 5. Using the system would make it easier to do my job | 4.71 (1.63) * ‡ |
| 6. I would find the system useful | 5.46 (1.41) *** ‡ |
| B. Perceived Ease of Use | 5.80 (0.85) *** ‡ |
| 7. Learning to operate the system would be easy for me | 6.25 (1.15) *** ‡ |
| 8. I would find it easy to get the system to do what I want it to do | 5.46 (1.69) *** ‡ |
| 9. My interaction with the system would be clear and understandable | 5.79 (1.10) *** ‡ |
| 10. I would find the system to be flexible to interact with | 4.83 (1.40) *** ‡ |
| 11. It would be easy for me to become skillful at using the system | 6.33 (0.82) *** ‡ |
| 12. I would find the system easy to use | 6.13 (1.12) *** ‡ |

Activity Management required the least workload due to the simple nature of the task. All the subscales scored below 25, with the subscales contributing the most to the workload having the lowest raw ratings. The task only requires a user to specify the metadata necessary to uniquely identify nodes. Furthermore, the experimental task only required participants to create one level-one node and two level-two nodes.

As with Activity Management, the sequencing of learning activities did not require much workload. In fact, the reason why its score is higher than that of Activity Management could be attributed to it having been the last task to be performed.

The usability results were revealing. Most notably, the aggregate scores for both Perceived Usefulness and Perceived Ease of Use were significantly greater than 4, and therefore better than average. Furthermore, the individual question scores were also greater than 4, and therefore better than average.

6 CONCLUSIONS

This paper proposes to facilitate the formalisation of the face-to-face peer-led tutoring process by leveraging organised orchestration. It is argued that a tool can be developed to help tutors to more effectively organise both their pre-session and in-session activities. A proof-of-concept tool was designed and developed to meet this objective. The various functions of this tool were then assessed by tutors, with an emphasis on the pre-session management of activities. Initial results indicate that the tool, and therefore the approach, are viable as a means of organising tutor-led activities in tutorial sessions.

While the tool has been demonstrated to be usable and potentially useful from the tutor’s perspective, with an emphasis on the initial three pre-session activities, it has not been tested during tutorial sessions. Assessing the last of the four activities supported by the tool is the next planned experiment; it will complement these initial results with further evidence of the viability of the tool, with an emphasis on the in-session activities. It is expected that in-session use will confirm the effectiveness of organised orchestration in the classroom, as applied to the specific case of tutor-led small group teaching.

ACKNOWLEDGEMENTS

This work is funded, in part, by the Hasso Plattner

Institute. Any opinions, findings, and conclusions or

recommendations expressed in this work are those of

the authors and do not necessarily reflect the views of

the sponsors. We are grateful to Imaculate Mosha for

software development platform. We would also like

to thank all the study participants.

REFERENCES

Beasley, C. J. (1997). Students as teachers: The benefits of peer tutoring. In Pospisil, R. and Willcoxson, L., editors, Proceedings of the 6th Annual Teaching Learning Forum, pages 21–30, Perth. Murdoch University.

Bowman-Perrott, L., Davis, H., Vannest, K., Williams, L., Greenwood, C., and Parker, R. (2013). Academic benefits of peer tutoring: A meta-analytic review of single-case research. School Psychology Review, 42(1):39–55.

Davis, F. D. (1989). Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Quarterly, 13(3):319.

Dillenbourg, P. (2013). Design for classroom orchestration. Computers & Education, 69:485–492.

Evans, M. J. and Moore, J. S. (2013). Peer tutoring with the aid of the internet. British Journal of Educational Technology, 44(1):144–155.

Falchikov, N. (2001). Learning Together: Peer Tutoring in Higher Education. RoutledgeFalmer, London, 1st edition.

Fielding, R. T. (2000). Architectural Styles and the Design of Network-based Software Architectures. PhD thesis, University of California, Irvine. http://www.ics.uci.edu/~fielding/pubs/dissertation/top.htm. Accessed: November 15, 2016.

Greenwood, C. R., Arreaga-Mayer, C., Utley, C. A., Gavin, K. M., and Terry, B. J. (2001). Classwide peer tutoring learning management system applications with elementary-level English language learners. Remedial and Special Education, 22(1):34–47.

Hart, S. G. and Staveland, L. E. (1988). Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. Advances in Psychology, 52:139–183.

IMS Global Learning Consortium (2003). IMS Simple Sequencing Specification. https://www.imsglobal.org/simplesequencing/index.html. Accessed: November 15, 2016.

Johnson, D. W., Johnson, R. T., and Stanne, M. B. (2000). Cooperative Learning Methods: A Meta-Analysis Methods Of Cooperative Learning: What Can We Prove Works. Methods Of Cooperative Learning: What Can We Prove Works, pages 1–30.

Kulkarni, C. E., Bernstein, M. S., and Klemmer, S. R. (2015). Peerstudio: Rapid peer feedback emphasizes revision and improves performance. In Proceedings of the Second (2015) ACM Conference on Learning @ Scale, L@S ’15, pages 75–84, New York, NY, USA. ACM.

Muñoz-Cristóbal, J. A., Prieto, L. P., Asensio-Pérez, J. I., Jorrín-Abellán, I. M., Martínez-Monés, A., and Dimitriadis, Y. (2013). GLUEPS-AR: A System for the Orchestration of Learning Situations across Spaces Using Augmented Reality. In Hernández-Leo, D., Ley, T., Klamma, R., and Harrer, A., editors, Proceedings of the 8th European Conference on Technology Enhanced Learning, pages 565–568. Springer Berlin Heidelberg, Berlin, Heidelberg.

Niramitranon, J., Sharples, M., Greenhalgh, C., and Lin, C.-P. (2007). SceDer and COML: Toolsets for learning design and facilitation in one-to-one technology classroom. 15th International Conference on Computers in Education: Supporting Learning Flow through Integrative Technologies, ICCE 2007, pages 385–391.

Phiri, L., Meinel, C., and Suleman, H. (2016a). Ad hoc vs. Organised Orchestration: A Comparative Analysis of Technology-driven Orchestration Approaches. In Kumar, V., Murthy, S., and Kinshuk, editors, IEEE 8th International Conference on Technology for Education, pages 200–203, Mumbai. IEEE.

Phiri, L., Meinel, C., and Suleman, H. (2016b). Streamlined Orchestration: An Orchestration Workbench Framework for Effective Teaching. Computers & Education, 95:231–238.

Prieto, L. P., Asensio-Pérez, J. I., Muñoz-Cristóbal, J. A., Jorrín-Abellán, I. M., Dimitriadis, Y., and Gómez-Sánchez, E. (2014). Supporting orchestration of CSCL scenarios in web-based Distributed Learning Environments. Computers & Education, 73:9–25.

Roschelle, J., Dimitriadis, Y., and Hoppe, U. (2013). Classroom orchestration: Synthesis. Computers & Education, 69:523–526.

Topping, K. J. (1996). The effectiveness of peer tutoring in further and higher education: A typology and review of the literature. Higher Education, 32(3):321–345.

Topping, K. J. (2005). Trends in peer learning. Educational Psychology, 25(6):631–645.

Tsuei, M. (2009). The g-math peer-tutoring system for supporting effectively remedial instruction for elementary students. In 2009 Ninth IEEE International Conference on Advanced Learning Technologies, pages 614–618. IEEE.
