Bootstrapping a Semantic Lexicon on Verb Similarities

Shaheen Syed¹, Marco Spruit¹ and Melania Borit²

¹ Department of Information and Computer Sciences, Utrecht University, Utrecht, The Netherlands

² Norwegian College of Fishery Science, University of Tromsø, Tromsø, Norway

Keywords:

Semantic Lexicon, Bootstrapping, Extraction Patterns, Web Mining.

Abstract:

We present a bootstrapping algorithm that creates a semantic lexicon from a list of seed words and a corpus mined from the web. We exploit extraction patterns to bootstrap the lexicon and use collocation statistics to dynamically score new lexicon entries. Extraction patterns are scored beforehand by calculating the conditional probability of their verbs with respect to a non-related text corpus. We find that patterns built on highly domain-related verbs achieve the highest accuracy, and that collocation statistics affect accuracy both positively and negatively during the bootstrapping runs.

1 INTRODUCTION

Semantic lexicons for specific domains are becoming increasingly important in many Natural Language Processing (NLP) tasks, such as information extraction, word sense disambiguation, anaphora resolution, speech recognition, and discourse processing. Online lexical resources such as WordNet (Miller et al., 1990) and Cyc (Lenat, 1995) are useful for generic domains but often fall short for domains that include specific terms, jargon, acronyms, and other lexical variations. Handcrafting domain-specific lexicons can be time-consuming and costly. Various techniques have been developed to create semantic lexicons automatically, such as lexicon induction, lexicon learning, lexicon bootstrapping, lexical acquisition, hyponym learning, and web-based information extraction.

We define a semantic lexicon as a dictionary of hyponym words that share the same hypernym. For example, cat, dog, and cow are all hyponyms that share the hypernym ANIMAL. Likewise, the words red, green, and blue are semantically related through the hypernym COLOR. A semantic lexicon differs from an ontology or taxonomy in that it does not describe a formal representation of shared conceptualizations or information about concepts and their instances, nor does it provide a strict hierarchy of classes.

Several attempts have been made to automatically create semantic lexicons from text corpora by utilizing semantic relations in conjunctions (dogs and cats and cows), lists (dogs, cats, cows), appositives (labrador retriever, a dog), and compound nouns (dairy cow) (Riloff and Shepherd, 1997; Roark and Charniak, 1998; Phillips and Riloff, 2002; Widdows and Dorow, 2002). Others have used extraction patterns (Colombia was divided, the country was divided) (Thelen and Riloff, 2002; Igo and Riloff, 2009), instance/concept ("is-a") relationships (Pantel and Ravichandran, 2004), coordination patterns (Ziering et al., 2013a), multilingual symbiosis (Ziering et al., 2013b), or combinations thereof (Qadir and Riloff, 2012). Lexicon learning from informal text, such as social media, has also been explored (Qadir et al., 2015).

Learning semantic lexicons often relies on existing corpora that may not be available for all domains. Furthermore, little research on lexicon learning has been performed on web text from informative sites, forums, blogs, and comment sections, which contain content written by a variety of people and therefore exhibit different writing idiosyncrasies. We incorporate web mining techniques to build our own domain corpus and combine the BASILISK (Thelen and Riloff, 2002) bootstrapping algorithm with the Pointwise Mutual Information (PMI) scoring metric proposed by (Igo and Riloff, 2009). We base the hyponym relationships on the extraction pattern context of verb stem similarities and use a probability scoring metric to extract the most suitable verbs. Our aim is to use existing web content to create a semantic lexicon and subsequently use extraction patterns to find semantically related words. Extraction patterns, and more specifically the verbs within these patterns, are scored against a non-related text corpus to explore whether verbs that occur more frequently in domain text are more likely to be accompanied by semantically related nouns.

Syed, S., Spruit, M. and Borit, M.

Bootstrapping a Semantic Lexicon on Verb Similarities.

DOI: 10.5220/0006036901890196

In Proceedings of the 8th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2016) - Volume 1: KDIR, pages 189-196

ISBN: 978-989-758-203-5

Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The remainder of this paper is structured as follows. First, we present previous work in semantic lexicon learning for hypernym-hyponym relationships. Second, we detail our bootstrapping algorithm, the extraction of the text corpus, the creation of extraction patterns, and the scoring method. Third, we analyze the semantic lexicon and the accuracy of our algorithm during each successive run of the bootstrapping process. We then conclude our work and provide new research directions.

2 PREVIOUS WORK

(Riloff and Shepherd, 1997) used noun co-occurrence statistics to bootstrap a semantic lexicon from raw data. Their bootstrapping algorithm scored conjunctions, lists, appositives, and nominal compounds to find category words within a context window. It is one of the first attempts to build a semantic lexicon and relies on human intervention to review the words and select the best ones for the final dictionary. (Roark and Charniak, 1998) applied a similar technique but used a different definition of noun co-occurrence and a different way of scoring candidate words. (Riloff and Shepherd, 1997) ranked and selected candidate words based on the ratio of the noun's co-occurrences with the seed words to its total frequency in the corpus, while (Roark and Charniak, 1998) used log-likelihood statistics (Dunning, 1993) for the final ranking.

(Widdows and Dorow, 2002) used graph models of the British National Corpus for lexical acquisition. They focused on the relationships between nouns that occur as part of a list. In their graph, nodes represent nouns, and two nodes are linked when the nouns are conjoined by either and or or. The edges are weighted by the frequency of the co-occurrence. Their algorithm limits the entry of incorrect words into the candidate word list by using type frequency rather than token frequency.

(Phillips and Riloff, 2002) automatically created a semantic lexicon by exploiting strong syntactic heuristics. They distinguish between two types of lexicons, (1) proper noun phrases and (2) common nouns, by utilizing the syntactic relationships between them. Syntactic structures are defined by appositives, compound nouns, and "is-a" clauses, that is, identity clauses with the main verb to be. Statistical filtering is applied to avoid inaccurate syntactic structures and deterioration of the lexicon entries. (Pantel and Ravichandran, 2004) also examined "is-a" relationships, using concept signatures and a top-down approach. Their method first computes co-occurrence statistics for semantic classes and then finds the most appropriate hyponym relationship.

(Thelen and Riloff, 2002) proposed a weakly supervised bootstrapping algorithm (BASILISK) to learn semantic lexicons from extraction patterns. They used the AutoSlog (Riloff, 1996) extraction pattern learner and a list of manually selected seed words to build semantic lexicons for the MUC-4 proceedings, a corpus from the terrorism domain. Extraction patterns capture role relationships and are used to find noun phrases with similar semantic meaning as a result of syntax and lexical semantics. Their example verb robbed illustrates this: the subject of robbed often indicates the perpetrator, while the direct object of robbed could indicate the victim or target. To avoid inaccurate words entering the lexicon and to increase the overall accuracy, they learned multiple categories simultaneously. (Igo and Riloff, 2009) used BASILISK and applied co-occurrence statistics for hypernym and seed word collocation by computing a variation of Pointwise Mutual Information (PMI). They used web statistics to re-rank the words after the bootstrapping process was finished.

(Qadir and Riloff, 2012) used pattern-based dictionary induction, contextual semantic tagging, and coreference resolution in an ensemble approach. They combined the three techniques in a single bootstrapping process and added lexicon entries if words occurred in at least two of the three methods. Since each method exploits independent sources, their ensemble improves precision in the early stages of the bootstrapping process, in which semantic drift (Curran et al., 2007) can decrease the overall accuracy substantially. (Ziering et al., 2013b) also employed an ensemble method and used linguistic variations between multiple languages to reduce semantic drift.

We combine the BASILISK bootstrapping algorithm (Thelen and Riloff, 2002) and the co-occurrence statistics proposed by (Igo and Riloff, 2009) to dynamically score each word before it enters the lexicon. We compare BASILISK's AvgLog scoring metric with the PMI metric at each bootstrap run, rather than using PMI scores to re-rank the lexicon after the bootstrapping process has finished.

3 LEXICON BOOTSTRAPPING

We use a list of seed words to initiate the bootstrap-

ping process and use this set of seed words to create

KDIR 2016 - 8th International Conference on Knowledge Discovery and Information Retrieval

190

a highly related text corpus by mining the web. Web

mining was applied because domain specific corpora

are not always readily available. Before bootstrapping

begins, we use the linguistic expressions of extrac-

tion patterns (Riloff, 1996) and group noun phrases

when they occur with the same stemmed verb. The

stemmed verbs are then scored by a probability score

with respect to a non-related text corpus. Verbs that

are domain specific, that is, they infrequently occur

in general text are given a higher score. We then use

BASILISK’s bootstrapping algorithm and a PMI scor-

ing metric before adding new words to the lexicon.

The PMI score is a measure of association between

words. For this, we use web count statistics between

hyponym and hypernym words to calculate colloca-

tion statistics and utilize these scores to only allow

the strongest hyponyms to enter the lexicon, thus de-

creasing wrong lexicon entries during the bootstrap-

ping process. A schematic overview of the bootstrap-

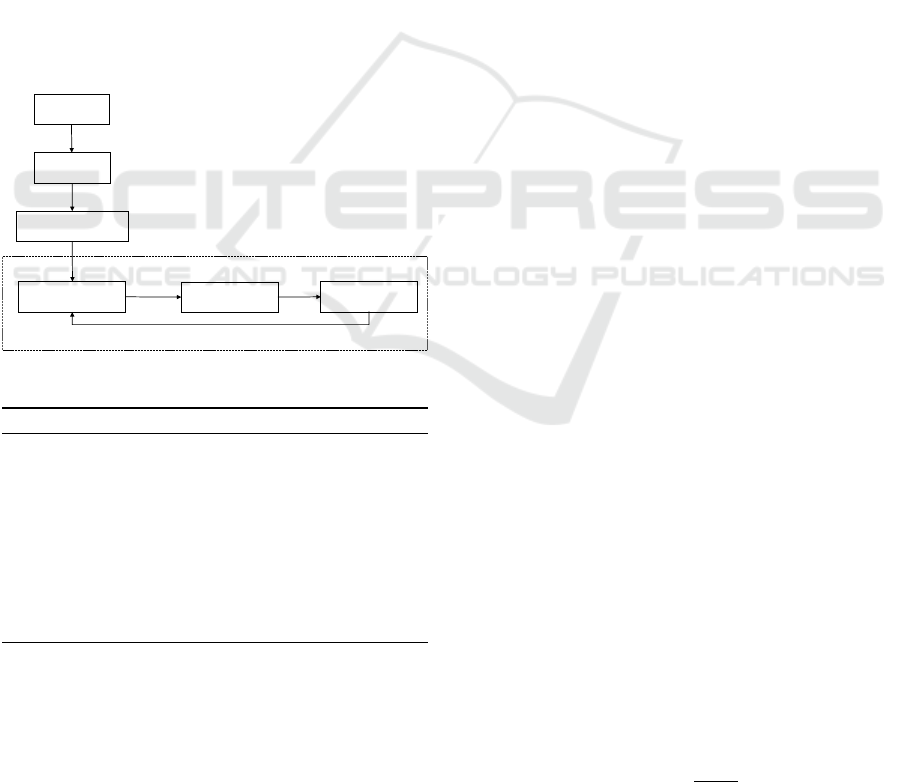

ping process is displayed in Figure 1 and Algorithm 1.

We will discuss the bootstrapping process in detail in

subsequent sections.

[Figure 1 diagram: seed words → (create) corpus → (extract) extraction patterns → (select topN patterns) pattern pool → (extract nouns) word pool → (add topN words) semantic lexicon; the semantic lexicon is initialized with the seed words.]

Figure 1: Schematic overview of the bootstrapping process.

Algorithm 1: Bootstrap Lexicon.

1: lexicon ← seedwords

2: corpus ← TopNSearches(seedwords)

3: patterns ← Patterns(corpus, 0.5 ≤ p ≤ 1.0)

4: for i = 0 to m − 1 do

5: patternpool ← Score(patterns, topN + i)

6: words ← GetNouns(patternpool)

7: lexicon ← lexicon ∪ ScoreWord({w ∈ words : w ∉ lexicon}, topN)

8: end for

9: return lexicon
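The loop of Algorithm 1 can be sketched in Python as below. This is a minimal sketch under stated assumptions, not the authors' implementation: the corpus has already been mined and turned into verb-stem patterns (lines 2-3 happen upstream), and the pattern and word scorers are passed in as functions so that RlogF, AvgLog, or PMI can be plugged in. The names `bootstrap`, `pattern_score`, and `word_score`, and the alphabetical tie-breaking, are hypothetical.

```python
from typing import Callable, Dict, List, Set

# Hypothetical types: a pattern maps a stemmed verb to the nouns grouped under it.
Patterns = Dict[str, Set[str]]
PatternScorer = Callable[[Set[str], Set[str]], float]    # (nouns, lexicon) -> score
WordScorer = Callable[[str, Set[str], Patterns], float]  # (word, lexicon, patterns) -> score


def bootstrap(seeds: List[str], patterns: Patterns, pattern_score: PatternScorer,
              word_score: WordScorer, iterations: int,
              top_n: int = 20, words_per_run: int = 1) -> List[str]:
    """Sketch of Algorithm 1, starting from pre-built patterns."""
    lexicon = list(seeds)          # line 1: entries in the order they were learned
    known = set(seeds)
    for i in range(iterations):    # line 4
        # line 5: rank every pattern against the current lexicon; the pool grows by 1 per run
        ranked = sorted(patterns, key=lambda p: pattern_score(patterns[p], known), reverse=True)
        pool = ranked[: top_n + i]
        # line 6: candidate nouns from the pool that are not yet in the lexicon
        candidates = {w for p in pool for w in patterns[p]} - known
        if not candidates:
            break
        # line 7: add the best-scoring candidates (ties broken alphabetically)
        best = sorted(candidates, key=lambda w: (-word_score(w, known, patterns), w))[:words_per_run]
        lexicon.extend(best)
        known.update(best)
    return lexicon                 # the learned lexicon, seeds first


if __name__ == "__main__":
    toy = {"catch": {"fish", "tuna", "cod"}, "regul": {"quota", "industry"},
           "creat": {"art", "fish", "value"}}
    overlap = lambda nouns, lex: len(nouns & lex)
    support = lambda w, lex, pats: sum(len(ns & lex) for ns in pats.values() if w in ns)
    print(bootstrap(["fish"], toy, overlap, support, iterations=2, top_n=1))
    # ['fish', 'cod', 'tuna']
```

The toy scorers in the demo are simple overlap counts chosen only to make the control flow visible; with `words_per_run=1` the sketch mirrors the one-word-per-run setting described in Section 3.6.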

3.1 Domain and Seed Words

We chose to build a single lexicon for the fisheries domain. The fisheries domain includes a multitude of knowledge production approaches, from mono- to transdisciplinary. Biologists, oceanographers, mathematicians, computer scientists, anthropologists, sociologists, political scientists, economists, and researchers from many other disciplines contribute to the fisheries body of knowledge, together with non-academic participants such as decision makers and stakeholders. Due to these diverse contributions of specialized language from a multitude of knowledge production approaches, the fisheries domain is characterized by a large body of words. For the same reason, this body of words is extremely rich in concepts. However, sometimes different words refer to the same abstraction (e.g., fishermen and fishers), and sometimes the same words refer to different abstractions (e.g., fisher behavior may mean one thing for an economist and something else for an anthropologist). In addition, the fisheries domain has a high frequency of compound words (e.g., fisheries management, fishing method), which differentiate it from other resource management domains, such as forestry. The definition of a hyponym-hypernym relation is therefore based on a more abstract level and also includes transitive relationships: if x is a hyponym of y, and y is a hyponym of z, then x is a hyponym of z.
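To make the transitive definition concrete, the following minimal sketch expands direct hyponym links into all implied hypernyms. The link dictionary and the function name `all_hypernyms` are hypothetical illustrations; the paper does not state that this closure is computed programmatically.

```python
from typing import Dict, Set


def all_hypernyms(word: str, direct: Dict[str, Set[str]]) -> Set[str]:
    """Follow direct hyponym -> hypernym links transitively (safe against cycles)."""
    found: Set[str] = set()
    stack = list(direct.get(word, ()))
    while stack:
        hypernym = stack.pop()
        if hypernym not in found:
            found.add(hypernym)
            stack.extend(direct.get(hypernym, ()))
    return found


# Hypothetical direct links: cod -> demersal fish -> fish -> FISHERIES
links = {"cod": {"demersal fish"}, "demersal fish": {"fish"}, "fish": {"FISHERIES"}}
print(sorted(all_hypernyms("cod", links)))  # ['FISHERIES', 'demersal fish', 'fish']
```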

The seed words were chosen by experts from the Arctic University of Norway, who were asked for a list of 10 nouns or compound nouns that best cover the domain. The list contains the phrases fishery ecosystem, fisheries management, fisheries policy, fishing methods, fishing gear, fishing area, fish, fish species, fishermen, and fish supply chain.

3.2 Building the Corpus

The fisheries corpus was created by running a Google search for each of the seed words and extracting the content of the first 30 result URLs. We enclosed the 10 search terms in quotation marks to force Google to return exact matches. We found that the first 30 search results provided sufficient text for the corpus while still containing search-related content. After mining the pages, we started an extensive data cleaning process. Besides removing HTML, JavaScript, and CSS code, we needed to remove text from headers, footers, labels, and sidebars that had entered the corpus, such as "click here", "all rights reserved", and "copyright". Finally, we scored the mined text fragments with an en-US and en-GB lookup dictionary $D$ as:

$$ S_{\text{text}_i} = \frac{1}{V_i} \sum_{j=1}^{V_i} w_j \tag{1} $$

where $V_i$ is the vocabulary size of $\text{text}_i$, and $w_j = 1$ if the $j$-th vocabulary word of $\text{text}_i$ is a match in $D$ ($w_j \in D$) and $w_j = 0$ otherwise. We found a threshold of $S_{\text{text}_i} \geq 0.3$ sufficient to clean the corpus further, as it removes non-English and improperly formatted text. For example, phrases like "Not logged inTalkContributionsCreate accountLog in" bear no meaning and serve navigational purposes only when rendered by the web browser, yet they are extracted from the HTML content.
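Equation (1) and the 0.3 threshold can be sketched as follows. The tokenizer (a simple regular expression), the lowercasing, and the tiny dictionary are assumptions for illustration only; the paper does not specify its en-US and en-GB lookup dictionary $D$ or its tokenization.

```python
import re
from typing import Iterable, List, Set

WORD = re.compile(r"[A-Za-z]+(?:'[A-Za-z]+)?")  # assumed tokenizer: alphabetic words


def text_score(text: str, dictionary: Set[str]) -> float:
    """Equation (1): share of a text's distinct words (its vocabulary V_i) found in D."""
    vocab = {w.lower() for w in WORD.findall(text)}
    if not vocab:
        return 0.0
    return sum(1 for w in vocab if w in dictionary) / len(vocab)


def keep_fragments(fragments: Iterable[str], dictionary: Set[str],
                   threshold: float = 0.3) -> List[str]:
    """Keep only fragments whose score reaches the threshold."""
    return [f for f in fragments if text_score(f, dictionary) >= threshold]


D = {"the", "northern", "fisherman", "catches", "fish"}  # toy stand-in for the dictionary
print(keep_fragments(["The northern fisherman catches fish", "Lorem ipsum dolor fish"], D))
# ['The northern fisherman catches fish']
```

Scoring over distinct words rather than tokens follows the "vocabulary size" wording of the definition; a token-based variant would weight repeated words more heavily.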

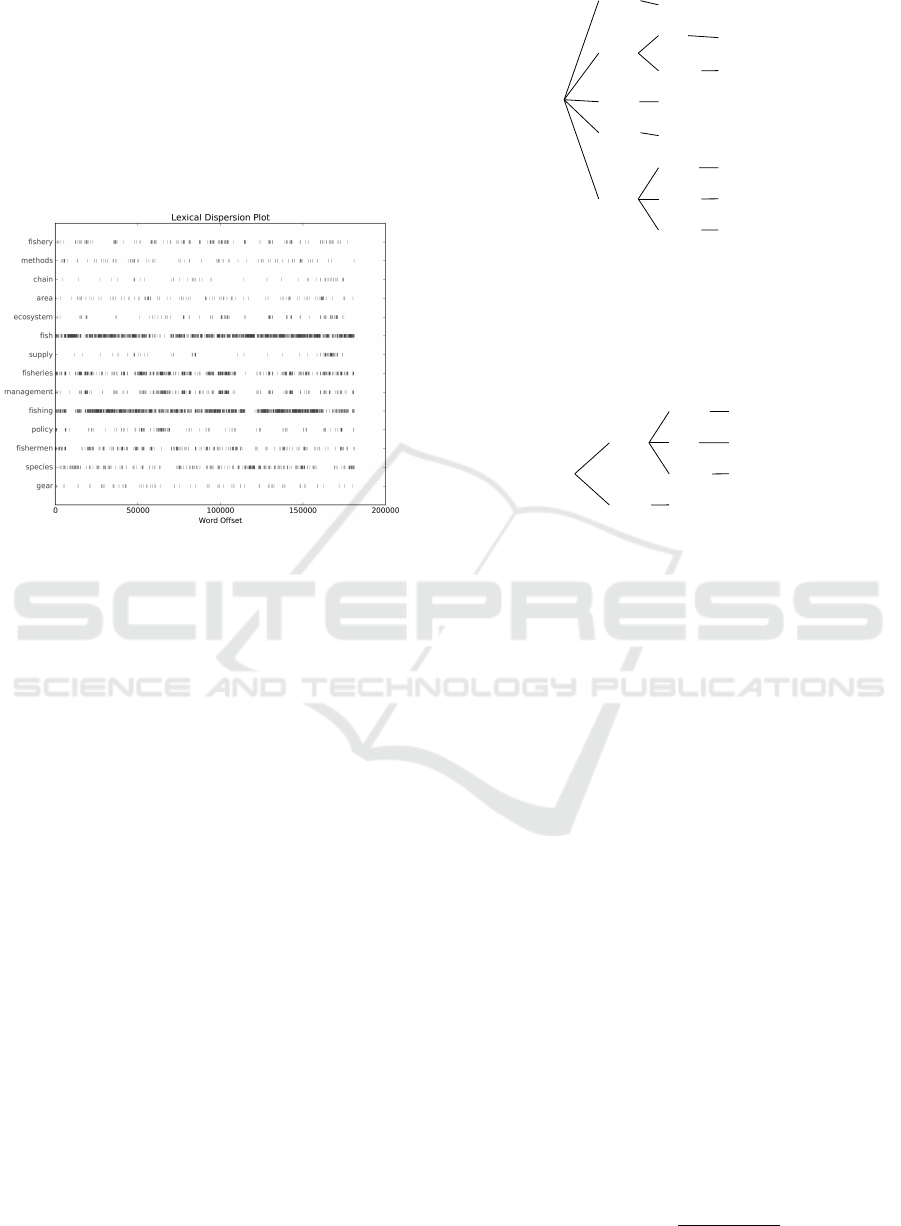

Figure 2 shows the lexical dispersion plot for the seed-word unigrams after the cleaning phase.

Figure 2: The lexical dispersion plot shows how often a word, displayed on the y-axis, occurs in the cleaned corpus. The x-axis displays the position within the corpus from beginning to end.

3.3 Chunking

We used the Punkt sentence tokenizer and a part-of-speech (POS) tagger to split the text into individual sentences annotated with their grammatical properties. We then used a shallow parser with regular expressions (RE) over POS tags to extract noun phrases (NP) that occur as a direct object or subject. The verb that precedes or follows the NP is used to group related NPs when the verbs share the same stem.

The RE grammar for the parser is defined as (1) (<VB.*><DT>?<JJ>*<NN>+) for direct object noun phrases and (2) (<DT>?<JJ>*<NN>+<VB.*>+) for subject noun phrases. (1) extracts a verb in any tense (<VB.*>), followed by an optional determiner (<DT>), followed by 0 or more adjectives (<JJ>*), and ending with at least one noun (<NN>+). Figure 3 shows the parse tree of the phrase manage European fish and protect the marine environment, from which we extract two patterns: manage European fish and protect the marine environment.

(2) extracts patterns starting with an optional determiner (<DT>), followed by 0 or more adjectives (<JJ>*), followed by at least one noun (<NN>+), and ending in a verb in any tense (<VB.*>). Figure 4 shows the parse tree for the phrase the northern fisherman catches, in which the NP occurs as a subject.

[Figure 3 parse tree: (S (VB manage) (NP (JJ European) (NN fish)) (CC and) (VB protect) (NP (DT the) (NN marine) (NN environment)))]

Figure 3: Parse tree for the noun phrase European fish with its accompanying transitive verb manage and the noun phrase the marine environment with transitive verb protect. Both NPs occur as a direct object.

[Figure 4 parse tree: (S (NP (DT the) (JJ northern) (NN fisherman)) (VB catches))]

Figure 4: Parse tree for the noun phrase the northern fisherman with its accompanying transitive verb catches. The NP occurs as a subject.
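The paper does not name the parser implementation, so the following dependency-free sketch reimplements the two chunk rules with Python's standard `re` module over an already POS-tagged sentence; tokenization and tagging are assumed to have happened upstream. The function name `chunk` and the (role, verb, noun phrase) output format are our own illustration, and `<VB[A-Z]*>` stands in for the grammar's `<VB.*>`.

```python
import re
from typing import Dict, List, Tuple

Tagged = List[Tuple[str, str]]  # (word, POS tag) pairs from an upstream tagger

DOBJ = re.compile(r"<VB[A-Z]*>(?:<DT>)?(?:<JJ>)*(?:<NN>)+")      # rule (1)
SUBJ = re.compile(r"(?:<DT>)?(?:<JJ>)*(?:<NN>)+(?:<VB[A-Z]*>)+")  # rule (2)


def _encode(tagged: Tagged) -> Tuple[str, Dict[int, int]]:
    """Join tags as '<TAG>' units and map each unit's character offset to its token index."""
    offsets: Dict[int, int] = {}
    pos = 0
    for i, (_, tag) in enumerate(tagged):
        offsets[pos] = i
        pos += len(tag) + 2
    offsets[pos] = len(tagged)  # offset just past the last tag
    return "".join(f"<{tag}>" for _, tag in tagged), offsets


def chunk(tagged: Tagged) -> List[Tuple[str, str, str]]:
    """Return (role, verb, noun phrase) triples for direct-object and subject NPs."""
    tags, offsets = _encode(tagged)
    words = [w for w, _ in tagged]
    found = []
    for m in DOBJ.finditer(tags):
        i, j = offsets[m.start()], offsets[m.end()]
        found.append(("dobj", words[i], " ".join(words[i + 1:j])))  # verb comes first
    for m in SUBJ.finditer(tags):
        i, j = offsets[m.start()], offsets[m.end()]
        k = next(x for x in range(i, j) if tagged[x][1].startswith("VB"))  # first verb
        found.append(("subj", words[k], " ".join(words[i:k])))
    return found


sentence = [("manage", "VB"), ("European", "JJ"), ("fish", "NN"), ("and", "CC"),
            ("protect", "VB"), ("the", "DT"), ("marine", "NN"), ("environment", "NN")]
print(chunk(sentence))
# [('dobj', 'manage', 'European fish'), ('dobj', 'protect', 'the marine environment')]
```

Because every tag is wrapped in angle brackets, a pattern can only match on whole-token boundaries, which is what lets the character offsets be mapped back to tokens.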

3.4 Scoring Verbs

The verbs that precede an NP as a direct object, or follow an NP as a subject, are stemmed with the Porter algorithm (Porter, 1980) so that NPs can subsequently be grouped when creating the extraction patterns. Stemming maps the different forms of a verb onto the same stem, which is not necessarily the root of the verb. To score the stemmed verbs, we used a non-related text corpus containing rural information. The idea behind scoring the verbs is to limit the extraction patterns to verbs that are found more frequently in the fisheries domain. For example, the verb create is found in all sorts of domains, but the verb catch is not. The noun phrases linked to the verb catch are therefore more likely to contain semantically related words.

Let $D_1$ be the set of stemmed verbs from the seed-word-related corpus (Section 3.2) and $D_2$ the set of stemmed verbs from the non-related (rural) corpus, and assume that their vocabulary sizes are comparable, $|V|_{D_1} \approx |V|_{D_2}$. Now let $L$ be the combined set of stemmed verbs, such that every $l \in L$ satisfies $l \in D_1 \cup D_2$. Let $N_{l,D_1}$ denote the number of instances of $l$ in $D_1$, and $N_{l,D_2}$ the number of instances of $l$ in $D_2$. We estimate the probability that stemmed verb $l$ is contained within the seed-word-related set $D_1$ as:

$$ P(l \mid D_1) = \frac{N_{l,D_1}}{N_{l,D_1} + N_{l,D_2}} \tag{2} $$


For example, the verb supervise, which includes

supervised and supervising, gets stemmed into

supervis. A frequency of D

1

=12 and D

2

=3 results

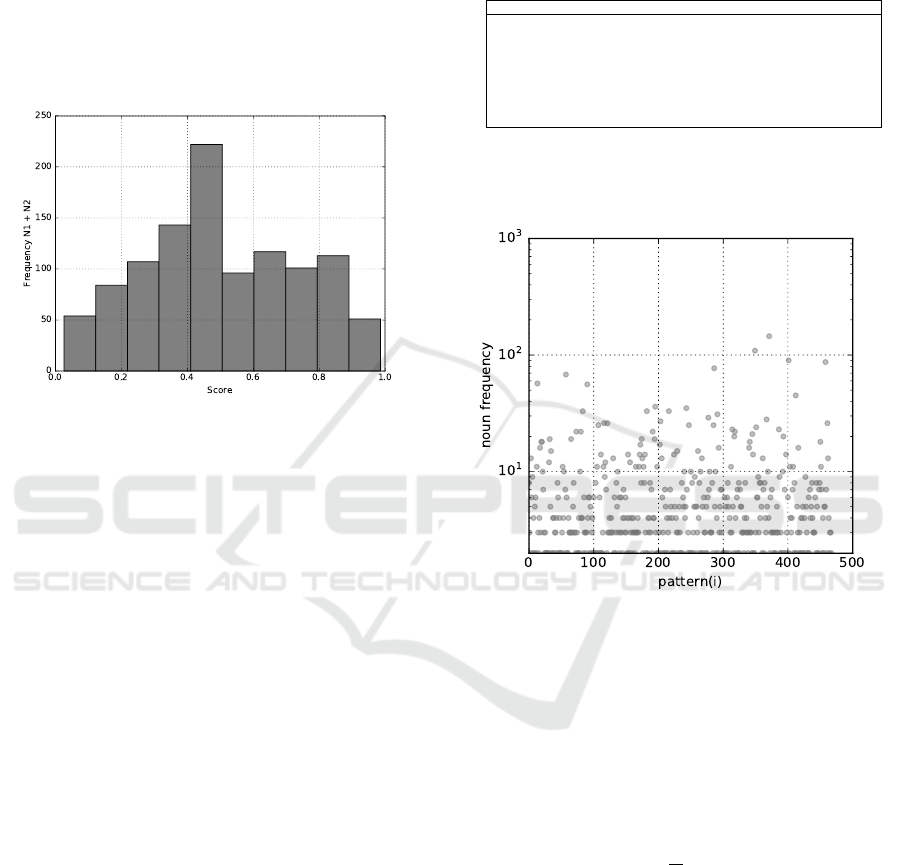

in a score of 0.8. The distribution of scores is shown

in Figure 5 and shows the number of verbs with their

estimated probability. For example, there are 50 verbs

with a score ρ ≥ 0.9 which are highly domain related,

such as prohibit, catch, rescue, exploit, breath, de-

plete, fish.

Figure 5: Frequency of stemmed verbs and estimated prob-

ability.
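Equation (2) can be computed directly from per-corpus stem counts, as in this minimal sketch. It assumes the verbs have already been Porter-stemmed and counted; apart from the supervis counts taken from the example above, the stems and counts are invented for illustration, and the function name `verb_scores` is hypothetical.

```python
from collections import Counter
from typing import Dict


def verb_scores(domain: Counter, general: Counter) -> Dict[str, float]:
    """Equation (2): N_{l,D1} / (N_{l,D1} + N_{l,D2}) for every stem l in D1 ∪ D2."""
    scores = {}
    for stem in set(domain) | set(general):
        total = domain[stem] + general[stem]  # Counter returns 0 for missing stems
        if total > 0:
            scores[stem] = domain[stem] / total
    return scores


# Stem counts per corpus; only the supervis counts come from the text, the rest are invented.
fisheries = Counter({"supervis": 12, "catch": 30, "creat": 5})
rural = Counter({"supervis": 3, "creat": 45})
scores = verb_scores(fisheries, rural)
print(round(scores["supervis"], 2), scores["catch"], round(scores["creat"], 2))  # 0.8 1.0 0.1
```

A stem that never occurs in the non-related corpus gets the maximum score of 1.0, which is why such a ratio relies on the two corpora having comparable vocabulary sizes.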

3.5 Verb Extraction Pattern

We selected verbs with ρ ≥ 0.5 and grouped them in steps of 0.1, such that 0.9 ≤ ρ ≤ 1.0, 0.8 ≤ ρ ≤ 1.0, ... , 0.5 ≤ ρ ≤ 1.0; we refer to these groupings as the top 10%, 20%, ... , 50% of verbs. To create an extraction pattern, every noun <NN> or compound noun <NN>+ extracted by the chunker is grouped with the others that share the same stemmed verb, in either the subject or the direct object case. For example, Table 1 shows some phrases for the root verb regulate, which is stemmed into regul, and for the root verb catch, whose stem is identical to the verb. The nouns industry, seafood trade, quota, and legislation are grouped together into one extraction pattern because they all share the stem regul.

For every NP, we extracted the noun or compound noun. We did not restrict ourselves to the head noun, as compound nouns are generally more informative for the fisheries domain. For example, marine science was accepted as a fisheries-related word but science was not. Some of the extraction patterns are listed below:

• {industry, seafood trade, quota, legislation}

• {fish, marine life, deep-dwelling, fish, fisherman}

• {catch, conservation, sustainability, growth, fishing, participation, tuna, management approach}

• {oxygen, air, fish, equipment, right}

• {sediment resuspension, world, bycatch, potential, fishing technique, hunger}

Table 1: Example phrases in which the transitive verb of a phrase is reduced to the same stem.

| stem | verb | noun | phrase |
|---|---|---|---|
| regul | regulated | industry | ... regulated industry ... |
| regul | regulated | seafood trade | ... regulated seafood trade ... |
| regul | regulated | quota | ... quota regulated ... |
| regul | regulates | legislation | ... legislation regulates ... |
| catch | catching | fish | ... catching wild fish ... |
| catch | catching | marine life | ... catching marine life ... |
| catch | catch | deep-dwelling fish | ... catch deep-dwelling fish ... |
| catch | catches | fisherman | ... the fisherman catches ... |
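The grouping step can be sketched as follows, starting from (stem, noun) pairs such as the rows of Table 1. This is a minimal sketch assuming the verbs are already stemmed (the Porter stemmer itself is not reimplemented here); the ρ values for regul and catch are hypothetical, and `build_patterns` is an illustrative name.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def build_patterns(pairs: Iterable[Tuple[str, str]], scores: Dict[str, float],
                   lower: float) -> Dict[str, List[str]]:
    """Group nouns under their stemmed verb, keeping stems with lower <= rho <= 1.0."""
    patterns: Dict[str, List[str]] = defaultdict(list)
    for stem, noun in pairs:
        if lower <= scores.get(stem, 0.0) <= 1.0:
            patterns[stem].append(noun)
    return dict(patterns)


# (stem, noun) pairs from Table 1; the rho values are hypothetical.
pairs = [("regul", "industry"), ("regul", "seafood trade"), ("regul", "quota"),
         ("regul", "legislation"), ("catch", "fish"), ("catch", "marine life"),
         ("catch", "deep-dwelling fish"), ("catch", "fisherman")]
rho = {"regul": 0.85, "catch": 0.95}
print(sorted(build_patterns(pairs, rho, lower=0.9)))  # ['catch']
print(build_patterns(pairs, rho, lower=0.8)["regul"])
# ['industry', 'seafood trade', 'quota', 'legislation']
```

Lowering `lower` from 0.9 to 0.5 reproduces the widening "top 10%" to "top 50%" verb groupings: each step admits more stems and therefore more patterns.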

We found 468 different extraction patterns in our

corpus. An overview of the distribution is shown in

Figure 6 (y-axis logarithmically scaled).

Figure 6: The frequency of nouns in an extraction pattern.

The x-axis shows the number of extraction patterns found

in our corpus (468). The y-axis shows the number of nouns

grouped within that pattern.

3.6 Bootstrapping

The bootstrapping process starts with a list of 10 seed words (Section 3.1). Next, all the extraction patterns are scored by calculating the RlogF score (Riloff, 1996):

$$ \mathrm{RlogF}(\mathit{pattern}_i) = \begin{cases} \dfrac{F_i}{N_i} \cdot \log_2(F_i) & \text{if } F_i \geq 1 \\ -1 & \text{if } F_i = 0 \end{cases} \tag{3} $$

where $F_i$ is the number of lexicon words found in $\mathit{pattern}_i$ and $N_i$ is the total number of nouns in $\mathit{pattern}_i$. Extraction patterns that contain more nouns that are already part of the lexicon receive a higher score. The first iteration selects the top N patterns, which are then placed into a pattern pool. We used N = 20 for the first iteration and incremented it by 1 in each subsequent run to allow new patterns to enter the process.
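Equation (3) and the growing pattern pool can be sketched as below. As a simplifying assumption, a pattern is treated as a set of distinct nouns, so duplicates such as the repeated fish in the pattern list above are counted once; the function names and the toy patterns are hypothetical.

```python
import math
from typing import Dict, List, Set


def rlogf(nouns: Set[str], lexicon: Set[str]) -> float:
    """Equation (3), treating a pattern as a set of distinct nouns."""
    f = len(nouns & lexicon)  # F_i: lexicon words found in the pattern
    if f == 0:
        return -1.0
    return (f / len(nouns)) * math.log2(f)  # N_i = len(nouns)


def pattern_pool(patterns: Dict[str, Set[str]], lexicon: Set[str],
                 iteration: int, base_n: int = 20) -> List[str]:
    """Top (base_n + iteration) patterns by RlogF: N = 20 in the first run, +1 per run."""
    ranked = sorted(patterns, key=lambda p: rlogf(patterns[p], lexicon), reverse=True)
    return ranked[: base_n + iteration]


patterns = {"catch": {"fish", "tuna", "cod", "quota"}, "creat": {"art"}, "swim": {"fish", "cod"}}
print(pattern_pool(patterns, {"fish", "cod"}, iteration=1, base_n=1))  # ['swim', 'catch']
```

Note that a pattern with exactly one lexicon match scores 0, because log2(1) = 0; RlogF only rewards patterns with at least two known members, weighted by how pure the pattern is.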

The next step is to score all the nouns that are part of the pattern pool. We evaluated two scoring metrics: (1) BASILISK's AvgLog and (2) PMI based on search counts from the Bing search engine. The AvgLog score is defined as:

$$ \mathrm{AvgLog}(\mathit{word}_i) = \frac{\sum_{j=1}^{P_i} \log_2(F_j + 1)}{P_i} \tag{4} $$

(1) AvgLog uses all the patterns to score the nouns found in the top N patterns. $P_i$ is the number of patterns in which $\mathit{word}_i$ occurs, and $F_j$ is the number of lexicon words found in pattern $j$. Nouns in the pattern pool receive a higher score, and are thus considered more semantically related, when they also occur in other extraction patterns with many lexicon word matches.
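Equation (4) translates into a few lines of Python. This is a minimal sketch in which patterns are sets of distinct nouns and the toy patterns and lexicon are invented; returning 0.0 for a word that occurs in no pattern is our own guard, since candidates drawn from the pool always have $P_i \geq 1$.

```python
import math
from typing import Dict, Set


def avglog(word: str, patterns: Dict[str, Set[str]], lexicon: Set[str]) -> float:
    """Equation (4): average of log2(F_j + 1) over the P_i patterns that contain word."""
    f_values = [len(nouns & lexicon) for nouns in patterns.values() if word in nouns]
    if not f_values:  # P_i = 0: the word occurs in no pattern
        return 0.0
    return sum(math.log2(f + 1) for f in f_values) / len(f_values)


patterns = {"catch": {"fish", "tuna", "cod"}, "creat": {"art", "fish"}, "swim": {"tuna", "cod"}}
lexicon = {"fish", "cod"}
for candidate in ("tuna", "art"):
    print(candidate, round(avglog(candidate, patterns, lexicon), 3))
# tuna 1.292
# art 1.0
```

Here tuna outranks art because it appears in patterns with more lexicon matches on average, which is exactly the behaviour described for AvgLog.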

(2) PMI is based on the hypernym collocation statistics proposed by (Igo and Riloff, 2009). We implement the PMI scoring metric within the bootstrapping process and dynamically calculate collocation statistics before adding new words to the lexicon. We hypothesize that lexicon words that occur more often in collocation with their domain are more likely to be semantically related. We use the number of hits for a lexicon word (hyponym) together with its hypernym word, obtained with the NEAR operator of Microsoft's Bing search engine. We chose a collocation range of 10 and define the PMI score as:

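$$ \mathrm{PMI}_{x,y} = \log \frac{\rho_{x,y}}{\rho_x \, \rho_y} \tag{5} $$

$$ \mathrm{PMI}_{x,y} = \log N + \log \frac{N_{x,y}}{N_x \, N_y} \tag{6} $$

$$ \widetilde{\mathrm{PMI}}_{x,y} = \mathrm{PMI}_{x,y} - \log N = \log \frac{N_{x,y}}{N_x \, N_y} \tag{7} $$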

$\mathrm{PMI}_{x,y}$ is the Pointwise Mutual Information between lexicon word $x$ and hypernym $y$, where $\rho_{x,y}$ is the probability that $x$ and $y$ occur together on the web, $\rho_x$ the probability that $x$ occurs on the web, and $\rho_y$ the probability that $y$ occurs on the web. Each probability is estimated by dividing the corresponding hit count ($N_{x,y}$, $N_x$, or $N_y$) by the total number of web pages $N$, which lets us rewrite $\mathrm{PMI}_{x,y}$ as Equation (6). $N$ is not known, but because it is the same for every lexicon word, the constant term $\log N$ can be omitted without changing the ranking of the words. This yields Equation (7): the log of the number of collocation hits divided by the product of the hit counts of the individual words.
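Equation (7) and the resulting ranking can be sketched as follows. The search step is deliberately left out: the sketch assumes the hit counts have already been retrieved, and the counts below are hypothetical, not real Bing results. The natural logarithm is used; the base only rescales the scores and does not change the ranking.

```python
import math
from typing import Dict, List, Tuple


def pmi(hits_xy: int, hits_x: int, hits_y: int) -> float:
    """Equation (7): log(N_xy / (N_x * N_y)), with the constant log N dropped."""
    if min(hits_xy, hits_x, hits_y) <= 0:
        return float("-inf")  # no collocation evidence: rank last
    return math.log(hits_xy / (hits_x * hits_y))


def rank_by_pmi(counts: Dict[str, Tuple[int, int]], hits_y: int) -> List[str]:
    """Rank candidates; counts maps word -> (NEAR hits with the hypernym, hits for the word)."""
    return sorted(counts, key=lambda w: pmi(counts[w][0], counts[w][1], hits_y), reverse=True)


# Hypothetical hit counts for one hypernym and two candidate words.
print(rank_by_pmi({"right": (80, 10**7), "trawler": (50, 2000)}, hits_y=10**6))
# ['trawler', 'right']
```

The example shows why PMI can demote frequent generic words: right co-occurs with the hypernym more often in absolute terms, but far less often relative to how common it is on the web.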

Each noun in the pattern pool was given a score, and the top-N nouns were selected to enter the lexicon. We added the single noun with the highest score (N = 1) to the lexicon and repeated the bootstrapping process.

4 EVALUATION

We evaluated the lexicon entries against a gold-standard dictionary. Domain experts labeled every noun or compound noun found in the extraction patterns, assigning a value of 1 if the word was related to the fisheries domain and 0 if it had no relationship. We did not distinguish between highly relevant and less relevant domain words. For example, the compound marine science is highly relevant and often has a direct link to the domain, whereas the word conservation on its own can be ambiguous: it could refer, for example, to conserving artwork or to preventing the depletion of natural resources. Nevertheless, a word was considered correct if any of its senses is semantically related to the domain. Furthermore, unknown words were looked up manually. For example, plecoglossus altivelis, oncorhynchus keta, leucosternon, peach anthias, and khaki grunter are types of fish that were unknown to the annotators, yet are semantically related.

We ran the bootstrapping algorithm until the lexicon contained 100 words. We repeated the process for the top 10% to top 50% scored verbs, as discussed in Section 3.5, and compared the AvgLog (S_b) and PMI (S_pmi) scoring metrics. Examples of lexicon entries are: invertebrate seafood, ground fish, shellfish, demersal fish, mackerel, carp, crabs, deepwater shrimp, school, life, squid, hake, fisherman, cod, tuna, conservation reference size, trout, quota, sea, shrimp, mortality, freshwater, trawl, salmon, tenkara, snapper, method, license, attractor, pocket water, fee, vessel license, style fly, artisan, plastic worm, freshwater fly, saltwater, jack mackerel, trawler.

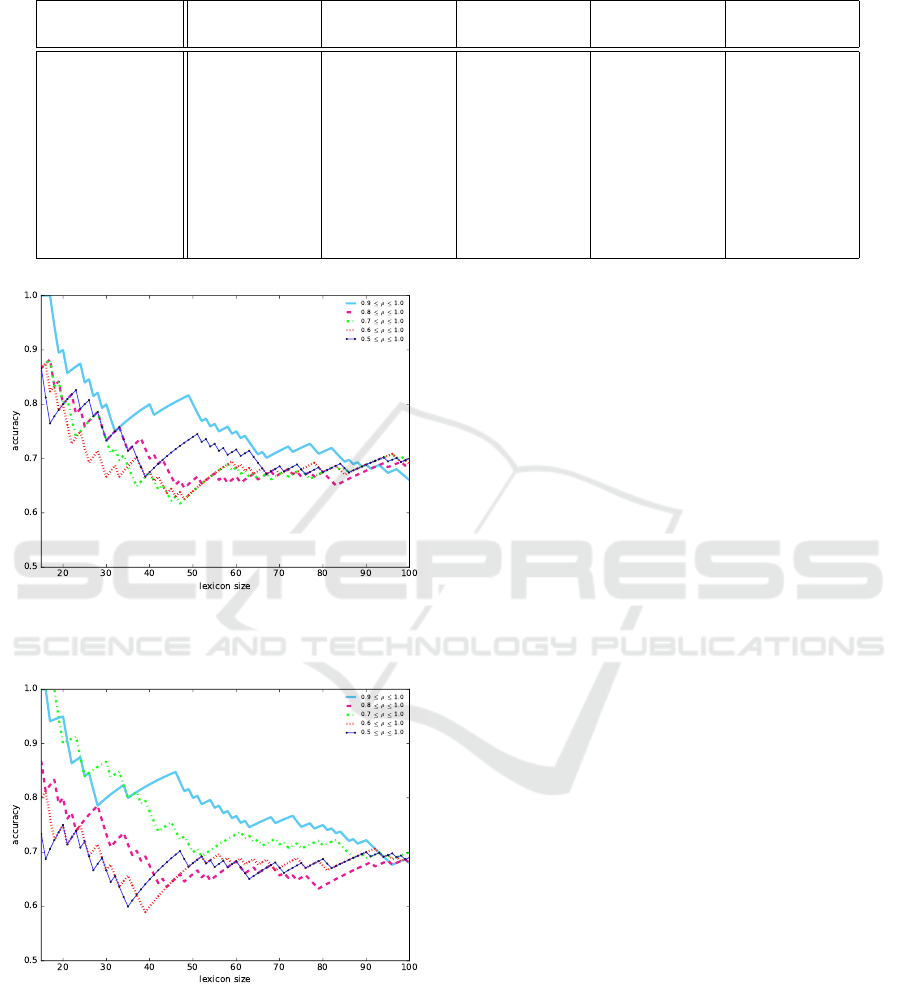

Figure 7 shows the accuracy (percentage of correct lexicon words) for S_b when learning 100 semantically related words. The lines represent the verb probability groupings (e.g., top 10%, 20%). The accuracy degrades when incorrect words enter the lexicon and in turn contribute to learning more incorrect words. The accuracy converges to around 70% for all probability groupings. Figure 8 shows the accuracy for S_pmi: using web statistics for hyponym-hypernym word pairs affects the accuracy both positively and negatively, yet it still converges to around 70% after 100 words are learned. S_pmi substantially improves the accuracy for the top 30% verbs, outperforming S_b until the lexicon reaches 90 words. However, compared to S_b, S_pmi mostly lowers the accuracy for the top 50% verbs.
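The accuracy values plotted in Figures 7 and 8 can be computed from the learned lexicon order and the gold-standard labels, as in this minimal sketch. The paper does not state whether the 10 seed words are included in the count, so the sketch simply scores the first k entries as given; treating a word without a label as incorrect is also an assumption, and the learned order and labels below are hypothetical.

```python
from typing import Dict, Sequence


def accuracy_curve(lexicon: Sequence[str], gold: Dict[str, int],
                   sizes: Sequence[int]) -> Dict[int, float]:
    """Fraction of the first k lexicon entries labeled 1 in the gold standard."""
    curve: Dict[int, float] = {}
    for k in sizes:
        if 0 < k <= len(lexicon):  # skip sizes the run never reached
            curve[k] = sum(gold.get(w, 0) for w in lexicon[:k]) / k
    return curve


# Hypothetical learned order and labels (1 = fisheries related, 0 = unrelated).
learned = ["cod", "tuna", "right", "quota", "world"]
labels = {"cod": 1, "tuna": 1, "right": 0, "quota": 1, "world": 0}
print(accuracy_curve(learned, labels, sizes=[2, 4, 5]))  # {2: 1.0, 4: 0.75, 5: 0.6}
```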

Scoring verbs before creating extraction patterns causes differences in accuracy up to a lexicon size of 90. Verbs that occur more often in domain-related text, as discussed in Section 3.4, benefit the accuracy of the lexicon up to a certain size, yet have limited effect on large lexicons. We would, however, have expected the top 10% verbs to outperform the top 20%, the top 20% to outperform the top 30%, and so on. This does not hold for either S_b or S_pmi. For example, when using S_b and learning 50 words, the top 50% verbs achieved a higher accuracy (0.74) than the top 20% (0.66). Similarly, when learning 40 words with S_pmi, the top 20% verbs achieved a lower accuracy (0.68) than the top 30% verbs (0.78). The top 10% verbs achieve the highest accuracy for both S_b and S_pmi in nearly all stages of the bootstrapping process. Small lexicons would therefore benefit from selecting only the top 10% verbs to create extraction patterns, which achieves an accuracy of around 0.8 when learning 50 words. An overview of all accuracy scores is given in Table 2.

Table 2: Lexicon accuracy when learning up to 100 words. Accuracy scores are given for the top 10%, ... , 50% verbs for both S_b (scoring new entries with BASILISK's AvgLog) and S_pmi (scoring new entries with PMI).

| Lexicon entries | S_b, 0.5 ≤ ρ ≤ 1.0 | S_pmi, 0.5 ≤ ρ ≤ 1.0 | S_b, 0.6 ≤ ρ ≤ 1.0 | S_pmi, 0.6 ≤ ρ ≤ 1.0 | S_b, 0.7 ≤ ρ ≤ 1.0 | S_pmi, 0.7 ≤ ρ ≤ 1.0 | S_b, 0.8 ≤ ρ ≤ 1.0 | S_pmi, 0.8 ≤ ρ ≤ 1.0 | S_b, 0.9 ≤ ρ ≤ 1.0 | S_pmi, 0.9 ≤ ρ ≤ 1.0 |
|---|---|---|---|---|---|---|---|---|---|---|
| 20 | 0.8 | 0.75 | 0.8 | 0.75 | 0.8 | 0.9 | 0.8 | 0.8 | 0.9 | 0.95 |
| 30 | 0.73 | 0.67 | 0.67 | 0.67 | 0.73 | 0.87 | 0.73 | 0.73 | 0.8 | 0.8 |
| 40 | 0.68 | 0.65 | 0.68 | 0.6 | 0.68 | 0.78 | 0.7 | 0.68 | 0.8 | 0.82 |
| 50 | 0.74 | 0.68 | 0.64 | 0.68 | 0.64 | 0.7 | 0.66 | 0.66 | 0.8 | 0.8 |
| 60 | 0.72 | 0.68 | 0.68 | 0.68 | 0.68 | 0.73 | 0.67 | 0.68 | 0.75 | 0.77 |
| 70 | 0.69 | 0.67 | 0.67 | 0.67 | 0.67 | 0.71 | 0.67 | 0.66 | 0.71 | 0.76 |
| 80 | 0.68 | 0.69 | 0.68 | 0.68 | 0.68 | 0.71 | 0.68 | 0.64 | 0.71 | 0.75 |
| 90 | 0.69 | 0.7 | 0.69 | 0.7 | 0.69 | 0.69 | 0.68 | 0.68 | 0.69 | 0.72 |
| 100 | 0.7 | 0.69 | 0.69 | 0.68 | 0.7 | 0.7 | 0.69 | 0.68 | 0.66 | 0.68 |

Figure 7: Graph that shows the accuracy when learning 100 words for the top 10% to top 50% verbs when scoring candidate words with BASILISK's AvgLog (S_b).

Figure 8: Graph that shows the accuracy when learning 100 words for the top 10% to top 50% verbs when scoring candidate words with Pointwise Mutual Information (S_pmi).

5 CONCLUSION

In this paper, we presented a bootstrapping algorithm based on BASILISK and a highly related corpus that was created by mining web pages. We created the corpus using the same set of seed words that was initially used to start the bootstrapping process. We used extraction patterns to group noun phrases with similar semantic meaning when they share the same stemmed verb. We scored the verbs of the extraction patterns against a non-related text corpus and calculated accuracy scores for the top 10%, 20%, 30%, 40%, and 50% verbs. In addition to BASILISK's original scoring metric, we used a PMI score based on hyponym-hypernym collocation statistics.

We found varied results between the scored extraction patterns. Patterns built from strong verbs that occur most often in domain-related text, and less frequently in general (non-related) text, produced a more accurate lexicon for the top 10% scored verbs, while the other groupings showed mixed results. The use of collocation statistics obtained with the NEAR operator of Microsoft's Bing search engine improved accuracy for some verb groupings but simultaneously degraded it for others.

The achieved accuracy applies to web text for the fisheries domain, and more research is needed on the generalizability to other domains and other forms of text, such as scientific literature and other technical language. Furthermore, research is needed to explain why accuracy varies between verb scores and why collocation statistics work better in some cases. Finally, research is also necessary to determine which types or genres of non-related corpora, when used to score the verbs, affect the results for the domain under study.

ACKNOWLEDGEMENTS

This research was funded by the project SAF21 - Social science aspects of fisheries for the 21st Century. SAF21 is a project financed under the EU Horizon 2020 Marie Skłodowska-Curie (MSC) ITN - ETN programme (project 642080).

REFERENCES

Curran, J. R., Murphy, T., and Scholz, B. (2007). Minimising semantic drift with mutual exclusion bootstrapping. In Proceedings of the 10th Conference of the Pacific Association for Computational Linguistics, pages 172–180.

Dunning, T. (1993). Accurate methods for the statistics of surprise and coincidence. Computational Linguistics, 19(1):61–74.

Igo, S. P. and Riloff, E. (2009). Corpus-based semantic lexicon induction with web-based corroboration. In Proceedings of the Workshop on Unsupervised and Minimally Supervised Learning of Lexical Semantics, UMSLLS ’09, pages 18–26, Stroudsburg, PA, USA. Association for Computational Linguistics.

Lenat, D. B. (1995). Cyc: A large-scale investment in knowledge infrastructure. Commun. ACM, 38(11):33–38.

Miller, G. A., Beckwith, R., Fellbaum, C., Gross, D., and Miller, K. J. (1990). Introduction to WordNet: an on-line lexical database. International Journal of Lexicography, 3(4):235–244.

Pantel, P. and Ravichandran, D. (2004). Automatically labeling semantic classes. In HLT-NAACL, pages 321–328.

Phillips, W. and Riloff, E. (2002). Exploiting strong syntactic heuristics and co-training to learn semantic lexicons. In Proceedings of the ACL-02 Conference on Empirical Methods in Natural Language Processing - Volume 10, EMNLP ’02, pages 125–132, Stroudsburg, PA, USA. Association for Computational Linguistics.

Porter, M. F. (1980). An algorithm for suffix stripping. Program, 14(3):130–137.

Qadir, A., Mendes, P. N., Gruhl, D., and Lewis, N. (2015). Semantic lexicon induction from Twitter with pattern relatedness and flexible term length. In Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence, AAAI’15, pages 2432–2439. AAAI Press.

Qadir, A. and Riloff, E. (2012). Ensemble-based semantic lexicon induction for semantic tagging. In Proceedings of the First Joint Conference on Lexical and Computational Semantics - Volume 1: Proceedings of the Main Conference and the Shared Task, and Volume 2: Proceedings of the Sixth International Workshop on Semantic Evaluation, SemEval ’12, pages 199–208, Stroudsburg, PA, USA. Association for Computational Linguistics.

Riloff, E. (1996). Automatically generating extraction patterns from untagged text. In Proceedings of the Thirteenth National Conference on Artificial Intelligence - Volume 2, AAAI’96, pages 1044–1049. AAAI Press.

Riloff, E. and Shepherd, J. (1997). A corpus-based approach for building semantic lexicons. In Proceedings of the Second Conference on Empirical Methods in Natural Language Processing, pages 117–124.

Roark, B. and Charniak, E. (1998). Noun-phrase co-occurrence statistics for semiautomatic semantic lexicon construction. In Proceedings of the 17th International Conference on Computational Linguistics - Volume 2, COLING ’98, pages 1110–1116, Stroudsburg, PA, USA. Association for Computational Linguistics.

Thelen, M. and Riloff, E. (2002). A bootstrapping method for learning semantic lexicons using extraction pattern contexts. In Proceedings of the ACL-02 Conference on Empirical Methods in Natural Language Processing - Volume 10, EMNLP ’02, pages 214–221, Stroudsburg, PA, USA. Association for Computational Linguistics.

Widdows, D. and Dorow, B. (2002). A graph model for unsupervised lexical acquisition. In Proceedings of the 19th International Conference on Computational Linguistics - Volume 1, COLING ’02, pages 1–7, Stroudsburg, PA, USA. Association for Computational Linguistics.

Ziering, P., van der Plas, L., and Schütze, H. (2013a). Bootstrapping semantic lexicons for technical domains. In IJCNLP, pages 1321–1329. Asian Federation of Natural Language Processing / ACL.

Ziering, P., van der Plas, L., and Schütze, H. (2013b). Multilingual lexicon bootstrapping - improving a lexicon induction system using a parallel corpus. In IJCNLP, pages 844–848. Asian Federation of Natural Language Processing / ACL.
