Feature Engineering for Activity Recognition from Wrist-worn Motion Sensors

Sumeyye Konak¹, Fulya Turan¹, Muhammad Shoaib² and Ozlem Durmaz Incel¹

¹ Department of Computer Engineering, Galatasaray University, Ciragan Cd. No:36, Besiktas/Istanbul, Turkey

² Pervasive Systems Group, University of Twente, Zilverling Building, PO-Box 217, 7500 AE Enschede, The Netherlands

Keywords:

Activity Recognition, Motion Sensing, Wrist-worn Devices, Mobile Sensing.

Abstract:

With their integrated sensors, wrist-worn devices such as smart watches provide an ideal platform for human activity recognition. In particular, inertial sensors such as the accelerometer and gyroscope can efficiently capture the wrist and arm movements of users. In this paper, we investigate the use of the accelerometer for recognizing thirteen different activities. Specifically, we analyse how different sets of features extracted from acceleration readings perform in activity recognition. We categorize the features into three classes: motion-related, orientation-related and rotation-related features, and we analyse recognition performance using motion, orientation and rotation information both alone and in combination. We use a dataset collected from 10 participants and evaluate several classification algorithms. The results show that orientation features achieve the highest accuracies, both when used alone and in combination with other feature sets. Moreover, using raw acceleration alone performs slightly better than using linear acceleration and comparably to using the gyroscope.

1 INTRODUCTION

The ubiquity of mobile phones and the rich set of sensors available on them make them a suitable platform for human activity recognition (Incel et al., 2013; Bulling et al., 2014). More recently, wrist-worn devices such as smart watches have also emerged as an alternative for activity recognition. Compared with smart phones, which are usually carried in pockets or bags, wrist-worn devices have the advantage of capturing wrist, hand and arm movements. Moreover, a smart phone is not always attached to the user's body (for example, it can be left on a desk), whereas a wrist-worn device usually stays on the user unless it is removed.

While other sensors, such as GPS and the microphone, can also be used for activity recognition, the motion or inertial sensors available on wrist-worn devices, such as the accelerometer and gyroscope, are among the most effective sensors for this task. They easily capture the user's movements, and activity recognition using inertial sensors has been an active field of research (Avci et al., 2010; Bulling et al., 2014; Lane et al., 2010; Shoaib et al., 2015b). These sensors also consume less battery power than resource-hungry sensors such as GPS.

In this paper, our aim is to analyse activity recognition performance with different sets of features extracted from wrist-worn motion sensors, particularly the accelerometer. The main idea is to analyse how much a wrist-worn device moves, changes its orientation and rotates, and how these changes can be used to recognize a user's activities. For this purpose, we categorize the features extracted from raw accelerometer data into three classes: motion features, orientation features and rotation features. Features computed from the magnitude of acceleration are used as the motion-related features, whereas features computed from the individual accelerometer axes are used as the orientation-related features. Additionally, instead of using the gyroscope for rotation information, we extract rotation-related features, namely pitch and roll, from the acceleration readings. The main motivation is to explore the effectiveness of an accelerometer-only solution.

In order to investigate the effectiveness of these features both alone and when fused together, we use a dataset (Shoaib et al., 2016) collected from ten participants. A Samsung Galaxy S2 phone was placed on the wrist of each participant to emulate a smart watch, and the sampling rate was 50 Hz. In total, 13 activities were performed: eating, typing, writing, drinking coffee, smoking, giving a talk, walking, jogging, biking, walking upstairs, walking downstairs, sitting and standing. Some activities, such as eating and typing, can easily be captured by a wrist-worn device, while others, such as sitting and standing, are more challenging to detect with such a device. Activities in the second category are typically studied in activity recognition with mobile phones.

Konak, S., Turan, F., Shoaib, M. and Incel, O.
Feature Engineering for Activity Recognition from Wrist-worn Motion Sensors.
DOI: 10.5220/0006007100760084
In Proceedings of the 6th International Joint Conference on Pervasive and Embedded Computing and Communication Systems (PECCS 2016), pages 76-84
ISBN: 978-989-758-195-3
Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In the initial tests, we show how recognition performance can be improved by using orientation- and rotation-related features in addition to motion-related features, using only the accelerometer. In the next round of tests, we investigate linear acceleration as an alternative to raw acceleration, and in the last round, we use the gyroscope instead of the accelerometer to extract rotation-related features. The aim is to compare the performance of an acceleration-only approach with approaches that use additional sensors. In all tests, we also compare the performance of different classifiers: decision tree, naive Bayes and random forest. Our results show that orientation features achieve the highest accuracies, both when used alone and in combination with other feature sets. Moreover, on average, the random forest classifier performs best. Using raw acceleration alone performs better than using linear acceleration and comparably to using the gyroscope. The main highlights of this paper are as follows:

• We extract pitch and roll features from the accelerometer. The use of these features was investigated for activity recognition with smart phone sensors in (Incel, 2015; Coskun et al., 2015), but not with wrist-worn sensors.

• We categorize the features into three categories: motion-related, orientation-related and rotation-related features. We analyse activity recognition performance using motion, orientation and rotation information both alone and in combination.

• We focus on activities that can be recognized with wrist-worn sensors, such as eating and smoking, as well as activities, such as walking and running, that are typically recognized with smart phone sensors placed in the pocket. This makes our dataset more challenging than, and different from, datasets that only contain wrist-related activities.

• We analyse the performance of an accelerometer-only solution and compare its performance with different classifiers.

2 RELATED WORK

Feature engineering is an important part of the activity recognition process. In recent years, it has been studied extensively in the context of physical activity recognition, as summarized in various surveys (Lane et al., 2010; Bulling et al., 2014). However, most of these studies focus on recognizing simple physical activities with the phone in the pocket. For example, the authors in (Figo et al., 2010) extensively studied various time- and frequency-domain features suitable for running on smartphones. They compared the features in terms of computation and storage complexity and described their suitability for mobile devices. However, they evaluated these features using a threshold-based mechanism with only three activities: walking, jumping and running. Moreover, this study used a single accelerometer placed in the right jeans pocket. Similarly, the authors in (Kwapisz et al., 2011) investigated various features for seven simple physical activities at the pocket position.

Some studies also investigated various features at the wrist position, but they likewise focused mainly on simple physical activities. For example, the authors in (Maurer et al., 2006) compared various features at multiple body positions, including the wrist, using a decision tree classifier. However, they evaluated only seven simple physical activities and used only an accelerometer. In our previous work, we also investigated various time- and frequency-domain features for the wrist position, but only for seven simple physical activities (Shoaib et al., 2014).

We have previously studied the recognition of simple and complex activities at the wrist position, but using only two simple time-domain features: mean and standard deviation (Shoaib et al., 2016; Shoaib et al., 2015c). Moreover, the main focus of that work was to evaluate the effect of increasing the window size, and of combining sensor data from the pocket and wrist positions, on the recognition performance of various activities. We also did not consider any features based on pitch and roll. In this study, we extend our previous work by exploring a larger set of features for both simple and complex activities at the wrist position, as described in Section 1.


3 METHODOLOGY OF FEATURE ENGINEERING

3.1 Dataset Details

The dataset was collected from ten participants aged 23 to 35. Each participant was given two mobile phones (Samsung Galaxy S2) during data collection. One was placed in the right trouser pocket and, to emulate a smart watch or other wrist-worn device, the other was placed on the right wrist. While in our previous work (Shoaib et al., 2016) we investigated the fusion of data from both phones, in this paper we only use the data collected from the phone on the wrist, since our aim is to analyse the performance of wrist-worn devices on these activities. Data was sampled at 50 Hz from the phone's accelerometer, its (virtual) linear acceleration sensor and its gyroscope.

In total, 13 activities were included in the dataset. Seven activities (walking, jogging, biking, walking upstairs, walking downstairs, sitting and standing) were performed by all participants for 3 minutes per activity. Seven of the participants also performed eating, typing, writing, drinking coffee and giving a talk for 5-6 minutes. Smoking data was collected from six of the participants, each of whom smoked one cigarette while standing, since not all participants were smokers. More details of the dataset can be found in (Shoaib et al., 2016).

3.2 Feature Extraction

In this paper, our aim is to analyse classification performance with different feature sets, namely motion, orientation and rotation features. Raw acceleration readings include both dynamic acceleration (due to movement of the phone) and static acceleration (due to gravity), and the two cannot be separated while the phone is moving without using gravity readings. However, in this study, instead of computing the exact orientation of the phone, we try to detect changes in the acceleration readings of the individual axes.

The magnitude of acceleration (the square root of the sum of the squares of the readings on each accelerometer axis) is used to extract the motion features. The following motion features are computed from the raw acceleration readings over a time window of 20 seconds:

• Mean: The average value of the magnitude samples over a time window.

• Variance: The average of the squared differences between the sample values and the mean value over a time window.

• Root Mean Square (RMS): The square root of the mean of the squared samples in a window.

• Zero-Crossing Rate (ZCR): The number of points where the signal crosses a reference value, such as the value corresponding to half of the signal range. In our case, the mean of the window is used as the reference.

• Absolute Difference (ABSDIFF): The sum of the absolute differences between each magnitude sample and the mean of that window, divided by the number of samples. This feature was used in (Alanezi and Mishra, 2013) on the individual acceleration axes to capture the information in the data points at a finer resolution.

• First 5 FFT Coefficients: The first five fast Fourier transform coefficients, which capture the main frequency components.

• Spectral Energy: The sum of the squared spectral coefficients divided by the number of samples in a window.
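The motion features above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' code: the window overlap is not stated in the text, so non-overlapping windows are assumed; ZCR is implemented as a count of crossings of the window mean; and the "first 5 FFT coefficients" are taken as the magnitudes of the first five DFT bins of the magnitude signal, DC term included. The helper names (`sliding_windows`, `zero_crossings`, `motion_features`) are hypothetical.

```python
import numpy as np

FS_HZ = 50                     # sampling rate of the dataset
WINDOW_S = 20                  # window length used in the paper
WINDOW_LEN = FS_HZ * WINDOW_S  # 1000 samples per window


def sliding_windows(signal, window_len=WINDOW_LEN, step=None):
    """Split an (n_samples, 3) array into windows of window_len samples.

    Assumption: non-overlapping windows (step == window_len) by default.
    """
    step = window_len if step is None else step
    starts = range(0, len(signal) - window_len + 1, step)
    return [signal[s:s + window_len] for s in starts]


def zero_crossings(x):
    """Number of times x crosses its window mean (the reference value)."""
    below = np.signbit(x - np.mean(x))
    return int(np.count_nonzero(below[1:] != below[:-1]))


def motion_features(acc_xyz):
    """Return the 11 motion features of one window of raw acceleration.

    acc_xyz: array of shape (n_samples, 3) holding x, y, z readings.
    """
    mag = np.linalg.norm(acc_xyz, axis=1)    # sqrt(x^2 + y^2 + z^2) per sample
    n = len(mag)
    mean = np.mean(mag)
    variance = np.mean((mag - mean) ** 2)    # average squared deviation
    rms = np.sqrt(np.mean(mag ** 2))         # square root of the mean of squares
    zcr = zero_crossings(mag)
    absdiff = np.sum(np.abs(mag - mean)) / n
    spectrum = np.fft.fft(mag)
    fft5 = np.abs(spectrum[:5])              # assumption: magnitudes, DC included
    energy = np.sum(np.abs(spectrum) ** 2) / n
    return np.concatenate([[mean, variance, rms, zcr, absdiff], fft5, [energy]])


# Hypothetical 60 s recording: noise around a device lying flat.
rng = np.random.default_rng(0)
stream = rng.normal(0.0, 1.0, size=(3 * WINDOW_LEN, 3)) + [0.0, 0.0, 9.81]
features = np.array([motion_features(w) for w in sliding_windows(stream)])
print(features.shape)  # three windows, eleven features each
```

With NumPy's unnormalised FFT, Parseval's theorem makes this spectral energy equal to the sum of the squared magnitude samples, so it could be computed without the FFT; the FFT is kept here only to mirror the definition.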

The readings from each of the three accelerometer axes are used to compute the orientation features. The following features are extracted from each axis, giving 12 features in total:

• Standard Deviation: Square root of variance.

• Root mean square (RMS)

• Zero-crossing rate (ZCR)

• Absolute Difference (ABSDIFF)
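A matching sketch for the 12 orientation features, again an illustration rather than the authors' implementation; the feature order (x, y, z axes, four features each) and the helper names are assumptions. Because raw readings include gravity, the per-axis RMS of a stationary device mostly reflects how it is oriented, which is why per-axis statistics carry orientation information.

```python
import numpy as np


def zero_crossings(x):
    """Number of times x crosses its window mean."""
    below = np.signbit(x - np.mean(x))
    return int(np.count_nonzero(below[1:] != below[:-1]))


def axis_features(x):
    """Standard deviation, RMS, ZCR and ABSDIFF of a single axis."""
    mean = np.mean(x)
    return [
        np.std(x),                   # square root of the (population) variance
        np.sqrt(np.mean(x ** 2)),    # RMS
        zero_crossings(x),           # crossings of the window mean
        np.mean(np.abs(x - mean)),   # ABSDIFF
    ]


def orientation_features(acc_xyz):
    """12 orientation features: 4 per accelerometer axis, ordered x, y, z."""
    feats = []
    for axis in range(3):
        feats.extend(axis_features(acc_xyz[:, axis]))
    return np.array(feats, dtype=float)


# A device lying flat and still: gravity appears on the z axis only,
# so the z-axis RMS is about 9.81 while the x- and y-axis RMS are about 0.
flat = np.tile([0.0, 0.0, 9.81], (1000, 1))
print(orientation_features(flat).round(2))
```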

The rotation features are computed from the changes in the pitch and roll angles. Rotational information can be obtained from the gyroscope or the orientation sensor on Android phones; however, this requires the use of other sensors, and the orientation sensor was deprecated in Android 2.2 (API level 8). In our previous work (Incel, 2015; Coskun et al., 2015), we extracted pitch and roll information from the acceleration readings. Equations 1 and 2 give the pitch and roll values, respectively, where x, y and z are the accelerometer readings on the three axes and g is the gravitational acceleration, i.e., 9.81 m/s²:

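$$\beta = \frac{180}{\pi} \cdot \tan^{-1}\left(\frac{y}{g}, \frac{z}{g}\right) \tag{1}$$

$$\alpha = \frac{180}{\pi} \cdot \tan^{-1}\left(\frac{x}{g}, \frac{z}{g}\right) \tag{2}$$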
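Equations 1 and 2 use the two-argument arctangent, which NumPy provides as `np.arctan2`. A minimal sketch, assuming x, y and z are arrays in m/s²; the function name is hypothetical. Dividing both arguments by the same positive g does not change the angle, so the division is kept only to mirror the equations.

```python
import numpy as np

G = 9.81  # gravitational acceleration in m/s^2, as in the text


def pitch_roll_deg(acc_xyz):
    """Per-sample pitch (Eq. 1) and roll (Eq. 2) in degrees.

    acc_xyz: array of shape (n_samples, 3) holding x, y, z readings.
    np.arctan2 is quadrant-aware, so angles lie in [-180, 180] degrees.
    """
    x, y, z = (acc_xyz / G).T
    pitch = np.degrees(np.arctan2(y, z))  # beta  = (180 / pi) * atan2(y/g, z/g)
    roll = np.degrees(np.arctan2(x, z))   # alpha = (180 / pi) * atan2(x/g, z/g)
    return pitch, roll


samples = np.array([
    [0.0, 0.0, G],   # lying flat, z axis pointing up
    [0.0, G, 0.0],   # y axis pointing up
    [G, 0.0, 0.0],   # x axis pointing up
])
pitch, roll = pitch_roll_deg(samples)
print(pitch)  # approximately [0, 90, 0]
print(roll)   # approximately [0, 0, 90]
```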

PEC 2016 - International Conference on Pervasive and Embedded Computing


Using the pitch and roll values, the following rotation-related features are extracted for each angle, yielding 12 more features:

• Mean

• Standard Deviation: Square root of variance.

• Root mean square (RMS)

• Zero-crossing rate (ZCR)

• Absolute Difference (ABSDIFF)

• Spectral energy
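Applying these six statistics to the pitch and roll series gives the 12 rotation features. The sketch below is self-contained and therefore recomputes pitch and roll; it omits the division by g because g cancels inside the two-argument arctangent. The spectral-energy definition mirrors the motion features; the function names, the feature order (pitch first, then roll) and the synthetic wrist-tilt signal are assumptions made for illustration.

```python
import numpy as np


def zero_crossings(x):
    """Number of times x crosses its window mean."""
    below = np.signbit(x - np.mean(x))
    return int(np.count_nonzero(below[1:] != below[:-1]))


def angle_features(angle):
    """Mean, std, RMS, ZCR, ABSDIFF and spectral energy of one angle series."""
    n = len(angle)
    mean = np.mean(angle)
    return [
        mean,
        np.std(angle),
        np.sqrt(np.mean(angle ** 2)),
        zero_crossings(angle),
        np.mean(np.abs(angle - mean)),
        np.sum(np.abs(np.fft.fft(angle)) ** 2) / n,  # spectral energy
    ]


def rotation_features(acc_xyz):
    """12 rotation features: 6 for pitch followed by 6 for roll (degrees)."""
    x, y, z = acc_xyz.T
    pitch = np.degrees(np.arctan2(y, z))  # g cancels, so it is omitted
    roll = np.degrees(np.arctan2(x, z))
    return np.array(angle_features(pitch) + angle_features(roll), dtype=float)


# Hypothetical 20 s window at 50 Hz: the wrist tilts +/-30 degrees at 0.5 Hz
# about the x axis, so only the pitch angle changes.
t = np.arange(1000) / 50.0
tilt = np.radians(30.0) * np.sin(2 * np.pi * 0.5 * t)
acc = np.column_stack([np.zeros_like(t), 9.81 * np.sin(tilt), 9.81 * np.cos(tilt)])
feats = rotation_features(acc)
print(feats.round(2))
```

In this example the pitch standard deviation comes out near 30/√2 ≈ 21.2 degrees, while all six roll features are zero because the roll angle never changes.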

In total, 35 features are extracted from the accelerometer readings (11 motion, 12 orientation and 12 rotation features). The same set of features is also extracted from the linear acceleration readings. To evaluate rotation features extracted from the gyroscope, we compute the standard deviation, RMS, ZCR and ABSDIFF of each of its three axes, resulting in 12 features (discussed in Section 4.3).

4 PERFORMANCE EVALUATION

In this section, we present the results obtained by following the methodology explained in Section 3.

We used Python for data preprocessing and feature extraction. For the classification phase, we used Scikit-learn (version 0.17), which is also a Python-based machine learning toolkit (Pedregosa et al., 2011). We used three classifiers that are commonly used in practical activity recognition: naive Bayes, decision tree and random forest (Shoaib et al., 2015b; Shoaib et al., 2015a). All classifiers were used with their default settings. For the decision tree, Scikit-learn uses an optimized version of the CART (Classification and Regression Trees) algorithm.

In the classification phase, we used 10-fold stratified cross-validation without shuffling. In this validation method, the whole dataset is divided into ten equal parts (folds) that preserve the class proportions; at each iteration, nine parts are used for training and the remaining part for testing. The window size was set to 20 seconds, since our previous work (Shoaib et al., 2016) showed that larger window sizes achieve higher accuracies for activities in which wrist movements dominate and are less repetitive.
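The evaluation protocol can be sketched with scikit-learn. This is an illustration under assumptions: the feature matrix is random stand-in data, not the dataset; the text does not say which naive Bayes variant was used, so `GaussianNB` is an assumption; and default hyperparameters differ across scikit-learn versions (for example, the default `n_estimators` of `RandomForestClassifier` changed from 10 to 100 in version 0.22), so a current release will not reproduce version 0.17 exactly.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.tree import DecisionTreeClassifier

# Hypothetical stand-in data: one row of 35 features per 20 s window,
# 13 activity classes with a synthetic class-dependent shift.
rng = np.random.default_rng(0)
n_classes, per_class = 13, 30
y = np.repeat(np.arange(n_classes), per_class)
X = rng.normal(size=(y.size, 35)) + y[:, None] * 0.5

classifiers = {
    "naive Bayes": GaussianNB(),                 # Gaussian variant is an assumption
    "decision tree": DecisionTreeClassifier(),   # scikit-learn's optimised CART
    "random forest": RandomForestClassifier(),   # library defaults
}

# 10-fold stratified cross-validation without shuffling, as described in the text.
cv = StratifiedKFold(n_splits=10, shuffle=False)

for name, clf in classifiers.items():
    scores = cross_val_score(clf, X, y, cv=cv, scoring="accuracy")
    print(f"{name}: mean accuracy {scores.mean():.2f}")
```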

4.1 Recognition with Accelerometer

In this section, we present the results obtained using raw acceleration readings and discuss how motion, orientation and rotation features perform when used both alone and in combination. We also compare the recognition performance of different classification algorithms, namely naive Bayes, decision tree and random forest.

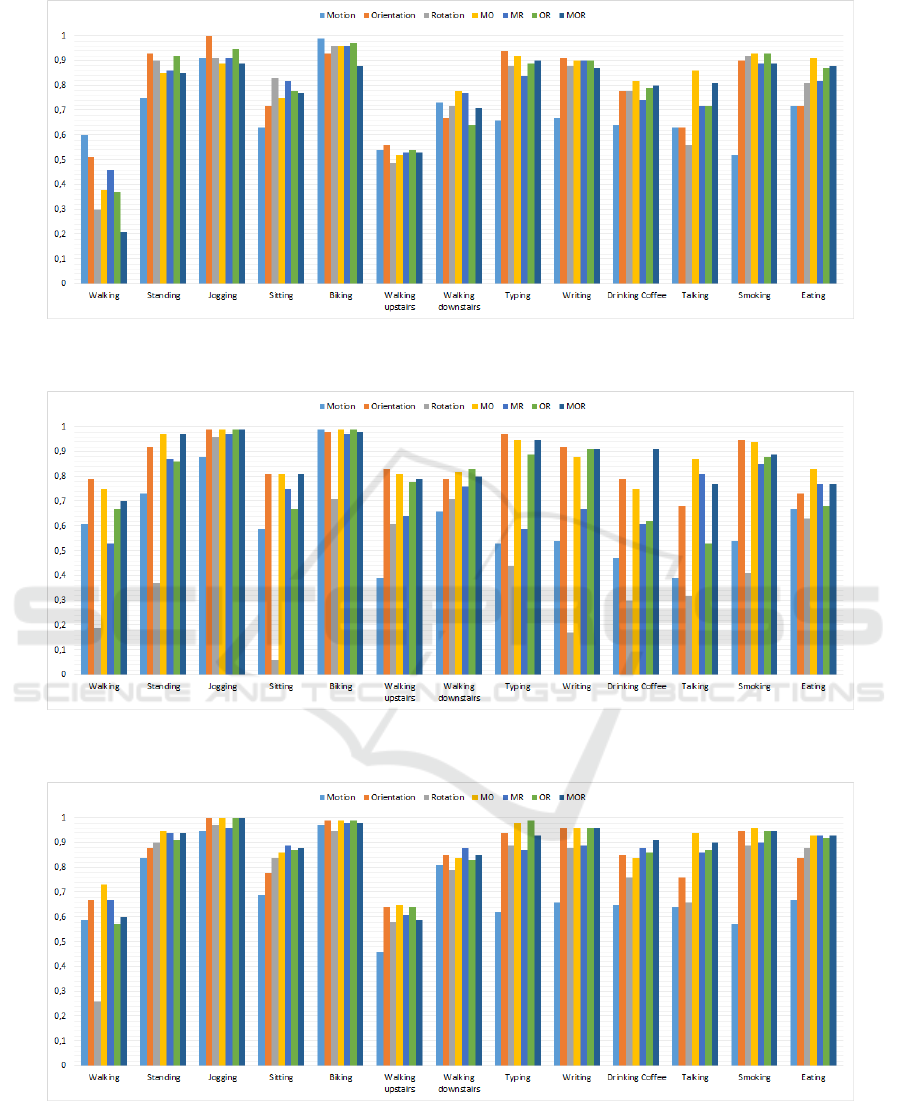

Figure 1 presents the results of the experiments using the decision tree classifier. In this test, the aim is to analyse different combinations of features in detail. The y axis represents the accuracy values. Although the accuracy values range between zero and one, in the text we report accuracies as percentages for ease of reading.

When the individual feature sets are analysed (only motion, only orientation, only rotation), using only orientation features generally achieves the highest accuracy for most activities, with a few exceptions. For example, motion features perform better for walking, biking and walking downstairs, and rotation features perform better for sitting, smoking and eating.

Among the combinations of feature sets, namely motion and orientation (MO), motion and rotation (MR) and orientation and rotation (OR), the combination of motion and orientation features (MO) achieves the highest accuracies for different activities. Compared with the single feature sets, the combinations perform better for drinking coffee, talking, smoking, eating and walking downstairs. For the other activities, either motion or orientation features perform better, except for sitting, where rotation features achieve the highest accuracy. When all feature sets are used together (MOR), accuracies either remain the same or decrease in a few cases. A possible reason is that adding rotation features increases the confusion between activities. The only exception is the sitting activity, where the rotation and motion-rotation (MR) features achieve the best results.
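The seven feature-set configurations compared in Figures 1 to 3 (M, O, R, MO, MR, OR, MOR) can be enumerated with `itertools.combinations`. The column layout below (11 motion, then 12 orientation, then 12 rotation features) is a hypothetical ordering of the 35-feature vector, and the data are random stand-ins; the sketch only shows how each subset of columns would be evaluated with the same cross-validation.

```python
from itertools import combinations

import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score

# Hypothetical column layout of the 35-feature vector.
FEATURE_SETS = {
    "M": np.arange(0, 11),    # motion features
    "O": np.arange(11, 23),   # orientation features
    "R": np.arange(23, 35),   # rotation features
}


def feature_set_combinations(sets=FEATURE_SETS):
    """Yield (label, column indices) for M, O, R, MO, MR, OR and MOR."""
    names = list(sets)
    for k in range(1, len(names) + 1):
        for combo in combinations(names, k):
            yield "".join(combo), np.concatenate([sets[n] for n in combo])


# Hypothetical stand-in data for 13 classes.
rng = np.random.default_rng(0)
y = np.repeat(np.arange(13), 30)
X = rng.normal(size=(y.size, 35)) + y[:, None] * 0.5
cv = StratifiedKFold(n_splits=10, shuffle=False)

for label, cols in feature_set_combinations():
    clf = RandomForestClassifier(random_state=0)
    acc = cross_val_score(clf, X[:, cols], y, cv=cv).mean()
    print(f"{label:>3}: {acc:.2f}")
```

For the gyroscope experiment of Section 4.3, the "R" columns would simply hold the 12 gyroscope features instead of the pitch and roll features.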

The average accuracy over all activities is 69% for motion features, 78% for orientation, 76% for rotation, 81% for motion-orientation, 79% for motion-rotation, 79% for orientation-rotation and 77% for motion-orientation-rotation combinations. Overall, using motion and orientation features achieves the highest average accuracy. We achieve the highest accuracy for biking and jogging and the lowest accuracy for walking, walking upstairs and walking downstairs, because these activities are confused with each other. An example confusion matrix for the random forest classifier using motion-orientation features is given in Table 1. Additional sensors, such as a pressure sensor, could be used to differentiate these activities, or they could be combined into a single activity if possible.

Figure 2 presents the results with the naive Bayes classifier. Compared with the decision tree results in Figure 1, accuracies increased for all activities except eating and sitting. In particular, walking, walking upstairs and walking downstairs are recognized with higher accuracies. Similar to the decision tree results, the orientation features are the dominant feature set for achieving high accuracy. Walking, walking upstairs, typing, writing and smoking are recognized with the highest accuracy when only orientation features are used. The highest average accuracies over all activities are 86%, obtained with motion features, and 87%, obtained with motion-orientation features.

Figure 1: Recognition performance of accelerometer with different feature combinations using decision tree classifier (M: Motion, O: Orientation, R: Rotation).

Figure 2: Recognition performance of accelerometer with different feature combinations using Naive Bayes classifier (M: Motion, O: Orientation, R: Rotation).

Figure 3: Recognition performance of accelerometer with different feature combinations using Random Forest classifier (M: Motion, O: Orientation, R: Rotation).

Figure 4: Comparison of Decision Tree, Naive Bayes and Random Forest in terms of average accuracy (M: Motion, O: Orientation, R: Rotation).

Figure 3 presents the results of the experiments using the random forest classifier. In particular, the maximum average accuracy, 89%, is achieved with motion and orientation features. This is higher than with the other classifiers, whose maximum average accuracy is 81% for the decision tree and 87% for naive Bayes, in both cases with motion-orientation features. Figure 4 compares the classifiers in terms of their average performance.

In general, random forest achieves the highest accuracies for most activities. However, naive Bayes achieves higher accuracies for the walking and walking upstairs activities. As mentioned, these activities are confused with each other; Table 1 gives an example confusion matrix using motion-orientation features.

4.2 Recognition with Linear Acceleration

In this section, our aim is to analyse performance with linear acceleration readings instead of raw acceleration. The accelerometer on Android phones measures only the gravitational acceleration if the device is stationary or moving at a constant velocity. If the phone is accelerating, it measures the combination of the gravitational acceleration and the acceleration due to movement; the latter is called the “linear acceleration”. Due to space limitations, we only provide the results with the random forest classifier in this section. We also experimented with the other two classifiers, but, as with raw acceleration, random forest achieved the highest accuracies.
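Android derives linear acceleration in a (virtual) sensor, and the platform implementation may fuse other sensors such as the gyroscope. A common approximation, similar to the example in the Android developer documentation, estimates gravity with a first-order low-pass filter and subtracts it from the raw reading. The Python sketch below illustrates that decomposition only; it is not how the dataset's linear acceleration was produced, and the function name and the smoothing factor `alpha = 0.8` are illustrative assumptions.

```python
import numpy as np


def split_gravity(acc_xyz, alpha=0.8):
    """Approximate gravity and linear acceleration from raw accelerometer samples.

    Gravity is tracked with a first-order low-pass filter,
        g_t = alpha * g_{t-1} + (1 - alpha) * a_t,
    and linear acceleration is the remainder a_t - g_t.
    """
    acc = np.asarray(acc_xyz, dtype=float)
    gravity = np.empty_like(acc)
    g = acc[0].copy()  # start from the first sample to avoid a start-up transient
    for t, a in enumerate(acc):
        g = alpha * g + (1.0 - alpha) * a
        gravity[t] = g
    return gravity, acc - gravity


# A still device yields (near-)zero linear acceleration.
still = np.tile([0.0, 0.0, 9.81], (500, 1))
gravity, linear = split_gravity(still)
print(np.abs(linear).max())
```

A larger `alpha` gives a smoother gravity estimate with a lower cutoff frequency, at the cost of lagging behind genuine orientation changes of the wrist.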

The results are given in Figure 5. Compared with the results obtained with raw acceleration in Figure 3, the results with linear acceleration are either the same or slightly lower. In particular, the walking activity is recognized with 64% accuracy using motion features with linear acceleration, whereas it is recognized with 73% accuracy using motion-orientation features with raw acceleration, i.e., 9 percentage points lower. Computing linear acceleration readings generally consumes more battery power and may therefore not be preferred in real-time, continuously running activity recognition applications (Incel, 2015).

4.3 Recognition with Acceleration and Gyroscope

In this section, we aim to analyse whether the gyroscope should be used for extracting rotation features. As mentioned, we previously extracted features from pitch and roll values computed from raw acceleration readings. In this section, we instead extract rotation features from the gyroscope and use them either alone or in combination with the motion and orientation features extracted from raw acceleration. We replace the twelve rotation features extracted from acceleration with twelve features extracted from the individual gyroscope axes: standard deviation, RMS, ZCR and ABSDIFF. The evaluation is performed with the random forest classifier.

Results are given in Figure 6. In general, using only rotation features, i.e., only the gyroscope, performs worse than using combinations of features. Compared with the results obtained with raw acceleration in Figure 3, most activities are recognized with similar accuracy. However, sitting, walking upstairs and walking downstairs are recognized with 4%, 12% and 9% higher accuracies, respectively. The average accuracy over all activities is around 90% when using motion-rotation or motion-orientation-rotation features, compared with 88% when the motion-orientation-rotation features are calculated from raw acceleration. Although an accelerometer-only solution is efficient, the gyroscope measures rotation directly, whereas pitch and roll computed from the accelerometer are only estimates. Nevertheless, the average performance of the acceleration-only solution is still acceptable, with 89% accuracy using motion-orientation features.


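Table 1: Confusion Matrix with Random Forest using motion-orientation features, in %.

| | Walk | Stand | Jog | Sit | Bike | Walk Upstairs | Walk Downstairs | Type | Write | Drink | Talk | Smoke | Eat |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Walk | 72.22 | 0 | 0 | 0 | 0 | 23.33 | 4.44 | 0 | 0 | 0 | 0 | 0 | 0 |
| Stand | 0 | 92.22 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2.22 | 2.22 | 3.33 | 0 |
| Jog | 0 | 0 | 100 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Sit | 0 | 0 | 0 | 84.44 | 0 | 0 | 0 | 1.11 | 4.44 | 7.78 | 0 | 0 | 2.22 |
| Bike | 0 | 0 | 0 | 2.22 | 97.78 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Walk Upstairs | 23.33 | 0 | 0 | 0 | 0 | 64.44 | 12.22 | 0 | 0 | 0 | 0 | 0 | 0 |
| Walk Downstairs | 2.22 | 0 | 0 | 0 | 0 | 11.11 | 84.44 | 0 | 0 | 0 | 2.22 | 0 | 0 |
| Type | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 97.78 | 1.11 | 1.11 | 0 | 0 | 0 |
| Write | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1.11 | 97.78 | 0 | 0 | 0 | 1.11 |
| Drink | 0 | 1.11 | 0 | 10 | 0 | 0 | 0 | 0 | 0 | 83.33 | 1.11 | 1.11 | 3.33 |
| Talk | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 93.33 | 2.22 | 4.44 |
| Smoke | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1.11 | 0 | 98.89 | 0 |
| Eat | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3.33 | 0 | 0 | 96.67 |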
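Table 1 is row-normalised: each row sums to 100% (up to rounding). A minimal sketch of producing such a table with scikit-learn's `confusion_matrix`, assuming, as scikit-learn does, that rows are true activities and columns are predicted activities; the labels and predictions below are toy values, not the paper's data, and the function name is hypothetical. In practice the predictions would come from the cross-validation folds, for example via `sklearn.model_selection.cross_val_predict`.

```python
import numpy as np
from sklearn.metrics import confusion_matrix


def confusion_matrix_percent(y_true, y_pred, labels):
    """Row-normalised confusion matrix in percent.

    Rows are true activities and columns are predicted activities
    (scikit-learn's convention); each non-empty row sums to 100.
    """
    counts = confusion_matrix(y_true, y_pred, labels=labels).astype(float)
    row_totals = counts.sum(axis=1, keepdims=True)
    row_totals[row_totals == 0] = 1.0   # leave rows of absent classes as zeros
    return 100.0 * counts / row_totals


# Toy example with the three activities that Table 1 shows are most often confused.
labels = ["Walk", "Walk Upstairs", "Walk Downstairs"]
y_true = ["Walk"] * 4 + ["Walk Upstairs"] * 4 + ["Walk Downstairs"] * 2
y_pred = ["Walk", "Walk", "Walk", "Walk Upstairs",
          "Walk Upstairs", "Walk Upstairs", "Walk Upstairs", "Walk",
          "Walk Downstairs", "Walk Upstairs"]
print(confusion_matrix_percent(y_true, y_pred, labels).round(2))
```

Recent scikit-learn releases (0.22 and later) can apply the same row normalisation directly with `confusion_matrix(..., normalize="true")`, returning fractions rather than percentages.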

Figure 5: Recognition performance of linear acceleration with different feature combinations using Random Forest classifier (M: Motion, O: Orientation, R: Rotation).

Figure 6: Recognition performance of acceleration and gyroscope with different feature combinations using Random Forest classifier (M: Motion, O: Orientation, R: Rotation).


5 CONCLUSION AND FUTURE WORK

In this paper, our main motivation is to evaluate the performance of activity recognition with wrist-worn devices using inertial sensors, and particularly to analyse performance with different feature sets. We categorize the features into three classes: motion-related, orientation-related and rotation-related features, and we analyse performance using motion, orientation and rotation information both alone and in combination. We use a dataset collected from 10 participants performing thirteen activities and use decision tree, naive Bayes and random forest classifiers in the analysis. The results show that orientation features achieve the highest accuracies, both when used alone and in combination with other feature sets. However, combining all features (motion, orientation and rotation) usually does not improve the results. Considering average accuracies, the random forest classifier achieves the highest performance. Additionally, using raw acceleration alone performs slightly better (89%) than using linear acceleration and comparably to using the gyroscope. Hence, our results show that an accelerometer-only solution can perform as well as using linear acceleration or using both an accelerometer and a gyroscope. Its main advantage is that it consumes less battery power, which is an important factor for real-time, continuously running applications.

We are currently collecting a dataset using smart watches, with a particular focus on the recognition of smoking. As future work, we plan to apply the same methodology to the new dataset. Moreover, we aim to apply feature selection methods to reduce the number of features used, and to analyse battery consumption on a smart watch.

ACKNOWLEDGEMENTS

This work is supported by the Galatasaray University Research Fund under Grant Number 15.401.004, by Tubitak under Grant Number 113E271 and by the Dutch National Program COMMIT in the context of the SWELL project.

REFERENCES

Alanezi, K. and Mishra, S. (2013). Impact of smartphone position on sensor values and context discovery. Technical Report 1030, University of Colorado Boulder.

Avci, A., Bosch, S., Marin-Perianu, M., Marin-Perianu, R., and Havinga, P. (2010). Activity recognition using inertial sensing for healthcare, wellbeing and sports applications: A survey. In 23rd International Conference on Architecture of Computing Systems, ARCS 2010, pages 167–176.

Bulling, A., Blanke, U., and Schiele, B. (2014). A tutorial on human activity recognition using body-worn inertial sensors. ACM Computing Surveys (CSUR), 46(3):33.

Coskun, D., Incel, O., and Ozgovde, A. (2015). Phone position/placement detection using accelerometer: Impact on activity recognition. In Intelligent Sensors, Sensor Networks and Information Processing (ISSNIP), 2015 IEEE Tenth International Conference on, pages 1–6.

Figo, D., Diniz, P. C., Ferreira, D. R., and Cardoso, J. M. (2010). Preprocessing techniques for context recognition from accelerometer data. Personal and Ubiquitous Computing, 14(7):645–662.

Incel, O., Kose, M., and Ersoy, C. (2013). A review and taxonomy of activity recognition on mobile phones. BioNanoScience, 3(2):145–171.

Incel, O. D. (2015). Analysis of movement, orientation and rotation-based sensing for phone placement recognition. Sensors, 15(10):25474.

Kwapisz, J. R., Weiss, G. M., and Moore, S. A. (2011). Activity recognition using cell phone accelerometers. ACM SigKDD Explorations Newsletter, 12(2):74–82.

Lane, N. D., Miluzzo, E., Lu, H., Peebles, D., Choudhury, T., and Campbell, A. T. (2010). A survey of mobile phone sensing. Communications Magazine, IEEE, 48(9):140–150.

Maurer, U., Smailagic, A., Siewiorek, D. P., and Deisher, M. (2006). Activity recognition and monitoring using multiple sensors on different body positions. In Wearable and Implantable Body Sensor Networks, 2006. BSN 2006. International Workshop on, 4 pp. IEEE.

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., Vanderplas, J., Passos, A., Cournapeau, D., Brucher, M., Perrot, M., and Duchesnay, E. (2011). Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12:2825–2830.

Shoaib, M., Bosch, S., Durmaz Incel, O., Scholten, J., and Havinga, P. J. (2015a). Defining a roadmap towards comparative research in online activity recognition on mobile phones.

Shoaib, M., Bosch, S., Incel, O. D., Scholten, H., and Havinga, P. J. (2014). Fusion of smartphone motion sensors for physical activity recognition. Sensors, 14(6):10146–10176.

Shoaib, M., Bosch, S., Incel, O. D., Scholten, H., and Havinga, P. J. (2015b). A survey of online activity recognition using mobile phones. Sensors, 15(1):2059–2085.

Shoaib, M., Bosch, S., Incel, O. D., Scholten, H., and Havinga, P. J. M. (2016). Complex human activity recognition using smartphone and wrist-worn motion sensors. Sensors, 16(4):426.

Shoaib, M., Bosch, S., Scholten, H., Havinga, P. J., and Incel, O. D. (2015c). Towards detection of bad habits by fusing smartphone and smartwatch sensors. In Pervasive Computing and Communication Workshops (PerCom Workshops), 2015 IEEE International Conference on, pages 591–596. IEEE.
