Testing Distributed and Heterogeneous Systems: State of the Practice

Bruno Lima 1,2 and João Pascoal Faria 1,2

1 Faculty of Engineering, University of Porto, Rua Dr. Roberto Frias s/n, 4200-465 Porto, Portugal

2 INESC TEC, FEUP campus, Rua Dr. Roberto Frias s/n, 4200-465 Porto, Portugal

Keywords:

Software Testing, Distributed Systems, Heterogeneous Systems, Systems of Systems, State of the Practice.

Abstract:

In a growing number of domains, such as health-care and transportation, several independent systems, forming a heterogeneous and distributed system of systems, are involved in the provisioning of end-to-end services to users. Testing such systems, running over interconnected mobile and cloud-based platforms, is particularly important and challenging, with little support being provided by current tools. In order to assess the current state of the practice regarding the testing of distributed and heterogeneous systems (DHS) and identify opportunities and priorities for research and innovation initiatives, we conducted an exploratory survey that was answered by 147 software testing professionals who attended industry-oriented software testing conferences, and we present the main results in this paper. The survey allowed us to assess the relevance of DHS in software testing practice, the most important features to be tested in DHS, the current status of test automation and tool sourcing for testing DHS, and the most desired features in test automation solutions for DHS. We expect the results presented in the paper to be of interest to researchers, tool vendors and service providers in this field.

1 INTRODUCTION

Due to the increasing ubiquity, complexity, criticality and need for assurance of software-based systems (Boehm, 2011), testing is a fundamental lifecycle activity, with a huge economic impact if not performed adequately (Tassey, 2002). Such trends, combined with the need for shorter delivery times and reduced costs, demand the continuous improvement of software testing methods and tools, in order to make testing activities more effective and efficient.

Nowadays, software no longer consists of simple applications but has evolved into large and complex systems of systems (DoD, 2008). A system of systems consists of a set of small independent systems that together form a new system. A system of systems can be a combination of hardware components (sensors, mobile devices, servers, etc.) and software systems used to create big systems or ecosystems that can offer multiple different services. Currently, systems of systems attract great interest from the software engineering research community. These types of systems are present in different domains, such as e-health (AAL4ALL, 2015) or transportation (Torens and Ebrecht, 2010).

Testing these distributed and heterogeneous software systems, or systems of systems, running over interconnected mobile and cloud-based platforms, is particularly important and challenging. Some of the challenges are: the difficulty of testing the system as a whole, due to the number and diversity of individual components; the difficulty of coordinating and synchronizing the test participants and interactions, due to the distributed nature of the system; and the difficulty of testing the components individually, because of their dependencies on other components. As a result, attention from the research community has increased; however, the issues addressed and the solutions proposed have been evaluated primarily from the academic perspective, and not from the viewpoint of the practitioner.

Hence, the main objective of this paper is to explore the viewpoint of practitioners with respect to the testing of distributed and heterogeneous systems (DHS), in order to assess the current state of the practice and identify opportunities and priorities for research and innovation initiatives. For that purpose, we conducted an exploratory survey that was answered by 147 software testing professionals who attended industry-oriented software testing conferences, and we present the main results in this paper. Besides introductory questions for characterizing the respondents and contextualizing their responses, the survey contained several questions aimed at assessing the practical relevance of testing DHS, the importance of testing several features of DHS, the current level of test automation and tool sourcing, and the desired features in test automation solutions for DHS. We expect the results presented in the paper to be of interest to researchers, tool vendors and service providers in the software testing field.

Lima, B. and Faria, J.

Testing Distributed and Heterogeneous Systems: State of the Practice.

DOI: 10.5220/0005989100690078

In Proceedings of the 11th International Joint Conference on Software Technologies (ICSOFT 2016) - Volume 1: ICSOFT-EA, pages 69-78

ISBN: 978-989-758-194-6

Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The rest of the paper is organized as follows: Section 2 presents the research method used to conduct the survey. Section 3 presents the results, which are further discussed in Section 4. Section 5 describes the related work. Section 6 concludes the paper and points out future work.

2 RESEARCH METHOD AND SCOPE

The research method used in this work is the explanatory survey. Explanatory surveys aim at making explanatory claims about the population, for example, when studying how developers use a certain inspection technique (Wohlin et al., 2003).

2.1 Goal

The main goal of this work is to explore the testing of

DHS from the point of view of industry practitioners,

in order to assess the current state of the practice and

identify opportunities and priorities for research and

innovation initiatives.

More precisely, we aim at responding to the fol-

lowing research questions:

• RQ1: How relevant are DHS in the software test-

ing practice?

• RQ2: What are the most important features to be

tested in DHS?

• RQ3: What is the current status of test automation

and tool sourcing for testing DHS?

• RQ4: What are the most desired features in test

automation solutions for DHS?

2.2 Survey Distribution and Sampling

Since our main goal was to collect the point of view of industry practitioners involved in the testing of DHS, we shared the survey with the participants of two industry-oriented conferences in the software testing area: TESTING Portugal 2015¹ and the User Conference on Advanced Automated Testing (UCAAT) 2015². In total, we distributed 250 surveys and obtained 167 answers. Of these 167 answers, only 147 were complete and valid. Most of the invalid answers came from respondents who did not complete the survey.

¹ http://www.cvent.com/events/testing-portugal-2015/event-summary-a1a41d7f08674008b58e43454bb9f54a.aspx
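The sampling figures above imply a response rate and a valid-response rate that the text does not state explicitly. The following minimal sketch derives them from the three reported counts; the variable and function names are illustrative only.

```python
# Counts reported in Section 2.2.
distributed = 250  # surveys distributed
received = 167     # answers obtained
valid = 147        # complete and valid answers


def rate(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


response_rate = rate(received, distributed)  # share of surveys answered
valid_rate = rate(valid, distributed)        # share of surveys usable
discarded = received - valid                 # incomplete or invalid answers

print(f"Response rate: {response_rate}%")
print(f"Valid-response rate: {valid_rate}%")
print(f"Discarded answers: {discarded}")
```

Under these counts, roughly two thirds of the distributed surveys were answered, and 20 answers were discarded.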

2.3 Survey Organization

The survey was composed of two main parts. The first part was an introduction, where we explained the goal of the survey and defined the term "Distributed and Heterogeneous Systems". In the context of this survey, we define a Distributed and Heterogeneous System as a set of small independent systems that together form a new distributed system, combining hardware components and software systems, possibly involving mobile and cloud-based platforms.

The second part, containing the questions, is divided into three groups. The first group concerns the professional characterization of the participants. Here, the participants are asked to indicate:

• their main responsibility in their current position (e.g., software testing, software development, project management);

• how many years they have been in their current position;

• how many years they have been working in software testing;

• how many years they have been working with distributed and heterogeneous systems (related to RQ1).

The second group contains questions about the

company characterization. This group has questions

to identify:

• the industry sector;

• the company size;

• the role(s) under which the company conducts

software tests (as developer, as customer/user, as

independent test service provider, and/or as sys-

tem integrator);

• the test levels performed by the company.

Regarding the last question, we asked the participants about the test levels normally considered in industrial practice (ISTQB, 2016a):

• unit (or component) testing - the testing of individual software components;

• integration testing - testing performed to expose defects in the interfaces and in the interactions between integrated components or systems;

• system testing - testing an integrated system to verify that it meets specified requirements;

• acceptance testing - formal testing with respect to user needs, requirements, and business processes conducted to determine whether or not a system satisfies the acceptance criteria and to enable the user, customers or other authorized entity to determine whether or not to accept the system.

² http://www.etsi.org/news-events/events/868-2015-etsi-ucaat

ICSOFT-EA 2016 - 11th International Conference on Software Engineering and Applications

The questions in the first two groups are useful for

characterizing the respondents and confirming their

relevance for the purposes of the survey. The last

question in the first group is also important for an-

swering RQ1.

The last group contains the questions related to the testing of DHS and the main research questions underlying the survey:

• in what role(s) does the company conduct software tests for DHS, if any (related to RQ1);

• how important is the testing of several specific features of DHS (related to RQ2);

• what is the current status of test automation for DHS (manual testing, automatic test execution and/or automatic test generation) (related to RQ3);

• what is the current status of tool sourcing for testing DHS (commercial off-the-shelf or developed in-house) (related to RQ3);

• what are the most desired features in test automation solutions for DHS (related to RQ4);

• how useful an automatic test generation solution based on interaction models as input would be (related to RQ4).

In the second question above (features to be

tested), we asked the participants about features that

are characteristic of DHS and raise significant testing

challenges:

• interactions between the system and the environ-

ment - distributed systems usually have several

points of interaction (ports) with the environment

(users or external systems) that are themselves

distributed, complicating test coordination (Hi-

erons, 2014);

• interactions between components of the system - monitoring the interactions between components of the system under test (SUT) is important not only in the context of integration testing, but also in the context of system and acceptance testing, to facilitate fault localization; however, such interactions are often obfuscated by middleware or even encrypted, which makes them difficult to intercept and interpret at an appropriate level of abstraction;

• parallelism and concurrency - components of

DHS almost always exhibit some sort of paral-

lelism and concurrency, which can be the source

of subtle errors (race conditions, etc.) that are dif-

ficult to detect and replicate (West et al., 2012);

• timing constraints - in many DHS, several sorts of

timing constraints (behaviors triggered by time-

outs, response time limits, etc.) are imposed on

interactions between components of the system or

between the system and the environment, requir-

ing the application of advanced test case genera-

tion and execution techniques (Kim et al., 2014)

and the simulation of realistic operational condi-

tions in the test environment;

• nondeterministic behaviors - nondeterminism

may occur for various reasons in complex DHS,

requiring adaptive test strategies (Petrenko and

Yevtushenko, 2011) in which the next test action

depends on the observed behavior of the SUT;

• multiple platforms - different components of a

DHS may run on different platforms (operating

systems, devices, browsers, etc.) and the same

component may be deployed on different plat-

forms, requiring complex testing infrastructures.
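The adaptive strategy mentioned for nondeterministic behaviors can be illustrated with a small sketch, in which the tester chooses its next action from the output it has just observed. The service, its messages (`ACK`, `BUSY`, `DONE`) and the retry limit are hypothetical and do not come from the survey; a seeded random generator merely stands in for real sources of nondeterminism such as scheduling or network delays.

```python
import random


class FlakyService:
    """Hypothetical nondeterministic SUT: a request may be accepted or deferred."""

    def __init__(self, seed: int) -> None:
        self._rng = random.Random(seed)
        self.accepted = False

    def request(self) -> str:
        # The random choice stands in for scheduling or network effects.
        if self._rng.random() < 0.5:
            self.accepted = True
            return "ACK"
        return "BUSY"

    def confirm(self) -> str:
        return "DONE" if self.accepted else "ERROR"


def adaptive_test(sut: FlakyService, max_retries: int = 20) -> str:
    """Pick each test action based on the previously observed output."""
    for _ in range(max_retries):
        observed = sut.request()
        if observed == "ACK":
            # Accepted: the next action checks the confirmation step.
            return "pass" if sut.confirm() == "DONE" else "fail"
        if observed != "BUSY":
            return "fail"  # output not allowed by the assumed specification
        # BUSY is an allowed output: adapt by retrying instead of failing.
    return "inconclusive"


print(adaptive_test(FlakyService(seed=1)))
```

A fixed, non-adaptive script would have to guess in advance how many `BUSY` replies precede the `ACK`; the adaptive loop tolerates any allowed interleaving and reports `inconclusive` rather than `fail` when the retry budget runs out.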

As for the most desired features in test automa-

tion solutions for DHS, besides the support for multi-

ple platforms, we asked the participants about the test

activities that can be fully or partially automated:

• support for automatic test case execution - this is the most widespread test automation facet in general, so we expected it to be a popular desired feature for DHS;

• support for automatic test case generation - this provides the highest level of test automation, and some model-based testing (MBT) techniques and tools (Utting and Legeard, 2007) are attracting increasing interest from industry, but the need to build behavioral models of the SUT, together with the limitations that still exist in the techniques and tools (Dias Neto et al., 2007), hinders wider adoption;

• support for test coverage analysis - test coverage analysis is better known in the context of white-box testing (using code coverage metrics) but can also be employed in the context of gray-box or black-box testing (e.g., using model coverage metrics);

• support for automatic test stub generation - test stubs are needed in the context of unit (component) testing, to simulate other components on which the component under test depends, and, under some conditions, may be automatically generated from specifications or models (Faria and Paiva, 2014).
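As an illustration of the last item, the sketch below builds a test stub from a very small "specification": a mapping from operation names to canned return values. This is only a toy stand-in for generation from specifications or models, not the approach of the cited toolset; `make_stub`, `check_alert` and the `read_heart_rate` operation are hypothetical names.

```python
from typing import Any, Dict


def make_stub(spec: Dict[str, Any]) -> Any:
    """Build a stub whose operations return the canned values given in `spec`."""

    class Stub:
        def __init__(self) -> None:
            self.calls = []  # records (operation, args) for later checks

    stub = Stub()
    for operation, canned in spec.items():
        # Default arguments bind the current operation and value to this method.
        def method(*args, _op=operation, _value=canned):
            stub.calls.append((_op, args))
            return _value

        setattr(stub, operation, method)
    return stub


def check_alert(sensor_gateway: Any, threshold: float) -> bool:
    """Hypothetical component under test; it depends on a remote sensor gateway."""
    return sensor_gateway.read_heart_rate("patient-1") > threshold


# The stub replaces the remote gateway, so the component can be tested alone.
gateway_stub = make_stub({"read_heart_rate": 130.0})
print(check_alert(gateway_stub, threshold=120.0))
print(gateway_stub.calls)
```

Because the stub records its calls, the test can check both the result of the component under test and the interaction it had with the simulated dependency.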

Our final question was intended to evaluate the re-

ceptiveness of the participants to a type of input mod-

els - interaction models such as the ones depicted by

UML sequence diagrams (OMG, 2015) - that are par-

ticularly well suited for generating test cases for the

most relevant features of DHS (asked in the second

question). In fact, UML 2 sequence diagrams provide

a convenient means to specify the messages that are

exchanged between components and actors of DHS

under specific scenarios, and express parallelism, syn-

chronization and time constraints, among other fea-

tures (Lima and Faria, 2016).
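To make the idea of an interaction model concrete, the following sketch encodes a scenario as a sequence of messages, with a simplified parallel fragment whose messages may be observed in any order, and checks whether an observed trace conforms to it. It is a deliberately reduced illustration: it covers neither time constraints nor the full semantics of UML 2 combined fragments, and the fall-detection scenario and all names are hypothetical.

```python
from typing import List, NamedTuple, Union


class Message(NamedTuple):
    sender: str
    receiver: str
    name: str


class Par(NamedTuple):
    """Simplified 'par' fragment: its messages may be observed in any order."""
    messages: frozenset


Step = Union[Message, Par]


def conforms(model: List[Step], trace: List[Message]) -> bool:
    """Check that the observed trace matches the model step by step."""
    position = 0
    for step in model:
        if isinstance(step, Par):
            size = len(step.messages)
            window = trace[position:position + size]
            # The window must be a permutation of the parallel messages.
            if len(window) != size or set(window) != set(step.messages):
                return False
            position += size
        else:
            if position >= len(trace) or trace[position] != step:
                return False
            position += 1
    return position == len(trace)  # no unexpected trailing messages


# Hypothetical e-health scenario: a fall is detected and reported.
fall = Message("sensor", "phone", "fall_detected")
alert = Message("phone", "server", "alert")
notify = Message("server", "caregiver", "notify")
ack = Message("server", "phone", "ack")
scenario = [fall, alert, Par(frozenset({notify, ack}))]

print(conforms(scenario, [fall, alert, ack, notify]))  # True
print(conforms(scenario, [fall, notify, alert, ack]))  # False
```

The first trace is accepted because `notify` and `ack` sit inside the parallel fragment; the second is rejected because `notify` is observed before `alert`.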

3 RESULTS

3.1 Participants Characterization

Before drawing conclusions on the main questions of

this survey it is important realize the profile of the

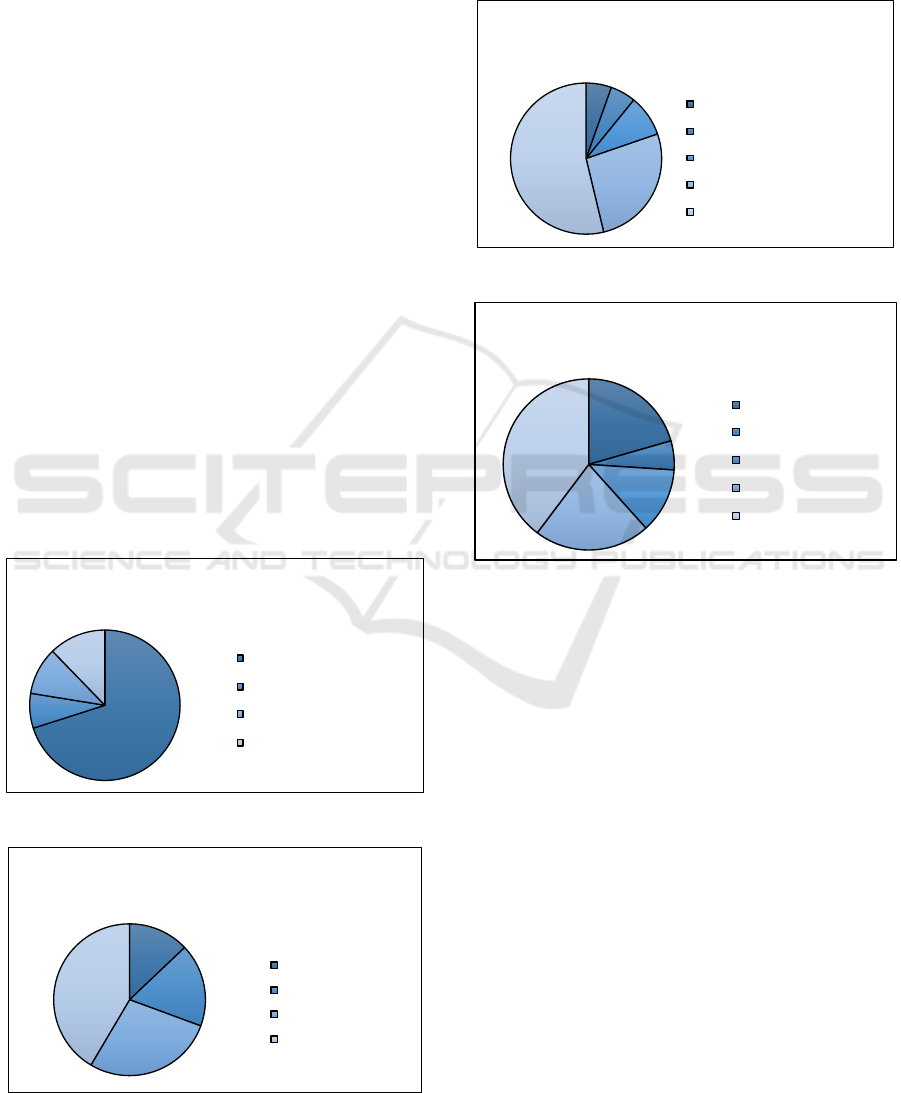

survey participants. The results show that most of the

people (70%) that responded this survey work in soft-

ware testing, verification & validation (see Figure 1)

and 41% are in the current position for more than five

years (see Figure 2).

[Pie chart: "Which is your main responsibility in your current position?" Legend: Software testing, verification & validation; Software developer, architect or analyst; Project manager; Others. Values shown: 70%, 8%, 10%, 12%.]

Figure 1: Current Position.

[Pie chart: "How long have you been working in your current position?" Legend: Less than 1 year; Between 1 and 2 years; Between 2 and 5 years; More than 5 years. Values shown: 13%, 18%, 28%, 41%.]

Figure 2: Time in Current Position.

Regarding experience in software testing, the results show (see Figures 3 and 4) that the majority of the survey participants have more than 5 years of experience in software testing in general and that 40% have more than 5 years of experience with DHS.

[Pie chart: "How long have you been working with software testing?" Legend: I've never worked with software testing; Less than 1 year; Between 1 and 2 years; Between 2 and 5 years; More than 5 years. Values shown: 5%, 5%, 9%, 27%, 54%.]

Figure 3: Time in Software Testing.

[Pie chart: "How long have you been working with distributed and heterogeneous systems?" Legend: I've never worked; Less than 1 year; Between 1 and 2 years; Between 2 and 5 years; More than 5 years. Values shown: 21%, 5%, 12%, 22%, 40%.]

Figure 4: Time in Software Testing of DHS.

3.2 Company Characterization

The companies surveyed worked in a large range of

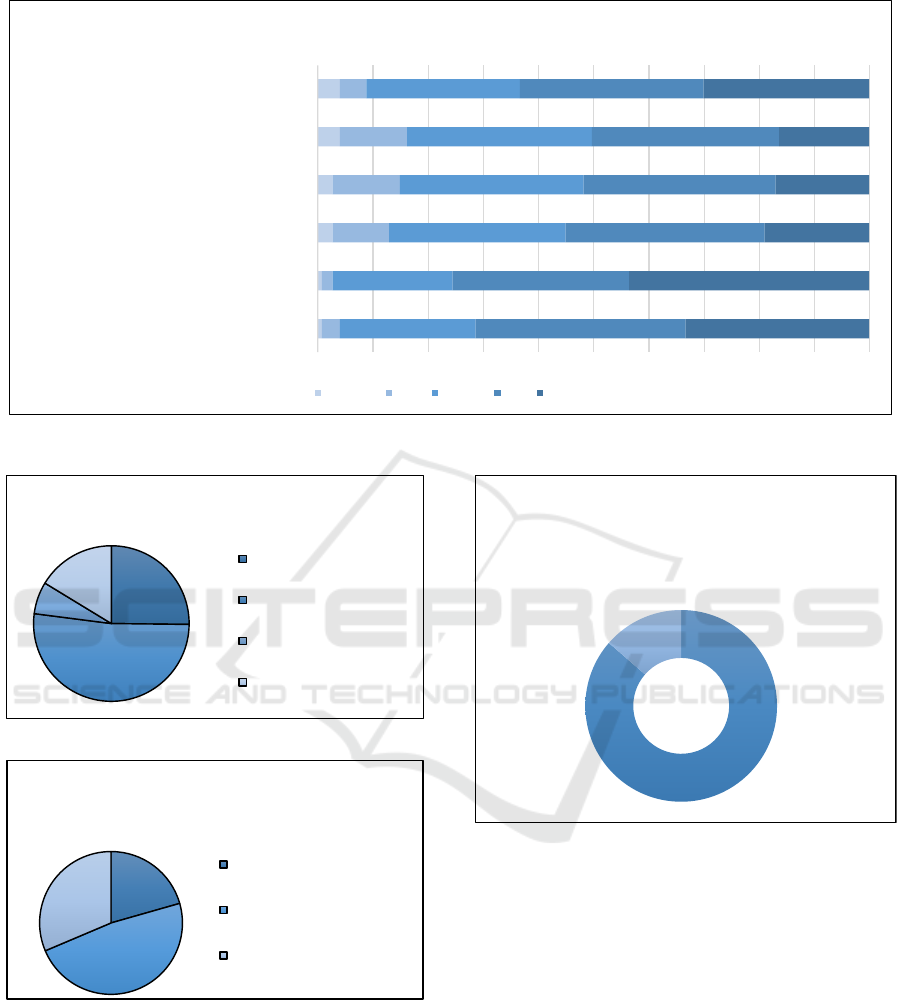

industry sectors. The results represented in Figure 5

identify more than 10 different industry sectors.

We also analyzed the size of the companies ac-

cording to their number of collaborators. Most of the

companies are large companies, 37% have between

100 and 1,000 collaborators and 45% have more than

1,000 collaborators (Figure 6).

The answers to ’In what role(s) does your com-

pany conducts software test, if any?’ show that half

of the companies performs tests to the software devel-

oped by themselves (Figure 7).

Regarding the types of test levels performed, we

realize from the answers (Figure 8) that the unit test-

ing level is the less performed and the other three

levels (integration, system and acceptance) are per-

formed with the same frequency.


[Bar chart: "In what industry is your company working?" [Multiple answers were allowed]. Categories: ICT - Products and Services; Education and Research; Government and Military; Healthcare; Transportation; Finance; Entertainment/Tourism; Energy; Telecommunication; Others. Horizontal axis: 0% to 40%.]

Figure 5: Industry Sectors.

[Pie chart: "What is the size of your company?" Legend: Less than 10 collaborators; Between 10 and 99 collaborators; Between 100 and 1000 collaborators; More than 1000 collaborators. Values shown: 1%, 17%, 37%, 45%.]

Figure 6: Company Size.

[Bar chart: "In what role(s) does your company conducts software test, if any?" [Multiple answers were allowed]. Categories: As developer; As customer/user; As test services provider (independent tester); As system integrator. Values shown: 50%, 44%, 38%, 31%. Horizontal axis: 0% to 60%.]

Figure 7: Company Roles.

[Bar chart: "Which software test levels are performed in your company, if any?" [Multiple answers were allowed]. Categories: Unit testing; Integration testing; System testing; Acceptance testing. Values shown: 68%, 78%, 77%, 78%. Horizontal axis: 60% to 80%.]

Figure 8: Test Levels.

3.3 Distributed and Heterogeneous Systems Testing

Focusing now on the main questions of this survey, specifically related to the testing of DHS, the answers to 'In what role(s) does your company conduct software tests (for DHS), if any?' show that the vast majority of the companies (90%) conduct tests for DHS in at least one role, with 42% of the companies performing tests for DHS developed by themselves (Figure 9).

[Bar chart: "In what role(s) does your company conduct software tests (for DHS), if any?" [Multiple answers were allowed]. Categories: As developer; As customer/user; As test services provider (independent tester); As system integrator; No option selected. Values shown: 42%, 36%, 31%, 27%, 10%. Horizontal axis: 0% to 50%.]

Figure 9: Test Roles DHS.

We also tried to understand which test levels are most commonly used in the testing of such systems. The responses obtained (Figure 10) show a higher emphasis on system testing (71%), followed by integration testing (65%). Only 8% of the respondents did not mention any test level for DHS.


[Bar chart: "About these systems, which software test levels are performed in your company, if any?" [Multiple answers were allowed]. Categories: Unit testing; Integration testing; System testing; Acceptance testing; No option selected. Values shown: 55%, 65%, 71%, 63%, 8%. Horizontal axis: 0% to 80%.]

Figure 10: Test Levels DHS.

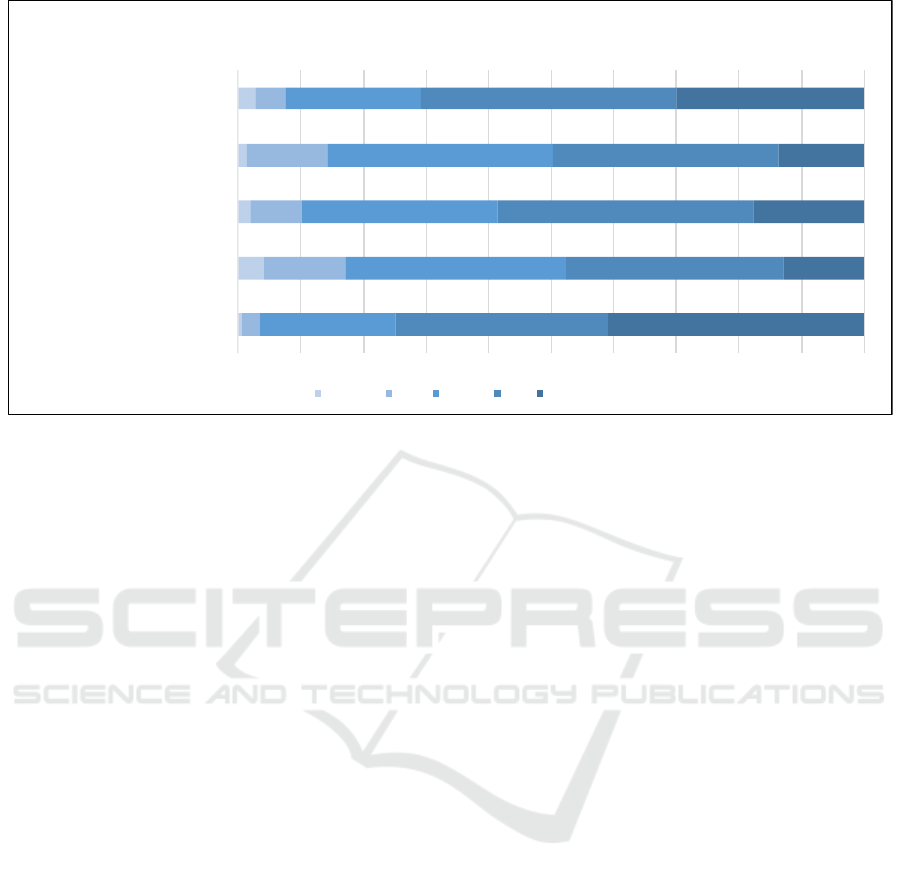

Regarding the most important features that need

to be tested in DHS, the results in Figure 11 show that

the feature that was considered the most important

to be tested was ’Interactions between components

of the system’ (with 76% of responses high or very

high), followed by ’Interactions between the system

and the environment’ (71%) and ’Multiple platforms’

(66%). All the features have been considered of ’very

high’ or ’high’ importance by a majority of respon-

dents (50% or more).

Regarding the level of test automation for DHS, the results presented in Figure 12 show that 75% of the respondents follow some automated process; however, only 16% report a fully automatic process (automatic test generation and execution), which is lower than the 25% who claim to perform only manual testing.

For the respondents who reported at least some automated process, we asked what kind of tool they use. This question helps us understand the level of effort required to automate the testing process. The results (Figure 13) show that only 31% use a commercial tool to automate the process, while the majority, 69%, use a tool developed in-house, whether or not it is reusable across different SUTs.

Regarding the desired features of a test automation solution for DHS, the results presented in Figure 15 show that the most important features (based on the percentage of responses high or very high) in an automated testing tool for DHS are 'Support for automatic test case execution' (75%) and 'Support for multiple platforms' (71%).

As a possible solution for testing DHS, we asked the participants whether they would find useful a tool that tests these systems using only a model of interactions (UML sequence diagram) as its input model. The results (Figure 14) show that 86% consider a tool with these characteristics useful.

3.4 Multivariate Analysis

For the questions specifically related to the opinion of the participants, a multivariate analysis was performed to determine whether the participants' responses depend on their current function ('Software testing, verification & validation' versus all the others).

The results of the chi-square test for independence show that there is no statistically significant association (at the 5% significance level, i.e., a 95% confidence level) between the current function ('Software testing, verification & validation' versus all others) and the answers to the questions shown in Figures 11, 14 or 15.
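For readers unfamiliar with the test, the sketch below computes the Pearson chi-square statistic for a contingency table and compares it with the critical value at the 5% significance level. The paper does not report the underlying contingency tables, so the counts used here are hypothetical: they only respect the reported totals (147 respondents, about 70% testers, about 86% answering "yes" to the question of Figure 14). No continuity correction is applied.

```python
from typing import Dict, List

# Upper critical values of the chi-square distribution at alpha = 0.05,
# indexed by degrees of freedom (standard table values).
CRITICAL_0_05: Dict[int, float] = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488}


def chi_square_statistic(table: List[List[int]]) -> float:
    """Pearson chi-square statistic for a table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if rows and columns were independent.
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed - expected) ** 2 / expected
    return statistic


def no_significant_association(table: List[List[int]]) -> bool:
    """True when independence is not rejected at the 5% level."""
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return chi_square_statistic(table) < CRITICAL_0_05[dof]


# HYPOTHETICAL counts: rows = testers / all others, columns = yes / no.
hypothetical_counts = [[89, 14], [37, 7]]
statistic = chi_square_statistic(hypothetical_counts)
print(round(statistic, 3), no_significant_association(hypothetical_counts))
```

With these illustrative counts the statistic stays far below the critical value for one degree of freedom (3.841), which is the kind of outcome summarized in the text as "no statistically significant association".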

4 DISCUSSION

4.1 Relevance of Respondents

The results presented in the previous section show that this survey reached its intended target audience, since the primary responsibility of 70% of the respondents is related to 'Software testing, verification & validation'. With regard to their experience, the results show that most respondents have not only been in their current position for several years, but have also worked with software testing in general and with DHS specifically. With respect to the type of companies, the results show that this survey covers companies from diverse activity sectors, including large companies (45% have more than 1,000 employees), which lends strong support to the conclusions reached.

The main conclusions we can draw from the results are organized below according to the initial research questions.

4.2 RQ1: How Relevant are DHS in the Software Testing Practice?

The results (Figure 9) show that the vast majority (approximately 90%) of the companies surveyed (all with software testing activities in general) conduct tests for DHS, in at least one role and at least one test level, hence confirming the high relevance of DHS in software testing practice.


[Stacked bar chart: "Please rate the degree of importance of testing each of the following features of distributed and heterogeneous systems:" Values in % of responses.]

| Feature | very small | small | medium | high | very high |
|---|---|---|---|---|---|
| Interactions between the system and the environment | 1 | 3 | 24 | 38 | 33 |
| Interactions between components of the system | 1 | 2 | 22 | 32 | 44 |
| Parallelism and concurrency | 3 | 10 | 32 | 36 | 19 |
| Time constraints | 3 | 12 | 33 | 35 | 17 |
| Non deterministic behaviors | 4 | 12 | 33 | 34 | 16 |
| Multiple platforms | 4 | 5 | 28 | 33 | 30 |

Figure 11: Features.

[Pie chart: "What is the level of test automation for these systems?" Legend: Only manual testing; Automatic test execution (with manual test scripting/coding); Automatic test generation (with manual execution); Automatic test generation and execution. Values shown: 25%, 52%, 7%, 16%.]

Figure 12: Automation Level.

[Pie chart: "If you selected "Automatic test ..." in the previous question, what type of tools are used?" Legend: Developed/adapted in-house tool, tailor-made for the SUT; Developed/adapted in-house tool, reusable for different systems under test (SUTs); Commercial off-the-shelf tool.]

Figure 13: Automation Tool.

4.3 RQ2: What are the Most Important Features to be Tested in DHS?

Regarding the most important features that need to be tested in DHS, the results in Figure 11 show that the feature considered the most important to be tested was 'Interactions between components of the system' (with 76% of responses high or very high), followed by 'Interactions between the system and the environment' (71%) and 'Multiple platforms' (66%).

Nevertheless, all the features surveyed were considered of high or very high importance by a majority of respondents (50% or more).

[Pie chart: "If there was a tool that could test a distributed and heterogeneous system using only a model of interactions (UML sequence diagram) as an entry model, would you find it useful?" Yes: 86%; No: 14%.]

Figure 14: New Tool.

4.4 RQ3: What is the Current Status of Test Automation and Tool Sourcing for Testing DHS?

The results show that the current level of test automation for DHS is still very low, and there is large room for improvement, since 25% of the companies in the survey claim that they only perform manual tests, against only 16% who claim to test DHS with a fully automatic process.

[Stacked bar chart: "Please rate the degree of importance of each of the following features of a test automation solution for distributed and heterogeneous systems:" Values in % of responses.]

| Feature | very small | small | medium | high | very high |
|---|---|---|---|---|---|
| Support for automatic test case execution | 1 | 3 | 22 | 34 | 41 |
| Support for automatic test case generation | 4 | 13 | 35 | 35 | 13 |
| Support for test coverage analysis | 2 | 8 | 31 | 41 | 18 |
| Support for automatic test stub generation | 1 | 13 | 36 | 36 | 14 |
| Support for multiple platforms | 3 | 5 | 22 | 41 | 30 |

Figure 15: Tool Features.

If we look at the companies that have some type of automation in their testing process, we see that automation requires a high effort in the creation or adaptation of their own tools, because only 31% of these companies claim to use a commercial tool to test these types of systems.

4.5 RQ4: What are the Most Desired Features in Test Automation Solutions for DHS?

Regarding the conclusions that can be drawn for future work, particularly for creating tools that can reduce the effort required to test DHS, Figure 15 shows that companies identify as key aspects of a tool for testing such systems the ability to automate test execution (75% of responses with high or very high importance) and the support for multiple platforms (71%).

Nevertheless, all the features surveyed were considered of medium, high or very high importance by a large majority of respondents (83% or more).

The comparison of the degree of importance attributed to automatic test case execution (96% of the responses mentioning a medium, high or very high importance in Figure 15) with the current status (68% of companies applying automatic test execution in Figure 12, i.e., 52% with manual test scripting plus 16% with automatic generation and execution) shows that there is a significant gap yet to be filled between the current status and the desired status of automatic test case execution.

The gap is even bigger regarding automatic test case generation, with 83% of the responses mentioning a medium, high or very high importance in Figure 15, and only 23% of the companies currently applying automatic test generation in Figure 12.
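The gap between current and desired status can be recomputed from the figures. The sketch below is a worked calculation under two assumptions: the current shares are read from the four categories of Figure 12, and the desired shares are the percentages of medium, high or very high importance quoted in the text for Figure 15. The dictionary keys are illustrative names.

```python
# Current automation level, in % of companies, as read from Figure 12.
current = {
    "only_manual": 25,
    "execution_with_manual_scripting": 52,
    "generation_with_manual_execution": 7,
    "generation_and_execution": 16,
}

# Share of responses rating the feature medium, high or very high (Figure 15),
# as quoted in the text.
desired = {"execution": 96, "generation": 83}

# A company applies a technique if it falls in any category that includes it.
applied = {
    "execution": current["execution_with_manual_scripting"]
    + current["generation_and_execution"],
    "generation": current["generation_with_manual_execution"]
    + current["generation_and_execution"],
}

gap = {feature: desired[feature] - applied[feature] for feature in desired}

for feature in desired:
    print(f"{feature}: applied {applied[feature]}%, "
          f"desired {desired[feature]}%, gap {gap[feature]} points")
```

Under this reading, the gap for automatic test case generation (60 percentage points) is roughly twice the gap for automatic test case execution (28 points).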

Figure 14 also shows that companies are highly receptive to a test tool that takes only a model of interactions as its input model for automatic test case generation and execution.

5 RELATED WORK

We found only one survey in the literature (Ghazi et al., 2015) that discusses some aspects related to the testing of heterogeneous systems. That survey explored, from the point of view of industry practitioners involved in heterogeneous system development, the usage and perceived usefulness of the testing techniques used for heterogeneous systems. To achieve this goal, the authors tried to answer two research questions:

• RQ1: Which testing techniques are used to evalu-

ate heterogeneous systems?

• RQ2: How do practitioners perceive the identified

techniques with respect to a set of outcome vari-

ables?

The authors concluded that the most frequently used technique is exploratory manual testing, followed by combinatorial and search-based testing, and that the most positively perceived technique for testing heterogeneous systems was manual exploratory testing. Our work has a different objective from that survey. While the main goal of Ghazi et al. was to identify testing techniques, our aim is to understand how distributed and heterogeneous systems are tested in companies, identifying which test levels are performed and what the automation levels are for testing these systems. The survey by Ghazi et al. also involved a much smaller number of participants (27).

As regards software testing in general, there are many surveys in the literature; however, as the main aim of our work is to analyze the state of the practice, we considered surveys carried out in industry by recognized standardization bodies such as ISTQB (ISTQB, 2016a). The most recent survey of this organization (ISTQB, 2016b), conducted with more than 3,000 people from 89 countries, has a different purpose from our work because it concerns software testing in general. Even so, it provides results that agree with those presented in this article, namely that there are still significant improvement opportunities in test automation (which that study considered the area with the highest improvement potential).

6 CONCLUSIONS

In order to assess the current state of the practice regarding the testing of DHS and identify opportunities and priorities for research and innovation initiatives, we conducted an exploratory survey that was answered by 147 software testing professionals who attended industry-oriented software testing conferences.

The survey allowed us to confirm the high relevance of DHS in software testing practice, confirm and prioritize the relevance of testing features characteristic of DHS, confirm the existence of a significant gap between the current and the desired status of test automation for DHS, and confirm and prioritize the relevance of test automation features for DHS. We expect the results presented in the paper to be of interest to researchers, tool vendors and service providers in this field.

As future work, we intend to develop techniques and tools to support the automatic generation and execution of test cases for DHS, based on UML sequence diagrams.

ACKNOWLEDGEMENTS

This research work was performed in the scope of the project NanoSTIMA. Project "NanoSTIMA: Macro-to-Nano Human Sensing: Towards Integrated Multimodal Health Monitoring and Analytics/NORTE-01-0145-FEDER-000016" is financed by the North Portugal Regional Operational Programme (NORTE 2020), under the PORTUGAL 2020 Partnership Agreement, and through the European Regional Development Fund (ERDF).

REFERENCES

AAL4ALL (2015). Ambient Assisted Living For All.

http://www.aal4all.org.

Boehm, B. (2011). Some Future Software Engineering Op-

portunities and Challenges. In Nanz, S., editor, The

Future of Software Engineering, pages 1–32. Springer

Berlin Heidelberg.

Dias Neto, A. C., Subramanyan, R., Vieira, M., and Travas-

sos, G. H. (2007). A Survey on Model-based Testing

Approaches: A Systematic Review. In Proceedings

of the 1st ACM International Workshop on Empiri-

cal Assessment of Software Engineering Languages

and Technologies: Held in Conjunction with the

22nd IEEE/ACM International Conference on Automated Software Engineering (ASE) 2007, WEASELTech ’07, pages 31–36, New York, NY, USA. ACM.

DoD (2008). Systems Engineering Guide for Systems of

Systems. Technical report, Office of the Deputy Under

Secretary of Defense for Acquisition and Technology,

Systems and Software Engineering Version 1.0.

Faria, J. and Paiva, A. (2014). A toolset for confor-

mance testing against UML sequence diagrams based

on event-driven colored Petri nets. International Jour-

nal on Software Tools for Technology Transfer, pages

1–20.

Ghazi, A. N., Petersen, K., and Börstler, J. (2015). Software

Quality. Software and Systems Quality in Distributed

and Mobile Environments: 7th International Confer-

ence, SWQD 2015, Vienna, Austria, January 20-23,

2015, Proceedings, chapter Heterogeneous Systems

Testing Techniques: An Exploratory Survey, pages

67–85. Springer International Publishing, Cham.

Hierons, R. M. (2014). Combining Centralised and Dis-

tributed Testing. ACM Trans. Softw. Eng. Methodol.,

24(1):5:1–5:29.

ISTQB (2016a). International Software Testing Qualifica-

tions Board. http://www.istqb.org/.

ISTQB (2016b). ISTQB Worldwide Software Testing Prac-

tices Report 2015-2016. Technical report.

Kim, B., Hwang, H. I., Park, T., Son, S. H., and Lee, I.

(2014). A layered approach for testing timing in the

model-based implementation. In DATE, pages 1–4.

IEEE.

Lima, B. and Faria, J. P. (2016). Software Technologies:

10th International Joint Conference, ICSOFT 2015,

Colmar, France, July 20-22, 2015, Revised Selected

Papers, chapter Automated Testing of Distributed and

Heterogeneous Systems Based on UML Sequence Di-

agrams, pages 380–396. Springer International Pub-

lishing, Cham.


OMG (2015). OMG Unified Modeling Language™

(OMG UML) Version 2.5, Superstructure. Technical

report, Object Management Group.

Petrenko, A. and Yevtushenko, N. (2011). Testing Software

and Systems: 23rd IFIP WG 6.1 International Con-

ference, ICTSS 2011, Paris, France, November 7-10,

2011. Proceedings, chapter Adaptive Testing of Deter-

ministic Implementations Specified by Nondetermin-

istic FSMs, pages 162–178. Springer Berlin Heidel-

berg, Berlin, Heidelberg.

Tassey, G. (2002). The Economic Impacts of Inadequate

Infrastructure for Software Testing. Technical report,

National Institute of Standards and Technology.

Torens, C. and Ebrecht, L. (2010). RemoteTest: A Frame-

work for Testing Distributed Systems. In Software En-

gineering Advances (ICSEA), 2010 Fifth International

Conference on, pages 441–446.

Utting, M. and Legeard, B. (2007). Practical Model-Based

Testing: A Tools Approach. Morgan Kaufmann Pub-

lishers Inc., San Francisco, CA, USA.

West, S., Nanz, S., and Meyer, B. (2012). Demonic test-

ing of concurrent programs. In Proceedings of the

14th International Conference on Formal Engineering

Methods: Formal Methods and Software Engineer-

ing, ICFEM’12, pages 478–493, Berlin, Heidelberg.

Springer-Verlag.

Wohlin, C., Höst, M., and Henningsson, K. (2003). Em-

pirical research methods in software engineering. In

Empirical methods and studies in software engineer-

ing, pages 7–23. Springer.

ICSOFT-EA 2016 - 11th International Conference on Software Engineering and Applications
