Gamepad Control for Industrial Robots

New Ideas for the Improvement of Existing Control Devices

Maximilian Wagner¹, Dennis Avdic² and Peter Heß²

¹ Nuremberg Campus of Technology, Nuremberg Institute of Technology, Fürther Straße 246b, 90429 Nuremberg, Germany

² Department of Mechanical Engineering and Building Services Engineering, Nuremberg Institute of Technology, Keßlerplatz 12, 90489 Nuremberg, Germany

Keywords: Gamepad, Industrial Robots, Robot Control Devices, Usability.

Abstract: In the scope of this work, a frequently used gaming device, the gamepad, is investigated as a control device for industrial robots in order to extract new ideas for the improvement of existing control devices. To this end, an approach for the integration of a gamepad control in a common industrial robot system is developed and implemented. The usability of the gamepad control is improved by adding a smartphone to the gamepad, which serves as a display to show information to the user. Finally, the developed gamepad control is compared with operation via a conventional control device.

1 INTRODUCTION

The sales of industrial robots reached the highest level ever recorded in 2015 (IFR, International Federation of Robotics, 2015). At the same time, modern products offer a lot of variety and diversity. As a result, small and varying batch sizes are coming into focus, and high flexibility becomes more important for industrial robot systems. This requires quick and easy programming of the robots in order to lower costs. For this purpose, an intuitive Human Machine Interface (HMI) for the robots is important, as it lowers the qualifications required of the user. In addition, an intuitive interface reduces the time inexperienced users need to get started with the robot.

Common control devices for industrial robots have hardly changed in the past few years. Usually, the user input is still done via buttons, whether they are physical or virtual. Alternative devices exist in the gaming industry. One of the most frequently used gaming devices is the gamepad. In the scope of this work, we investigate the gamepad as a control device for industrial robots to obtain new ideas for the improvement of existing devices.

This paper is structured as follows: Section 2 reviews the related work on control devices for industrial robots and on gamepads. The approach for the use of a gamepad as a control device for industrial robots is explained in Section 3. The implementation of this approach and a usability test of the gamepad control are presented in Section 4. Only initial trends are pointed out because the number of test candidates is currently low. Finally, Section 5 concludes the paper with a short summary and an outlook on future work.

2 RELATED WORK

This section reviews the related work on control devices for industrial robots. Furthermore, the gamepad is explained in detail.

2.1 Industrial Robot Control Devices

The commonly used control device for industrial robots is called Robot Teach Pendant (RTP). Almost every robot manufacturer offers such a control device included with the robot. The RTP varies from manufacturer to manufacturer, but most of the time the control is done through physical or virtual buttons. Some devices have an additional joystick, 3D or 6D mouse to achieve a more intuitive control of the robot. Most applications can be accomplished with an RTP. The usability can be improved by an extended interface, as shown by Dose and Dillmann (Dose and Dillmann, 2014). Usability tests have shown that this method can be used without expert knowledge in robotics and additionally reduces the setup time of a new task dramatically.


Wagner, M., Avdic, D. and Heß, P.

Gamepad Control for Industrial Robots - New Ideas for the Improvement of Existing Control Devices.

DOI: 10.5220/0005982703680373

In Proceedings of the 13th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2016) - Volume 2, pages 368-373

ISBN: 978-989-758-198-4

Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Another method for moving the robot is manual hand guidance. With this method, the program is taught by positioning the robot at the desired place by hand. Modern lightweight robots offer such a function, but it is not yet available for larger robots. For heavier robots, the movement can be supported by the robot motors, as shown by Colombo et al. (Colombo et al., 2006). The robot has a force and torque sensor that recognizes an external force and moves to the desired position accordingly.

Guiding a robot by vocal input has been realized by Pires (Pires, 2005). The user has a fixed set of commands to guide the industrial robot through tasks. The operator uses their voice to tell the robot when to stop, open or close the gripper, or start welding. The main advantage of this interface is the natural communication, since it is the same interface humans use to exchange information. Secondly, it is easy to change from one robot to another by simply saying the appropriate command. Furthermore, it reduces complexity as the set of existing commands is smaller.

Hand gesture and face recognition has gathered a lot of interest lately, and many different approaches to recognizing gestures exist. Some approaches utilize color information of camera images, as shown by Brèthes et al. (Brèthes et al., 2004) and by Malima et al. (Malima et al., 2006). The identification of body parts based on depth sequence data is considered by Liu and Fujimura (Liu and Fujimura, 2004). The main problem in the context of gesture recognition is gesture spotting, i.e. determining when a gesture command starts and ends.

Modern motion control devices like the Nintendo Wii Remote have also been used successfully for the guidance of an industrial robot, as shown by Neto et al. (Neto et al., 2010). Such a device gives the user an easy, wireless and intuitive means to control a robot without expert robot knowledge and to deal with common industrial tasks such as pick and place. This approach has been compared to the manual guidance of a robot; the comparison showed that manual guidance was faster in completing the given applications.

Some gamepad controls for robots have already been realized. Especially for mobile robots the gamepad is a popular control device, e.g. used by Caccamo et al. (Caccamo et al., 2015). Colombo et al. used a gamepad for an industrial robot arm, but neither the approach nor any further investigation is described (Colombo et al., 2006).

2.2 Gamepad

Gamepads were first developed within the video gaming industry (Cummings, 2007). The first gamepads consisted of a D-pad and two buttons. The D-pad is a plus-shaped button, which can be tilted in four (up, down, left and right) or eight (plus the diagonals) directions. Thus, it is ideal for simple movement controls. As the possibilities within games became more complex, the movement control within the game had to become easier and more intuitive. When video games started to become three-dimensional, the D-pad began to lose its importance. In order to give the user more control, the analog stick was introduced. Its sensitivity and intuitiveness as well as the possibility to choose between more orientations made it popular, and the analog stick became the dominant control for three-dimensional problems.

3 APPROACH

In this approach a gamepad is used for the control of an industrial robot. Usually, directly controlling the robot from an external device is not offered by the robot manufacturer. Thus, further components are necessary for the communication (cf. Figure 1). One part of the system is the robot arm connected with its controller. Furthermore, the gamepad is connected to the control computer. A wireless connection is preferred to improve the mobility of the user. In addition, there is a smartphone attached to the gamepad to show information to the user. It is used as a display and is also connected wirelessly to the control computer by Wi-Fi. The control computer and the robot controller are also connected to each other.

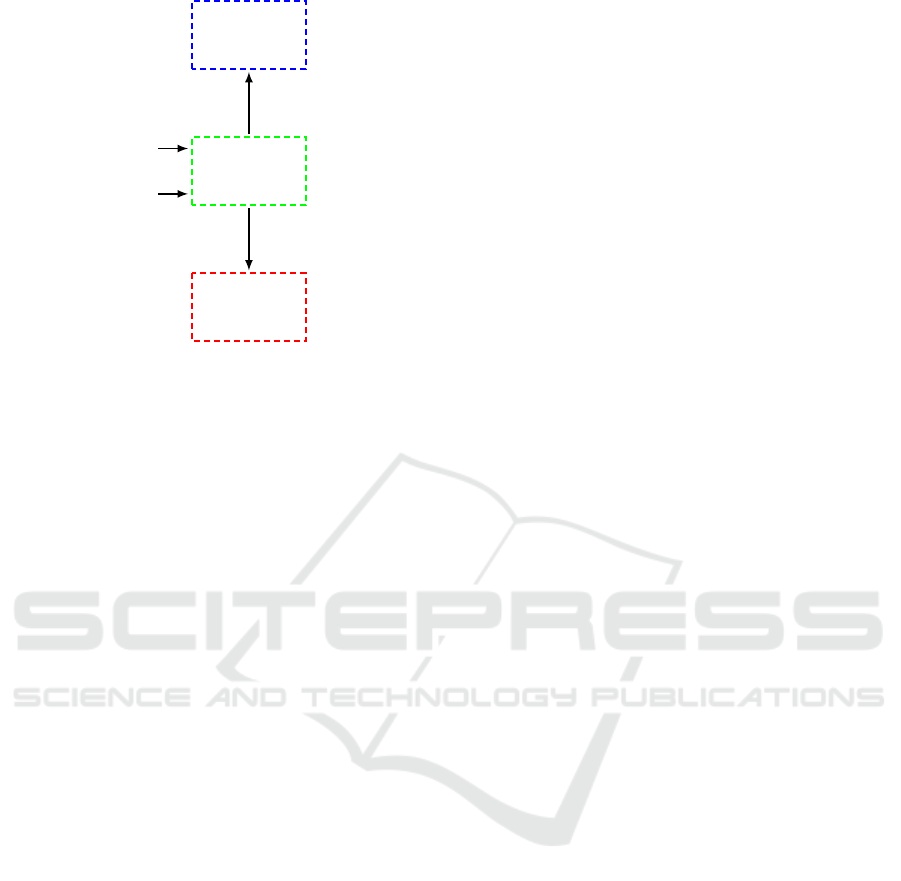

Figure 1: System overview for the gamepad control for industrial robots. Components shown: gamepad, smartphone (visualization app), control computer (control software), robot controller (interpreter program) and robot arm.

Figure 2: Software overview for the gamepad control for industrial robots. Data flows shown: gamepad state, settings file, visualization data and desired values, exchanged between the control software, the visualization app and the interpreter program.

The main software part of this approach runs on the control computer. The control software continuously receives the state of the gamepad (cf. Figure 2). All changes of the state, i.e. the pressing of a button or the deflection of an analog stick, are processed further. Similar to conventional robot control devices, a safety function is integrated in order to prevent unintentional inputs. Buttons can be used as release buttons, and a robot action is initiated only if these buttons are pressed. The resulting action for each input is defined in a settings file. Each line of the file contains a setting consisting of a button or axis parameter and a robot action parameter. The axis parameters additionally include a modifier to regulate the sensitivity. Thus, the settings can be changed quickly by editing the settings file. The desired values for the robot are computed from the combination of the gamepad state and the settings. They are sent to the robot controller by the control software. The interpreter program reads the information and executes the appropriate robot commands. To provide the user with information, the software sends the relevant data to the visualization app.
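The combination of gamepad state and settings described above can be illustrated with a minimal Python sketch. It is not the authors' C# implementation: the settings-file syntax (`input action [modifier]`), all input and action names, and the dead zone threshold are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Setting:
    """One settings line: a gamepad input mapped to a robot action."""
    input_name: str        # hypothetical button or axis name
    action: str            # hypothetical robot action parameter
    modifier: float = 1.0  # sensitivity factor, relevant for axes


def parse_settings(text):
    """Parse lines of the assumed form 'input action [modifier]'."""
    settings = []
    for line in text.splitlines():
        parts = line.split()
        if not parts or parts[0].startswith("#"):
            continue  # skip blank lines and comments
        modifier = float(parts[2]) if len(parts) > 2 else 1.0
        settings.append(Setting(parts[0], parts[1], modifier))
    return settings


def desired_values(state, settings, release_inputs, deadzone=0.1):
    """Combine gamepad state and settings into desired robot values.

    Nothing is returned unless every release input is pressed.
    The dead zone is an assumption, not taken from the paper.
    """
    if not all(state.get(name, 0.0) > 0.5 for name in release_inputs):
        return {}
    values = {}
    for s in settings:
        raw = state.get(s.input_name, 0.0)
        if abs(raw) < deadzone:
            continue  # ignore inputs that are (nearly) at rest
        values[s.action] = raw * s.modifier
    return values


SETTINGS_TEXT = """
# input         action        modifier
left_stick_x    move_x        50.0
left_stick_y    move_y        50.0
button_b        toggle_speed
"""

settings = parse_settings(SETTINGS_TEXT)
state = {"left_stick_x": 0.5, "left_stick_y": 0.05,
         "left_trigger": 1.0, "right_trigger": 1.0}
print(desired_values(state, settings, ["left_trigger", "right_trigger"]))
```

With both triggers held, only the sufficiently deflected x-axis produces a desired value; releasing either trigger yields an empty result, which mirrors the release-button behavior.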

Notheis et al. (Notheis et al., 2014) suggested that visual hints for the movement of robots were helpful for the participants. We adopted this idea and integrated a visualization intended to help the user understand the controls and to shorten the learning process for operating the industrial robot. Since an industrial robot can quickly cause damage to the equipment when it is used by an inexperienced user, we were looking for a way to avoid collisions. Most collisions and problems happen because of a lack of human-robot awareness. Drury et al. (Drury et al., 2003) described that many users collided with objects even though sensors indicated them. We assumed that this might be due to an abundance of input data, which might lead the user to involuntarily ignore certain information. Based on this assumption, the app is modified to show only the input options that are currently necessary to conduct a certain movement.

4 EXPERIMENTS AND RESULTS

In this section, the implementation of the approach described above is presented (cf. Section 4.1). It begins by outlining the hardware used. Furthermore, the software implementation is described in detail. Finally, the usability of the realized approach is examined (cf. Section 4.2).

4.1 Implementation

For the implementation of the approach, four main hardware components are necessary: a robot with its controller, a control computer, a smartphone and a gamepad. A KUKA KR 6 R900 sixx industrial robot arm is used as the robot. It has six axes and a separate robot controller. A software package for XML communication via Ethernet, offered by the robot manufacturer, is installed on the controller. The control computer has an Intel Core i7 CPU and 16 GB RAM and runs Windows 8.1. A Google LG Nexus 5 smartphone with Android 6.0.1 is used as a display for the user. It is fixed to the gamepad by a self-constructed adapter. A Microsoft Xbox 360 Wireless Controller for Windows is used as the gamepad. As the name indicates, it is a wireless controller; it is connected to the control computer by a USB transceiver. The gamepad is equipped with two analog sticks, one D-pad, two triggers and ten buttons. Two of these buttons are integrated in the analog sticks.

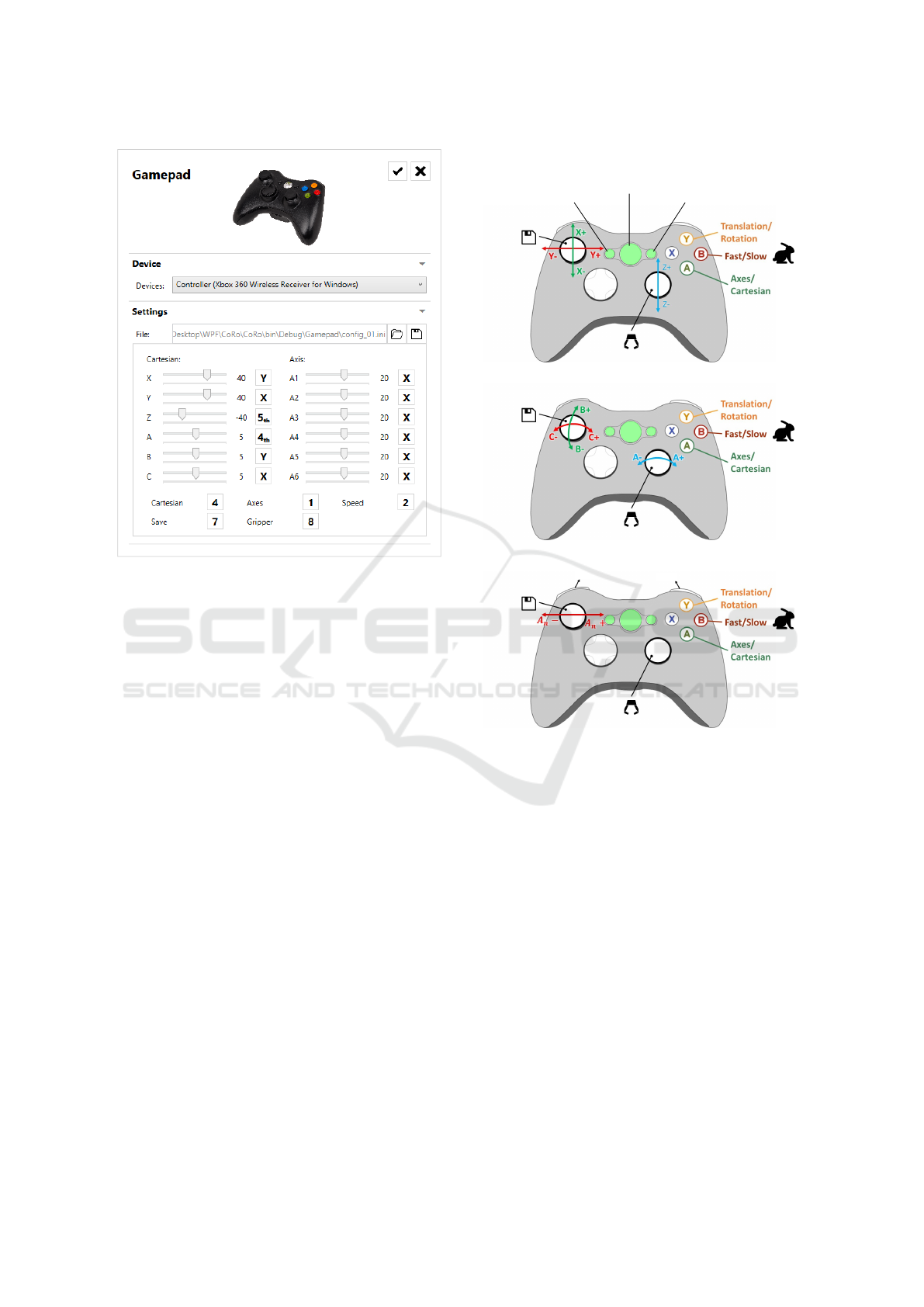

Figure 3: Settings window in the control software for the gamepad control.

The control software is realized with C# and the .NET Framework. All connections are initialized from this software. The open source framework SlimDX is utilized for the connection with the gamepad. For the network connections, the data is sent to a connected socket using the System.Net.Sockets namespace. A user interface for the configuration of the settings file is implemented in the software to simplify the configuration process (cf. Figure 3). Through this interface, the settings can be changed by clicking on the robot parameter and subsequently entering the accompanying gamepad button or axis. The modifier can be changed with a slider next to each axis setting. The specific settings selected for this implementation are explained at the end of this section. An XML file is utilized for the communication between the control computer and the robot. It is transferred continuously by the control software via Ethernet. It contains parameters for a target robot position and robot axes values, a gripper state and a mode parameter to select between Cartesian and axes movement. Inside the interpreter program, the XML file is read and robot actions are subsequently executed based on the parameters. This is also done continuously to ensure rapid execution.
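To make the content of such an XML message concrete, the following Python sketch builds and parses a message carrying the four kinds of parameters named above (target position, axes values, gripper state, mode). The element and attribute names (`RobotCommand`, `Position`, `Axes`, `Gripper`, `Mode`) are hypothetical; the paper does not show the actual schema used by the manufacturer's software package.

```python
import xml.etree.ElementTree as ET

POSITION_KEYS = ("X", "Y", "Z", "A", "B", "C")  # assumed Cartesian pose fields


def build_command(position, axes, gripper_closed, mode):
    """Serialize one command with hypothetical tag names."""
    if mode not in ("cartesian", "axes"):
        raise ValueError("mode must be 'cartesian' or 'axes'")
    root = ET.Element("RobotCommand")
    ET.SubElement(root, "Mode").text = mode
    pos = ET.SubElement(root, "Position")
    for key, value in zip(POSITION_KEYS, position):
        pos.set(key, f"{value:.3f}")
    ax = ET.SubElement(root, "Axes")
    for index, value in enumerate(axes, start=1):
        ax.set(f"A{index}", f"{value:.3f}")
    ET.SubElement(root, "Gripper").text = "1" if gripper_closed else "0"
    return ET.tostring(root, encoding="unicode")


def parse_command(xml_text):
    """Read the message back, as an interpreter program might do."""
    root = ET.fromstring(xml_text)
    pos = root.find("Position")
    ax = root.find("Axes")
    return {
        "mode": root.findtext("Mode"),
        "position": [float(pos.get(key)) for key in POSITION_KEYS],
        "axes": [float(ax.get(f"A{i}")) for i in range(1, len(ax.attrib) + 1)],
        "gripper_closed": root.findtext("Gripper") == "1",
    }


message = build_command(
    position=[500.0, -500.0, 0.0, 0.0, 90.0, 0.0],
    axes=[0.0, -90.0, 90.0, 0.0, 0.0, 0.0],
    gripper_closed=True,
    mode="cartesian",
)
print(message)
print(parse_command(message))
```

In the described system, a message of this kind would be sent repeatedly over the Ethernet socket, so the receiver always acts on the most recent desired values.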

Figure 4: Different modes in the visualization app: (a) translation mode, (b) rotation mode, (c) axes mode. Colored areas indicate the gamepad connection state, the safety button state and the robot connection state; in axes mode, the selected axis index n can be changed to n − 1 or n + 1.

The main part of the visualization app is an image of the gamepad (cf. Figure 4). It contains information about the action connected with each button. Depending on the selected mode, it also contains information about the actions connected with the analog sticks. Three modes are defined: two Cartesian modes (translation and rotation) and an axes mode. The Y-button switches between the two Cartesian modes. The A-button switches between the Cartesian modes and the axes mode. The selected axis can be changed with the two buttons on the top of the gamepad: the left button decreases the axis index by one and the right button increases it by one. Two speed levels (fast and slow) are implemented to regulate the movement speed, and the B-button switches between them. The two speed levels are visualized by a turtle and a rabbit icon to make them easier to perceive. Furthermore, the states of the connections between robot controller and control computer as well as between gamepad and control computer are visualized by colored areas. Thus, the user can easily check the connection states of the system. In addition, the safety button state is visualized in the same way to give feedback when a safety button is pressed. The two triggers are used as safety buttons. They need to be pressed to achieve any kind of action. The gripper can be opened and closed by pressing the right analog stick. A robot position is added to a program by pressing the left analog stick. We considered using the D-pad as well but decided against it, since Cummings suggests that a D-pad performs worse for 3D tasks (Cummings, 2007).
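The button assignments above amount to a small state machine, sketched here in Python. Several details are assumptions because the paper does not specify them: the initial state, that the axis index is clamped to 1..6 rather than wrapping, that the A-button returns to the last used Cartesian mode, that the Y-button and the top buttons only act in their respective modes, and the button labels `LB` and `RB` for the two top buttons.

```python
from dataclasses import dataclass

NUM_AXES = 6  # the robot used in the paper has six axes


@dataclass
class ControlState:
    mode: str = "translation"            # "translation", "rotation" or "axes"
    last_cartesian: str = "translation"  # Cartesian mode to return to (assumed)
    axis: int = 1                        # selected axis index in axes mode
    fast: bool = False                   # False = slow (turtle), True = fast (rabbit)


def on_button(state, button):
    """Update the control state for one button press."""
    if button == "Y" and state.mode != "axes":
        # Toggle between the two Cartesian modes.
        state.mode = "rotation" if state.mode == "translation" else "translation"
        state.last_cartesian = state.mode
    elif button == "A":
        # Toggle between Cartesian control and axes control.
        state.mode = state.last_cartesian if state.mode == "axes" else "axes"
    elif button == "LB" and state.mode == "axes":
        state.axis = max(1, state.axis - 1)         # clamp at the first axis
    elif button == "RB" and state.mode == "axes":
        state.axis = min(NUM_AXES, state.axis + 1)  # clamp at the last axis
    elif button == "B":
        state.fast = not state.fast                 # toggle the speed level
    return state


state = ControlState()
for pressed in ["Y", "A", "RB", "RB", "B"]:
    on_button(state, pressed)
print(state)
```

Keeping the mode logic in one place like this also gives the visualization a single source of truth for which inputs to display in each mode.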

4.2 Usability Test

With the implemented gamepad control for an industrial robot arm, a usability test is designed. For this purpose, two tasks are defined which represent frequent sequences in industrial robot applications. The first task is a simple pick and place task (cf. Figure 5). An object has to be moved from a position $p_1 = (500, -500, 0)^T$ to a position $p_2 = (500, 500, 0)^T$; all units are in mm.

Figure 5: First task for the usability test of the gamepad control for industrial robots. The sketch marks the positions $p_1$ and $p_2$, the robot base, the coordinate axes x, y, z and the dimensions 1000, 300 and 500.

On the way, an obstacle at $y = 0$ mm with a height of 300 mm must be cleared. The two positions are marked by bars which support the positioning. Between these bars the object has a clearance of 1.5 mm. The exact path points are not specified. For this task, a gripper that fits the moved object is mounted on the robot arm.

For the second task, the robot has to be moved along an s-shaped path (cf. Figure 6). The linear part of the path has a length of 300 mm in y-direction. Neighboring linear parts are linked by an arc with a radius of 50 mm. A pen is attached to the robot arm to make the path visible by drawing on a plane. All points of the path are marked by a circle with 5 mm radius. Before the first position and after the last position of the path, the tool has to be moved up in z-direction to create a distance between the tool and the plane.

Figure 6: Second task for the usability test of the gamepad control for industrial robots. The sketch marks the path points $p_1$ to $p_8$, the coordinate axes x, y, z and the dimensions 300 and 100.

These two tasks have so far been performed with the conventional RTP and with the gamepad control by ten test users. The time required for each performed task is recorded. To reduce errors caused by misunderstanding the task, each task is done twice with each of the devices. Thus, eight time values result for each test user, four with the RTP ($t_P$) and four with the gamepad ($t_G$). The mean values over all users are summarized in Table 1. Lower average times are achieved with the gamepad control. Because the difference between first and second attempt is smaller, the gamepad appears to be more intuitive to learn. In addition, user data about age, experience and perceived usability is documented. Because of the above-mentioned low number of test candidates, a more detailed evaluation is not yet meaningful.
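The two observations above (lower mean times with the gamepad, and a smaller gap between first and second attempt) can be checked directly from the mean values in Table 1. The short Python sketch below only performs this arithmetic; the dictionary layout and function names are illustrative choices, not part of the original evaluation.

```python
# Mean times from Table 1 as (RTP, gamepad), given in min:s.
MEAN_TIMES = {
    ("pick and place", 1): ("7:57", "5:29"),
    ("pick and place", 2): ("5:27", "4:16"),
    ("path", 1): ("7:11", "4:38"),
    ("path", 2): ("5:45", "4:07"),
}


def to_seconds(text):
    """Convert a 'min:s' string to seconds."""
    minutes, seconds = text.split(":")
    return int(minutes) * 60 + int(seconds)


def summarize(task):
    """Differences in seconds between attempts and between devices."""
    rtp_1, pad_1 = (to_seconds(t) for t in MEAN_TIMES[(task, 1)])
    rtp_2, pad_2 = (to_seconds(t) for t in MEAN_TIMES[(task, 2)])
    return {
        "rtp_gain": rtp_1 - rtp_2,          # improvement from attempt 1 to 2, RTP
        "gamepad_gain": pad_1 - pad_2,      # improvement from attempt 1 to 2, gamepad
        "saving_attempt_1": rtp_1 - pad_1,  # gamepad advantage on attempt 1
        "saving_attempt_2": rtp_2 - pad_2,  # gamepad advantage on attempt 2
    }


for task in ("pick and place", "path"):
    print(task, summarize(task))
```

For the pick and place task, the mean time drops by 150 s between attempts with the RTP but only by 73 s with the gamepad; for the path task, the corresponding values are 86 s and 31 s. These are differences of means over ten users only, so they indicate a trend rather than a statistically tested result.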

5 CONCLUSIONS

In this paper we have developed software that reads inputs from a common gamepad device, translates these inputs and forwards them to the robot controller, which commands the movement of an industrial robot. In order to simplify the guiding process and to reduce the training time of the user, we have integrated an easy and self-explanatory interface that includes only the necessary functionalities. The method of robot manipulation with a gamepad is tested in two different tasks. Every user had to perform every task twice with the RTP and twice with the gamepad. In the first tests, lower times are achieved with the gamepad control. In addition, all users strongly preferred the gamepad as a control device over the RTP.

From this we are inclined to suggest that the gamepad is more intuitive to users than the RTP. However, due to the small number of test users, no valid conclusion can be drawn yet. Thus, the usability test should be extended to more users of different ages and levels of experience to determine whether the gamepad is faster and more intuitive than the RTP. In addition, the hardware could be improved by constructing a smartphone adapter that places the center of mass in the middle of the gamepad and not in front of it. Thus, the handling of the device could be improved.

Table 1: Mean time values for the usability test tasks.

| Task | Attempt | $t_P$ (min:s) | $t_G$ (min:s) |
|---|---|---|---|
| Pick and place | 1 | 7:57 | 5:29 |
| Pick and place | 2 | 5:27 | 4:16 |
| Path | 1 | 7:11 | 4:38 |
| Path | 2 | 5:45 | 4:07 |

REFERENCES

Brèthes, L., Menezes, P., Lerasle, F., and Hayet, J. (2004). Face tracking and hand gesture recognition for human-robot interaction. In Proceedings of the International Conference on Robotics and Automation (ICRA 2004), volume 2, pages 1901–1906. IEEE.

Caccamo, S., Parasuraman, R., Båberg, F., and Ögren, P. (2015). Extending a UGV teleoperation FLC interface with wireless network connectivity information. In Proceedings of the International Conference on Intelligent Robots and Systems (IROS 2015), pages 4305–4312. IEEE.

Colombo, D., Dallefrate, D., and Tosatti, L. M. (2006). PC based control systems for compliance control and intuitive programming of industrial robots. In Proceedings of the International Symposium on Robotics (ISR 2006), Munich, Germany. VDE-Verlag.

Cummings, A. H. (2007). The evolution of game controllers and control schemes and their effect on their games. In Proceedings of the Interactive Multimedia Conference. University of Southampton.

Dose, S. and Dillmann, R. (2014). Eine intuitive Mensch-Maschine-Schnittstelle für die automatisierte Kleinserienmontage. Shaker Verlag, Herzogenrath, Germany.

Drury, J. L., Scholtz, J., and Yanco, H. A. (2003). Awareness in human-robot interactions. In Proceedings of the International Conference on Systems, Man and Cybernetics (SMC 2003), volume 1, pages 912–918. IEEE.

IFR, International Federation of Robotics (2015). World Robotics 2015 Industrial Robots. VDMA-Verlag, Frankfurt, Germany.

Liu, X. and Fujimura, K. (2004). Hand gesture recognition using depth data. In Proceedings of the International Conference on Automatic Face and Gesture Recognition, pages 529–534. IEEE.

Malima, A., Özgür, E., and Cetin, M. (2006). A fast algorithm for vision-based hand gesture recognition for robot control. In Signal Processing and Communications Applications, pages 1–4. IEEE.

Neto, P., Pires, J., and Moreira, A. P. (2010). High-level programming and control for industrial robotics: using a hand-held accelerometer-based input device for gesture and posture recognition. In Industrial Robot: An International Journal, volume 37, Bingley, United Kingdom. Emerald Group Publishing Limited.

Notheis, S., Hein, B., and Wörn, H. (2014). Evaluation of a method for intuitive telemanipulation based on view-dependent mapping and inhibition of movements. In Proceedings of the International Conference on Robotics & Automation (ICRA 2014), pages 5965–5970. IEEE.

Pires, J. (2005). Robot-by-voice: Experiments on commanding an industrial robot using the human voice. In Industrial Robot: An International Journal, volume 32, Bingley, United Kingdom. Emerald Group Publishing Limited.
