A Lightweight and High Performance Remote Procedure Call Framework for Cross Platform Communication

Hakan Bagci and Ahmet Kara

Tubitak Bilgem Iltaren, Ankara, Turkey

Keywords:

Remote Procedure Call, High Performance Computing, Cross Platform Communication.

Abstract:

Remote procedure calls (RPC) have been widely used for building distributed systems for about 40 years. There are several RPC implementations addressing different purposes. Some RPC mechanisms are general-purpose systems that provide a number of calling patterns for functionality and therefore do not emphasize performance. On the other hand, a small number of RPC mechanisms are implemented with performance as the main concern. Such RPC implementations concentrate on reducing the size of the transferred RPC messages. In this paper, we propose a novel lightweight and high performance RPC mechanism (HPRPC) that uses our own high performance data serializer. We compare our RPC system's performance with a well-known RPC implementation, gRPC, that addresses both providing various calling patterns and reducing the size of the RPC messages. The experiment results clearly indicate that HPRPC performs better than gRPC in terms of communication overhead. Therefore, we propose our RPC mechanism as a suitable candidate for high performance and real-time systems.

1 INTRODUCTION

RPC enables users to make a call to a remote procedure that resides in the address space of another process (Corbin, 2012). This process could be running on the same machine or on another machine on the network. RPC mechanisms are widely used in building distributed systems because they reduce the complexity of the system and the development cost (Kim et al., 2007). The main goal of an RPC is to make remote procedure calls transparent to users. In other words, it allows users to make remote procedure calls just like local procedure calls.

The idea of RPC was first discussed in 1976 (Nelson, 1981). Nelson studied the design possibilities for RPCs. After Nelson, several RPC implementations were developed, such as Courier RPC (Xerox, 1981), Cedar RPC (Birrell and Nelson, 1984) and SunRPC (Sun Microsystems, 1988; Srinivasan, 1995). SunRPC became very popular on Unix systems and was later ported to Solaris, Linux and Windows. SunRPC is widely used in several applications, such as file sharing and distributed systems (Ming et al., 2011).

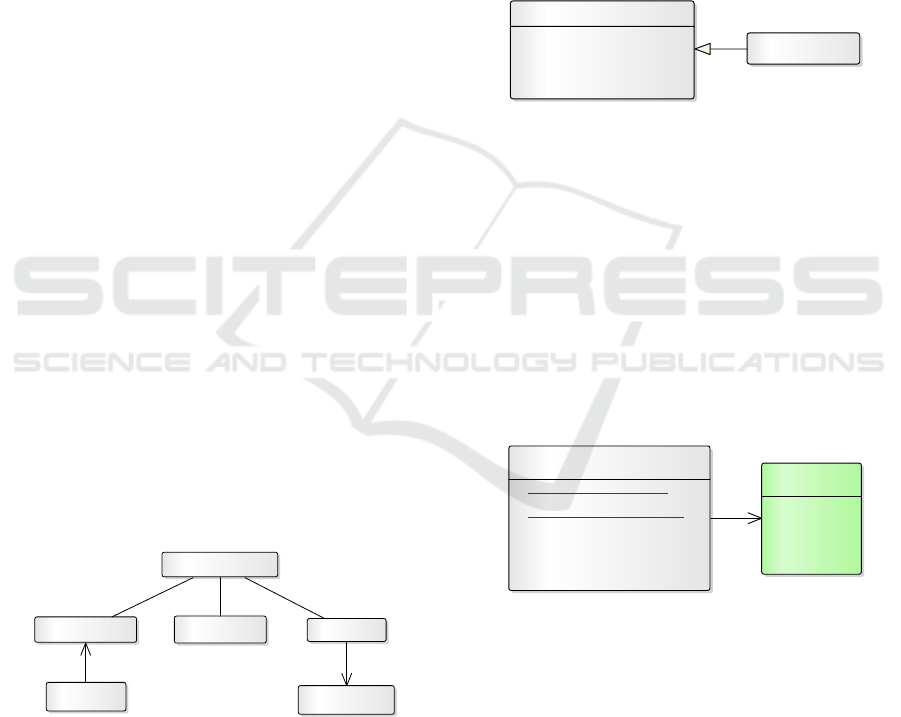

An RPC mechanism is basically a client-server model (Ming et al., 2011). The client initiates an RPC request that contains the arguments of the remote procedure through a proxy. The request is then transferred to a known remote server. After the server receives the request, it delegates the request to a related service that contains the actual procedure. The service then executes the procedure using the received arguments and produces a result. The server sends this result back to the client. This process is illustrated in Figure 1.

Figure 1: RPC Client Server Communication. (Diagram labels: Client with Local Procedure and Proxy; Server with Service and Remote Procedure; parameters are sent to the server and the result is sent back.)

One of the major concerns in high performance computing systems and real-time systems is to reduce the size of data transfers. Therefore, a system that wants to make a remote procedure call needs a lightweight RPC mechanism with minimal overhead. Furthermore, most RPC mechanisms operate through networks, hence it is important to reduce the size of the RPC packets. Several research works have focused on reducing the overhead of RPC mechanisms (Bershad et al., 1990; Satyanarayanan et al., 1991).

Bagci, H. and Kara, A.

A Lightweight and High Performance Remote Procedure Call Framework for Cross Platform Communication.

DOI: 10.5220/0005931201170124

In Proceedings of the 11th International Joint Conference on Software Technologies (ICSOFT 2016) - Volume 1: ICSOFT-EA, pages 117-124

ISBN: 978-989-758-194-6

Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Other RPC mechanisms focus on providing different types of calling patterns such as asynchronous and parallel remote procedure calls (Satyanarayanan and Siegel, 1990; Ananda et al., 1991). However, increasing the number of calling patterns brings extra overhead to the system. The size of the RPC packets increases as new high-level features are added to the RPC mechanism, because the amount of protocol control data grows.

gRPC is a language-neutral, platform-neutral, open source RPC mechanism initially developed at Google (Google, 2015b). It employs protocol buffers (Protobuf) (Google, 2015a), Google's mature open source mechanism, for structured data serialization. gRPC aims to minimize data transfer overhead while providing several calling patterns. gRPC is a general-purpose RPC mechanism and thus has several high-level features, which bring extra overhead in data transfer. For example, an identifier is transferred to the server for each parameter. Therefore, it is not suitable for systems that aim for minimal data transfer overhead.

In this paper, we introduce a lightweight and high performance remote procedure call mechanism, namely High Performance Remote Procedure Call (HPRPC), that aims to minimize data transfer overhead. We employ our own structured data serialization mechanism, named Kodosis. Kodosis is a code generator that enables high performance data serialization. The main contributions of our work are given below:

• A novel lightweight and high performance RPC mechanism is proposed.

• A code generator that enables high performance data serialization is introduced.

• A comparison between the performance of our RPC mechanism and that of gRPC is given, which demonstrates that our RPC mechanism performs better than gRPC in terms of data transfer overhead.

The rest of the paper is organized as follows. In the next section, the research related to RPC mechanisms is briefly explained. In Section 3, we provide the details of HPRPC. The experiment results are given in Section 4. Finally, we conclude the paper with some discussions.

2 RELATED WORK

RPC mechanisms have been widely used for building distributed systems since the mid-1970s. There are several RPC implementations in the literature. In this section, we briefly describe the RPC mechanisms that are related to our work.

XML-RPC (UserLand Software Inc., 2015) enables remote procedure calls using HTTP as the transport protocol and XML as the serialization technique for exchanging and processing data (Jagannadham et al., 2007). Since HTTP is available in every kind of programming environment and operating system, and XML is a commonly used data exchange language, it is easy to adapt XML-RPC to any given platform. XML-RPC is a good candidate for the integration of multiple computing environments. However, it is not good for sharing complex data structures directly (Laurent et al., 2001), because HTTP and XML bring extra communication overhead. In (Allman, 2003), the author shows that the network performance of XML-RPC is lower than that of java.net due to the increased size of XML-RPC packets and the overhead of encoding/decoding XML.

Simple Object Access Protocol (SOAP) (W3C, 2015) is a widely used protocol for message exchange on the Web. Similar to XML-RPC, SOAP generally employs HTTP as the transport protocol and XML as the data exchange language. While XML-RPC is simpler and easier to understand, SOAP is more structured and stable. SOAP has higher communication overhead than XML-RPC because it adds further information to the messages that are to be transferred.

In (Kim et al., 2007), the authors present a flexible user-level RPC system, named FlexRPC, for developing high performance cluster file systems. The FlexRPC system dynamically changes the number of worker threads according to the request rate. It provides client-side thread-safety and supports a multithreaded RPC server. Moreover, it supports various calling patterns and two major transport protocols, TCP and UDP. However, these extra capabilities of FlexRPC could cause additional processing and communication overhead.

In recent years, RPC mechanisms have started to be used in the context of high-performance computing (HPC). Mercury (Soumagne et al., 2013) is an RPC system specifically designed for HPC systems. It provides an asynchronous RPC interface and allows transferring large amounts of data. It does not implement higher-level features such as multithreaded execution, request aggregation and pipelining operations. The network implementation of Mercury is abstracted, which allows easy porting to future systems as well as efficient use of existing transport mechanisms.

ICSOFT-EA 2016 - 11th International Conference on Software Engineering and Applications

gRPC (Google, 2015b) is a recent and widely used RPC mechanism that addresses both issues: providing various calling patterns and reducing the size of the RPC messages. It uses Protobuf (Google, 2015a) for data serialization. Protobuf aims to minimize the amount of data to be transferred. Since gRPC is a recent and well-known RPC framework and Protobuf is a mature serialization mechanism, we compare our HPRPC system's performance with gRPC. The comparison results are presented in Section 4.

3 PROPOSED WORK

The High Performance Remote Procedure Call (HPRPC) framework is designed with three main objectives:

• Lightweight & simple architecture

• High performance operation

• Cross-Platform support

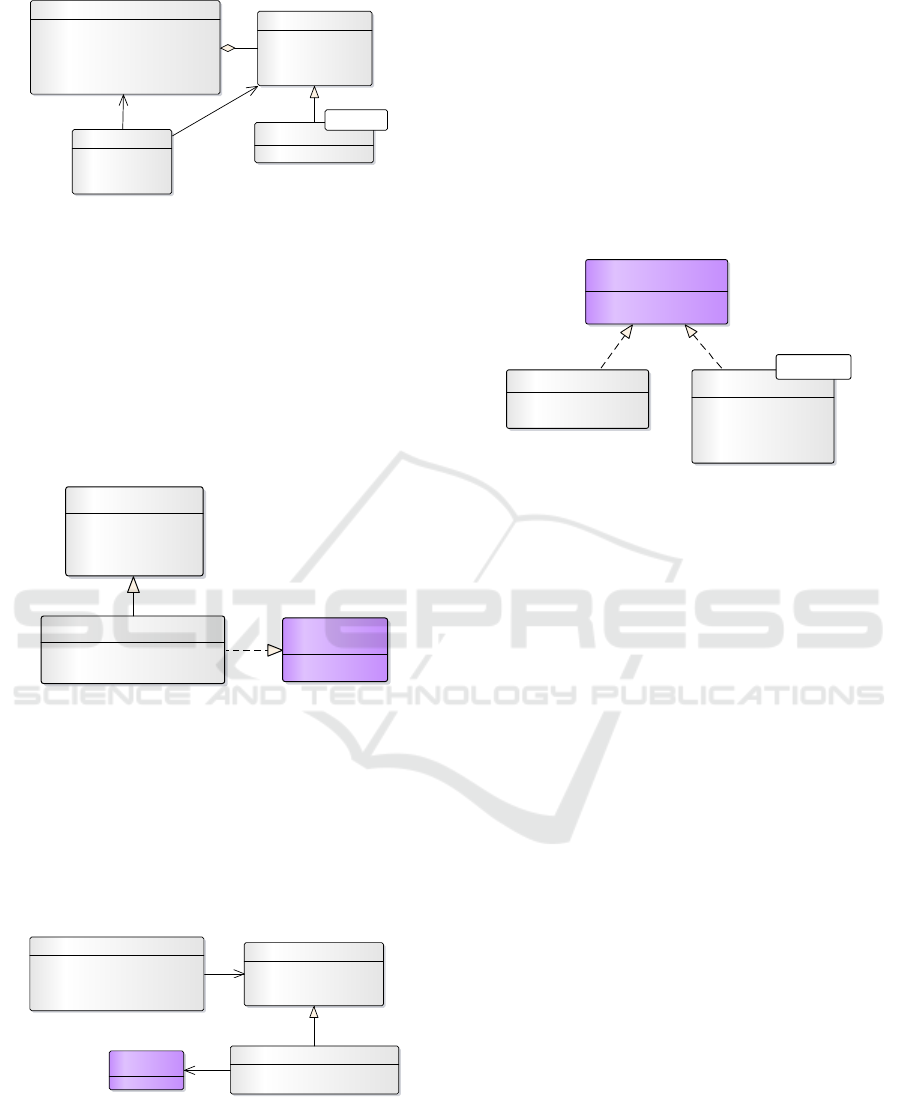

3.1 Architecture

The design of HPRPC is inspired by Protobuf-Remote (Google, 2015c), but it is implemented completely in our research group with our objectives and experiences in mind. It relies on three main components and one controller (Figure 2). RpcChannel is the communication layer that is responsible for the transportation of data. RpcClient is responsible for sending client requests on the channel and RpcServer is responsible for handling client requests incoming from the channel. RpcController, on the other hand, is the main controller class that directs messages of the client/server to/from the channel. We describe the capabilities of these classes in the following sections.

Figure 2: HPRPC Framework Overview. (Diagram components: RpcController, RpcServer, RpcClient, RpcChannel, RpcService, RpcProxy.)

3.1.1 Channel

RpcChannel implements a duplex transport mechanism to send/receive messages (Figure 3). The client or server initiates a request by calling the StartCall method, which then requests an RpcHeader that identifies the message and returns a BinaryWriter object to transport the parameters. The caller serializes the method parameters/return value to the BinaryWriter object and calls the EndCall method to finish the remote procedure call. On the other side, the receiving RpcChannel deserializes the RpcHeader structure and sends it to the controller with a BinaryReader object in order to read the parameters/return value. The controller invokes the client or server message handling methods according to this RpcHeader information.

Currently, RpcChannel is implemented only for the TCP/IP communication layer. However, the architecture allows other implementations, such as pipes or shared memory mechanisms.

Figure 3: RpcChannel Class. (Class diagram: RpcChannel with members + RpcChannel(RpcController), + StartSend(RpcHeader): BinaryWriter, + EndSend(): void and property + Controller(): RpcController; TcpRpcChannel is shown with it.)

RpcHeader is a simple struct that consists of four fields (Figure 4):

• A unique id that identifies the call

• Type of message that identifies the payload

• Type of service

• Type of called procedure

The header struct specifies its serialization/deserialization methods, which use a 4-byte preamble to protect message consistency. If the previous message was not consumed properly, the preamble check throws an exception to prevent unexpected failures.

Figure 4: RpcHeader Struct. (Class diagram: «struct» RpcHeader with members + RpcHeader(int, MessageType, int, int), + Read(BinaryReader): RpcHeader, + Write(BinaryWriter, RpcHeader): void and properties + Id(): int, + MsgType(): MessageType, + ProcType(): int, + SrvType(): int; «enumeration» MessageType with values None = 0, Call = 1, Result = 2, VoidResult = 3, Error = 4, CloseChannel = 5.)
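The preamble check described for RpcHeader can be sketched in C++ as follows. This is a minimal illustration and not the HPRPC implementation: the preamble value kPreamble, the field order on the wire, the helper names put/get/writeHeader/readHeader and the use of a byte vector in place of BinaryWriter/BinaryReader are all our assumptions. Values are copied in host byte order, which matches the homogeneous-endpoint assumption of Section 3.2.1.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <stdexcept>
#include <vector>

// Message kinds, mirroring the MessageType enumeration of Figure 4.
enum class MessageType : std::int32_t {
    None = 0, Call = 1, Result = 2, VoidResult = 3, Error = 4, CloseChannel = 5
};

// Hypothetical preamble value; the paper only states that it is 4 bytes long.
constexpr std::uint32_t kPreamble = 0x48505250u;

struct RpcHeader {
    std::int32_t id;        // unique id of the call
    MessageType  msgType;   // identifies the payload
    std::int32_t srvType;   // target service
    std::int32_t procType;  // target procedure
};

// Appends the raw bytes of a trivially copyable value to the buffer.
template <typename T>
void put(std::vector<std::uint8_t>& out, const T& value) {
    const auto* p = reinterpret_cast<const std::uint8_t*>(&value);
    out.insert(out.end(), p, p + sizeof(T));
}

// Reads a trivially copyable value at `pos` and advances `pos`.
template <typename T>
T get(const std::vector<std::uint8_t>& in, std::size_t& pos) {
    assert(pos <= in.size());  // the caller must pass a valid offset
    if (in.size() - pos < sizeof(T)) throw std::runtime_error("truncated message");
    T value;
    std::memcpy(&value, in.data() + pos, sizeof(T));
    pos += sizeof(T);
    return value;
}

// Writes the preamble followed by the four header fields (20 bytes in total).
void writeHeader(std::vector<std::uint8_t>& out, const RpcHeader& h) {
    put(out, kPreamble);
    put(out, h.id);
    put(out, static_cast<std::int32_t>(h.msgType));
    put(out, h.srvType);
    put(out, h.procType);
}

// Reads a header; a wrong preamble means the stream is out of sync, for
// example because the previous message was not consumed completely.
RpcHeader readHeader(const std::vector<std::uint8_t>& in, std::size_t& pos) {
    if (get<std::uint32_t>(in, pos) != kPreamble)
        throw std::runtime_error("preamble mismatch: previous message not consumed");
    RpcHeader h{};
    h.id = get<std::int32_t>(in, pos);
    h.msgType = static_cast<MessageType>(get<std::int32_t>(in, pos));
    h.srvType = get<std::int32_t>(in, pos);
    h.procType = get<std::int32_t>(in, pos);
    return h;
}
```

In this sketch, a reader that starts at the wrong offset interprets payload bytes as the preamble and fails immediately, instead of silently dispatching a corrupted header.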

3.1.2 Client

RpcClient is the class that is used by the proxies to send method call requests and wait for their responses (Figure 5). It contains different XXXCall methods to initiate remote procedure calls and handle their responses. Call requests return a PendingCall object that serves as a ticket for the return value. The caller waits on the PendingCall object. When a response is received from the server, the object returns a value or throws an exception, depending on the remote procedure's response.


Figure 5: RpcClient Class. (Class diagram: RpcClient with members + Call(int, int): PendingCallWithResult<T>, + Call(int, int): PendingCall, + EndCall(): PendingCallWithResult<T>, + EndCall(): PendingCall, + ReceiveResult(RpcHeader, BinaryReader): void, + ReleasePendingCalls(string): void, + StartCall(int, int): BinaryWriter; PendingCall with members + PendingCall(int), + GetId(): int, + HasError(): bool, + GetError(): string, + Wait(): void; PendingCallWithResult<T> with member + GetResult(): T; RpcProxy with members + RpcProxy(RpcClient, int) and properties # Client(): RpcClient, + SrvType(): int.)
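The ticket behaviour of PendingCall can be illustrated with a small C++11 sketch built on std::mutex and std::condition_variable. The member names GetId, HasError, GetError, Wait and GetResult follow Figure 5; the completion methods Complete and Fail, and everything about the internal state, are hypothetical and only show one way the receiving side might release a waiting caller.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <stdexcept>
#include <string>
#include <utility>

// A ticket for a call whose response has not arrived yet.
class PendingCall {
public:
    explicit PendingCall(int id) : id_(id) {}
    virtual ~PendingCall() = default;

    int GetId() const { return id_; }

    // Blocks until the call is finished; throws if the server reported an error.
    void Wait() {
        std::unique_lock<std::mutex> lock(mutex_);
        doneCv_.wait(lock, [this] { return done_; });
        if (failed_) throw std::runtime_error(error_);
    }

    bool HasError() {
        std::lock_guard<std::mutex> lock(mutex_);
        return failed_;
    }

    std::string GetError() {
        std::lock_guard<std::mutex> lock(mutex_);
        return error_;
    }

    // Hypothetical completion hooks, called when a response is received.
    void Complete() { finish(false, std::string()); }
    void Fail(const std::string& message) { finish(true, message); }

protected:
    void finish(bool failed, const std::string& message) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            assert(!done_);  // a call is completed at most once
            failed_ = failed;
            error_ = message;
            done_ = true;
        }
        doneCv_.notify_all();
    }

    std::mutex mutex_;

private:
    const int id_;
    std::condition_variable doneCv_;
    bool done_ = false;
    bool failed_ = false;
    std::string error_;
};

// A ticket that additionally carries a typed return value.
template <typename T>
class PendingCallWithResult : public PendingCall {
public:
    using PendingCall::PendingCall;

    // Stores the value first, then releases the waiting caller.
    void Complete(T value) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            result_ = std::move(value);
        }
        finish(false, std::string());
    }

    // Waits for the response and returns the value (or throws on error).
    T GetResult() {
        Wait();
        std::lock_guard<std::mutex> lock(mutex_);
        return result_;
    }

private:
    T result_{};
};
```

Because the caller only blocks inside Wait, the same ticket type could later serve asynchronous calls: the caller would simply postpone the Wait or GetResult call.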

Proxies are custom generated classes that transform user requests into RpcClient calls. For each method call, the proxy class calls the XXXCall method of the RpcClient and waits on the PendingCall object. This mechanism makes the HPRPC framework transparent to the user. Figure 6 shows that the IConnectionTester interface is implemented by the ConnectionTesterProxy. The user calls the KeepAlive method of this interface and does not deal with the details of the HPRPC framework.

Figure 6: RpcProxy Class. (Class diagram: RpcProxy with members + RpcProxy(RpcClient, int) and properties # Client(): RpcClient, + SrvType(): int; «interface» IConnectionTester with member + KeepAlive(): void; ConnectionTesterProxy with members + ConnectionTesterProxy(RpcClient), + KeepAlive(): void.)

3.1.3 Server

RpcServer is responsible for handling client requests (Figure 7). According to the ServiceType attribute, it calls the related RpcService's Call method. It then serializes the ReturnValue or, in case of an error, the exception information for the client.

Figure 7: RpcServer Class. (Class diagram: «interface» IConnectionTester with member + KeepAlive(): void; RpcServer with members + RpcServer(RpcController), + FindService(int): RpcService, + ReceiveCall(RpcHeader, BinaryReader): void, + RegisterService(RpcService): void, + UnRegisterService(int): void; RpcService with members + RpcService(int), ^ Call(int, BinaryReader): IReturnValue and property + SrvType(): int; ConnectionTesterService with members + ConnectionTesterService(IConnectionTester), ^ Call(int, BinaryReader): IReturnValue.)

Services are custom generated classes that override the Call method of the RpcService class. They invoke the relevant method of their inner service instance with the appropriate parameters. Unlike proxies, which implement the service methods themselves, service classes invoke service calls on an object that they own. As in Figure 7, when the client calls the KeepAlive method, RpcServer directs this call to ConnectionTesterService, which subsequently calls the KeepAlive method of its own object.

The Call method of an RpcService class returns an IReturnValue object that holds the return value of the procedure call. As in Figure 8, return values can be void or a typed instance of the ReturnValue class. They are handled by the RpcServer to transfer the method results.

Figure 8: RpcReturnValue Class. (Class diagram: «interface» IReturnValue with members + HasValue(): bool, + WriteValue(BinaryWriter): void; ReturnValueVoid with members + HasValue(): bool, + WriteValue(BinaryWriter): void; ReturnValue<T> with members + ReturnValue(T), + HasValue(): bool, + WriteValue(BinaryWriter): void and property + Value(): T.)
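The server-side dispatch described above can be sketched as follows. The class names follow Figures 7 and 8, but the code is only an illustration under several assumptions of ours: BinaryWriter and BinaryReader are reduced to minimal stand-ins over a byte vector, the procedure numbering and the service type value 1 are invented, and the Add procedure and the LocalTester class are hypothetical additions that exist only to show a typed return value.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <memory>
#include <stdexcept>
#include <type_traits>
#include <vector>

// Minimal stand-ins for the BinaryWriter/BinaryReader types of the diagrams.
struct BinaryWriter {
    std::vector<std::uint8_t> bytes;
    template <typename T> void Write(const T& value) {
        static_assert(std::is_trivially_copyable<T>::value, "raw copy only");
        const auto* p = reinterpret_cast<const std::uint8_t*>(&value);
        bytes.insert(bytes.end(), p, p + sizeof(T));
    }
};

struct BinaryReader {
    const std::vector<std::uint8_t>& bytes;
    std::size_t pos;
    template <typename T> T Read() {
        assert(pos + sizeof(T) <= bytes.size());
        T value;
        std::memcpy(&value, bytes.data() + pos, sizeof(T));
        pos += sizeof(T);
        return value;
    }
};

struct IReturnValue {
    virtual ~IReturnValue() = default;
    virtual bool HasValue() const = 0;
    virtual void WriteValue(BinaryWriter& writer) const = 0;
};

struct ReturnValueVoid : IReturnValue {
    bool HasValue() const override { return false; }
    void WriteValue(BinaryWriter&) const override {}  // nothing to transfer
};

template <typename T>
struct ReturnValue : IReturnValue {
    explicit ReturnValue(T v) : value(v) {}
    bool HasValue() const override { return true; }
    void WriteValue(BinaryWriter& writer) const override { writer.Write(value); }
    T value;
};

// User-facing interface; Add is a hypothetical second procedure.
struct IConnectionTester {
    virtual ~IConnectionTester() = default;
    virtual void KeepAlive() = 0;
    virtual std::int32_t Add(std::int32_t a, std::int32_t b) = 0;
};

class RpcService {
public:
    explicit RpcService(int srvType) : srvType_(srvType) {}
    virtual ~RpcService() = default;
    int SrvType() const { return srvType_; }
    virtual std::unique_ptr<IReturnValue> Call(int procType, BinaryReader& reader) = 0;
private:
    int srvType_;
};

// What a generated service class might look like: it decodes the parameters
// in declaration order and forwards the call to the object it wraps.
class ConnectionTesterService : public RpcService {
public:
    enum Proc { KeepAliveProc = 0, AddProc = 1 };  // hypothetical numbering
    explicit ConnectionTesterService(IConnectionTester& impl)
        : RpcService(1), impl_(impl) {}

    std::unique_ptr<IReturnValue> Call(int procType, BinaryReader& reader) override {
        switch (procType) {
        case KeepAliveProc:
            impl_.KeepAlive();
            return std::unique_ptr<IReturnValue>(new ReturnValueVoid());
        case AddProc: {
            const std::int32_t a = reader.Read<std::int32_t>();
            const std::int32_t b = reader.Read<std::int32_t>();
            return std::unique_ptr<IReturnValue>(
                new ReturnValue<std::int32_t>(impl_.Add(a, b)));
        }
        default:
            throw std::invalid_argument("unknown procedure");
        }
    }
private:
    IConnectionTester& impl_;
};

// Sample implementation of the interface, used only to exercise the sketch.
struct LocalTester : IConnectionTester {
    int keepAlives = 0;
    void KeepAlive() override { ++keepAlives; }
    std::int32_t Add(std::int32_t a, std::int32_t b) override { return a + b; }
};
```

A server in this sketch would look up the service by SrvType, call Call with the procedure type from the header, and then either write the value through WriteValue or send a void result when HasValue returns false.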

3.2 High Performance

One of the main objectives of HPRPC is high performance operation. To achieve this objective, we focused on two major areas:

• Having lightweight design

• Optimization of data serialization

3.2.1 Lightweight Design

The main objective of RPC is to establish a connection between two endpoints easily with a procedure call. Any other capabilities, such as asynchronous calls, multi-client/multi-server operation or heterogeneous platforms, are additional features that facilitate special user needs, but they may reduce performance because of their extra control mechanisms and data exchange costs. For the high performance objective of HPRPC, we skipped these features to increase overall performance. The basic features of our lightweight design can be outlined as follows:

• Synchronous Call: Our clients can call one procedure at a time.

• Single Client/Single Server: Our client and server classes allow single endpoints.

• Homogeneous Platforms: Although we support cross-platform communication, the endpoints must be homogeneous. Therefore, their byte order (little/big endian), string encoding, etc. should be identical.


Through its use of the PendingCall class, our architecture is already prepared for asynchronous calls. In the future, we plan to add asynchronous calls as an option with a limited performance loss.

3.2.2 Data Serialization

A procedure call generally includes some additional data to transfer, such as method parameters and the return value. Hence, the performance of an RPC mechanism is highly correlated with the performance of its data serialization/deserialization mechanism. In HPRPC, we implemented data serialization with the help of a code generator named KodoSis, which is developed in our research group. It has the following capabilities:

• Consistency: Our data structures are defined in a single configuration file and generated for all endpoints and platforms by KodoSis, which allows us to use consistent data structures.

• Integrity: KodoSis generates the serialization/deserialization routines for all data structures without any custom code.

• Minimal Overhead: No informative tags are required in data serialization, because both ends are aware of the complete structure of the data.

• Performance: KodoSis serialization routines use special mechanisms, specifically for lists of blittable types, to increase performance.
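The last two capabilities can be illustrated with a short C++ sketch. It does not reproduce KodoSis output, which is not shown in this paper; the function names, the 32-bit length prefix and the Numbers struct are assumptions made for illustration. The sketch writes fields in declaration order without tags and copies a list of trivially copyable (blittable) elements with a single memcpy.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <type_traits>
#include <vector>

using Buffer = std::vector<std::uint8_t>;

// Appends a list of trivially copyable ("blittable") elements using one
// length prefix and one bulk copy instead of element-by-element writes.
template <typename T>
void writeList(Buffer& out, const std::vector<T>& items) {
    static_assert(std::is_trivially_copyable<T>::value,
                  "bulk copy needs a blittable element type");
    const std::uint32_t count = static_cast<std::uint32_t>(items.size());
    const std::size_t old = out.size();
    out.resize(old + sizeof count + items.size() * sizeof(T));
    std::memcpy(out.data() + old, &count, sizeof count);
    if (!items.empty())
        std::memcpy(out.data() + old + sizeof count, items.data(),
                    items.size() * sizeof(T));
}

// Reads a list written by writeList and advances `pos` past it.
template <typename T>
std::vector<T> readList(const Buffer& in, std::size_t& pos) {
    std::uint32_t count = 0;
    assert(pos + sizeof count <= in.size());
    std::memcpy(&count, in.data() + pos, sizeof count);
    pos += sizeof count;
    assert(pos + count * sizeof(T) <= in.size());
    std::vector<T> items(count);
    if (count != 0)
        std::memcpy(items.data(), in.data() + pos, count * sizeof(T));
    pos += count * sizeof(T);
    return items;
}

// Hypothetical message type modelled on the Numbers list of Section 4.
struct Numbers {
    std::vector<std::int32_t> values;
};

// What a generated, tag-free routine might look like: both ends know the
// field order, so no field identifiers are written.
void serialize(Buffer& out, const Numbers& m) { writeList(out, m.values); }

Numbers deserialize(const Buffer& in, std::size_t& pos) {
    return Numbers{readList<std::int32_t>(in, pos)};
}
```

With this layout, a Numbers message holding three integers occupies 4 bytes for the count plus 12 bytes for the values. The bulk copy is valid only because both endpoints share the same byte order, which is the homogeneous-platform restriction of Section 3.2.1.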

3.3 Cross-platform Support

Our architecture has been implemented in several languages and on several platforms. The same architecture, with identical class and method names, is implemented and tested for multiple languages and compilers. All implementations can communicate with each other without knowledge of the other platform.

On the Windows operating system, we support the C# language with the .NET Framework and the C++ language with the Microsoft Visual C++ compiler. On Linux-like operating systems, we support the C++ language with the gcc compiler.

In C++, we employed C++11 constructs such as shared_ptr, thread and mutex to increase robustness and keep the code simple. On some legacy systems (gcc v4.3) that do not support C++11, equivalent classes of the Boost Framework (Boost, 2015) are employed.

4 EVALUATION

As explained previously, HPRPC aims to minimize communication overhead between the RPC client and the RPC server. In order to achieve this goal, it employs Kodosis to minimize the amount of data to be transferred through the RPC channel. We conduct several experiments to evaluate our proposed work. In these experiments, we compare the performance of HPRPC with gRPC. gRPC is a well-known open source RPC mechanism that was initially developed by Google. It employs Protobuf for data serialization. Similar to Kodosis, Protobuf also aims to minimize the amount of data transferred.

4.1 Evaluation Methodology

Four different operations are chosen for the experiments. Each operation uses different kinds of requests and responses. The Average and GetRandNums operations are also used in the evaluation of XML-RPC (Allman, 2003). The details of these operations are given below:

• HelloReply SayHello (HelloRequest Request)

This operation sends a HelloRequest message that contains a parameter of type string and returns a HelloReply message that contains the string "Hello" concatenated with the request parameter. This operation represents a small request/small response transaction.

• DoubleType Average (Numbers Numbers)

This operation returns the average of the Numbers list, which contains 32-bit integers. The size of the Numbers list is not fixed. We test the performance of the RPC mechanisms under varying sizes of the Numbers list. This operation represents a big request/small response transaction.

• Numbers GetRandNums (Int32Type Count)

This operation returns a list of random 32-bit integers given the desired number of random numbers. We test the performance of the RPC mechanisms under varying values of the Count parameter. This operation represents a small request/big response transaction.

• LargeData SendRcvLargeData (LargeData Data)

This operation receives a large amount of data and returns the same data. There are different versions of LargeData that contain different numbers of parameters. This operation represents a big request/big response transaction.

In the experiments, we measure the transaction times of each operation for gRPC and HPRPC. All experiments are repeated 1000 times and the total transaction times are recorded. In the large data experiments, we also measure the RPC packet sizes to compare the amount of data that is transferred through the RPC channels.

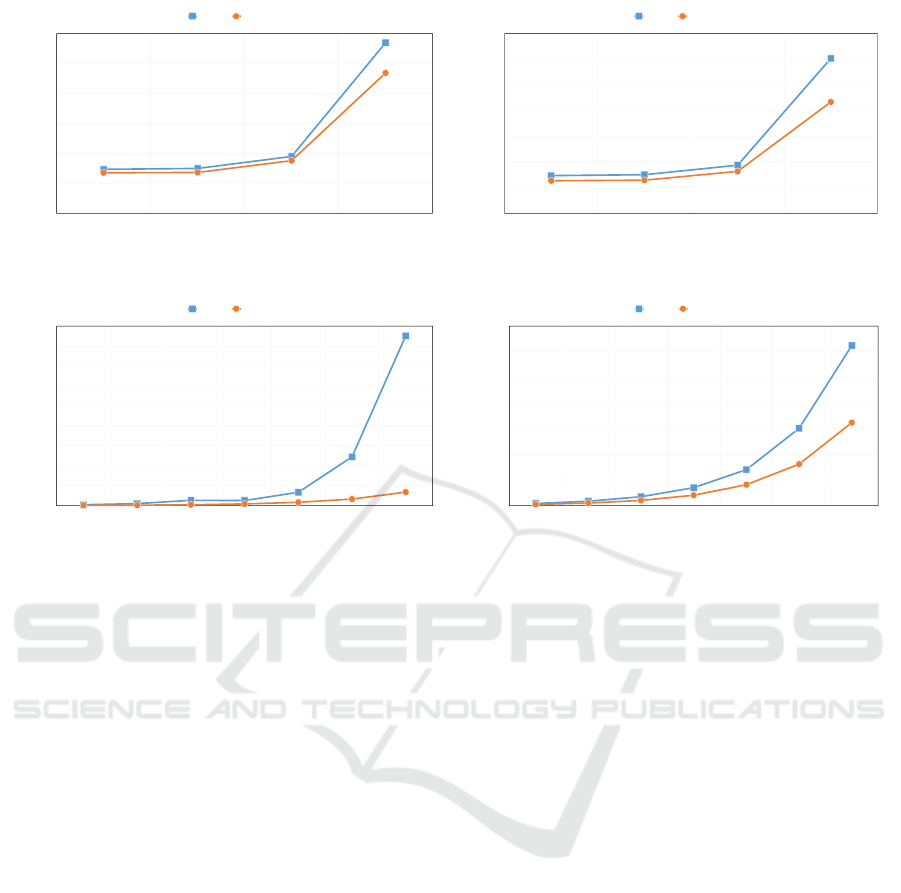

4.2 Experiment Results

For the first experiment, we measure the transaction time of the SayHello function, which is a small request/small response operation. The total transaction times of 1000 operations for gRPC and HPRPC are 305 ms and 279 ms, respectively. HPRPC performs 9.3% better than gRPC; that is, gRPC's total time is 9.3% higher than HPRPC's. This is due to the fact that Protobuf tags the string parameter in the HelloRequest message while Kodosis does not use tags in serialization. Therefore, the total amount of data to be transferred is larger in gRPC than in HPRPC. In this experiment, we only have a single parameter in the request and response messages. We also examine the performance of the two RPC mechanisms when we increase the number of parameters in a single message. The results of that experiment are presented in the large data tests.

The second experiment measures the performance of the two RPC mechanisms using the Average function, which represents a big request/small response transaction. The results of the Average operation tests are depicted in Figure 9a. In this experiment, clients call the respective Average functions on the RPC servers for different numbers of 32-bit integers. When the number of inputs is 10, HPRPC has 8.5% better performance than gRPC. The performance difference increases with the input size; when the input size is increased to 10000, gRPC's total transaction time is 21.6% higher than HPRPC's. The performance difference gets even higher when the input size is beyond 10000. This result gives us a hint about the improvement of HPRPC when the amount of data is increased.

In the Average function experiments, we only increase the size of the request message. For completeness, we also measure performance for increased response message size. The results of the GetRandNums function experiments are shown in Figure 9b. As expected, the results of this experiment are similar to the previous experiment's results. In this case, the performance difference increases even more. When 10 random numbers are requested from the RPC server, HPRPC performs 12.9% better than gRPC. The difference increases up to 39.2% when the requested random number count is 10000.

In the Average and GetRandNums experiments, the Numbers message type is employed as the request and response type, respectively. The Numbers data structure models multiple numbers as a repeated member of the same type. gRPC and HPRPC both have optimizations for repeated members. In the next experiment, we evaluate the performance of the RPC mechanisms when a message type consists of non-repeating members. In this experiment, we employ different versions of the LargeData message type, such as LargeData128, LargeData256, etc. The number concatenated to LargeData indicates the number of members in the message type. For example, the LargeData128 message type contains 128 different members of type integer.

The SendRcvLargeData experiments employ 7 different versions of the LargeData message type to evaluate the performance of the RPC mechanisms under varying numbers of members in the message. In these tests, clients call the respective SendRcvLargeData function on the RPC servers, and the servers send the same data back to the clients. The results of this experiment are illustrated in Figure 9c. HPRPC performance is nearly 2.7 times better than gRPC when the member count is 128. As the number of members in the message types is increased, the transaction times of gRPC increase substantially, as opposed to those of HPRPC. In the extreme case of 8192 members, gRPC consumes approximately 12.5 times more transaction time than HPRPC. This result clearly indicates that HPRPC outperforms gRPC with an increasing number of members in RPC messages.

In order to find out why gRPC needs so much transaction time when the number of members in messages is increased, we further analyze the results of the SendRcvLargeData experiments. In this analysis, we compare the transferred data packet sizes of gRPC and HPRPC. The results of this analysis are depicted in Figure 9d. This analysis reveals that gRPC tends to use more bytes than HPRPC when the number of members in messages is increased. This is because gRPC employs a unique tag for each member in messages. The messages are serialized with these tags, hence the RPC packet sizes are increased. Moreover, as the number of members is increased, the number of bytes used to represent tag values also increases. Tags are useful in some cases, such as enabling optional parameters; however, tagging each parameter brings extra communication and processing overhead. Therefore, we conclude that tagging parameters is not useful for high performance systems.
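The growth of the tag bytes can be made concrete with a small calculation. In the Protobuf wire format, every field is preceded by a key equal to (field_number << 3) | wire_type, encoded as a base-128 varint, so the key takes 1 byte for field numbers 1 to 15, 2 bytes for 16 to 2047 and 3 bytes for larger numbers in the range used here. The sketch below counts only these key bytes, under the assumption (ours, since the message definitions are not listed in this paper) that the LargeData members are numbered consecutively from 1. It does not reproduce the measured packet sizes of Figure 9d, because Protobuf also encodes the integer values themselves as varints.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Number of bytes a base-128 varint needs for `value`.
std::size_t varintSize(std::uint64_t value) {
    std::size_t bytes = 1;
    while (value >= 0x80) {
        value >>= 7;
        ++bytes;
    }
    return bytes;
}

// Size of a Protobuf field key, (field_number << 3) | wire_type.
// The three wire-type bits do not change the encoded length.
std::size_t tagSize(std::uint32_t fieldNumber) {
    assert(fieldNumber >= 1);  // field numbers start at 1
    return varintSize(static_cast<std::uint64_t>(fieldNumber) << 3);
}

// Total key bytes for a message whose members are numbered 1..memberCount
// (an assumed numbering).
std::size_t totalTagBytes(std::uint32_t memberCount) {
    std::size_t total = 0;
    for (std::uint32_t n = 1; n <= memberCount; ++n) total += tagSize(n);
    return total;
}

// Payload of memberCount 32-bit integers written back to back without tags.
std::size_t untaggedBytes(std::uint32_t memberCount) {
    return memberCount * sizeof(std::int32_t);
}
```

Under these assumptions, a 128-member message carries 241 key bytes next to 512 bytes of fixed-width untagged payload, and an 8192-member message carries 22514 key bytes next to 32768 bytes of untagged payload.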

HPRPC assumes that all parameters in a message are required and that the order of the parameters does not change. Therefore, RPC messages are serialized and deserialized in the same order, which eliminates the need for tagging parameters in HPRPC. This assumption gives HPRPC an advantage over other RPC implementations in high performance systems. This is due to the reduced RPC packet size and the eliminated extra processing cost of tagging. The results of the SayHello and GetRandNums experiments also support this argument.

Figure 9: Experiment Results. (Panels: (a) Average, (b) GetRandNums and (c) SendRcvLargeData plot elapsed time (ms) against the number of 32-bit integers for gRPC and HPRPC; (d) Large Data Packet Size plots the total size of RPC packets (bytes) against the number of 32-bit integers for gRPC and HPRPC. Panels (a) and (b) cover 10, 100, 1000 and 10000 integers; panels (c) and (d) cover 128, 256, 512, 1024, 2048, 4096 and 8192 integers.)

Additionally, Protobuf enables several features for the definition of message types, such as optional parameters, which bring extra overhead in the size of RPC packets. On the other hand, our Kodosis implementation provides a simple message definition language that only enables basic required features. These features of Kodosis help reduce RPC packet sizes and hence the communication overhead.

5 CONCLUSION

HPRPC is an RPC mechanism that focuses on three main objectives: lightweight and simple architecture, high performance and cross-platform support. In this paper, we give details of our architecture with relevant class diagrams and present some performance evaluations that indicate our advantages over gRPC.

In order to evaluate the performance of our RPC mechanism, we compare HPRPC with gRPC, which is a recent and well-known RPC mechanism that addresses both providing various calling patterns and reducing the size of the RPC messages. The results of the experiments clearly indicate that HPRPC outperforms gRPC in terms of communication overhead in all test cases. This is due to the high-level features of gRPC that bring extra overhead in data transfer. In addition, the underlying serialization mechanism of gRPC, Protobuf, tags all parameters in a message. Therefore, gRPC messages tend to occupy more bytes in data transfer. The size of the messages gets even higher as the number of parameters in a message is increased. On the other hand, HPRPC not only provides all the required basic features but also ensures reduced RPC message size and minimal processing overhead. This advantage allows HPRPC to perform better in high performance scenarios. We conclude that HPRPC is an appropriate RPC mechanism to be used in high performance systems.

Currently, HPRPC is implemented in the C# and C++ languages on Windows and Linux operating systems. In the future, we plan to implement our architecture in Java and increase our cross-platform support. Moreover, we plan to implement asynchronous calling patterns and concurrent execution of requests on the server side.

REFERENCES

Allman, M. (2003). An evaluation of XML-RPC. ACM SIGMETRICS Performance Evaluation Review, 30(4):2–11.

Ananda, A. L., Tay, B., and Koh, E. (1991). Astra-an asynchronous remote procedure call facility. In Distributed Computing Systems, 1991., 11th International Conference on, pages 172–179. IEEE.

Bershad, B. N., Anderson, T. E., Lazowska, E. D., and Levy, H. M. (1990). Lightweight remote procedure call. ACM Transactions on Computer Systems (TOCS), 8(1):37–55.

Birrell, A. D. and Nelson, B. J. (1984). Implementing Remote Procedure Calls. ACM Transactions on Computer Systems (TOCS), 2(1):39–59.

Boost (2015). Boost Framework. http://www.boost.org. Accessed: 2015-12-08.

Corbin, J. R. (2012). The art of distributed applications: programming techniques for remote procedure calls. Springer Science & Business Media.

Google (2015a). Google Protocol Buffers (Protobuf): Google's Data Interchange Format. Documentation and open source release. https://developers.google.com/protocol-buffers. Accessed: 2015-12-08.

Google (2015b). gRPC. http://www.grpc.io. Accessed: 2015-12-08.

Google (2015c). Protobuf-Remote: RPC Implementation for C++ and C# using Protocol Buffers. https://code.google.com/p/protobuf-remote. Accessed: 2015-12-28.

Jagannadham, D., Ramachandran, V., and Kumar, H. H. (2007). Java2 distributed application development (socket, rmi, servlet, corba) approaches, xml-rpc and web services functional analysis and performance comparison. In Communications and Information Technologies, 2007. ISCIT'07. International Symposium on, pages 1337–1342. IEEE.

Kim, S.-H., Lee, Y., and Kim, J.-S. (2007). Flexrpc: a flexible remote procedure call facility for modern cluster file systems. In Cluster Computing, 2007 IEEE International Conference on, pages 275–284. IEEE.

Laurent, S. S., Johnston, J., Dumbill, E., and Winer, D. (2001). Programming web services with XML-RPC. O'Reilly Media, Inc.

Ming, L., Feng, D., Wang, F., Chen, Q., Li, Y., Wan, Y., and Zhou, J. (2011). A performance enhanced user-space remote procedure call on infiniband. In Photonics and Optoelectronics Meetings 2011, pages 83310K–83310K. International Society for Optics and Photonics.

Nelson, B. J. (1981). "Remote Procedure Call" CSL-81-9. Technical report, Xerox Palo Alto Research Center.

Satyanarayanan, M., Draves, R., Kistler, J., Klemets, A., Lu, Q., Mummert, L., Nichols, D., Raper, L., Rajendran, G., Rosenberg, J., et al. (1991). Rpc2 user guide and reference manual.

Satyanarayanan, M. and Siegel, E. H. (1990). Parallel communication in a large distributed environment. Computers, IEEE Transactions on, 39(3):328–348.

Soumagne, J., Kimpe, D., Zounmevo, J., Chaarawi, M., Koziol, Q., Afsahi, A., and Ross, R. (2013). Mercury: Enabling remote procedure call for high-performance computing. In Cluster Computing (CLUSTER), 2013 IEEE International Conference on, pages 1–8. IEEE.

Srinivasan, R. (1995). RPC: Remote Procedure Call Protocol Specification Version 2 (RFC1831). The Internet Engineering Task Force.

Sun Microsystems, I. (1988). RPC: Remote Procedure Call Protocol Specification Version (RFC1057). The Internet Engineering Task Force.

UserLand Software Inc. (2015). XML-RPC Specification. http://xmlrpc.scripting.com/spec.html. Accessed: 2015-12-15.

W3C (2015). SOAP Version 1.2 Part 1: Messaging Framework (Second Edition). http://www.w3.org/TR/soap12/. Accessed: 2015-12-15.

Xerox, C. (1981). Courier: The Remote Procedure Call Protocol. Xerox System Integration Standard 038112.
