Ontological Interaction Modeling and Semantic Rule-based Reasoning for User Interface Adaptation

Fatma-Zohra Lebib, Hakima Mellah and Linda Mohand Oussaid

Research Center in Scientific and Technical Information, CERIST, Algiers, Algeria

Keywords: Human-computer Interaction (HCI), Modality, Ontology, SWRL, Rule-based Reasoning,

User Interface Adaptation.

Abstract: This paper shows how reasoning over an ontology can support user interface adaptation. From a set of user characteristics and interface parameters, it is possible to deduce the interfaces best suited to a given user. To do so, Semantic Web Rule Language (SWRL) rules are used to derive the appropriate interface for a specific user, considering factors related to his/her abilities, preferences, skills, etc. A use case in the handicraft domain is presented: different input and output interaction modalities (writing, selection, text, speech, etc.) are proposed to a craftswoman according to her sensory perception and motor skills. The modalities are structured within what we call the "interaction ontology".

1 INTRODUCTION

In human-computer interaction (HCI), users with disabilities need to be effectively supported by offering them appropriate interaction methods (both input and output) for performing tasks. In particular, their perceptual, cognitive and physical disabilities should be considered in order to choose the best modalities for rendering and manipulating the interactive system. User interfaces, which are habitually designed without taking human diversity into consideration, should therefore be adapted to the user (Jameson, 2003) (Simonin, 2007).

In the last decades, people working in diverse areas

of the Artificial Intelligence field have been working

on adaptive systems, hence creating valuable

knowledge that can be applied to the design of

adaptive user interfaces for people with disabilities.

This research work is part of a larger project in the handicraft domain in emerging countries (Algeria and Tunisia). The project aims at improving the socio-economic level of craftswomen in the two countries. It seeks to assist craftswomen in their business activities through the use of Information and Communication Technologies (ICT), helping them make appropriate decisions concerning their sales and their business by providing an easy-to-use interface that encourages communication between the different actors (providers, customers and craftswomen).

The project targets women from poor social backgrounds who practice various crafts such as ceramics, tapestry, traditional pastry, embroidery, etc. These women have different profiles; in particular, some may have disabilities (physical, cognitive, etc.) that make their interaction with a computer system difficult. In this work, we have built an ontology which is used to adapt the user interface. This ontology describes both the user profile (motor and sensory capacities) and the logical and physical interaction resources (modes, modalities and devices). A set of adaptation rules defined on the ontology makes it possible to provide an adaptive interface according to the woman's profile. The ICT application in the handicraft domain should adapt the interface to the abilities of different women in order to improve interaction performance between the women and the system and to provide a better and easier interface. The interface customization mechanism includes (1) an auditory interface for vision-impaired women and a graphical interface for women with good visual ability, (2) vocal commands that require no button press for women with a physical disability, (3) a raised audio volume for hearing-impaired women, etc.

Semantic technologies enabling interoperability

across different platforms are highly expressive

when modeling complex relationships. They support

Lebib, F-Z., Mellah, H. and Mohand-Oussaid, L.

Ontological Interaction Modeling and Semantic Rule-based Reasoning for User Interface Adaptation.

In Proceedings of the 12th International Conference on Web Information Systems and Technologies (WEBIST 2016) - Volume 1, pages 347-354

ISBN: 978-989-758-186-1

Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


semantic reasoning and have the ability to reuse information from several application domains (Janev, 2011). They make it possible to reason about various data, that is, to draw inferences from existing knowledge about a particular area in order to create new knowledge. At the heart of semantic-based technologies is the use of ontologies. In this work, semantic technologies are used to model, represent and reason about craftswomen for the purpose of user interface adaptation.

The remainder of this paper is organized as

follows. Section 2 provides the related work as a

starting point. In Section 3, we give a global view on

interactive interface design and we define and explain

the notion of interaction modality. We then present our interaction ontology proposal in Section 4. Finally, Section 5 presents the conclusions and future work.

2 RELATED WORK

Ontologies are, according to the widely accepted definition given by Gruber (Gruber, 1995), “an explicit specification of a conceptualization”. More formally, an ontology is a set of classes, properties connecting classes to one another, restrictions on properties, and axioms (Maedche, 2002). Ontology-based modeling involves

specifying a number of concepts related to a

particular domain, along with any number of

properties or relationships associated with those

concepts. In essence, ontologies provide a

“representation vocabulary”, where these domain

concepts are structured in a taxonomy based on

various domain aspects. Ontological models can be

used by logic reasoning mechanisms to deduce high-

level information from raw data and have the ability

to enable the reuse of system knowledge. This is

particularly important when modeling domain

aspects that can be remembered and reused later

(Chandrasekaran, 1999).

Semantic Web research has devoted considerable effort to defining a common language for ontology modeling and reasoning, with the objective of achieving semantic interoperability. The Web Ontology Language (OWL), a language based on description logic, became a World Wide Web Consortium (W3C) recommendation in 2004. The Semantic Web Rule Language (SWRL) combines OWL-DL (OWL Description Logics) with the Rule Markup Language (RuleML), providing both the expressivity of OWL-DL and rules from RuleML (Horrocks, 2010).

In the field of ontology design, several research groups have worked to facilitate the ontology engineering process, employing manual, semi-automatic and automatic (Maynard, 2009) methods. Semi-automatic methods focus on the acquisition of ontologies from domain texts (Maedche, 2000). Methontology is a methodology that is widely recognized within the ontology engineering community as a reference for the tasks needed to build an ontology (Fernandez, 1997) (Corcho, 2005).

Comprehensive surveys of existing methodologies

can be found in (Cristani, 2005) and (Noy, 1997).

Throughout the ontology creation process, the

designers may take into account a set of ontology

design criteria, such as clarity, coherence and

extensibility (Fluit, 2002). Specific tools like

Protégé (Noy, 2001) are under rapid development

and offer a wide range of functionalities, from

design of classes and concepts to visualization,

querying and inferencing.

In the past few years, ontologies have been used for modeling context knowledge. By context, we refer to any information that can be used to characterize the situation of an entity, where an entity can be a person, a place or a (physical or computational) object (Dey, 2001). Although a variety of context ontologies have been developed for different application scenarios (Hatala, 2005) (Heckmann, 2005) (Preuveneers, 2004) (Clerckx, 2007) (Razmerita, 2003) (Poveda, 2010), there is no widely accepted model that can be reused for modeling context knowledge in different applications. We summarize the most well-known ones in the following.

Razmerita et al. (Razmerita, 2003) presented

work on user modeling with a generic ontology-

based architecture called OntobUM.

User modeling that combines rules with ontology-based representations for real-time ubiquitous applications was proposed by (Hatala, 2005) in an interactive museum scenario, while context features and situational statements for ubiquitous computing were proposed as the General User Model Ontology (GUMO) by (Heckmann, 2005) (Heckmann, 2007).

The authors of (Preuveneers, 2004) proposed the CoDAMoS ontology, which defines four core entities: user, environment, platform, and service. The challenges addressed by the CoDAMoS ontology are application adaptation, automatic code generation, code mobility, and generation of device-specific user interfaces.

(Poveda, 2010) proposed the mIO! ontology network, a context ontology for the mobile

SRIS 2016 - Special Session on Social Recommendation in Information Systems


environment that aims to represent contextual knowledge about the user that can influence his or her interaction with mobile devices. The goal of the mIO! ontology network is to represent knowledge related to context as a whole, e.g., information on location and time, information about the user and his or her current or planned activities, as well as devices located in the surroundings. The ontology aims at solving the challenge of adapting applications based on the user context.

In (Clerckx, 2007), an interaction environment ontology was designed to address the challenge of generating multi-device user interfaces. This ontology is an extension of a general context ontology used in the DynaMo-AID development process (Preuveneers, 2004); the authors describe different modalities, the interaction environment (resources, devices), and the way these two concepts are related to each other. While (Clerckx, 2007) considers interaction constraints related to the available devices and the modalities they provide, the present work considers interaction constraints related to the user (craftswoman) and the modalities supported by each craftswoman based on her sensory and motor abilities.

In (Skillen, 2012a) (Skillen, 2012b), the authors propose a method that combines ontological modeling of user profiles and context-aware adaptation techniques. The same authors in (Skillen, 2013) (Skillen, 2014) use rule-based personalization mechanisms and service technology to provide personalized help-on-demand services to mobile users in pervasive environments. The proposed method uses an intelligent personalization service that incorporates rule-based knowledge and a reasoning engine. The authors focus on the user environment in order to offer services depending on context parameters (such as location). In our approach, user capacities (sensory and motor) and interaction resources (both physical and logical) are modeled by means of an ontology, with the purpose of adapting modalities to users' disabilities.

The scope of our work is adaptive interface design. We aim to adapt the user interface by using ontology modeling and reasoning, expressed through a set of adaptation rules.

3 INTERACTIVE INTERFACE DESIGN

Any given interface is generally defined by the number and diversity of inputs and outputs it provides. An interface can be based on one of two configurations (Karray, 2008):

1) A system based on a single modality (unimodal)

2) A system based on multiple modalities (multimodal)

Multimodal interfaces incorporate multiple

modalities (e.g., speech, gesture, writing, and

others). Nigay and Coutaz (Nigay, 1993) define

modality as the combination of a physical input or

output device (d) and an interaction language (L),

which can be formalized as a tuple <d, L>.

Examples for interaction modalities on a smart-

phone could be <touchscreen, gestures> or

<microphone, speech>.
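The tuple definition above can be written down directly. The following is a minimal Python sketch, assuming a hypothetical `Modality` type with fields `device` and `language`; the third example (`handwriting`) is an illustrative assumption and does not come from the cited definition.

```python
from typing import NamedTuple


class Modality(NamedTuple):
    """An interaction modality as a <d, L> pair (hypothetical type name)."""
    device: str    # d: physical input or output device
    language: str  # L: interaction language


# The two smartphone examples given in the text.
touch_gestures = Modality(device="touchscreen", language="gestures")
voice_input = Modality(device="microphone", language="speech")

# Assumed extra example: the same device with another interaction language
# yields a different modality.
touch_writing = Modality(device="touchscreen", language="handwriting")
```

Because a modality is identified by both components, `touch_gestures` and `touch_writing` compare as different modalities even though they share a device.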

When designing an interactive system, one has to

choose which modalities will be used, and how they

will convey information. We distinguish two types

of interaction: input interaction (from the user to the

system) and output interaction (from the system to

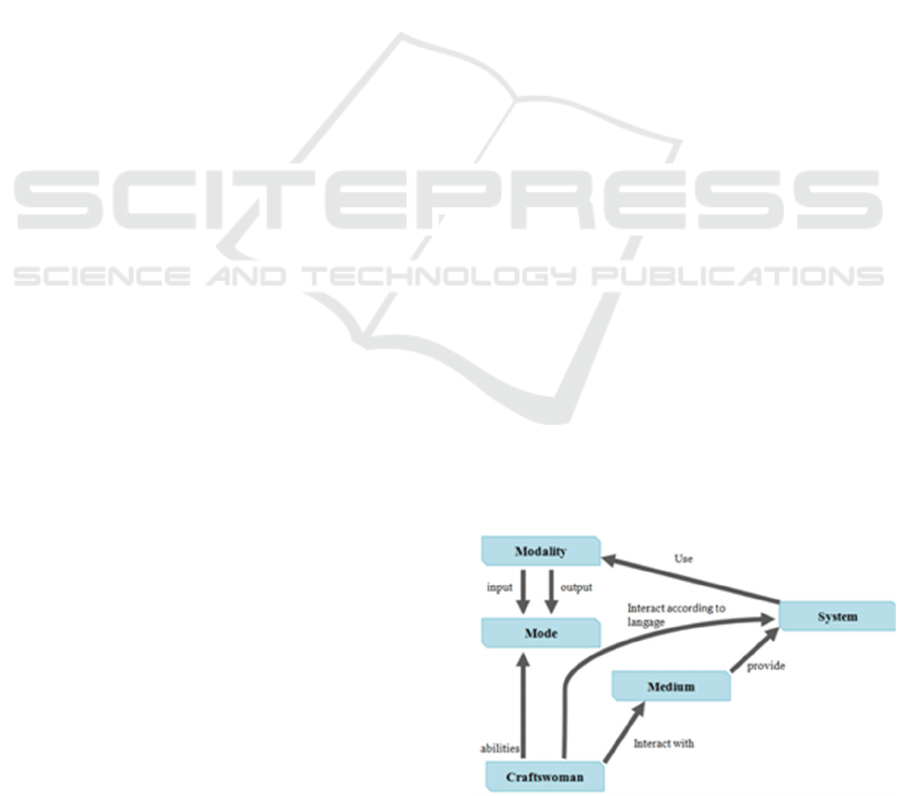

the user). The concept of interaction component

represents the physical or logical communication

mean between the user and the application. There

are three types of interaction components: mode,

modality and medium (Figure 1). A mode refers to

the human sensory system used to perceive (visual,

auditory, tactile, etc.) or to introduce (speech,

gestures) given information, so that we distinguish

input modes and output modes. A modality means a

communication mode according to human senses

and computer devices. Input modality is defined by

the information structure that is perceived by the

user (text, speech synthesis, vibration, etc.). Output

modality is defined by the way to introduce

information by the user (selection, pointing, writing,

speech etc.). Finally, a medium is an organ

necessary to a system or a human in order to acquire

or deliver information. Input medium is an input

device allowing the expression of an input modality

(keyboard, mouse, microphone, etc.). Output

medium is an output device allowing the expression

of an output modality (screen, speaker, vibrator, etc.).

Figure 1: Overview of the interaction model.

There are relations between these three notions. A mode can be associated with a set of modalities, and each modality can be associated with a set of media. For example, the “vibrator” medium allows the expression of the “vibration” modality, which is perceived through the “tactile” mode.

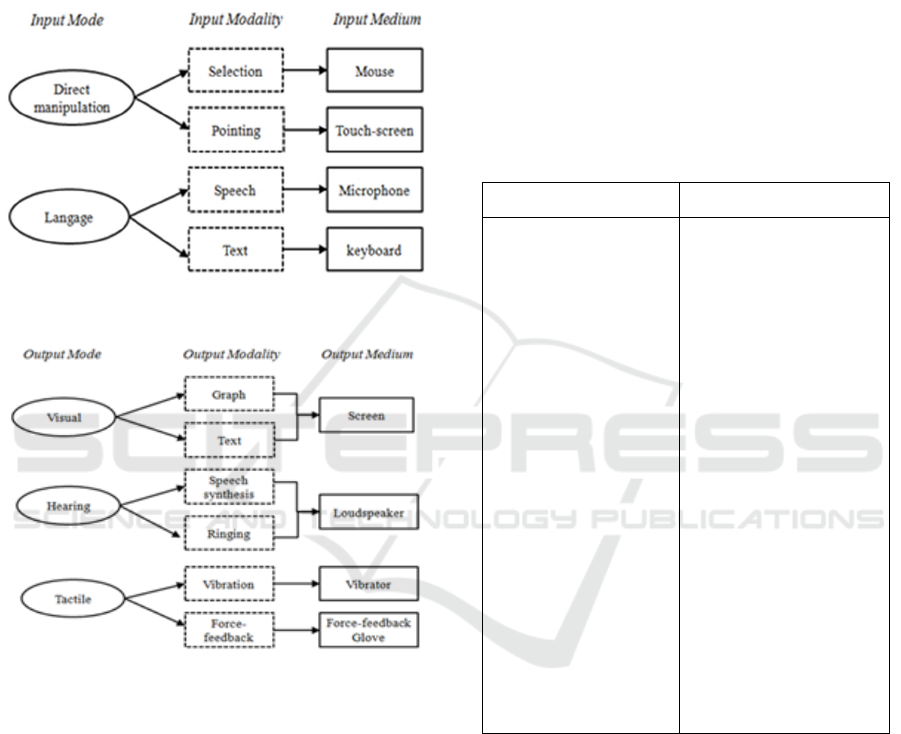

These relations are presented through the input

interaction components diagram (Figure 2) and the

output interaction components diagram (Figure 3).

Figure 2: Input interaction components diagram.

Figure 3: Output interaction components diagram.
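The mode, modality and medium relations can be sketched as two lookup tables. This is an illustrative Python sketch only: the vibrator, vibration and tactile chain comes from the text, while the other pairings and the helper names `mode_of` and `modes_supported` are assumptions made for the example.

```python
# Output side only. Each mode groups modalities; each modality needs a medium.
MODALITIES_BY_MODE = {
    "visual": {"text", "image"},
    "auditory": {"speech synthesis"},
    "tactile": {"vibration"},
}
MEDIA_BY_MODALITY = {
    "text": {"screen"},                # assumed pairing
    "image": {"screen"},               # assumed pairing
    "speech synthesis": {"speaker"},   # assumed pairing
    "vibration": {"vibrator"},         # pairing given in the text
}


def mode_of(modality):
    """Return the mode through which a modality is perceived."""
    for mode, modalities in MODALITIES_BY_MODE.items():
        if modality in modalities:
            return mode
    raise KeyError(modality)


def modes_supported(available_media):
    """Return the modes that have at least one modality expressible on the given media."""
    return {
        mode
        for mode, modalities in MODALITIES_BY_MODE.items()
        if any(MEDIA_BY_MODALITY[m] & available_media for m in modalities)
    }
```

For instance, a device that only has a vibrator supports the tactile mode alone, whereas a screen plus a speaker supports the visual and auditory modes.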

4 PROPOSED INTERACTION ONTOLOGY

Ontology-based systems are becoming more and

more popular due to the inference and reasoning

capabilities that ontological knowledge

representation provides. The ontology based

modeling can be used for various purposes such as

personalization and adaptation. In this work, we use

ontology to model the interaction components and

craftswoman characteristics in order to support

adaptive application development. The proposed

ontology is called interaction ontology.

Methontology (Fernandez, 1997) enables the

construction of ontologies at the knowledge level.

We model in the same ontology the interface

parameters (mode, modality and medium) and the

craftswoman profile. We focus more on the

characteristics describing her abilities to use the

interaction modalities. However, other user

characteristics can be considered, such as skills,

preferences, education level and motivation. Some

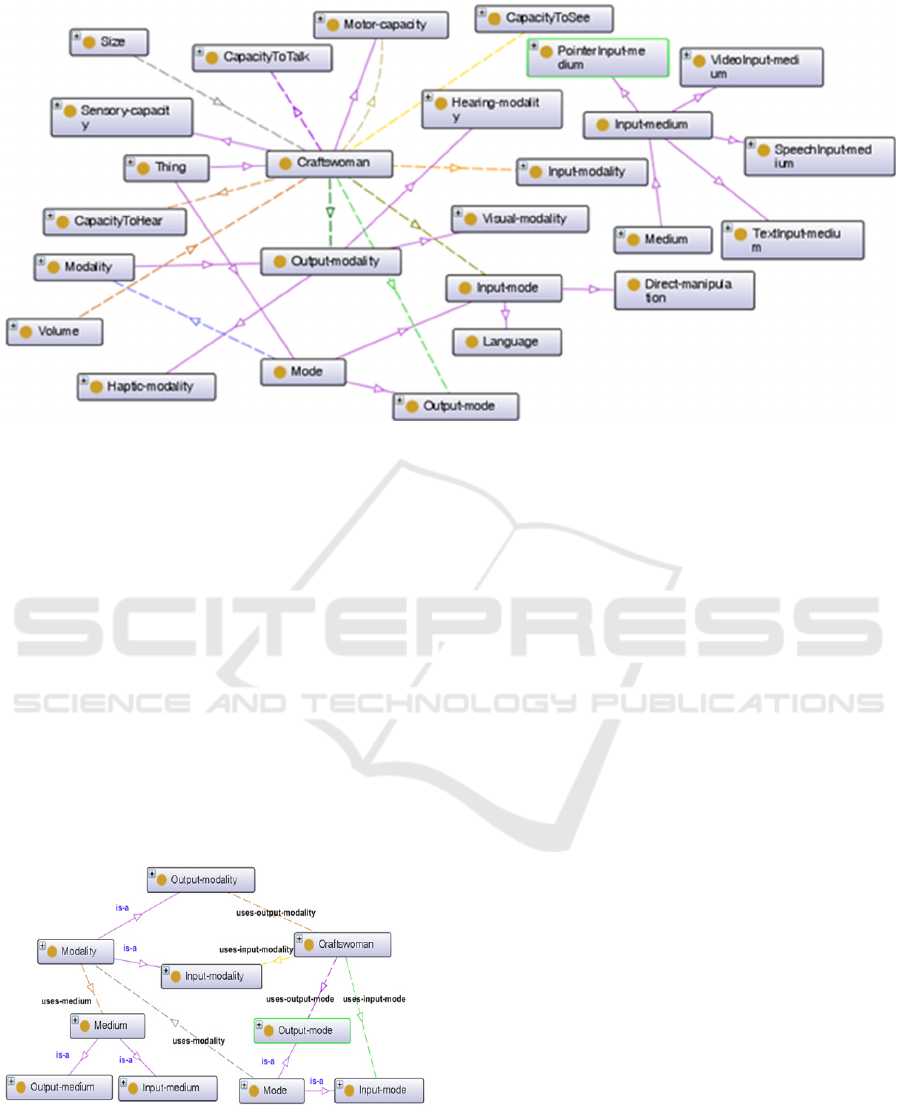

ontology relevant concepts are presented in table1.

The ontology was implemented using the Protégé

framework. Figure 4 represents semantic

relationships between the different interaction

ontology concepts.

Table 1: Interaction concepts.

| Interaction concept | Description |
| --- | --- |
| Craftswoman | Person who interacts with the system and who is described by a profile |
| Input-mode | The way information is introduced (language, direct manipulation) |
| Output-mode | The way information is perceived (visual, hearing, tactile) |
| Input-modality | The way information is introduced by the user using a specific medium (speech, writing, selection, etc.) |
| Output-modality | The information structure as it is perceived by the user (text, graph, image, vibration, etc.) |
| Input-medium | Physical device to introduce information (keyboard, mouse, microphone, etc.) |
| Output-medium | Physical device to receive information (screen, projector, loudspeaker, vibrator) |
| Size | Parameter “Size” of a modality (text size) |
| Volume | Parameter “Volume” of a modality (video volume) |

Object properties are defined to relate the core concept Craftswoman to the concepts mode and modality; they specify the modes and modalities (input and output) that can be used by a given woman (see Figure 5):

• uses-input-mode (from Craftswoman to Input-mode): specifies the input modes

• uses-output-mode (from Craftswoman to Output-mode): specifies the output modes

• uses-input-modality (from Craftswoman to Input-modality): specifies the input modalities

• uses-output-modality (from Craftswoman to Output-modality): specifies the output modalities


Figure 4: Relationships between different concepts of Interaction ontology.

The ontological approach to interaction modelling was motivated by the possibility of reasoning on the model. Reasoning makes it possible to check the consistency of the ontology. Furthermore, it helps to deduce (infer) high-level data from a set of captured raw data (low-level data).

The interaction ontology is used to adapt the user interface. We describe in the following section how the user characteristics (e.g., ability to see, to talk, to move, etc.) and the interface parameters (e.g., writing, speech, text, image, etc.) are used to generate the adaptive user interface through ontology-based reasoning.

4.1 Reasoning on the Interaction Ontology

Figure 5: Object properties of interaction ontology.

Ontology-based reasoning is used in our work to derive new information from both OWL-defined concepts and properties, and adaptation rules. Based on the woman’s characteristics (physical abilities), the values of the interface parameters (input and output modalities) are defined. An adaptive interface composed of these modalities is then generated, for example for introducing a new product or for displaying a list of clay providers.

The adaptation rules are defined and edited in the interaction ontology using a dedicated rule language, the Semantic Web Rule Language (SWRL). Several inference engines can infer knowledge from OWL; we use Pellet (Sirin, 2007) as the reasoning engine.

SWRL rules are implication rules with the following syntax (Mun, 2011):

antecedent → consequent

Both antecedent and consequent are composed of a set of concepts and properties. Each adaptation rule has the form: if a set of woman characteristics holds (antecedent), then a set of interface parameters follows (consequent). The rules capture the relationships between woman characteristics and interface parameters; for example, a good visual ability implies that the visual interaction mode is possible for a given woman.

We adopt the following hypothesis: any craftswoman is able to interact with the system; that is, she can use at least one input modality and one output modality. Every woman should have some abilities enabling her to interact with the system. Nevertheless, a motor or visual impairment may make the use of an input or output modality impossible. For example, if a woman's visual impairment is severe, then the visual mode cannot be used. Similarly, a hearing or visual weakness requires changing some modality properties, such as increasing the audio volume or the text size. Note that multiple modalities can be used by a woman to perform a task. In this case redundancy is accepted, for example the combination of visual and speech modalities for presenting information.

Physical capabilities considered are: capacities

to see, to hear, to move and to talk, where:

• capacities to see and to hear, are used to derive

output modalities

• capacities to move, to see and to talk, are used to

derive input modalities

To measure these capabilities, we have defined

four capacity levels: Good, Moderate, Low and

Severe, where:

• Good and Moderate levels present no constraint

for using corresponding interaction modalities;

all the available modalities can be used

• Low level requires certain changes of modality

properties (change the volume or the size)

• Severe level is the lowest level that requires total

elimination of corresponding modality, e.g.

eliminate the speech modality for a mute woman
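The effect of the four capacity levels can be summarized as a small decision function. The following Python sketch is an illustration under assumed names (`Level`, `Action`, `action_for`); it is not part of the ontology itself.

```python
from enum import Enum


class Level(Enum):
    GOOD = "Good"
    MODERATE = "Moderate"
    LOW = "Low"
    SEVERE = "Severe"


class Action(Enum):
    USE_AS_IS = "use the modality unchanged"
    ADJUST = "use the modality with changed properties (size or volume)"
    ELIMINATE = "do not use the modality"


def action_for(level):
    """Map a capacity level to its effect on the corresponding modalities."""
    if level is Level.SEVERE:
        return Action.ELIMINATE
    if level is Level.LOW:
        return Action.ADJUST
    # Good and Moderate impose no constraint.
    return Action.USE_AS_IS
```

For example, a Severe capacity to talk eliminates the speech modality, while a Low capacity to see keeps the visual modalities but calls for a larger size.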

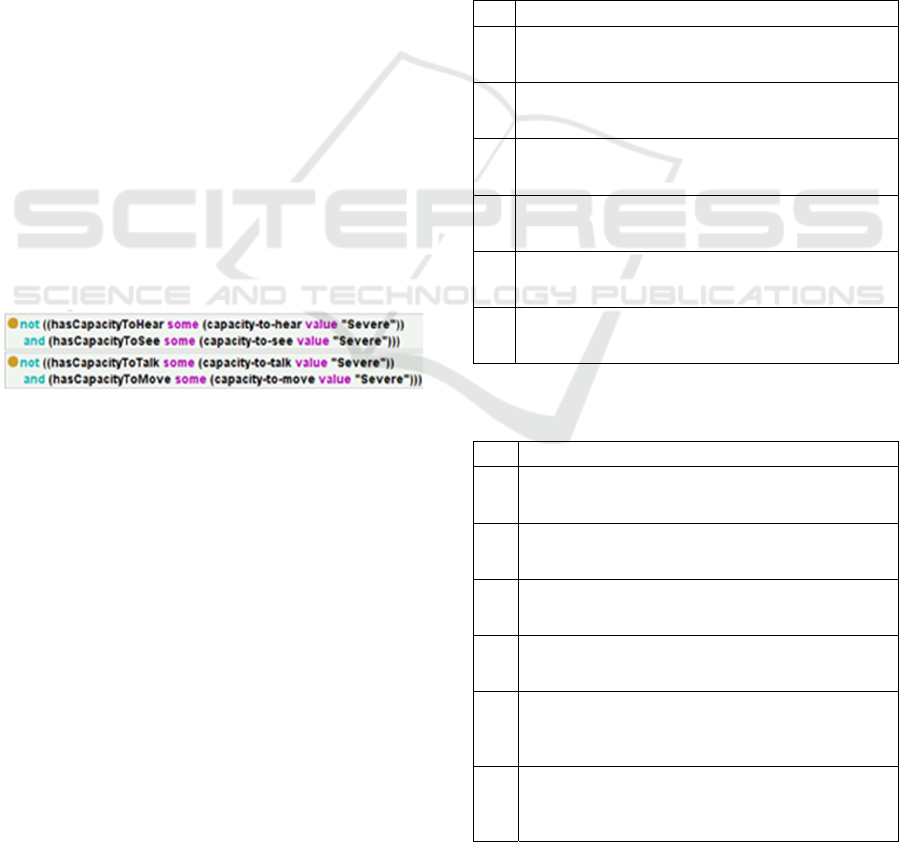

To enforce our hypothesis, we have added two restrictions. The first states that a woman’s capacity levels to hear and to see cannot both be severe; therefore she can use at least one output modality. The second states that a woman’s capacity levels to talk and to move cannot both be severe; therefore she can use at least one input modality (Figure 6).

Figure 6: Example of restrictions edited within ontology.
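The two restrictions amount to a simple validity check on a profile. Below is a minimal Python sketch, assuming capacity levels are passed as the strings "Good", "Moderate", "Low" or "Severe"; the function name `satisfies_hypothesis` is hypothetical, and the ontology expresses the same constraint as OWL restrictions rather than as code.

```python
SEVERE = "Severe"


def satisfies_hypothesis(see, hear, talk, move):
    """Check that a profile leaves at least one output and one input modality."""
    # Restriction 1: seeing and hearing cannot both be severe (output side).
    has_output = not (see == SEVERE and hear == SEVERE)
    # Restriction 2: talking and moving cannot both be severe (input side).
    has_input = not (talk == SEVERE and move == SEVERE)
    return has_output and has_input
```

A profile that violates either restriction would be reported as inconsistent by the reasoner; in this sketch it simply yields `False`.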

Example of rule:

Craftswoman(?x), hasCapacityToSee(?x, Low) -> uses-modality(?x, textModality), size(textModality, “High”)

This rule expresses that, if a woman has a visual impairment (her capacity to see is low), then the text modality is used with an increased size.

Examples of the specified SWRL rules are given in Table 2 and Table 3. The adaptation rules in Table 2 derive the input modalities that can be used by a specific woman. A woman without a severe motor disability (i.e., her capacity to move is different from the Severe value) can use all the available direct-manipulation modalities (writing, selection, pointing…) (Rules 1, 2 and 3). Likewise, the speech modalities (discourse) can be used by any woman except a mute woman (her capacity to talk is equal to the Severe value) (Rules 4, 5 and 6). The rules in Table 3 derive the output modalities. A woman without a severe visual disability (her capacity to see is different from the Severe value) can use all the available visual modalities (display: text, graph, image…) (Rules 1, 2 and 3). However, the modality size is increased for a woman who has weak sight (Rule 3). Analogous rules are defined for the hearing modalities; the sound modalities (speech synthesis, ringing, beep) cannot be used by deaf women (Rules 4, 5 and 6).

After the SWRL rules are created, they can be

tested and checked for inconsistencies using the

reasoning tool.

Table 2: Excerpt of SWRL rules (to infer input modalities).

| No. | SWRL Expression |
| --- | --- |
| 1 | Craftswoman(?x), DirectManipulation-modality(?z), hasCapacityToMove(?x, ?y), capacity-to-move(?y, "Good") -> uses-modality-input(?x, ?z) |
| 2 | Craftswoman(?x), DirectManipulation-modality(?z), hasCapacityToMove(?x, ?y), capacity-to-move(?y, "Moderate") -> uses-modality-input(?x, ?z) |
| 3 | Craftswoman(?x), DirectManipulation-modality(?z), hasCapacityToMove(?x, ?y), capacity-to-move(?y, "Low") -> uses-modality-input(?x, ?z) |
| 4 | Craftswoman(?x), Speech(?z), hasCapacityToTalk(?x, ?y), capacity-to-talk(?y, "Good") -> uses-modality-input(?x, ?z) |
| 5 | Craftswoman(?x), Speech(?z), hasCapacityToTalk(?x, ?y), capacity-to-talk(?y, "Moderate") -> uses-modality-input(?x, ?z) |
| 6 | Craftswoman(?x), Speech(?z), hasCapacityToTalk(?x, ?y), capacity-to-talk(?y, "Low") -> uses-modality-input(?x, ?z) |

Table 3: Excerpt of SWRL rules used within the Ontology (for inferring the output modalities).

| No. | SWRL Expression |
| --- | --- |
| 1 | Craftswoman(?x), Visual(?z), hasCapacityToSee(?x, ?y), capacity-to-see(?y, "Good") -> size(?z, "Medium"), uses-mode-output(?x, ?z) |
| 2 | Craftswoman(?x), Visual(?z), hasCapacityToSee(?x, ?y), capacity-to-see(?y, "Moderate") -> size(?z, "Medium"), uses-mode-output(?x, ?z) |
| 3 | Craftswoman(?x), Visual-modality(?z), hasCapacityToSee(?x, ?y), capacity-to-see(?y, "Low") -> use-mode-output(?x, ?z), size(?z, "High") |
| 4 | Craftswoman(?x), Hearing(?z), hasCapacityToHear(?x, ?y), capacity-to-hear(?y, "Good") -> volume(?z, "Medium"), uses-mode-output(?x, ?z) |
| 5 | Craftswoman(?x), Hearing(?z), hasCapacityToHear(?x, ?y), volume(?n, "Medium"), capacity-to-hear(?y, "Moderate") -> volume(?z, "Medium"), uses-mode-output(?x, ?z) |
| 6 | Craftswoman(?x), Hearing-modality(?z), hasCapacityToHear(?x, ?y), capacity-to-hear(?y, "Low") -> use-modality-output(?x, ?z), volume(?z, "High") |


4.2 Illustrative Example

We present in the following an example of the application of these rules. Amel is a mute craftswoman. She suffers from a visual weakness and as a result finds it difficult to read small text. However, Amel’s hearing and motor abilities are good (Figure 7). Using Pellet and the associated SWRL rule set, and taking Amel’s disabilities into consideration, we can infer the input and output modalities that Amel is able to use (yellow part in Figure 7). Indeed, Amel can use the visual modalities (text, graphs ...) and the direct manipulation modalities (selection), but she cannot use the speech modality because her disability does not allow it. The size of the visual modalities (text, graph) is increased to the maximum value (High) because her visual capacity is low. Figure 8 shows an adapted interface for Amel; it allows her to introduce a new product using the selection modality.
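The inference described for Amel can be approximated outside the reasoner. The following Python sketch paraphrases the rules of Tables 2 and 3 as plain conditionals; it does not use Pellet or SWRL, and the function name `infer_modalities`, the dictionary keys and the modality class labels are assumptions made for the illustration.

```python
SEVERE, LOW = "Severe", "Low"


def infer_modalities(profile):
    """Return (input modality classes, output modality classes with settings).

    `profile` maps "see", "hear", "move" and "talk" to one of
    "Good", "Moderate", "Low" or "Severe".
    """
    inputs = set()
    # Table 2, rules 1-3: any non-severe capacity to move allows direct manipulation.
    if profile["move"] != SEVERE:
        inputs.add("direct-manipulation")
    # Table 2, rules 4-6: any non-severe capacity to talk allows speech input.
    if profile["talk"] != SEVERE:
        inputs.add("speech")

    outputs = {}
    # Table 3, rules 1-3: visual output, with a larger size when sight is low.
    if profile["see"] != SEVERE:
        outputs["visual"] = {"size": "High" if profile["see"] == LOW else "Medium"}
    # Table 3, rules 4-6: sound output, with a higher volume when hearing is low.
    if profile["hear"] != SEVERE:
        outputs["hearing"] = {"volume": "High" if profile["hear"] == LOW else "Medium"}
    return inputs, outputs


# Amel: mute, weak sight, good hearing and motor abilities.
amel = {"see": "Low", "hear": "Good", "move": "Good", "talk": "Severe"}
amel_inputs, amel_outputs = infer_modalities(amel)
```

Under these assumptions, `amel_inputs` contains only direct manipulation, and `amel_outputs` contains the visual modalities with size High, which matches the result described above, together with the hearing modalities at Medium volume.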

5 CONCLUSIONS

In this paper, we have presented a method to adapt the user interface based on ontology modeling and reasoning. User characteristics and interface parameters are combined through the execution of adaptation rules to generate an adaptive interface according to the user profile. The proposal can be extended by considering other aspects of the user (e.g., preferences, expertise, motivation, etc.) and of the interface (e.g., density of information, luminosity, etc.). As future work, we plan to generalize this work and extend the ontology to take into account other user and interface characteristics.

Figure 7: Reasoning on interaction ontology.

Figure 8: Example of adapted interface generation.

REFERENCES

Chandrasekaran, B., Josephson, J.R and Benjamins, V.R.,

1999. What are Ontologies, and Why do we Need

them?. Intelligent Systems and Their Applications,

IEEE, 14, pp. 20-26.

Clerckx, T., Vandervelpen, C. and Coninx, K., 2007.

Task-based design and runtime support for multimodal

user interface distribution. In Proceedings of

Engineering Interactive Systems.

Corcho, O., Fernández-López, M., Gómez-Pérez, A. and

López-Cima, A., 2005. Building Legal Ontologies

with Methontology and Webode. In Law and the

Semantic Web, Benjamins, V.R., Casanovas, P.,

Breuker, J. and Gangemi, A. (eds.). Springer, pp. 142-

157.

Cristani, M. and Cuel, R., 2005. A Survey on Ontology

Creation Methodologies. International Journal on

Semantic Web and Information Systems, vol. 1, No. 2,

49 – 69.

Dey, A. K., 2001. Understanding and using context.

Personal and Ubiquitous Computing Journal, 5(1), pp.

5-7.

Fernandez, M., Gómez-Pérez, A. and Juristo, N. 1997.

METHONTOLOGY: From ontological art towards

ontological engineering. In Spring Symposium Series

on Ontological Engineering, Stanford, AAAI Press.

Fluit, C., Sabou, M. and van Harmelen, F., 2002. Ontology-based Information Visualisation. In Visualising the Semantic Web, Springer Verlag.

Gruber, T.R., 1995. Toward principles for the design of

ontologies used for knowledge sharing. International

Journal of Human-Computer Studies 43 (5/6), pp.

907–928.

Hatala, M., Wakkary, R. and Kalantari, L., 2005. Rules

and ontologies in support of real-time ubiquitous

application. Web Semantics:Science, Services and

Agents on the World Wide Web, vol. 3, pp. 5-22.

Heckmann, D., Schwartz, T., Brandherm, B., Schmitz, M. and von Wilamowitz-Moellendorff, M., 2005. GUMO - the General User Model Ontology. In 10th International Conference on User Modeling (UM'2005), Edinburgh, UK, pp. 428-432.


Heckmann, D., Schwarzkopf, E., Mori, J., Dengler, D. and

Kroner, A., 2007. The User Model and Context

Ontology GUMO revisited for future Web 2.0

Extensions, vol. Contexts and Ontologies:

Representation and Reasoning, pp. 37-46.

Horrocks, I., Patel-Schneider, P., Boley, H., Tabet, S., Grosof, B. and Dean, M., 2010. SWRL: A Semantic Web Rule Language Combining OWL and RuleML.

Jameson, A., 2003. Adaptive Interfaces and Agents. In

Jacko, J. A. & Sears, A., (eds.), the human-computer

interaction handbook: Fundamentals, evolving

technologies and emerging applications pp. 305–330,

Mahwah, NJ: Erlbaum.

Janev, V. and Vraneš, S., 2011. Applicability Assessment

of Semantic Web Technologies. Information

Processing & Management, 47, pp. 507-517.

Karray, F., Alemzadeh, M. and Saleh, J.A., 2008. Human-

computer interaction: Overview on state of the art.

International Journal on Smart, 1(1), pp.137-159.

Maedche, A., 2002. Ontology learning for the semantic

web. Journal of Intelligent Systems, IEEE 16 (2), pp.

72-79.

Maedche, A., Staab, S., 2000. Mining Ontologies from

Text. EKAW, pp. 189-202.

Maynard, D., Funk, A. and Peters, W., 2009. Sprat: a tool

for automatic semantic pattern based ontology

population. In Proc. of the Int. Conf. for Digital

Libraries and the Semantic Web.

Mun, D. and Ramani, K., 2011. Knowledge-based part

similarity measurement utilizing ontology and multi-

criteria decision making technique. Advanced

Engineering Informatics 25, pp. 119-130.

Nigay, L. and Coutaz, J., 1993. A Design Space for

Multimodal Systems: Concurrent Processing and Data

Fusion. In Proceedings of the SIGCHI Conference on

Human Factors in Computing Systems (CHI), pp.

172–178. New York, NY, USA: ACM.

Noy, N.F., Sintek, M., Decker, S., Crubezy, M.,

Fergerson, R.W. and Musen, M.A., 2001. Creating

Semantic Web contents with Protégé-2000. IEEE

Intelligent Systems, 16 (2), pp. 60-71.

Noy, N. F. and Hafner, C., 1997. The State of the Art in

Ontology Design, A Survey and Comparative Review.

AI Magazine, 18 (3), pp. 53-74.

Poveda Villalon, M., Suárez-Figueroa, M.C., García-

Castro, R. and Gómez-Pérez, A., 2010. A Context

Ontology for Mobile Environments. In Workshop on

Context, Information and Ontologies, CIAO 2010 Co-

located with EKAW, Lisbon, Portugal.

Preuveneers, D., Van Den Bergh, J., Wagelaar, D.,

Georges, A., Rigole, P., Clerckx, T., Berbers, Y.,

Coninx, K., Jonckers, V. and De Bosschere, K., 2004.

Towards an Extensible Context Ontology for Ambient

Intelligence.

Razmerita, L., Angehrn, A. and Maedche, A., 2003.

Ontology-Based User Modeling for Knowledge

Management Systems. User Modeling, pp. 148-148.

Simonin, J. and Carbonell, N., 2007. Interfaces adaptatives : adaptation dynamique à l’utilisateur courant. In Saleh, I. and Regottaz, D., Interfaces numériques, Paris, Hermès Lavoisier (coll. Information, hypermédias et communication).

Sirin, E., Parsia, B., Grau, B.C., Kalyanpur, A. and Katz,

Y., 2007. Pellet: A Practical Owl-Dl Reasoner. Web

Semantics: science, services and agents on the World

Wide Web, 5, pp. 51-53.

Skillen, K.L., Chen, L., Nugent, C.D., Donnelly, M.P.,

Burns,W., Solheim, I., 2013. Using SWRL and

ontological reasoning for the personalization of

context-aware assistive services. PETRA 48, pp. 1-

48:8.

Skillen, K.L., Chen, L., Nugent, C.D., Donnelly, M.P.,

Burns,W., Solheim, I., 2014. Ontological user

modelling and semantic rule-based reasoning for

personalisation of help-on-demand services in

pervasive environments. Future Generation Computer

Systems 34, pp. 97–109.

Skillen, K.L., Chen, L., Nugent, C.D., Donnelly, M.P., Solheim, I., 2012a. A user profile ontology based approach for assisting people with dementia in mobile environments. In Engineering in Medicine and Biology Society (EMBC), 2012 Annual International Conference of the IEEE, pp. 6390–6393.

Skillen, K.L., Chen, L., Nugent, C.D., Donnelly, M.P., Burns, W., Solheim, I., 2012b. Ontological User Profile Modeling for Context-Aware Application Personalization. In Ubiquitous Computing and Ambient Intelligence, ser. L.N. in Computer Science. Springer Berlin Heidelberg, vol. 7656, pp. 261–268.
