Application of Myo Armband System to Control a Robot Interface

Gabriel Doretto Morais, Leonardo C. Neves, Andrey A. Masiero and Maria Claudia F. Castro

Centro Universitário da FEI, Av. Humberto Alencar Castelo Branco 3972, São Bernardo do Campo, Brazil

Keywords:

Myoelectric Signal, PeopleBot, Human Machine Interface, Myo, Inertial Measurement Unit.

Abstract:

This paper discusses the use of myoelectric signals to control electronic devices, with the aim of developing a digital control interface based on the Myo Gesture Control Armband. Through this interface it is possible to control the movement of a robot and its interaction with the environment. Here the robot is PeopleBot, a robot designed for home needs. We assess how well devices can be controlled with myoelectric signals and an Inertial Measurement Unit (IMU), and we discuss the advantages and disadvantages of working with this technology.

1 INTRODUCTION

Nowadays, it is impossible to imagine a world without technology. Mobile devices such as mobile phones and tablets have steadily spread into many fields of society, including health, transportation, communication and security. As technology and humans become more closely integrated, flexible and bendable electronic equipment such as roll-up displays and wearable devices has attracted public attention (Gwon et al., 2011; Futurecom, 2015). Some of these devices operate through myoelectric signals, like the Myo Gesture Control Armband. Myo is a wearable device that uses this concept to provide a platform for controlling other electronic devices with preset gestures.

The myoelectric signal is a biological signal produced by the electrical activity of a muscle during contraction, and it can be detected with electrodes applied to the skin. These signals are well suited to movement analysis and to controlling some electronic devices. Each movement activates the muscles in a different way, and this produces a distinct signal pattern that can be mapped to a device command. The technique could be used in the rehabilitation of people with motor disorders and to control prostheses, robotic devices, biomechanical systems and human machine interfaces (HMI) (da Silva, 2010).

The Myo system has been used as a control platform for TedCube (Caballero, 2015). According to the author, a doctor in an operating room who needs to rotate a 3D image to examine some detail has to ask an assistant to do it. With Myo, the doctor can manipulate the system's interface and inspect any detail without touching anything.

Gesture recognition systems are currently available on many different platforms. The most popular is Microsoft Kinect, now in its second version. This device works with two cameras and an infrared sensor. Its 3D tracking relies on a technology called Time of Flight (TOF), which uses light pulses. The recognized gestures then trigger actions in software (Canaltech, 2014).

TedCube also uses Microsoft Kinect for gesture recognition, but Kinect has several disadvantages compared with Myo. TedCube is operated through gesture commands, and a system based on Myo gestures allows a simpler approach without the barriers that Microsoft Kinect can face. Myo can also capture small movements more precisely and is less intrusive in a surgical environment, which makes it safer (Caballero, 2014).

Another application of the Myo system is monitoring the progression of Parkinson's disease. Doctors usually rely on three exams: Positron Emission Tomography (PET), Magnetic Resonance Imaging (MRI) and DaTSCAN (a nuclear medicine exam that produces functional images of the brain). These exams are expensive and must be performed in specialized imaging centers. Tremedic (Song, 2014) monitors the patient in real time through the Myo device, comparing current electromyography data with earlier recordings to identify disease symptoms. This allows treatment with levodopa (an antiparkinsonian medicine) to be analyzed in order to confirm or contest the diagnosis.

Morais, G., Neves, L., Masiero, A. and Castro, M.

Application of Myo Armband System to Control a Robot Interface.

DOI: 10.5220/0005706302270231

In Proceedings of the 9th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2016) - Volume 4: BIOSIGNALS, pages 227-231

ISBN: 978-989-758-170-0

Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


Compared with other gesture recognition systems, Myo is at a disadvantage in determining depth. The device's algorithm works with data from eight electromyographic sensors, complemented by inertial sensors (an accelerometer and a gyroscope). This information cannot locate an object in a 3D world the way Microsoft Kinect does. It does, however, make it possible to work with other devices such as smartphones, personal computers, wearable gadgets and even robots (Bullok, 2015).

In this paper, we focus on applying the Myo Armband to the development of a control interface for the PeopleBot robot. This kind of application has many possible uses, such as reaching biologically contaminated or radioactive places, or any place that would be harmful to human beings. Another use is controlling a robot that helps elderly or disabled people with tasks involving motion or picking up and moving objects. We chose PeopleBot because its hardware is designed for human-robot interaction problems: it has wheels to move and a gripper to pick up objects from the top of a table.

To integrate Myo and PeopleBot, we use ROS (Robot Operating System), which forwards the commands interpreted from Myo to the robot controller. This study shows the advantages and disadvantages of working with myoelectric control devices, and what improvements are needed to make them better HMIs for helping people in daily life.

2 METHODS AND DEVELOPMENT

First, it was necessary to study the general operation of Myo. Initially, we used the Myo Connect software developed by Thalmic Labs. It handles sensor events and can control applications such as media players, slide presentation programs and online games. Scripts written in the Lua programming language served as the interface (Labs, 2015).

To identify movements, the Myo software needs a classifier that determines the best combination of the eight sensors. To show how this works, Figure 1 presents raw sensor data for movements ranging from wave-out to wave-in, using the combination of sensors that best classifies the movements. The graphs show an inverse relationship between two sensors located over the main muscles involved in the movement. In the last graph, the electrodes have minimal influence on the movement. The remaining graphs are too broad to support any relevant conclusion.
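The paper does not describe how Myo's classifier combines the electrodes. The sketch below only illustrates how an inverse relationship between two electrodes, like the one visible in Figure 1, could be quantified. It computes a moving root-mean-square (RMS) envelope for each channel and then the Pearson correlation between the two envelopes. The window length, the function names and the synthetic signals are assumptions for illustration, not the authors' analysis.

```python
import math


def rms_envelope(signal, window):
    """Moving root-mean-square envelope of one EMG channel (one value per full window)."""
    return [
        math.sqrt(sum(s * s for s in signal[i:i + window]) / window)
        for i in range(len(signal) - window + 1)
    ]


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def synthetic_pair(n=200):
    """Hypothetical test data: channel A's activity ramps up while channel B's ramps down.

    The alternating sign mimics the zero-mean, oscillating shape of raw EMG.
    """
    carrier = [1 if t % 2 == 0 else -1 for t in range(n)]
    chan_a = [c * t / n for t, c in enumerate(carrier)]
    chan_b = [c * (1 - t / n) for t, c in enumerate(carrier)]
    return chan_a, chan_b


if __name__ == "__main__":
    a, b = synthetic_pair()
    r = pearson(rms_envelope(a, 10), rms_envelope(b, 10))
    print(f"envelope correlation: {r:.3f}")  # strongly negative: an inverse relationship
```

Correlating envelopes rather than raw samples matters here. Raw EMG oscillates around zero, so the muscle-activation trend only becomes visible after rectification and smoothing.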

Figure 1: Relationship between electrode data and muscle activation for a movement.

For the application on the ROS platform, Myo Connect and the programs developed as Lua scripts were not sufficient to establish communication between the computer and the robot. Several factors were involved, such as incompatibility between operating systems. The script therefore had to access the raw sensor information (hexadecimal data) directly and use it to control the robot.

A way also had to be found for the Myo platform, developed for the Windows and Mac OS operating systems, to communicate with ROS, which runs on Linux and controls the robot. Communicating across operating systems requires a network of at least two computers, and this option was abandoned because of its low flexibility and possible communication delays. Instead, the Myo sensor data are received directly on the same platform as the robot.

For signal acquisition, we used the myo-raw script developed by Danny Zhu and released under the MIT license (Dzhu, 2015). It reads raw data from the armband sensors and lets us visualize and manipulate that data to control PeopleBot (Figure 2).
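The text describes the raw sensor data as hexadecimal information that is later published as eight-bit integers. The sketch below shows one way such bytes could be turned into signed 8-bit readings with Python's standard struct module. The packet layout is an assumption: 16 bytes holding two consecutive samples of the eight EMG channels. The paper does not state this layout, and myo-raw may decode packets differently. The function name decode_emg_packet is hypothetical.

```python
import struct

N_CHANNELS = 8  # Myo has eight EMG electrodes


def decode_emg_packet(payload: bytes) -> list:
    """Decode one raw EMG packet into two samples of eight signed 8-bit readings.

    Assumed layout (hypothetical, not taken from the paper or from myo-raw):
    16 bytes = sample 1 (channels 0-7) followed by sample 2 (channels 0-7).
    """
    expected = 2 * N_CHANNELS
    if len(payload) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(payload)}")
    values = struct.unpack(f"<{expected}b", payload)  # 'b' = signed char (int8)
    return [list(values[:N_CHANNELS]), list(values[N_CHANNELS:])]


if __name__ == "__main__":
    raw = bytes.fromhex("01 02 03 04 05 06 07 08 ff fe fd fc fb fa f9 80")
    print(decode_emg_packet(raw))
    # [[1, 2, 3, 4, 5, 6, 7, 8], [-1, -2, -3, -4, -5, -6, -7, -128]]
```

Reading each byte as signed is the key detail: 0xff is -1 and 0x80 is -128. Treating the bytes as unsigned would turn every negative EMG excursion into a large positive value.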

Figure 2: PeopleBot (TelepresenceRobots.com, 2015).

BIOSIGNALS 2016 - 9th International Conference on Bio-inspired Systems and Signal Processing


2.1 Publishing Myo’s Information

With raw data, a simple algorithm was written to get

then and publish to the robot with the action to be exe-

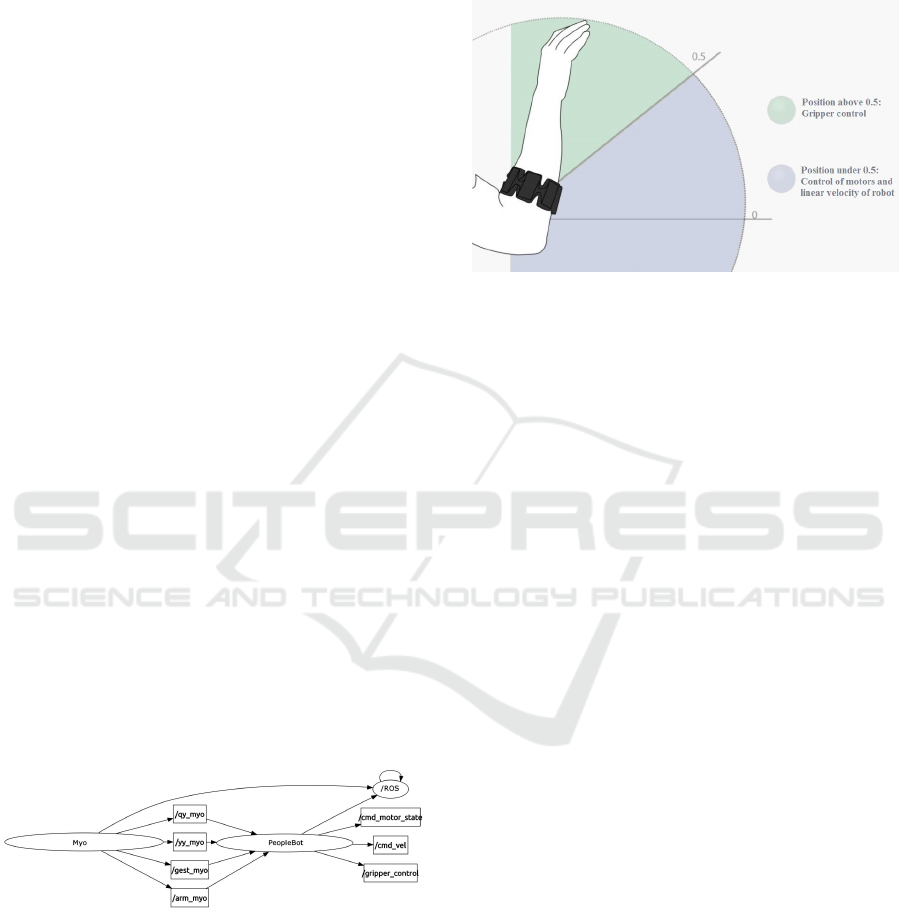

cuted. Figure 3 shows the system architecture. There

are four algorithms (or topics, as they are called on

ROS) to communicate with the robot:

• The topic arm-myo publishes sensor data as eight-bit integers. It was created so that the algorithm works regardless of which arm the armband is worn on or how it is positioned;

• To obtain the robot's linear speed, the qy-myo topic was written. It converts the orientation reported by the armband's built-in IMU (pitch, roll and yaw) into values for the speed publisher. To control the linear speed along PeopleBot's x axis, a method was defined to normalize the pitch, roll and yaw data (the arm's orientation in space). Only the pitch is published on qy-myo, as the x component of a 3D vector (x, 0, 0), with a maximum value of 1 and a minimum of -1. When the value passes through 0, the robot stops;

• yy-myo makes it possible to combine gestures; without it, control would be limited to five gestures. Combining the IMU data with gesture recognition gave greater flexibility and extended the range of control. The technique pairs each gesture with the position of the arm. The topic publishes 64-bit floating-point data to keep the script aware of the arm's angular position;

• To send the original gestures preset by Thalmic Labs, the topic gest-myo publishes them to the robot as eight-bit integers.
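The paper does not give the normalization formula used by qy-myo, so the following is a sketch under stated assumptions. It assumes the IMU reports orientation as a unit quaternion (w, x, y, z). It extracts pitch with the standard Z-Y-X (yaw-pitch-roll) Euler conversion, divides by π/2 to map the result into [-1, 1], and returns the (x, 0, 0) vector described above. In ROS, a velocity like this would typically go into the linear.x field of a geometry_msgs/Twist message, but the text does not say which message type the authors used. Both function names and the dead_zone option are hypothetical.

```python
import math


def quaternion_to_pitch(w, x, y, z):
    """Pitch (radians) of a unit quaternion, Z-Y-X (yaw-pitch-roll) Euler convention."""
    s = 2.0 * (w * y - z * x)
    s = max(-1.0, min(1.0, s))  # guard asin against rounding just outside [-1, 1]
    return math.asin(s)


def pitch_to_linear_command(pitch, dead_zone=0.0):
    """Normalize pitch from [-pi/2, pi/2] to [-1, 1] and return the vector (x, 0, 0).

    x = 1 and x = -1 are the extreme speeds in the two directions along the
    robot's x axis; x = 0 stops the robot. dead_zone is a hypothetical option
    (not in the paper): |x| below it is forced to 0 so small tremors do not move the robot.
    """
    x = max(-1.0, min(1.0, pitch / (math.pi / 2)))
    if abs(x) < dead_zone:
        x = 0.0
    return (x, 0.0, 0.0)


if __name__ == "__main__":
    theta = math.radians(30)  # arm pitched 30 degrees
    q = (math.cos(theta / 2), 0.0, math.sin(theta / 2), 0.0)  # rotation about the y axis
    print(pitch_to_linear_command(quaternion_to_pitch(*q)))  # x is about 0.333
```

With this mapping, a 30° pitch gives one third of full speed, and a level arm gives 0, which stops the robot as described for qy-myo.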

Figure 3: Operating flowchart between nodes of Myo and PeopleBot.

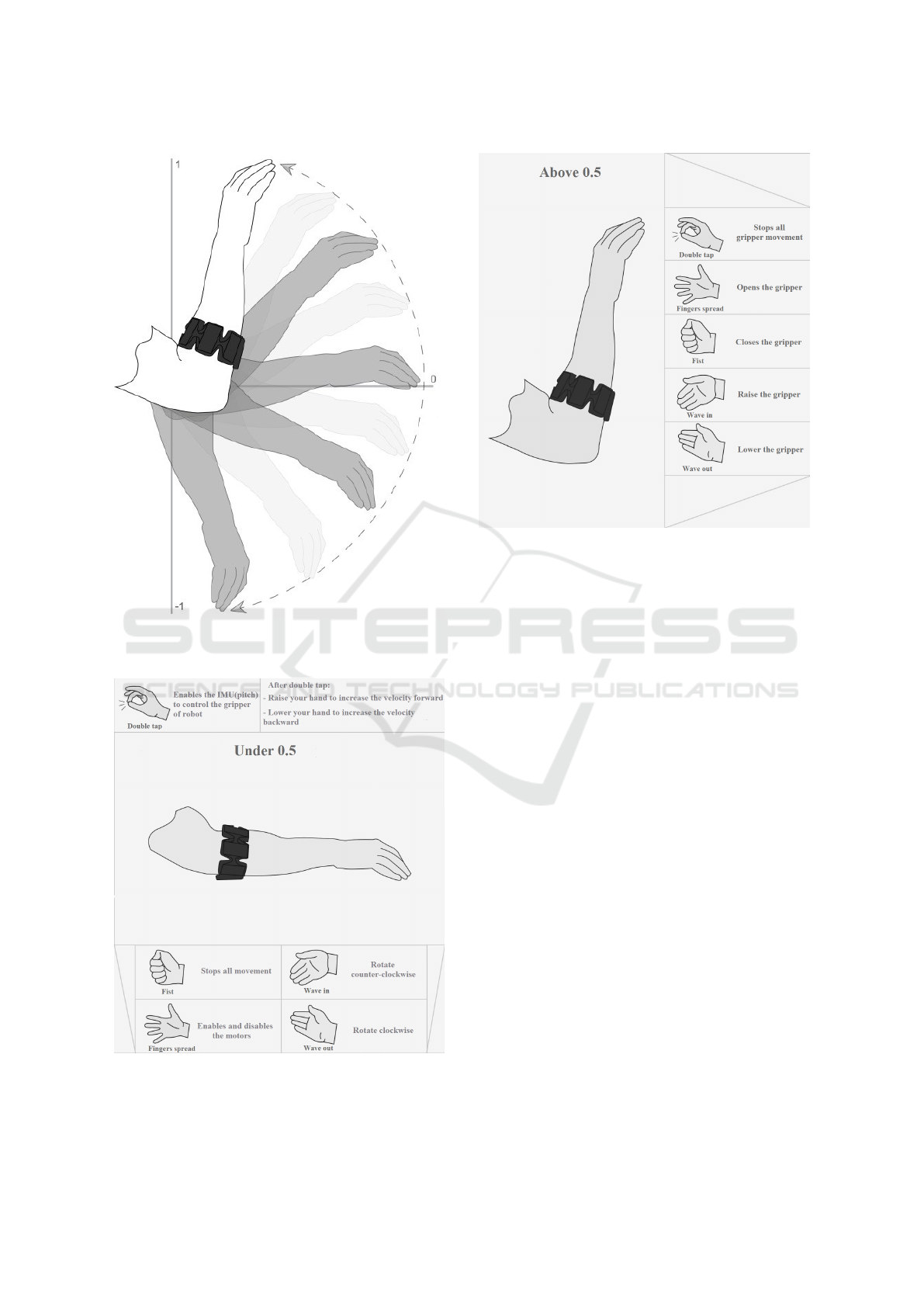

2.2 Robot Control

Before the robot can be controlled, Myo must be synchronized, using the standard synchronization gesture from Thalmic Labs. The robot can move only when its motors are enabled, so we assigned motor activation to the fingers-spread gesture performed with an angular value below 0.5. All control of the robot depends on the angular position of the user's arm (Figure 4). Above 0.5, the gestures control the robot's gripper; below 0.5, they control the robot's movement.

Figure 4: Illustration of the control area.

The robot's movement is controlled through the following gestures (Figure 6):

• Fingers spread – enables and disables the motors;

• Fist – stops the robot;

• Wave in – turns the robot clockwise;

• Wave out – turns the robot anticlockwise;

• Double tap – enables linear movement of the

robot. Figure 5 shows how to move the arm to

control the robot velocity.

Gripper movements:

• Fingers spread – opens the gripper;

• Fist – closes the gripper;

• Wave in – raises the gripper;

• Wave out – lowers the gripper;

• Double tap – stops all movements.
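The two lists above amount to a two-level lookup, which is what yy-myo makes possible. The arm's angular position selects the control area (gripper above 0.5, movement below 0.5), and the preset gesture selects the action within that area. A minimal sketch of this lookup follows. The enum, the action names and the function select_action are hypothetical labels, not the authors' code. The text does not say what happens at exactly 0.5; the sketch assigns that value to the movement area, which is an arbitrary choice.

```python
from enum import Enum


class Gesture(Enum):
    """The five preset gestures recognized by the Myo armband."""
    FINGERS_SPREAD = "fingers_spread"
    FIST = "fist"
    WAVE_IN = "wave_in"
    WAVE_OUT = "wave_out"
    DOUBLE_TAP = "double_tap"


ANGLE_THRESHOLD = 0.5  # arm angle above it: gripper area; otherwise: movement area

# Hypothetical action names for the movement control area (Figure 6).
MOVEMENT_ACTIONS = {
    Gesture.FINGERS_SPREAD: "toggle_motors",
    Gesture.FIST: "stop_robot",
    Gesture.WAVE_IN: "turn_clockwise",
    Gesture.WAVE_OUT: "turn_anticlockwise",
    Gesture.DOUBLE_TAP: "enable_linear_motion",
}

# Hypothetical action names for the gripper control area (Figure 7).
GRIPPER_ACTIONS = {
    Gesture.FINGERS_SPREAD: "open_gripper",
    Gesture.FIST: "close_gripper",
    Gesture.WAVE_IN: "raise_gripper",
    Gesture.WAVE_OUT: "lower_gripper",
    Gesture.DOUBLE_TAP: "stop_all",
}


def select_action(gesture, arm_angle):
    """Combine a gesture with the arm's angular position to pick one action.

    An angle of exactly 0.5 falls in the movement area here (arbitrary choice).
    """
    table = GRIPPER_ACTIONS if arm_angle > ANGLE_THRESHOLD else MOVEMENT_ACTIONS
    return table[gesture]


if __name__ == "__main__":
    print(select_action(Gesture.FIST, 0.2))  # stop_robot
    print(select_action(Gesture.FIST, 0.8))  # close_gripper
```

Five gestures in two angular areas give ten distinct commands. This is how combining the IMU with gesture recognition works around the five-gesture limit.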

3 RESULTS

By studying PeopleBot's operation and the ROS platform, and combining this with our knowledge of Myo, we created a functional interface to the robot based on myoelectric technology. PeopleBot could be controlled to perform movements and grasps.

One advantage of controlling computational applications with Myo is its very fast control response. In addition, the gyroscope, the accelerometer and access to the raw data make it possible to create combinations that overcome some of the limitations imposed by the small number of gestures. The device is also very practical and comfortable for the user.



Figure 5: Example of arm motion to control the linear velocity.

Figure 6: Illustration of how to control PeopleBot's motors and movements.

Figure 7: Illustration of gripper control.

To analyze how precisely the software detects movements, we ran tests with five volunteers. Before each test, the volunteers performed the gestures a few times to become familiar with the movements and with the timing of each gesture, which requires an interval of one second between gestures. After this training, each user performed each gesture 20 times in a random sequence. The mean classification rate for distinguishing among the movements was 93.6%. This indicates that the hardware acquires myoelectric signals efficiently, helped by the feedback the user receives from interacting with the robot.
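The paper reports only the mean rate, not per-volunteer results. The arithmetic below assumes that each of the five volunteers performed each of the five preset gestures (fingers spread, fist, wave in, wave out, double tap) 20 times. Under that assumption, there are 100 trials per volunteer and 500 in total, and 93.6% corresponds to 468 correct classifications. When every volunteer has the same number of trials, the mean of the per-volunteer rates equals the pooled rate. The function name is hypothetical.

```python
N_VOLUNTEERS = 5
N_GESTURES = 5    # assumption: the five preset gestures listed in Section 2.2
REPETITIONS = 20  # each gesture performed 20 times in random order


def mean_classification_rate(correct_per_volunteer, trials_per_volunteer):
    """Average of the per-volunteer rates (correct classifications / trials)."""
    rates = [c / t for c, t in zip(correct_per_volunteer, trials_per_volunteer)]
    return sum(rates) / len(rates)


if __name__ == "__main__":
    trials_each = N_GESTURES * REPETITIONS     # 100 trials per volunteer
    total_trials = N_VOLUNTEERS * trials_each  # 500 trials overall
    reported_rate = 0.936
    print(trials_each, total_trials, round(reported_rate * total_trials))  # 100 500 468
```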

While creating and studying the interface, we found some difficulties. Few gestures were available for the number of tasks the robot performs, so the gesture options offered by Myo are limited for controlling more complex devices. We also noted that continuous use of the equipment can cause muscle discomfort, because repeating the gestures leads to fatigue, and this also causes problems in recognizing the data. Apparently, muscle fatigue either changes the myoelectric signal produced for a gesture or simply makes the gesture harder for the algorithm to recognize. Physical activity before using Myo also seems to make gestures harder to recognize.



4 CONCLUSION

This work is just one example of the wide range of things the Myo armband can do. Despite the device's "young age", it is very useful and provides excellent signal acquisition and processing. New gestures may also be implemented in the future, giving the user a wider range of options. Further research should provide new information about the continuous use of myoelectric detection devices, their effects on the user and their limitations.

As a next step, 15 more volunteers will help us create a new gesture recognition system that uses the same Myo hardware with a different classifier algorithm. The goal is to add more gestures and then apply the system to other applications.

ACKNOWLEDGEMENTS

This study was produced with FEI, CAPES and

FAPESP funding.

REFERENCES

Bullok, J. (2015). Thalmic myo for musical applications. http://jamiebullock.com/post/108636815624/thalmic-myo-for-musical-applications. Accessed: 2015-03-20.

Caballero (2015). Tedcas. http://www.tedcas.com. Accessed: 2015-04-10.

Caballero, G. (2014). Myo armband + tedcas. https://www.youtube.com/watch?v=ngcVtQQ4V2Q. Accessed: 2015-03-18.

Canaltech (2014). How kinect works. http://canaltech.com.br/o-que-e/kinect/Como-funciona-o-Kinect/. Accessed: 2015-03-01.

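da Silva, G. A. (2010). Análise multivariada do sinal mioelétrico para caracterização do torque isométrico do músculo quadríceps da coxa [Multivariate analysis of the myoelectric signal for characterization of the isometric torque of the thigh quadriceps muscle].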

Dzhu (2015). Myo raw. https://github.com/dzhu/myo-raw. Accessed: 2015-05-06.

Futurecom (2015). Entenda os wearable devices [Understanding wearable devices]. http://www.futurecom.com.br/blog/entenda-os-wearable-devices-os-dispositivos-vestiveis/. Accessed: 2015-09-01.

Gwon, H., Kim, H.-S., Lee, K. U., Seo, D.-H., Park, Y. C., Lee, Y.-S., Ahn, B. T., and Kang, K. (2011). Flexible energy storage devices based on graphene paper. Energy Environ. Sci., 4:1277–1283.

Labs, T. (2015). Myo armband. https://www.thalmic.com/myo/. Accessed: 2015-03-01.

Song, K. and Parsons, M. C. J. (2014). Tremedic: Objectively track Parkinson's tremors. http://hackthenorth.challengepost.com/submissions/27017-tremedic. Accessed: 2015-03-20.

TelepresenceRobots.com (2015). Peoplebot p3-dx. http://telepresencerobots.com/robots/adept-mobile-robots-llc peoplebot-p3-dx. Accessed: 2015-09-01.

