Automatic Image Colorization based on Feature Lines

Van Nguyen¹, Vicky Sintunata¹ and Terumasa Aoki¹,²

¹Graduate School of Information Sciences (GSIS), Tohoku University, Aramaki Aza Aoba 6-3-9, Aoba-ku, Sendai, Japan

²New Industry Hatchery Center (NICHe), Tohoku University, Aramaki Aza Aoba 6-6-10, Aoba-ku, Sendai, Japan

Keywords: Automatic Colorization, Color Lines, Feature Lines.

Abstract: Automatic image colorization is an attractive research topic in image processing. The most crucial task in this field is designing an algorithm that selects appropriate colors from the reference image(s) and propagates them to the target image. In other words, we need to determine whether two pixels, one in the reference image and one in the target image, should have similar colors. Most previous methods rely on local feature matching algorithms; however, they still have defects and are time-consuming. In this paper, we present a novel automatic image colorization method based on Feature Lines. Feature Lines is our new concept, which extends the concept of Color Lines. It models the distribution of pixel feature vectors as being elongated around lines, so that pixels with similar features can be assembled into one feature line. With this technique, pixels in the reference and target images can be matched precisely. The experimental results show that our proposed method achieves smoother, more even and more natural color assignment than previous methods.

1 INTRODUCTION

Image colorization aims to find a good solution for adding colors to monochrome images. A novel solution in this field provides a solid basis for colorizing large amounts of old images and videos. Depending on the degree of user involvement in the colorization process, existing image colorization methods can be classified into two main categories: interactive (or manual) colorization (Pang et al., 2014), (Levin et al., 2004), (Marki et al., 2014) and automatic colorization (Gupta et al., 2012), (Yang et al., 2014), (Irony et al., 2005), (Chia et al., 2011). All interactive methods require users to supply many color cues. (Pang et al., 2014) expands the provided scribbles with a self-similarity algorithm, in which patches similar to those with provided color cues are identified by searching inside a pre-defined window. (Levin et al., 2004) infers the color of gray pixels from the provided cues by optimizing the difference between a particular gray-scale pixel and the known-color pixels around it within a window. The work of (Marki et al., 2014) uses geodesic distance to transfer color from user-provided strokes to other pixels in the image and concentrates on simulating a water painting application, which produces smooth and artistic colorized images. Although the gray images are impressively colorized, these user-assisted methods demand plentiful color scribbles, so colorizing an image requires considerable and careful effort from users. Recent automatic colorization approaches, in turn, require robust feature vectors to achieve high precision when matching reference and target pixels, which comes at a high computational cost. Our goal is to focus only on automatic colorization and to use only standard features in the pixel matching scheme to overcome this obstacle.

In RGB color space, it is a non-trivial problem to determine whether two pixels have similar color. The concept of Color Lines (Omer and Werman, 2004) exploits the information of the pixels in RGB space to build a Color Lines model in which pixels having similar color are elongated around their representative color lines. This means that if two pixels are known to belong to the same color line, they are probably similar in their RGB channels. In other words, the line around which the pixels are elongated is their representation in RGB color space, and we can project color from the color line onto them with only a trivial discrepancy.

Expanding the concept of Color Lines, we intend

to introduce a method of visualizing pixel feature as

a vector of three feature components. Following this

approach, each of input images (including reference

and target image) can be converted into ”feature im-

age” where the feature components are considered as

126

Nguyen, V., Sintunata, V. and Aoki, T.

Automatic Image Colorization based on Feature Lines.

DOI: 10.5220/0005676401260133

In Proceedings of the 11th Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2016) - Volume 4: VISAPP, pages 126-133

ISBN: 978-989-758-175-5

Copyright

c

2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

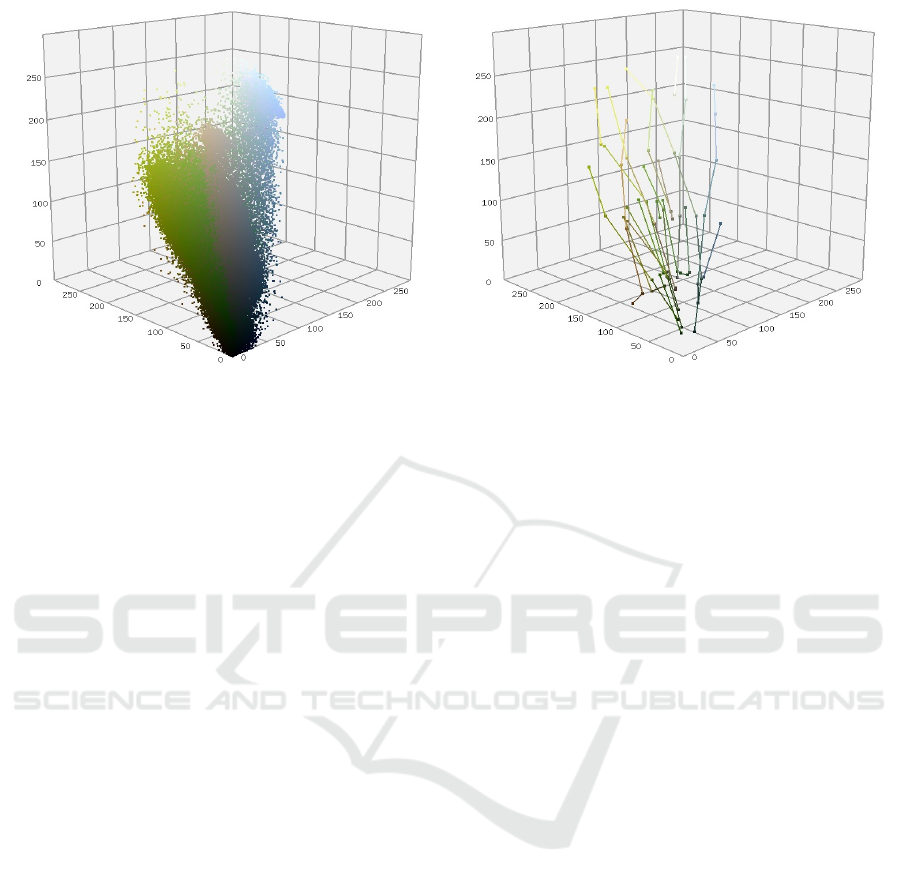

(a)

(b)

Figure 1: (a) Pixel RGB histogram, (b) Color Lines model.

the RGB channels corresponding to each pixel. When

applying Color Lines concept to feature images, we

can get the Feature Lines model of each input image.

These feature lines are plausibly precise feature rep-

resentation for the pixels which elongate to them.

In this paper, we introduce a new method of automatic colorization based on the concept of Feature Lines. Our method uses very simple features, namely pixel intensity, standard deviation and neighbor standard deviation, to classify pixels and match them between the reference and target images. By applying the Feature Lines concept to the target and reference feature images, we obtain their corresponding Feature Lines models, which serve as the inputs of our matching scheme. The following sections discuss in detail the algorithm for constructing Feature Lines models from input images and its application to automatic colorization.

2 PREVIOUS WORK

2.1 Existing Automatic Image Colorization Algorithms

Automatic image colorization is a rich field in which many works have been introduced. (Irony et al., 2005) proposed a method for colorizing gray images using image and feature space voting, where the Discrete Cosine Transform (DCT) coefficients of a k by k neighborhood around each pixel were used as its feature vector. The authors go further by switching to a low-dimensional feature subspace using Principal Component Analysis (PCA) and projections to overcome the problem of mismatching reference and target segments. The proposed algorithm performs well on input images with a low number of textures and might fail when images contain diverse details.

Another work in this field is the colorization system based on internet image search (Chia et al., 2011), in which the authors concentrate on introducing a software system for colorizing monochrome images with less user effort. The proposed system requires the user to provide a label for each target image, used as the search keyword, and a segmented image, used as a filter to obtain the most appropriate reference image from the internet. This method exploits the rich resources of the internet. However, it might not work well when users cannot provide a concrete keyword for the search step.

In the state-of-the-art automatic colorization method (Gupta et al., 2012), the authors use a bundle of feature vectors for each superpixel, including Gabor, SURF, standard deviation and intensity features, in a cascade feature matching scheme. However, the feature vectors are extracted as the average over all pixels of each superpixel in the target and reference images respectively, and these vectors are then used as the representation of every pixel belonging to that superpixel. Therefore, the results of the feature extraction process are affected by the accuracy of the superpixel extraction algorithm. A superpixel is a square-shaped group of neighboring pixels of a specific size, so many superpixels end up gathering dozens of stray pixels, especially in images containing many different small details or at pixels near the edges of objects. Moreover, when extracting SURF features for an arbitrary pixel, the required keypoint parameters are missing or are left at their default values. These shortcomings influence the precision of the superpixel feature computation, leading to inaccuracy in the feature matching algorithm.

In the most recent automatic colorization method (Yang et al., 2014), reference and target images are condensed into epitomes using a hidden mapping scheme. This approach performs efficiently on images with few textures and becomes less effective when the input images contain a large amount of detail. Besides, although the learning process for epitomic image generation uses only a single type of feature, robust feature vectors including YIQ channels, dense SIFT features and the rotation-invariant Local Binary Pattern (LBP) are still required by the matching algorithm.

These approaches demand a bundle of robust feature vectors to achieve high-precision matching results, and implementing them consequently incurs a high computational cost.

2.2 Color Lines Representation

Color Lines was introduced as a model for pixel classification in RGB color space. It is based on the observation that two pixels having similar color should be close to each other when plotted in the RGB coordinate system. By exploiting the geometrical properties of pixel RGB components, (Omer and Werman, 2004) builds a concrete clustering scheme to classify image pixels into color clusters. Each cluster is represented by connected points that form line segments as its skeleton, the so-called "color line".

The Color Lines algorithm first slices the RGB histogram using hemispheres of equally spaced radii centered at the origin O. Each histogram slice is the collection of all pixels whose RGB norms lie between the lower and upper hemisphere surfaces bounding it. The maxima points are determined as the pixels that intersect the upper hemisphere surface; that is, to define the color points of each color line in the corresponding histogram slice, the algorithm simply picks the pixels with maximal RGB norms. Then, Euclidean distances and a threshold are used to join pairs of color points from neighboring histogram slices into a color line skeleton. A Gaussian is fitted to each skeleton and used as the classification model that assigns pixels to the corresponding color line cluster. Finally, from the RGB histogram shown in Figure 1a we obtain the Color Lines representation model of the image depicted in Figure 1b. Using this model, the RGB coordinates of pixels can be recovered by projecting color from the color lines they belong to.
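The slicing and linking steps described above can be illustrated with a short Python sketch. It is a simplification under stated assumptions rather than the algorithm of (Omer and Werman, 2004): the function names are hypothetical, each non-empty slice is summarized by a single color point (the mean color of the slice), and the Gaussian fitting step is omitted.

```python
import math

def slice_by_norm(pixels, num_slices, max_norm=math.sqrt(3) * 255):
    """Group RGB pixels into shells of equal thickness centered at the origin."""
    width = max_norm / num_slices
    slices = [[] for _ in range(num_slices)]
    for rgb in pixels:
        norm = math.sqrt(sum(c * c for c in rgb))
        # Clamp so that a pixel lying exactly on the outer boundary stays in the last slice.
        index = min(int(norm / width), num_slices - 1)
        slices[index].append(rgb)
    return slices

def slice_color_points(slices):
    """Assumption: one color point per non-empty slice, taken as the slice's mean color."""
    points = []
    for members in slices:
        if members:
            n = len(members)
            points.append(tuple(sum(p[k] for p in members) / n for k in range(3)))
        else:
            points.append(None)
    return points

def link_skeleton(points, threshold):
    """Join color points of neighboring slices when their distance is within the threshold."""
    segments = []
    for a, b in zip(points, points[1:]):
        if a is not None and b is not None and math.dist(a, b) <= threshold:
            segments.append((a, b))
    return segments
```

A real image usually yields several color points per slice, so a fuller implementation would track one chain of segments per color line instead of a single chain.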

Figure 2: Feature image.

3 FEATURE LINES

3.1 Feature Lines Concept Intuition

The Color Lines representation shows its advantages in pixel color classification by introducing a concrete clustering algorithm based only on the RGB components of image pixels. Since our work addresses the problem of image colorization, the idea of exploiting the achievements of Color Lines came to us intuitively. The target and reference images are chosen to be semantically similar, so they should have similar Color Lines models. In other words, if we can determine the Color Lines model of the reference image, then the Color Lines model of the colorized target will be alike. Besides, because the target and reference images are similar, there exist corresponding image areas in each of them that have similar characteristics or feature vectors.

The use of pre-extracted image segments in the feature matching scheme has been introduced in many previous approaches. The method of (Gupta et al., 2012) extracts feature vectors of superpixels and feeds them to a cascade feature matching process, while (Chia et al., 2011) and (Irony et al., 2005) require segmented images before performing further steps. Although there are many efficient methods for extracting superpixels (Achanta et al., 2012), (Levinshtein et al., 2009) or segments (Comaniciu et al., 2002), all of them are affected by the spatial constraint of pixels in the image matrix. This might be a weakness in feature extraction, since pixels at different positions of an image can have similar neighborhood and texture characteristics.

Our approach moves in the opposite direction of

those familiar processes. We directly segment image

based on the local features of its pixels to avoid the

double erroneous short-coming in clustering and fea-

VISAPP 2016 - International Conference on Computer Vision Theory and Applications

128

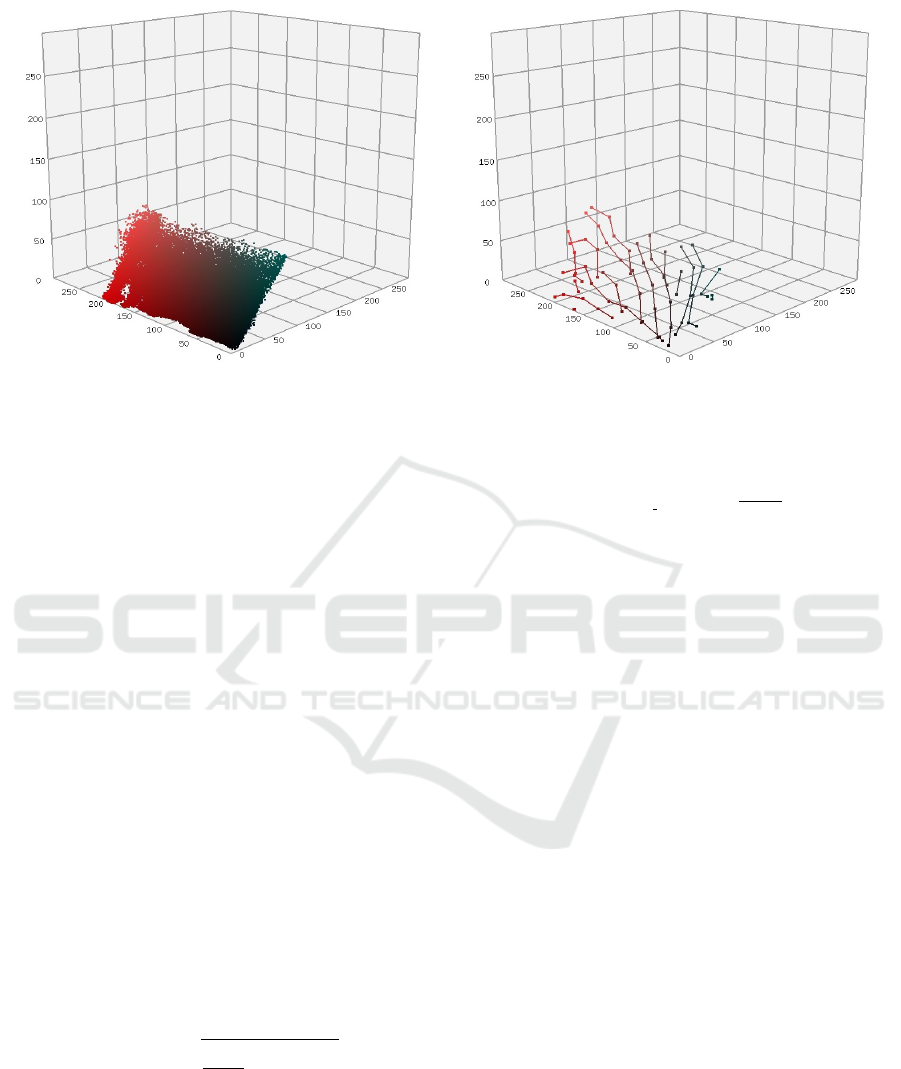

(a) (b)

Figure 3: (a) Pixel feature coordinates, (b) Feature Lines representation.

ture extracting process. Unearthing this motivation,

we think about applying the concept of Color Lines

for feature components instead of three RGB color

channels. We construct three-dimensional feature co-

ordinates for each pixel by using one dimensional fea-

ture vectors. With three types of pixel feature, we can

exactly mimic RGB color elements as in Color Lines

model. Consequently, the output model of this pro-

cess is the feature model corresponding to each input

image so-called ”Feature Lines” model.

3.2 Feature Coordinates Generation

Feature Lines is the extension of the Color Lines concept to a three-dimensional feature space. For this purpose, each pixel needs to be assigned a three-dimensional feature coordinate. In this paper, we use intensity, standard deviation and neighbor standard deviation as the three components of the pixel feature coordinates.

Intensity Feature. We use the gray-scale value as the first component of the pixel feature coordinate.

Standard Deviation Feature. The second feature component is the pixel standard deviation, which is calculated using the expression below.

$$f_{\mathrm{deviation}} = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2} \qquad (1)$$

where $n$ is the total number of pixels in the neighboring window of the current pixel, $x_i$ is the intensity of the $i$-th pixel in that window and $\bar{x}$ is the mean of the pixel intensities in the window.

Neighbor Standard Deviation Feature. The neighbor deviation combines the standard deviation of a pixel with the mean of the pixel standard deviations in a square window around it.

$$f_{\mathrm{deviation\ neighbor}} = \frac{d + \bar{d}}{2} \qquad (2)$$

where $d$ is the deviation of the current pixel and $\bar{d}$ denotes the mean of the neighboring pixel deviations.
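The three feature components of Equations (1) and (2) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, windows are clipped at the image borders, and $\bar{d}$ is averaged over the whole window including the current pixel. The default radii correspond to the 3x3 and 9x9 windows reported in Section 5.

```python
import math

def window_values(image, row, col, radius):
    """Collect the values of a square window, clipped to the image borders (assumption)."""
    rows, cols = len(image), len(image[0])
    return [
        image[r][c]
        for r in range(max(0, row - radius), min(rows, row + radius + 1))
        for c in range(max(0, col - radius), min(cols, col + radius + 1))
    ]

def local_std(gray, row, col, radius=1):
    """Equation (1): sample standard deviation of the intensities in the window."""
    values = window_values(gray, row, col, radius)
    n = len(values)
    if n < 2:
        return 0.0
    mean = sum(values) / n
    return math.sqrt(sum((x - mean) ** 2 for x in values) / (n - 1))

def feature_coordinates(gray, std_radius=1, neighbor_radius=4):
    """Return, per pixel, the triple (intensity, deviation, neighbor deviation)."""
    rows, cols = len(gray), len(gray[0])
    std = [[local_std(gray, r, c, std_radius) for c in range(cols)] for r in range(rows)]
    features = []
    for r in range(rows):
        row_features = []
        for c in range(cols):
            neighbors = window_values(std, r, c, neighbor_radius)
            d_bar = sum(neighbors) / len(neighbors)
            # Equation (2): average of the pixel's own deviation and the window mean.
            row_features.append((gray[r][c], std[r][c], (std[r][c] + d_bar) / 2))
        features.append(row_features)
    return features
```

The input `gray` is a list of rows of gray-scale values; a flat image region yields zero for both deviation features, so only the intensity separates such pixels.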

3.3 Feature Lines Construction

After computing the feature elements, we construct three-dimensional coordinates for each pixel of the reference and target images. The outputs of this step are feature images, in which the feature coordinates are visualized as the RGB components of the pixels. To define the intensity of a feature image, we simply compute the mean of the three-dimensional coordinates of each pixel. Figure 3 depicts the three-dimensional feature coordinates (3a) and the Feature Lines model (3b) in RGB color space. When we apply the concept of Color Lines to feature images, the obtained models are feature-based pixel classifications in which pixels having similar features are elongated around the feature lines. In the following section, we discuss how the Feature Lines model is applied to the problem of automatic image colorization.
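As a rough illustration of the feature image step, the sketch below treats the three feature components as channels and computes the feature image intensity as their mean. The per-channel min-max scaling to the range [0, 255] is an assumption made here so that the channels are comparable to RGB values; the paper does not state how the components are scaled, and the function names are hypothetical.

```python
def scale_channels(features, top=255.0):
    """Min-max scale each feature channel to [0, top] (assumed normalization)."""
    flat = [pixel for row in features for pixel in row]
    lows = [min(p[k] for p in flat) for k in range(3)]
    highs = [max(p[k] for p in flat) for k in range(3)]

    def scale(value, k):
        span = highs[k] - lows[k]
        # A constant channel carries no information and is mapped to zero.
        return 0.0 if span == 0 else (value - lows[k]) / span * top

    return [[tuple(scale(p[k], k) for k in range(3)) for p in row] for row in features]

def feature_intensity(pixel):
    """Intensity of a feature image pixel: the mean of its three coordinates."""
    return sum(pixel) / 3.0
```

The scaled triples can then be passed to any Color Lines implementation in place of RGB values.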

4 AUTOMATIC COLORIZATION USING FEATURE LINES

4.1 Feature Lines Matching

The most crucial task of automatic image colorization

is to determine the corresponding color between ref-

Automatic Image Colorization based on Feature Lines

129

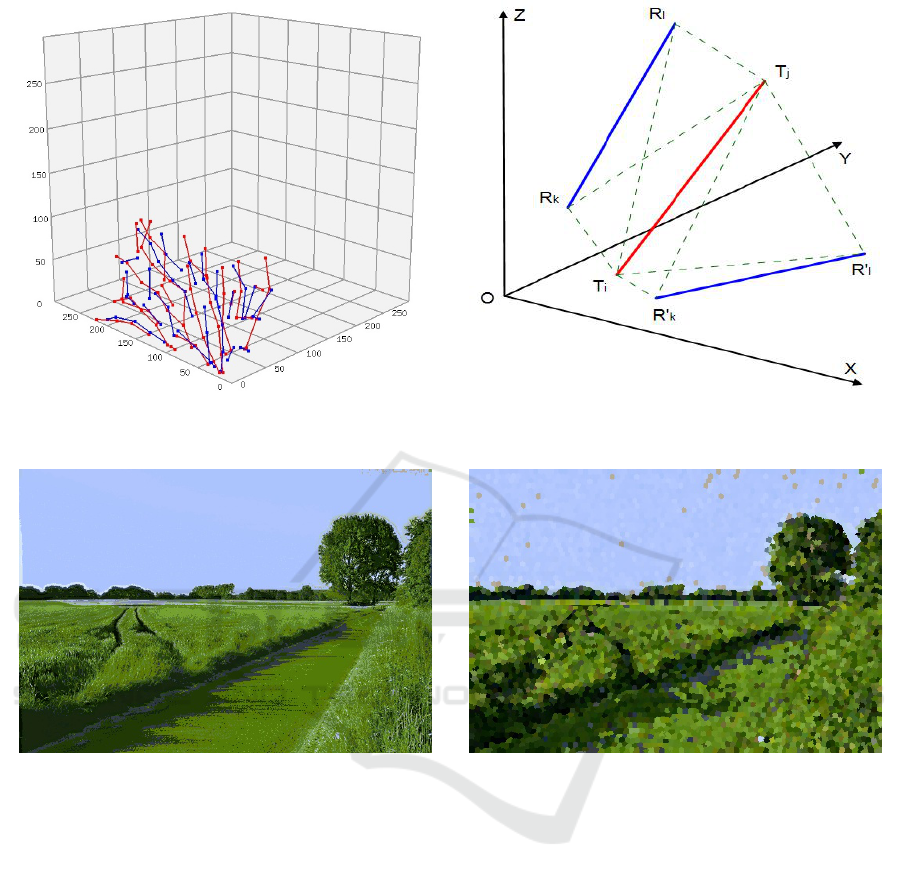

(a)

(b)

Figure 4: (a) Reference (red) and target (blue) Feature Lines models, (b) Feature Lines segment matching algorithm.

(a) (b)

Figure 5: (a) Mean color transfering for matched feature lines, (b) Mean color transfering for matched superpixels in (Gupta

et al., 2012).

erence and target image. The obvious methods are

to use the Euclidean distances between feature vec-

tors of reference and target pixels. However, this

method will suffer from the weakness of Euclidean

distance. Althought Euclidean measurement can pre-

serve the difference or similarity of magnitude be-

tween two given vectors, it is vunerable for extracting

the geometrical relation between them. Our method

will exploit the advantages of Feature Lines models

to strengthen matching scheme.

As a property inherited from the Color Lines concept, the Feature Lines model of an image is the feature representation of the pixels belonging to its lines. Since the reference and target images are similar, their Feature Lines models should also be alike. Figure 4a shows the Feature Lines models of the reference and target images in a single three-dimensional space. When we consider each pair of reference and target feature line segments, the best reference candidate should be the line closest to the target feature line. Besides, Feature Lines models are themselves clusterings of pixels in the three-dimensional feature space, so they are concrete inputs for the feature matching scheme. Figure 4b demonstrates our technique for defining corresponding feature lines between the input images. We simply compute the Euclidean distances between the coordinates of their feature points and use them as the decisive cost in the matching process. The formula is given below.

$$m_{\mathrm{cost}} = d_{T_i R_k} + d_{T_i R_l} + d_{T_j R_k} + d_{T_j R_l} \qquad (3)$$

where $d$ is the Euclidean distance between target and reference feature line segment points, $T_i$, $T_j$ denote the target feature line segment points and $R_k$, $R_l$ are the reference feature line segment points. The matched reference feature line segment should be the one with the lowest $m_{\mathrm{cost}}$.

Figure 6: (a) Zoom in Feature Lines based colorized image, (b) zoom in colorized image using (Gupta et al., 2012) method.
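Equation (3) and the lowest-cost rule can be sketched in Python as follows. The function names and the representation of a segment as a pair of three-dimensional endpoints are assumptions made for illustration.

```python
import math

def matching_cost(target_segment, reference_segment):
    """Equation (3): sum of the four endpoint-to-endpoint Euclidean distances."""
    t_i, t_j = target_segment
    r_k, r_l = reference_segment
    return (math.dist(t_i, r_k) + math.dist(t_i, r_l)
            + math.dist(t_j, r_k) + math.dist(t_j, r_l))

def match_segments(target_segments, reference_segments):
    """For each target segment, return the index of the lowest-cost reference segment."""
    return [
        min(range(len(reference_segments)),
            key=lambda idx: matching_cost(target, reference_segments[idx]))
        for target in target_segments
    ]
```

Because all four endpoint pairings are summed, the cost does not depend on the order in which the two endpoints of a segment are listed.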

Since the Color Lines concept shows its advantage in color preservation, we perform the subsequent steps using Color Lines as the model for propagating color from reference to target pixels.

4.2 Color Projection

We first construct Color Lines models for the reference and target images. We treat the target image as a "color" image whose R, G and B channels are all equal to the gray-scale value of each pixel. Constructing Color Lines for the target image therefore amounts to classifying its pixels based on their gray intensities. However, by applying the Color Lines concept, the output is expected to be more precise.

After obtaining the Color Lines models of the input images, we map each feature line segment to its corresponding color line by finding the pixels they have in common and keeping only the color line that contains most of the pixels belonging to the current feature line. Since this gives a 1:1 correspondence between color lines and feature lines, we can then directly determine the corresponding reference color line of the target pixels. The final step is to project color from the reference color line onto the target pixels, based on the reference color line they belong to and their gray-scale level.
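The two steps above can be sketched in Python. The majority-overlap mapping follows the description directly. The projection rule is an assumption made for illustration, since the paper does not give a formula: a color line is taken as a single segment between two RGB endpoints, and the projected color is the point on that segment whose mean RGB value equals the gray level of the target pixel, clamped to the segment. All names are hypothetical.

```python
def dominant_color_line(feature_line_pixels, color_lines):
    """Index of the color line that shares the most pixels with a feature line."""
    members = set(feature_line_pixels)
    return max(range(len(color_lines)),
               key=lambda idx: len(members & set(color_lines[idx])))

def project_color(gray, line_start, line_end):
    """Point on the color line segment whose mean RGB matches gray (assumed rule)."""
    low = sum(line_start) / 3.0
    high = sum(line_end) / 3.0
    if high == low:
        t = 0.0
    else:
        # Clamp so that gray levels outside the segment map to its nearest endpoint.
        t = min(1.0, max(0.0, (gray - low) / (high - low)))
    return tuple(a + t * (b - a) for a, b in zip(line_start, line_end))
```

Here pixels are identified by arbitrary hashable ids (for example flat indices), and each color line is given as the collection of ids of the pixels assigned to it.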

5 EXPERIMENTAL RESULTS

In the previous sections, we discussed our proposed algorithm, based on the Feature Lines concept, for tackling the problem of automatic image colorization. This section shows the results of applying our implementation to some input images and compares them with the method of (Gupta et al., 2012).

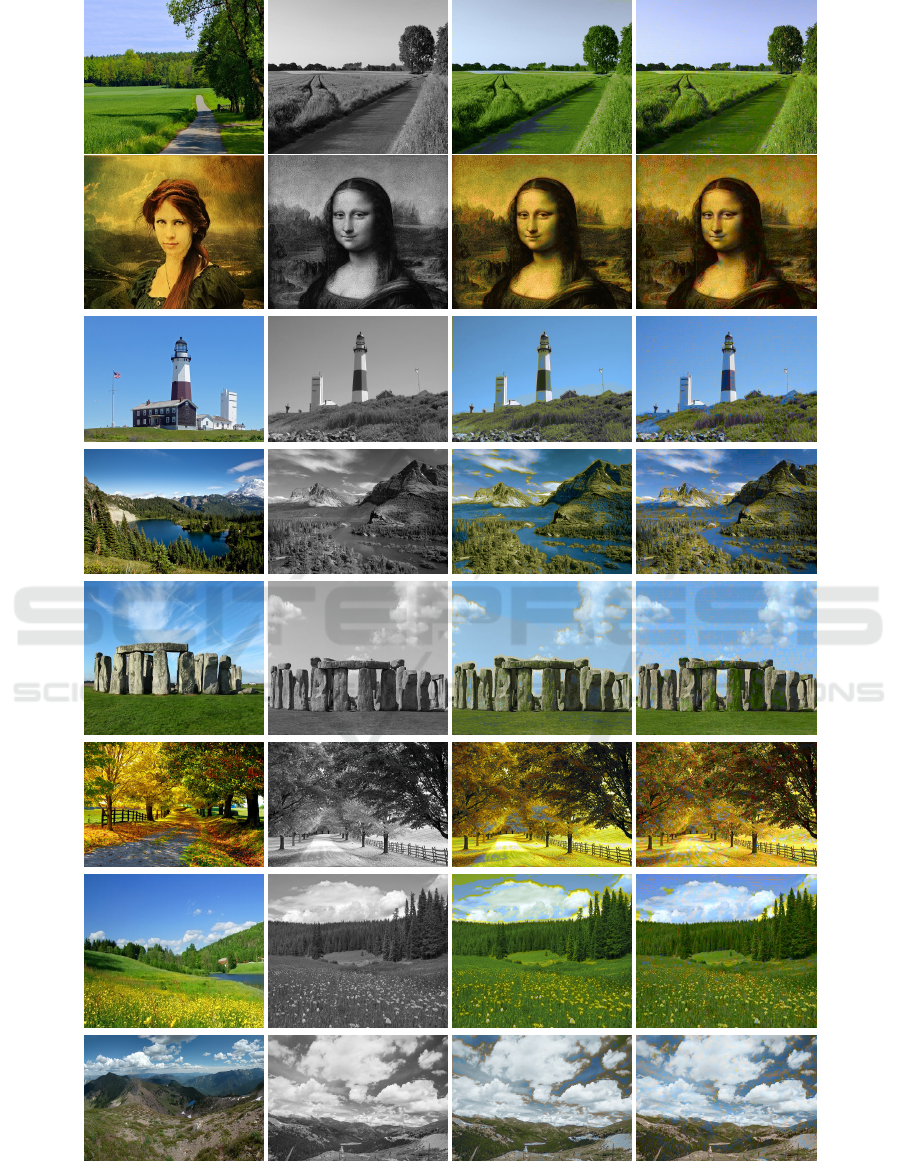

Figure 5 depicts the results of our Feature Lines based matching scheme compared with the cascade feature matching of the state-of-the-art superpixel-based method (Gupta et al., 2012). It is clear that, when only the mean color of corresponding patches is transferred, our matching result is more uniform and more even than that of the superpixel-based scheme. Moreover, the color propagation process exploits the Color Lines representation model, which smoothly and evenly projects color from the color line skeletons to the pixels belonging to them. Our Feature Lines based algorithm returns a smoother and more natural color assignment, without the abrupt color jumps that might occur in the superpixel-based approach, as shown in Figure 6. Figure 7 shows our experimental results and the comparison with the superpixel-based method. While the superpixel-based algorithm requires robust feature vectors such as SURF and Gabor features to achieve highly precise colorization, our experiments use only three basic pixel features: pixel intensity, the standard deviation within the 3x3 square window around each pixel, and the neighbor standard deviation with a 9x9 window. Despite these very limited inputs, our method generates better results, as depicted in Figure 7c, than the superpixel-based method shown in Figure 7d. Our method classifies pixels based on their feature vectors without any constraint on their spatial position in the image matrix. Therefore, pixel characteristics are preserved, and pixels having similar features gather in the same feature line.


Figure 7: (a) Reference image, (b) target image, (c) our proposed method, (d) (Gupta et al., 2012) method.


6 CONCLUSION AND FUTURE WORK

In this paper, we proposed a new feature matching scheme using the Feature Lines model, which exploits the advantages of the Color Lines representation, and applied it to the problem of automatic image colorization. We constructed three-dimensional feature vectors and treated them as the coordinates of pixels in color space. Since the reference image is semantically similar to the target image, the two images should intuitively have similar Feature Lines models, which are obtained by applying the Color Lines concept to feature images. Following this idea, we were able to match feature lines, and consequently pixels, between the reference and target images. To propagate color from the matched reference pixels to the target pixels, we represented the reference image as a set of color lines and determined the color lines corresponding to the feature lines in the reference image. Color transfer was then carried out by projecting color from the corresponding color lines onto the matched target pixels.

Since the results of automatic colorization algorithms strongly depend on how semantically equivalent the reference and target images are, we might get imprecise results when the input images are not suitable. Our method exploits the advantage of the Color Lines concept in feature space: pixels are classified based on the distribution of their feature vectors in three-dimensional space. However, the arrangement of feature vectors is not always similar to that of RGB colors. Feature points gather in a denser and more crowded area than color pixels in RGB space. For future work, we would like to explore more robust pixel features to strengthen the matching scheme and to improve the clustering algorithm to overcome the obstacle of feature distribution. Additionally, we intend to extend our method to vectors of any dimension, since our current approach is limited to three-dimensional features.

REFERENCES

Achanta, R., Shaji, A., Smith, K., Lucchi, A., Fua, P., and Süsstrunk, S. (2012). SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Transactions on Pattern Analysis and Machine Intelligence, 34(11):2274–2281.

Chia, A. Y.-S., Zhuo, S., Gupta, R. K., Tai, Y.-W., Cho, S.-Y., Tan, P., and Lin, S. (2011). Semantic colorization with internet images. ACM Transactions on Graphics, 30(6):1.

Comaniciu, D. and Meer, P. (2002). Mean shift: A robust approach toward feature space analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence, 24(5):603–619.

Gupta, R., Chia, A., and Rajan, D. (2012). Image colorization using similar images. Proceedings of the 20th ACM international conference on Multimedia, pages 369–378.

Irony, R., Cohen-Or, D., and Lischinski, D. (2005). Colorization by example. Eurographics Symposium on Rendering, pages 201–210.

Levin, A., Lischinski, D., and Weiss, Y. (2004). Colorization using optimization. ACM Transactions on Graphics, 23(3):689.

Levinshtein, A., Stere, A., Kutulakos, K. N., Fleet, D. J., Dickinson, S. J., and Siddiqi, K. (2009). TurboPixels: Fast superpixels using geometric flows. IEEE Transactions on Pattern Analysis and Machine Intelligence, 31(12):2290–2297.

Marki, N., Wang, O., Gross, M., and Smolic, A. (2014). COLORBRUSH: Animated diffusion for intuitive colorization simulating water painting. pages 4652–4656.

Omer, I. and Werman, M. (2004). Color lines: image specific color representation. Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2004), volume 2.

Pang, J., Au, O. C., Yamashita, Y., Ling, Y., Guo, Y., and Zeng, J. (2014). Self-similarity-based image colorization. pages 4687–4691.

Yang, Y., Chu, X., Ng, T. T., Chia, A. Y.-S., Yang, J., Jin, H., and Huang, T. S. (2014). Epitomic image colorization. pages 2489–2493.
