Multi-robot Systems, Machine-Machine and Human-Machine Interaction, and Their Modelling

Ulrico Celentano and Juha Röning

Biomimetics and Intelligent Systems Group, Faculty of Information Technology and Electrical Engineering, University of Oulu, P.O. Box 4500, FI-90014, Oulu, Finland

Keywords:

Cognition and Metacognition, Cognitive Agents, Interworking Cognitive Entities, Multi-robot Systems, Social Interaction.

Abstract:

The control of multi-agent systems, including multi-robot systems, requires some level of context and environment awareness as well as interaction among the interworking cognitive entities, whether they are artificial or natural. A proper specification of the cognitive functionalities and of the corresponding interfaces helps achieve interoperability across different operational domains and reuse of the system design across different application domains. The model for interworking cognitive entities presented in this article, which explicitly includes interworking capabilities, is applied to two major classes of interaction in multi-robot systems. Since the model is inspired by both artificial and natural systems, it is suitable for both machine-machine and human-machine interaction.

1 INTRODUCTION

The management or self-management of a multi-robot system (MRS) requires interaction among all the involved interworking entities. Scattered entities need awareness of their own environment and context, and forms of distributed decision-making are possible. Moreover, an MRS may realise various distributed sensor-actuator configurations, since the action of one entity may be based (also) on perceptions at other entities.

In a number of use cases, not only machine-machine but also human-machine interaction is present; hence both artificial and natural cognitive entities interact, and their role, as supervisor or as an object, may change depending on the use case or context. Embedding humans into the overall system allows their needs, capabilities and limitations to be taken into account, with an effect on the system architecture and on the interfaces within the entire social ecosystem.

Discussing the specification of the interfaces is needed, because it may help the interoperability of a system across operational domains and even the reuse of system design across different application domains.

After introducing multi-entity intelligent systems in Sect. 3, this article identifies in Sect. 4 the issues related to the control of multi-robot systems as interworking cognitive entities, and then presents in Sect. 5 a model for those constituent elements. For the reasons given above, a model should be as holistic as possible, to encompass the particularities of both artificial and natural cognitive entities. An example instantiation of the cognitive model in a multi-robot system scenario is illustrated in Sect. 6, whereas Sect. 7 discusses a comparative evaluation of the model. This core part is preceded by a review of related work and other background in Sect. 2, and followed by a discussion in Sect. 8 and the conclusions in Sect. 9.

2 BACKGROUND

Decision-making based on situational awareness requires the interaction of multiple entities. Interoperability of information systems is recognised as a crucial aspect in multinational, co-operative command and control (C2) operations, including peace-keeping. Related standardisation activity is promoted, for example, by the Simulation Interoperability Standards Organization (SISO)¹. Interoperability is also one of the goals of the standardisation work done within the Third Generation Partnership Project (3GPP) to enable Long-Term Evolution (LTE) wireless communication systems for public safety.

¹ http://www.sisostds.org/. Last accessed 9 Sep 2015.

Celentano, U. and Röning, J. Multi-robot Systems, Machine-Machine and Human-Machine Interaction, and Their Modelling. In Proceedings of the 8th International Conference on Agents and Artificial Intelligence (ICAART 2016) - Volume 1, pages 118-125. DOI: 10.5220/0005667801180125. ISBN: 978-989-758-172-4. Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

Cognitive processes, essentially involving perception, decision-making and action, are fundamental components of robot systems and human-machine interaction. Related models (see (Celentano, 2014; Celentano and Röning, ms) for a wider and deeper review) date back to 1939 for quality control in industrial production (Shewhart, 1939) and to 1945 for problem solving (Polya, 1957). Both are four-phase models: plan, do, check, act; and understand the problem, devise a plan, carry out the plan, look back; respectively. Models for robotics also started as three- or four-phase models, namely sense, (model), plan, act (Nilsson, 1984), but were then radically revised by the introduction of the subsumption architecture, which decomposes phases into elementary behaviours (Brooks, 1986). The adaptive control of thought-rational (ACT-R) architecture was developed to model human cognition, but it has also been applied to human-machine interaction (Anderson et al., 2004; Langley et al., 2009).

Specifically for C2 decision-making, John Boyd devised a model for understanding adversaries, the observe-orient-decide-act (OODA) loop, which was later adapted to cognitive radios by Mitola (Mitola, 2000) and further extended (e.g., Thomas et al., 2005). A subsequent proposal was the critique-explore-compare-adapt (CECA) model (Bryant, 2004). Its last three phases have duties similar to those in the OODA model, but the critique phase makes the model more proactive. Whereas in the CECA model the plan serves as a sort of initialisation phase before context acquisition takes place, in (Mitola, 2000) it is used to take further actions as a consequence of the acquired context information. We may observe that OODA regulates the actions, whereas CECA regulates the plan.

The definition of a robot operating system dates back at least to 1984, for the control of a single robot integrated into a system comprising sensors and humans (Dupourqué, 1984). Various operating systems (Kerr and Nickels, 2012) and architectures (e.g., Mäenpää et al., 2004) have been proposed, and the open-source Robot Operating System (ROS) has recently been used for multi-robot systems. ROS is designed for large-scale robot systems and is realised as a peer-to-peer topology of processes that run on separate hosts and are interconnected (Quigley et al., 2009). ROS nodes communicate by exchanging messages. Although message structures can be customised, the adoption of some common message structures is encouraged to enable interoperability.

A multi-robot system may be part of a larger ecosystem, possibly together with humans, who sometimes have a supervisory role or are embedded into the system with a peer role (Peschl et al., 2012). Under SISO, the battle management language (BML) has been designed as a human-readable, unambiguous language to control robots integrated into an international warfare system, including the interaction among command and control systems, human units and robots (Rein et al., 2009).

3 MULTI-ENTITY INTELLIGENT SYSTEMS

A generic model applicable to both artificial and natural entities is desirable, since it is more easily applicable to systems in which both machines and humans may be present (see the previous sections). Moreover, such a model helps bridge natural and artificial systems more effectively by transferring knowledge across these domains. A generic model is presented and discussed in this article, together with example applications.

Roughly speaking (see Sect. 5 and 7 for details), an intelligent system such as a robot or a human has three main functions: observation, decision-making and action execution. Many peculiarities and implications are involved in the action execution part; in the case of robots, for example, they include the practical actuation and the fine control of system dynamics. These aspects are left out of the scope of this article. Instead, the central interest of this article is decision-making and the awareness it requires, derived from observations; see the illustrative examples in Sect. 4 and 6.

An important contribution of this article concerns social interaction in multi-entity intelligent systems; see Sect. 5 and the examples in Sect. 6.

Natural interfaces are created in a multi-entity cognitive system. For a flexible and reusable design, it is advantageous to specify interfaces also for functions internal to an entity. This is facilitated by specifying the duties of the cognitive functions (see Sect. 5).

4 CONTROL OF COGNITIVE MULTI-ROBOT SYSTEMS

Multi-robot systems often require forms of co-operation, collaboration or co-ordination among the entities, such as collision avoidance (Remmersmann et al., 2010) or flocking control (La et al., 2015), and these require inter-robot interaction. Robots may possess various levels of cognition; see, for example, (Celentano, 2014).


The most straightforward way to implement the above forms of interaction is to let each entity observe the actions (e.g., position and movement) of the other robots. In this case, robots sense the actuations of the other robots. Although in some cases the actions (e.g., trajectories) of the other robots can be predicted, prediction may be impossible or inaccurate if their tasks or plans are unknown, or if these are dynamically adapted (e.g., to avoid obstacles on the terrain); see Fig. 1 and Fig. 2.

Figure 1: An example of self/non-self awareness: (a) displacement caused by the marked robot; (b) displacement caused by the other robots.

Fig. 1 illustrates an example of self/non-self awareness. While the resulting relative configuration of the system is the same in both cases, on the left the displacement is caused by a movement of the marked robot (darker grey circle), and on the right it is due to the other robots (lighter grey circles). The situation may be more complicated if not all of the lighter grey entities are synchronised. In this example absolute positioning could be used, but in general deducing the cause from shared information may be quicker, more exact, or even the only feasible option (absolute positioning may not always be available, or it may not have the required resolution).
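As an illustration only (not the authors' implementation), the attribution needed in Fig. 1 can be sketched under stated assumptions: each robot knows its own displacement from odometry, observes the other robots' positions relative to itself, and all robots share a common orientation (rotations are ignored). Since a relative position changes by the other robot's displacement minus one's own, knowing one's own displacement is enough to tell the two cases apart. The function name and the tolerance `tol` are hypothetical.

```python
from math import hypot

Vec = tuple[float, float]


def attribute_displacement(rel_before: list[Vec], rel_after: list[Vec],
                           own_move: Vec, tol: float = 0.05) -> str:
    """Return 'self', 'others', 'both' or 'none' for an observed change.

    A relative position r_i = p_i - p_self changes by d_i - d_self, so the
    displacement of robot i is recovered as d_i = (r_i' - r_i) + d_self.
    """
    self_moved = hypot(*own_move) > tol
    others_moved = False
    for (bx, by), (ax, ay) in zip(rel_before, rel_after):
        # Estimated displacement of the other robot in the common frame.
        dx = (ax - bx) + own_move[0]
        dy = (ay - by) + own_move[1]
        if hypot(dx, dy) > tol:
            others_moved = True
    if self_moved and others_moved:
        return "both"
    if self_moved:
        return "self"
    if others_moved:
        return "others"
    return "none"


# The same relative configuration observed in both panels of Fig. 1.
before = [(1.0, 0.0), (0.0, 1.0)]
after = [(0.5, 0.0), (-0.5, 1.0)]
print(attribute_displacement(before, after, own_move=(0.5, 0.0)))  # self
print(attribute_displacement(before, after, own_move=(0.0, 0.0)))  # others
```

The observed relative change is identical in both calls; only the robot's knowledge of its own movement separates the two cases, which is the self/non-self discrimination discussed above.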

Figure 2: A fleet of robots, one of which is avoiding an obstacle.

Fig. 2 shows a fleet of robots with one entity avoiding an obstacle. Although the lead robot (darker grey circle) changes its course to avoid an obstacle (straight solid line), the rest of the system (lighter grey circles) follows the originally agreed path, having been instructed (dashed circles) about the reason for the change.
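A minimal sketch of the follower's choice in Fig. 2, assuming the leader shares a short instruction stating the reason for its deviation. The `Instruction` structure, its `reason` value `"obstacle_avoidance"` and the function `next_waypoint` are hypothetical names for illustration, not an interface defined in the article.

```python
from dataclasses import dataclass
from typing import Optional

Point = tuple[float, float]


@dataclass
class Instruction:
    """A shared instruction (hypothetical structure, for illustration only)."""
    sender: str
    reason: str  # e.g. "obstacle_avoidance"


def next_waypoint(step: int, agreed_path: list[Point], leader_pos: Point,
                  offset: Point, instruction: Optional[Instruction]) -> Point:
    """Pick the follower's next waypoint.

    agreed_path: the follower's own slot on the originally agreed path.
    offset: the follower's nominal position relative to the leader.
    """
    if instruction is not None and instruction.reason == "obstacle_avoidance":
        # The leader shared why it deviates: the deviation is local to the
        # leader, so the follower keeps the originally agreed path.
        return agreed_path[step]
    # No shared reason: the follower can only track what it observes.
    return (leader_pos[0] + offset[0], leader_pos[1] + offset[1])


# The leader sidesteps to (1.0, 0.5) instead of its planned (1.0, 0.0).
follower_path = [(0.0, -1.0), (1.0, -1.0), (2.0, -1.0)]
told = Instruction(sender="leader", reason="obstacle_avoidance")
print(next_waypoint(1, follower_path, (1.0, 0.5), (0.0, -1.0), told))  # (1.0, -1.0)
print(next_waypoint(1, follower_path, (1.0, 0.5), (0.0, -1.0), None))  # (1.0, -0.5)
```

Without the shared reason, the follower copies the leader's detour; with it, the fleet keeps its formation on the agreed path.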

The examples above concern the management of behavioural anomalies (abnormalities in group dynamics), i.e., it is of interest to understand whether the anomaly is caused by our own entity or by the others. Similar decisions are needed, for example, in inter-vehicle coordination, which can be realised through warnings for manned vehicles, or as part of their control, which is also applicable to unmanned vehicles. In decision-making, explicit coordination can be exploited (for example, by inter-entity communication, as in Fig. 2), or implicit information can be used (by self-awareness, as in the scenario of Fig. 1).

In general, three forms of interaction can be identified in an MRS: act-and-observe (robots observe the actions of other robots), share-and-act (robots act after negotiating their actions), and act-while-checking (robots initially behave as in the first case, but refine their operations as in the second).
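The three forms of interaction differ in what a robot relies on to anticipate a neighbour's next position. The sketch below is illustrative only: the constant-velocity extrapolation standing in for "observing actions" is an assumption, and the names `InteractionForm` and `predicted_next` are hypothetical.

```python
from enum import Enum, auto
from typing import Optional

Point = tuple[float, float]


class InteractionForm(Enum):
    ACT_AND_OBSERVE = auto()
    SHARE_AND_ACT = auto()
    ACT_WHILE_CHECKING = auto()


def extrapolate(track: list[Point]) -> Point:
    """Constant-velocity prediction from the last two observed positions."""
    (x0, y0), (x1, y1) = track[-2], track[-1]
    return (2 * x1 - x0, 2 * y1 - y0)


def predicted_next(form: InteractionForm, track: list[Point],
                   shared_next: Optional[Point]) -> Optional[Point]:
    """Anticipated next position of a neighbour under each form of interaction."""
    if form is InteractionForm.ACT_AND_OBSERVE:
        # Rely only on the observed actuations.
        return extrapolate(track)
    if form is InteractionForm.SHARE_AND_ACT:
        # Act only once the neighbour's intention has been shared (else None).
        return shared_next
    # ACT_WHILE_CHECKING: start from observation, refine with shared info.
    return shared_next if shared_next is not None else extrapolate(track)
```

For example, with `track = [(0.0, 0.0), (1.0, 0.0)]`, act-while-checking first predicts `(2.0, 0.0)` and switches to the shared position as soon as one is received.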

As noted, sharing part of a robot's own plans is a way to improve coexistence within a multi-robot system. In this case, actions are based on the exchange of information among robots, rather than on observing their decisions (actions, movements). Indeed, misalignments due to one's own errors or to those of others require different responses; this means that the self/non-self discrimination discussed above is needed.

From the above examples it follows that robots may need to share their knowledge and/or plans.

5 MODEL FOR COGNITION

This section presents our model (Celentano, 2014), which introduces the required phases that are missing from those in the literature (Sect. 2 and references therein).

The specification of cognitive functionalities should respond to the needs outlined in Sect. 1. To this end, in the model presented here, the functionalities, and consequently the interfaces, are defined so that cognitive functions are neatly assigned to specific units or physical elements within the entity. The phases of the cognitive entity, i.e., the components instantaneously active in the cognitive process, are defined by also considering their role in relation to the environment they operate within.

Clearly, what is an action for one entity may be a perception for another. However, the interaction of a cognitive entity with its environment is not limited to initial observation and final action events; it also includes interaction with intermediate cognitive phases in which several cognitive entities are involved.

It is important to note that, in general, the interaction of cognitive entities with their environment occurs during the cognitive process and not necessarily only at its start or end (i.e., at perception or action, respectively). This sharing of information, knowledge or sometimes commands (in this article, a piece of information or knowledge or a command are together referred to as instructions) may take place before the actual actions are taken, and therefore cannot be modelled as act-perceive interactions. New cognitive phases are needed for that.

Cognitive phases are divided into functionalities dealing with exchanging facts and implementing competences, and those in charge of processing knowledge, making decisions and learning. Metacognition is the knowledge about cognitive phenomena (or knowledge of cognition) and the regulation of cognitive processes.

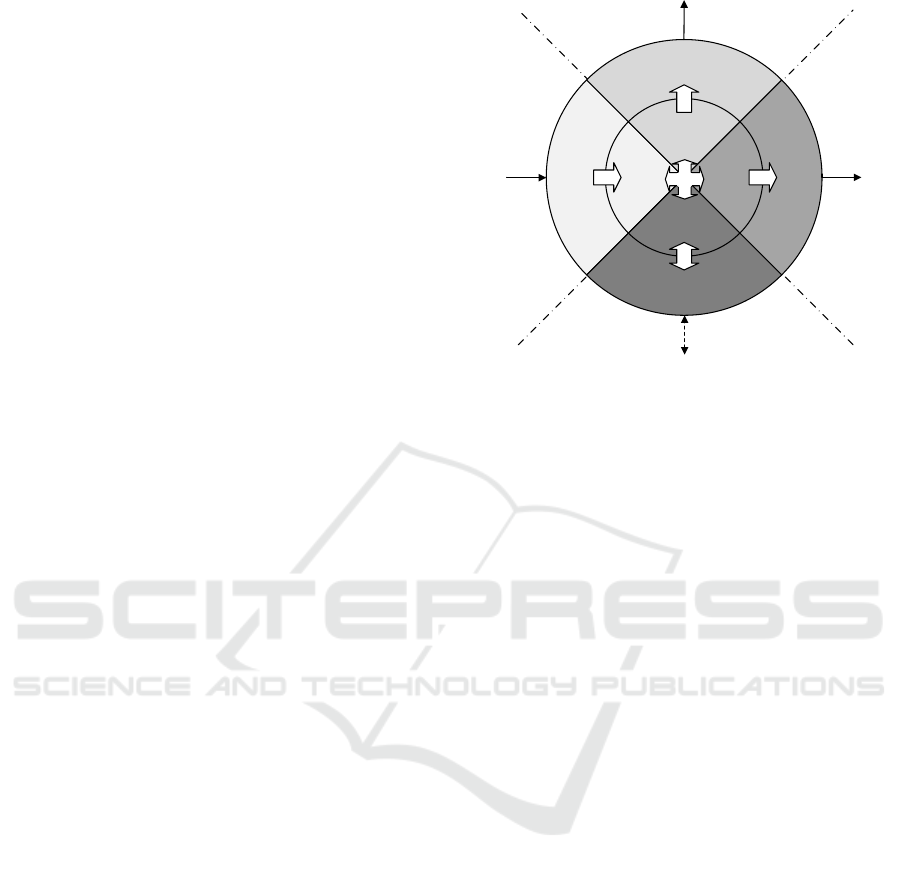

The phases of our model presented in this article are grouped into two layers:

• a cognitive frontier (represented by the outer circle in Fig. 3), in charge of interaction with external entities in the process of:
  – acquiring (raw) information (sometimes referred to as data),
  – storing and fetching accumulated knowledge,
  – sharing instructions, and
  – actuating decisions;
• a metacognitive hub (represented by the inner disc in Fig. 3), in charge of controlling cognitive phenomena and cognitive actions, i.e., the interworking of the internal processes in:
  – processing the acquired information,
  – building knowledge from experience (both from outside information/knowledge and from own decisions),
  – preparing instructions, and
  – generating the needed decisions.

The metacognitive hub generates a new level of information. For example, the correlation between the decide phase and the perceive phase for an endogenous stimulus, used to discriminate self from non-self, happens near the boundary of the metacognitive hub.

Fig. 3 shows our model for a networked cognitive entity, in which four categories span the two layers. The entity's input u, output v, internal state y, stored knowledge z and shared instructions (information, knowledge or commands) x are also shown in the figure.

The phases introduced above belong to the following four categories (represented by the sectors in Fig. 3):

• observation (perceive, analyse),

• consolidation (learn, remember),

• interworking (synthesise, share) and

• operation (decide, apply).

The phases are linked pairwise across the cognitive frontier and the metacognitive hub, as summarised in Table 1.

Figure 3: Model for a networked cognitive entity, with the phases perceive, analyse, decide, apply, share, synthesise, learn and remember in the four categories observation, operation, interworking and consolidation, and the signals u, v, x, y and z. Revised from (Celentano, 2014).
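For readers who wish to implement the model, the grouping of phases into categories and layers can be encoded as plain data. The sketch below is illustrative only: the Python names (`Layer`, `Category`, `PHASE_PAIRS`, `locate`, `counterpart`) are hypothetical; only the assignment of phases to categories and the frontier/hub pairing are taken from the text and Table 1.

```python
from enum import Enum


class Layer(Enum):
    FRONTIER = "cognitive frontier"
    HUB = "metacognitive hub"


class Category(Enum):
    OBSERVATION = "observation"
    CONSOLIDATION = "consolidation"
    INTERWORKING = "interworking"
    OPERATION = "operation"


# (cognitive frontier phase, metacognitive hub phase) for each category.
PHASE_PAIRS: dict[Category, tuple[str, str]] = {
    Category.OBSERVATION: ("perceive", "analyse"),
    Category.CONSOLIDATION: ("remember", "learn"),
    Category.INTERWORKING: ("share", "synthesise"),
    Category.OPERATION: ("apply", "decide"),
}


def locate(phase: str) -> tuple[Category, Layer]:
    """Return the category and layer that a phase belongs to."""
    for category, (outer, inner) in PHASE_PAIRS.items():
        if phase == outer:
            return category, Layer.FRONTIER
        if phase == inner:
            return category, Layer.HUB
    raise ValueError(f"unknown phase: {phase!r}")


def counterpart(phase: str) -> str:
    """Return the phase linked to `phase` across the two layers."""
    category, layer = locate(phase)
    outer, inner = PHASE_PAIRS[category]
    return inner if layer is Layer.FRONTIER else outer
```

For instance, `locate("share")` gives the interworking category on the cognitive frontier, and `counterpart("decide")` gives `"apply"`.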

We can say that the cognitive entity has observable information u that is used to store knowledge z and to operate through the actuated decisions v. Instructions, i.e., information, knowledge or commands x, can be externalised. All of the above (u, v, z and x) are at the border of the cognitive entity. The internal steps, occurring at the boundary between the cognitive frontier and the metacognitive hub, imply the presence of perceived information ũ, learnt knowledge z̃, decision ṽ and synthesised instructions x̃, respectively. Everything within the core of the metacognitive hub contributes to the internal state y of the cognitive entity. Fig. 3 illustrates this notation together with the model. The nature of the modelled system has an impact on the architecture of the cognitive frontier.
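One possible way to summarise this notation is given below, as a sketch only. Each chain follows the outward (or, for observation, inward) direction of a category, and the contribution of the metacognitive hub is represented by its internal state y. This is a simplification: the remember and share phases also work in the opposite direction (fetching stored knowledge z, acquiring instructions x shared by other entities), which the chains omit.

```latex
\begin{aligned}
&\text{observation:}   && u \xrightarrow{\ \text{perceive}\ } \tilde{u} \xrightarrow{\ \text{analyse}\ } y\\
&\text{consolidation:} && y \xrightarrow{\ \text{learn}\ } \tilde{z} \xrightarrow{\ \text{remember}\ } z\\
&\text{operation:}     && y \xrightarrow{\ \text{decide}\ } \tilde{v} \xrightarrow{\ \text{apply}\ } v\\
&\text{interworking:}  && y \xrightarrow{\ \text{synthesise}\ } \tilde{x} \xrightarrow{\ \text{share}\ } x
\end{aligned}
```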

The interaction of a cognitive entity with other entities or with its environment occurs in different directions in different phases, which has an impact on the realisation of those phases in a cognitive device.

The cognitive entity gathers inputs or stimuli from an outside entity or the environment in the perceive phase, whereas it actuates the decisions taken in the apply phase. In the share phase, a cognitive entity communicates instructions, i.e., information, knowledge or commands, as needed. In the remember phase, it retains the learnt or consolidated knowledge and provides two-way access to it. All the above phases possess interfaces to the outside of the cognitive entity. The last of these, remember, does not necessarily have such an interface, but in general it can. This is needed, for example, when access to information is distributed, as in clouds, whereas a working memory is likely to be local to the entity. The four outer phases above represent the interfaces towards the entity's outside world, i.e., the cognitive frontier. Those interfaces are used as inputs and outputs for commands, as ports for communication, and for data storage and retrieval.

Table 1: The cognitive and metacognitive phases (left and right halves of the table, respectively) in the four categories (rows). Revised from (Celentano, 2014).

| Cognitive frontier: phase | Cognitive frontier: duty | Metacognitive hub: duty | Metacognitive hub: phase |
|---|---|---|---|
| perceive | acquiring information | processing information | analyse |
| remember | storing/fetching knowledge | building knowledge | learn |
| share | sharing instructions | preparing instructions | synthesise |
| apply | actuating decisions | generating decisions | decide |
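The interface directions of the four outer phases can be captured in a small configuration, sketched below under stated assumptions: "in" and "out" are relative to the entity, the share phase is taken to be two-way (an entity both sends instructions and acquires those shared by others, as in the share-and-act scenario), and whether remember is exposed externally is a per-entity choice (cloud-distributed knowledge versus a local working memory). `INTERFACES` and `FrontierConfig` are hypothetical names.

```python
from dataclasses import dataclass

# External interface directions of the four outer (cognitive frontier) phases.
# "in" = from the outside world into the entity, "out" = the reverse.
INTERFACES: dict[str, frozenset[str]] = {
    "perceive": frozenset({"in"}),          # gathers inputs or stimuli
    "apply": frozenset({"out"}),            # actuates decisions taken
    "share": frozenset({"in", "out"}),      # sends or acquires instructions
    "remember": frozenset({"in", "out"}),   # two-way access to stored knowledge
}


@dataclass(frozen=True)
class FrontierConfig:
    """Hypothetical per-entity configuration of the cognitive frontier."""
    remember_is_external: bool = False  # True e.g. for cloud-distributed knowledge

    def allows(self, phase: str, direction: str) -> bool:
        """Return True if `phase` exposes an external port in `direction`."""
        if phase == "remember" and not self.remember_is_external:
            # A local working memory needs no port to the outside world.
            return False
        # Hub phases (analyse, learn, synthesise, decide) have no external port.
        return direction in INTERFACES.get(phase, frozenset())
```

For example, `FrontierConfig().allows("remember", "in")` is `False` for a purely local memory, whereas `FrontierConfig(remember_is_external=True).allows("remember", "out")` is `True`.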

While the outer phases of each category exchange information only with their corresponding inner phases, the four inner phases exchange their own inputs and outputs among themselves, thus forming what we call the metacognitive hub. In the analyse phase, inputs are filtered, converted and analysed. Conversely, in the synthesise phase, instructions are selected and adapted for the specific sharing use. In the learn phase, the cognitive entity processes commands (actions are not directly observable at the metacognitive hub, but decisions are) and information (including the feedback from actions, when available) to build knowledge. Learning may also be exploited to pre-process incoming stimuli or to process instructions for a specific sharing scope. All the above is exploited to take decisions (including the prioritisation and planning of actions) in the decide phase.
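The connectivity rule above (an outer phase talks only to its own inner phase, while inner phases talk to one another) can be checked mechanically. The sketch below is illustrative: `link_allowed` and the optional `active` set, which models an entity in which only a subset of the phases is present, are hypothetical; excluding a phase's link to itself is a choice made for this sketch.

```python
from typing import Optional

# Each outer (frontier) phase and the only inner (hub) phase it talks to.
FRONTIER_TO_HUB = {
    "perceive": "analyse",
    "remember": "learn",
    "share": "synthesise",
    "apply": "decide",
}
HUB_PHASES = frozenset(FRONTIER_TO_HUB.values())


def link_allowed(src: str, dst: str, active: Optional[set[str]] = None) -> bool:
    """Return True if information may flow directly from src to dst.

    active: optional subset of phases present (or active) in a given entity;
    None means that all eight phases are available.
    """
    if active is not None and not {src, dst} <= active:
        return False
    if src in HUB_PHASES and dst in HUB_PHASES:
        # Inner phases exchange inputs and outputs among themselves
        # (a phase linking to itself is excluded in this sketch).
        return src != dst
    # An outer phase exchanges information only with its own inner phase.
    return FRONTIER_TO_HUB.get(src) == dst or FRONTIER_TO_HUB.get(dst) == src
```

Thus perceive may feed analyse, and analyse may feed decide, but perceive may not feed decide directly.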

The generic model described here incorporates the functionalities of a cognitive entity. Clearly, in a given cognitive entity only a subset of them may be present (or, equivalently, active).

6 INTERACTING COGNITIVE ROBOTS

This section illustrates how the model presented in Sect. 5 is combined into a generic scenario incorporating those of Sect. 4.

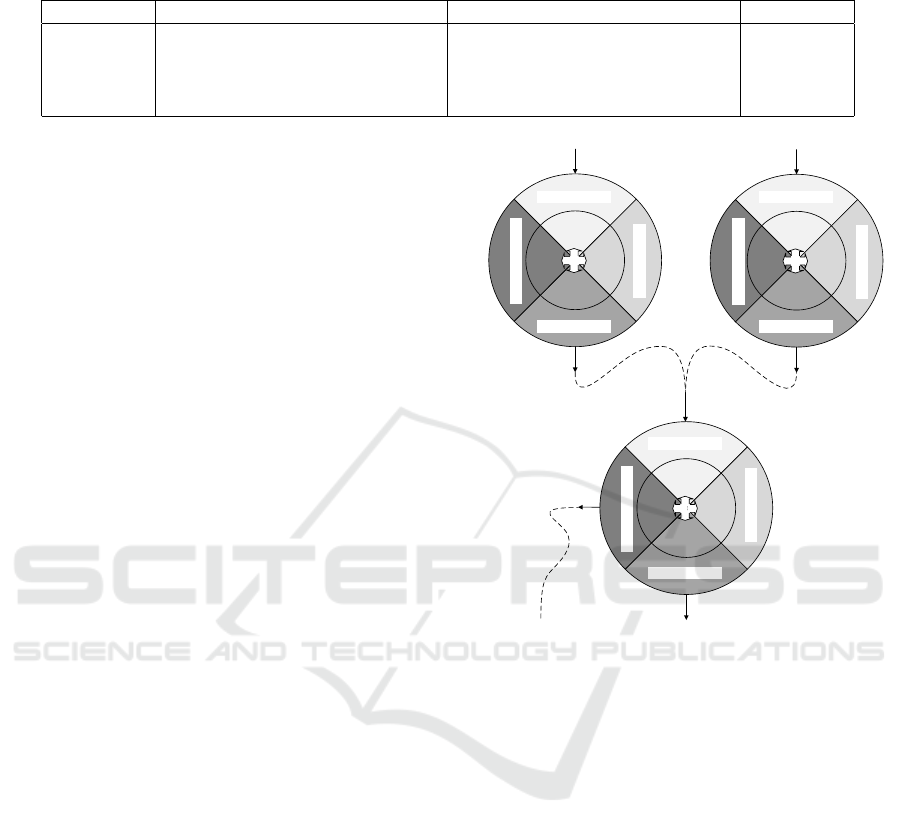

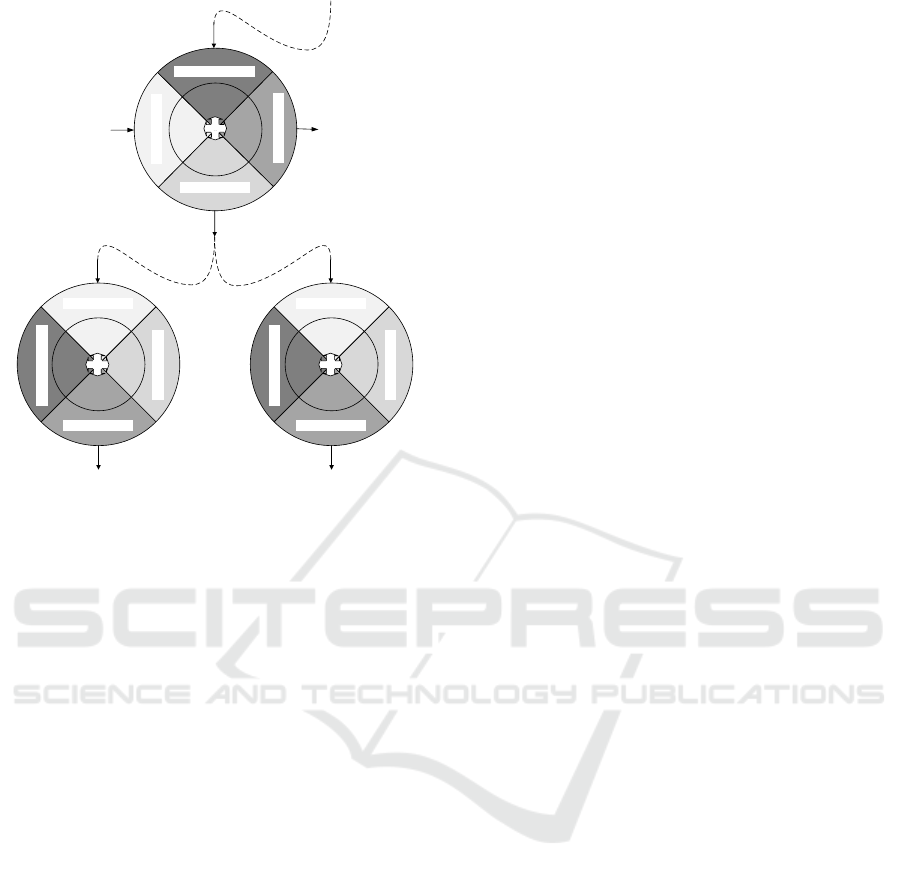

Fig. 4 depicts a scenario in which the interaction follows an act-and-observe model (Sect. 4). The robot at the bottom senses the actuations of the robots at the top of the figure. In this example, the sensing robot may consolidate the related knowledge for further (possibly shared) use.

Fig. 5 depicts a scenario in which the interaction follows a share-and-act model (Sect. 4). The robots at the bottom acquire the instructions shared by the robot at the top of the figure. Here, the sharing robot first gathers additional information from the consolidated knowledge.
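The two scenarios can be traced through the phases with a toy robot class. This is a sketch under assumptions: the phase sequences recorded in `trace` are one plausible reading of Fig. 4 and Fig. 5 (observation followed by consolidation; fetching knowledge, preparing and sharing an instruction; acquiring it through share and synthesise), and the class and method names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Robot:
    """Toy cognitive robot; the phase traces are illustrative, not prescribed."""
    name: str
    knowledge: dict[str, str] = field(default_factory=dict)    # stored knowledge z
    inbox: list[dict[str, str]] = field(default_factory=list)  # acquired instructions x
    trace: list[str] = field(default_factory=list)             # phases visited

    def observe(self, other: str, actuation: str) -> None:
        """Fig. 4 (act-and-observe): sense another robot's actuation, consolidate it."""
        self.trace += ["perceive", "analyse", "learn", "remember"]
        self.knowledge[other] = actuation

    def share_with(self, peers: list["Robot"], topic: str) -> None:
        """Fig. 5 (share-and-act): fetch knowledge, prepare and share an instruction."""
        self.trace += ["remember", "learn", "synthesise", "share"]
        instruction = {"from": self.name, "topic": topic,
                       "content": self.knowledge.get(topic, "")}
        for peer in peers:
            peer.acquire(instruction)

    def acquire(self, instruction: dict[str, str]) -> None:
        """Receive an instruction through the share phase."""
        self.trace += ["share", "synthesise"]
        self.inbox.append(instruction)


leader = Robot("top")
leader.knowledge["route"] = "north_corridor"
followers = [Robot("bottom_1"), Robot("bottom_2")]
leader.share_with(followers, "route")
print(followers[0].inbox[0]["content"])  # north_corridor
```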

Figure 4: A robot (bottom) sensing actuations of other robots (top). Each robot is drawn as an instance of the model of Fig. 3, with signals u, v and y; stored knowledge z is also shown.

7 EVALUATION

Some related models have been mentioned in Sect. 2. A thorough comparison of the proposed model with related ones is given in (Celentano, 2014) and is not repeated here, but the key points are summarised in the following.

Cognitive entity models can be assigned to different classes, depending on the relation among their cognitive functions or phases (Celentano, 2014):

A. strictly cycle-based models (phases are visited according to a predefined sequence) (Shewhart, 1939; Polya, 1957; Nilsson, 1984; Dobson et al., 2006);

B. nested loops or parallel loops (Bryant, 2004);

C. cycle-based models with a shared phase (all phases except one are visited according to a predefined sequence, but they are connected to a shared phase) (Kephart and Chess, 2003; Albus, 1991);

D. branched cycles with a shared phase (a cycle is somehow defined, but short-cuts can be taken) (Mitola, 2000);

E. purely phase-based models (phases are visited with a pattern depending on a specific instantiation or condition) (Anderson et al., 2004; Langley et al., 1987; Kieras and Meyer, 1997; Artz and Armour-Thomas, 1992; Celentano, 2014).

Figure 5: Robots (bottom) acquiring the instructions shared by another robot (top). Each robot is drawn as an instance of the model of Fig. 3, with signals u, v and y; shared instructions x and stored knowledge z are also shown.

Models with a predefined visiting sequence (A, B, C) are less flexible in their applicability over a wider range of cases, for which models allowing freer dynamics (D, E) are better suited. The proposed model belongs to the latter class, E.
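The practical difference between class A and class E lies in how the next phase is chosen. The sketch below contrasts the two; the plan-do-check-act cycle is taken from Sect. 2, whereas the condition names and the priority order in `next_phase_based` are hypothetical, chosen only to show that in a phase-based model the visiting pattern depends on the current condition.

```python
# Class A: strictly cycle-based, e.g. Shewhart's plan-do-check-act.
PDCA: tuple[str, ...] = ("plan", "do", "check", "act")


def next_cyclic(phase: str, cycle: tuple[str, ...] = PDCA) -> str:
    """The next phase is fixed by the predefined sequence, whatever happens."""
    return cycle[(cycle.index(phase) + 1) % len(cycle)]


def next_phase_based(condition: dict[str, bool]) -> str:
    """Class E: the next phase depends on the current condition.

    The priority order below is a hypothetical example, not taken from the article.
    """
    if condition.get("new_stimulus"):
        return "perceive"
    if condition.get("instruction_to_send"):
        return "share"
    if condition.get("decision_ready"):
        return "apply"
    return "learn"  # default: consolidate experience when nothing else is pending
```

In the cyclic case `next_cyclic("act")` always returns `"plan"`, whereas the phase-based policy can jump directly to `"share"` when an instruction is pending.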

Looking into the peculiarities of the above phases, the cognitive functions in an intelligent entity can be categorised as follows (Celentano, 2014):

1. collecting the inputs;

2. evaluating the inputs;

3. interworking (sharing instructions);

4. decision-making (including planning);

5. implementing the decisions;

6. verifying and generalising the lessons learnt.

As noted earlier, one notable novelty of the proposed model is the explicit presence of the phases collectively labelled 3 above. The explicit presence of those functions in the model is important, since they are related to the specification of the (natural) interfaces involved in social interaction.

The importance of social interaction has been discussed in the examples above (Sect. 4 and 6).

8 DISCUSSION

Even for an isolated intelligent entity, self-awareness is crucial for a more conscious execution of tasks. In the case of flocks or swarms, self-awareness improves the behaviour of the entire group. In fact, in those cases it is important to discriminate what I am doing from what the others are doing, even if the final outcome (e.g., relative positions) may look similar. Self-awareness is a prerequisite for more effective self-organisation, and it may exploit explicit and/or implicit interaction among entities.

The model presented in Sect. 5 specifies the cognitive phases of interacting cognitive entities, together with the interfaces related to those cognitive functions. Since those functions may be realised by separate and integrated elements, it makes sense to bring these specifications into established or standardised frameworks, such as ROS or SISO.

The cognitive phases of the present model can be realised as concurrent processes, similarly to the ROS architecture. In particular, the share phase of the model presented in this article is used to communicate instructions before the apply phase, and sometimes even before the decide phase.
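A minimal sketch of phases realised as concurrent processes, using Python's `asyncio` in place of separate ROS nodes (this is not ROS code; the queues only play a role loosely analogous to message passing between nodes). It shows the ordering discussed above: the decide phase hands an instruction to the share phase and releases its decision for actuation only after the instruction has been sent. The phase functions, the decision value `"detour_left"` and the `intent:` message format are hypothetical.

```python
import asyncio


async def decide_phase(outbox: asyncio.Queue, decisions: asyncio.Queue,
                       shared: asyncio.Event) -> None:
    plan = "detour_left"                 # hypothetical decision
    await outbox.put(f"intent:{plan}")   # hand the instruction to share first
    await shared.wait()                  # ...and wait until it has been sent
    await decisions.put(plan)            # only then release it for actuation


async def share_phase(outbox: asyncio.Queue, shared: asyncio.Event,
                      log: list[tuple[str, str]]) -> None:
    msg = await outbox.get()
    log.append(("share", msg))           # stand-in for a network send
    shared.set()


async def apply_phase(decisions: asyncio.Queue,
                      log: list[tuple[str, str]]) -> None:
    plan = await decisions.get()
    log.append(("apply", plan))          # stand-in for actuation


async def run_entity() -> list[tuple[str, str]]:
    outbox: asyncio.Queue = asyncio.Queue()
    decisions: asyncio.Queue = asyncio.Queue()
    shared = asyncio.Event()
    log: list[tuple[str, str]] = []
    # The three phases run concurrently; the ordering is enforced by the event.
    await asyncio.gather(
        apply_phase(decisions, log),
        share_phase(outbox, shared, log),
        decide_phase(outbox, decisions, shared),
    )
    return log


if __name__ == "__main__":
    print(asyncio.run(run_entity()))
    # [('share', 'intent:detour_left'), ('apply', 'detour_left')]
```

An explicit event, rather than reliance on task scheduling order, makes the share-before-apply guarantee independent of how the event loop interleaves the phases.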

Instructions in the model of Sect. 5 correspond to orders, reports and requests in BML. Owing to its unambiguity and its applicability to international environments, BML is a possible candidate for robotic applications also in other, more general cases, such as civilian scenarios, including industrial environments. Extensions might be needed, though, to let it cope with such diverse situations.

9 CONCLUSION

This article discussed some fundamental issues concerning multi-entity intelligent systems. Particular attention has been given to observation and the related decision-making for system control, and examples for multi-robot systems have been discussed. The major means for the needed interaction of robots are the sensing of the actuation of other robots, and coordination based on the sharing and acquisition of relevant instructions. The latter implies the possibility of interaction beyond the simplest actuator-sensor acquisition of information.


A cognitive mobile robot should therefore explicitly include such interworking capabilities, which are not found in present models for artificial entities. The model presented in this article fills this gap. Illustrative examples show the role that the cognitive phases of this model play in the above two classes of multi-robot social interaction. Specifically, this article discussed, with the aid of examples, how self-awareness can be exploited for self-organisation by detecting and managing behavioural anomalies, i.e., abnormalities in group dynamics.

The cognitive phases of the present model are inspired by both artificial and natural cognitive entities. This makes such a holistic model suitable for use not only in machine-machine but also in human-machine interaction.

Interoperability across diverse operational domains and reuse of system design across different application domains are both timely topics, and both require robust specifications of functionalities and interfaces. This article addresses that need.

Explicitly considering social interaction in the model for an intelligent entity brings advantages for flexible design and specification. A comparative evaluation of the proposed model has been given here. The evaluation of the concepts discussed in this article through implementation on mobile robots is part of our future work in this area.

ACKNOWLEDGEMENTS

The authors would like to thank Infotech Oulu for the financial support.

REFERENCES

Albus, J. (1991). Outline for a theory of intelligence. IEEE Trans. Syst. Man Cybernet., 21(3):473–509.

Anderson, J., Bothell, D., Byrne, M., Douglass, S., Lebiere, C., and Qin, Y. (2004). An integrated theory of the mind. Psychol. Rev., 111(4):1036–1060.

Artz, A. and Armour-Thomas, E. (1992). Development of a cognitive-metacognitive framework for protocol analysis of mathematical problem solving in small groups. Cognition and Instruction, 9(2):137–175.

Brooks, R. (1986). A robust layered control system for a mobile robot. IEEE J. Robot. Autom., RA-2(1):14–23.

Bryant, D. (2004). Modernizing our cognitive model. In Command Control Res. Technol. Symp., pages 1–14, San Diego, CA, US.

Celentano, U. (2014). Dependable cognitive wireless networking: Modelling and design. Number 488. Acta Universitatis Ouluensis Series C: Technica.

Celentano, U. and Röning, J. (ms). Modelling interactive cognitive entities. Manuscript.

Dobson, S., Denazis, S., Fernández, A., Gaiti, D., Gelenbe, E., Massacci, F., Nixon, P., Saffre, F., Schmidt, N., and Zambonelli, F. (2006). A survey of autonomic communications. ACM Trans. Autonomous Adapt. Syst., 1(2):223–259.

Dupourqué, V. (1984). A robot operating system. In IEEE Int. Conf. Robot. Autom., volume 1, Atlanta, GA, US.

Kephart, J. and Chess, D. (2003). The vision of autonomic computing. IEEE Comput., 36(1):41–50.

Kerr, J. and Nickels, K. (2012). Robot operating systems: Bridging the gap between human and robot. In Southeastern Symp. Syst. Theory (SSST), pages 99–104, Jacksonville, FL, US.

Kieras, D. and Meyer, D. (1997). An overview of the EPIC architecture for cognition and performance with application to human-computer interaction. Hum.-Comput. Interact., 12:391–438.

La, H., Lim, R., and Sheng, W. (2015). Multirobot cooperative learning for predator avoidance. IEEE Trans. Control Syst. Technol., 23(1):52–63.

Langley, P., Laird, J., and Rogers, S. (2009). Cognitive architectures: Research issues and challenges. Cogn. Sys. Res., 10:141–160.

Langley, P., Newell, A., and Rosenbloom, P. (1987). Soar: An architecture for general intelligence. Artif. Intell., 33(1):1–64.

Mäenpää, T., Tikanmäki, A., Riekki, J., and Röning, J. (2004). A distributed architecture for executing complex tasks with multiple robots. In IEEE Int. Conf. Robot. Autom. (ICRA), pages 3449–3455, New Orleans, LA, US.

Mitola, J. (2000). Cognitive radio: An integrated agent architecture for software defined radio. Kungliga Tekniska Högskola (KTH), Stockholm.

Nilsson, N., editor (1984). Shakey the robot. Number Technical Note 323. Artificial Intelligence Center, SRI International, Menlo Park, CA.

Peschl, M., Röning, J., and Link, N. (2012). Human integration in task-driven flexible manufacturing systems. In Katalinic, B., editor, Ann. DAAAM 2012 & Proc. 23rd Int. DAAAM Symp., pages 85–88, Zadar, Croatia.

Polya, G. (1957). How to solve it: A new aspect of mathematical method. Doubleday Anchor Books, Garden City, NY, 2nd edition.

Quigley, M., Gerkey, B., Conley, K., Faust, J., Foote, T., Leibs, J., Berger, E., Wheeler, R., and Ng, A. (2009). ROS: an open-source robot operating system. In ICRA Workshop Open Source Software, Kobe, Japan.

Rein, K., Schade, U., and Hieb, M. (2009). Battle management language (BML) as an enabler. In IEEE Int. Conf. Commun. (ICC), Dresden, Germany.

Remmersmann, T., Brüggemann, B., and Miłosław, F. (2010). Robots to the ground. In Mil. Commun. Inform. Systems Conf. (MCC), pages 61–68, Warsaw, Poland.

Shewhart, W. (1939). Statistical method from the viewpoint of quality control. Graduate School of the Department of Agriculture, Washington, DC. Reprint by Dover Publications, Mineola, NY, 1986.

Thomas, R., DaSilva, L. A., and MacKenzie, A. (2005). Cognitive networks. In IEEE Int. Symp. New Frontiers Dynam. Spectrum Access Netw. (DySPAN), pages 352–360, Baltimore, MD, US.
