EMOTION CLASSIFICATION BASED ON PHYSIOLOGICAL RESPONSES INDUCED BY NEGATIVE EMOTIONS

Discrimination of Negative Emotions by Machine Learning Algorithms

Eun-Hye Jang¹, Byoung-Jun Park¹, Sang-Hyeob Kim¹ and Jin-Hun Sohn²

¹BT Convergence Technology Research Department, Electronics and Telecommunications Research Institute, Daejeon, Republic of Korea

²Department of Psychology/Brain Research Institute, Chungnam National University, Daejeon, Republic of Korea

Keywords: Emotion Classification, Negative Emotion, Machine Learning Algorithm, Physiological Signal.

Abstract: One of the main topics of emotion recognition or classification research is recognizing human feelings or emotions from physiological signals, which is one of the core processes for implementing emotional intelligence in HCI research. The aim of this study was to identify the optimal algorithm for discriminating negative emotions (sadness, anger, fear, surprise, and stress) using physiological features. Physiological signals, namely EDA, ECG, PPG, and SKT, were recorded and analysed, and 28 features were extracted from them. To classify the negative emotions, five machine learning algorithms were used: LDA, CART, SOM, Naïve Bayes, and SVM. The classification accuracy of SVM was the highest (100.0%, evaluated on the training set) and that of LDA was the lowest (41.3%). The accuracies of CART, SOM, and Naïve Bayes were 78.2%, 45.8%, and 73.3%, respectively. These results can help provide a basis for emotion recognition techniques in HCI.

1 INTRODUCTION

Emotion recognition or classification is one of the core processes for implementing emotional intelligence in human-computer interaction (HCI) research (Wagner, Kim, Andre, 2005). In HCI applications such as computer-aided tutoring and learning, it is highly desirable (even mandatory) that the computer's response take into account the emotional or cognitive state of the user (Sebe, Cohen, Huang, 2005).

Among the basic emotions, negative emotions are primarily responsible for the gradual decline or breakdown of our normal thinking process, which is essential for our natural (unforced) survival, even in the struggle for existence.

Emotion classification has been studied using facial expressions, gestures, voice, and physiological signals. Physiological signals in particular have two advantages: they are less affected by the environment than the other modalities, and they allow the user's state to be observed in real time. In addition, they are not shaped by social masking or feigned emotional expressions, and they are closely related to emotional state (Drummond, Quah, 2001).

Recently, emotion classification using physiological signals has been performed with various machine learning algorithms, e.g., Fisher Projection (FP), the k-Nearest Neighbor algorithm (kNN), Linear Discriminant Function (LDF), Sequential Floating Forward Search (SFFS), and Support Vector Machine (SVM). Previous studies have reported average recognition accuracies of over 80%, which appears to be acceptable for realistic applications (Picard, Vyzas, Healey, 2001; Cowie et al., 2001; Haag et al., 2004; Healey, 2000; Nasoz et al., 2003; Calvo, Brown, Scheding, 2009).

In this paper, we aimed to identify the best emotion classifier based on features of physiological signals induced by negative emotions (sadness, anger, fear, surprise, and stress). To this end, electrodermal activity (EDA), electrocardiogram (ECG), skin temperature (SKT), and photoplethysmography (PPG) signals were acquired and analysed to extract features for an emotional pattern dataset. To identify the algorithm best able to classify negative emotions, we used five machine learning algorithms that are well known in emotion classification: Linear Discriminant Analysis (LDA), Classification And Regression Tree (CART), Self Organizing Map (SOM), Naïve Bayes, and SVM.

Jang E., Park B., Kim S. and Sohn J.
EMOTION CLASSIFICATION BASED ON PHYSIOLOGICAL RESPONSES INDUCED BY NEGATIVE EMOTIONS - Discrimination of Negative Emotions by Machine Learning Algorithms.
DOI: 10.5220/0003871304530457
In Proceedings of the International Conference on Bio-inspired Systems and Signal Processing (BIOSIGNALS-2012), pages 453-457
ISBN: 978-989-8425-89-8
Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

2 EMOTION CLASSIFICATION TO DISCRIMINATE NEGATIVE EMOTIONS

Twelve college students (21.0 ± 1.48 years) participated in this study. None reported a history of medication for heart disease, respiratory disorders, or central nervous system disorders. All participants signed a written consent form before the study began and were compensated $20 per session for their participation.

2.1 Emotional Stimuli

Fifty emotional stimuli (5 emotions × 10 sets), consisting of 2 to 4 min audio-visual film clips originally taken from movies, documentaries, and TV shows, were used to induce the emotions (sadness, anger, fear, surprise, and stress) in this study (Figure 1).

Figure 1: Examples of emotional stimuli: (a) sadness, (b) anger, (c) fear, (d) surprise, (e) stress.

The appropriateness and effectiveness of the stimuli were examined in a preliminary study in which 22 college students rated the category and intensity of the emotion they experienced on a questionnaire after each film clip was presented. Appropriateness refers to the consistency between the emotion intended by the experimenter and the emotion the participants experienced. Effectiveness was determined by the intensity of the emotions reported by the participants on a 1 to 11 point Likert-type scale (e.g., 1 being “least surprising” or “not surprising” and 11 being “most surprising”). The emotional stimuli had an average appropriateness of 91% and an average effectiveness of 9.4 points.
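Both screening measures reduce to simple arithmetic. The Python sketch below is a minimal illustration under stated assumptions: each viewing is stored as a hypothetical (intended, reported, intensity) tuple, appropriateness is taken as the percentage of viewings whose reported category matches the intended one, and effectiveness as the mean intensity over all viewings. The ratings are invented toy values, not data from the study.

```python
from statistics import mean

# Hypothetical record format: (intended emotion, reported emotion, intensity 1-11).
Rating = tuple[str, str, int]

def appropriateness(ratings: list[Rating]) -> float:
    """Percentage of viewings whose reported category matches the intended one."""
    hits = sum(1 for intended, reported, _ in ratings if intended == reported)
    return 100.0 * hits / len(ratings)

def effectiveness(ratings: list[Rating]) -> float:
    """Mean reported intensity on the 1-11 Likert-type scale (all viewings)."""
    return mean(intensity for _, _, intensity in ratings)

# Toy ratings for illustration only.
ratings = [
    ("fear", "fear", 10),
    ("fear", "surprise", 8),
    ("sadness", "sadness", 9),
    ("anger", "anger", 11),
]
print(appropriateness(ratings))  # 75.0
print(effectiveness(ratings))    # 9.5
```

Whether effectiveness should average over all viewings or only over correctly categorized ones is not stated in the text; restricting the average is a one-line change.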

2.2 Experimental Settings and Procedures

The laboratory is a 5 m × 2.5 m soundproof room (noise level below 35 dB) in which outside noise and artifacts are completely blocked. A comfortable chair is placed in the middle of the laboratory, and a 38-inch TV monitor for presenting the film clips is placed in front of the chair. An intercommunication device is placed to the right of the chair so that participants can communicate with the experimenter. A CCTV camera is installed on top of the monitor to observe participants’ behaviours.

Before the experiment began, the procedure was explained to the participants, and electrodes were attached to their wrists, fingers, and ankle to measure physiological signals. Physiological signals were measured for 1 min before the presentation of the stimulus (baseline), for 2 to 4 min during the presentation of the stimulus (emotional state), and for 1 min after the presentation of the stimulus (recovery).

2.3 Physiological Measures

The physiological signals were acquired with the MP100 system (Biopac Systems Inc., USA). The sampling rate was fixed at 256 samples per second for all channels. EDA was measured with two Ag/AgCl electrodes attached to the index and middle fingers of the non-dominant hand. ECG was measured from both wrists and the left ankle (reference) using the two-electrode method based on lead I. PPG and SKT were measured from the little finger and the ring finger of the non-dominant hand, respectively. Appropriate amplification and band-pass filtering were performed.

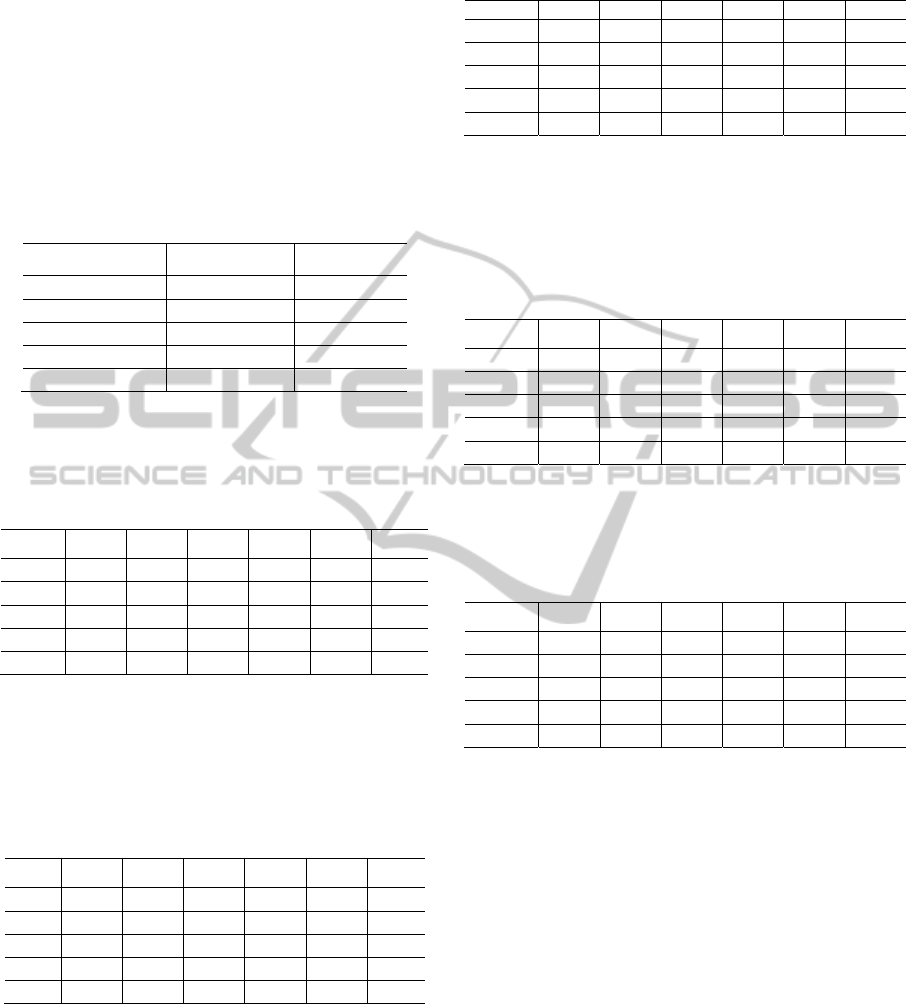

The obtained signals were analysed over 30 s of the baseline and 30 s of the emotional state using AcqKnowledge (ver. 3.8.1) software (USA). The 28 features were extracted from the physiological signals, as shown in Table 1 and Figure 2.
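Because every channel is sampled at 256 Hz, a 30 s analysis window corresponds to 7,680 samples. The sketch below cuts such windows from a single-channel recording. The text does not state which 30 s of each period were analysed, so the window positions used here (the last 30 s of the 1 min baseline and 30 s centred in a hypothetical 3 min clip) are assumptions, as are the function and variable names.

```python
FS = 256        # samples per second for all channels
WINDOW_S = 30   # analysis window length in seconds

def window(signal: list[float], start_s: float,
           length_s: float = WINDOW_S, fs: int = FS) -> list[float]:
    """Return `length_s` seconds of `signal`, starting `start_s` seconds in."""
    start = int(round(start_s * fs))
    stop = start + int(round(length_s * fs))
    if start < 0 or stop > len(signal):
        raise ValueError("window falls outside the recording")
    return signal[start:stop]

# Hypothetical layout: 60 s baseline, 180 s clip, 60 s recovery.
baseline_s, clip_s, recovery_s = 60, 180, 60
recording = [0.0] * ((baseline_s + clip_s + recovery_s) * FS)

# Assumed positions: last 30 s of baseline, 30 s centred in the clip.
base_win = window(recording, baseline_s - WINDOW_S)
emo_win = window(recording, baseline_s + clip_s / 2 - WINDOW_S / 2)
print(len(base_win), len(emo_win))  # 7680 7680
```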

Skin conductance level (SCL), the mean skin conductance response (meanSCR), and the number of skin conductance responses (NSCR) were obtained from EDA. The mean (meanSKT) and maximum (maxSKT) skin temperature were obtained from SKT, and the mean amplitude of blood volume changes (meanPPG) from PPG.
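A simplified sketch of these peripheral features follows. It assumes EDA in microsiemens, SKT in degrees Celsius, and a PPG amplitude trace, each already cut to one analysis window. The trough-to-peak detector and the 0.05 µS minimum SCR amplitude are illustrative assumptions, not the processing performed in AcqKnowledge, and meanPPG is approximated here as the mean trough-to-peak amplitude of the pulse waves.

```python
from statistics import mean

def rise_amplitudes(x: list[float], min_rise: float) -> list[float]:
    """Trough-to-peak rises of at least `min_rise` (simplified peak detector)."""
    amps, trough, peak, rising = [], x[0], x[0], False
    for v in x[1:]:
        if v > peak:                          # still climbing towards a peak
            peak, rising = v, True
        elif rising and v < peak:             # a local maximum was just passed
            if peak - trough >= min_rise:
                amps.append(peak - trough)
            trough = peak = v
            rising = False
        elif not rising and v < trough:       # still descending towards a trough
            trough = peak = v
    if rising and peak - trough >= min_rise:  # window ends while rising
        amps.append(peak - trough)
    return amps

def peripheral_features(eda: list[float], skt: list[float], ppg: list[float],
                        scr_threshold: float = 0.05) -> dict[str, float]:
    scrs = rise_amplitudes(eda, scr_threshold)  # hypothetical SCR criterion
    beats = rise_amplitudes(ppg, 0.0)           # every pulse-wave upstroke
    return {
        "SCL": mean(eda),
        "NSCR": len(scrs),
        "meanSCR": mean(scrs) if scrs else 0.0,
        "meanSKT": mean(skt),
        "maxSKT": max(skt),
        "meanPPG": mean(beats) if beats else 0.0,
    }

# Toy traces for illustration only.
eda = [1.0, 1.0, 1.2, 1.3, 1.25, 1.1, 1.1, 1.4, 1.35, 1.36, 1.2]
skt = [33.1, 33.2, 33.4]
ppg = [0.0, 1.0, 0.0, 2.0, 0.0]
f = peripheral_features(eda, skt, ppg)
print(f["NSCR"], f["meanPPG"])  # 2 1.5
```

The small 0.01 µS rise near the end of the toy EDA trace falls below the threshold and is not counted as an SCR.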

Table 1: Features extracted from physiological signals.
Signals: Features
EDA: SCL, NSCR, meanSCR
SKT: meanSKT, maxSKT
PPG: meanPPG
ECG, time domain, statistical parameters: meanRRI, stdRRI, meanHR, RMSSD, NN50, pNN50
ECG, time domain, geometric parameters: SD1, SD2, CSI, CVI, RRtri, TINN
ECG, frequency domain, FFT: FFT_apLF, FFT_apHF, FFT_nLF, FFT_nHF, FFT_LF/HF ratio
ECG, frequency domain, AR: AR_apLF, AR_apHF, AR_nLF, AR_nHF, AR_LF/HF ratio

Figure 2: Example of acquired physiological signals.

ECG was analysed in the time domain (statistical and geometric approaches) and in the frequency domain (FFT and AR). The RR interval (RRI) is the time between successive R peaks of the ECG signal. From the RRI and heart rate (HR), the mean RRI (meanRRI), the standard deviation of RRI (stdRRI), the mean heart rate (meanHR), RMSSD, NN50, and pNN50 were obtained. RMSSD is the square root of the mean of the squared differences between successive RRIs. NN50 is the number of pairs of successive RRIs that differ by more than 50 ms, and pNN50 is NN50 divided by the total number of RRIs. In addition, the RRI triangular index (RRtri) and TINN were extracted from the RRI density histogram as geometric parameters. RRtri is the total number of RRIs divided by the height (maximum) of the RRI density histogram, and TINN is the baseline width (M-N) of the RRI histogram, as shown in Figure 3.
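The statistical time-domain features and the triangular index can be computed directly from an RRI series in milliseconds, as in the following sketch. Following the text, pNN50 divides NN50 by the total number of RRIs. Two further choices are assumptions: meanHR is averaged from beat-by-beat heart rate (60000/RRI), and RRtri uses the conventional histogram bin width of 1/128 s (7.8125 ms). TINN is omitted because it requires fitting a triangle to the histogram.

```python
import math
from collections import Counter
from statistics import mean, stdev

def time_domain_hrv(rri_ms: list[float]) -> dict[str, float]:
    """Statistical time-domain HRV features from RR intervals in ms."""
    diffs = [b - a for a, b in zip(rri_ms, rri_ms[1:])]  # successive differences
    nn50 = sum(1 for d in diffs if abs(d) > 50)          # pairs differing by > 50 ms
    return {
        "meanRRI": mean(rri_ms),
        "stdRRI": stdev(rri_ms),
        "meanHR": mean(60000.0 / r for r in rri_ms),     # beats per minute
        "RMSSD": math.sqrt(mean(d * d for d in diffs)),
        "NN50": nn50,
        "pNN50": 100.0 * nn50 / len(rri_ms),            # denominator as in the text
    }

def rr_triangular_index(rri_ms: list[float], bin_ms: float = 1000.0 / 128) -> float:
    """Total number of RRIs divided by the height of the RRI histogram."""
    counts = Counter(int(r // bin_ms) for r in rri_ms)
    return len(rri_ms) / max(counts.values())

rri = [800, 810, 870, 860, 800, 790]
h = time_domain_hrv(rri)
print(h["NN50"], rr_triangular_index(rri))  # 2 3.0
```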

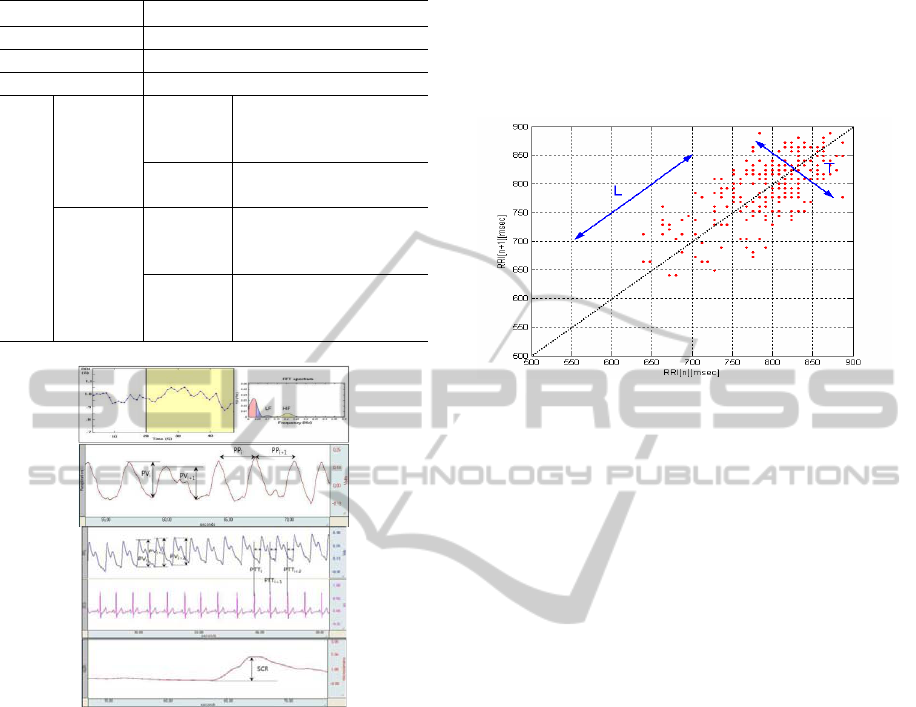

The plot of RRI(n+1) against RRI(n), called the Lorentz plot or Poincaré plot, is shown in Figure 3, where RRI(n) and RRI(n+1) are the n-th and (n+1)-th RRIs, respectively. In the figure, L is the direction along which the data are most efficiently represented, and T is the direction orthogonal to L. The standard deviations SD1 and SD2 are computed along the T and L directions, respectively. As emotional features, the cardiac sympathetic index (CSI) is calculated as CSI = (4·SD2)/(4·SD1), and the cardiac vagal index (CVI) as CVI = log10[(4·SD1)·(4·SD2)]. SD1, SD2, CSI, and CVI reflect short-term heart rate variability (HRV), long-term HRV, sympathetic nerve activity, and parasympathetic activity, respectively.

Figure 3: Lorentz plot of RRI.
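SD1 and SD2 can be obtained by projecting each (RRI(n), RRI(n+1)) point onto the T and L axes. The sketch below takes L as the identity line RRI(n+1) = RRI(n), which is the usual Poincaré-plot convention but an assumption here, and then applies the CSI and CVI formulas defined in this section. Using the population standard deviation (`pstdev`) rather than the sample version is likewise a choice made for illustration.

```python
import math
from statistics import pstdev

def poincare_features(rri_ms: list[float]) -> dict[str, float]:
    """SD1, SD2, CSI and CVI from a Lorentz (Poincare) plot of RR intervals."""
    pairs = list(zip(rri_ms, rri_ms[1:]))                 # (RRI(n), RRI(n+1))
    across = [(b - a) / math.sqrt(2) for a, b in pairs]   # T axis: across identity line
    along = [(b + a) / math.sqrt(2) for a, b in pairs]    # L axis: along identity line
    sd1, sd2 = pstdev(across), pstdev(along)
    return {
        "SD1": sd1,
        "SD2": sd2,
        "CSI": (4 * sd2) / (4 * sd1),
        "CVI": math.log10((4 * sd1) * (4 * sd2)),
    }

features = poincare_features([800, 820, 840, 820, 800])
print(round(features["CSI"], 6))  # 1.0
```

In this toy series SD1 and SD2 are both 20/√2 ms, so CSI is 1 and CVI is log10(3200).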

To extract emotional features in the frequency domain, we used the fast Fourier transform (FFT) and the autoregressive (AR) power spectrum. The low-frequency (LF) band is 0.04 to 0.15 Hz and the high-frequency (HF) band is 0.15 to 0.4 Hz. apLF is the total spectral power between 0.04 and 0.15 Hz, and nLF is its normalized power; apHF and nHF are the total spectral power between 0.15 and 0.4 Hz and its normalized power, respectively. The LF/HF ratio is the ratio of low- to high-frequency power. Each of these parameters was computed with both FFT and AR. LF and HF are used as indexes of sympathetic and vagal activity, respectively, and the LF/HF ratio reflects the global sympatho-vagal balance and can be used as a measure of it.
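The FFT-based band powers can be illustrated with a plain discrete Fourier transform of an evenly resampled RRI series (tachogram). The sketch assumes the tachogram has already been interpolated to 4 Hz, and it defines nLF and nHF as LF/(LF+HF) and HF/(LF+HF) in percent, a common simplification; the study does not state its exact normalization. Power is only proportionally scaled, which does not affect nLF, nHF, or the LF/HF ratio. An AR estimate (for example, Burg's method) would replace the periodogram step and is not shown.

```python
import cmath
import math
from statistics import mean

LF_BAND = (0.04, 0.15)  # Hz
HF_BAND = (0.15, 0.40)  # Hz

def periodogram(x: list[float], fs: float) -> tuple[list[float], list[float]]:
    """One-sided DFT power spectrum (proportional scaling) of a mean-removed series."""
    n = len(x)
    m = mean(x)
    centred = [v - m for v in x]
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coef = sum(v * cmath.exp(-2j * math.pi * k * i / n) for i, v in enumerate(centred))
        freqs.append(k * fs / n)
        power.append(abs(coef) ** 2 / n)
    return freqs, power

def band_power(freqs: list[float], power: list[float], band: tuple[float, float]) -> float:
    lo, hi = band
    return sum(p for f, p in zip(freqs, power) if lo <= f < hi)

def frequency_features(tachogram_ms: list[float], fs: float = 4.0) -> dict[str, float]:
    freqs, power = periodogram(tachogram_ms, fs)
    ap_lf = band_power(freqs, power, LF_BAND)
    ap_hf = band_power(freqs, power, HF_BAND)
    return {
        "apLF": ap_lf,
        "apHF": ap_hf,
        "nLF": 100.0 * ap_lf / (ap_lf + ap_hf),
        "nHF": 100.0 * ap_hf / (ap_lf + ap_hf),
        "LF/HF": ap_lf / ap_hf,
    }

# Synthetic 120 s tachogram at 4 Hz: a 0.10 Hz (LF) and a weaker 0.25 Hz (HF) oscillation.
fs = 4.0
tach = [800 + 40 * math.sin(2 * math.pi * 0.10 * i / fs)
        + 10 * math.sin(2 * math.pi * 0.25 * i / fs) for i in range(480)]
feats = frequency_features(tach, fs)
print(round(feats["LF/HF"], 3))  # 16.0
```

The LF oscillation has four times the amplitude of the HF one, so its power, and hence the LF/HF ratio, is 4² = 16.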

2.4 Data Analysis

A total of 450 physiological signal data sets (5 emotions × 10 stimuli × 9 cases) were used for emotion classification, excluding data with severe artifacts caused by movement, noise, etc. To identify the algorithm best able to recognize the five emotions from the 28 physiological features, five machine learning algorithms were used: LDA, one of the oldest mechanical classification systems; CART, a robust classification and regression tree; the unsupervised SOM; the density-based Naïve Bayes classifier; and SVM with a Gaussian radial basis function kernel.
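Of the five classifiers, Naïve Bayes is compact enough to sketch in full. The version below assumes Gaussian class-conditional densities for each feature, which is one common reading of a density-based Naïve Bayes classifier but is not confirmed by the study; the class name and the small variance floor are hypothetical. In practice a library implementation such as scikit-learn's `GaussianNB` would normally be used.

```python
import math
from collections import defaultdict
from statistics import mean, pvariance

class GaussianNaiveBayes:
    """Naive Bayes with independent Gaussian densities per feature and class."""

    def fit(self, X: list[list[float]], y: list[str]) -> "GaussianNaiveBayes":
        groups = defaultdict(list)
        for row, label in zip(X, y):
            groups[label].append(row)
        self.priors, self.params = {}, {}
        for label, rows in groups.items():
            self.priors[label] = len(rows) / len(X)
            # (mean, variance) per feature; the floor avoids division by zero.
            self.params[label] = [(mean(col), pvariance(col) + 1e-9) for col in zip(*rows)]
        return self

    def _log_posterior(self, row: list[float], label: str) -> float:
        lp = math.log(self.priors[label])
        for v, (mu, var) in zip(row, self.params[label]):
            lp += -0.5 * math.log(2 * math.pi * var) - (v - mu) ** 2 / (2 * var)
        return lp

    def predict(self, X: list[list[float]]) -> list[str]:
        return [max(self.priors, key=lambda c: self._log_posterior(row, c)) for row in X]

# Toy two-feature data for two of the five emotions.
X = [[1.0, 2.0], [1.2, 1.8], [0.8, 2.2], [5.0, 6.0], [5.2, 5.8], [4.8, 6.2]]
y = ["sadness"] * 3 + ["fear"] * 3
model = GaussianNaiveBayes().fit(X, y)
print(model.predict([[1.1, 2.0], [5.1, 6.1]]))  # ['sadness', 'fear']
```

Working with log-probabilities avoids numerical underflow when many features (here up to 28) are multiplied together.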


3 RESULTS OF EMOTION CLASSIFICATION

The 28 features extracted from the physiological signals were applied to the emotion classification algorithms, i.e., LDA, CART, SOMs, Naïve Bayes, and SVM, to classify the five emotions. Table 2 shows the classification results of the five algorithms.

Table 2: Result of emotion classification by 5 machine learning algorithms.
Algorithm Accuracy (%) Features (N)
LDA 41.3 28
CART 78.2 28
SOMs 45.8 28
Naïve Bayes 73.3 28
SVM 100.0 28

In the LDA analysis, sadness was recognized with an accuracy of 35.6%, anger 33.3%, fear 50.5%, surprise 38.8%, and stress 49.0% (Table 3).

Table 3: Result of emotion classification by LDA (%).
Sadness Anger Fear Surprise Stress Total
Sadness 35.6 22.1 14.4 6.7 21.2 100.0
Anger 14.3 33.3 23.8 6.7 21.9 100.0
Fear 9.9 12.9 50.5 13.9 12.9 100.0
Surprise 10.7 16.5 15.5 38.8 18.4 100.0
Stress 12.0 15.0 9.0 15.0 49.0 100.0
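Each row of Tables 3 to 7 gives, for one emotion, the percentage of its samples assigned to each class, so the diagonal entries are the per-emotion accuracies. The sketch below builds such a row-normalized table from hypothetical label lists and shows that the overall accuracy reported in Table 2 is the sample-weighted average of the diagonal, not the simple mean of the per-emotion accuracies. The function names and toy labels are illustrative only.

```python
from collections import Counter

LABELS = ["sadness", "anger", "fear", "surprise", "stress"]

def confusion_percent(true: list[str], pred: list[str],
                      labels: list[str] = LABELS) -> dict[str, dict[str, float]]:
    """Row-normalized confusion table: rows are true classes, values in %."""
    counts = Counter(zip(true, pred))
    table = {}
    for t in labels:
        n = sum(counts[(t, p)] for p in labels)
        table[t] = {p: 100.0 * counts[(t, p)] / n if n else 0.0 for p in labels}
    return table

def overall_accuracy(true: list[str], pred: list[str]) -> float:
    """Percentage of all samples classified correctly."""
    return 100.0 * sum(t == p for t, p in zip(true, pred)) / len(true)

true = ["fear"] * 4 + ["anger"] * 2
pred = ["fear", "fear", "fear", "stress", "anger", "fear"]
table = confusion_percent(true, pred)
print(table["fear"]["fear"], table["anger"]["anger"])  # 75.0 50.0
print(round(overall_accuracy(true, pred), 1))           # 66.7
```

Here the simple mean of the two diagonal entries would be 62.5%, while the overall accuracy is 66.7% because fear has twice as many samples as anger.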

CART achieved an overall accuracy of 78.2% across all emotions, with accuracies of 85.6% for sadness, 77.1% for anger, 79.2% for fear, 72.8% for surprise, and 76.0% for stress (Table 4).

Table 4: Result of emotion classification by CART (%).
Sadness Anger Fear Surprise Stress Total
Sadness 85.6 3.8 2.9 2.9 4.8 100.0
Anger 9.5 77.1 1.9 3.8 7.6 100.0
Fear 2.0 7.9 79.2 2.0 8.9 100.0
Surprise 4.9 6.8 9.7 72.8 5.8 100.0
Stress 9.0 5.0 6.0 4.0 76.0 100.0

Emotion classification using SOMs yielded recognition accuracies of 73.1%, 42.9%, 38.6%, 41.7%, and 32.0% for sadness, anger, fear, surprise, and stress, respectively (Table 5).

Table 5: Result of emotion classification by SOMs (%).
Sadness Anger Fear Surprise Stress Total
Sadness 73.1 7.7 4.8 9.6 4.8 100.0
Anger 24.8 42.9 11.4 10.5 10.5 100.0
Fear 31.7 14.9 38.6 9.9 5.0 100.0
Surprise 23.3 9.7 15.5 41.7 9.7 100.0
Stress 27.0 13.0 9.0 19.0 32.0 100.0

The overall accuracy of the Naïve Bayes algorithm across all emotions was 73.3%. Naïve Bayes recognized sadness with 77.9% accuracy, anger with 72.4%, fear with 78.2%, surprise with 59.2%, and stress with 79.0% (Table 6).

Table 6: Result of emotion classification by Naïve Bayes (%).
Sadness Anger Fear Surprise Stress Total
Sadness 77.9 3.8 2.9 4.8 10.6 100.0
Anger 1.9 72.4 8.6 4.8 12.4 100.0
Fear 5.0 6.9 78.2 3.0 6.9 100.0
Surprise 5.8 12.6 8.7 59.2 13.6 100.0
Stress 14.0 3.0 2.0 2.0 79.0 100.0

Finally, the overall accuracy of SVM was 100.0%, and every emotion was classified with 100.0% accuracy (Table 7).

Table 7: Result of emotion classification by SVM (%).
Sadness Anger Fear Surprise Stress Total
Sadness 100.0 0.0 0.0 0.0 0.0 100.0
Anger 0.0 100.0 0.0 0.0 0.0 100.0
Fear 0.0 0.0 100.0 0.0 0.0 100.0
Surprise 0.0 0.0 0.0 100.0 0.0 100.0
Stress 0.0 0.0 0.0 0.0 100.0 100.0

4 CONCLUSIONS

The aim of this study was to identify the optimal emotion recognition algorithm for classifying the negative emotions of sadness, anger, fear, surprise, and stress. Our results showed that SVM was the best of the five algorithms for classifying these emotions, with an accuracy much higher than chance level (about 20% for five classes) when applied to our physiological signal database. SVM is designed for two-class classification: it finds the optimal hyperplane that minimizes the expected classification error on test samples. It has been used as a pattern classifier to overcome the difficulty of pattern classification caused by large within-class variation of features and overlap between classes, even when the features are carefully extracted (Takahashi, 2004). However, our result is the classification accuracy on the training set only, as the data were not divided into training and test sets. Because the choice of training and test sets can affect the results, the classification accuracy should be averaged over repeated sub-sampling validation with separate training and test sets. We will therefore report this average classification accuracy in further analysis.
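Repeated random sub-sampling validation can be sketched as follows. A nearest-centroid classifier stands in for the five algorithms purely to keep the example self-contained; the 30% test fraction, 20 repetitions, fixed seed, and all function names are assumptions. Because each test set is disjoint from the data the model was fitted on, the averaged accuracy estimates performance on unseen samples rather than on the training set.

```python
import random
from statistics import mean

def fit_centroids(X, y):
    """Hypothetical stand-in classifier: one mean feature vector per class."""
    centroids = {}
    for label in set(y):
        rows = [x for x, lab in zip(X, y) if lab == label]
        centroids[label] = [mean(col) for col in zip(*rows)]
    return centroids

def predict_centroid(centroids, x):
    """Assign x to the class with the nearest centroid (squared Euclidean)."""
    return min(centroids,
               key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))

def repeated_subsampling(X, y, n_repeats=20, test_fraction=0.3, seed=0):
    """Mean test accuracy (%) over random, disjoint train/test splits."""
    rng = random.Random(seed)
    idx = list(range(len(X)))
    n_test = max(1, round(test_fraction * len(X)))
    scores = []
    for _ in range(n_repeats):
        rng.shuffle(idx)
        test, train = idx[:n_test], idx[n_test:]
        model = fit_centroids([X[i] for i in train], [y[i] for i in train])
        hits = sum(predict_centroid(model, X[i]) == y[i] for i in test)
        scores.append(100.0 * hits / n_test)
    return mean(scores), scores

# Toy data: two well-separated classes of ten samples each.
X = [[0.1 * i, 0.1 * i] for i in range(10)] + [[10 + 0.1 * i, 10 + 0.1 * i] for i in range(10)]
y = ["sadness"] * 10 + ["anger"] * 10
avg, scores = repeated_subsampling(X, y)
print(avg)  # 100.0
```

A stratified split, which keeps the five emotions balanced in every test set, would be a natural refinement.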

LDA and SOM showed the lowest accuracy in emotion recognition. We think this result reflects the variability of physiological signals. Because physiological responses are only more or less unique to each emotion and only partly person-independent, the recognition rate may fall as the number of emotion categories increases (Kim, Bang, Kim, 2004). These uncertainties may be an important cause of the lower recognition rates and may have complicated model selection for LDA and SOM. It is also possible that LDA, a linear model, and SOM performed poorly because our physiological variables are not linear, the extracted features are not linearly separable, and the features used vary widely. To overcome this, a normalization of the features that reduces this large variability will be needed.
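One common way to reduce large differences in scale between features (for example, RRIs in milliseconds versus skin conductance in microsiemens) is z-score standardization. The sketch below illustrates that option rather than the normalization the authors intend to use; it estimates the mean and standard deviation on training data only, so that the same transformation can be applied unchanged to test data. Computing the statistics per participant instead would additionally target between-person variability.

```python
from statistics import mean, pstdev

def fit_zscore(X_train: list[list[float]]) -> list[tuple[float, float]]:
    """Per-feature (mean, standard deviation) estimated on training rows."""
    params = []
    for col in zip(*X_train):
        sd = pstdev(col)
        params.append((mean(col), sd if sd > 0 else 1.0))  # guard constant features
    return params

def apply_zscore(params: list[tuple[float, float]],
                 X: list[list[float]]) -> list[list[float]]:
    """Standardize each feature with the training statistics."""
    return [[(v - mu) / sd for v, (mu, sd) in zip(row, params)] for row in X]

# Hypothetical rows: [meanRRI in ms, SCL in microsiemens].
X_train = [[800.0, 0.5], [900.0, 1.5]]
params = fit_zscore(X_train)
print(apply_zscore(params, X_train))  # [[-1.0, -1.0], [1.0, 1.0]]
```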

Nevertheless, our results provide a better opportunity to recognize human emotions and to identify the optimal emotion classification algorithm using physiological signals. They can be applied to realizing emotional interaction between humans and machines and may play an important role in applications such as human-friendly personal robots and other devices.

ACKNOWLEDGEMENTS

This research was supported by the Converging Research Center Program funded by the Ministry of Education, Science and Technology (No. 2011K000655 and 2011K000658).

REFERENCES

Wagner, J., Kim, J., Andre, E., 2005. From physiological signals to emotions: Implementing and comparing selected methods for feature extraction and classification, IEEE International Conference on Multimedia and Expo, Amsterdam, pp. 940-943.

Sebe, N., Cohen, I., Huang, T. S., 2005. Multimodal emotion recognition, in Handbook of Pattern Recognition and Computer Vision, Amsterdam: Publications of the Universiteit van Amsterdam, pp. 1-23.

Picard, R. W., Vyzas, E., Healey, J., 2001. Toward machine emotional intelligence: Analysis of affective physiological state, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 23, pp. 1175-1191.

Cowie, R., Douglas-Cowie, E., Tsapatsoulis, N., Votsis, G., Kollias, S., Fellenz, W., Taylor, J. G., 2001. Emotion recognition in human computer interaction, IEEE Signal Processing Magazine, vol. 18, pp. 32-80.

Haag, A., Goronzy, S., Schaich, P., Williams, J., 2004. Emotion recognition using bio-sensors: First steps towards an automatic system, Affective Dialogue Systems, vol. 3068, pp. 36-48.

Healey, J. A., 2000. Wearable and automotive systems for affect recognition from physiology, Doctor of Philosophy, Massachusetts Institute of Technology, Cambridge, MA.

Nasoz, F., Alvarez, K., Lisetti, C. L., Finkelstein, N., 2003. Emotion recognition from physiological signals for user modelling of affect, International Journal of Cognition, Technology and Work - Special Issue on Presence, vol. 6, pp. 1-8.

Drummond, P. D., Quah, S. H., 2001. The effect of expressing anger on cardiovascular reactivity and facial blood flow in Chinese and Caucasians, Psychophysiology, vol. 38, pp. 190-196.

Calvo, R., Brown, I., Scheding, S., 2009. Effect of experimental factors on the recognition of affective mental states through physiological measures, AI 2009: Advances in Artificial Intelligence, vol. 5866, pp. 62-70.

Kim, K. H., Bang, S. W., Kim, S. R., 2004. Emotion recognition system using short-term monitoring of physiological signals, Medical & Biological Engineering & Computing, vol. 42, pp. 419-427.

Takahashi, K., 2004. Remarks on emotion recognition from bio-potential signals, 2nd International Conference on Autonomous Robots and Agents, Palmerston North, pp. 186-191.
