VIDEO BASED FLAME DETECTION

Using Spatio-temporal Features and SVM Classification

Kosmas Dimitropoulos, Filareti Tsalakanidou and Nikos Grammalidis

Informatics and Telematics Institute, CERTH, 1st km Thermi-Panorama Rd., Thessaloniki, Greece

Keywords: Wildfires, Video Surveillance, Flame Detection, 2D Wavelet Analysis, Feature Fusion, SVM Classification.

Abstract: Video-based surveillance systems can be used for early fire detection and localization in order to minimize

the damage and casualties caused by wildfires. However, the reliability of these systems is an important issue,

and the trade-off between early detection and false alarm rate therefore has to be considered. In this paper, we present a new

algorithm for video based flame detection, which identifies spatio-temporal features of fire such as colour

probability, contour irregularity, spatial energy, flickering and spatio-temporal energy. For each candidate

region of an image a feature vector is generated and used as input to an SVM classifier, which discriminates

between fire and fire-coloured regions. Experimental results show that the proposed methodology provides

high fire detection rates with a reasonable false alarm ratio.

1 INTRODUCTION

Wildfires are considered one of the most dangerous

natural disasters having serious ecological,

economic and social impacts. Beyond taking

precautionary measures to avoid a forest fire, early

warning and immediate response to a fire breakout is

the only way to minimize its consequences. Hence,

the most important goal in fire surveillance is quick

and reliable detection and localization of fire. Video-

based forest surveillance is one of the most

promising solutions for automatic forest fire

detection, offering several advantages such as: low

cost and short response time for fire and smoke

detection, ability to monitor large areas and easy

confirmation of the alarm by a human operator

through the surveillance monitor (Ko, 2011).

However, the main disadvantage of these

optical-based systems is an increased false alarm

rate due to atmospheric conditions (clouds, shadows,

dust particles), light reflections, etc. (Stipaničev, 2006).

Video-based flame detection techniques have

been widely investigated during the last decade. The

main challenge that researchers have to face is the

chaotic and complex nature of the fire phenomenon

and the large variations of flame appearance in

video. In (Toreyin, 2006), Toreyin et al. proposed an

algorithm in which flame and fire flicker is detected

by analyzing the video in the wavelet domain, while

in (Toreyin, 2005) a hidden Markov model was used

to mimic the temporal behavior of flame. Zhang et al.

(Zhang, 2008) proposed a contour-based forest fire

detection algorithm using FFT and wavelets,

whereas Celik and Demirel (Celik, 2009) presented

a rule-based generic color model for fire-flame pixel

classification. More recently, Ko et al. (Ko, 2010)

used hierarchical Bayesian networks for fire-flame

detection and later proposed a fire-flame detection

method using fuzzy finite automata (Ko, 2011).

Despite the extensive research results reported in the

literature, video flame detection remains an open

issue. This is due to the fact that many natural

objects have colours similar to those of fire

(including the sun, various artificial lights or

reflections of them on various surfaces) and can

often be mistakenly detected as flames. In this paper,

we present a new algorithm for video based flame

detection, which identifies spatio-temporal features

of fire such as colour probability, contour

irregularity, spatial energy, flickering and spatio-

temporal energy. More specifically, we propose a

new colour analysis approach using non-parametric

modelling and we apply a 2D wavelet analysis only

on the red channel of the image to estimate the spatial

energy. In addition to the detection of flickering

effect, we introduce a new feature concerning the

variance of spatial energy in a region of the image

within a temporal window in order to further reduce

the false alarm rate. Finally, a Support Vector

Machine (SVM) classifier is applied for the

discrimination between fire and non-fire regions of

an image.

Dimitropoulos K., Tsalakanidou F. and Grammalidis N.

VIDEO BASED FLAME DETECTION - Using Spatio-temporal Features and SVM Classification.

DOI: 10.5220/0003858104530456

In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP-2012), pages 453-456

ISBN: 978-989-8565-03-7

Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

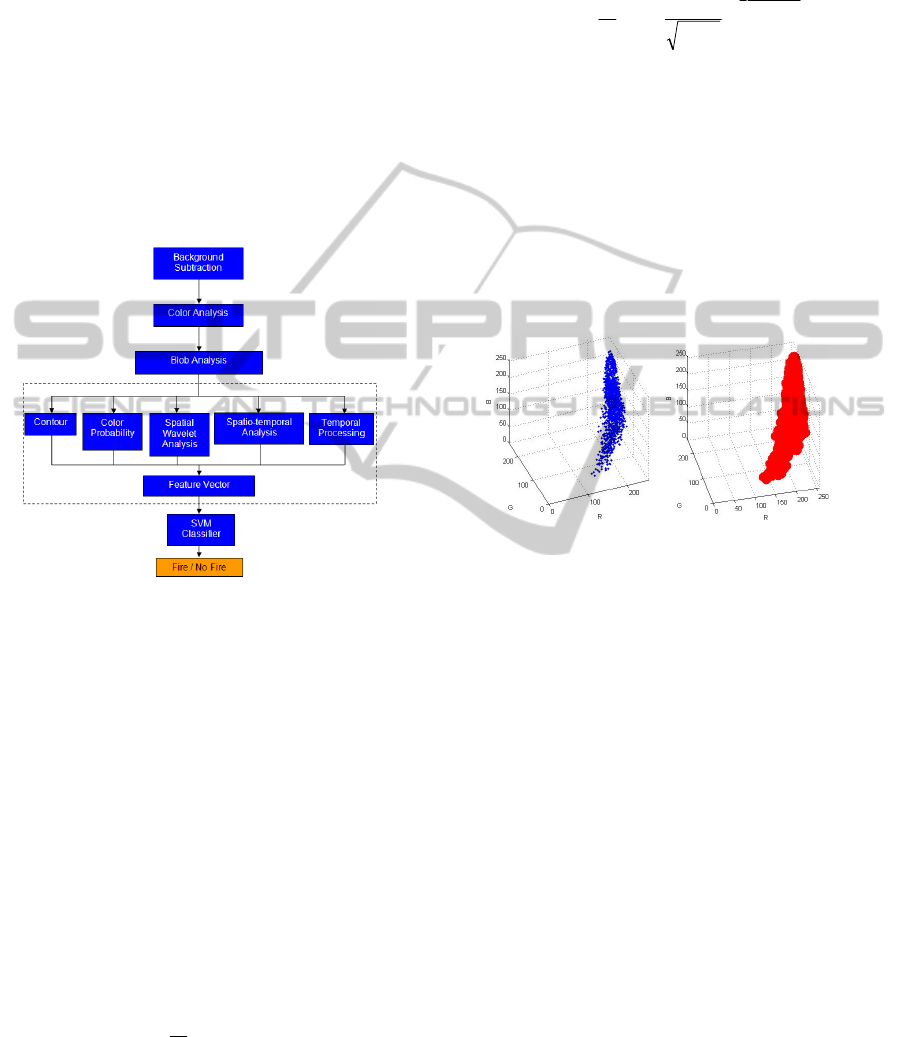

2 METHODOLOGY

The proposed algorithm initially applies background

subtraction and colour analysis processing to

identify candidate flame regions on the image and

subsequently distinguishes between fire and non-fire

objects based on a set of extracted features as shown

in Figure 1. The different processing steps of the

proposed algorithm are described in detail in the

following sections.

Figure 1: The proposed methodology.

In the first processing step moving pixels are

detected using a simple median average background

subtraction algorithm (McFarlane, 1995). The

second processing step aims to filter out non-fire

coloured moving pixels. Only the remaining pixels

are considered for blob analysis, thus reducing the

required computational time. To filter out non-fire

moving pixels, we compare their values with a

predefined RGB colour distribution created by a

number of pixel-samples from video sequences

containing real fires.
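As an illustration of the first step, the following minimal sketch assumes the running approximate-median update that is commonly associated with (McFarlane, 1995): each background pixel moves by one grey level towards the current frame, and a pixel is marked as moving when it differs from the background by more than a threshold. The function names and the threshold value of 20 are hypothetical and are not taken from the paper.

```python
def update_background(background, frame):
    """One approximate-median step: move each background pixel by 1 towards the frame."""
    return [
        [b + (f > b) - (f < b) for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


def moving_mask(background, frame, threshold=20):
    """Mark pixels whose absolute difference from the background exceeds the threshold."""
    return [
        [abs(f - b) > threshold for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


# Tiny 2x3 grey-level example (values are illustrative only).
background = [[100, 100, 100], [100, 100, 100]]
frame = [[101, 100, 220], [99, 100, 100]]
mask = moving_mask(background, frame)               # only the bright pixel is moving
background = update_background(background, frame)   # background drifts by at most 1
```

Only the pixels flagged in `mask` would then be passed to the colour analysis step, which is what keeps the later blob analysis inexpensive.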

Let $x_1, x_2, \ldots, x_N$ be the fire-coloured samples of the predefined distribution. Using these samples, the probability density function of a moving pixel $x_t$ can be non-parametrically estimated using the kernel $K_h$ (Elgammal, 2000) as:

$$\Pr(x_t) = \frac{1}{N} \sum_{i=1}^{N} K_h(x_t - x_i)$$

If we choose our kernel estimator function, $K_h$, to be a Gaussian kernel, $K_h = N(0, S)$, where $S$ represents the kernel function bandwidth, and we assume a diagonal covariance matrix $S$ with a different kernel bandwidth $\sigma_j$ for the $j$-th colour channel, then the density can be estimated as:

$$\Pr(x_t) = \frac{1}{N} \sum_{i=1}^{N} \prod_{j=1}^{d} \frac{1}{\sqrt{2\pi\sigma_j^2}} \, e^{-\frac{1}{2} \frac{(x_{t_j} - x_{i_j})^2}{\sigma_j^2}}$$

where $d$ is the number of colour channels.

Using this probability estimate, a pixel is

considered fire-coloured if $\Pr(x_t) > th$,

where the threshold th is a global threshold for all

samples of the predefined distribution and can be

adjusted to achieve a desired percentage of false

positives. Hence, if the pixel has an RGB value,

which belongs to the distribution of Figure 2(b), then

it is considered as a fire-coloured pixel. After the

blob analysis step, the colour probability of each

candidate blob is estimated by summing the colour

probabilities of all pixels in the blob.

Figure 2: (a) RGB color distribution and (b) flame color

distribution with a global threshold around each sample.
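The colour model can be sketched as follows, assuming a Gaussian kernel with one bandwidth per channel and assuming that a pixel is accepted when its estimated density under the fire-colour samples exceeds the global threshold. The sample values, bandwidths and threshold below are hypothetical placeholders, not the values used in the experiments.

```python
import math


def colour_probability(pixel, samples, sigmas):
    """Gaussian kernel density estimate of `pixel` under the fire-colour samples."""
    total = 0.0
    for sample in samples:
        kernel = 1.0
        # Product over colour channels (diagonal bandwidth matrix).
        for x, s, sigma in zip(pixel, sample, sigmas):
            norm = 1.0 / math.sqrt(2.0 * math.pi * sigma ** 2)
            kernel *= norm * math.exp(-0.5 * (x - s) ** 2 / sigma ** 2)
        total += kernel
    return total / len(samples)


def is_fire_coloured(pixel, samples, sigmas, th):
    """Accept the pixel when its estimated density exceeds the global threshold."""
    return colour_probability(pixel, samples, sigmas) > th


def blob_colour_probability(pixels, samples, sigmas):
    """Colour feature of a blob: sum of the colour probabilities of its pixels."""
    return sum(colour_probability(p, samples, sigmas) for p in pixels)


fire_samples = [(250, 120, 30), (240, 100, 20), (255, 160, 40)]  # hypothetical RGB samples
sigmas = (10.0, 10.0, 10.0)                                      # hypothetical bandwidths
th = 1e-6                                                        # hypothetical threshold
```

With these placeholder values, an orange pixel such as `(248, 118, 28)` is accepted while a green pixel such as `(40, 200, 60)` is rejected; in practice the threshold would be tuned on labelled data to reach the desired false positive percentage.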

The next processing step concerns the contour

of the blob. In general, shapes of flame objects are

often irregular, so high irregularity/variability of the

blob contour is also considered as a flame indicator.

This irregularity is identified by tracing the object

contour, starting from any pixel on it. Thus a

direction arrow is defined for each pixel on the

contour, which can be specified by a label $L$, where

$0 \le L < 8$, assuming 8-connected pixels, as shown

in the figure below.

The variability of the contour for each contour

pixel can be measured by calculating the difference

(distance) between two consecutive directions (from

and to the specific pixel) using the following

formula returning a distance between 0 and 4 for

each pixel:

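$$d = \begin{cases} d_{\max} - d_{\min}, & \text{if } d_{\max} - d_{\min} \le 4 \\ 8 - (d_{\max} - d_{\min}), & \text{otherwise} \end{cases}$$

where $d_{\min} = \min(d_1, d_2)$, $d_{\max} = \max(d_1, d_2)$, and $d_1$, $d_2$ are the codes of two consecutive directions.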

The average value of this distance function can

be used as a measure of the irregularity of the


contour and is used as the second extracted feature

in the proposed approach.
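The contour irregularity measure can be illustrated with a short sketch. It assumes that the contour has already been traced into a list of direction codes in the range 0 to 7 and that the contour is closed, so that the last direction is compared with the first. The function names are hypothetical.

```python
def direction_distance(d1, d2):
    """Circular distance between two 8-connected direction codes (result in 0..4)."""
    d_min, d_max = min(d1, d2), max(d1, d2)
    diff = d_max - d_min
    return diff if diff <= 4 else 8 - diff


def contour_irregularity(codes):
    """Average direction change along a closed contour given as direction codes."""
    n = len(codes)
    if n == 0:
        return 0.0
    # codes[i - 1] wraps to the last code for i == 0, which closes the contour.
    total = sum(direction_distance(codes[i - 1], codes[i]) for i in range(n))
    return total / n


square = [0, 0, 2, 2, 4, 4, 6, 6]   # axis-aligned square: four 90-degree turns
jagged = [0, 3, 7, 2, 6, 1, 5, 4]   # illustrative code sequence with sharp turns
```

For the square, four of the eight steps change direction by two codes, giving an average of 1.0, whereas the illustrative jagged sequence yields a much larger value, in line with the use of this average as a flame indicator.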

The third feature concerns the spatial variation in

a blob. Usually, there is higher spatial variation in

regions containing fire compared to fire-coloured

objects. To this end, a two-dimensional wavelet

transform is applied to the red channel of the image, and the

final mask is obtained by adding low-high, high-low

and high-high wavelet sub-images. For each blob,

spatial wavelet energy is estimated by summing the

individual energy of each pixel. However, the spatial

energy within a blob region changes, since the shape

of fire changes irregularly due to the airflow caused

by wind or the type of burning material. For this

reason, another (fourth) feature is extracted

considering the spatial variation in a blob within a

temporal window of N frames.
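The two energy features can be sketched as follows. The paper does not name the wavelet or the exact energy definition, so this example assumes a single-level Haar transform and takes the energy of each coefficient position as the sum of the squared low-high, high-low and high-high coefficients. The spatio-temporal feature is assumed to be the variance of the blob energy over the temporal window. All function names are hypothetical.

```python
def haar_detail_energy(channel):
    """Single-level 2D Haar transform of a 2D list (e.g. the red channel).

    Returns, for each 2x2 block, the sum of the squared LH, HL and HH coefficients.
    """
    rows = len(channel) // 2 * 2        # ignore a trailing odd row or column
    cols = len(channel[0]) // 2 * 2
    energy = []
    for r in range(0, rows, 2):
        out_row = []
        for c in range(0, cols, 2):
            a, b = channel[r][c], channel[r][c + 1]
            d, e = channel[r + 1][c], channel[r + 1][c + 1]
            lh = (a + b - d - e) / 2.0  # top row minus bottom row
            hl = (a - b + d - e) / 2.0  # left column minus right column
            hh = (a - b - d + e) / 2.0  # diagonal detail
            out_row.append(lh * lh + hl * hl + hh * hh)
        energy.append(out_row)
    return energy


def blob_energy(energy, blob):
    """Spatial energy of a blob given as (row, col) positions in the energy map."""
    return sum(energy[r][c] for r, c in blob)


def energy_variance(energies):
    """Variance of the blob energy over a temporal window of frames."""
    mean = sum(energies) / len(energies)
    return sum((e - mean) ** 2 for e in energies) / len(energies)


flat = [[100] * 4 for _ in range(4)]                  # uniform fire-coloured object
textured = [[0, 255, 0, 255], [255, 0, 255, 0]] * 2   # strongly varying region
blob = [(0, 0), (0, 1), (1, 0), (1, 1)]
```

A uniform region produces zero detail energy, while the textured region produces a large value; a static textured object would still give a near-zero `energy_variance` over time, which is the motivation for the fourth feature.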

The final feature concerns the detection of

flickering within a region of a frame. In our

approach, we use a temporal window of N frames (N

equals 50 in our experiments), yielding a 1-D

temporal sequence of N binary values for each pixel

position. Each binary value is set to 0 or 1 if the

pixel was labelled as “no flame candidate” or “flame

candidate”, respectively, after the background

subtraction and colour analysis steps. To quantify the

effect of flickering, we traverse this temporal

sequence for each “flame candidate” pixel and

measure the number of transitions from “no flame

candidate” to “flame candidate” (0→1). The

number of transitions can directly be used as a flame

flickering feature, with flame regions characterized

by a sufficiently large value of flame flickering.
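The transition count can be sketched as follows. How the per-pixel counts are combined into a single value for a region is not specified in the text, so the averaging over the pixels of a blob shown here is an assumption, and the function names are hypothetical.

```python
def count_transitions(sequence):
    """Number of 0 -> 1 transitions in a binary temporal sequence."""
    return sum(
        1 for prev, cur in zip(sequence, sequence[1:]) if prev == 0 and cur == 1
    )


def blob_flicker(pixel_histories):
    """Assumed region-level feature: mean transition count over the blob's pixels."""
    if not pixel_histories:
        return 0.0
    counts = [count_transitions(h) for h in pixel_histories]
    return sum(counts) / len(counts)


flame_pixel = [0, 1, 0, 1, 1, 0, 1]    # flickering pixel: three 0 -> 1 transitions
light_pixel = [1, 1, 1, 1, 1, 1, 1]    # steady fire-coloured light: no transitions
```

In the experiments the sequences would have length N = 50; the short sequences above are only meant to show that a steadily lit fire-coloured object yields no transitions while a flickering pixel yields several.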

For the classification of the 5-dimensional

feature vectors, we employed a Support Vector

Machine (SVM) classifier with an RBF kernel. The

training of the SVM classifier was based on

approximately 500 feature vectors extracted from

500 frames of fire and non-fire video sequences.
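To make the classification step concrete, the sketch below evaluates the decision function of an already trained RBF-kernel SVM on a 5-dimensional feature vector. The support vectors, dual coefficients, bias and kernel width are hypothetical placeholders; in practice they would be obtained by training, for example with scikit-learn's `SVC(kernel="rbf")`, and the feature values are assumed to have been scaled to comparable ranges.

```python
import math


def rbf_kernel(x, z, gamma):
    """RBF kernel exp(-gamma * ||x - z||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def svm_decision(x, support_vectors, dual_coefs, bias, gamma):
    """Decision value of a trained SVM; a positive value is read as 'fire'."""
    score = sum(
        coef * rbf_kernel(sv, x, gamma)
        for sv, coef in zip(support_vectors, dual_coefs)
    )
    return score + bias


# Hypothetical model. Feature order: colour probability, contour irregularity,
# spatial energy, spatio-temporal energy, flickering (all scaled to [0, 1]).
support_vectors = [(0.9, 0.8, 0.9, 0.7, 0.8), (0.8, 0.1, 0.1, 0.1, 0.0)]
dual_coefs = [1.0, -1.0]   # signed coefficients (alpha_i * y_i)
bias, gamma = 0.0, 1.0

fire_like = (0.85, 0.75, 0.9, 0.8, 0.7)
car_light = (0.8, 0.2, 0.1, 0.0, 0.1)
```

With this toy model, `fire_like` receives a positive decision value and `car_light` a negative one, even though both have a high colour probability; the remaining four features are what separate them.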

3 EXPERIMENTAL RESULTS

To evaluate the performance of the proposed

method, videos containing fire or fire-colored

objects were used. Figure 3(a) shows the detection

of flame along with the intermediate feature masks

(background, color, spatial wavelet, spatiotemporal

and temporal map), while in Figure 3(b), an example

with a video containing a moving fire-coloured

object is presented. As can be clearly seen from the

intermediate masks, feature values are higher in case

of flame detection due to the random behavior of

fire.

Figure 3: (a) True detection of fire and (b) true rejection of a fire-coloured object. First row: frame with the detected fire region, background subtraction mask, colour analysis mask. Second row: spatial wavelet analysis mask, spatio-temporal mask and flickering mask.

Table 1: Test videos used for the evaluation of the proposed algorithm (screenshots).

| Fire videos | Non-fire videos |
|---|---|
| Fire Video 1 | Non Fire Video 1 |
| Fire Video 2 | Non Fire Video 2 |
| Fire Video 3 | Non Fire Video 3 |
| Fire Video 4 | Non Fire Video 4 |
| Fire Video 5 | Non Fire Video 5 |
| Fire Video 6 | Non Fire Video 6 |
| Fire Video 7 | Non Fire Video 7 |

Fourteen test videos were used for the evaluation

of the algorithm. The first seven videos contain

actual fires, while the remaining seven contain

fire-coloured moving objects, e.g. car lights, sun


reflections, etc. Screenshots from these videos are

presented in Table 1 (the first column presents flame

detection results in real fire scenes, while the second

column contains screenshots from videos with

fire-coloured moving objects). Results are summarized in

Table 2 and Table 3 in terms of the true positive,

false negative, true negative and false positive ratios,

respectively.

Experimental results show that the proposed

method provides high detection rates in all videos

containing fire, with a reasonable false alarm ratio in

videos without fire. The high false positive rate in

Non Fire Video 3 (25.63%) is due to the continuous

reflections of car lights on the road; we

believe that the results may be improved in the

future with better training of the SVM classifier.

The proposed method runs at 9 fps when the size of

the video sequences is 320x240. The experiments

were performed on a PC with a Core 2 Quad

2.4 GHz processor and 3 GB of RAM. In the future,

the speed of the algorithm can be further improved

by dividing the image in blocks instead of using blob

analysis, which increases the processing time.

Table 2: Experimental results with videos containing fires.

| Video Name | True Positive (%) | False Negative (%) |
|---|---|---|
| Fire Video 1 | 98.89 | 1.11 |
| Fire Video 2 | 93.46 | 6.54 |
| Fire Video 3 | 99.59 | 0.41 |
| Fire Video 4 | 99.03 | 0.97 |
| Fire Video 5 | 90.00 | 10.00 |
| Fire Video 6 | 99.50 | 0.50 |
| Fire Video 7 | 99.59 | 0.41 |
| Total | 97.65 | 2.35 |

Table 3: Experimental results with videos containing fire-coloured objects.

| Video Name | True Negative (%) | False Positive (%) |
|---|---|---|
| Non Fire Video 1 | 100.00 | 0.00 |
| Non Fire Video 2 | 97.41 | 2.59 |
| Non Fire Video 3 | 74.37 | 25.63 |
| Non Fire Video 4 | 100.00 | 0.00 |
| Non Fire Video 5 | 99.68 | 0.32 |
| Non Fire Video 6 | 100.00 | 0.00 |
| Non Fire Video 7 | 97.96 | 2.04 |
| Total | 98.01 | 1.99 |

4 CONCLUSIONS

Early detection of fire is crucial for the suppression of wildfires and the minimization of their effects. Video-based surveillance systems for automatic forest fire detection are a promising technology that can provide real-time detection and high accuracy. In this paper, we presented a flame detection algorithm, which identifies spatio-temporal features of fire such as colour probability, contour irregularity, spatial energy, flickering and spatio-temporal energy. The final decision is made by an SVM classifier, which classifies candidate image regions as fire or non-fire. The proposed technique was evaluated on a database of 14 video sequences, achieving an overall true positive rate of 97.65% on the fire videos and an overall true negative rate of 98.01% on the non-fire videos.

ACKNOWLEDGEMENTS

The research leading to these results has received

funding from the European Community's Seventh

Framework Programme (FP7-ENV-2009-1) under

grant agreement no. FP7-ENV-244088 “FIRESENSE”.

We would like to thank all project partners for their

fruitful cooperation within the FIRESENSE project.

REFERENCES

Ko, B. C., Ham, S. J., Nam, J. Y., "Modeling and Formalization of Fuzzy Finite Automata for Detection of Irregular Fire Flames," IEEE Transactions on Circuits and Systems for Video Technology, May 2011.

Stipaničev, D., Vuko, T., Krstinić, D., Štula, M., Bodrožić, Lj., "Forest Fire Protection by Advanced Video Detection System - Croatian Experiences," Third TIEMS Workshop – Improvement of Disaster Management System, Trogir, 26-27 September 2006.

Töreyin, B., Dedeoglu, Y., Gudukbay, U., Cetin, A., "Flame detection in video using hidden Markov models," IEEE Int. Conf. on Image Processing, pp. 1230-1233, 2005.

Töreyin, B. U., Dedeoglu, Y., Gudukbay, U., Cetin, A. E., "Computer vision based method for real-time fire and flame detection," Pattern Recognition Letters, 27, pp. 49–58, 2006.

Zhang, Z., Zhao, J., Zhang, D., Qu, C., Ke, Y., Cai, B., "Contour Based Forest Fire Detection Using FFT and Wavelet," In Proceedings of CSSE (1), pp. 760-763, 2008.

Celik, T., Demirel, H., "Fire detection in video sequences using a generic color model," Fire Safety Journal, Vol. 44, pp. 147-158, 2009.

Ko, B., Cheong, K., Nam, J., "Early fire detection algorithm based on irregular patterns of flames and hierarchical Bayesian Networks," Fire Safety Journal, Vol. 45, Issue 4, pp. 262-270, 2010.

Elgammal, A., Harwood, D., Davis, L., "Non-parametric model for background subtraction," 6th European Conference on Computer Vision, Vol. 1843, pp. 751-767, Dublin, Ireland, 2000.
