User Modelling and Emotion Recognition of Drivers through a Multi-modal GPS Interface

Maria Virvou

Department of Informatics, University of Piraeus,

80 Karaoli & Dimitriou St., Piraeus 18534

Abstract. Drivers play an important role in road traffic. Traffic frequently has a big impact on drivers' emotions, and drivers' emotions have a big impact on traffic. Traffic congestion may cause frustration in human drivers and loss of patience and control, leading to aggressiveness. In turn, drivers' aggressiveness may cause dangerous driving, car accidents and fights between drivers. This results in an endless loop of traffic problems that propagates across many drivers. However, even excessive enjoyment may lead to dangerous driving, since people may underestimate the road dangers and drive carelessly. Thus, it is very important to aim at keeping drivers calm, happy and alert when they drive. In view of this, it would be extremely useful to extend the functionalities of existing GPSs to include user modelling and emotion recognition abilities, so that they may provide spontaneous assistance dynamically generated from the results of the user modelling and emotion recognition module. The GPS would then provide automatic recommendations to drivers that are compatible with their own preferences concerning alternative routes and that make them feel happier and calmer.

Keywords. User modelling, Traffic, Emotion recognition, Affective computing, Multi-modal GPS.

1 Introduction

With more and more people in the world and in the workforce, roads are becoming increasingly crowded. When drivers are frustrated with traffic, some make mistakes or perform impolite driving maneuvers, which can anger other frustrated drivers. This often results in road rage, which can pose a significant threat to the health and safety of everyone on the road [1].

Counseling psychologist Jerry Deffenbacher and his colleagues [2] point out: “Those high-anger drivers are a source of alarm. Even typically calm, reasonable people can sometimes turn into warriors behind the wheel; when provoked, they yell obscenities, wildly gesture, honk and swerve in and out of traffic, and may endanger their lives and others.”

The traffic section of the official website of the City of Santa Rosa [3] poses the question: “Which is an example of aggressive driving?”

Virvou M. (2011). User Modelling and Emotion Recognition of Drivers through a Multi-modal GPS Interface. In Proceedings of the 1st International Workshop on Future Internet Applications for Traffic Surveillance and Management, pages 83-82. DOI: 10.5220/0004473300830082

Copyright © SciTePress

According to the same site, the examples given in answer to this question include, among others, the following:

• Speeding up to make it through a yellow light.

• Switching lanes without signaling first.

• Going over the speed limit in a school zone or neighborhood.

• Approaching a stopped car so fast that its driver feels threatened.

• Tailgating a car to pressure the driver to go faster or move over.

• Tailgating a car to punish the driver for something.

• Driving with an alcohol level above the legal limit.

• Driving while drowsy enough to have droopy eyes.

• Making an obscene gesture at another road user.

Moreover, the official U.S. Government website for distracted driving [4] warns people that “Distracted driving is unsafe, irresponsible and in a split second, its consequences can be devastating.” On the other hand, research and experience demonstrate that happy drivers are better drivers [5].

In view of the above, human emotions appear to play a very important role in traffic management, and it would benefit traffic to invest research effort in automatically recognizing drivers' emotions and in building systems that react accordingly. It would therefore be extremely useful to extend the functionalities of existing GPSs to include user modelling and emotion recognition abilities, so that they may provide spontaneous assistance dynamically generated from the results of the user modelling and emotion recognition module. The GPS would then provide automatic personalized recommendations to drivers that are compatible with their own preferences concerning alternative routes and that make them feel happier and calmer.

The main body of this paper is organized as follows. In Section 2, related work, both our own and that of others, is surveyed and discussed. In Section 3, the aims of the proposed research are presented. In Section 4, the proposed solution is presented. Finally, in Section 5, the conclusions of this paper are drawn and connections to proposals of other participants are highlighted.

2 Related Work

Affective computing is a recent area of computer science that studies human emotions, covering:

• Emotion recognition by the computer

• Emotion generation by the computer

Until recently, human emotions were not considered at all by the designers of user interfaces. However, research that flourished during the past decade has been based on the important argument that human feelings play an important role in human decision making and affect all areas of human-computer interaction. There has been a great deal of research on automatically recognizing human feelings and on generating emotions by computer; this kind of research is labelled affective computing. Significant progress has been made in this field so far. Nevertheless, a lot of basic research is still needed, and affective computing thus remains a hot research topic.

Another area that has been investigated by many researchers during the past decade is user modeling and the generation of personalized recommendations to computer users. Recommender systems constitute an area of research that attracts researchers from a wide range of computer science fields, with applications ranging from e-commerce to electronic libraries.

2.1 Previous Work on Affective Computing and GPS Recommender Systems in our Lab

In our own research lab we have carried out extensive research in the areas of affective computing and recommender systems [e.g. 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16]. This research has resulted in four successfully completed Ph.D. theses, two monographs and many papers in the area.

One recent relevant basic research project of ours was entitled “Technologies of affective human-computer interaction and application in mobile learning” and was carried out in the Software Engineering Lab of the Department of Informatics at the University of Piraeus. Our industrial partner was Sony Ericsson. The main research problem investigated was the recognition and generation of emotions through multi-modal hand-held devices. In the context of this project, two Ph.D.s were supervised and completed:

1. Ph.D. student: Efthimios Alepis (Supervisor: M. Virvou)

2. Ph.D. student: Ioanna-Ourania Stathopoulou (Supervisor: G. Tsihrintzis)

The research topics that we dealt with were:

• Emotion recognition through microphone and keyboard

• Emotion generation in animated agents

• Incorporation of animated agents in mobile learning

• Visual Analysis of facial expressions

• Construction of our own database of facial expressions

2.2 Previous Work on Affective Computing and Traffic

Two powerful partners, a well-funded consumer-facing company and a top research university, MIT, joined forces to produce inventive solutions to real-world problems. Audi of America wanted to be involved in conversations about America's urban future and to provide cars that fit into tomorrow's tech-dominated cities. The carmaker wanted to encourage people to admire and buy Audi cars by giving them an online tool with information about their roadways. The MIT Research Laboratory of Electronics studied exactly the kind of data that Audi's idea needed. The result is the Audi Road Frustration Index (Fig. 2), an entertaining website that launched in beta in mid-September. For any given hour, it tells users how the roadways and drivers' moods in their city rank compared with other cities nationwide. For instance, Sacramento, Calif., is often as miserable as New York City [17].

Fig. 1. Facial expressions denoting human emotions, from our own database (front and side views of angry, disgust, bored, sleepy, smile, sad, surprise and neutral expressions).

Fig. 2. Audi's Frustration Index.

In the same vein, Audi's latest ad campaign for the new 2012 A6 claims to want to make the road “a more intelligent place”, starting by asking drivers to pledge to be on their best behavior while behind the wheel. However, at the top of the German automaker's list of sins is driving while drinking a latte, which suggests that the effort is only half serious [18].

Another joint research effort on the influence of emotions on drivers came from a leading university, Stanford, and a leading car company, Toyota [19]. It resulted in a study examining whether the characteristics of a car's voice can influence driver performance and affect:

• In a 2 (driver emotion: happy vs. upset) × 2 (car voice emotion: energetic vs. subdued) experimental study, participants (N = 40) had emotion induced by watching one of two sets of 5-minute video clips. Participants then spent 20 minutes in a driving simulator where a voice in the car spoke 36 questions (e.g., “How do you think that the car is performing?”) and comments (“My favorite part of this drive is the lighthouse.”) in either an energetic or a subdued voice.

• To assess drivers' engagement with the voice, participants were invited to interact with it by speaking to the Virtual Passenger.

• When user emotion matched car voice emotion (happy/energetic and upset/subdued), drivers had fewer accidents, attended more to the road (actual and perceived), and spoke more to the car.

Other recent research efforts include “Using Paralinguistic Cues in Speech to Recognise Emotions in Older Car Drivers” by Christian Jones and Ing-Marie Jonsson [20] and the “Analysis of Real-World Driver's Frustration” [21], to name just a few very recent research projects in the area.

2.3 Conclusions from the Related Work

There is evidently interest from world-leading universities, such as MIT and Stanford, and from leading car manufacturers, such as Audi and Toyota respectively, in producing new affective systems for drivers.

However, there are not yet many such research attempts. This means that there is a lot of scope in this particular research topic, which appears to be gaining research interest. In this respect, our proposed approach is very innovative in this field.

3 Aims of the Proposed Research

The aims of this work package are the following:


1. Recognition of basic emotions of drivers based on visual-facial and audio-lingual recognition and contextual information:

a. Visual-facial recognition through a camera

b. Audio-lingual recognition through a microphone

c. Recording of contextual information that contributes to changes in drivers' feelings

2. Monitoring and recording drivers' preferences with respect to traffic, and making inferences that lead to recommendations.
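Aim 1 combines two modalities with contextual information. One common way of merging such evidence is weighted late fusion: each recogniser outputs a probability for every emotion label, the outputs are averaged with modality weights, and a contextual prior re-weights the result. The sketch below illustrates this under our own assumptions; the labels follow Fig. 1, while the function names, the 0.6/0.4 modality weights and the traffic-jam prior are hypothetical and are not taken from an existing system.

```python
from typing import Dict

# Emotion labels as in the facial-expression database of Fig. 1.
EMOTIONS = ("angry", "disgust", "bored", "sleepy", "smile", "sad", "surprise", "neutral")


def normalise(scores: Dict[str, float]) -> Dict[str, float]:
    """Rescale non-negative scores so that they sum to 1."""
    total = sum(scores.values())
    if total <= 0:
        raise ValueError("at least one score must be positive")
    return {label: value / total for label, value in scores.items()}


def fuse_emotions(
    facial: Dict[str, float],
    audio: Dict[str, float],
    context_prior: Dict[str, float],
    facial_weight: float = 0.6,  # hypothetical; would have to be tuned empirically
) -> Dict[str, float]:
    """Weighted late fusion of visual-facial and audio-lingual estimates.

    Labels missing from a modality count as probability 0; labels missing
    from the contextual prior keep a neutral multiplier of 1.
    """
    audio_weight = 1.0 - facial_weight
    fused = {
        label: (facial_weight * facial.get(label, 0.0) + audio_weight * audio.get(label, 0.0))
        * context_prior.get(label, 1.0)
        for label in EMOTIONS
    }
    return normalise(fused)


facial = {"angry": 0.5, "neutral": 0.3, "sad": 0.2}
audio = {"angry": 0.2, "neutral": 0.7, "sad": 0.1}
without_context = fuse_emotions(facial, audio, {})
in_traffic_jam = fuse_emotions(facial, audio, {"angry": 1.5})  # hypothetical prior
print(max(without_context, key=without_context.get))  # neutral
print(max(in_traffic_jam, key=in_traffic_jam.get))  # angry
```

In this toy example the two modalities alone favour a neutral state, and only the contextual prior for heavy traffic tips the decision towards anger, which is the kind of contribution that aim 1(c) expects from contextual information.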

Technically, the above aims will be pursued using the following:

• Neural-network-based and support-vector-machine-based classifiers for visual-facial recognition;

• User stereotypes and multi-criteria decision making theories for audio-lingual recognition and the contributing contextual information;

• User stereotypes, machine learning algorithms and user monitoring for the acquisition of user models of drivers with respect to their needs, preferences and knowledge level of routes;

• Multi-criteria decision making for the selection of appropriate recommendations;

• Advanced multi-level recommender systems, which produce recommendations by combining a specific driver's preferences with the preferences of ‘similar’ drivers.
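The last item, combining a driver's own preferences with those of ‘similar’ drivers, can be read as a two-level recommender. A minimal sketch under that reading follows: similarity between drivers is measured with cosine similarity over their route ratings (a standard collaborative-filtering choice assumed here, not a committed design), and the prediction blends the driver's own score with the similarity-weighted scores of other drivers. The driver names, route names, rating scale and `own_weight` are hypothetical.

```python
import math
from typing import Dict, Optional

# driver id -> {route id -> preference score, e.g. 1 (dislike) to 5 (like)}.
Ratings = Dict[str, Dict[str, float]]


def cosine_similarity(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity of two rating vectors; unrated routes count as 0."""
    dot = sum(a[route] * b[route] for route in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def predict_preference(
    ratings: Ratings, driver: str, route: str, own_weight: float = 0.5
) -> Optional[float]:
    """Combine the driver's own score for a route (first level) with the
    similarity-weighted scores of other drivers who rated it (second level)."""
    mine = ratings.get(driver, {})
    own = mine.get(route)
    weighted_sum = similarity_sum = 0.0
    for other, theirs in ratings.items():
        if other == driver or route not in theirs:
            continue
        sim = cosine_similarity(mine, theirs)
        if sim > 0:
            weighted_sum += sim * theirs[route]
            similarity_sum += sim
    community = weighted_sum / similarity_sum if similarity_sum > 0 else None
    if own is None:
        return community  # may be None if no similar driver rated the route
    if community is None:
        return own
    return own_weight * own + (1.0 - own_weight) * community


ratings = {
    "anna": {"coastal": 5, "highway": 2},
    "bill": {"coastal": 4, "highway": 1, "ring_road": 5},
    "chris": {"highway": 5, "ring_road": 1},
}
print(round(predict_preference(ratings, "anna", "ring_road"), 2))  # 3.54
```

When a driver has never rated a route, the prediction relies entirely on similar drivers, which is what would allow the system to recommend routes that are new to that driver.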

4 Proposed Solution

We propose to build a user modeling module that will take into account:

• Individual features of drivers, such as route preferences, age and car type

• Emotions of drivers in particular situations

• Traffic information

The driver will be monitored by a camera inside the car so that image analysis of his/her face can take place. The driver will also have a microphone for interacting with the system. The habits and behaviour of drivers will be analysed and recorded in a long-term user model maintained over the web.
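The long-term user model described above could be organised roughly as follows. This is a minimal sketch assuming that a new driver is first assigned a stereotype (as proposed in Section 3) from which default criteria weights are copied, and that each trip then records the chosen route and the recognised emotion per situation. The stereotype names, the 65-year cut-off, the weights and the situation labels are all hypothetical, and storage of the model over the web is not shown.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical stereotypes: initial weights that a new driver is assumed to give
# to route criteria before any of his/her own behaviour has been observed.
STEREOTYPES: Dict[str, Dict[str, float]] = {
    "younger_driver": {"travel_time": 0.6, "congestion": 0.2, "scenery": 0.2},
    "older_driver": {"travel_time": 0.2, "congestion": 0.5, "scenery": 0.3},
}


def stereotype_for(age: int) -> str:
    """Pick a stereotype from the driver's age (the 65-year cut-off is illustrative)."""
    return "older_driver" if age >= 65 else "younger_driver"


@dataclass
class DriverModel:
    driver_id: str
    age: int
    car_type: str
    criteria_weights: Dict[str, float] = field(default_factory=dict)
    # (situation, route) -> how often the driver chose that route in that situation
    route_choices: Counter = field(default_factory=Counter)
    # situation -> counts of the emotions recognised in that situation
    emotions: Dict[str, Counter] = field(default_factory=lambda: defaultdict(Counter))

    def __post_init__(self) -> None:
        # Start from a copy of the stereotype; observed behaviour is recorded separately.
        if not self.criteria_weights:
            self.criteria_weights = dict(STEREOTYPES[stereotype_for(self.age)])

    def record_trip(self, situation: str, route: str, emotion: str) -> None:
        self.route_choices[(situation, route)] += 1
        self.emotions[situation][emotion] += 1

    def preferred_route(self, situation: str) -> Optional[str]:
        counts = Counter(
            {route: n for (sit, route), n in self.route_choices.items() if sit == situation}
        )
        return counts.most_common(1)[0][0] if counts else None

    def usual_emotion(self, situation: str) -> Optional[str]:
        counts = self.emotions.get(situation)
        return counts.most_common(1)[0][0] if counts else None


model = DriverModel("driver-42", age=70, car_type="hatchback")
model.record_trip("weekday_morning", "coastal", "neutral")
model.record_trip("weekday_morning", "motorway", "angry")
model.record_trip("weekday_morning", "coastal", "angry")
print(model.preferred_route("weekday_morning"))  # coastal
print(model.usual_emotion("weekday_morning"))  # angry
```

Keeping per-situation emotion counts alongside route choices is what would let the system notice, for example, that a driver is usually angry on weekday mornings and react to that.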

In return, the driver will receive:

1. Personalised recommendations about routes

2. Personalised advice on handling his/her emotions in particular situations
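The personalised route recommendations could be produced with the multi-criteria decision making listed in Section 3. The sketch below uses Simple Additive Weighting (SAW), one standard multi-criteria technique, chosen here for illustration only; the criteria, the route data and the driver weights are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical criteria: "benefit" criteria are better when larger, "cost" when smaller.
CRITERIA_TYPE = {"travel_time": "cost", "congestion": "cost", "scenery": "benefit"}


def saw_rank(
    routes: Dict[str, Dict[str, float]], weights: Dict[str, float]
) -> List[Tuple[str, float]]:
    """Rank candidate routes with Simple Additive Weighting (SAW).

    Each criterion is normalised to (0, 1] (x / max for benefit criteria,
    min / x for cost criteria), multiplied by the driver's weight and summed.
    All criterion values are assumed to be strictly positive.
    """
    scores = {name: 0.0 for name in routes}
    for criterion, kind in CRITERIA_TYPE.items():
        values = [attrs[criterion] for attrs in routes.values()]
        highest, lowest = max(values), min(values)
        for name, attrs in routes.items():
            x = attrs[criterion]
            normalised = x / highest if kind == "benefit" else lowest / x
            scores[name] += weights.get(criterion, 0.0) * normalised
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


routes = {
    "motorway": {"travel_time": 30, "congestion": 8, "scenery": 2},
    "coastal": {"travel_time": 45, "congestion": 2, "scenery": 9},
}
calm_seeker = {"travel_time": 0.2, "congestion": 0.5, "scenery": 0.3}
in_a_hurry = {"travel_time": 0.8, "congestion": 0.1, "scenery": 0.1}
print(saw_rank(routes, calm_seeker)[0][0])  # coastal
print(saw_rank(routes, in_a_hurry)[0][0])  # motorway
```

The same two routes are ranked differently for a calm-seeking driver and a hurried one, which is the kind of personalisation intended; in a full system the weights would presumably come from the driver's user model rather than being fixed by hand.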

The proposed solution will include the following:

• A navigation system that will provide location-based services in a personalized way, taking into account the preferences and interests of each user

• Location-based services provided via Web Services

• A personalization mechanism


The term “location-based services” (LBS) is a rather recent concept that integrates geographic location with the general notion of services.

The five categories in Fig. 3 characterize what may be thought of as standard location-based services.

Fig. 3. Standard location-based services.

One of the most basic characteristics of LBS is their potential for personalization, as they know which user they are serving, under what circumstances and for what reason.

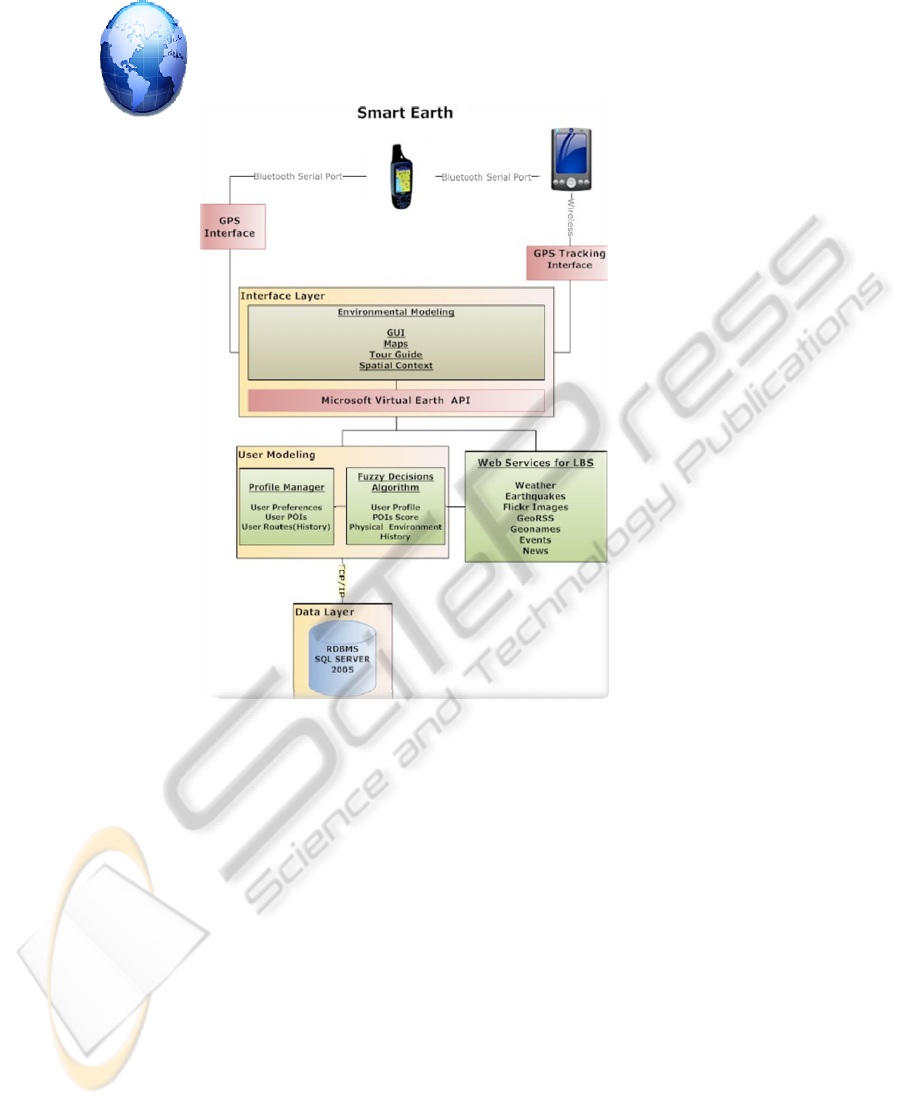

A system architecture that can be used is illustrated in Fig. 4. This architecture il-

lustrates how user modeling can be incorporated in order to record drivers’ prefer-

ences and the use an inference engine to produce hypotheses on future preferences of

users on other similar situations. Moreover, it shows how information from LBS can

also be used. This information can be processed and passed to a user interface device,

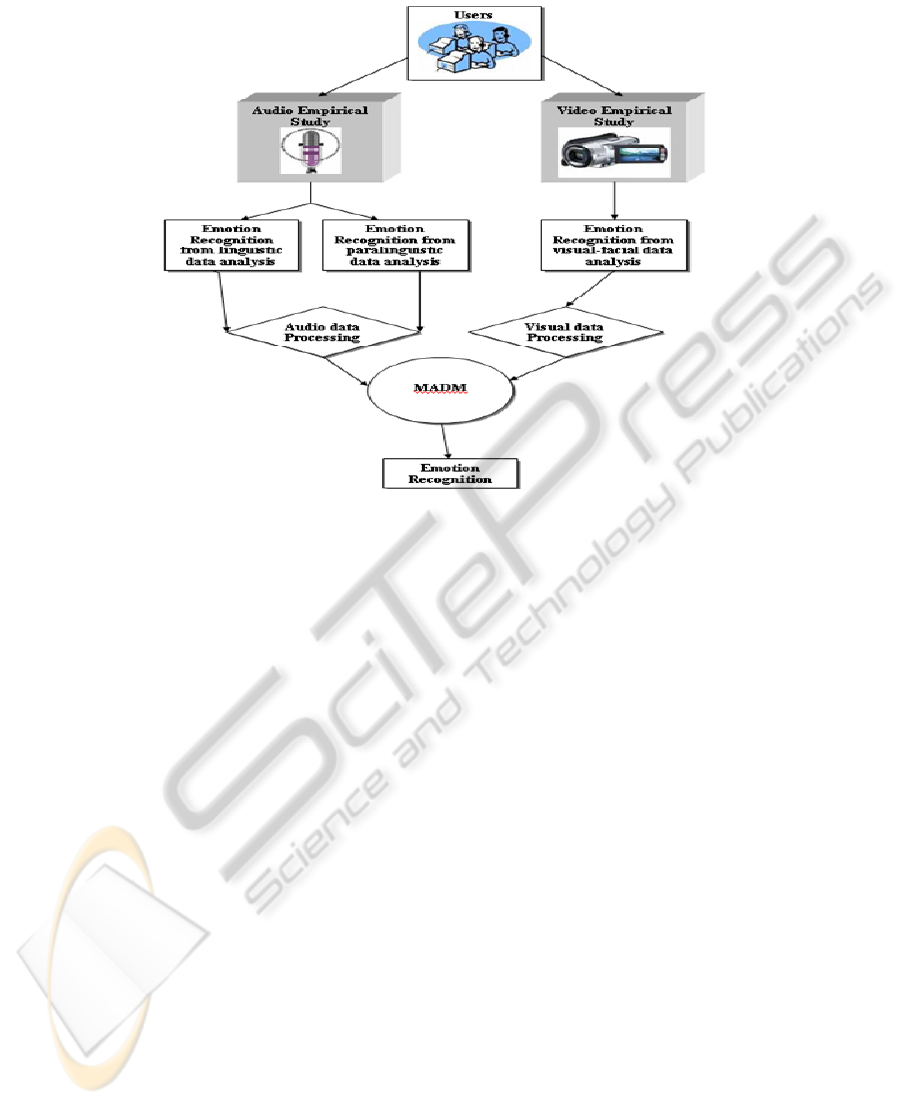

such as a GPS in a car. Moreover, Figure 5 illustrates three input devices, such as

camera, microphone and keyboard that can be used to process information about a

driver in terms of his/her emotional state. This kind of processing will be incorpo-

rated in the user modeling component as illustrated in Fig. 4.
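The inference engine of Fig. 4 is described as producing hypotheses about drivers' preferences in other, similar situations. One simple way to do this is k-nearest-neighbour voting over past situations, sketched below. The situation encoding (hour of day, traffic level and whether the recognised emotion was negative), the value k = 3 and the sample history are assumptions made for illustration, not part of the proposed architecture.

```python
import math
from collections import Counter
from typing import List, Tuple

Situation = Tuple[float, float, float]
Observation = Tuple[Situation, str]  # (situation, route the driver chose)


def situation(hour: int, traffic: float, negative_emotion: bool) -> Situation:
    """Encode a driving situation as features in [0, 1]: hour of day,
    traffic level and whether the recognised emotion was negative."""
    return (hour / 24.0, traffic, 1.0 if negative_emotion else 0.0)


def hypothesise_route(history: List[Observation], current: Situation, k: int = 3) -> str:
    """Hypothesise the route a driver will prefer in the current situation by a
    majority vote over the k most similar past situations (k-nearest neighbours)."""
    if not history:
        raise ValueError("no recorded situations for this driver")
    nearest = sorted(history, key=lambda obs: math.dist(obs[0], current))[:k]
    # Counter.most_common keeps first-seen order among ties, so a tie is
    # resolved in favour of the route seen in the closest situation.
    votes = Counter(route for _, route in nearest)
    return votes.most_common(1)[0][0]


history = [
    (situation(8, 0.9, True), "coastal"),
    (situation(9, 0.8, True), "coastal"),
    (situation(8, 0.2, False), "motorway"),
    (situation(18, 0.3, False), "motorway"),
    (situation(17, 0.9, False), "motorway"),
]
print(hypothesise_route(history, situation(8, 0.85, True)))  # coastal
print(hypothesise_route(history, situation(18, 0.25, False)))  # motorway
```

Treating the hour as a plain number is a simplification (23:00 and 00:00 end up far apart); a real encoding would likely need to handle such cases and weight the features according to their importance.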

5 Conclusions and Connection with other Research

We propose to extend the functionalities of existing GPSs to include user modelling and emotion recognition abilities, so that they may provide spontaneous assistance dynamically generated from the results of the user modelling and emotion recognition module. In return, the GPS would provide automatic recommendations to drivers that are compatible with their own preferences concerning alternative routes and that make them feel happier and calmer.

Our contribution could use information on traffic and routes developed by other partners of the project, such as Thomas Jackson and Tom Thomas. In particular, Tom Thomas's goals are relevant to individual preferences of drivers concerning favourite routes. We also see our work as contributing to the user interface (human-computer interaction) aspect mentioned by Brahmananda Sapkota in his talk about functionalities offered to drivers. Finally, we can provide individualised information to the “informed driver” of Apostolos Kotsialos.


Fig. 4. A system architecture.

References

1. Scott, E. (2010): Road Rage: How to Manage Road Rage to Stay Healthier and Safer. About.com Guide, updated November 22, 2010.

2. Deffenbacher, J.L., Filetti, L.B., Lynch, R.S., Dahlen, E.R. & Oetting, E.R. (2002): Cognitive-behavioral treatment of high anger drivers. Behaviour Research and Therapy 40(8): 895-910.

3. City of Santa Rosa, Traffic: http://ci.santarosa.ca.us/departments/publicworks/traffic/streetsmarts/drivers/Pages/AggressiveDrivers

4. Official U.S. Government site for distracted driving: http://www.distraction.gov/

5. Groeger, J.A. (2000): Understanding Driving: Applying Cognitive Psychology to a Complex Everyday Task. Philadelphia, PA: Psychology Press.

6. Alepis, E. & Virvou, M. (2012): Object Oriented User Interfaces for Personalized Learning. Intelligent Systems Reference Library, Springer, to appear.


Fig. 5. Affective recognition through 2 modalities of interaction.

7. Alepis, E. & Virvou, M. (2011): Automatic generation of emotions in tutoring agents for affective e-learning in medical education. Expert Systems with Applications 38(8): 9840-9847.

8. Alepis, E., Stathopoulou, I.O., Virvou, M., Tsihrintzis, G.A. & Kabassi, K. (2010): Audio-lingual and Visual-facial Emotion Recognition: Towards a Bi-modal Interaction System. IEEE International Conference on Tools with Artificial Intelligence (ICTAI) (2) 2010: 274-281.

9. Alepis, E., Virvou, M. & Kabassi, K. (2011): Combining Two Decision Making Theories for Affective Learning in Programming Courses. CSEDU (1) 2011: 103-109.

10. Chalvantzis, C. & Virvou, M. (2008): Fuzzy Logic Decisions and Web Services for a Personalized Geographical Information System. New Directions in Intelligent Interactive Multimedia 2008: 439-450.

11. Katsionis, G. & Virvou, M. (2008): Personalised e-learning through an educational virtual reality game using Web services. Multimedia Tools and Applications 39(1): 47-71.

12. Stathopoulou, I.O. & Tsihrintzis, G.A. (2010): Visual Affect Recognition. Frontiers in Artificial Intelligence and Applications, Vol. 214.

13. Stathopoulou, I.O., Alepis, E., Tsihrintzis, G.A. & Virvou, M. (2010): On assisting a visual-facial affect recognition system with keyboard-stroke pattern information. Knowledge-Based Systems 23(4): 350-356.

14. Stathopoulou, I.O., Alepis, E., Tsihrintzis, G.A. & Virvou, M. (2009): On Assisting a Visual-Facial Affect Recognition System with Keyboard-Stroke Pattern Information. SGAI Conf. 2009: 451-463.

15. Virvou, M., Tsihrintzis, G.A., Alepis, E. & Stathopoulou, I.O. (2008): Designing a multi-modal affective knowledge-based user interface: combining empirical studies. Joint Conference on Knowledge Based Software Engineering (JCKBSE) 2008: 250-259.


16. Virvou, M. & Katsionis, G. (2008): On the usability and likeability of virtual reality games for education: The case of VR-ENGAGE. Computers & Education 50(1): 154-178, Elsevier Science.

17. Voight, J. (2011): Salt Lake City Roads Worse Than New York's? Road Frustration Index Says Yes. CNBC.com, http://www.cnbc.com/id/45150075/Salt_Lake_City_Roads_Worse_Than_New_York_s_Road_Frustration_Index_Says_Yes

18. Autoblog: http://www.autoblog.com/2011/10/07/audis-road-frustration-index-plugs-new-a6/

19. Nass, C., Jonsson, I.M., Harris, H., Reaves, B., Endo, J., Brave, S. & Takayama, L. (2005): Improving automotive safety by pairing driver emotion and car voice emotion. CHI '05, April 2005, ACM.

20. Jones, C. & Jonsson, I.M. (2008): Using Paralinguistic Cues in Speech to Recognise Emotions in Older Car Drivers. Affect and Emotion in HCI, LNCS Vol. 4868.

21. Malta, L., Miyajima, C., Kitaoka, N. & Takeda, K. (2011): Analysis of Real-World Driver's Frustration. IEEE Transactions on Intelligent Transportation Systems 12(1): 109-118.
