MANAGING MULTIPLE MEDIA STREAMS IN HTML5

The IEEE 1599-2008 Case Study

S. Baldan, L. A. Ludovico and D. A. Mauro

LIM - Laboratorio di Informatica Musicale, Dipartimento di Informatica e comunicazione (DICo)

Università degli Studi di Milano, Via Comelico 39/41, I-20135 Milan, Italy

Keywords:

HTML5, Web player, Media streaming, IEEE 1599.

Abstract:

This paper deals with the problem of managing multiple multimedia streams in a Web environment. The multimedia types to be supported are pure audio, video without sound, and audio/video. All the data streams refer to the same event or performance; consequently, they are mutually synchronized and must remain so. Moreover, a Web player should be able to play different multimedia streams simultaneously, as well as to switch from one to another in real time. A music piece encoded in the IEEE 1599 format is presented as an illustrative case study.

1 INTRODUCTION

The problem of managing digital multimedia documents that include audio and video in a Web environment is still challenging. It is often solved by requiring the installation of extensions and third-party plugins in Web browsers. As explained in Section 2, the HTML5 draft tries to address the problem by adopting a multimedia-oriented architecture based on ad hoc tags and APIs. It is worth recalling that HTML5 is currently not a standard but a draft; however, it is under development by a W3C working group, and the most recent versions of the main browsers on the market already support its key features. Among others, we can cite Google Chrome, Mozilla Firefox 4, Opera 11, and Microsoft Internet Explorer 9.

HTML5 provides Web designers with syntactic instruments to support multimedia streams natively. An interesting aspect that has not yet been explored in depth is the simultaneous transmission over the Web of a number of multimedia streams, all related to the same object and subject to mutual synchronization constraints. On the one hand, traditional audio/video players implemented in HTML5 are already available, as the environment offered by this (future) standard makes this task easy. On the other hand, the problem analyzed here goes one step further, as the multimedia player has to support multiple synchronized streams and implement advanced seek functions.

2 RELATED WORKS

This section presents some related works based either on HTML5 or on other platforms. Given the relative novelty of HTML5, most approaches focus on competing technologies such as the Adobe Flash and Microsoft Silverlight platforms.

• In (Daoust et al., 2010) the authors first analyze the new possibilities opened by the <video> tag, then focus on the different strategies for streaming media on the Web, such as HTTP Progressive Delivery, HTTP Streaming, and HTTP Adaptive Streaming;

• (Harjono et al., 2010) studies the matter in depth, pointing out how open standards can reduce the dependency on third-party products such as Adobe Flash or Microsoft Silverlight;

• MMP Video Editor is a browser-based video editor that consumes all Silverlight-supported codecs and produces a project file that can be transformed into an EDL (Edit Decision List) or into a Smooth Streaming Composite Stream Manifest (CSM). It relies on Microsoft components such as IIS (Internet Information Services) and Windows Media Services in order to stream media. For instance, this software framework was used in an initiative where videos of theatrical performances are presented in synchronization with related materials (Baraté et al., 2011);

• (Faulkner et al., 2010) presents a survey on Web video editors, comparing in particular EasyClip to YouTube Video Editor;

• (Vaughan-Nichols, 2010) is a general introduction to the new possibilities offered by HTML5;

• In (Laiola Guimarães et al., 2010) the main concern is the annotation of videos. For our goals, this feature could be used to present lyrics synchronized with audio/video contents. The paper compares the possibilities of HTML5 with those of NCL and SMIL;

• (Pfeiffer and Parker, 2009) focuses on the accessibility of the <video> tag.

What clearly emerges from this overview is the absence of scientific works focusing on multiple media streams, e.g. the simultaneous presence of an audio and a video track to be synchronized within a media player, as well as the management of a number of alternative media.

Baldan S., Ludovico L. A. and Mauro D. A.

MANAGING MULTIPLE MEDIA STREAMS IN HTML5 - The IEEE 1599-2008 Case Study.

DOI: 10.5220/0003651401930199

In Proceedings of the International Conference on Signal Processing and Multimedia Applications (SIGMAP-2011), pages 193-199

ISBN: 978-989-8425-72-0

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

3 THE IEEE 1599 FORMAT

IEEE 1599-2008 is a format for describing single music pieces. For example, an IEEE 1599 document can be related to a pop song, an operatic aria, or a movement of a symphony.

Based on XML (eXtensible Markup Language), it follows the guidelines of IEEE P1599, “Recommended Practice Dealing With Applications and Representations of Symbolic Music Information Using the XML Language”. This IEEE standard was sponsored by the Computer Society Standards Activity Board and launched by the Technical Committee on Computer Generated Music (IEEE CS TC on CGM) (Baggi, 1995).

The innovative contribution of the format is a comprehensive description of music and music-related materials within a single framework. In fact, the symbolic score, intended here as a sequence of music symbols, is only one of the many descriptions that can be provided for a piece. For instance, all the graphical and audio instances (scores and performances) available for a given piece are further descriptions; but text elements (e.g. catalogue metadata, lyrics, etc.), still images (e.g. photos, playbills, etc.), and moving images (e.g. video clips, movies with a soundtrack, etc.) can also be related to the piece itself. Please refer to (Haus and Longari, 2005) for a complete treatment of the subject. Among other applications, such a rich description allows the design and implementation of advanced browsers.

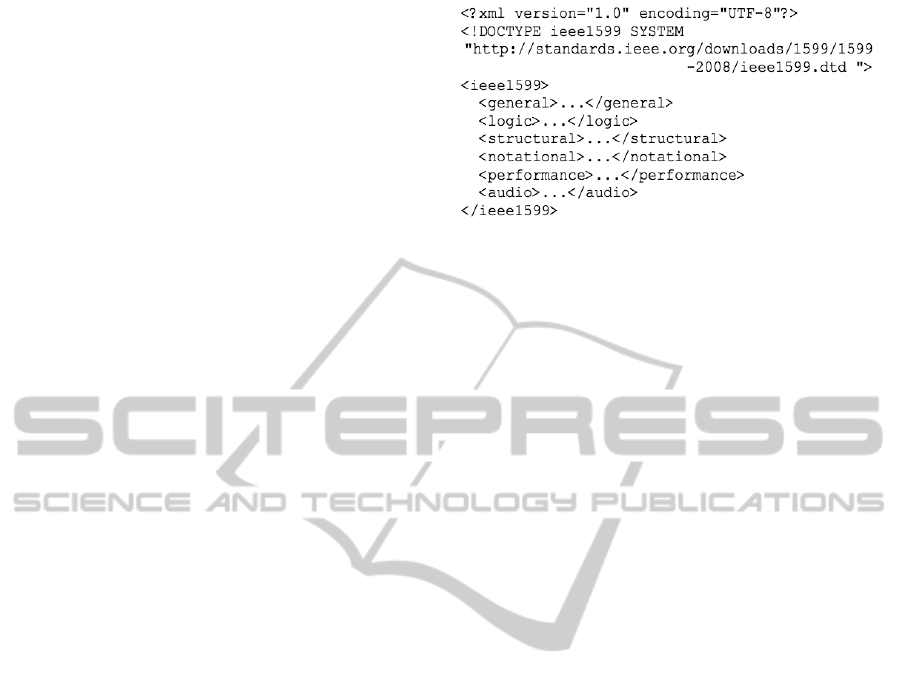

Figure 1: The XML stub corresponding to the IEEE 1599 multi-layer structure.

3.1 Definition of Music Event

The comprehensiveness in music description mentioned above is achieved in IEEE 1599 through a multi-layer environment. The XML format provides a set of rules to create strongly structured documents. IEEE 1599 exploits this characteristic by arranging music and music-related contents within six layers (Ludovico, 2008):

• General - music-related metadata, i.e. catalogue information about the piece;

• Logic - the logical description of the score in terms of symbols;

• Structural - identification of music objects and their mutual relationships;

• Notational - graphical representations of the score;

• Performance - computer-based descriptions and executions of music according to performance languages;

• Audio - digital or digitized recordings of the piece.

In IEEE 1599 code, this six-layer layout corresponds to the one shown in Figure 1, where the root element IEEE 1599 has six sub-elements.

Since contents are displaced over various levels,

what is the device that keeps heterogeneous descrip-

tions together and allows to jump from one descrip-

tion to another? The Logic layer contains an ad hoc

data structure that answers the question. When a user

encodes a piece in IEEE 1599 format, he/she must

specify a list of music events to be organized in a lin-

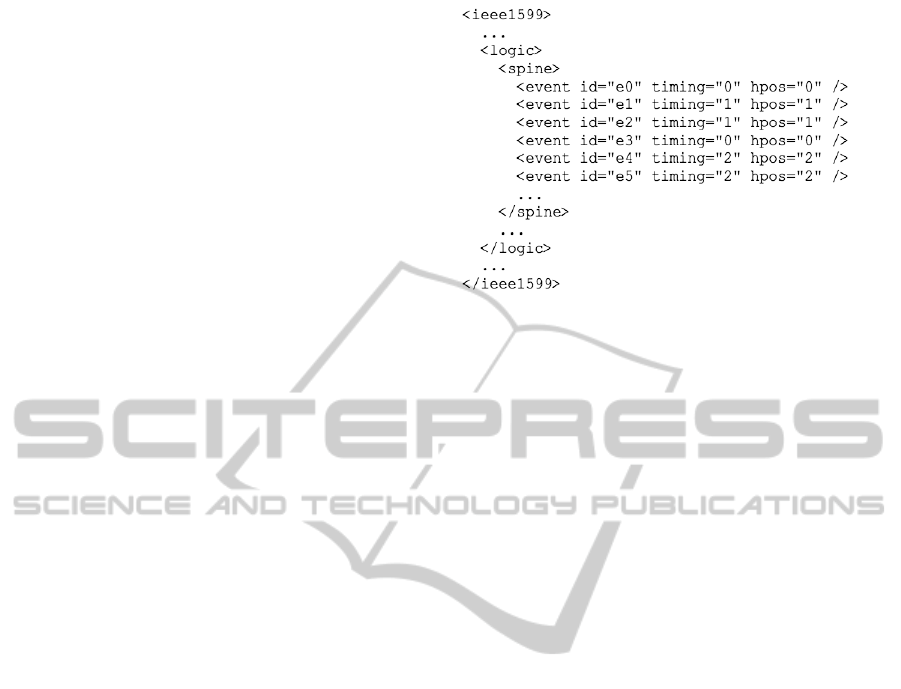

ear structure called “spine”. Please refer to Figure 2

for a simplified example of spine. Inside this struc-

ture, music events are uniquely identified by the

id

attribute, and located in space and time dimensions

through

hpos

and

timing

attributes respectively.

Each event is “spaced” from the previous one in a relative way. In other words, a 0 value means simultaneity in time and vertical overlapping in space, whereas doubling the value doubles the distance from the previous music event, measured in a virtual unit. The measurement units are intentionally unspecified, as the logical values expressed in the spine for time and space can correspond to many different absolute values in the digital objects available for the piece.
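The relative encoding can be made concrete with a short Python sketch that accumulates the timing and hpos offsets of a spine into absolute logical positions. The helper name and the four-event spine are invented for illustration (this is not the spine of Figure 2), and the markup is reduced to the id, timing and hpos attributes mentioned above.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified spine: markup reduced to id/timing/hpos,
# values invented for illustration only.
SPINE_XML = """
<spine>
  <event id="e0" timing="0" hpos="0"/>
  <event id="e1" timing="1" hpos="1"/>
  <event id="e2" timing="0" hpos="0"/>
  <event id="e3" timing="2" hpos="2"/>
</spine>
"""


def absolute_positions(spine_xml):
    """Map each event id to its cumulative (time, space) logical position.

    timing and hpos are offsets from the previous event, so absolute logical
    positions are running sums. Units stay virtual: they are mapped to
    seconds, pixels, etc. only in the other layers.
    """
    root = ET.fromstring(spine_xml.strip())
    t = x = 0.0
    positions = {}
    for event in root.iter("event"):
        t += float(event.get("timing", "0"))
        x += float(event.get("hpos", "0"))
        positions[event.get("id")] = (t, x)
    return positions
```

Here e1 and e2 end up at the same logical time, i.e. they are simultaneous; the logical values acquire a physical meaning (seconds, pixels) only once the events are referenced from the other layers.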

Let us consider the example shown in Figure 2, interpreting it as a music composition. Event e3 forms a chord together with e2, belonging either to the same or to another part/voice, as the attribute values of the former are 0s. Similarly, we can affirm that event e3 happens after e0 (and e1), as e4 occurs after 2 time units whereas e1 (and e2) occurs after only 1 time unit. For further details, please refer to the official document of the IEEE 1599 standard (IEEE TCCGM, 2008).

In conclusion, the spine plays a central role in an IEEE 1599 encoding: it provides a complete and sorted list of events, which are then described in their heterogeneous meanings and forms inside the other layers. Please note that an event can be described elsewhere in the document only if it is correctly identified inside the spine; this is realized through references from other layers to its unique id (see Section 3.2). Inside the spine, only the entities of some interest for the encoding have to be identified and sorted, ranging from a very high to a low degree of abstraction.
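The requirement that every reference from another layer point to an event declared in the spine lends itself to an automatic check. The following Python sketch assumes, for illustration only, that spine entries are event elements inside a spine element and that other layers refer to them through an attribute named event_ref; both names should be checked against the official specification.

```python
import xml.etree.ElementTree as ET


def dangling_references(doc_xml, ref_attr="event_ref"):
    """Return referenced ids that are not declared in the spine.

    Assumption: spine entries are <event id="..."> elements inside <spine>,
    and the other layers refer to them through the attribute named ref_attr.
    """
    root = ET.fromstring(doc_xml.strip())
    declared = {ev.get("id") for ev in root.iterfind(".//spine/event")}
    referenced = {el.get(ref_attr) for el in root.iter()
                  if el.get(ref_attr) is not None}
    return sorted(referenced - declared)
```

An empty result means that every description in the other layers can be anchored to the spine.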

In the context of music encoding in IEEE 1599, how can a music event be defined from a semantic point of view? One of the most relevant aspects of the format, which confers both descriptive power and flexibility, is its loose but versatile definition of event. In the music field, which is the typical context where the format is used, a music event is a clearly recognizable music entity, characterized by well-defined features, which presents aspects of interest for the author of the encoding. This definition is intentionally vague in order to embrace a wide range of situations. A common case is a score where each note and rest is considered a music event. The corresponding spine will list such events as an equal number of XML sub-elements (also referred to as spine events).

However, the interpretation of the concept of music event can be relaxed. A music event could be the occurrence of a new chord or tonal area, in order to describe only the harmonic path of a piece instead of its complete score made of notes and rests.

Figure 2: An example of simplified spine.

3.2 Events in a Multi-layer Environment

Once the concept of spine event has been introduced and the spine structure has been created, events are ready to be described in the multi-layer environment provided by IEEE 1599.

This section shows that heterogeneous description is implemented in IEEE 1599 through heterogeneous descriptions of each event contained in the spine. As stated in Section 3, the format includes six layers. While heterogeneity is supported by the variety of layers, inside each layer we find homogeneous contents, namely contents of the same type. The Audio layer, for example, can link n different performances of the same piece. A valid IEEE 1599 document does not require all the layers to be filled; however, their presence enriches the description.

In musical terms, the layer-based mechanism allows heterogeneous descriptions of the same piece: for a composition, not only its logical score but also the corresponding music sheets, performances, etc. can be described. In this context, instead, heterogeneity is employed to provide a wide range of audio descriptions of the same environment, as will become clear in the following.

Now let us focus on the presence and meaning of events inside each layer. The General layer mainly contains catalogue metadata that cannot be referred to single music events (e.g. title, authors, genre, and so on).

The Logic layer, which is the core of the format, provides a music description from a symbolic point of view: it contains both the spine, i.e. the main time-space construct aimed at the localization and synchronization of events, and the symbolic score in terms of pitches, durations, etc.


Originally, the Structural layer was designed to contain the description of music objects and their causal relationships, from both the compositional and the musicological point of view. This layer is aimed at the identification of music objects as aggregations of music events, and it defines how music objects can be described as transformations of previously described objects. As usual, event localization in time and space is realized through spine references.

For the remaining layers, the meaning of events is more straightforward. The Notational layer describes and links the graphical implementations of the logic score, where music events, identified by their spine id, are located on digital objects in absolute space units (e.g. points, pixels, millimeters, etc.). In the case of environmental sounds, the places where they were recorded can be identified on a map; as regards our work, these maps can be the counterpart of graphical scores.

The Performance layer is devoted to computer-based performances of a piece, typically in sub-symbolic formats such as Csound, MIDI, and SASL/SAOL. This layer is not used for our goals.

In the Audio layer, events are described and linked to digital audio objects. Multiple audio tracks and video clips, in a number of different formats, are supported. The mechanism used to map audio events is based on absolute timing values expressed in milliseconds, frames, or other time units.
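Since the Audio layer may express instants in different units, a player would typically normalize them to seconds, which is also the unit of the currentTime property of HTML5 media elements. A minimal Python sketch follows; the unit labels are hypothetical, and frame-based values are assumed to come with a known frame rate for the clip.

```python
def to_seconds(value, unit, fps=None):
    """Normalize an Audio-layer timing value to seconds.

    The unit labels below are illustrative assumptions, not names taken from
    the standard. Frame counts need the clip's frame rate (e.g. 25 or
    30000/1001), which must be obtained from the media or its metadata.
    """
    value = float(value)
    if unit == "seconds":
        return value
    if unit == "milliseconds":
        return value / 1000.0
    if unit == "frames":
        if not fps:
            raise ValueError("frame-based timing needs a frame rate")
        return value / float(fps)
    raise ValueError(f"unsupported time unit: {unit!r}")
```

Normalizing once, when the document is loaded, keeps the synchronization logic free of unit conversions.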

Finally, let us consider, layer by layer, the cardinalities supported for events. In the Logic/Spine sub-layer the cardinality is 1, namely the presence is strictly required, as all the events must be listed within the spine structure. In the Audio layer, on the contrary, the cardinality is [0..n]: the layer itself can be empty (0 occurrences); it can encode one or more partial tracks where the event is not present (0 occurrences); it can link a complete track without repetitions (1 occurrence) or a complete track with repetitions (n occurrences); and finally it can link a number of different tracks with or without repetitions (n occurrences). Similarly, the Notational layer supports [0..n] occurrences.

In the case study described in this paper, we will concentrate on an IEEE 1599 document having multiple videos, namely multiple instances inside the Audio layer.

4 THE IEEE 1599 WEB PLAYER

The IEEE 1599 format has already been used successfully to synchronize multiple and heterogeneous media streams within domain-specific applications, in an offline scenario. In this section we will see how JavaScript and HTML5 together (especially the new audio and video APIs) can be used to build a streaming Web player that supports advanced multimedia synchronization features in a Web environment, running inside a common browser.

4.1 Preparing IEEE 1599 for the Web

The IEEE 1599 standard supports a huge variety of container formats and codecs for its media streams, wider than what any HTML5 browser implementation can handle. An almost complete list of what is currently supported by the most popular browsers can be found in (Pilgrim, 2010). For this reason, audio and video streams referred to inside an IEEE 1599 file must first be converted into a format that browsers can consume.

For this particular case study, a Python script has been written to load the IEEE 1599 file into a DOM (Document Object Model). It looks for any reference to audio or video files and automatically converts them into Theora video and Vorbis audio inside an Ogg container. This combination has been chosen because its components are all open standards and because it is well supported by browsers such as Mozilla Firefox, Google Chrome and Opera. Other combinations may be supported in the near future to extend compatibility to a wider range of browsers.

Other related media are not converted, because they mainly consist of images (e.g. booklets, music sheets, etc.), which almost every browser has supported for a long time. All media files are then organized in a uniform folder structure and their references inside the IEEE 1599 document are updated. Broken references are deleted, as are the parts of the IEEE 1599 specification that are not useful for this application.
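A condensed Python sketch of such a preparation step follows. It is not the authors' script: it uses xml.etree.ElementTree instead of a full DOM, assumes that media references are stored in the file_name attribute of track elements, and only builds ffmpeg command lines (assuming an ffmpeg build with the libtheora and libvorbis encoders) rather than running them.

```python
import os
import xml.etree.ElementTree as ET


def prepare_tracks(doc_xml, media_dir="media", exists=os.path.exists):
    """Point audio/video references to Ogg files and list the conversions.

    Assumption: media references are <track file_name="..."> elements.
    Tracks whose source file is missing are removed as broken references.
    Returns the rewritten XML and the ffmpeg commands (not executed here).
    """
    root = ET.fromstring(doc_xml.strip())
    commands = []
    # Snapshot the tree first, so removing children cannot disturb iteration.
    for parent in list(root.iter()):
        for track in parent.findall("track"):
            src = track.get("file_name", "")
            if not src or not exists(src):
                parent.remove(track)  # broken reference: drop it
                continue
            stem = os.path.splitext(os.path.basename(src))[0]
            dst = f"{media_dir}/{stem}.ogg"  # uniform folder structure
            commands.append(["ffmpeg", "-i", src,
                             "-c:v", "libtheora", "-c:a", "libvorbis", dst])
            track.set("file_name", dst)
    return ET.tostring(root, encoding="unicode"), commands
```

Each returned command could then be run with subprocess.run(cmd, check=True). Passing exists as a parameter keeps the function testable without touching the file system.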

IEEE 1599 files can be huge: most of them are several megabytes in size and contain thousands of lines of XML tags. This is a problem for a streaming player both if we parse the whole document at once and store it in a DOM, and if we use event-driven parsing techniques such as SAX: in the former case we have to download the whole IEEE 1599 file, causing an annoying delay before anything is actually played; in the latter we can stream the XML together with the media, but this adds a large amount of overhead and considerably reduces the bandwidth available to the actual contents.

Compression can help us solve the problem. XML is an extremely verbose and redundant text-based format, so dictionary-based compression works very well on this kind of information (usually achieving compression rates above 95%). Every modern browser supporting HTML5 also supports HTTP compression, so exchanging compressed data requires little effort. During the preparation phase explained above, IEEE 1599 files are gzip-compressed and stored with a custom file extension (.xgz). The Web server is configured to associate the right MIME type (text/xml) and content encoding (x-gzip) with that file extension. When a client requests an IEEE 1599 file, the server's HTTP response instructs the browser to enable its HTTP compression features in order to decompress the file before using it. In this way all the advantages of DOM parsing can be exploited and no overhead is added to the stream, at the cost of a small initial delay.
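The compression step can be sketched with Python's standard gzip module. The function names are our own, and the sample document is synthetic and far more regular than a real IEEE 1599 file, so the ratio it yields says nothing about the figure quoted above.

```python
import gzip


def xgz_bytes(xml_text: str) -> bytes:
    """Gzip-compress an IEEE 1599 document for storage as a .xgz file."""
    return gzip.compress(xml_text.encode("utf-8"), compresslevel=9)


def write_xgz(xml_text: str, path: str) -> float:
    """Write the compressed file and return the saved fraction (0..1)."""
    raw_size = len(xml_text.encode("utf-8"))
    packed = xgz_bytes(xml_text)
    with open(path, "wb") as f:
        f.write(packed)
    return 1.0 - len(packed) / raw_size


# Synthetic, highly repetitive spine used only to exercise the functions.
SAMPLE = "<spine>\n" + "".join(
    f'  <event id="e{i}" timing="1" hpos="1"/>\n' for i in range(2000)
) + "</spine>\n"
```

On the server side, .xgz files then have to be served with Content-Type: text/xml and Content-Encoding: x-gzip, as described above; HTTP recipients are expected to treat x-gzip as equivalent to gzip. The configuration syntax depends on the Web server in use.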

4.2 Infrastructure and Application Design

After preparing the material to make it usable by an HTML5 browser, let us choose how to make it available over the net. There are two main alternatives: either using a common Web server and adopting the progressive download approach, like almost every “streaming” player for the Web, or setting up a full-fledged streaming server. While the latter option permits the use of protocols specifically designed for streaming (such as RTP and RTSP) and may therefore be more flexible, we chose the former because it is effective for our purposes, easier to implement and widely used in similar application domains. Moreover, HTTP/TCP traffic is usually better accepted by the most common firewall configurations and less subject to NAT traversal problems. Finally, Web users seem to be less annoyed by short pauses during playback than by quality degradation or loss of information.

On the client side, the fundamental choice is which media streams to request, when to request them, and how to manage them without clogging the network or the buffer. Three possible cases have been studied:

• One Stream at a Time. Among all the available contents, only the stream currently chosen by the user for watching or listening is requested and buffered. When another stream is selected, the audio (or video) buffer is emptied and the new stream is loaded. The main advantage of this solution is that only useful data are sent over the network: at any time, the user receives just the stream he/she requested. The principal drawback, on the other hand, is that every time the user decides to watch or listen to another media stream, he/she has to wait a considerable amount of time for the new contents.

• All the Streams at the Same Time. All the available contents are requested by the client and sent over the net. This approach drastically reduces delays when jumping from one media stream to another, at the cost of a huge waste of bandwidth caused by the dispatch of unwanted streams. In order to reduce network traffic, contents that are not currently selected by the user may be sent in a low-quality version and upgraded to full quality only when selected. With this approach, a smooth transition occurs: when the user selects a new media stream, the client instantly plays the degraded version and switches to full quality as soon as possible, namely when the buffer is sufficiently full.

• Custom Packetized Streams. Borrowing some principles from the piggyback forward error correction technique (Perkins et al., 1998), streams can be served all together inside a single packet, containing the active streams in full quality and the inactive ones in low quality. This implies the existence of a “smart” server, which does all the synchronization and packing work, and a “dumb” client, which does not even need to know anything about IEEE 1599 and its structure.

Even if the last option is of some interest, it requires a custom server-side application and burdens the server with a lot of computation for each client. In this paper we will focus on the first two scenarios, using the first (which is also the simplest) to concentrate on the synchronization aspects, and then evolving to the second to support multiple media streams simultaneously.

4.3 Audio and Video Synchronization

One of the key features of the IEEE 1599 format is the description of information that can be used to synchronize otherwise asynchronous and heterogeneous media. As presented in Section 3.1, every music event of some interest (notes, time/clef/key signature changes, etc.) should have its own unique id inside the spine. Those identifiers can be used to reference the occurrence of a particular event inside the various resources available for the piece: the area corresponding to a note inside an image of the music sheet, a word in a text file representing the lyrics, a particular frame of a video capturing the performance, a given instant in an audio file, and so on.

For the IEEE 1599 streaming Web player, the Audio layer is the most interesting one. Each related audio or video stream is represented by the <track> tag, whose attributes give information about its URI and encoding format. Inside each <track> there are many <track_event> tags, which are the actual references to the events in the spine. Every <track_event> has two main attributes: the unique id of the related event in the spine, and the time (usually expressed in seconds) at which that event occurs inside the audio or video stream. A known limitation of the application is the following: each event must be referenced inside each track, even if it does not actually take place in that track, otherwise the synchronization mechanism will not work properly (as explained later). For example, the piano reduction track of a symphonic piece should contain the references to all the events related to the orchestra, even if they do not actually happen in that track. A check can be enforced server-side during the preparation step in order to guarantee this condition.
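Such a check is straightforward once the spine ids and the per-track event times have been extracted from the document. A minimal Python sketch, where the data layout (a dictionary of id-to-time maps keyed by track name) is our own assumption:

```python
def missing_events(spine_ids, tracks):
    """Report, for each track, the spine events it does not reference.

    spine_ids: event ids in spine order.
    tracks: mapping track name -> {event id: time in seconds}
            (an assumed, already-extracted representation).
    Tracks that reference every event are omitted from the result.
    """
    spine_ids = list(spine_ids)
    report = {}
    for name, time_by_id in tracks.items():
        missing = [eid for eid in spine_ids if eid not in time_by_id]
        if missing:
            report[name] = missing
    return report
```

The preparation step could then reject, or flag for manual completion, any track that appears in the report.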

The HTML5 audio and video API already offers all the features needed to implement a synchronized system: media files can be played, paused and sought. On certain implementations (later versions of Google Chrome, for example) it is even possible to modify the playback rate, thus slowing down or speeding up the track performance. It is sufficient to use the information stored inside the IEEE 1599 file to drive, via JavaScript, the audio and video objects inside the Web page.

The currently active media stream is used as the master timing source: while it is playing, the client constantly keeps track of the last event that occurred inside the track. When another stream is selected, the client loads it, looks for the event of the new track with the same id as the last one that occurred, then uses the time encoded for this event to seek the new stream. Consequently, playback resumes from the same logical point where we left it (at the granularity of spine events), and media synchronization is achieved. Since event identifiers are used as a reference to jump to the exact instant inside the new stream, it should now be clear why all the events related to the whole piece should be present inside each track. Otherwise, we could only fall back to the previous (or next) event in common to both tracks, potentially very distant from the exact synchronization point. Please note that such a common event might not even exist.
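The switching procedure can be sketched in Python as follows. By our own convention, the active track is given as parallel time-sorted lists of times and event ids, and the new track as an id-to-time dictionary; the last event is recomputed here by binary search rather than tracked continuously. The backward walk implements the degraded fallback just described, and None signals that no common event exists.

```python
from bisect import bisect_right
from typing import Dict, List, Optional


def seek_after_switch(position: float,
                      cur_times: List[float],
                      cur_ids: List[str],
                      new_time_by_id: Dict[str, float]) -> Optional[float]:
    """Return the time at which the newly selected stream should be sought.

    position: current playback time (seconds) of the active stream.
    cur_times/cur_ids: events of the active stream, sorted by time.
    new_time_by_id: event id -> time in the newly selected stream.
    """
    # Last event that has already occurred in the active stream.
    i = bisect_right(cur_times, position) - 1
    # Normally found at once; otherwise fall back to earlier common events.
    while i >= 0:
        target = new_time_by_id.get(cur_ids[i])
        if target is not None:
            return target
        i -= 1
    return None
```

In the browser, the returned value would be assigned to the currentTime property of the newly selected media element.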

As stated before, the event list can be large, so efficient algorithms are needed to navigate it and find the required events quickly. Depending on the situation, certain techniques may be more convenient than others:

• During continuous playback of a single stream, a linear search over the event list is probably the most appropriate way to keep track of occurring events. Of course, the list has to be sorted by the occurrence time of each event;

• When the user seeks, movements inside the stream become non-linear, thus making linear search inefficient. In this case, a binary search is preferable. It is rare to find an event exactly at the seeking position, so the search must be approximated to the event immediately before (or immediately after) the current instant;

• When changing media streams, events are searched by their unique identifier. Therefore, a dictionary using it as its key becomes really useful.
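The first two techniques can be combined in a small cursor object, sketched here in Python (the class and method names are hypothetical): advance performs the linear step used during continuous playback, while seek relocates the cursor by binary search after a user jump, snapping to the event immediately before the new position. The third technique, lookup by identifier, is a plain dictionary and is not repeated here.

```python
from bisect import bisect_right


class EventCursor:
    """Tracks the index of the last event that occurred in one stream.

    times must be sorted in ascending order. advance() scans forward
    linearly and is meant for continuous playback; seek() uses binary
    search and must be called after any non-linear (e.g. backward) jump.
    """

    def __init__(self, times):
        self.times = list(times)
        self.index = -1  # no event has occurred yet

    def advance(self, position):
        """Linear step: cheap when the position moved forward by a little."""
        while (self.index + 1 < len(self.times)
               and self.times[self.index + 1] <= position):
            self.index += 1
        return self.index

    def seek(self, position):
        """Binary search: snap to the event immediately before position."""
        self.index = bisect_right(self.times, position) - 1
        return self.index
```

In a browser, advance would naturally be called from the media element's timeupdate event handler and seek from its seeked handler.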

After the IEEE 1599 file has been downloaded and stored as a DOM by the player, an event list is populated for each track and quick-sorted by occurrence time. Then, for every list, an associative array is used to bind the index of each event to its unique identifier. This data structure enables all three search techniques mentioned above, thus making the retrieval of events efficient in every case.
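A Python sketch of this preprocessing step follows. It assumes that each track element contains track_event elements whose event_ref and start_time attributes hold the spine id and the time in seconds; these attribute names are assumptions to be checked against the specification. Python's built-in sort (Timsort) stands in for quicksort.

```python
import xml.etree.ElementTree as ET


def index_tracks(audio_xml):
    """Per track: time-sorted event times/ids and an id -> list-index map.

    Assumed markup: <track file_name="..."> containing
    <track_event event_ref="spine id" start_time="seconds"/> elements.
    """
    root = ET.fromstring(audio_xml.strip())
    tracks = {}
    for track in root.iter("track"):
        # Sort by time (ties broken by id).
        events = sorted(
            (float(ev.get("start_time")), ev.get("event_ref"))
            for ev in track.iter("track_event"))
        tracks[track.get("file_name")] = {
            "times": [t for t, _ in events],
            "ids": [eid for _, eid in events],
            # If an id occurs more than once (repeats), the last index wins.
            "index_of": {eid: i for i, (_, eid) in enumerate(events)},
        }
    return tracks
```

With times, ids and index_of available for every track, linear scans and binary searches run over times, while lookups by identifier go through index_of. Tracks with repetitions (n occurrences of the same event) would need the dictionary to map each id to a list of indices instead.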

4.4 Handling Simultaneous Media

The principles explained in the previous subsection can also be applied when handling and playing multiple media streams at a time. In particular, we are referring to a number of video or audio tracks to be performed at the same time. In this case, the track that acts as master is played smoothly and without interruption, while every other (slave) track is periodically sought and/or stretched to keep it synchronized with the master. The choice of the master track can deeply influence the quality of playback. In particular, it is well known that human perception is more disturbed by discontinuities in acoustic than in visual information. If both are present at the same time, it is better to choose one of the audio tracks as master.

Resynchronization of slave tracks can happen at fixed intervals or when needed. In the former case, a timer is set and all the slave tracks are realigned when it expires, following the same procedure explained above for changing media streams. In the latter case, whenever an event occurs in the master track, the corresponding event is searched for in all the slave tracks; a slave track is repositioned when the absolute difference between its playing position and the time of the related event is greater than a given threshold.
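Both the choice of the master and the on-demand (threshold-based) variant can be sketched in Python as follows. The stream descriptions, the media-kind labels and the 0.1-second default threshold are illustrative assumptions, not values given in the text.

```python
from typing import Dict, List, Optional, Tuple


def choose_master(streams: List[Tuple[str, str]]) -> Optional[str]:
    """Pick the master among (name, kind) pairs, preferring streams with sound.

    Acoustic discontinuities are more disturbing than visual ones, so a
    stream of (assumed) kind 'audio' or 'audio/video' is preferred;
    otherwise the first stream is used.
    """
    for name, kind in streams:
        if kind in ("audio", "audio/video"):
            return name
    return streams[0][0] if streams else None


def resync_slaves(master_event_id: str,
                  slaves: List[dict],
                  threshold: float = 0.1) -> Dict[int, float]:
    """Called whenever an event occurs in the master stream.

    Each slave is a dict with 'position' (its current time in seconds) and
    'time_by_id' (event id -> time in that stream). Returns the new position
    for every slave whose drift exceeds the (hypothetical) threshold.
    """
    seeks = {}
    for k, slave in enumerate(slaves):
        target = slave["time_by_id"].get(master_event_id)
        if target is not None and abs(slave["position"] - target) > threshold:
            seeks[k] = target
    return seeks
```

In the fixed-interval variant, the same comparison would simply run from a timer (e.g. setInterval in the browser), using the last event that occurred in the master; each returned position would be assigned to the corresponding slave's currentTime.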


5 CONCLUSIONS AND FUTURE WORKS

In this paper we have illustrated an application to manage multiple media streams through HTML5 in a synchronized way. The IEEE 1599 format represented for us a test case with demanding requirements, such as the presence of heterogeneous materials to be kept mutually synchronized. For this kind of application, our approach based on HTML5 and JavaScript has proved to be effective.

As regards future work, simultaneous playback of multiple media in the IEEE 1599 Web player is not supported yet. An experiment of fixed-interval repositioning has been made, with a period of 2 seconds. While the resynchronization of video over continuous and smooth audio playback gives quite acceptable results, the audio-over-audio case produced annoying glitches. This problem could be reduced by using the volume control of the HTML5 audio and video API to fade out rapidly just before a repositioning and fade in again immediately after. Another improvement may be obtained by dynamically changing the playback rate of the slaves (on the implementations that support this feature). The playback rate can be estimated by comparing the time difference between the next and the last event in the master with the time difference between the two corresponding events in the slave.
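The playback-rate estimate described above amounts to a ratio of two durations. A minimal Python sketch follows; the function name is hypothetical, and the clamping bounds are our own assumption, added only to avoid extreme rates.

```python
def slave_playback_rate(master_last: float, master_next: float,
                        slave_last: float, slave_next: float,
                        lo: float = 0.5, hi: float = 2.0) -> float:
    """Rate at which a slave must play to reach the next shared event with the master.

    While the master covers master_next - master_last seconds, the slave has
    to cover slave_next - slave_last seconds of its own media. lo and hi are
    hypothetical safety bounds.
    """
    master_span = master_next - master_last
    slave_span = slave_next - slave_last
    if master_span <= 0 or slave_span <= 0:
        return 1.0  # degenerate data: fall back to normal speed
    return min(hi, max(lo, slave_span / master_span))
```

For example, if the master's last and next events are at 10.0 s and 12.0 s and the corresponding slave events are at 9.5 s and 12.0 s, the slave must cover 2.5 s of media in 2 s, i.e. play at rate 1.25; in the browser, this value would be assigned to the slave's playbackRate property.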

Other future work may include the implementation of further features, such as synchronizing and highlighting graphic elements (music sheets, lyrics, etc.), or using the information of the General layer for music information retrieval and linking purposes.

ACKNOWLEDGEMENTS

The authors wish to acknowledge the partial support of this work by the International Scientific Cooperation project EMIPIU (Enhanced Music Interactive Platform for Internet User).

REFERENCES

Baggi, D. L. (1995). Technical committee on computer-generated music. TC, 714:821–4641.

Baraté, A., Haus, G., Ludovico, L. A., and Mauro, D. A. (2011). A web-oriented multi-layer model to interact with theatrical performances. In International Workshop on Multimedia for Cultural Heritage (MM4CH 2011), Modena, Italy.

Daoust, F., Hoschka, P., Patrikakis, C., Cruz, R., Nunes, M., and Osborne, D. (2010). Towards video on the web with HTML5. NEM Summit 2010.

Faulkner, A., Lewis, A., Meyer, M., and Merchant, N. (2010). Creating an HTML5 web based video editor for the general user. CGT 411, Group 13.

Harjono, J., Ng, G., Kong, D., and Lo, J. (2010). Building smarter web applications with HTML5. In Proceedings of the 2010 Conference of the Center for Advanced Studies on Collaborative Research, pages 402–403. ACM.

Haus, G. and Longari, M. (2005). A multi-layered, time-based music description approach based on XML. Computer Music Journal, 29(1):70–85.

Laiola Guimarães, R., Cesar, P., and Bulterman, D. (2010). Creating and sharing personalized time-based annotations of videos on the web. In Proceedings of the 10th ACM Symposium on Document Engineering, pages 27–36. ACM.

Ludovico, L. A. (2008). Key concepts of the IEEE 1599 standard. In Proceedings of the IEEE CS Conference The Use of Symbols To Represent Music And Multimedia Objects, IEEE CS, Lugano, Switzerland.

Perkins, C., Hodson, O., and Hardman, V. (1998). A survey of packet loss recovery techniques for streaming audio. IEEE Network, 12(5):40–48.

Pfeiffer, S. and Parker, C. (2009). Accessibility for the HTML5 <video> element. In Proceedings of the 2009 International Cross-Disciplinary Conference on Web Accessibility (W4A), pages 98–100. ACM.

Pilgrim, M. (2010). HTML5: Up and Running. O'Reilly & Associates Inc.

IEEE TCCGM (2008). IEEE Std 1599-2008 - IEEE Recommended Practice for Defining a Commonly Acceptable Musical Application Using XML. The Institute of Electrical and Electronics Engineers, Inc.

Vaughan-Nichols, S. (2010). Will HTML 5 restandardize the web? Computer, 43(4):13–15.
