APPEARANCE-BASED VISUAL ODOMETRY

WITH OMNIDIRECTIONAL IMAGES

A Practical Application to Topological Mapping

Lorenzo Fernández, Luis Payá, Oscar Reinoso and Francisco Amorós

Departamento de Ingeniería de Sistemas y Automática, Miguel Hernández University

Avda. de la Universidad s/n, Alicante, Elche, Spain

Keywords:

Visual odometry, Global appearance, Omnidirectional images, Fourier descriptor, Visual compass.

Abstract:

In this paper we deal with the problem of map creation and localization of a mobile robot using omnidirectional

images. We describe a real-time algorithm for topological mapping, using as input data only a set of images

captured by a single omnidirectional camera mounted at a fixed position on the mobile robot. To compute the

topological relationships between locations in the map, we use techniques based on the global appearance of

the images. When using these methods, it is important to remove redundant information to get an acceptable

computational cost when comparing locations. With this aim, we describe each omnidirectional image by a

single Fourier descriptor that represents the appearance as well as the relative orientation between images.

This algorithm permits computing the relative topological position of a location with respect to the previous

one, acting as a visual compass. We have carried out a complete set of experiments to study the validity of

the proposed visual odometry and topological mapping and to perform an objective comparison between the

results obtained using the robot odometry, our visual odometry and the ground truth. We have also checked

the time consumption to carry out the process and the geometrical accuracy obtained compared to the ground

truth.

1 INTRODUCTION

The design of algorithms to perform some tasks au-

tonomously in a real environment is a key point in

mobile robotics. In this field, an essential problem

to consider is the computation of the localization of

the autonomous vehicle in the environment. It is im-

portant that the mobile robot has a map or an in-

ternal representation of the environment, so that the

robot can make decisions about its localization and

about the path to follow to move from its current position to the target points. Omnidirectional vision systems are commonly used in this kind of application due to their relatively low cost and the richness of the information they provide. Different representations of the visual information can be used when working with these catadioptric systems, such as the omnidirectional, panoramic and bird's-eye view images. In

this paper, we use the panoramic representation of the

scenes as it can offer invariance to ground-plane ro-

tations, and it allows us to use only the information

provided by the vision sensor to perform the process.

Different authors have studied how to use the om-

nidirectional images both to solve the mapping and

the localization problems. We can categorize these

solutions into two main groups:

• Feature-based solutions, in which a number of

significant points or landmarks from each image

are extracted and each point is described using

an invariant descriptor. For example, (Murillo

et al., 2007) and (Valgren and Lilienthal, 2010)

use SURF features (Bay et al., 2008) extracted

from a set of omnidirectional images to find the

localization of the robot in a given map.

• Appearance-based solutions, in which the whole

appearance of the omnidirectional image is repre-

sented by a single descriptor, with no local feature

extraction. For example (Menegatti et al., 2004b)

present a method to build a topological map using a Fourier descriptor of omnidirectional images and (Payá et al., 2010) perform a probabilistic localization in some environments.

Appearance-based techniques are useful when working in unstructured environments and offer an intuitive way to construct the map and to get


Fernández L., Payá L., Reinoso O. and Amorós F..

APPEARANCE-BASED VISUAL ODOMETRY WITH OMNIDIRECTIONAL IMAGES - A Practical Application to Topological Mapping.

DOI: 10.5220/0003533602050210

In Proceedings of the 8th International Conference on Informatics in Control, Automation and Robotics (ICINCO-2011), pages 205-210

ISBN: 978-989-8425-75-1

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

the position. However, as no relevant information is

extracted from the images, it is necessary to apply a

compression method to reduce the computational cost

of the mapping and localization processes. Several

researchers have developed DFT (Discrete Fourier

Transform) methods to get the most relevant infor-

mation from the images (Menegatti et al., 2004a).

These descriptors present rotational ground-plane in-

variance, concentrate the most relevant information in

the low frequency components of the transformed im-

ages and each image descriptor is computed indepen-

dently of the rest of images.

For these reasons, and based on some prior works

(Payá et al., 2009; Payá et al., 2010; Fernández et al.,

2010), we have decided to describe each omnidirec-

tional image by means of a Fourier descriptor. We

use the Fourier Signature (Menegatti et al., 2004a) to

compress each image captured. The processing time

needed to compute the Fourier Signature is noticeably

lower than in other common feature extraction algorithms (Payá et al., 2009), and it permits a fast comparison between the images in the map by means of a vector distance measurement. Also, the Fourier Signature better exploits the invariance to ground-plane rotations in panoramic images, a property that will be of utmost importance when deploying our visual compass.

In this paper we present a methodology to build

a topological map using the global appearance of the

panoramic images to model the topological relation-

ships between successive nodes in the map. We use

the Fourier Signature to get a robust descriptor that allows us to work in real time. However, the methods described here are independent of the descriptor

used to represent the images, and other appearance-

based descriptors may also be applied. To represent

the distance between two consecutive poses we have

used the normalized Euclidean distance between their

Fourier Signatures and to get the relative angle be-

tween them we have implemented a Fourier-based vi-

sual compass. Our main objective consists in evaluat-

ing the feasibility of using purely global-appearance

methods in these tasks and how the main features of

the descriptor influence the final result.

The paper is organized as follows. Section 2

presents the fundamentals of topological mapping approaches. In Section 3, we describe the Fourier descriptor and how to use it with omnidirectional images to implement the visual compass. Section 4 deals with

the problem of localization and map creation using

visual odometry. Next, Section 5 presents the exper-

imental setting and the results obtained. Finally, we

present the conclusions and future work in Section 6.

2 TOPOLOGICAL MAP BUILDING. STATE OF THE ART

With respect to the mapping problem we can establish

two approaches: metric and topological. The first one

consists in modeling the environment using a metric

map obtained with geometrical accuracy when repre-

senting the position of the robot in it. For example

(Gil et al., 2010) present an approach to carry out

the mapping process with a team of mobile robots

and visual information. On the other hand, topological mapping consists in the creation of maps that

represent graphical models of the environment that

capture places and their connectivity in a compact

form. An example of this approach is presented in

(Payá et al., 2010) where a topological representation

of the environment is obtained by applying a method

based on the physics of harmonic oscillators. Also,

(Tully et al., 2009) describe a probabilistic method for

topological SLAM (Simultaneous Localization and

Mapping), solving the topological graph loop-closing

problem. Finally, (Fernández et al., 2010) describe

a Monte-Carlo Localization using the robot odome-

try and the appearance of omnidirectional images to

localize in a topological map.

Recovering relative robot poses from a set of camera images has been a widely studied problem in recent years. For example, (Nistér et al., 2006) present a system that estimates the motion of a stereo head using a feature tracker, and (Scaramuzza and Siegwart, 2008) describe a real-time algorithm for computing the ego-motion of a vehicle using only omnidirectional images as input. (Scaramuzza et al., 2010) study how to close the loop by using the omnidirectional visual odometry and a vocabulary tree. This last work shows that it is possible to carry out robot localization and mapping simultaneously using as input data only omnidirectional images and a loop-closing process.

In this paper, we face the mapping problem as a

relative camera pose recovering problem, using the

overall appearance of the panoramic images, without

any feature extraction process. We describe a real-

time algorithm for computing an appearance-based

topological map through visual odometry. The main contribution of the work is the development of a visual compass that permits computing the position and orientation of each new location in the map with a low computational cost.

ICINCO 2011 - 8th International Conference on Informatics in Control, Automation and Robotics


3 FOURIER SIGNATURE

3.1 Fourier Signature

The Fourier Signature presents several advantages over other Fourier-based methods. It is simple to compute, it has a low computational cost in terms of both computation time and memory required, and it exploits well the invariance against ground-plane rotations when using panoramic images (Payá et al., 2009).

The Fourier Signature presents the same properties as the 2D Discrete Fourier Transform. The most important information is concentrated in the low frequency components of each row, so we can work only with the information from the first $k_1$ columns of the signature ($k_1 < N_y$), and it presents rotational invariance when working with panoramic images. It is possible to prove that if each row of the original image is represented by the sequence $\{a_n\}$ and each row of the rotated image by $\{a_{n-q}\}$ ($q$ being the amount of shift), then the Fourier Transform of the shifted sequence has the same amplitudes $A_k$ as that of the non-shifted sequence, and there is only a phase change, proportional to the amount of shift $q$ (Eq. 1).

$$\mathcal{F}[\{a_{n-q}\}] = A_k \exp\left(-j \frac{2\pi q k}{N_y}\right); \quad k = 0, \ldots, N_y - 1 \qquad (1)$$

Thanks to this shift theorem we can separate the computation of the robot position and the orientation. With this aim, we decompose the Fourier Signature of the image $I_j \in \mathbb{R}^{N_x \times N_y}$ into two matrices, one containing the modules $d_j \in \mathbb{R}^{N_x \times k_1}$ and the other the phases $p_j \in \mathbb{R}^{N_x \times k_2}$ of this signature. Finally, it is interesting to highlight also that the Fourier Signature is an inherently incremental method.
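The decomposition into modules and phases can be illustrated with a minimal sketch. It assumes, following the usual definition of the Fourier Signature in (Menegatti et al., 2004a), that the signature is the 1D DFT of each row of the panoramic image. The function names `dft`, `fourier_signature` and `rotate`, and the toy grey levels, are hypothetical and only serve the illustration; a naive DFT is used so that the example needs nothing beyond the standard library.

```python
import cmath
import math


def dft(row):
    """Naive 1D DFT of a real sequence (O(N^2); adequate for a small demo)."""
    n = len(row)
    return [
        sum(row[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def fourier_signature(image, k1, k2):
    """Row-wise DFT of a panoramic image, split into modules and phases.

    image: list of N_x rows, each with N_y grey levels.
    Returns (d, p): the first k1 magnitudes and first k2 phases of every row.
    """
    spectra = [dft(row) for row in image]
    d = [[abs(c) for c in spectrum[:k1]] for spectrum in spectra]
    p = [[cmath.phase(c) for c in spectrum[:k2]] for spectrum in spectra]
    return d, p


def rotate(image, q):
    """Circular column shift a_n -> a_{n-q}, i.e. a ground-plane rotation."""
    out = []
    for row in image:
        s = q % len(row)
        out.append(list(row[-s:]) + list(row[:-s]) if s else list(row))
    return out


# Toy 2 x 8 "panoramic image" (hypothetical grey levels).
image = [[3, 1, 4, 1, 5, 9, 2, 6],
         [2, 7, 1, 8, 2, 8, 1, 8]]
d0, p0 = fourier_signature(image, k1=4, k2=4)
d1, p1 = fourier_signature(rotate(image, 3), k1=4, k2=4)

# Largest change in any magnitude after the rotation (numerically zero).
max_module_change = max(
    abs(a - b) for r0, r1 in zip(d0, d1) for a, b in zip(r0, r1)
)
print(max_module_change)
```

In this sketch the modules matrix is unchanged by the column shift, while each phase moves by an amount proportional to the shift, which is what Eq. 1 states.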

3.2 Visual Compass

Once we have studied the kind of information to store

in the database, we have to establish some relation-

ships between the stored poses to carry out the relative

camera pose recovering. When we have the Fourier

Signature of two panoramic images that have been

captured in two points that are geometrically close in

the environment, it is possible to compute their rela-

tive orientation using the shift theorem (eq. 1).

Since the Fourier Signature is invariant to ground-

plane rotations and there is a relationship between

phases of the Fourier Signature of a panoramic image

taken at one position and the phases of the Fourier

Signature of another panoramic image taken at the

same point but with different orientation (eq. 1), we

can expand this property and calculate the approxi-

mate rotation φ

t+1,t

between two panoramic images

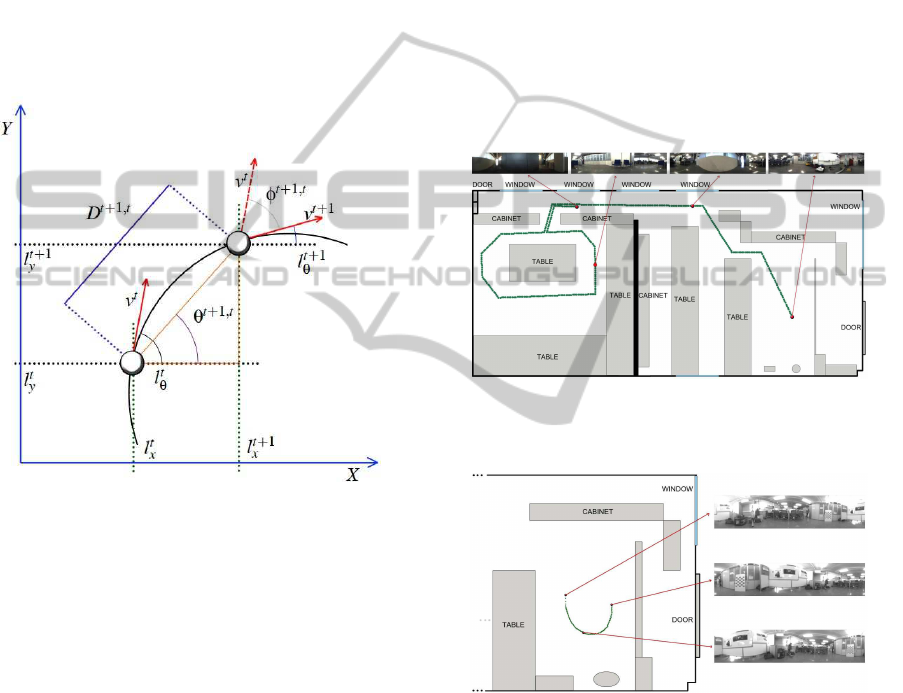

taken on two consecutive poses. In fig. 1, v

t

is the ve-

locity of the robot at time t, v

t+1

at time t + 1 and the

relative orientation φ

t+1,t

. The angle obtained corre-

sponds to the rotation the robot has performed in the

ground-plane when going from the first to the second

point as shown in fig. 1.

To obtain $\phi_{t+1,t}$ we have implemented a convolution operation between the phases of the Fourier Signatures of the panoramic images of the two poses, by applying eq. 1.
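One way to recover the rotation from two phase matrices is sketched below. This is not necessarily the convolution the authors implemented: the sketch swaps in a brute-force search over all candidate column shifts $q$, scoring each one by how well Eq. 1 explains the observed phase change. The names `row_phases` and `visual_compass`, the sign convention of the returned angle and the toy image are assumptions made for the illustration.

```python
import cmath
import math


def row_phases(row, k2):
    """Phases of the first k2 DFT components of one image row (naive DFT)."""
    n = len(row)
    return [
        cmath.phase(
            sum(row[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        )
        for k in range(k2)
    ]


def visual_compass(p_prev, p_new, n_cols):
    """Estimate the column shift q and the rotation angle between two poses.

    For a shift q, Eq. 1 predicts
        p_new[u][k] = p_prev[u][k] - 2*pi*q*k/n_cols   (mod 2*pi).
    The cost 1 - cos(residual) is insensitive to phase wrapping.
    """
    best_q, best_cost = 0, float("inf")
    for q in range(n_cols):
        cost = 0.0
        for row_prev, row_new in zip(p_prev, p_new):
            for k, (a, b) in enumerate(zip(row_prev, row_new)):
                residual = b - a + 2.0 * math.pi * q * k / n_cols
                cost += 1.0 - math.cos(residual)
        if cost < best_cost:
            best_q, best_cost = q, cost
    # ASSUMPTION: a shift of q columns maps to an angle of 2*pi*q/n_cols.
    return best_q, 2.0 * math.pi * best_q / n_cols


# Toy 2 x 8 image and a copy rotated by 3 columns (hypothetical data).
image = [[3, 1, 4, 1, 5, 9, 2, 6],
         [2, 7, 1, 8, 2, 8, 1, 8]]
q_true = 3
rotated = [row[-q_true:] + row[:-q_true] for row in image]

p_prev = [row_phases(row, 4) for row in image]
p_new = [row_phases(row, 4) for row in rotated]
q_est, phi = visual_compass(p_prev, p_new, n_cols=8)
print(q_est, phi)
```

With real images taken at nearby but different positions the shift theorem holds only approximately, so the minimum cost is not zero and the estimate is an approximation of the rotation, in line with the text above.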

4 TOPOLOGICAL MAP CREATION

We build a graph-based map in which, whenever a new image is captured, a new node is added to the map, and the

topological relationships with the previous node are

computed using the global appearance information of

the scenes. With our procedure, this computation is

made online, as the robot is going through the envi-

ronment, in a simple and robust way.

We consider that our map is composed of a set of nodes $L = \{l_1, l_2, \ldots, l_N\}$. Each node $l_j$ is represented by an associated omnidirectional image $I_j \in \mathbb{R}^{N_x \times N_y}$ and a Fourier descriptor that describes the global appearance of the omnidirectional image, composed of a modules matrix $d_j \in \mathbb{R}^{N_x \times k_1}$ and a phases matrix $p_j \in \mathbb{R}^{N_x \times k_2}$. Also, with the algorithm implemented, we can compute the position $(l^j_x, l^j_y)$ and the orientation $l^j_\theta$ of each node in the map; thus $l_j = \{(l^j_x, l^j_y, l^j_\theta), d_j, p_j, I_j\}$ (fig. 1).

We consider that the robot captures a new image at time $t+1$ and then the Fourier descriptors $d_{t+1}$ and $p_{t+1}$ are computed. Comparing them with the descriptors of the previously captured image, $d_t$ and $p_t$, we can find the topological relationships between these two nodes. We can separate the computation of the robot position and the orientation at time $t+1$, $(l^{t+1}_x, l^{t+1}_y, l^{t+1}_\theta)$, thanks to the shift theorem (eq. 1).

In the surroundings of the point where one image is taken, the distance between Fourier Signatures is approximately proportional to the actual geometrical distance (Fernández et al., 2010). To compute the difference between the appearance of two scenes, we use the Euclidean distance between the modules of the Fourier signature. If $d_i$ is the Fourier signature of the image $I_i$ and $d_j$ is the Fourier signature of the image $I_j$, then the distance between scenes $i$ and $j$ is:

$$D_{i,j} = \sqrt{\sum_{u=0}^{N_x} \sum_{v=0}^{k_1} \left( d_i(u,v) - d_j(u,v) \right)^2} \qquad (2)$$
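Eq. 2 can be written as a few lines of code. The sketch below assumes the modules matrices are stored as lists of rows of equal shape; the function name `signature_distance` and the sample values are hypothetical.

```python
import math


def signature_distance(d_i, d_j):
    """Euclidean distance between two modules matrices of equal shape (Eq. 2)."""
    if len(d_i) != len(d_j) or any(len(a) != len(b) for a, b in zip(d_i, d_j)):
        raise ValueError("modules matrices must have the same shape")
    return math.sqrt(
        sum(
            (a - b) ** 2
            for row_i, row_j in zip(d_i, d_j)
            for a, b in zip(row_i, row_j)
        )
    )


# Two toy 2 x 2 modules matrices (hypothetical values).
d_a = [[0.0, 3.0], [4.0, 0.0]]
d_b = [[0.0, 0.0], [0.0, 0.0]]
dist = signature_distance(d_a, d_b)
print(dist)
```

Because only the modules enter the sum, the value does not change when one of the two images is rotated in the ground plane, which is what allows position and orientation to be treated separately.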


On the other hand, thanks to the visual compass

implemented, we can estimate the relative orientation

between images. After this process, the position of

the current node is computed from the previous node

as:

$$l^{t+1}_x = l^t_x + D_{t+1,t} \cdot \cos(\theta_{t+1,t}) \qquad (3)$$

$$l^{t+1}_y = l^t_y + D_{t+1,t} \cdot \sin(\theta_{t+1,t}) \qquad (4)$$

$$l^{t+1}_\theta = l^t_\theta + \phi_{t+1,t} \qquad (5)$$

These relationships are shown graphically in fig.

1.

Figure 1: Position and orientation of the new node in the

map computed incrementally from the previous node.
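The incremental update of Eqs. 3 to 5 can be sketched as a dead-reckoning step. The text does not define $\theta_{t+1,t}$ explicitly, so the sketch assumes it is the updated heading $l^t_\theta + \phi_{t+1,t}$; this interpretation, the `Pose` type and the function name `next_pose` are assumptions, not the authors' stated implementation.

```python
import math
from typing import NamedTuple


class Pose(NamedTuple):
    x: float
    y: float
    theta: float  # heading in radians


def next_pose(prev: Pose, distance: float, phi: float) -> Pose:
    """Place the new node from the previous one (Eqs. 3 to 5).

    distance: D_{t+1,t}, the descriptor distance of Eq. 2.
    phi: relative rotation given by the visual compass.
    """
    # ASSUMPTION: theta_{t+1,t} in Eqs. 3 and 4 is taken as the new heading.
    heading = prev.theta + phi
    return Pose(
        prev.x + distance * math.cos(heading),
        prev.y + distance * math.sin(heading),
        heading,
    )


# Three hypothetical steps: straight ahead, then two left turns of 90 degrees.
pose = Pose(0.0, 0.0, 0.0)
trajectory = [pose]
for distance, phi in [(1.0, 0.0), (1.0, math.pi / 2), (1.0, math.pi / 2)]:
    pose = next_pose(pose, distance, phi)
    trajectory.append(pose)
print(trajectory[-1])
```

Since $D_{t+1,t}$ is measured in descriptor space and is only approximately proportional to the geometrical distance, the coordinates produced this way are defined up to a scale factor.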

Once the topological map is built from the visual odometry data, we need a mechanism to test the performance of our approach. We have decided to evaluate how similar the layout of the resulting map is to the actual map (ground truth or real map) of the images captured. With this aim, we use a method based on shape analysis, as in (Payá et al., 2010). As a result of this analysis, a parameter $\mu \in [0, 1]$ is obtained. $\mu$ is a measure of the shape correspondence between the set of points where the original images were taken and the set of points in the map. The lower $\mu$ is, the more similar these sets are. We call this parameter the shape difference throughout the paper. We use it only to assess the feasibility of our appearance-based visual odometry, and its use is possible because we know the coordinates of the points in the original map (ground truth).
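A measure with these properties can be sketched with a 2D Procrustes residual, the kind of analysis the conclusions of the paper refer to. The sketch assumes ordered point correspondences and a similarity transform (translation, uniform scale and rotation, no reflection), and uses complex numbers to obtain the optimal alignment in closed form. It is an illustration of the idea, not necessarily the exact measure used by the authors; the name `shape_difference` and the sample points are hypothetical.

```python
import math


def _normalise(points):
    """Centre a 2D point list and scale it to unit norm, as complex numbers."""
    z = [complex(x, y) for x, y in points]
    centre = sum(z) / len(z)
    z = [p - centre for p in z]
    norm = math.sqrt(sum(abs(p) ** 2 for p in z))
    if norm == 0.0:
        raise ValueError("all points coincide")
    return [p / norm for p in z]


def shape_difference(reference, estimate):
    """Procrustes residual in [0, 1]; 0 means identical shapes.

    After centring and normalising both sets, the best complex factor c
    (rotation and scale) minimising sum |z - c*w|^2 leaves a residual of
    1 - |<z, w>|^2.
    """
    if len(reference) != len(estimate) or len(reference) < 2:
        raise ValueError("need two point lists of equal length (>= 2)")
    z = _normalise(reference)
    w = _normalise(estimate)
    inner = sum(a * b.conjugate() for a, b in zip(z, w))
    return min(1.0, max(0.0, 1.0 - abs(inner) ** 2))


# Ground truth: unit square. First estimate: same square rotated by 90 degrees,
# scaled by 2 and translated. Second estimate: one corner displaced.
ground_truth = [(0, 0), (1, 0), (1, 1), (0, 1)]
similar_map = [(5, -3), (5, -1), (3, -1), (3, -3)]
distorted_map = [(0, 0), (1, 0), (1, 1), (0, 2)]

mu_similar = shape_difference(ground_truth, similar_map)
mu_distorted = shape_difference(ground_truth, distorted_map)
print(mu_similar, mu_distorted)
```

The first value is numerically zero because the estimate differs from the ground truth only by scale, rotation and translation, the transformations a topological map is insensitive to; the second is strictly positive.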

5 EXPERIMENTS

To carry out the experiments, we have used two different sets of omnidirectional images taken with a catadioptric system consisting of a CCD camera and a hyperbolic mirror. The first set was captured in an office environment while the robot followed the trajectory shown in fig. 2 (ground truth), which includes a loop closing. This set is composed of 200 images with a distance of 10 cm between images. The second set (fig. 3) was captured in a laboratory environment. It is composed of 150 images, and the image acquisition was automated so that a new image is captured when the difference with the previous one exceeds a threshold.
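The automated acquisition of the second set can be pictured as a simple thresholding rule. The text does not say which difference measure or threshold value was used, so the sketch below assumes a Euclidean distance between flattened descriptors and an arbitrary threshold; the name `select_frames` and all values are hypothetical.

```python
import math


def select_frames(descriptors, threshold):
    """Indices of the frames that would be stored.

    The first frame is always kept. A later frame is kept when its
    descriptor is farther than `threshold` from the last kept one.
    """
    if not descriptors:
        return []
    kept = [0]
    for idx in range(1, len(descriptors)):
        reference = descriptors[kept[-1]]
        dist = math.sqrt(
            sum((a - b) ** 2 for a, b in zip(descriptors[idx], reference))
        )
        if dist > threshold:
            kept.append(idx)
    return kept


# Hypothetical one-component descriptors of five consecutive frames.
stream = [[0.0], [0.4], [1.1], [1.3], [2.5]]
kept = select_frames(stream, threshold=1.0)
print(kept)  # [0, 2, 4]
```

With such a rule the spacing between stored images adapts to how fast the appearance changes, rather than being fixed as in the first set.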

Figure 2: Trajectory followed by the robot when capturing

the first set of images.

Figure 3: Trajectory followed by the robot when capturing

the second set of images.

We have designed a complete set of experiments

in order to test the validity of the global appearance-

based approach in topological map building. With this

aim, we study some features that define the feasibility

of the procedures, such as the accuracy of the map

built (how similar it is to the actual map)

and the computational cost of the method.

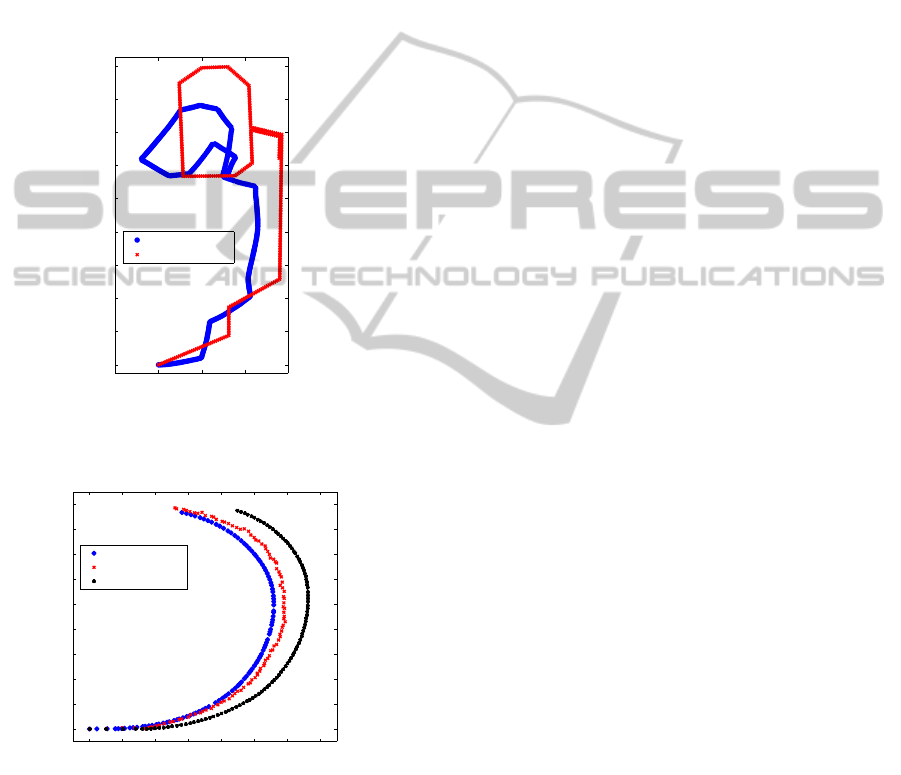

Fig. 4 shows an example of the map computed

with the first set of images and the actual map (ground

truth). This map has been built using all the images of

the set (geometrical distance between images equals


10 cm), $k_1 = 64$ module components to compute the distance between Fourier descriptors and $k_2 = 64$ phase components to compute relative orientations in the visual compass. Fig. 5 shows an example with the second set of images. The visual odometry map has been built with all the images in the set, and $k_1 = k_2 = 64$. In this case, we compare it with the actual map and with the map computed using the odometry of the robot. The map obtained with our visual odometry algorithm clearly outperforms the map obtained with the odometry of the robot.

[Figure 4 plot. Axes: X (m), Y (m). Series: Visual Odometry, Ground Truth.]

Figure 4: Example of map built with the visual odometry and the first set of images and ground truth.

[Figure 5 plot. Axes: X (m), Y (m). Series: Visual Odometry, Ground Truth, Robot Odometry.]

Figure 5: Example of map built with the visual odometry and the second set of images, ground truth and map built using the robot odometry.

In the set of experiments we have carried out to study the performance of our algorithm, we have tested the influence of the number of module components used to compute the distance $D_{t+1,t}$ ($k_1$) and the number of components used to compute the phase difference ($k_2$). We also test the influence of the geometrical distance between the points where the images were captured.

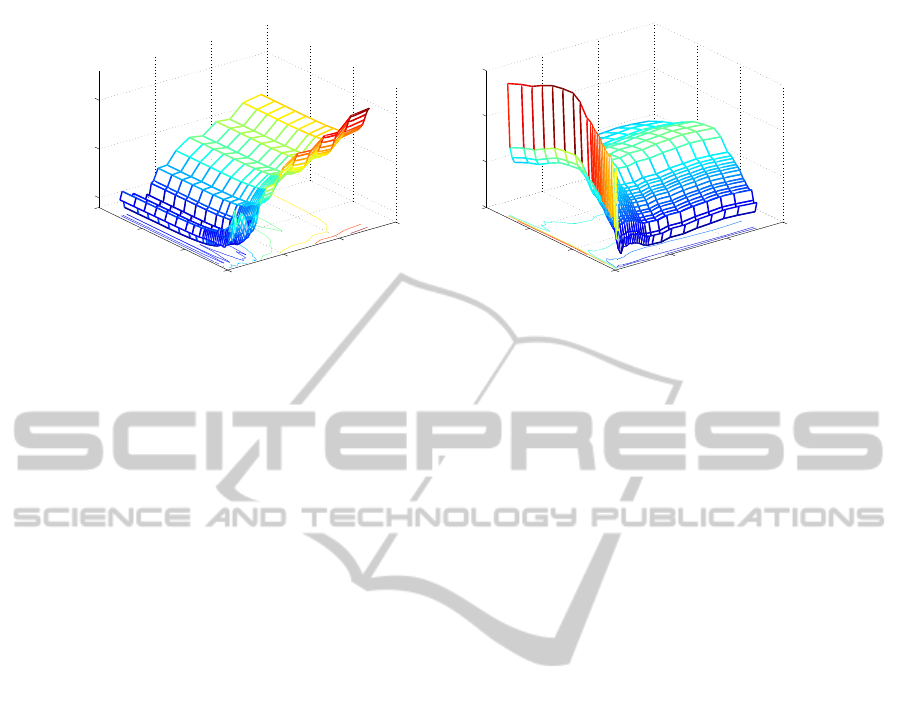

Fig. 6 (a) shows the shape difference between the resulting map and the actual map when using the first set of images. Fig. 6 (b) shows the same result when building the map with the second set of images. When we use all the images of set 1, the minimum shape difference is around 0.04, obtained when $k_1 = 26$ and $k_2 = 20$. In set 2, the minimum is 0.015, obtained when $k_1 = 4$ and $k_2 = 8$. The shape difference tends to increase with $k_2$, because the first components contain the main information, so the high frequency components may be adding noise to the computation. As far as $k_1$ is concerned, the tendency is not clear but, in general, the shape difference is quite insensitive to this parameter.

To test the feasibility of the presented method for working in real time, we performed a series of experiments in which we obtained the average time needed at each step depending on the number of components both in magnitude ($k_1$) and phase ($k_2$). When we use all the images of set 1, the average time needed at each step to place the new location in the map is around 0.153 seconds when $k_1 = 26$ and $k_2 = 20$. In set 2, this time is 0.054 seconds when $k_1 = 4$ and $k_2 = 8$.

6 CONCLUSIONS

In this paper we have studied the applicability of the

approaches based on the global appearance of omni-

directional images in topological mapping, using a set

of images a robot has captured when traversing a tra-

jectory in an environment. The main contributions of

the paper include the development of a visual com-

pass that allows building a map of the environment

online, while the robot is going through the environ-

ment, the development of a method to compare the

accuracy of the layout of the map computed and the

study of the influence of the parameters of the pro-

cess both in the layout of the resulting map and in the

processing time.

All the experiments have been carried out with two sets of omnidirectional images captured by a catadioptric system mounted on the mobile robot. Each scene is described through a Fourier-based signature that performs well in terms of memory and processing time, is invariant to ground-plane rotations and is an inherently incremental method.

We present a methodology to build graph-based maps of the environment. As we use a topological approach, these maps represent the real world except for a scale factor and a rotation. To make a homogeneous comparison between the map computed and


[Figure 6 plots. Panels (a) and (b): surface of µ over k_1 and k_2.]

Figure 6: Shape difference versus number of module components ($k_1$) and phase components ($k_2$) for (a) the set of images 1 and (b) the set of images 2.

the real map, we have developed a method based on Procrustes analysis. As shown in the results, when the parameters of the system are correctly tuned, accurate results can be obtained while maintaining a reasonable computational cost.

We are now working on this approach to build a topological SLAM algorithm (Simultaneous Localization and Map Building) using just the global appearance of omnidirectional images and different map topologies.

ACKNOWLEDGEMENTS

This work has been supported by the Spanish government through the project DPI2010-15308, "Exploración Integrada de Entornos Mediante Robots Cooperativos para la Creación de Mapas 3D Visuales y Topológicos que Puedan ser Usados en Navegación con 6 Grados de Libertad".

REFERENCES

Bay, H., Ess, A., Tuytelaars, T., and Gool, L. V. (2008). Speeded-up robust features (SURF). Comput. Vis. Image Underst.

Fernández, L., Gil, A., Payá, L., and Reinoso, O. (2010). An evaluation of weighting methods for appearance-based Monte Carlo localization using omnidirectional images. In Proc. of Workshop on Omnidirectional Robot Vision ICRA.

Gil, A., Reinoso, O., Ballesta, M., Juliá, M., and Payá, L. (2010). Estimation of visual maps with a robot network equipped with vision sensors. Sensors.

Menegatti, E., Maeda, T., and Ishiguro, H. (2004a). Image-based memory for robot navigation using properties of omnidirectional images. Robot. Auton. Syst.

Menegatti, E., Zoccarato, M., Pagello, E., and Ishiguro, H. (2004b). Image-based Monte-Carlo localisation with omnidirectional images. Robot. Auton. Syst.

Murillo, A. C., Guerrero, J. J., and Sagues, C. (2007). SURF features for efficient robot localization with omnidirectional images. In Proc. of ICRA.

Nistér, D., Naroditsky, O., and Bergen, J. (2006). Visual odometry for ground vehicle applications. Journal of Field Robot.

Payá, L., Fernández, L., Gil, A., and Reinoso, O. (2010). Map building and Monte Carlo localization using global appearance of omnidirectional images. Sensors.

Payá, L., Fernández, L., Reinoso, Ó., Gil, A., and Úbeda, D. (2009). Appearance-based dense maps creation. In Proc. of ICINCO.

Scaramuzza, D., Fraundorfer, F., and Pollefeys, M. (2010). Closing the loop in appearance-guided omnidirectional visual odometry by using vocabulary trees. Robot. Auton. Syst.

Scaramuzza, D. and Siegwart, R. (2008). Appearance-guided monocular omnidirectional visual odometry for outdoor ground vehicles. IEEE Trans. on Robot.

Tully, S., Kantor, G., Choset, H., and Werner, F. (2009). A multi-hypothesis topological SLAM approach for loop closing on edge-ordered graphs. In Proc. of IEEE/RSJ.

Valgren, C. and Lilienthal, A. (2010). SIFT, SURF and seasons: Appearance-based long-term localization in outdoor environments. Robot. Auton. Syst.
