A MULTI-MODAL VIRTUAL ENVIRONMENT TO TRAIN FOR JOB INTERVIEW

Hamza Hamdi (1,2), Paul Richard (1), Aymeric Suteau (1) and Mehdi Saleh (2)

(1) Laboratoire d’Ingénierie des Systèmes Automatisés (LISA), Université d’Angers, 62 Avenue ND du Lac, Angers, France

(2) I-MAGINER, 8 rue Monteil, Nantes, France

Keywords:

Virtual reality, Human-computer interaction, Emotion recognition, Affective computing, Job interview.

Abstract:

This paper presents a multi-modal interactive virtual environment (VE) for job interview training. The proposed platform aims to train candidates (students, job hunters, etc.) to better master their emotional state and behavioral skills. The candidates will interact with a virtual recruiter represented by an Embodied Conversational Agent (ECA). Both emotional and behavioral states will be assessed using human-machine interfaces and biofeedback sensors. Contextual questions will be asked by the ECA to measure the technical skills of the candidates. Collected data will be processed in real time by a behavioral engine to allow a realistic multi-modal dialogue between the ECA and the candidate. This work represents a socio-technological breakthrough, opening the way to new possibilities in areas such as professional or medical applications.

1 INTRODUCTION

Emotion modeling has been a research topic in computer science for about twenty years (Picard, 1995). Interest has grown with breakthroughs in neurophysiology and psychology that established a close connection between emotion, rationality and decision making (Damasio, 1994). Thanks to their expressive dimension, emotions provide a privileged basis for modeling Embodied Conversational Agents (ECAs). Because of their "embodiment", these agents are able to communicate not only through spoken language but also through gestures and facial expressions. Another interesting but challenging topic is real-time multi-modal communication between ECAs and humans. Different systems have been developed in the last decade (Helmut et al., 2005). However, none of these systems allows a realistic, immersive, multi-modal, emotion-based dialogue between an ECA and a human.

In this paper, we describe a multi-modal interactive virtual environment (VE) for job interview training. The proposed platform aims to train candidates (students, job hunters, etc.) to better master their emotional state and behavioral skills. The candidates will interact with an Embodied Conversational Agent (ECA). The first goal is to measure the emotional and behavioral states of the candidates using different human-machine interfaces such as Brain Computer Interfaces (BCI), eye tracking systems, and biosensors. The collected data will make it possible to analyze the nonverbal part of the communication, such as posture, facial expressions, gestures and emotions.

In the next section, we survey related work on the classification, recognition and modeling of human emotions. In Section 3, we present the platform architecture, the human-machine interfaces and the proposed multi-modal approach to emotion recognition. The paper ends with a conclusion that provides some ideas for future work.

2 RELATED WORK

2.1 Classification of Emotions

An emotion can be defined as a "hypothetical construct indicating a reaction process of an organism to a significant event" (Scherer, 2000). Emotions are now recognized as involving other components such as cognitive and physiological changes, action tendencies (e.g. running away) and motor expressions. Each of these components has various functions. Darwin postulated the existence of a finite number of emotions that are present in all cultures and have an adaptive function (Darwin, 1872). This postulate was subsequently confirmed by Ekman, who divided emotions into two classes. The first class contains the primary emotions (joy, sadness, anger, fear, disgust, surprise), which are natural responses to a given stimulus and ensure the survival of the species. The second class contains emotions that evoke a mental image which correlates with the memory of a primary emotion (Ekman, 1999).

Hamdi H., Richard P., Suteau A. and Saleh M.

A MULTI-MODAL VIRTUAL ENVIRONMENT TO TRAIN FOR JOB INTERVIEW.

DOI: 10.5220/0003401805510556

In Proceedings of the 1st International Conference on Pervasive and Embedded Computing and Communication Systems (SIMIE-2011), pages 551-556

ISBN: 978-989-8425-48-5

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

Emotions can be represented by discrete categories (e.g. "anger") or defined by continuous dimensions such as "Valence", "Activation" or "Dominance". The "Valence" dimension describes the "negative" to "positive" axis. The "Activation" dimension describes the "not very active" to "very active" axis. The "Dominance" dimension represents the feeling of control. These three dimensions were combined into a space called PAD (Pleasure, Arousal, Dominance), originally defined by Mehrabian (Mehrabian, 1996).
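The dimensional representation can be illustrated with a minimal Python sketch that stores an emotion as a point in PAD space and maps it to one of the eight octants of that space. The class name `PadPoint`, the [-1, 1] scaling and the `octant` helper are assumptions made for this example. The octant labels follow Mehrabian's temperament naming and serve only as an illustration; they are not part of the proposed platform.

```python
from dataclasses import dataclass

# Labels for the eight PAD octants, keyed by the signs of (P, A, D).
# The naming follows Mehrabian's temperament octants (illustrative only).
OCTANTS = {
    (True, True, True): "exuberant",
    (False, False, False): "bored",
    (True, True, False): "dependent",
    (False, False, True): "disdainful",
    (True, False, True): "relaxed",
    (False, True, False): "anxious",
    (True, False, False): "docile",
    (False, True, True): "hostile",
}


@dataclass(frozen=True)
class PadPoint:
    """An emotional state as a point in PAD space (assumed range [-1, 1])."""
    pleasure: float   # valence: negative to positive
    arousal: float    # activation: not very active to very active
    dominance: float  # feeling of control

    def octant(self) -> str:
        """Return the label of the octant that contains this point."""
        key = (self.pleasure >= 0, self.arousal >= 0, self.dominance >= 0)
        return OCTANTS[key]


if __name__ == "__main__":
    print(PadPoint(-0.5, 0.8, -0.3).octant())
```

A discrete category can thus be derived from a continuous measurement, which is one way the two representations described above can coexist in a single system.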

2.2 Emotion Recognition

2.2.1 Facial Expression Recognition

Facial expressions provide important information about emotions. Therefore, several approaches based on facial expression recognition have been proposed to classify human emotional states (Pantic and Rothkrantz, 2003). The features used are typically based on the local spatial position or displacement of specific points and regions of the face. Tian et al. (Tian et al., 2000) attempted to recognize Action Units (AUs), developed by Ekman and Friesen (Ekman and Friesen, 1978), using permanent and transient facial features such as the lips, the nasolabial fold and wrinkles. Geometric models were used to locate the shapes and appearances of these features. They reported a precision of 96%. Hammal proposed an approach based on the combination of two models for the segmentation of emotions and the dynamic recognition of facial expressions (Hammal and Massot, 2010). For a complete review of recent emotion recognition systems based on facial expressions, readers are referred to (Calvo and D’Mello, 2010).

2.2.2 Speech Recognition

Recognition of the emotional state is a topic of growing interest in the domain of speech analysis. Several approaches that aim to recognize emotions from speech have been reported (Pantic and Rothkrantz, 2003) (Scherer, 2003) (Calvo and D’Mello, 2010). Most researchers have used global prosodic features as acoustic cues for emotion recognition. Utterance-level statistics are computed, e.g. the mean, standard deviation, maximum and minimum of the pitch and energy contours of the utterance. Roy and Pentland classified emotions using a Fisher linear classifier (Roy and Pentland, 1996). Using short sentences, they recognized two kinds of emotions: approval and disapproval. They obtained a precision ranging from 65% to 88%.
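The utterance-level statistics mentioned above can be sketched in a few lines of Python. This is a minimal illustration, assuming that the pitch and energy contours have already been extracted frame by frame by some front end; the function names, the use of `None` for unvoiced frames and the feature naming scheme are assumptions of this example, not the method of any cited work.

```python
from statistics import mean, pstdev


def contour_statistics(contour):
    """Return mean, standard deviation, maximum and minimum of one contour.

    Frames marked as None (e.g. unvoiced frames of a pitch track) are skipped.
    """
    values = [v for v in contour if v is not None]
    if not values:
        raise ValueError("contour contains no usable frames")
    return {
        "mean": mean(values),
        "std": pstdev(values),
        "max": max(values),
        "min": min(values),
    }


def prosodic_features(pitch_hz, energy):
    """Build a flat feature dictionary for one utterance."""
    features = {}
    for name, contour in (("pitch", pitch_hz), ("energy", energy)):
        for stat, value in contour_statistics(contour).items():
            features[f"{name}_{stat}"] = value
    return features


if __name__ == "__main__":
    # Hypothetical contours for a short utterance.
    print(prosodic_features([100.0, None, 120.0, 140.0], [0.2, 0.4, 0.6]))
```

The resulting fixed-length feature dictionary is the kind of input a linear classifier could consume, regardless of the duration of the utterance.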

2.2.3 Emotion Recognition from Physiological Signals

The analysis of physiological signals is another possible approach to emotion recognition (Healey and Picard, 2000) (Picard et al., 2001). Several types of physiological signals can be used to recognize emotions. For example, heart rate, skin conductance, muscle activity (EMG), skin temperature variations and blood pressure variations are regularly used in this context (Lisetti and Nasoz, 2004) (Villon, 2007). Each signal is usually studied in conjunction with others.

2.3 Multi-modal Emotion Recognition

Multi-modal emotion recognition requires the fu-

sion of collected data. Physiological signals are

then mixed with other signals collected through

human-machine interfaces such as video or in-

frared cameras (gestures, etc.), microphones (speech),

brain computer interfaces (BCIs) (Lisetti and Nasoz,

2004) (Sebe et al., 2005). Multi-modal information

fusion may be performed at different levels. Usually

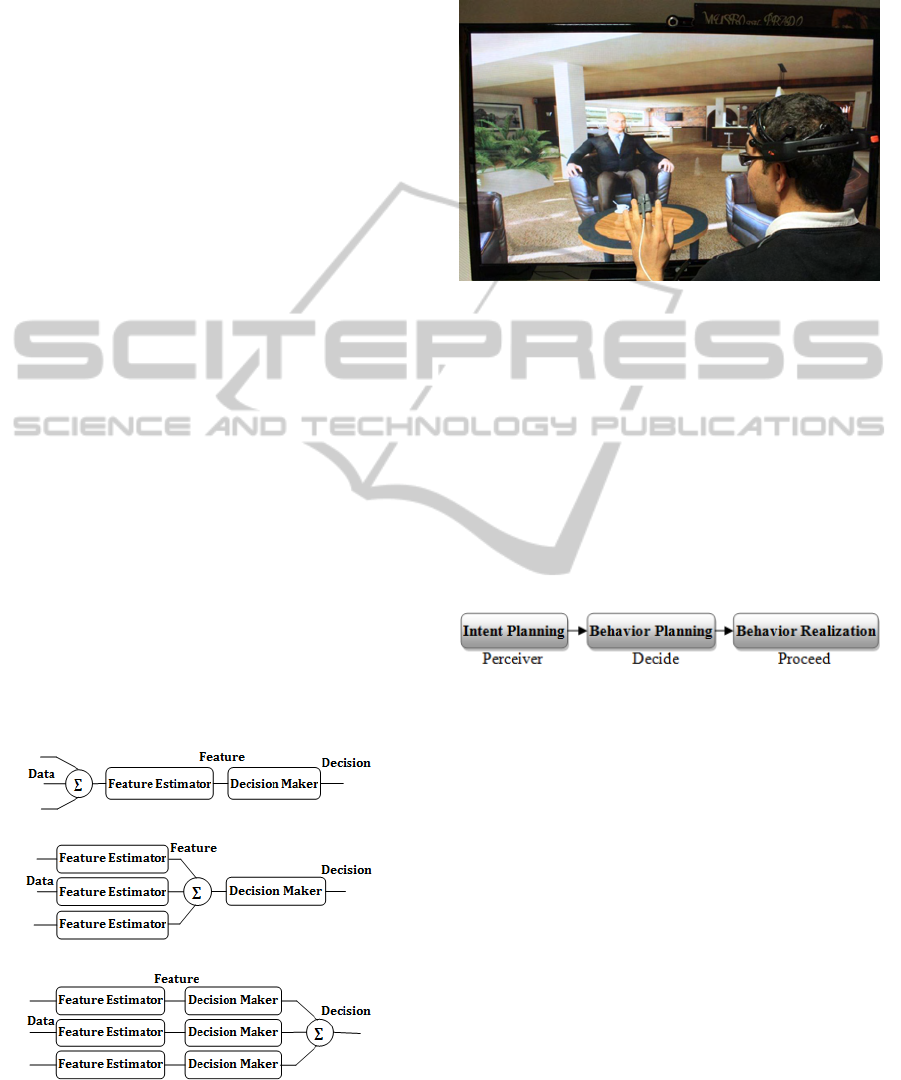

the three following levels are considered (see Fig. 1):

Signal level, Feature level, and Decision or Concep-

tual level.

Fusing information at the signal level means mixing two or more signals, which are generally electrical. The signals are fused before extracting the features required by the decision maker. This method cannot be applied to signals from different modalities because they differ in nature. However, it can improve accuracy when multiple sensors are used for a single modality (Paleari and Lisetti, 2006) (Fig. 1a).

Fusing information at the feature level means combining the features produced by different signal processors. Features extracted from each modality are fused before being passed to the decision maker module. This is the fusion technique used by humans when combining information from different modalities (Pantic and Rothkrantz, 2003). The input signals of the decision maker have to be synchronized, because the signals are not all present at the same time and may be out of phase (Fig. 1b).

PECCS 2011 - International Conference on Pervasive and Embedded Computing and Communication Systems

Combining information at the conceptual level does not mean mixing features or signals but directly combining the extracted semantic information. The decisions from the individual modalities are combined using either a priori rules or machine learning techniques. With this technique, each modality can be extended independently before the various decisions are finally put together (Fig. 1c).

Decision level fusion of multi-modal information is preferred by most researchers. Busso et al. (Busso et al., 2004) compared the feature level and decision level fusion techniques and observed that the overall performance of the two approaches is the same.
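The difference between feature level and decision level fusion can be made concrete with a minimal Python sketch. It assumes that each modality already provides either a feature vector or a dictionary of per-class scores. The function names, the modality names and the weighted-sum rule used for decision fusion are assumptions of this example; other a priori rules or a learned combiner could be substituted.

```python
def feature_level_fusion(features_by_modality):
    """Concatenate per-modality feature vectors into one vector.

    The fused vector is what a single classifier would receive.
    Modalities are visited in sorted order so the layout stays stable.
    """
    fused = []
    for modality in sorted(features_by_modality):
        fused.extend(features_by_modality[modality])
    return fused


def decision_level_fusion(scores_by_modality, weights):
    """Combine per-modality class scores with a normalized weighted sum.

    Each modality has already been classified on its own; only the
    resulting decisions (scores per emotion label) are merged here.
    """
    total = sum(weights[m] for m in scores_by_modality)
    fused = {}
    for modality, scores in scores_by_modality.items():
        w = weights[modality] / total
        for label, score in scores.items():
            fused[label] = fused.get(label, 0.0) + w * score
    best = max(fused, key=fused.get)
    return best, fused


if __name__ == "__main__":
    # Hypothetical scores from two independent classifiers.
    scores = {
        "face": {"joy": 0.7, "anger": 0.3},
        "speech": {"joy": 0.4, "anger": 0.6},
    }
    print(decision_level_fusion(scores, {"face": 0.75, "speech": 0.25}))
```

In the decision level variant a modality can be added or retrained without touching the others, which matches the independence property described above.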

Our goal is to propose a model that analyzes various signals and to build a real-time emotion detection system based on multi-modal fusion. We aim to identify the six universal emotions proposed by Ekman and Friesen (Ekman and Friesen, 1978) (anger, disgust, fear, joy, sadness and surprise), to which we add contempt, stress, concentration and excitement.

3 SYSTEM OVERVIEW

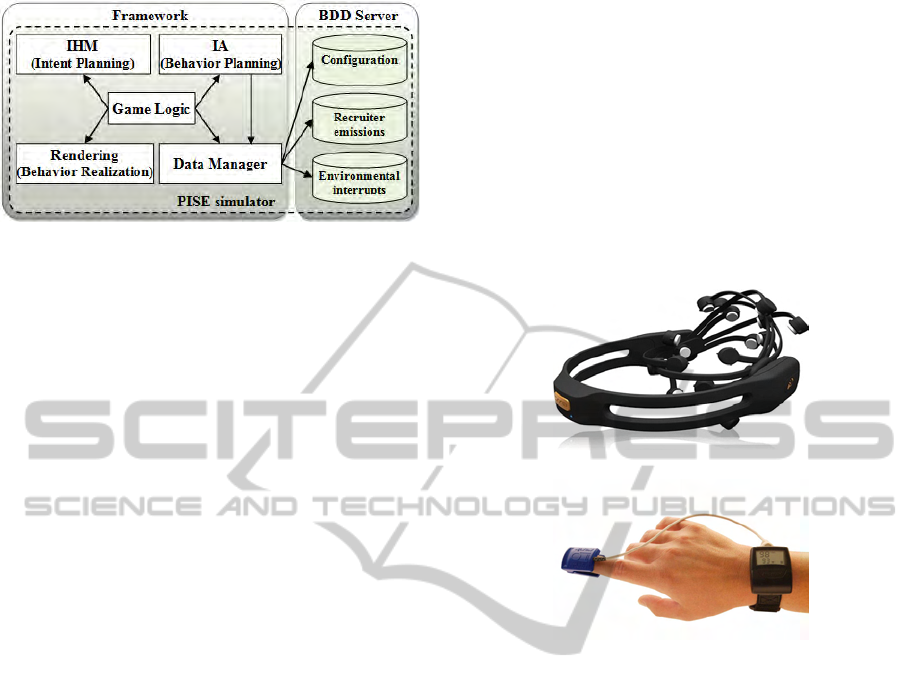

3.1 System Architecture

The proposed platform aims to support real-time simulation (Fig. 2) that allows emotion-based face-to-face dialogue between an ECA (the virtual recruiter) and a human (the candidate). Different virtual environments (office, lobby, bar, etc.) can be selected. In addition, the selected ECA can have a specific personality and behavior (gentle, aggressive, passive, etc.).

Figure 1: Three levels for multimodal fusion (Sharma et al., 1998): a) data or signal level, b) feature level, c) decision level.

Figure 2: Snapshot of interview simulation.

The ECA is able to communicate using gestures and facial expressions. The interview follows a script that includes a predefined number of topics to be addressed. In order to make the simulation more realistic and less predictable, the ECA will adapt the predefined scenario depending on the emotional state and current behavior of the candidate. The proposed platform is based on a specific architecture derived from the SAIBA (Situation, Agent, Intention, Behavior, Animation) framework (Vilhjalmsson et al., 2007) (see Fig. 3).

Figure 3: Basic concept of SAIBA Framework.

From a high-level point of view, the SAIBA model is split into three main modules:

• The perception module (Intent Planning), which allows the ECA to obtain information about its environment and its interlocutor,

• The decision module (Behavior Planning), which chooses the best reaction according to what the ECA perceives as well as other parameters such as its memory,

• The action or rendering module (Behavior Realization), which generates the behavior and the sentences selected by the previous module.
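The three modules can be pictured as a perceive, decide, act loop. The following Python sketch is a deliberately simplified illustration: the class `Recruiter`, the rule that reacts to a stressed candidate and the example sentences are hypothetical and do not describe the behavioral engine of the platform.

```python
from dataclasses import dataclass, field


@dataclass
class Percept:
    """What the ECA knows about the candidate at one dialogue turn."""
    emotion: str  # estimated emotional state, e.g. "stress"
    answer: str   # transcribed answer of the candidate


@dataclass
class Recruiter:
    """Toy ECA with perception, decision and action stages."""
    topics: list
    memory: list = field(default_factory=list)

    def perceive(self, emotion, answer):
        """Perception: gather information and remember it."""
        percept = Percept(emotion, answer)
        self.memory.append(percept)
        return percept

    def decide(self, percept):
        """Decision: pick a reaction from the percept and the script."""
        if percept.emotion == "stress":
            return {"gesture": "nod", "sentence": "Take your time."}
        if self.topics:
            return {"gesture": "neutral", "sentence": self.topics.pop(0)}
        return {"gesture": "smile", "sentence": "Thank you, we are done."}

    def act(self, behavior):
        """Action: render the selected gesture and sentence."""
        return f"[{behavior['gesture']}] {behavior['sentence']}"


if __name__ == "__main__":
    eca = Recruiter(["Tell me about yourself."])
    print(eca.act(eca.decide(eca.perceive("stress", "Well..."))))
```

Keeping the three stages as separate methods mirrors the modular split: the decision rule can change without affecting how percepts are collected or how behaviors are rendered.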

The system architecture is not limited to an ECA: these three modules are integrated into a larger set of interconnected modules (see Fig. 4). The AI module comprises the decision engine of the ECA (Behavior Planning) but also the AI of the environment. Similarly, the graphical and audio rendering engine contains the rendering engine of our virtual recruiter but also manages the display of the 3D environment and the graphical user interfaces (connection screen, menus, etc.).

Figure 4: System architecture.

3.2 Human Computer Interfaces

Human-machine interfaces have two main objectives. The first is to collect data related to the behavioral and emotional states of the candidate. These data will be used directly to control the reactions of the ECA during the interview session. The main challenge is to identify and classify the behavior and the emotional state of a candidate so that the ECA can interpret them. The second objective is related to the ergonomics of the simulation: human-machine interfaces have to be non-intrusive in order to enable a high level of immersion and not constrain the user's movements. The following interfaces and sensors have been selected:

• Brain computer interface: EPOC (Fig. 5a);

• Biofeedback sensor: Nonin (Fig. 5b);

• Microphone and webcam.

3.2.1 Modalities for Emotion Recognition

Human beings are able to communicate and express emotions through various channels involving facial expressions, speech, and static and dynamic gestures (Picard, 1995). Different signals and input modalities have been considered:

1. Physiological signals:

• Facial electromyography (EMG): facial expression,

• Electrocardiogram (ECG): heartbeat-related information,

• Electroencephalography (EEG): discharge of neurons in the brain,

• Galvanic skin response (GSR): electrical resistance of the skin.

2. Speech: the user’s emotional state is estimated through speech analysis (pitch, tone, speed).

3. Text: the user’s emotional state is estimated through textual content.

4. Gestures: the user’s emotional state is estimated through static and dynamic gestures.

Figure 5: Human-machine interfaces: (a) Emotiv EPOC, (b) Nonin oximeter.

3.2.2 Annotation and Standardization of Emotions

Emotion Markup Language (EmotionML) uses XML to represent data and annotation information. XML (Extensible Markup Language) is a general-purpose specification for creating custom markup languages. It includes a set of rules for encoding documents in a machine-readable format, allows users to define their own markup elements, and makes it easier for information systems to share structured data. Luneski and Bamidis proposed an XML annotation technique for emotional data (Luneski and Bamidis, 2007). Wang et al. also proposed ecgML (ECG Markup Language) to annotate ECG data during acquisition (Wang et al., 2003).

The objective of EmotionML is to transcribe the expression of emotions in XML. It can represent several types of emotions (basic emotions, secondary emotions and combinations of several emotions). It also makes it possible to describe properties of emotions such as intensity. This language is not specific to a model or an approach; it is rather simple and can be used to define tags such as <category>, <appraisals> and <dimensions>. EmotionML will be implemented as a communication protocol in our framework.
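To give an idea of what such an annotation can look like, the following Python sketch builds a small EmotionML document with the standard library. It is only an illustration under stated assumptions: the namespace, the `version` attribute and the `big6` vocabulary URI are taken from the W3C EmotionML 1.0 Recommendation, which was finalized after this paper and may differ from the draft element names quoted above, and the function name `annotate` is hypothetical.

```python
import xml.etree.ElementTree as ET

# Assumed values from the W3C EmotionML 1.0 Recommendation.
EMOTIONML_NS = "http://www.w3.org/2009/10/emotionml"
BIG6_VOCABULARY = "http://www.w3.org/TR/emotion-voc/xml#big6"

# Serialize EmotionML elements in the default namespace (no prefix).
ET.register_namespace("", EMOTIONML_NS)


def annotate(category, value):
    """Return an EmotionML document with one category annotation.

    `category` is a label from the chosen vocabulary and `value`
    is its intensity, expected in [0, 1].
    """
    root = ET.Element(f"{{{EMOTIONML_NS}}}emotionml", {"version": "1.0"})
    emotion = ET.SubElement(
        root, f"{{{EMOTIONML_NS}}}emotion", {"category-set": BIG6_VOCABULARY}
    )
    ET.SubElement(
        emotion,
        f"{{{EMOTIONML_NS}}}category",
        {"name": category, "value": f"{value:.2f}"},
    )
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(annotate("fear", 0.6))
```

Because the annotation is plain XML, any module of a framework can parse it back with the same library, which is what makes such a format usable as an exchange protocol between components.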

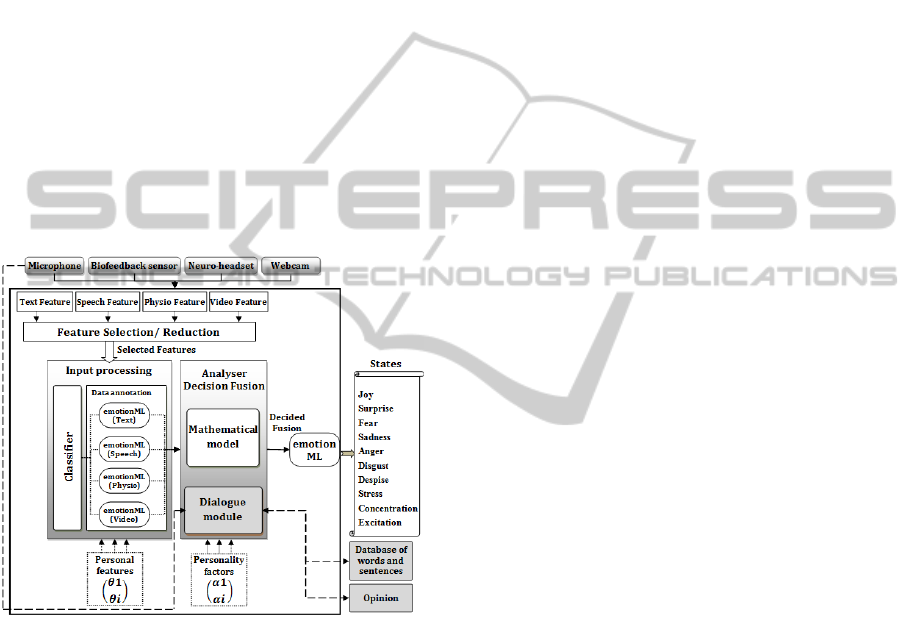

3.3 Framework for Emotion Recognition

Our approach takes into account the multi-modal nature of emotions and is therefore based on multiple sensors and human-machine interfaces (cameras, biofeedback sensors, microphones, brain computer interfaces, etc.). A diagram of the proposed framework is given in Fig. 6. The framework has the following main modules:

1. Feature extraction,

2. Feature selection/reduction,

3. Classification,

4. Decision fusion (analyzer),

5. Information annotation.

Figure 6: Proposed model for emotion recognition.

Features will be extracted from the data/signals collected on all channels, and the most suitable ones will be selected. Most data/signals are not labeled, i.e. they are not identified and matched to the behavioral state to which they correspond. Thus, a classifier will take the selected features and classify each input modality individually. All information related to the classification results for a given stimulus and subject will be annotated and stored using EmotionML.

The decision-fusion module (analyzer) will take the decisions of all the individual channels and fuse them to estimate the candidate's emotional state. To do so, the analyzer will integrate them into a mathematical model (Equation 1). Assuming that the emotional state at time t depends on the emotional state at time t − 1, we sum the weighted data at two successive time steps over the human-machine interfaces related to a given emotional state. Finally, the resulting emotions will be stored using EmotionML.

$$
\begin{pmatrix} f_1 \\ \vdots \\ f_n \end{pmatrix}
=
\begin{pmatrix}
\sum_{i=1}^{n} (\beta \cdot (\alpha_i \cdot x_i))_{t} + ((1-\beta) \cdot (\alpha_i \cdot x_i))_{t-1} \\
\vdots \\
\sum_{i=1}^{n} (\beta \cdot (\alpha_i \cdot x_i))_{t} + ((1-\beta) \cdot (\alpha_i \cdot x_i))_{t-1}
\end{pmatrix}
\tag{1}
$$

where:

$f_i$: emotional state i (joy, surprise, fear, sadness, anger, disgust, contempt, stress, concentration, excitement).

$n$: size of the set of human-machine interfaces related to the emotional state i.

$\alpha_i$: coefficient to be determined by experiments, with $\sum_{i=1}^{n} \alpha_i = 1$.

$\beta$: user-defined coefficient.

$x_i$: annotated data, filtered from the input devices.
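The fusion rule of Equation 1 can be sketched in Python for a single emotional state. This is a minimal illustration that assumes the per-interface values are already normalized scores and that the sum covers both the term at time t and the term at time t − 1; the function names and the numeric values in the usage example are hypothetical, since the coefficients are still to be determined by experiments.

```python
def fuse_emotion(x_t, x_prev, alpha, beta):
    """Score one emotional state from interface values at t and t-1.

    x_t, x_prev: values of each related interface at times t and t-1
    alpha:       per-interface weights, expected to sum to 1
    beta:        weight of the current time step, in [0, 1]
    """
    if not (len(x_t) == len(x_prev) == len(alpha)):
        raise ValueError("x_t, x_prev and alpha must have the same length")
    if abs(sum(alpha) - 1.0) > 1e-9:
        raise ValueError("alpha coefficients must sum to 1")
    return sum(
        beta * a * current + (1.0 - beta) * a * previous
        for a, current, previous in zip(alpha, x_t, x_prev)
    )


def fuse_all(readings_t, readings_prev, alpha_by_emotion, beta):
    """Apply the rule to every emotional state and return a score map."""
    return {
        emotion: fuse_emotion(
            readings_t[emotion],
            readings_prev[emotion],
            alpha_by_emotion[emotion],
            beta,
        )
        for emotion in readings_t
    }


if __name__ == "__main__":
    # Two interfaces contribute to "stress" in this made-up example.
    print(fuse_emotion([0.8, 0.4], [0.6, 0.2], [0.5, 0.5], beta=0.7))
```

With beta close to 1 the estimate follows the most recent measurements, while smaller values give more weight to the previous time step and smooth out short fluctuations.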

4 CONCLUSIONS

We presented a multi-modal, immersive and interactive virtual environment (VE) for job interview training. The proposed platform aims to train candidates (students, job hunters, etc.) to better master their emotional state and behavioral skills. An Embodied Conversational Agent (ECA) will be used to enable real-time, immersive, multi-modal and emotion-based simulations. In order to assess the emotional state of the candidates, different human-machine interfaces and biosensors have been proposed. In the near future, we will carry out experiments to calibrate the human-machine interfaces (HMIs) and identify the signals they provide in different emotional situations. This work opens the way to new possibilities in areas such as professional or medical applications, and contributes to the democratization of new human-machine interfaces and techniques for affective human-computer communication and interaction.

REFERENCES

Busso, C., Deng, Z., Yildirim, S., Bulut, M., Lee, C., Kazemzadeh, A., Lee, S., Neumann, U., and Narayanan, S. (2004). Analysis of emotion recognition using facial expressions, speech and multimodal information. In 6th International Conference on Multimodal Interfaces, pages 205–211, New York, NY, USA. ACM.


Calvo, R. A. and D’Mello, S. (2010). Affect detection: An interdisciplinary review of models, methods, and their applications. IEEE Transactions on Affective Computing, 1:18–37.

Damasio, A. (1994). L’Erreur de Descartes. La raison des émotions. Odile Jacob.

Darwin, C. (1872). The expression of emotion in man and animal. University of Chicago Press (reprinted in 1965), Chicago.

Ekman, P. (1999). Basic emotions, pages 301–320. Sussex U.K.: John Wiley and Sons, Ltd, New York.

Ekman, P. and Friesen, W. V. (1978). Facial Action Coding System: A Technique for Measurement of Facial Movement. Consulting Psychologists Press, Palo Alto, California.

Hammal, Z. and Massot, C. (2010). Holistic and feature-based information towards dynamic multi-expressions recognition. In VISAPP 2010. International Conference on Computer Vision Theory and Applications, volume 2, pages 300–309.

Healey, J. and Picard, R. W. (2000). Smartcar: Detecting driver stress. In Proceedings of ICPR’00, pages 218–221, Barcelona, Spain.

Helmut, P., Junichiro, M., and Mitsuru, I. (2005). Recognizing, modeling, and responding to users’ affective states. In User Modeling, pages 60–69.

Lisetti, C. and Nasoz, F. (2004). Using noninvasive wearable computers to recognize human emotions from physiological signals. EURASIP J. Appl. Signal Process., 2004:1672–1687.

Luneski, A. and Bamidis, P. D. (2007). Towards an emotion specification method: Representing emotional physiological signals. Computer-Based Medical Systems, IEEE Symposium on, 0:363–370.

Mehrabian, A. (1996). Pleasure-arousal-dominance: A general framework for describing and measuring individual differences in temperament. Current Psychology, 14(4):261–292.

Paleari, M. and Lisetti, C. L. (2006). Toward multimodal fusion of affective cues. In Proceedings of the 1st ACM International Workshop on Human-Centered Multimedia, pages 99–108, New York, NY, USA. ACM.

Pantic, M. and Rothkrantz, L. (2003). Toward an affect-sensitive multimodal human-computer interaction. Proceedings of the IEEE, volume 91, pages 1370–1390.

Picard, R. (1995). Affective Computing. MIT Media Lab internal report, TR321. Massachusetts Institute of Technology, Cambridge, USA.

Picard, R., Vyzas, E., and Healey, J. (2001). Toward machine emotional intelligence: Analysis of affective physiological state. IEEE Transactions on Pattern Analysis and Machine Intelligence, 23(10):1175–1191.

Roy, D. and Pentland, A. (1996). Automatic spoken affect classification and analysis. In Proceedings of the 2nd International Conference on Automatic Face and Gesture Recognition (FG ’96), pages 363–367, Washington, DC, USA. IEEE Computer Society.

Scherer, K. R. (2000). Emotion. In Introduction to Social Psychology: A European Perspective, pages 151–191. Blackwell, Oxford.

Scherer, K. R. (2003). Vocal communication of emotion: A review of research paradigms. Speech Communication, 40(7-8):227–256.

Sebe, N., Cohen, I., and Huang, T. (2005). Multimodal Emotion Recognition. World Scientific.

Sharma, R., Pavlovic, V. I., and Huang, T. S. (1998). Toward multimodal human-computer interface. Proceedings of the IEEE, 86(5):853–869.

Tian, Y., Kanade, T., and Cohn, J. (2000). Recognizing lower face action units for facial expression analysis. In Proceedings of the 4th IEEE International Conference on Automatic Face and Gesture Recognition (FG’00), pages 484–490.

Vilhjalmsson, H., Cantelmo, N., Cassell, J., Chafai, N. E., Kipp, M., Kopp, S., Mancini, M., Marsella, S., Marshall, A. N., Pelachaud, C., Ruttkay, Z., Thorisson, K. R., van, H. W., and van der, R. J. W. (2007). The behavior markup language: Recent developments and challenges. In Intelligent Virtual Agents, pages 99–111, Berlin. Springer.

Villon, O. (2007). Modeling affective evaluation of multimedia contents: user models to associate subjective experience, physiological expression and contents description. PhD thesis.

Wang, H., Azuaje, F., Jung, B., and Black, N. (2003). A markup language for electrocardiogram data acquisition and analysis (ecgML). BMC Medical Informatics and Decision Making, 3(1):4.
