MULTIPLE PEOPLE ACTIVITY RECOGNITION USING SIMPLE SENSORS

Clifton Phua, Kelvin Sim and Jit Biswas

Institute for Infocomm Research, Agency for Science, Technology and Research (A*STAR)

1 Fusionopolis Way, #21-01, Connexis (South Tower), 138632 Singapore

Keywords:

Multiple people, Activity recognition, Ambient intelligence, Sensors and sensor networks.

Abstract:

Activity recognition of a single person in a smart space using simple sensors has been an ongoing research problem for the past decade, as simple sensors are cheap and non-intrusive. Recently, there has been rising interest in multiple people activity recognition (MPAR) in a smart space with simple sensors, because it is common to have more than one person in real-world environments. We present the existing approaches to MPAR, such as Hidden Markov Models, and the available multiple people activity datasets. In our experiments, we show that, surprisingly, even standard classification techniques can yield high accuracy without the use of existing MPAR approaches. We conclude that this is due to a set of assumptions that hold for the datasets we used, and these assumptions may be unrealistic in real-life situations. Finally, we discuss the open challenges of MPAR when this set of assumptions does not hold.

1 INTRODUCTION

Activity recognition aims to recognize the intentions or actions of one or more people/residents. Their intentions are inferred from a series of sensed observations of the actions of the people/residents and of the environmental conditions. It is also known as plan, intent, or behavior recognition. Depending on the application, good activity recognition requires the careful selection and combination of hardware (sensors) and software (algorithms) in order to produce cheap but accurate outcomes.

To identify the activities of different people, we can use either complex sensors or simple ones. Complex sensors employ technologies such as Ultra Wide Band (UWB) and Radio Frequency Identification (RFID) to reliably distinguish the identities of people without much inference (that is, learning and predicting each person's movement and activity patterns). However, complex sensors are generally expensive and require people to wear sensors or tags, which can be intrusive to their privacy. With simple sensors, inference is required to attribute the activities to the different people, and a combination of simple sensors is usually needed for reliable and accurate inference, as each has a limited sensing range. However, simple sensors are cheap and less intrusive to people's privacy. Examples of simple sensors are motion detectors, contact switches, pressure mats, and vibration sensors.

Almost all prior activity recognition work using simple sensors focuses on a single person, yet recognizing the activities of multiple people is more realistic, as humans are social creatures and it is common to have more than one person in an environment. Recently, multiple people recognition algorithms have been developed in the area of computer vision, using video cameras in outdoor or common environments. For example, some computer vision works target nursing homes, where the interactions of multiple people in the corridor and dining room are monitored by video cameras and microphones (Chen et al., 2007; Hauptmann et al., 2004). However, in many indoor and private environments, the use of video cameras is not practical due to:

• computational constraints,
• privacy concerns (such as in home and office situations),
• budgetary limitations (such as the high unit, installation, and maintenance costs of each video camera, while situations usually require multiple units), and
• accuracy challenges (such as distance from the camera, occlusion, and the requirement of correct facial and gait alignment to the camera).


Phua C., Sim K. and Biswas J.

MULTIPLE PEOPLE ACTIVITY RECOGNITION USING SIMPLE SENSORS.

DOI: 10.5220/0003399902240231

In Proceedings of the 1st International Conference on Pervasive and Embedded Computing and Communication Systems (PECCS-2011), pages 224-231

ISBN: 978-989-8425-48-5

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

Multiple People Activity Recognition (MPAR) using simple sensors is an emerging and multi-faceted research area, closely related to ambient intelligence, sensor networks, and data mining. Its key application is home-based elderly care, owing to the availability of realistic simple sensor data of normal people living in smart homes and the good results reported by some algorithms in this application.

Common sense tells us that MPAR is more complex than its single person version, due to the additional task of assigning each recognized activity to one of the n people. However, contrary to conventional wisdom, we show that MPAR using many simple sensors can be trivial. Conversely, if we relax some of the assumptions made in current state-of-the-art studies, for example by using very few simple sensors or by reducing the dependency on person-specific activity labels for training, the existing techniques will either yield poor results or be infeasible to use.

The rest of this paper is organized as follows. First, we introduce existing approaches. Second, we list some available datasets, mostly for home-based elderly care applications. We then apply the simplest techniques to the simplest dataset and present early results to support our argument. Finally, we present and describe four open challenges in MPAR using simple sensors, in the hope that more researchers will work in this interesting area of research and develop new techniques that address these open challenges, some of which may also become our future work.

2 EXISTING MULTIPLE PEOPLE ACTIVITY RECOGNITION (MPAR) APPROACHES USING SIMPLE SENSORS

2.1 Hidden Markov Models (HMMs)

The hidden Markov model (HMM) is the most com-

mon approach used for activity recognition of multi-

ple people in smart spaces (Panangadan et al., 2010;

Cook et al., 2010; Crandall and Cook, 2009; Singla

et al., 2010; Wilson and Atkeson, 2005). The HMM is

a suitable approach, as it can probabilistically model

the complexities and dynamics of the activities of the

multiple people in a smart space. Each person’s ac-

tivities (Chiang et al., 2010) or each activity (Cook

and Schmitter-Edgecombe, 2009) can be represented

as a Markov model, as the assumption is that dif-

ferent activities map to distinct probability distribu-

tions. The Markov model is shown to have slightly

better accuracy that the naive Bayes classifier (Cook

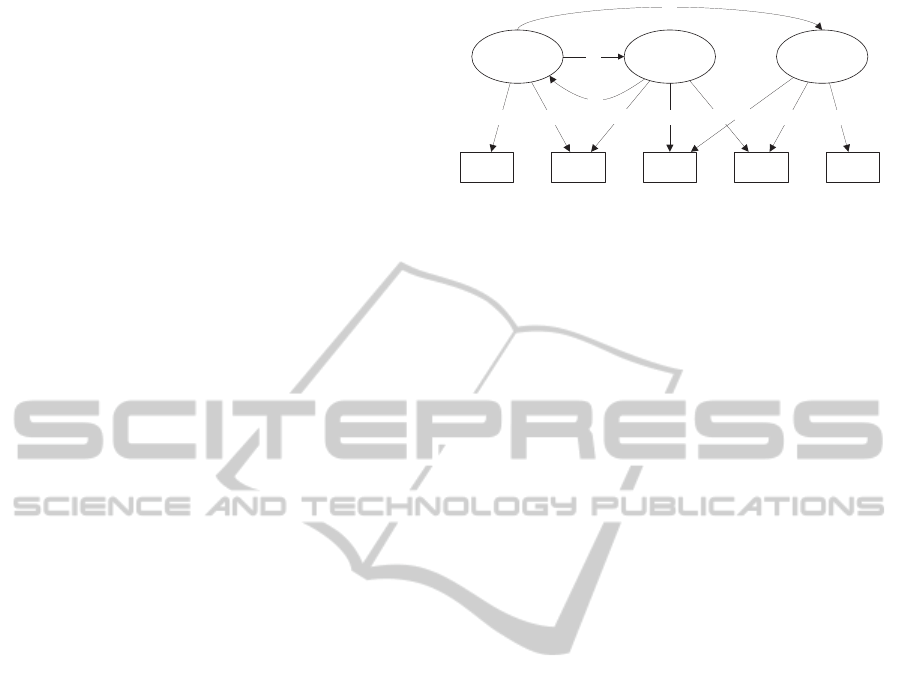

Person 1

consuming meal

(State 1)

Person 2

Watching TV

(State 3)

Person 1

taking medication

(State 2)

a

13

a

12

a

21

Sensor 1

ON

Sensor 2

ON

Sensor 3

ON

Sensor 4

ON

Sensor 5

ON

b

11

b

12

b

22

b

35

b

34

b

23

b

24

b

33

Figure 1: Example of a HMM modeling the activities of

multiple people in a smart space. The activities of multiple

people are represented as hidden states (circles) and sensor

values are represented as observations. The edge labeled

with a

i j

is the transition probability from hidden state i to

hidden state j, while the edge labeled with b

i j

is emission

probability of generating observation j in hidden state i.

and Schmitter-Edgecombe, 2009), probably due to its

ability to capture sequence information.

Figure 1 shows an example of an HMM for activity recognition of multiple people. The HMM consists of hidden states and observable states. In the context of activity recognition, the activities are modeled as a Markov process and represented as the hidden states, as they are not directly observable. The sensor values are modeled as observations, which are generated from the hidden states. The edges between the hidden states denote the transition probabilities between states (activities), while the edges from the hidden states to the observations denote the emission probabilities of generating the observations in the hidden states.

The HMM is first trained with the training data, and the Viterbi algorithm is then used to detect the activities. Given a sequence of observations (sensor readings), the Viterbi algorithm finds the most likely sequence of hidden states (activities) that produced the given sequence of observations.
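To make the decoding step concrete, the following is a minimal Python sketch of Viterbi decoding for a discrete HMM, computed in log space. The three states echo the hidden states of Figure 1, but the transition (made fully connected, with self-transitions), emission and initial probabilities are hypothetical values chosen for illustration, not parameters learned from any dataset; the state names and the function name `viterbi` are likewise our own.

```python
import math

def viterbi(obs, states, start_p, trans_p, emit_p):
    """Most likely hidden-state path for a discrete HMM, computed in log space."""
    def log(p):
        return math.log(p) if p > 0 else float("-inf")

    # best[t][s]: log-probability of the best path that ends in state s at time t
    best = [{s: log(start_p[s]) + log(emit_p[s].get(obs[0], 0.0)) for s in states}]
    back = [{}]  # back[t][s]: predecessor of s on that best path
    for t in range(1, len(obs)):
        best.append({})
        back.append({})
        for s in states:
            prev, score = max(
                ((r, best[t - 1][r] + log(trans_p[r].get(s, 0.0))) for r in states),
                key=lambda item: item[1],
            )
            best[t][s] = score + log(emit_p[s].get(obs[t], 0.0))
            back[t][s] = prev
    # Backtrack from the most probable final state.
    path = [max(states, key=lambda s: best[-1][s])]
    for t in range(len(obs) - 1, 0, -1):
        path.append(back[t][path[-1]])
    return path[::-1]

# Hypothetical parameters loosely following Figure 1.
STATES = ["P1_meal", "P1_medication", "P2_tv"]
START_P = {"P1_meal": 0.4, "P1_medication": 0.2, "P2_tv": 0.4}
TRANS_P = {
    "P1_meal": {"P1_meal": 0.6, "P1_medication": 0.2, "P2_tv": 0.2},
    "P1_medication": {"P1_meal": 0.2, "P1_medication": 0.4, "P2_tv": 0.4},
    "P2_tv": {"P1_meal": 0.1, "P1_medication": 0.1, "P2_tv": 0.8},
}
EMIT_P = {
    "P1_meal": {"S1": 0.7, "S2": 0.3},
    "P1_medication": {"S2": 0.2, "S3": 0.2, "S4": 0.6},
    "P2_tv": {"S3": 0.1, "S4": 0.1, "S5": 0.8},
}
OBSERVATIONS = ["S1", "S1", "S4", "S5", "S5"]  # "Sensor k ON" events

path = viterbi(OBSERVATIONS, STATES, START_P, TRANS_P, EMIT_P)
print(path)
```

With these made-up numbers the decoded path is `['P1_meal', 'P1_meal', 'P1_medication', 'P2_tv', 'P2_tv']`. The nested loops over time steps, states and predecessor states also show where the cost of Viterbi decoding comes from.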

Note that Figure 1 is a simple example of how an HMM is used, and different papers describe different variations of the HMM to solve their own specific problems. For example, to detect human interaction, one HMM is used to model each person's activities, and the two people's HMMs are combined by considering the relationship between them, whereas a parallel HMM models each person without considering any relationship (Chiang et al., 2010).

Although the HMM is a popular approach in activity recognition, it has some limitations. First, the HMM does not scale to large and complex smart spaces. As each activity of a person is modeled as a hidden state, a large number of activities and people will lead to an explosion of the hidden states of the HMM, which decreases the efficiency of the model. Moreover, the Viterbi algorithm is a dynamic programming algorithm whose running time grows with the square of the number of hidden states and whose memory grows with the number of hidden states times the sequence length, so it becomes expensive as the state space grows.
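As a rough illustration of this growth, the sketch below assumes (our assumption, not a specific published model) that the joint activity of n people, each choosing among m activities, is encoded as a single hidden state, so the HMM has N = m^n states; Viterbi decoding over T observations then takes on the order of T·N² operations and T·N back-pointers. The choice of 8 activities and a sequence length of 1000 is hypothetical, and the figures are operation counts, not measured running times.

```python
def joint_hmm_cost(num_activities: int, num_people: int, seq_len: int) -> tuple[int, int, int]:
    """Hidden states, Viterbi operations and back-pointers for a joint-state HMM.

    Assumes one hidden state per combination of the people's activities.
    """
    n_states = num_activities ** num_people
    time_ops = seq_len * n_states ** 2  # each state examines every predecessor at each step
    memory = seq_len * n_states         # one back-pointer per state per step
    return n_states, time_ops, memory

for people in (1, 2, 3):
    print(people, joint_hmm_cost(num_activities=8, num_people=people, seq_len=1000))
```

This prints `1 (8, 64000, 8000)`, `2 (64, 4096000, 64000)` and `3 (512, 262144000, 512000)`: adding a third person multiplies the decoding work by 64. If instead each (person, activity) pair is a separate state, as in Figure 1, the growth in N is linear, but the Viterbi cost is still quadratic in N.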


Second, the activities and the number of people in the smart space must be known before the model is trained. Hence, the training data must be accurately labeled, which can be a time-consuming process if manual annotation is used.

Third, the HMM does not explicitly exploit knowledge of the multiple people to improve its accuracy, as it treats each hidden state equally, even though hidden states that correspond to the same person should clearly be related. Hence, there is no difference between modeling the activities of one person and modeling the activities of multiple people.

Fourth, the HMM makes a stationarity assumption, which means that the state transition probabilities do not change over time as the system evolves. This is not realistic, as the activities of people may evolve over time, particularly for home-based elderly with dementia.

2.2 Emerging Patterns

Emerging patterns have been proposed for activity recognition of multiple people (Gu et al., 2009b). Let there be $n$ datasets $D_1, \dots, D_n$, where each dataset corresponds to the sensor readings of a person in the smart space. Each row $R$ of a dataset $D_i$ contains the sensor readings of a continuous period of time, which corresponds to an activity. Each sensor and its reading is represented as an item, so a row $R$ is a set of items. Let $X$ be a pattern, which is a subset of a row $R$. The support of pattern $X$ in a dataset $D$ is $\mathrm{sup}_D(X) = \mathrm{occ}_D(X)/|D|$, where $\mathrm{occ}_D(X)$ is the number of rows in $D$ containing $X$, and $|D|$ is the total number of rows in $D$.

Definition 1. Let $D_i$ and $D_j$ be the datasets of two people $i$ and $j$, respectively. The growth rate of a pattern $X$ from $D_i$ to $D_j$ is defined as

$$\mathrm{GrowthRate}(X) = \frac{\mathrm{sup}_{D_j}(X)}{\mathrm{sup}_{D_i}(X)}.$$

In special situations, $\mathrm{GrowthRate}(X) = 0$ if $\mathrm{sup}_{D_i}(X) = 0$ and $\mathrm{sup}_{D_j}(X) = 0$, and $\mathrm{GrowthRate}(X) = \infty$ if $\mathrm{sup}_{D_i}(X) = 0$ and $\mathrm{sup}_{D_j}(X) > 0$.

Definition 2. Given a growth rate threshold $\rho > 1$, a pattern $X$ is an emerging pattern from a background dataset $D_i$ to a target dataset $D_j$ if $\mathrm{GrowthRate}(X) \ge \rho$.
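The definitions of support, growth rate and emerging pattern can be sketched directly in Python. In this sketch each row is a `frozenset` of items, where an item is a hypothetical string such as `M17ON` pairing a sensor with its reading; the toy datasets and function names are illustrative only, and the brute-force enumeration of candidate patterns is far simpler than the mining procedure used by Gu et al.

```python
import math
from itertools import combinations

def support(pattern, dataset):
    """sup_D(X) = occ_D(X) / |D|: fraction of rows of D that contain every item of X."""
    if not dataset:
        return 0.0
    occ = sum(1 for row in dataset if pattern <= row)
    return occ / len(dataset)

def growth_rate(pattern, background, target):
    """GrowthRate(X) from background D_i to target D_j, including the special cases."""
    sup_i = support(pattern, background)
    sup_j = support(pattern, target)
    if sup_i == 0:
        return 0.0 if sup_j == 0 else math.inf
    return sup_j / sup_i

def emerging_patterns(background, target, rho, max_size=2):
    """Brute force: every pattern of up to max_size items with GrowthRate(X) >= rho."""
    items = sorted(set().union(*target))
    found = {}
    for size in range(1, max_size + 1):
        for combo in combinations(items, size):
            pattern = frozenset(combo)
            rate = growth_rate(pattern, background, target)
            if rate >= rho:
                found[pattern] = rate
    return found

# Hypothetical rows: each row is the set of sensor-reading items seen during one activity.
d_person1 = [frozenset({"M17ON", "I03ON"}), frozenset({"M17ON", "M18ON"}), frozenset({"M18ON"})]
d_person2 = [frozenset({"M17ON", "M48ON"}), frozenset({"M48ON", "M50ON"}), frozenset({"M48ON"})]

patterns = emerging_patterns(background=d_person1, target=d_person2, rho=2.0)
for pattern, rate in patterns.items():
    print(sorted(pattern), rate)
```

Here `{M17ON}` is shared by both people and has a growth rate of 0.5, so it is not kept, while patterns involving `M48ON` or `M50ON` never occur for person 1 and therefore have an infinite growth rate towards person 2, acting as the kind of "signature" described next.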

An emerging pattern $X$ with high support in the target dataset $D_j$ and low support in the other, background datasets $D_i$ can be considered a 'signature' of person $j$ doing a particular activity. Therefore, for each person, each activity has its own set of emerging patterns. Gu et al. then use these sets of emerging patterns to detect the activities of multiple people in the smart space.

Using emerging patterns for activity recognition shares some weaknesses with the HMM, such as the requirement that the activities and the number of people be known in advance, and the assumption that the activities of people do not evolve over time. Besides these, another weakness of emerging patterns is their sensitivity to the parameter $\rho$. Setting an appropriate $\rho$ is difficult, as it is not semantically meaningful, and the user will most likely set it based on his or her own biased assumptions. Thus, the emerging patterns are determined by the user, and they are not necessarily patterns that are intrinsically prominent in the data.

2.3 Filters

A Rao-Blackwellised particle filter has been applied to MPAR using only motion detectors and contact switches (Wilson and Atkeson, 2005). The reported activity recognition accuracy is high: about 98% for 2 people and 85% for 3 people. Sigma-point Kalman filters have been proposed to fuse infrared sensors and binary foot-switches to track multiple people (Paul and Wan, 2008).

3 APPLICATIONS

MPAR using simple sensors is used in a wide range of applications, such as home-based elderly care, assistance for the sick and disabled, environmental monitoring, security-related applications, logistics support, and location-based services.

Of the above applications, home-based elderly care is probably the most important and is the focus of this paper, because proven technology from MPAR using simple sensors can deliver large social and commercial impact. The social impact is to mitigate the effects of population aging, particularly in developed countries. There are so many elderly to care for, but so few carers; for example, there is an insufficient supply of nursing homes, trained geriatric nurses, and doctors to handle the demand. The commercial impact is also significant: the long-term home care and nursing home information systems market is projected to triple by 2016. The current home care and nursing home technology is applicable only to a single person, and key companies involved are QuietCare (GE Healthcare), Grandcare, and HealthSense.

3.1 Available Datasets

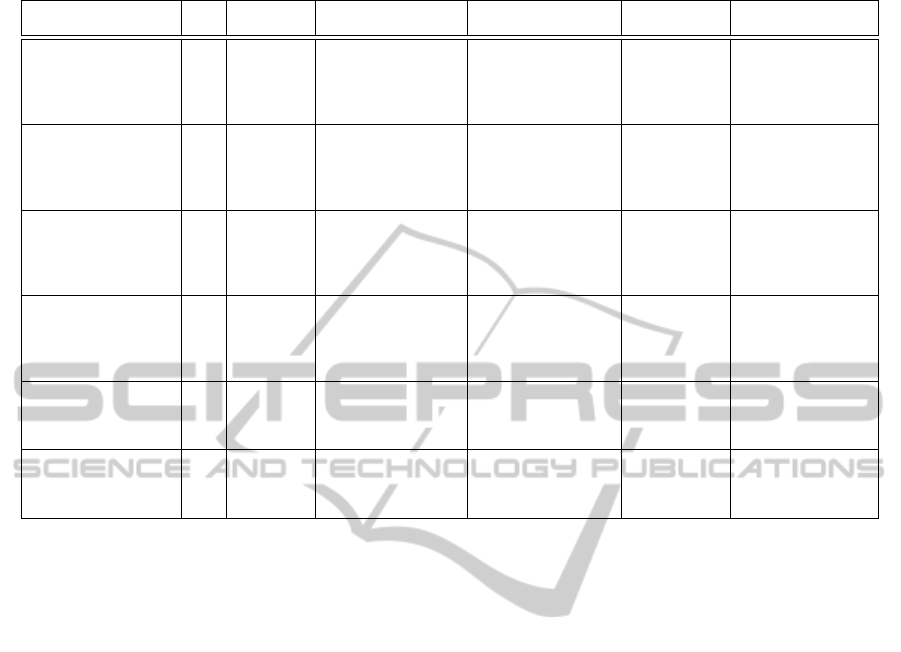

In Table 1, we describe and compare 6 MPAR datasets, which can be either downloaded from the Web or requested from the relevant researchers. The features of these 6 datasets are:

• Dataset ID refers to our naming convention, where the last two digits refer to the year the data was collected. Most datasets are from Washington State University's CASAS lab's website, http://ailab.wsu.edu/casas/datasets.html. The CASAS datasets, TWOR09, TWORSUMMER09, TULUM09, and CAIRO09, were all collected in 2009. More of their datasets, such as KYOTO and PARIS, have recently been made available online (Cook and Schmitter-Edgecombe, 2009). The YAMAZAKI05 and WMD07 datasets are available from the researchers (Yamazaki and Toyomura, 2008; Wren et al., 2007), and are the largest datasets in terms of size and duration. The datasets used in the publications (Sim et al., 2010; Phua et al., 2009) can also be made available upon request.
• n is the number of people known to be in the dataset. Most of the multiple people datasets contain the activities of two people of interest, but more than two entities, such as pets, visiting guests, and maids, can be captured by the sensors.
• People profile is typically a couple, except for WMD07, which has many researchers and visitors.
• All sensor profiles include motion sensors. The number of deployed sensors ranges from 18 to 1,800, the majority of which are motion sensors.
• As for activity profile, the CASAS datasets typically have several activities with several hundred occurrences over a few months. The YAMAZAKI05 and WMD07 datasets span 16 days and 1 year, respectively.
• All activity recognition datasets are for home-based elderly care, except for WMD07, which is based on an office environment.
• All CASAS datasets have people-specific activity labels, while the rest are unlabeled.

Table 1: Some Available Datasets For Multiple People Activity Recognition Using Simple Sensors.

| Dataset ID | n | People profile | Sensor profile | Activity profile | Location profile | Label profile |
|---|---|---|---|---|---|---|
| TWOR09 (Cook and Schmitter-Edgecombe, 2009) | 2 | 1 couple, 1 dog | 4 types of sensors (pressure, RFID, motion, door), about 90 sensors | about 8 2-people activities of daily living, about 800 occurrences for about 2 months | all locations in home | only activities labelled |
| TWORSUMMER09 | 2 | same as TWOR09 | in addition to TWOR09, 2 more types of sensors (temperature, electricity) | about 4-5 2-people activities of daily living | same as TWOR09 | same as TWOR09 |
| TULUM09 | 2 | 1 couple | 2 types of sensors (motion, temperature), about 18 sensors | about 2 2-people activities of daily living, about 1000 occurrences for about 3 months | locations (pantry, dining, living rooms) | same as TWOR09 |
| CAIRO09 | 2 | same as TWOR09 | 2 types of sensors (motion, temperature), about 30 sensors | about 3 2-people activities of daily living, about 600 occurrences for about 2 months | same as TWOR09 | same as TWOR09 |
| YAMAZAKI05 (Yamazaki and Toyomura, 2008) | 2 | 1 elderly couple in 60s | 3 types of sensors (pressure, RFID, motion), about 1800 sensors | unknown 2-people activities of daily living for about 16 days | same as TWOR09 | no labels (video provided for labeling) |
| WMD07 (Wren et al., 2007) | >2 | many researchers and visitors | 1 type of sensor (motion), about 200 sensors | unknown n-people office activities for about a year | research laboratory over 2 floors | no labels (map, calendar, weather data provided for labeling) |

In the next two subsections, using the TWOR09 dataset with activities from two residents R1 and R2, we demonstrate that MPAR using a large number of simple sensors can be trivial.

3.2 Data Preprocessing of TWOR09 Dataset

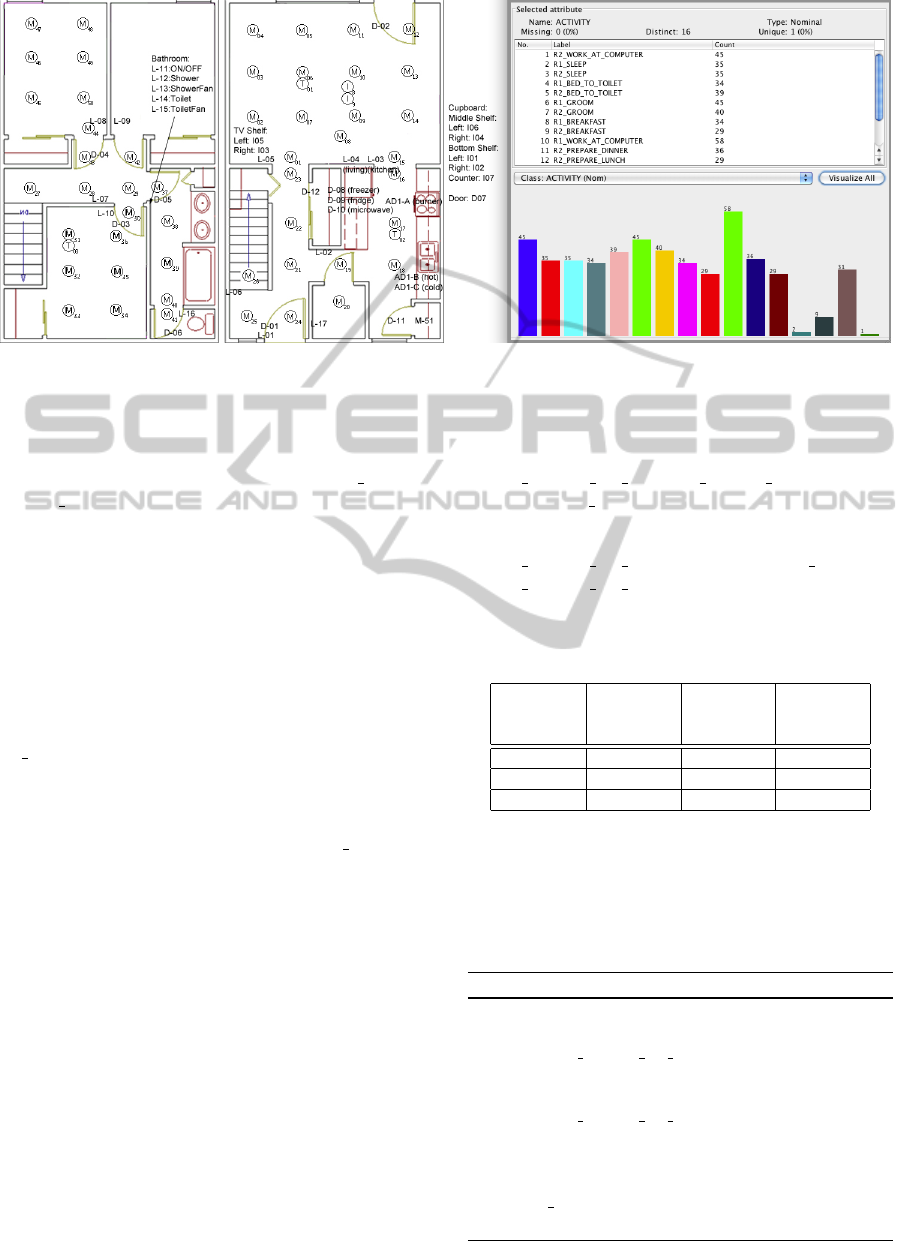

On the left side of Figure 2, the sensor layout for the upper and ground floors of the smart home is shown. The sensors can be categorized as follows (Cook and Schmitter-Edgecombe, 2009):

• Mxx - motion sensor
• Ixx - item sensor for selected items in the kitchen
• Dxx - door sensor
• AD1-A - burner sensor, AD1-B - hot water sensor, AD1-C - cold water sensor
• Txx - temperature sensors (not used in TWOR09)
• P001 - electricity usage (not used in TWOR09)


Figure 2: Sensor Layout in TWOR09 Dataset Smarthome (Cook and Schmitter-Edgecombe, 2009) and Some Person-Specific Activity Labels from WEKA.

On the right side of Figure 2, the bar chart shows 16 person-specific activity labels displayed using WEKA (Hall et al., 2009). For example, R1 SLEEP and R2 SLEEP are considered two separate activities. Most of the activities are preparing meals, eating, working, and sleeping, and more than 80% of the activities occur more than 20 times.

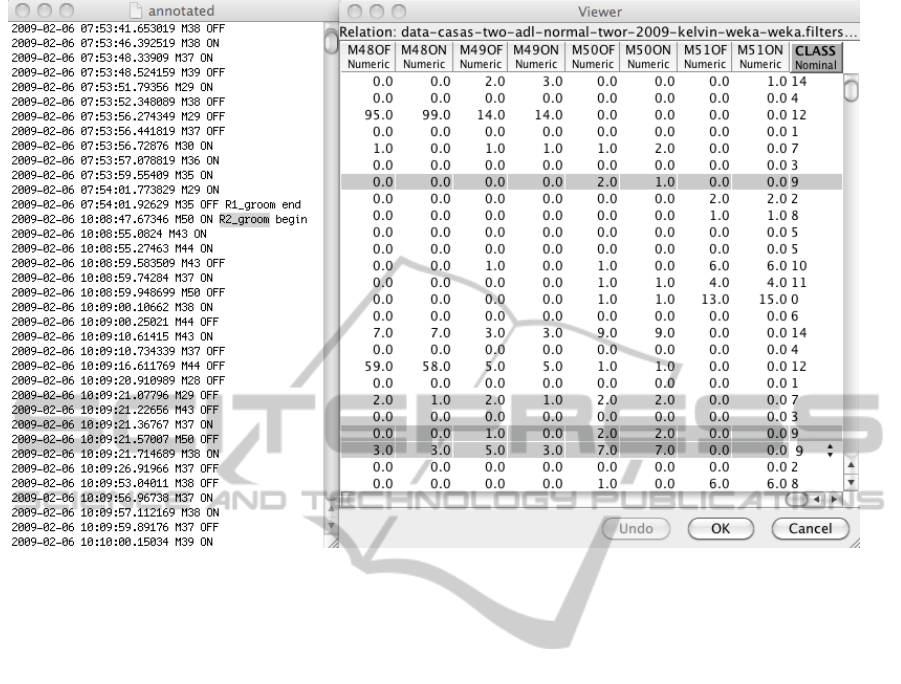

On the left side of Figure 3, the raw TWOR09 data consists of 138,039 sensor events, 502 activity events, and 4 features. The raw features are the timestamp (with millisecond resolution), the sensor ID, the sensor state, and the start/end label of the activity event. The activity label is also transformed from a descriptive label into a nominal number starting from 0; for example, R2 GROOM is transformed by WEKA into the number 9. The right side of Figure 3 shows the 184 processed TWOR09 features, each representing a sensor state, where the value is the frequency of that sensor state within a particular activity event. For example, R2 GROOM triggers M48 and M50, which are motion sensors presumably in the master bedroom.
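The preprocessing described above can be sketched as follows: for each labelled activity event, count how often each sensor-state pair occurs between its begin and end markers. The event tuple layout, the `"<label> begin"`/`"<label> end"` annotation strings and the feature naming (sensor ID plus the first two letters of the state, such as `M37OF`, inferred from the rule names in Algorithm 1) are our assumptions, not the exact CASAS file format or the WEKA conversion the authors used; the events themselves are made up.

```python
from collections import Counter

def activity_features(events):
    """Return (label, Counter of sensor-state features) for each completed activity event.

    Each event is (timestamp, sensor_id, state, annotation); annotation is a
    hypothetical "<label> begin", "<label> end" or "" for unannotated events.
    """
    examples = []
    open_activities = {}  # label -> feature counts of activities still in progress
    for _timestamp, sensor_id, state, annotation in events:
        label, _, marker = annotation.rpartition(" ")
        if marker == "begin":
            open_activities[label] = Counter()
        feature = f"{sensor_id}{state[:2].upper()}"  # e.g. "M37" + "OFF" -> "M37OF"
        for counts in open_activities.values():  # overlapping activities all get the event
            counts[feature] += 1
        if marker == "end" and label in open_activities:
            examples.append((label, open_activities.pop(label)))
    return examples

def encode_labels(examples):
    """Map descriptive labels to nominal numbers from 0 (alphabetical order here)."""
    labels = sorted({label for label, _ in examples})
    return {label: index for index, label in enumerate(labels)}

log = [  # hypothetical events, not taken from TWOR09
    ("2009-02-02 07:00:01.000", "M48", "ON", "R2_Groom begin"),
    ("2009-02-02 07:00:05.000", "M50", "ON", ""),
    ("2009-02-02 07:00:09.000", "M48", "OFF", ""),
    ("2009-02-02 07:02:30.000", "M50", "OFF", "R2_Groom end"),
    ("2009-02-02 07:05:00.000", "M37", "OFF", ""),  # outside any activity: not counted
]
examples = activity_features(log)
print(examples)
print(encode_labels(examples))
```

Counting an event towards every activity that is open at the time is one possible design choice for overlapping activities of R1 and R2. The alphabetical label encoding here will not reproduce WEKA's numbering (such as R2 GROOM mapping to 9), which depends on the order in which nominal values are declared.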

3.3 Experiment Results on TWOR09 Dataset

We used a range of techniques for MPAR on the processed TWOR09 dataset, and we report results from classification algorithms (Naive Bayes, C4.5 Decision Tree, and Support Vector Machine using sequential minimal optimization) with default parameters from WEKA (Hall et al., 2009). We also tried clustering (expectation maximization) and association rules (Apriori), but their results were too insignificant to report. The 3 subsets we used for 10-fold cross-validated experiments were:

• all 16 activities, 502 activity events
• 13 activities, each with at least 20 events (removing CLEANING, R1 WORK AT DINING ROOM TABLE, and WASH BATHTUB), 490 activity events
• 3 activities, each with at least 40 events (R2 WORK AT COMPUTER, R1 GROOM, and R1 WORK AT COMPUTER), 148 activity events
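The subset construction and cross-validation protocol can be sketched in plain Python. This is not the WEKA setup used for Table 2: it swaps in a multinomial naive Bayes with add-one smoothing, a common choice for count features, whereas WEKA's `NaiveBayes` by default models numeric attributes with normal distributions, and it uses unstratified folds, whereas WEKA stratifies. The toy data and all function names are hypothetical, so the accuracy it prints says nothing about TWOR09.

```python
import math
import random
from collections import Counter, defaultdict

def keep_frequent(examples, min_events):
    """Keep only activities with at least `min_events` labelled events."""
    freq = Counter(label for label, _ in examples)
    return [(label, feats) for label, feats in examples if freq[label] >= min_events]

def train_nb(examples):
    """Multinomial naive Bayes with add-one (Laplace) smoothing on count features."""
    class_counts = Counter(label for label, _ in examples)
    feature_totals = defaultdict(Counter)
    vocab = set()
    for label, feats in examples:
        feature_totals[label].update(feats)
        vocab.update(feats)
    return class_counts, feature_totals, vocab

def predict_nb(model, feats):
    class_counts, feature_totals, vocab = model
    n = sum(class_counts.values())

    def log_posterior(label):  # up to a constant shared by all labels
        denom = sum(feature_totals[label].values()) + len(vocab)
        score = math.log(class_counts[label] / n)
        for feature, count in feats.items():
            if feature in vocab:  # features never seen in training are skipped
                score += count * math.log((feature_totals[label][feature] + 1) / denom)
        return score

    return max(class_counts, key=log_posterior)

def cross_val_accuracy(examples, k=10, seed=0):
    """Plain (unstratified) k-fold cross-validation accuracy."""
    data = list(examples)
    random.Random(seed).shuffle(data)
    correct = 0
    for fold in range(k):
        test = data[fold::k]
        train = [ex for i, ex in enumerate(data) if i % k != fold]
        model = train_nb(train)
        correct += sum(predict_nb(model, feats) == label for label, feats in test)
    return correct / len(data)

# Hypothetical toy data: two frequent activities with distinct sensors, one rare one.
toy = ([("R1_Groom", Counter({"M37OF": 2, "M37ON": 2}))] * 20
       + [("R2_Work", Counter({"M45ON": 5}))] * 20
       + [("Cleaning", Counter({"M10ON": 1}))] * 3)
subset = keep_frequent(toy, min_events=20)
print(len(subset), cross_val_accuracy(subset, k=10))
```

On this deliberately separable toy data the rare activity is dropped and every held-out event is classified correctly, echoing how few frequent activities with distinctive sensors make the task easy.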

Table 2: Classification Accuracy.

| Activities | Naive Bayes | C4.5 Decision Tree | Support Vector Machine |
|---|---|---|---|
| 16 | 77.9% | 76.5% | 75.9% |
| 13 | 80.8% | 75.7% | 75.9% |
| 3 | 100% | 100% | 95.9% |

Table 2 shows that the Naive Bayes and C4.5 decision tree classifiers can achieve 100% accuracy on the 3 most common activities. Algorithm 1 shows the accurate and simple decision tree rules for these 3 activities.

Algorithm 1: C4.5 Rules on 3 Activities.

if M37OF ≤ 0 then
    if M45ON ≤ 3 then
        R1 WORK AT COMPUTER
    end
    if M45ON > 3 then
        R2 WORK AT COMPUTER
    end
end
if M37OF > 0 then
    R1 GROOM
end
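For readers who want to apply these rules directly, the following is a straightforward Python transcription of Algorithm 1. It assumes each activity event is given as a mapping from feature names (such as `M37OF`, the frequency of the OFF state of sensor M37 within the event) to counts, as in the processed data, with missing features treated as 0; the function name is our own.

```python
def classify_three_activities(features):
    """Apply the C4.5 rules of Algorithm 1 to one activity event's feature counts."""
    m37_off = features.get("M37OF", 0)  # missing features count as 0
    m45_on = features.get("M45ON", 0)
    if m37_off > 0:
        return "R1 GROOM"
    # Remaining branch (M37OF <= 0): split on how often motion sensor M45 turned on.
    if m45_on <= 3:
        return "R1 WORK AT COMPUTER"
    return "R2 WORK AT COMPUTER"

print(classify_three_activities({"M37OF": 2, "M45ON": 9}))  # R1 GROOM
print(classify_three_activities({"M45ON": 2}))              # R1 WORK AT COMPUTER
print(classify_three_activities({"M45ON": 7}))              # R2 WORK AT COMPUTER
```

Checking the `M37OF > 0` branch first is equivalent to the rule order in Algorithm 1, since the two top-level conditions are mutually exclusive.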


Figure 3: Snapshots of Raw TWOR09 Data (Cook and Schmitter-Edgecombe, 2009) and Processed TWOR09 Data for Classification from WEKA.

In other words, MPAR using simple sensors can be very accurate if 3 assumptions are true:

• there are many simple sensors
• people-specific activity labels are available
• there are few activities, each with a high number of events

In the next section, we discuss the open challenges that arise when these 3 assumptions do not hold.

4 OPEN CHALLENGES

4.1 Handling Noisier Data with Fewer Sensors

Sensor readings are known to be noisy, as sensors are prone to breaking down and giving erroneous readings. The data will be even noisier with fewer sensors, as there is less redundancy to compensate for erroneous readings. Hence, it is crucial that activity recognition approaches are able to handle noisy data. To the best of our knowledge, existing MPAR approaches do not handle noisy data, and this problem is yet to be solved even in single person activity recognition. A possible solution is to treat the data in a probabilistic manner and represent it as probability distributions, which is known as uncertain data. There is ongoing research on data mining techniques for uncertain data (Aggarwal and Yu, 2009), and techniques such as classification and clustering for uncertain data may be used for activity recognition on noisy data.

4.2 Less Dependency on Training and Labels

The paradigm of existing approaches requires a training phase for their models using a set of training data. This training data is collected from the multiple people in the smart space where the model is to be deployed, with the assumption that the group of people in the smart space remains unchanged after the model is deployed.

There are two weaknesses in this paradigm. First, the model needs to be trained for each smart space it is deployed in. Hence, the model overfits the smart space that it is trained on and does not generalize to other smart spaces. A possible solution is to develop a model which is able to detect the activities of multiple people in a general sense, so that the model can be deployed across different smart spaces. A good start in this area is the work of Rashidi and Cook (Rashidi and Cook, 2009), which proposed the use of transfer learning in activity recognition of a single person. In transfer learning, knowledge or models from other smart spaces can be exploited to train the model of a targeted smart space, so that the trained model is accurate and not overfitted.

Second, this paradigm requires the collection of training data from the smart space, and depending on the model, data collection may take from minutes (Sim et al., 2010) to weeks (Crandall and Cook, 2009). Hence, this paradigm is not practical for large deployments across multiple smart spaces. Moreover, the training data has to be annotated for the model to learn from it, and annotation is usually manual and laborious work (Sim et al., 2010). Various studies have addressed this with visualization or calendars/diaries of activities after data collection (Szewcyzk et al., 2009; Wren et al., 2007), video matching after data collection (Sim et al., 2010; Phua et al., 2009; Yamazaki and Toyomura, 2008), or actors pressing their identity button on a keypad during data collection (Wilson and Atkeson, 2005).

4.3 Incorporating Complex Situations

The existing approaches do not recognize the activities of multiple people in complex situations. In complex situations, a person may perform his or her activities in an interleaved or concurrent manner. In interleaved activities, a person performs two or more activities by switching between the steps of the activities. An example is watching TV while consuming food.

In concurrent activities, a person performs two or more activities by conducting the steps of these activities at the same time. An example is consuming food and drinking water simultaneously.

Activity recognition of a person in complex situations is a non-trivial task, and this task is further complicated when there are multiple people in complex situations: multiple people may be in the same location, and each of them may be performing either interleaved or concurrent activities. For example, two people may be in the living room, where one person is watching TV while consuming food, and the other person is reading a book.

Emerging patterns have been proposed to detect the interleaved and concurrent activities of a single person (Gu et al., 2009a). Perhaps these complex activities of multiple people can be detected by extending this work.

4.4 Capturing Evolving Activities and Labels

The existing approaches make an important assumption which forms the cornerstone of their work: they assume that multiple people perform their activities in a habitual way that does not change over time. In their training phase, they basically attempt to capture the patterns that represent the activities of the multiple people, and use these patterns for future activity recognition. However, in real-world scenarios, people may change their habits over time, and change the way they conduct their activities. This possibility is higher for home-based elderly with dementia, as their cognitive skills depend on the severity of their dementia. Therefore, there is a need for an approach which is able to continuously capture the evolving habits of the people and the way they conduct their activities.
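One simple way to let a model follow such drift, offered here only as an illustrative assumption and not as an approach evaluated in this paper, is to estimate activity transition probabilities from exponentially decayed counts, so that older transitions gradually lose weight. The class name, the `decay` parameter and the activity names below are hypothetical.

```python
from collections import defaultdict

class DecayingTransitions:
    """Estimate P(next activity | current activity) from exponentially decayed counts."""

    def __init__(self, decay=0.9):
        if not 0.0 < decay <= 1.0:
            raise ValueError("decay must be in (0, 1]")
        self.decay = decay  # 1.0 gives ordinary, stationary counting
        self.counts = defaultdict(lambda: defaultdict(float))

    def observe(self, current, nxt):
        # Shrink all earlier transitions out of `current`, then count the new one.
        row = self.counts[current]
        for key in row:
            row[key] *= self.decay
        row[nxt] += 1.0

    def prob(self, current, nxt):
        row = self.counts.get(current, {})
        total = sum(row.values())
        return row.get(nxt, 0.0) / total if total else 0.0

adaptive, static = DecayingTransitions(decay=0.8), DecayingTransitions(decay=1.0)
for model in (adaptive, static):
    for _ in range(20):
        model.observe("breakfast", "medication")  # old habit
    for _ in range(5):
        model.observe("breakfast", "tv")          # habit changes
print(round(adaptive.prob("breakfast", "tv"), 3), static.prob("breakfast", "tv"))
```

After the habit changes, the decayed estimate of moving from breakfast to TV rises to roughly 0.67, while the stationary count-based estimate stays at 0.2. The trade-off is that a small `decay` also makes the estimates more sensitive to noisy or one-off days.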

5 CONCLUSIONS AND FUTURE WORK

Multiple people activity recognition (MPAR) using simple sensors is an emerging multi-faceted research area which is related to ambient intelligence, sensor networks, and data mining. In this position paper, we discussed existing MPAR techniques and showed that standard classification techniques surprisingly yield high accuracy on MPAR using simple sensors if (1) the number of simple sensors is large, (2) the training data is accurately labeled, (3) the activities are simple, and (4) activities are done in a habitual way. These assumptions may be unrealistic in real-life situations, and we presented open challenges of MPAR using simple sensors when the assumptions do not hold.

For future work, as the MPAR approaches we discussed are bottom-up (data-driven), another approach could be top-down, using ontologies (Lecce et al., 2009). Also, we focused only on MPAR with identification of the individuals, but it might also be interesting to recognize the activities of subgroups of people without identifying them.

REFERENCES

Aggarwal, C. and Yu, P. (2009). A survey of uncertain data algorithms and applications. IEEE Transactions on Knowledge and Data Engineering, 21(5):609–623.

Chen, D., Yang, J., Malkin, R., and Wactlar, H. D. (2007). Detecting social interactions of the elderly in a nursing home environment. ACM Transactions on Multimedia Computing, Communications and Applications, 3.

Chiang, Y.-T., Hsu, K.-C., Lu, C.-H., Hsu, J. Y.-j., and Fu, L.-C. (2010). Interaction models for multiple-resident activity recognition in a smart home. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS).


Cook, D. J., Crandall, A., Singla, G., and Thomas, B. (2010). Detection of social interaction in smart spaces. Cybernetics and Systems, 41:90–104.

Cook, D. J. and Schmitter-Edgecombe, M. (2009). Assessing the quality of activities in a smart environment. Methods of Information in Medicine, 48(5):480–485.

Crandall, A. S. and Cook, D. J. (2009). Coping with multiple residents in a smart environment. Journal of Ambient Intelligence and Smart Environments, 1:323–334.

Gu, T., Wu, Z., Tao, X., Pung, H., and Lu, J. (2009a). epSICAR: An emerging patterns based approach to sequential, interleaved and concurrent activity recognition. In Proceedings of the 7th IEEE International Conference on Pervasive Computing and Communications (PerCom), pages 1–9.

Gu, T., Wu, Z., Wang, L., Tao, X., and Lu, J. (2009b). Mining Emerging Patterns for recognizing activities of multiple users in pervasive computing. In Proceedings of the 6th International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services (MobiQuitous), pages 1–2.

Hall, M., Frank, E., Holmes, G., Pfahringer, B., Reutemann, P., and Witten, I. H. (2009). The WEKA data mining software: An update. SIGKDD Explorations, 11(1):10–18.

Hauptmann, A. G., Gao, J., Yan, R., Qi, Y., Yang, J., and Wactlar, H. D. (2004). Automated analysis of nursing home observations. IEEE Pervasive Computing, 3:15–21.

Lecce, D., Calabrese, V., and Piuri, V. (2009). An ontology-based approach to human telepresence. In Proceedings of IEEE International Conference on Computational Intelligence for Measurement Systems and Applications (CIMSA), pages 56–61.

Panangadan, A., Mataric, M., and Sukhatme, G. (2010). Tracking and modeling of human activity using laser rangefinders. International Journal of Social Robotics, 2:95–107.

Paul, A. and Wan, E. (2008). Wi-Fi Based Indoor Localization and Tracking Using Sigma-Point Kalman Filtering Methods. In Proceedings of IEEE/ION Position Location and Navigation Symposium (PLANS), pages 646–659.

Phua, C., Foo, V. S.-F., Biswas, J., Tolstikov, A., Aung, A.-P.-W., Maniyeri, J., Huang, W., That, M.-H., Xu, D., and Chu, A. K.-W. (2009). 2-layer erroneous-plan recognition for dementia patients in smart homes. In Proceedings of the 11th International Conference on e-Health Networking, Applications and Services (Healthcom), pages 21–28.

Rashidi, R. and Cook, D. (2009). Multi home transfer learning for resident activity discovery and recognition. In Proceedings of the 4th International workshop on Knowledge Discovery from Sensor Data (SensorKDD).

Sim, K., Yap, G., Phua, C., Biswas, J., Aung, A.-P.-W., Tolstikov, A., Huang, W., and Yap, P. (2010). Improving the accuracy of erroneous-plan recognition system for Activities of Daily Living. In Proceedings of the 12th IEEE International Conference on e-Health Networking Applications and Services (Healthcom), pages 28–35.

Singla, G., Cook, D. J., and Schmitter-Edgecombe, M. (2010). Recognizing independent and joint activities among multiple residents in smart environments. Journal of Ambient Intelligence and Humanized Computing, 1(1):57–63.

Szewcyzk, S., Dwan, K., Minor, B., Swedlove, B., and Cook, D. (2009). Annotating smart environment sensor data for activity learning. Technology and Health Care, 17:161–169.

Wilson, D. and Atkeson, C. (2005). Simultaneous tracking and activity recognition (STAR) using many anonymous, binary sensors. Pervasive Computing, pages 62–79.

Wren, C. R., Ivanov, Y. A., Leigh, D., and Westhues, J. (2007). The MERL motion detector dataset. In Proceedings of the workshop on Massive datasets, pages 10–14.

Yamazaki, T. and Toyomura, T. (2008). Sharing of real-life experimental data in smart home and data analysis tool development. In Proceedings of the 6th International Conference on Smart Homes and Health Telematics (ICOST), pages 161–168.
