SIMULTANEOUS ESTIMATION OF LIGHT SOURCES POSITIONS AND CAMERA ROTATION

Masahiro Oida, Fumihiko Sakaue and Jun Sato

Nagoya Institute of Technology, Gokiso, Showa, Nagoya 466-8555, Japan

Keywords:

Mixed reality, Photometric calibration, Geometric calibration.

Abstract:

For mixed reality and other applications, it is very important to achieve photometric and geometric consistency in image synthesis. This paper describes a method for calibrating a camera and light sources simultaneously from photometric and geometric constraints. In general, feature points in a scene are used for computing camera positions and orientations. However, if the cameras and objects are attached to each other and move together, the changes in the shading of the objects in the images also carry useful information on the geometric camera motion. In this paper, we show that by using both shading information and feature point information, we can calibrate cameras from a smaller number of feature points than existing methods. Furthermore, we show that the proposed method can calibrate light sources as well as cameras. The accuracy of the proposed method is evaluated using real and synthetic images.

1 INTRODUCTION

Mixed reality systems have been studied extensively in recent years (Milgram and Kishino, 1994). In these systems, real images are taken by cameras and virtual information is added to the images. To achieve realistic mixed reality, it is important to obtain both the photometric environment of the scene, such as lighting information, and geometric information, such as camera parameters.

In general, the geometric information of cameras is obtained from the coordinates of feature points in images by using camera calibration techniques (Hartley and Zisserman, 2000; Faugeras and Luong, 2001). Lighting information, on the other hand, is obtained from images of a specular sphere (Powell et al., 2001), a Lambertian sphere (Takai et al., 2003) and so on (Sato et al., 1999b; Sato et al., 1999a). In these existing methods, geometric and photometric information were obtained separately, by different procedures. However, the two kinds of information are closely related. For example, if an object is fixed to the camera, the illumination of the object changes with the camera motion, and the resulting change in the object's intensity in the camera image provides very useful information for estimating the camera motion. Likewise, if a light source is attached to a camera and moves together with it, the illumination of a static object changes with the camera motion, and the change in the intensity of the scene again provides useful information for estimating the camera motion.

In this paper, we propose a method that calibrates lighting information and geometric information simultaneously by combining photometric and geometric cues. In this method, lighting information is obtained by observing a reference object, and camera parameters are computed by combining photometric and geometric information, namely the shading and the image coordinates of feature points. Since real scenes generally contain many light sources with varied distributions, we place a geodesic dome around the 3D scene and assume that the light sources are distributed on this dome. The distribution of light sources is then estimated and used for recovering camera motion from photometric and geometric information. By using both kinds of information, camera calibration can be achieved from a smaller number of feature points.

2 ESTIMATION OF LIGHT SOURCE POSITIONS

We first consider the estimation of light source posi-

tions from images. In this paper, we consider a scene,

where a known object with Lambertian surface exists

with other unknown objects, and they are illuminated

168

Oida M., Sakaue F. and Sato J..

SIMULTANEOUS ESTIMATION OF LIGHT SOURCES POSITIONS AND CAMERA ROTATION.

DOI: 10.5220/0003374901680174

In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP-2011), pages 168-174

ISBN: 978-989-8425-47-8

Copyright

c

2011 SCITEPRESS (Science and Technology Publications, Lda.)

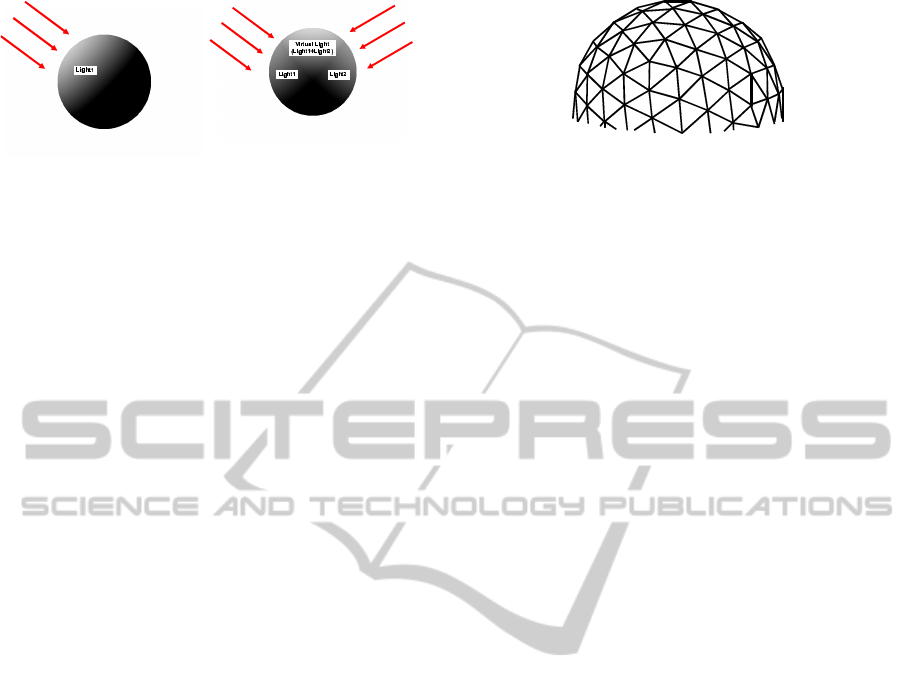

(a) under single light source

(b) under multiple light sources

Figure 1: Image intensities under (a) single light source and

(b)multiple light sources.

by infinite light sources. Suppose a point X on the

known object is projected to a point m in the image.

Then, the intensity I of the image point m is deter-

mined by a surface normal n at point X on the object

and a light source direction s as follows:

I = max(Eρn

⊤

s, 0), (1)

where $\rho$ is the albedo of the surface and $E$ is the power of the light source. If there is a single light source in the scene, as in Fig.1 (a), we can estimate the light source direction from an image by using Eq. (1). However, if there are multiple light sources, as in Fig.1 (b), estimating the light source positions is not easy, since some areas are illuminated by several light sources and others are not. The number of light sources that illuminate a point on the surface varies with the surface normal at that point and with the distribution of the light sources. For such environments, we consider a method that estimates camera motion and light source distributions simultaneously.
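To make Eq. (1) concrete, the following minimal NumPy sketch renders the intensities of known surface points under one distant light. It is an illustration only: the function name `render_single_light` is hypothetical, and normals are assumed to be unit vectors stored row-wise.

```python
import numpy as np

# Hypothetical helper (illustrative sketch, not the authors' code).
def render_single_light(normals, albedo, s, E):
    """Eq.(1): I = max(E * rho * n^T s, 0) for every surface point.

    normals: (M, 3) unit surface normals n_i
    albedo:  (M,) albedos rho_i
    s:       (3,) unit light direction
    E:       scalar light power
    """
    shading = normals @ s                          # n_i^T s for all points
    return np.maximum(E * albedo * shading, 0.0)   # clip attached shadows to 0

n = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 0.0], [0.0, 0.0, -1.0]])
I = render_single_light(n, np.ones(3), np.array([0.0, 0.0, 1.0]), 2.0)
# I -> [2., 0., 0.]: the third point faces away from the light and is clipped
```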

In this paper, we consider two cases separately: first, the case where the scene contains only a single light source, and then the case where it contains multiple light sources.

2.1 Light Source Estimation under Single Light

We first consider the case where there is only a single light source in the scene. Consider an object with a Lambertian surface whose shape (including surface normals and albedo) is known. If the distance from the light source to the object is sufficiently large, the intensity of a point on this object can be described by Eq. (1). Suppose we have $M$ points $X_i$ $(i = 1, \dots, M)$ on the object surface, with surface normals $n_i$ and albedos $\rho_i$, respectively. Then the following matrix $W$, which represents the surface geometry, is known:

$$W = \begin{bmatrix} \rho_1 n_1 & \cdots & \rho_M n_M \end{bmatrix} \in \mathbb{R}^{3 \times M} \tag{2}$$

Let $I$ be the vector of image intensities $I_i$ $(i = 1, \dots, M)$ at these points:

$$I = \begin{bmatrix} I_1 & \cdots & I_M \end{bmatrix}^\top \tag{3}$$

Figure 2: Sampling of light source directions using geodesic dome.

Then the intensity $I$ can be described by using the light power $E$, the light source direction $s$ and the surface geometry $W$ as follows:

$$I = E\, W^\top s \tag{4}$$

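Note that $s^\top s = 1$. Using the least-squares method, $Es$ can be estimated from $I$ and $W$ as follows:

$$Es = (W^{+})^\top I \tag{5}$$

where $W^{+} = W^\top (W W^\top)^{-1}$ is the pseudo-inverse of $W$. Since $s$ is a unit vector, the light power and direction follow as $E = \lVert Es \rVert$ and $s = Es / \lVert Es \rVert$. The vector $Es$ has three components, so the light source direction can be estimated from three or more intensities, provided that the corresponding surface normals are not coplanar.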
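The least-squares step of Eq. (5) can be sketched in NumPy as follows. This is a minimal illustration that assumes every supplied pixel is lit, so that the linear model of Eq. (4) holds. Rather than forming $(WW^\top)^{-1}$ explicitly, it solves $W^\top b = I$ for $b = Es$ with `np.linalg.lstsq`, which yields the same least-squares solution when $W$ has full row rank. The function name is hypothetical.

```python
import numpy as np

# Hypothetical helper (illustrative sketch, not the authors' code).
def estimate_light_ls(normals, albedo, I):
    """Least-squares estimate of b = E s from Eq.(4), I = W^T (E s).

    W has columns rho_i n_i (shape 3 x M). Assumes every pixel is lit,
    i.e. n_i^T s > 0, so the clipping in Eq.(1) is inactive.
    """
    W = (albedo[:, None] * normals).T            # 3 x M, columns rho_i n_i
    b, *_ = np.linalg.lstsq(W.T, I, rcond=None)  # equals (W W^T)^{-1} W I
    E = np.linalg.norm(b)                        # s^T s = 1, so E = ||Es||
    return E, b / E                              # power and unit direction
```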

Note that if the intensity predicted from a surface normal and the light source direction is negative, i.e. $n_i^\top s < 0$, the pixel lies in an attached shadow: its observed intensity is clipped to zero by the max in Eq. (1), so it does not satisfy the linear model of Eq. (4). Such pixels must therefore be eliminated as outliers, for example by robust estimation methods such as RANSAC (Hartley and Zisserman, 2000).
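A hedged sketch of the robust variant follows. As an assumption of this sketch (not stated in the paper), zero-intensity pixels are discarded up front. The clipping in Eq. (1) hides their value of $n_i^\top s$, and if they were kept, a degenerate hypothesis $Es = 0$ would match all of them. RANSAC then rejects the remaining pixels that disagree with the linear model, such as highlights or cast shadows. The names, iteration count and threshold are illustrative; with real images the threshold would be set to the expected intensity noise.

```python
import numpy as np

# Hypothetical helper (illustrative sketch, not the authors' code).
def estimate_light_ransac(normals, albedo, I, n_iter=200, thresh=1e-6, seed=0):
    """RANSAC over the linear model I_i = rho_i n_i^T b, with b = E s.

    Returns (E, s, inlier_mask), where inlier_mask indexes all input pixels.
    """
    rng = np.random.default_rng(seed)
    lit = np.flatnonzero(I > 0)                  # zeros carry no linear constraint
    A = albedo[lit][:, None] * normals[lit]      # rows rho_i n_i^T
    y = I[lit]
    best = np.zeros(len(lit), dtype=bool)
    for _ in range(n_iter):
        idx = rng.choice(len(lit), size=3, replace=False)   # minimal sample
        try:
            b = np.linalg.solve(A[idx], y[idx])
        except np.linalg.LinAlgError:
            continue                                        # degenerate sample
        inliers = np.abs(A @ b - y) < thresh
        if inliers.sum() > best.sum():
            best = inliers
    b, *_ = np.linalg.lstsq(A[best], y[best], rcond=None)   # refit on inliers
    E = np.linalg.norm(b)
    mask = np.zeros(len(I), dtype=bool)
    mask[lit[best]] = True
    return E, b / E, mask
```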

2.2 Light Source Estimation under Multiple Lights

We next consider the case where there are multiple light sources in the scene. In this case, the intensity $I_i$ of the $i$-th pixel can be described by using the surface normal $n_i$ and the light source directions $s_j$ as follows:

$$I_i = \sum_j \max(E_j \rho_i\, n_i^\top s_j,\ 0) \tag{6}$$

where $\rho_i$ denotes the albedo of the $i$-th point and $E_j$ denotes the power of the $j$-th light source. In this case, we cannot estimate the light source positions linearly from this equation, since it contains the non-linear mapping $\max(\cdot, 0)$.
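The following sketch evaluates Eq. (6) directly and shows why it is non-linear in the light sources. A surface point lit by two opposite lights has a positive intensity even though the combined vector $\sum_j E_j s_j$ is zero, so no single linear model $\rho_i n_i^\top b$ can reproduce it. The function name is hypothetical.

```python
import numpy as np

# Hypothetical helper (illustrative sketch, not the authors' code).
def render_multi_light(normals, albedo, dirs, powers):
    """Eq.(6): I_i = sum_j max(E_j * rho_i * n_i^T s_j, 0).

    normals: (M, 3) unit n_i, albedo: (M,) rho_i,
    dirs: (J, 3) unit s_j, powers: (J,) E_j.
    """
    cos = normals @ dirs.T                              # (M, J): n_i^T s_j
    per_light = powers[None, :] * albedo[:, None] * cos
    return np.maximum(per_light, 0.0).sum(axis=1)       # clip each light, then sum

# Two opposite lights of equal power on a normal facing one of them.
n = np.array([[1.0, 0.0, 0.0]])
dirs = np.array([[1.0, 0.0, 0.0], [-1.0, 0.0, 0.0]])
I = render_multi_light(n, np.ones(1), dirs, np.ones(2))  # -> [1.]
b = (np.ones(2)[:, None] * dirs).sum(axis=0)            # sum_j E_j s_j = 0
linear = n @ b                                          # -> [0.], not [1.]
```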

To estimate light source distributions under multiple lights, Sato et al. (Sato et al., 1999b) represented the light source distribution by using a geodesic dome, as shown in Fig.2. In this model, a light source distribution is represented by the light source powers $E_k$ $(k = 1, \dots, N)$ in $N$ light source directions $s_k$ $(k = 1, \dots, N)$. The image intensity $I_i$ of the $i$-th point can then be described as follows:

$$I_i \approx \sum_{k=1}^{N} E_k \rho_i\, v(i,k)\, n_i^\top s_k, \tag{7}$$

where $v(i,k)$ is a visibility function that takes the value 1 if the $i$-th pixel is illuminated from the $k$-th light source direction and 0 otherwise. Since the directions $s_k$ $(k = 1, \dots, N)$ are predefined on the geodesic dome, estimating the light source distribution amounts to estimating $E_k$ $(k = 1, \dots, N)$.
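The geodesic dome of Fig.2 can be generated, for example, by repeatedly subdividing an icosahedron and projecting the new vertices onto the unit sphere. The sketch below shows one common construction and is an assumption, since the paper does not specify its own. Plain subdivision yields 12, 42, 162, ... directions, not the 18, 66, 102 and 146 directions used in Section 4. Function names are hypothetical.

```python
import numpy as np

# Hypothetical helpers (illustrative sketch, not the authors' code).
def icosahedron():
    """Unit vertices and triangular faces of a regular icosahedron."""
    t = (1.0 + 5.0 ** 0.5) / 2.0
    v = np.array([(-1, t, 0), (1, t, 0), (-1, -t, 0), (1, -t, 0),
                  (0, -1, t), (0, 1, t), (0, -1, -t), (0, 1, -t),
                  (t, 0, -1), (t, 0, 1), (-t, 0, -1), (-t, 0, 1)], dtype=float)
    v /= np.linalg.norm(v, axis=1, keepdims=True)
    f = [(0, 11, 5), (0, 5, 1), (0, 1, 7), (0, 7, 10), (0, 10, 11),
         (1, 5, 9), (5, 11, 4), (11, 10, 2), (10, 7, 6), (7, 1, 8),
         (3, 9, 4), (3, 4, 2), (3, 2, 6), (3, 6, 8), (3, 8, 9),
         (4, 9, 5), (2, 4, 11), (6, 2, 10), (8, 6, 7), (9, 8, 1)]
    return v, f

def subdivide(v, faces):
    """Split every triangle into 4 and push new vertices onto the unit sphere."""
    verts = list(v)
    cache = {}                                   # edge (i, j) -> midpoint index

    def midpoint(i, j):
        key = (min(i, j), max(i, j))
        if key not in cache:
            m = verts[i] + verts[j]
            verts.append(m / np.linalg.norm(m))
            cache[key] = len(verts) - 1
        return cache[key]

    new_faces = []
    for a, b, c in faces:
        ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
        new_faces += [(a, ab, ca), (b, bc, ab), (c, ca, bc), (ab, bc, ca)]
    return np.array(verts), new_faces

def geodesic_directions(level):
    """Candidate light directions s_k: icosahedron subdivided `level` times."""
    v, f = icosahedron()
    for _ in range(level):
        v, f = subdivide(v, f)
    return v
```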

In our case, $v(i,k)$ is known because the object shape is known, so the $E_k$ are the only unknowns in Eq. (7). We can therefore estimate $E_k$ from $I$ by solving the following linear equations:

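$$I = \begin{bmatrix} \rho_1 v(1,1)\, n_1^\top s_1 & \cdots & \rho_1 v(1,N)\, n_1^\top s_N \\ \vdots & \ddots & \vdots \\ \rho_M v(M,1)\, n_M^\top s_1 & \cdots & \rho_M v(M,N)\, n_M^\top s_N \end{bmatrix} \begin{bmatrix} E_1 \\ \vdots \\ E_N \end{bmatrix} \tag{8}$$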

Thus, the light source distribution on the geodesic dome can be estimated linearly from the image. Although the estimated $E_k$ may not be identical to the real light source environment (different light distributions can produce the same image of a Lambertian object), the estimated distribution can be used to generate images identical to the input images.
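A minimal NumPy sketch of the linear estimation in Eq. (8) follows. It assumes a convex reference object, such as the sphere used later, so that $v(i,k)$ reduces to the attached-shadow test $n_i^\top s_k > 0$. For non-convex objects, cast shadows would also have to be included in $v(i,k)$. Plain least squares is used, so negative $E_k$ are not excluded; a non-negative solver could be substituted. Names are hypothetical.

```python
import numpy as np

# Hypothetical helpers (illustrative sketch, not the authors' code).
def light_matrix(normals, albedo, dirs):
    """Coefficient matrix of Eq.(8): entry (i, k) = rho_i v(i,k) n_i^T s_k.

    Assumes a convex object, so v(i, k) = 1 exactly when n_i^T s_k > 0.
    """
    cos = normals @ dirs.T                     # (M, N): n_i^T s_k
    v = (cos > 0).astype(float)                # visibility v(i, k)
    return albedo[:, None] * v * cos

def estimate_light_distribution(normals, albedo, dirs, I):
    """Least-squares estimate of E_1..E_N from Eq.(8)."""
    A = light_matrix(normals, albedo, dirs)
    E, *_ = np.linalg.lstsq(A, I, rcond=None)
    return E
```

Consistent with the remark above, the recovered $E_k$ need not equal the true powers. For noise-free input, what the sketch does guarantee is that the intensities reproduced from the estimate match the input.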

3 SIMULTANEOUS ESTIMATION OF LIGHT SOURCES AND CAMERA MOTIONS

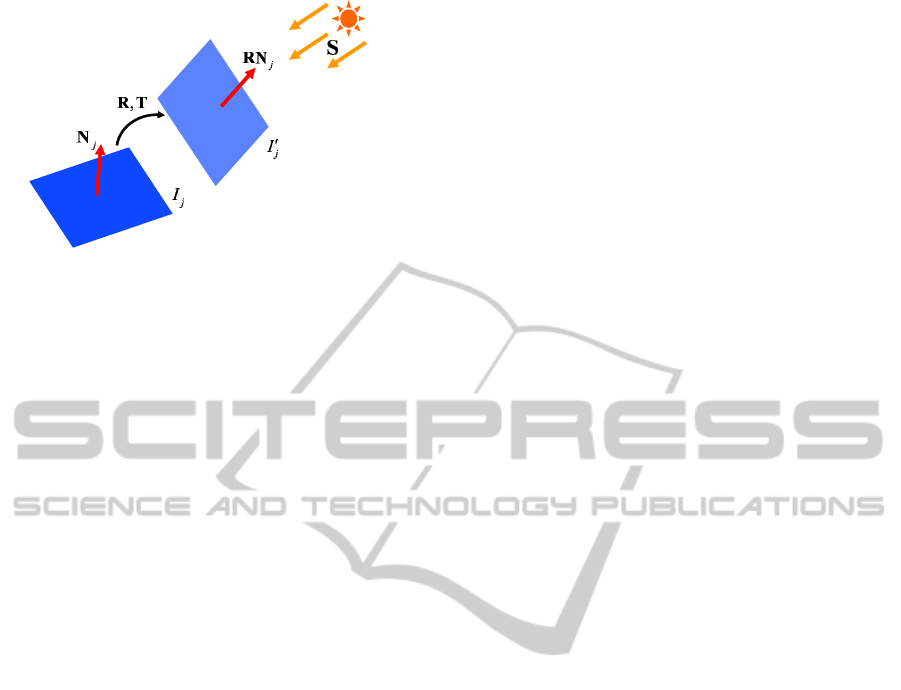

In this section, we consider a method for estimating camera motion and the lighting environment simultaneously. Consider a camera that moves in the scene and takes images under light sources attached to the camera. This is equivalent to the case where objects move in front of a fixed camera and fixed lights, as shown in Fig.3. We consider the estimation of camera motion in such cases.

3.1 Estimation of Camera Position using Geometric Information

In general, camera motion is estimated from geometric information such as markers in the scene. Let a 3D point $X$ in the scene be projected to a point $m$ in the camera image as follows:

$$\lambda \tilde{m} = A \begin{bmatrix} R & T \end{bmatrix} \tilde{X}, \tag{9}$$

where $\tilde{\cdot}$ denotes homogeneous coordinates, $\lambda$ is a scale factor, and $A$, $R$ and $T$ denote the intrinsic parameters, the rotation and the translation of the camera.

Figure 3: Camera motion and scene motion.

If the intrinsic parameters $A$ and the object shape $X$ are known, Eq. (9) has 6 unknowns (3 for rotation and 3 for translation). Each image point $m$ provides 2 constraints, so the extrinsic parameters $R$ and $T$ can be calibrated from the projections of 3 known points.
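For reference, the projection in Eq. (9), which also supplies the reprojection term used later, can be sketched in a few lines of NumPy. The function name `project` is hypothetical, and the intrinsics in the example are arbitrary illustrative values.

```python
import numpy as np

# Hypothetical helper (illustrative sketch, not the authors' code).
def project(A, R, T, X):
    """Eq.(9): lambda * m~ = A [R T] X~, returned as pixel coordinates.

    A: (3, 3) intrinsics, R: (3, 3) rotation, T: (3,) translation,
    X: (P, 3) known 3D points. Returns m: (P, 2).
    """
    Xh = np.hstack([X, np.ones((len(X), 1))])   # homogeneous X~, shape (P, 4)
    P = A @ np.hstack([R, T.reshape(3, 1)])     # 3 x 4 projection matrix
    mh = Xh @ P.T                               # each row is lambda * m~
    return mh[:, :2] / mh[:, 2:3]               # divide out the scale lambda

# Each known point gives 2 equations; with 6 unknowns in (R, T),
# at least 3 points are needed when no other cues are used.
A = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
m = project(A, np.eye(3), np.array([0.0, 0.0, 5.0]),
            np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]))
# m -> [[320., 240.], [480., 240.]]
```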

3.2 Estimation of Camera Rotation under Single Light Source

As shown in the previous section, 3 projected points are generally needed to calibrate the extrinsic parameters. However, the camera parameters can be calibrated from fewer feature points if lighting information is also used.

Suppose a light source, such as a projector, is fixed to the camera, and they move together in the 3D scene. The intensity of a scene object in the camera image then changes with the camera motion, so the change in intensity carries information about the camera motion, as shown in Fig.4. Therefore, by using intensity information, the extrinsic parameters of the camera can be estimated from fewer feature points than usual.

Now, let us consider a method for estimating camera motion from intensity information. We first consider a scene containing a single light source, and two images taken by a camera at two different positions under the same lighting condition. In this case, the intensities $I_j$ and $I'_j$ of the $j$-th pixel in the two camera images can be described as follows:

$$I_j = E\rho_j\, n_j^\top s \tag{10}$$

$$I'_j = E\rho_j\, n_j^\top s' = E\rho_j\, n_j^\top R^\top s \tag{11}$$

This equation contains no translation $T$, since the light source is assumed to be infinitely distant; it therefore constrains only the rotation $R$. Since the lighting directions $s$ and $s'$ of the two images can be estimated by using Eq. (5), the following constraint on the rotation $R$ is obtained:

$$s' = R^\top s \tag{12}$$

Figure 4: Change in intensity caused by object/camera motion.

From this equation, the rotation about $s$ cannot be estimated, since the intensity does not change when the camera rotates about $s$. Geometric information must therefore be combined with it for a complete estimation of the extrinsic parameters. The intensity constraint provides 2 constraints on the extrinsic parameters, so a complete estimation of the 6 extrinsic parameters requires only 2 points in the scene, which provide the 4 additional constraints.
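The one-degree-of-freedom ambiguity of Eq. (12) can be checked numerically. The sketch below builds one rotation $R_0$ that satisfies $s' = R^\top s$ and composes it with arbitrary rotations about $s'$. Every member of the resulting family satisfies Eq. (12) equally well, which is why the two feature points are needed. The function names are hypothetical, and the antiparallel case $s' = -s$ is not handled.

```python
import numpy as np

# Hypothetical helpers (illustrative sketch, not the authors' code).
def rodrigues(axis, angle):
    """Rotation by `angle` radians about `axis` (Rodrigues' formula)."""
    a = axis / np.linalg.norm(axis)
    K = np.array([[0.0, -a[2], a[1]], [a[2], 0.0, -a[0]], [-a[1], a[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)

def rotations_from_light(s, s_prime, thetas):
    """Rotations R with s' = R^T s (Eq.(12)), one per free angle theta.

    R0 is the smallest rotation taking s' to s. Composing it with any
    rotation about s' keeps R s' = s, the 1-DOF ambiguity of Eq.(12).
    """
    axis = np.cross(s_prime, s)
    angle = np.arccos(np.clip(s_prime @ s, -1.0, 1.0))
    # Parallel case only when axis vanishes; s' = -s is not handled here.
    R0 = rodrigues(axis, angle) if np.linalg.norm(axis) > 1e-12 else np.eye(3)
    return [R0 @ rodrigues(s_prime, th) for th in thetas]

s = np.array([0.0, 0.0, 1.0])
s_prime = np.array([0.6, 0.0, 0.8])
Rs = rotations_from_light(s, s_prime, np.linspace(0.0, 2.0 * np.pi, 7))
# Every R in Rs satisfies R.T @ s == s_prime, yet the matrices differ.
```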

Furthermore, the light source direction and the extrinsic parameters can be refined jointly by minimizing the following cost function:

$$e = \sum_{l} \sum_{j} \left\lVert \mathcal{N}\!\left(A \begin{bmatrix} R_l & T_l \end{bmatrix} \tilde{X}_j\right) - m_{j,l} \right\rVert^2 + \sum_{l} \sum_{i} \left( I_{i,l} - E\rho_i\, n_i^\top R_l^\top s \right)^2, \tag{13}$$

where $\mathcal{N}(\cdot)$ denotes the normalization of homogeneous coordinates, $m_{j,l}$ is the observed image point of $X_j$ in the $l$-th image, and $I_{i,l}$ denotes the intensity of the $i$-th pixel in the $l$-th image. Since this cost function is non-linear, we minimize it with the Newton-Raphson method.
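The cost in Eq. (13) can be made concrete with a small synthetic sketch. It assumes two views, with the reference view fixed at $R_1 = I$, $T_1 = 0$ (so its reprojection term is constant and omitted) and two known feature points observed in the second view. $R_2$ is parameterized by an axis-angle vector, and $b = Es$ is optimized jointly with the pose. The paper uses Newton-Raphson; this sketch substitutes plain Gauss-Newton with a finite-difference Jacobian. All function names are hypothetical, and the pixel and intensity residuals are left unweighted, which is only reasonable for noise-free data.

```python
import numpy as np

# Hypothetical helpers (illustrative sketch, not the authors' code).
def rodrigues(r):
    """Rotation matrix from an axis-angle vector r (angle = ||r||)."""
    th = np.linalg.norm(r)
    if th < 1e-12:
        return np.eye(3)
    a = r / th
    K = np.array([[0.0, -a[2], a[1]], [a[2], 0.0, -a[0]], [-a[1], a[0], 0.0]])
    return np.eye(3) + np.sin(th) * K + (1.0 - np.cos(th)) * (K @ K)

def residuals(p, A, X, m2, nrho, I1, I2):
    """Residuals of Eq.(13) for two views.

    View 1 is the reference (R_1 = I, T_1 = 0), so its reprojection term is
    constant and omitted. p = [r2 (3), T2 (3), b = E s (3)], nrho has rows
    rho_i n_i^T, and X holds the known feature points observed as m2 in view 2.
    """
    R2, T2, b = rodrigues(p[:3]), p[3:6], p[6:9]
    mh = (X @ R2.T + T2) @ A.T                     # Eq.(9) for view 2
    geo = (mh[:, :2] / mh[:, 2:3] - m2).ravel()    # reprojection errors
    pho1 = I1 - nrho @ b                           # Eq.(10): E rho n^T s
    pho2 = I2 - nrho @ (R2.T @ b)                  # Eq.(11): E rho n^T R^T s
    return np.concatenate([geo, pho1, pho2])

def gauss_newton(f, p, iters=20, h=1e-6):
    """Plain Gauss-Newton with a central-difference Jacobian."""
    p = np.asarray(p, dtype=float)
    for _ in range(iters):
        r = f(p)
        J = np.empty((len(r), len(p)))
        for j in range(len(p)):
            d = np.zeros_like(p)
            d[j] = h
            J[:, j] = (f(p + d) - f(p - d)) / (2.0 * h)
        step, *_ = np.linalg.lstsq(J, -r, rcond=None)
        p = p + step
    return p
```

With two feature points the reprojection term contributes 4 constraints and the photometric term in view 2 contributes 2 more on the rotation, matching the count given above. A practical implementation would also weight the two terms by their noise levels.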

3.3 Estimation of Camera Rotation under Multiple Light Sources

We next consider scenes containing multiple light sources. In our method, we observe a Lambertian sphere to estimate the camera and light source information. In general, the appearance of a sphere in the image does not change with camera motion, except for its position, size and illumination.

Given multiple images taken under the same light sources, the intensity $I_{i,l}$ of the $i$-th pixel in the $l$-th image can be approximated as follows:

$$I_{i,l} \approx \sum_{k=1}^{N} E_k \rho_i\, v(i,k)\, n_i^\top R_l^{-1} s_k, \tag{14}$$

where $R_l$ is the rotation of the $l$-th camera. By estimating $E_k$ and $R_l$ from this equation, the lighting environment and the camera motion are estimated simultaneously.

To estimate these quantities, we use an iterative method. First, the light source environment is estimated from the 1st image by using Eq. (8). Next, the rotation of the $l$-th camera $(l = 2, \dots, L)$ is estimated by minimizing the following cost function:

$$e_l = \sum_{i} \left( I_{i,l} - \sum_{k=1}^{N} E_k \rho_i\, v(i,k)\, n_i^\top R_l^{-1} s_k \right)^2 \tag{15}$$

This minimization requires a non-linear method; in this paper, we used the Gauss-Newton method. Using the estimated $R_l$, $E_k$ is then updated by the following linear estimation:

$$I = \begin{bmatrix} M_1 \\ \vdots \\ M_L \end{bmatrix} \begin{bmatrix} E_1 \\ \vdots \\ E_N \end{bmatrix} \tag{16}$$

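where

$$M_l = \begin{bmatrix} \rho_1 v(1,1)\, n_1^\top R_l^{-1} s_1 & \cdots & \rho_1 v(1,N)\, n_1^\top R_l^{-1} s_N \\ \vdots & \ddots & \vdots \\ \rho_M v(M,1)\, n_M^\top R_l^{-1} s_1 & \cdots & \rho_M v(M,N)\, n_M^\top R_l^{-1} s_N \end{bmatrix} \tag{17}$$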

and $I$ is the vector obtained by stacking the intensity vectors $I_l$ of the images $(l = 1, \dots, L)$:

$$I = \begin{bmatrix} I_1^\top & \cdots & I_L^\top \end{bmatrix}^\top \tag{18}$$

The estimation of $E_k$ and $R_l$ is iterated until convergence, yielding the camera motions $R_l$ and the light source environment $E_k$ simultaneously. Note that this method can estimate the light source environment more accurately than the existing single-image method, since multiple images provide more information about the light sources.
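The alternating scheme of Eqs. (14)-(18) can be sketched as follows. This is a minimal illustration under several assumptions. The reference object is taken to be convex, so $v(i,k)$ is evaluated as $[n_i^\top R_l^{-1} s_k > 0]$ with the rotated direction; this is our reading of Eq. (14). Image 1 is the reference with $R_1 = I$, rotations are parameterized as axis-angle vectors, and the rotation step is Gauss-Newton with a finite-difference Jacobian that keeps a step only if it lowers the cost. The $E_k$ update is unconstrained least squares. All function names are hypothetical.

```python
import numpy as np

# Hypothetical helpers (illustrative sketch, not the authors' code).
def rodrigues(r):
    """Rotation matrix from an axis-angle vector r (angle = ||r||)."""
    th = np.linalg.norm(r)
    if th < 1e-12:
        return np.eye(3)
    a = r / th
    K = np.array([[0.0, -a[2], a[1]], [a[2], 0.0, -a[0]], [-a[1], a[0], 0.0]])
    return np.eye(3) + np.sin(th) * K + (1.0 - np.cos(th)) * (K @ K)

def block(normals, albedo, dirs, R):
    """M_l of Eq.(17): entry (i, k) = rho_i v(i,k) n_i^T R^{-1} s_k.

    Convex object assumed, so v * cos equals max(cos, 0); R^{-1} = R^T.
    """
    cos = normals @ R.T @ dirs.T                   # (M, N): n_i^T R^T s_k
    return albedo[:, None] * np.maximum(cos, 0.0)

def estimate_E(normals, albedo, dirs, Rs, Is):
    """Eqs.(16)-(18): stack M_l and I_l over the images, solve for E."""
    Ms = np.vstack([block(normals, albedo, dirs, R) for R in Rs])
    E, *_ = np.linalg.lstsq(Ms, np.concatenate(Is), rcond=None)
    return E

def estimate_R(normals, albedo, dirs, E, I, r, iters=15, h=1e-6):
    """Eq.(15) with E fixed: Gauss-Newton on the axis-angle vector r."""
    f = lambda q: I - block(normals, albedo, dirs, rodrigues(q)) @ E
    for _ in range(iters):
        res = f(r)
        J = np.column_stack([(f(r + d) - f(r - d)) / (2.0 * h)
                             for d in h * np.eye(3)])
        step, *_ = np.linalg.lstsq(J, -res, rcond=None)
        if np.sum(f(r + step) ** 2) >= np.sum(res ** 2):
            break                                  # keep only improving steps
        r = r + step
    return r

def alternate(normals, albedo, dirs, Is, r_init, rounds=5):
    """Image 1 is the reference (R_1 = I); r_init holds guesses for images 2..L."""
    rs = [np.zeros(3)] + [np.asarray(r, dtype=float) for r in r_init]
    E = estimate_E(normals, albedo, dirs, [np.eye(3)], Is[:1])   # Eq.(8)
    for _ in range(rounds):
        for l in range(1, len(Is)):
            rs[l] = estimate_R(normals, albedo, dirs, E, Is[l], rs[l])
        E = estimate_E(normals, albedo, dirs, [rodrigues(r) for r in rs], Is)
    return rs, E
```

Because a Gauss-Newton rotation step is used, the scheme relies on a reasonable initial guess for each $R_l$; the sketch makes no claim about convergence from arbitrary starts.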

4 EXPERIMENTS

In this section, we present experimental results obtained with the proposed method.

4.1 Experiments under Single Light Source

We first show experimental results under a single light source. The experimental environment is shown in Fig.5. In this experiment, we used a dodecahedron with a Lambertian surface, shown in Fig.6. The distance from the target to the light source is sufficiently large. We moved the target object instead of the camera, while the camera and the light source were fixed. Thus, both the relative pose between the camera and the target object and the relative pose between the object and the light source changed. We therefore used our method to estimate the motion of the target object, instead of the camera motion, from the images.

Figure 5: Experimental environment.

(a) Image 1 (b) Image 2

Figure 6: Input images.

The images were taken before and after the object motion and are shown in Fig.6. The vertices of the dodecahedron in the input images were used as feature points, and their image positions were extracted manually. We estimated the object motion and the light source direction simultaneously by using the coordinates of the feature points and the image intensity of each face of the dodecahedron.

The estimated light source direction and object rotation were evaluated by reproducing the second image from the first image using the estimated light source direction and rotation, and comparing the intensities of the reproduced image with those of the real image. The RMS intensity error was 8.1, which indicates that the proposed method can estimate light source directions and rotations accurately.

The estimated object motion was also evaluated by reprojecting the feature points with the estimated motion and computing the errors of the reprojected positions. The average reprojection error was 6.2 pixels, which indicates that our method can estimate the relative motion between camera and object, as well as the light source direction, in single light source environments.

Figure 7: Input images taken from different positions.

4.2 Experiments under Multiple Light Sources

We next show experimental results in multiple light source environments. In this experiment, a Lambertian sphere was used to estimate the distribution of light sources and the rotational motion of the camera simultaneously.

The sphere was illuminated by 2 lights, and images were taken by a camera placed at two different positions with different orientations. These images are shown in Fig.7. In this experiment, the target object was fixed and the camera was moved.

Using the left and right images in Fig.7, the light source distribution and the rotation $R$ were estimated simultaneously by the proposed method. Note that the position and size of the sphere differ between the two images. We therefore computed the position and size of the sphere in each image by using the Hough transform and normalized them, so that corresponding points on the sphere are at the same position in the two images.
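Once the circle of the sphere has been located in an image (for example by the Hough transform mentioned above, which is not implemented here), each pixel inside the circle can be assigned a surface normal. The sketch below assumes orthographic projection and keeps the image axes as given (y pointing down), so the normals are expressed in image-aligned coordinates. Sampling the same normalized positions in every image then makes pixel $i$ correspond to the same normal $n_i$ in all images. The function name is hypothetical.

```python
import numpy as np

# Hypothetical helper (illustrative sketch, not the authors' code).
def sphere_normals(u, v, cx, cy, radius):
    """Normals of a sphere imaged as the circle (cx, cy, radius).

    Orthographic projection assumed; +z points towards the viewer and the
    image axes are used as-is. Pixels outside the circle are set to NaN.
    """
    x = (np.asarray(u, dtype=float) - cx) / radius
    y = (np.asarray(v, dtype=float) - cy) / radius
    r2 = x ** 2 + y ** 2
    z = np.sqrt(np.clip(1.0 - r2, 0.0, None))
    n = np.stack([x, y, z], axis=-1)
    n[r2 > 1.0] = np.nan                          # outside the sphere's disc
    return n

u = np.array([100.0, 150.0, 200.0])
v = np.array([80.0, 80.0, 80.0])
n = sphere_normals(u, v, cx=100.0, cy=80.0, radius=50.0)
# n[0] -> [0, 0, 1] (centre), n[1] -> [1, 0, 0] (rim), n[2] -> NaN (outside)
```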

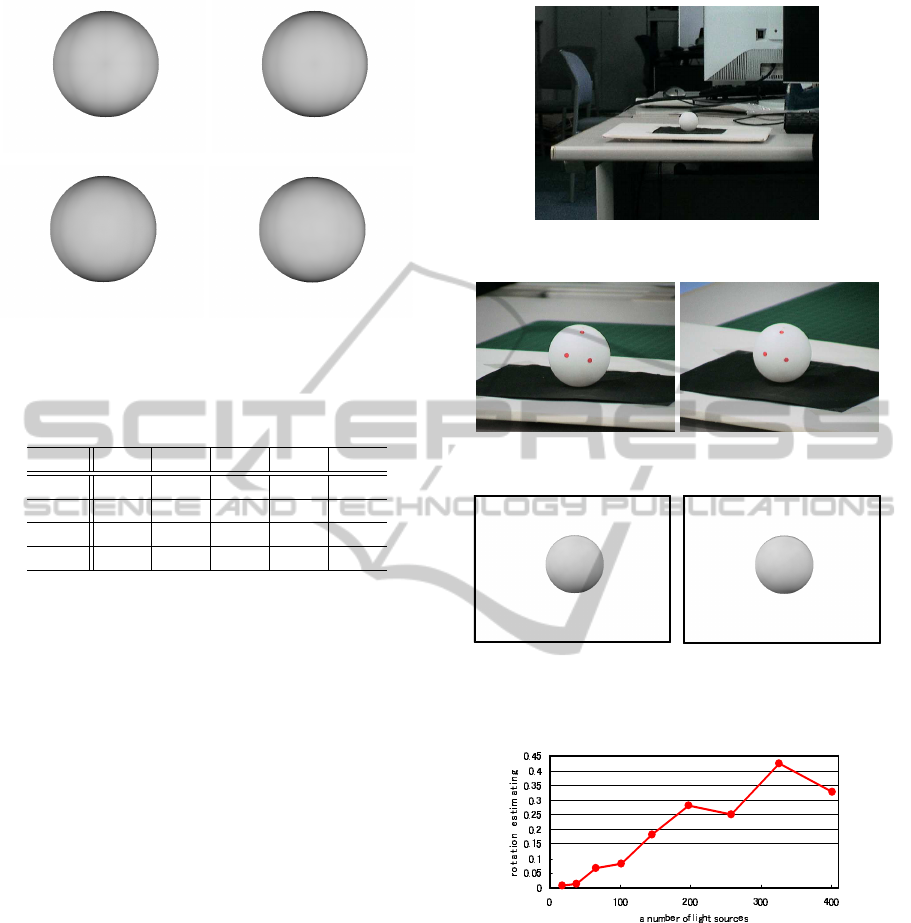

The accuracy of the recovered light source distribution and rotation was evaluated by reproducing the intensity images from them. To represent the light source distribution, we used 18, 66, 102 and 146 light source directions. The reproduced image for each case is shown in Fig.8.

As shown in this figure, the reproduced images are almost identical to the real input images in Fig.7, and the intensity error tends to decrease as the number of sampled lights increases (RMS 5.39 with 18 directions versus 3.73 with 146), although not monotonically (3.99 with 66 versus 4.35 with 102). These results indicate that the proposed method works very well for image reconstruction and can estimate the light source information properly. Table 1 shows the estimated rotations and their ground truth. The rotation estimates are considerably less accurate; for example, $\phi$ is estimated as 7.16 to 8.51 degrees against a ground truth of 16.6 degrees, so the proposed method needs further improvement to obtain better rotation accuracy.

We next show results in a natural lighting environment, shown in Fig.9. The images observed before and after the camera motion are shown in Fig.10. Markers on the sphere were used to obtain the ground-truth motion. The light source distribution and the camera rotation were estimated from these images, with the number of sampled lights fixed to 102. From the estimated light source distribution and rotation, we reproduced the images before and after the camera motion; the reproduced images are shown in Fig. 11. The RMS intensity errors of the reproduced images were 4.77 and 5.37, respectively, and the average error of the estimated rotation was 6.38 degrees. These results indicate that the proposed method reproduces the images well even in natural lighting environments, while the rotation error remains of the same order as in Table 1.

Figure 8 panels: 18 light sources (RMS: 5.39), 66 light sources (RMS: 3.99), 102 light sources (RMS: 4.35), 146 light sources (RMS: 3.73).

Figure 8: The number of light sources in the light source representation and reconstructed images with RMS errors.

Table 1: Estimated rotations and ground truth (GT) [degrees]. Columns 18 to 146 give the number of sampled light source directions.

| | GT | 18 | 66 | 102 | 146 |
|---|---|---|---|---|---|
| θ | 2.52 | 0.38 | 0.45 | 0.43 | 0.49 |
| φ | 16.6 | 8.12 | 7.16 | 8.51 | 7.23 |
| δ | 2.70 | 2.35 | 2.27 | 2.38 | 2.21 |
| error | - | 5.09 | 5.63 | 4.86 | 5.58 |

4.3 Accuracy Evaluation under Multiple Lights

In this section, we evaluate the accuracy of the proposed method under multiple light sources by using synthetic images. We first evaluate the relationship between the number of actual light sources in the scene and the estimation accuracy. In this evaluation, the number of actual light sources was varied, and the camera rotation was estimated by our method. Fig.12 shows the relationship between the number of actual lights and the errors in the estimated rotation.

As shown in this figure, although the accuracy degrades slightly as the number of actual lights increases, the proposed method still provides sufficient accuracy even with hundreds of lights.

We next evaluate the relationship between the

number of sampled lights and the accuracy of rota-

Figure 9: Experimental environment in a nutural room.

Figure 10: Input images taken under natural room.

RMS: 4.77

RMS: 5.37

Figure 11: Reconstructed image from estimated results and

RMS errors.

Figure 12: Relationship between a number of actual light

sources and errors in rotation estimation.

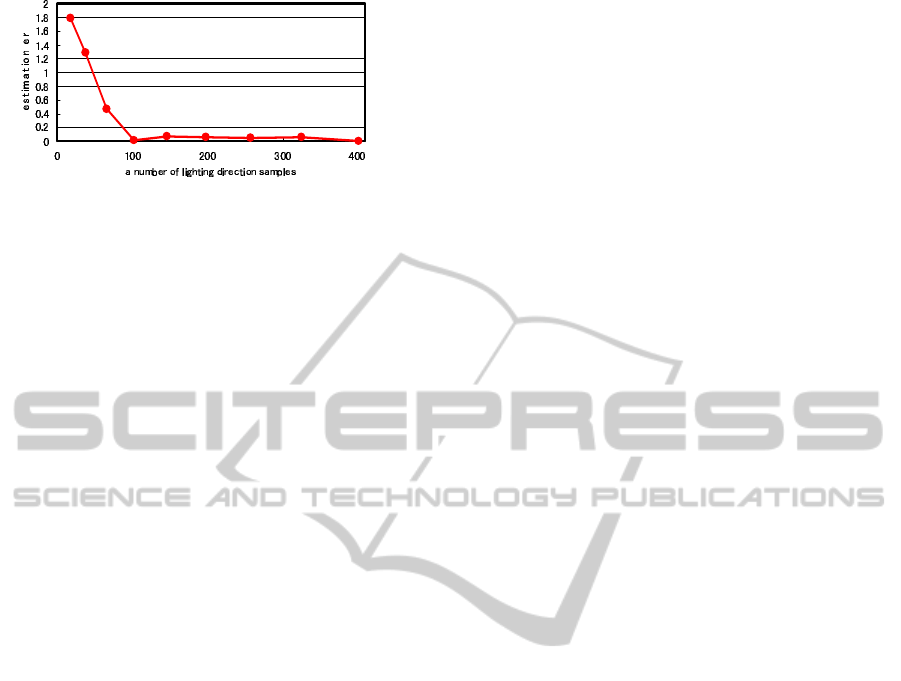

tion estimation. The number of sampled lights was

changed from 18 to 400. Figure.13 shows the re-

sults. Although the estimation errors are relatively

large when the number of sampled lights is small,

they are still acceptable, and they become small when

we use sufficient number of sampled lights. Com-

putational cost for the estimation does not become

extremely large even under hundreds of lights, since

our method consists of linear estimations. Thus, we

should use a large number of sampled lights for accu-

rate estimation.


Figure 13: Relationship between the number of sampled light sources and errors in rotation estimation.

5 CONCLUSIONS

In this paper, we proposed a method for estimating the light source distribution and camera motion simultaneously from photometric and geometric information. For this purpose, we proposed two methods: one for the case where a single light source exists in the scene, and the other for multiple light sources. Under a single light source, we estimated the light source direction and the camera rotation and translation from image intensities and the image coordinates of feature points. Under multiple light sources, we estimated the distribution of light sources and the camera rotations simultaneously from image intensities alone.

The experimental results indicate that the proposed methods can estimate lighting information and camera rotation simultaneously, even when there are many light sources, as in natural rooms. In future work, we will improve the accuracy of camera motion estimation.

REFERENCES

Faugeras, O. and Luong, Q.-T. (2001). The Geometry of Multiple Images. MIT Press.

Hartley, R. and Zisserman, A. (2000). Multiple View Geometry in Computer Vision. Cambridge University Press.

Milgram, P. and Kishino, A. F. (1994). Taxonomy of mixed reality visual displays. IEICE Transactions on Information Systems, E77-D(12):1321–1329.

Powell, M. W., Sarkar, S., and Goldgof, D. (2001). A simple strategy for calibrating the geometry of light sources. IEEE Transactions on Pattern Analysis and Machine Intelligence, 23:1022–1027.

Sato, I., Sato, Y., and Ikeuchi, K. (1999a). Acquiring a radiance distribution to superimpose virtual objects onto a real scene. IEEE Trans. Visualization and Computer Graphics, 5(1):1–12.

Sato, I., Sato, Y., and Ikeuchi, K. (1999b). Illumination distribution from shadows. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 99, pages 306–312.

Takai, T., Niinuma, K., and Matsuyama, T. (2003). 3D lighting environment sensing with reference spheres. Computer Vision and Image Media, 137(15):117–124.
