CONTENT-SENSITIVE SCREENING IN BLACK AND WHITE

Hua Li and David Mould

Carleton University, Ottawa, Canada

Keywords:

Screening, Contrast-aware Halftoning, Pattern assignment, Error Diffusion, Non-photorealistic rendering.

Abstract:

Traditional methods produce uniform screening that is often unsatisfactory, and additional tones are often added to improve the quality of tone and structure. In this paper, we aim to produce screening with diverse patterns and natural structure preservation while still using only one color of ink. We propose several extensions to contrast-aware halftoning, including new weight distributions and variations on the priority-based scheme. Patterns can be generated by excluding pixels in a purposeful way and by organizing different groups of input edges based on user interest. Segmented regions are assigned different patterns based on statistical measurements of the content of each region. Our method automatically and efficiently produces effective screening with considerable flexibility and without artifacts from segmentation or false edges.

1 INTRODUCTION

Screening in printing refers to a process of passing

ink through a perforated screen (or pattern) over a re-

gion. Existing halftoning methods such as dithering

methods (Knuth, 1987; Ostromoukhov et al., 1994;

Ostromoukhov and Hersch, 1995b; Buchanan, 1996;

Ulichney, 1998; Verevka and Buchanan, 1999; Very-

ovka and Buchanan, 2000) or hatching (Streit and

Buchanan, 1998; Yano and Yamaguchi, 2005) usu-

ally generate uniform screening simply based on im-

age tone without consideration of image contents.

Although a wide variety of screens would enrich the visual effects, the question remains: how can different screens automatically support halftoning while still preserving tone and structure? Similar questions have been asked since the beginning of screening (Ulichney, 1987). Artistic screening (Ostromoukhov and

Hersch, 1995a) presented interesting effects. How-

ever, the good tone transition relied on both extremely

large original images and low-pass filters without

structure concerns. Recently some researchers (Os-

tromoukhov and Hersch, 1999; Rosin and Lai, 2010;

Qu et al., 2008) abandoned solving this problem in bi-

nary images and instead introduced additional tones

or colors. However, it is still worthwhile to address the black and white version of the problem for applications such as monochrome printing. Another unfortunate aspect of recent methods is that image content preserved by segmentation or edge detection may exhibit serious artifacts or false edges.

Our motivation comes from recent structure-

aware halftoning (Pang et al., 2008; Chang et al.,

2009; Li and Mould, 2010) which produced excellent

structure details. In particular, contrast-aware halfton-

ing (Li and Mould, 2010) due to its priority-based

scheme has a lot of potential for our problem. In this

paper, we extend contrast-aware halftoning in the di-

rection of nonuniform screening with natural looking

structure details. We propose new weight distribu-

tions and introduce a multi-stage process for different

groups of textural edges. Our pattern assignment is

sensitive to the content of the image. Our contribu-

tion is an automatic method smoothly unifying differ-

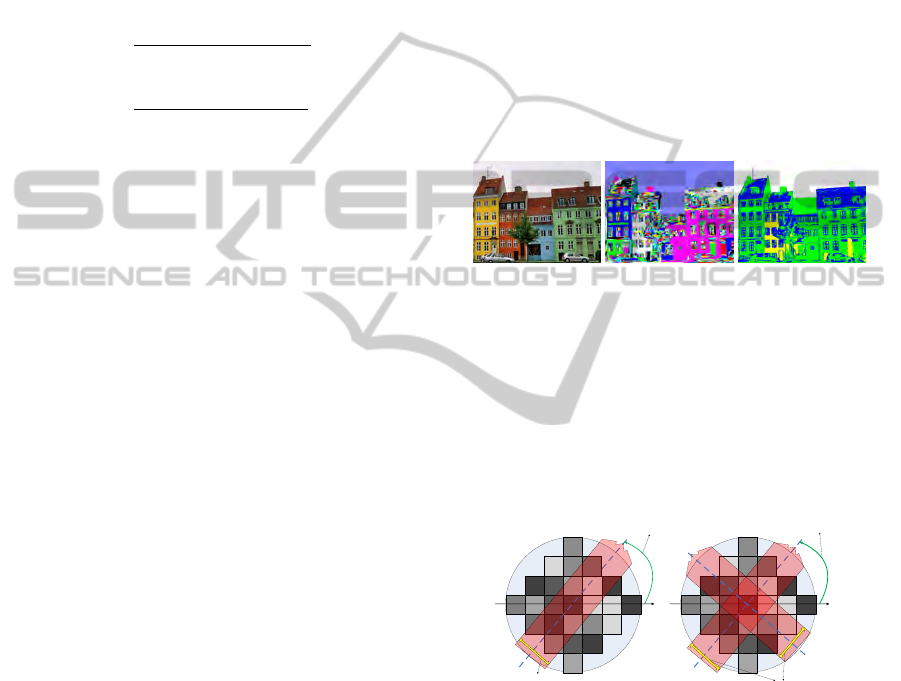

ent patterns with the image content. In Figure 1, our

tree in front of the building is clearer than the result

from the manga screening (Qu et al., 2008) and dif-

ferent patterns applied to the building’s wall provide

a rich viewing experience. Visually our result con-

tains no segmentation artifacts, which are obvious in

(a) despite the use of greyscale rather than a single

tone.

(a) Manga screening (b) Our screening

Figure 1: Building.

Li H. and Mould D. CONTENT-SENSITIVE SCREENING IN BLACK AND WHITE. DOI: 10.5220/0003357801660172. In Proceedings of the International Conference on Computer Graphics Theory and Applications (GRAPP-2011), pages 166-172. ISBN: 978-989-8425-45-4. Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

2 PREVIOUS WORK

Screening is a special kind of halftoning with some control over patterns, which convey a given intensity in a block. The techniques include classic ordered dither algorithms (Ulichney, 1987), algorithms combined with error diffusion (Knuth, 1987), variations of rotated dispersed dither (Ostromoukhov et al., 1994; Ostromoukhov and Hersch, 1995b), and aperiodic patterns of clustered dots (Velho and Gomes, 1991). Whether image-independent or image-dependent, these dithering methods share the same problem: unsatisfactory uniform patterns. Considering only tone while ignoring structure preservation makes the results unattractive. Subsequently, many researchers have worked on pattern generation to address these shortcomings.

Buchanan (Buchanan, 1996) introduced controlled artifacts with limited success. Ulichney (Ulichney, 1998) proposed generating dither patterns by recursive tessellation but did not describe how to apply them to an image in a unified way. Some procedural screening methods (Ostromoukhov and Hersch, 1995a) freely generated artistic screening elements with limited shapes. Smooth tone transition was a significant problem, which they avoided in later work by adding more tones (Ostromoukhov and Hersch, 1999; Ostromoukhov, 2000). A more powerful way to obtain different patterns is to use image-based dither screens (Verevka and Buchanan, 1999; Veryovka and Buchanan, 2000; Yano and Yamaguchi, 2005). However, those improvements still focus on tone matching.

In order to maintain image content, either sharp-

ening (Velho and Gomes, 1991; Buchanan, 1996)

or user-defined segmentation (Streit and Buchanan,

1998) can be employed. Recently, structure-aware

screening (Qu et al., 2008) designed for manga ef-

fects proposed color to pattern ideas to connect the

image content with patterns. This approach pro-

duces continuous-tone output, however, whereas we

are concerned with binary output. Our goal is to pro-

pose an automatic method with flexibility to show dif-

ferent patterns and to naturally represent image con-

tent in black and white.

3 OUR METHOD

Contrast-aware halftoning (CAH) (Li and Mould,

2010) is a type of error diffusion method (Floyd and

Steinberg, 1976) with two variations. First, it dis-

tributes error in a contrast-aware way. Second, it

processes pixels in a priority-based order. Because

the strategy respects the pixel’s initial predisposition

towards dark or light when distributing error, these

two modifications guarantee good structure preserva-

tion. In order to adapt it to screening effects, we

extend CAH in two ways: new weight distributions

(exclusion-based masks) and new priority configura-

tion (a multi-stage process).
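For orientation, the following is a minimal sketch of the classic raster-order error diffusion of Floyd and Steinberg (1976) that CAH modifies. It is not CAH itself: it uses the fixed 7/16, 3/16, 5/16, 1/16 weights and a left-to-right scan, whereas CAH replaces these with contrast-aware weights and a priority-based order. The function name `floyd_steinberg` and the threshold of 128 are assumptions made for illustration.

```python
import numpy as np


def floyd_steinberg(image):
    """Binarize a greyscale image (values 0..255) with classic error diffusion.

    Baseline only: fixed weights and raster order, not the contrast-aware
    weights or priority-based order used by CAH.
    """
    work = np.asarray(image, dtype=np.float64).copy()
    height, width = work.shape
    out = np.zeros((height, width), dtype=np.uint8)
    for y in range(height):
        for x in range(width):
            old = work[y, x]
            new = 255.0 if old >= 128.0 else 0.0  # assumed mid-grey threshold
            out[y, x] = int(new)
            err = old - new
            # Push the quantization error onto unprocessed neighbours.
            if x + 1 < width:
                work[y, x + 1] += err * 7 / 16
            if y + 1 < height:
                if x > 0:
                    work[y + 1, x - 1] += err * 3 / 16
                work[y + 1, x] += err * 5 / 16
                if x + 1 < width:
                    work[y + 1, x + 1] += err * 1 / 16
    return out
```

Because the error of each pixel is redistributed rather than discarded, the average output tone stays close to the average input tone; only error pushed past the image border is lost.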

3.1 Overview

Given an original image, our system first segments it

into regions and calculates a gradient map. Then, the

system assigns different screens for different regions.

This is done by calculating how much sensitive con-

tent occupies each region. Different classifications are

handled with different strategies to promote content.

In the end, the system produces specific screening ef-

fects through contrast-aware halftoning with our new

variations.

Segmentation is done using mean shift (Comani-

ciu and Meer, 2002). Oversegmentation will not have

visible disadvantages for our final screening since our

consideration of structure removes the artifacts based

on the content of the original image. The segmenta-

tion is to guide the separation of content when making

pattern assignments. The content-sensitive approach

to assignment helps understand the image and further

emphasizes structural details. As for pattern creation,

either exclusion-based masks or the multi-stage pro-

cess can provide ways for creating patterns. We put

more effort into the former type since it is easy to control. In addition, users are still able to control interesting edges and the quality of tones by simply adjusting a few parameters.

3.2 Pattern Assignment

After we have the segmentation map and gradient map, we calculate how much of each region $R_i$ is occupied by sensitive content. A statistical measurement H based on thresholding gradient magnitudes is computed as follows.

$$H_h = \frac{\mathrm{number}(g(m,n) > T_h)}{N_{\in R_i}} \tag{1}$$

$$H_l = \frac{\mathrm{number}(g(m,n) > T_l)}{N_{\in R_i}} \tag{2}$$

The gradient is written as $g(m,n)$ for the pixel at position $(m,n)$ with intensity value $I(m,n)$. In Equation 1, $H_h$ is the proportion of pixels in the region with high gradient magnitudes, while in Equation 2, $H_l$ is the proportion of pixels whose gradient magnitude exceeds the lower threshold, where $T_h$ and $T_l$ are thresholds satisfying $T_h > T_l$. The function number(.) counts the pixels that satisfy the given predicate. $N_{\in R_i}$ is the number of pixels in the region $R_i$. The examples shown in this paper usually use $T_h = 100$ and $T_l = 35$. The type of assignment is classified according to Equation 3.

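$$C_i = \begin{cases} 0: \text{Empty (white)} & \text{if } H_l < r_l \text{ and } I(m,n) > W \\ 1: \text{Low (yellow)} & \text{if } H_l < r_l \text{ and } I(m,n) \le W \\ 2: \text{Medium (green)} & \text{if } H_l \ge r_l \text{ and } H_h > r_h \\ 3: \text{High (blue)} & \text{otherwise} \end{cases} \tag{3}$$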

In the above, $r_l$ and $r_h$ are thresholds for the ratio of sensitive content and $W$ is a threshold for very light areas. We use $r_l = 0.25$, $r_h = 0.6$, and $W = 200$ in this paper. $C_i$ allows us to classify each region. We employ different assignment strategies for different values of $C_i$. In this way, we stylize the image content.

1. If $C_i$ is 0, the area (shown in white) might be background or a trivial area. To satisfy the tone requirement, halftoning this kind of area would use very few black pixels to represent the very light tone, which can be distracting. We leave these unimportant regions empty or fill them with an arbitrary pattern.

2. If $C_i$ is 1, the region (shown in yellow) is uniform with medium intensity values. We can assign patterns randomly. Since the contrast-aware strategy and the priority-based error diffusion preserve structure and tone, random assignment still yields good quality.

3. If $C_i$ is 2, the region (shown in green) has a medium degree of content. We either match the pattern direction with the main direction of the region or use the edge-exclusion approach to match patterns with content.

4. The remaining regions (shown in blue) have $C_i$ equal to 3. These regions are highly textured and it is better to preserve all the information. In this case, we use basic contrast-aware halftoning without our variations, or combine it with edge-exclusion masks as described later.
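The measurements of Equations 1 and 2 and the classification of Equation 3 can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the gradient map is taken as a precomputed array (the text does not specify the gradient operator), the per-region intensity test uses the mean intensity of the region as a stand-in for $I(m,n)$, and the names `region_statistics` and `classify_region` are hypothetical. The branch conditions follow Equation 3 as printed.

```python
import numpy as np


def region_statistics(gradient, labels, region_id, t_high=100.0, t_low=35.0):
    """Return (H_h, H_l) for one region, following Equations 1 and 2.

    gradient: 2D array of gradient magnitudes g(m, n) (operator unspecified).
    labels:   2D integer array of the same shape from the segmentation.
    """
    values = np.asarray(gradient)[np.asarray(labels) == region_id]
    count = values.size
    if count == 0:
        raise ValueError("region contains no pixels")
    h_high = np.count_nonzero(values > t_high) / count
    h_low = np.count_nonzero(values > t_low) / count
    return h_high, h_low


def classify_region(h_high, h_low, mean_intensity,
                    r_low=0.25, r_high=0.6, white=200.0):
    """Return the class C_i (0..3) using the conditions printed in Equation 3.

    mean_intensity is an assumed region-level substitute for I(m, n).
    """
    if h_low < r_low:
        return 0 if mean_intensity > white else 1  # Empty or Low
    if h_high > r_high:
        return 2  # Medium
    return 3  # High


# Usage: a toy 2x2 region with one segmentation label.
g = np.array([[0.0, 50.0], [120.0, 200.0]])
seg = np.zeros((2, 2), dtype=int)
h_h, h_l = region_statistics(g, seg, 0)
c = classify_region(h_h, h_l, mean_intensity=120.0)
```

The default thresholds are the values reported in the text; whether they suit a given image depends on the scale of the chosen gradient operator.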

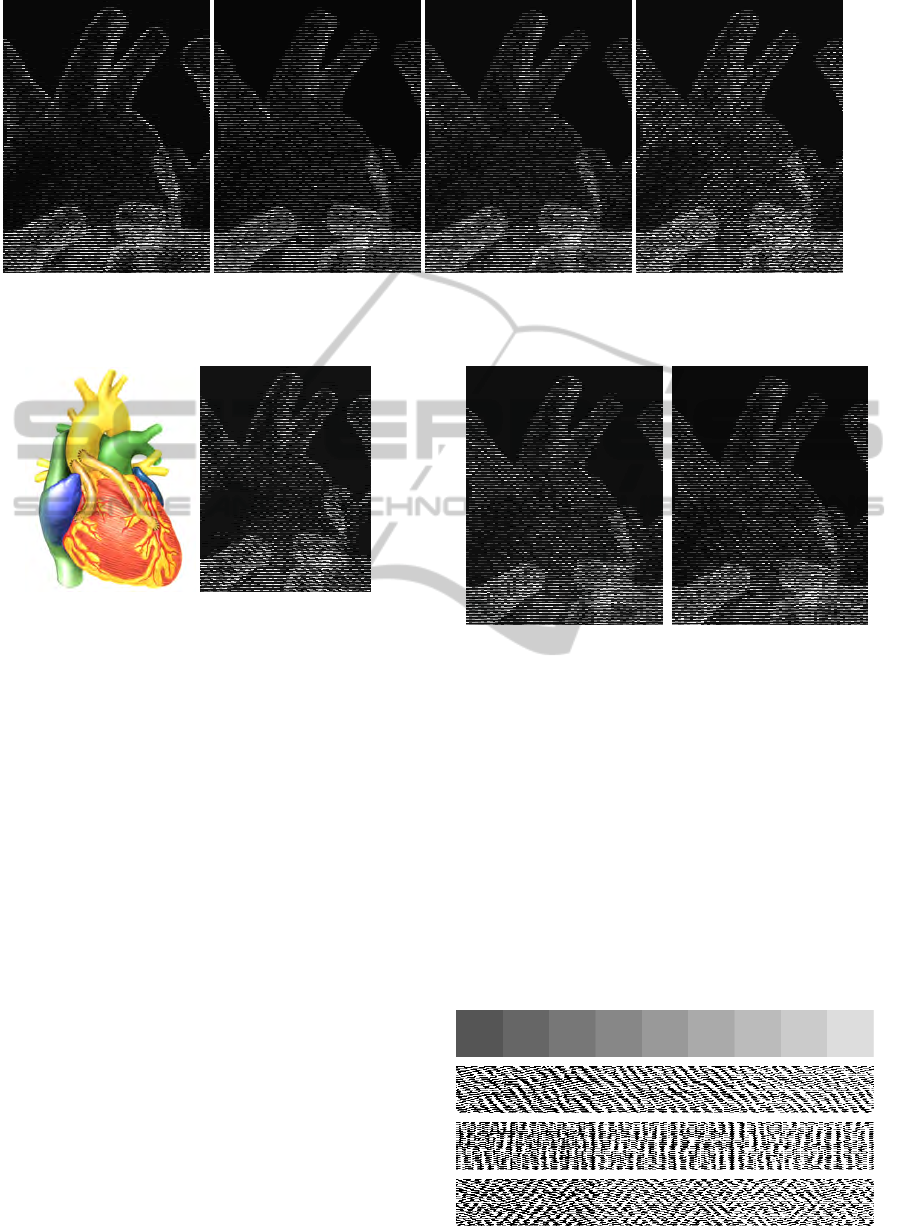

Figure 2 shows the segmentation and corresponding

content-based classification according to this strategy.

In Figure 2 (c), the blue regions show highly textured

hair. Less textured regions are displayed in green.

The yellow regions show little change in content, so we consider them uniform regions.

(a) (b) (c)

Figure 2: Content-based assignment. (a) Original image;

(b) Segmentation; (c) Our assignment.

3.3 Pattern Generation

We propose two different ways to generate patterns.

Type I controls the order of error diffusion in an or-

ganized way. Type II uses a multi-stage process to

preserve edges from input images.

Figure 3: Type I: Exclusion-based masks, each annotated with a rotation angle θ and a width w(m,n). (a) Exclude one direction; (b) Exclude two directions.

3.3.1 Type I: Exclusion-based Masks

After each step of priority-based error diffusion, the

pixels under the mask lower their priorities to main-

tain a good spatial distribution. Instead of processing

all pixels in the mask, we exclude specific subsets of

pixels. The excluded pixels will have unchanged pri-

orities, thus promoting their chances to be chosen as

the next pixels. In this way, we promote the formation

of interesting patterns.

GRAPP 2011 - International Conference on Computer Graphics Theory and Applications


(a) (b) (c) (d)

Figure 5: Comparisons. (a) Manga screening (greyscale); (b) $C_i = 2$ with edge-exclusion masks; (c) $C_i = 2$ with main direction; (d) Thicker version of (c).

(a) Original image (b) Dithering

Figure 4: A heart.

Figure 3 shows two designs for creating this kind of pattern. The first, shown in (a), covers the pixels within a mask that lie along a direction with angle θ and width w(m,n). This design can represent horizontal, vertical, or other directional patterns. For example, suppose that horizontal exclusion is chosen. After each error diffusion step, the pixels under the horizontal lines are not affected and thus keep their original priorities. In this way, the horizontal lines create a pattern. We also propose an edge-based exclusion mask, which excludes the pixels along the direction of the edge tangent at the center pixel. This edge-oriented weight distribution enhances structures; we use it for regions with $C_i = 3$ to preserve highly textured regions, and sometimes for regions with $C_i = 2$. Another option for regions with $C_i = 2$ is to use the main direction of the region to guide the rotation angle θ.

Similarly, in Figure 3 (b), the pixels under a circular mask are covered with strips in two directions, again controlled by the parameters θ and w(m,n). This can provide crossed patterns.
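The two designs can be sketched as a boolean mask that marks which pixels around the center are excluded from priority lowering. This is an illustrative sketch under assumptions that the text does not confirm: the mask is circular with a hypothetical `radius`, each strip is a single band through the center pixel, and the second direction of the crossed design is taken to be perpendicular to the first. The function name `exclusion_mask` is also hypothetical.

```python
import numpy as np


def exclusion_mask(radius, theta, width, crossed=False):
    """Return a (2*radius+1)^2 boolean array; True marks excluded pixels.

    theta is the strip direction in radians, width the strip thickness in
    pixels. With crossed=True a second, perpendicular strip is added
    (an assumed reading of the two-direction design).
    """
    ys, xs = np.mgrid[-radius:radius + 1, -radius:radius + 1]
    inside = xs ** 2 + ys ** 2 <= radius ** 2
    # Perpendicular distance to the line through the center along theta.
    dist = np.abs(-np.sin(theta) * xs + np.cos(theta) * ys)
    excluded = dist <= width / 2.0
    if crossed:
        # Distance to the perpendicular line through the center.
        dist_perp = np.abs(np.cos(theta) * xs + np.sin(theta) * ys)
        excluded |= dist_perp <= width / 2.0
    return excluded & inside


# Usage: a horizontal strip, then a horizontal plus vertical cross.
one_direction = exclusion_mask(radius=3, theta=0.0, width=1.0)
two_directions = exclusion_mask(radius=3, theta=0.0, width=1.0, crossed=True)
```

In use, pixels under the circular mask that are not marked True would have their priorities lowered after each diffusion step, while the marked pixels keep their priorities and are therefore more likely to be chosen next.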

Figure 5 shows the method applied to a heart im-

age. Compared with dithering shown in Figure 4 (b),

our results are non-uniform and look much better.

Also compared with manga screening, even though

we are using only black and white, not greyscale, even

Figure 6: Thickness control. Left: 4 patterns; Right: 6 pat-

terns.

the small veins are very clear. We can also control the

thickness of the exclusion width. We calculate thick-

ness w(m,n) as follows;

w(m,n) = MAX × (1 − I(m,n)/255) (4)

where MAX is the maximum thickness: we use 6

here. A different heart example appears in Figure 6,

showing thickness control for different patterns. Both

enhance the pattern effects without losing structure

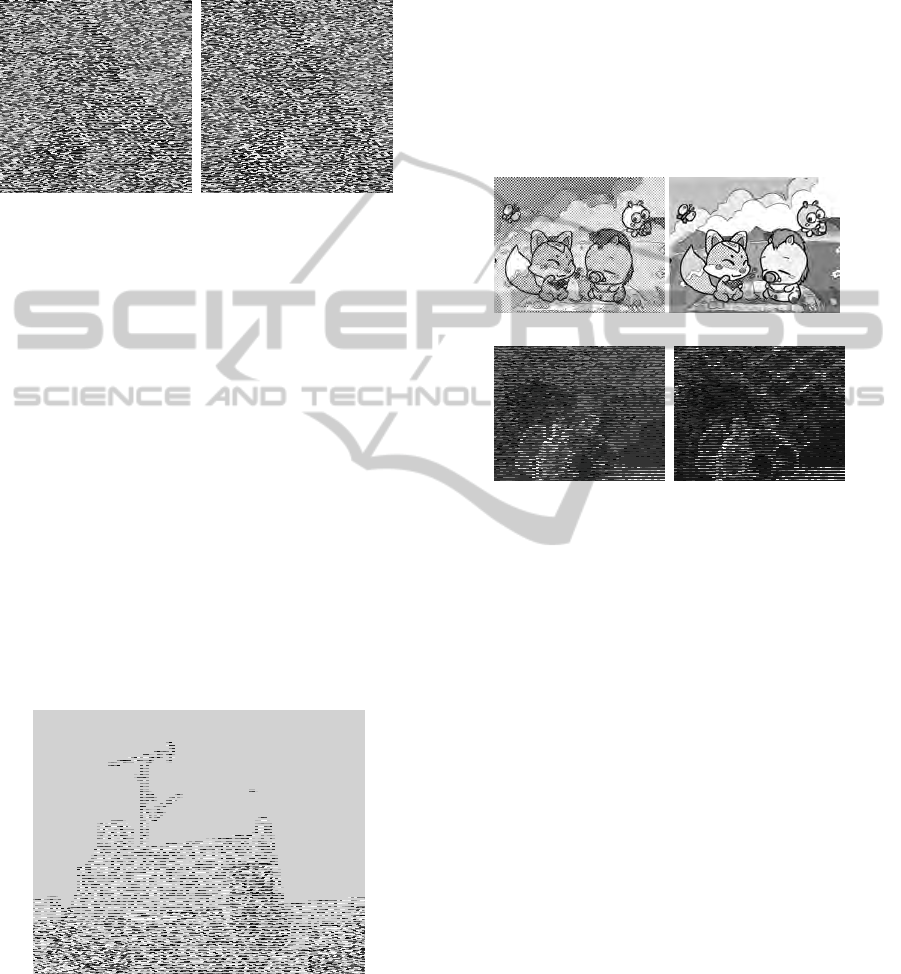

details. For tone we show a ramp in Figure 7 for some

patterns. Visually, they are reasonably continuous and

able to convey tone differences.
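As a quick numerical check of Equation 4, the thickness can be evaluated directly. The function name `exclusion_width` is hypothetical; the default of 6 is the MAX value reported in the text.

```python
import numpy as np


def exclusion_width(intensity, max_width=6.0):
    """Equation 4: darker pixels (low intensity) get thicker exclusion strips."""
    return max_width * (1.0 - np.asarray(intensity, dtype=np.float64) / 255.0)


# Usage: black, mid-grey and white pixels.
widths = exclusion_width([0.0, 127.5, 255.0])
```

Black pixels receive the full thickness of 6, mid-grey pixels half of it, and white pixels a thickness of zero, so the strips thin out as the tone lightens.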

Figure 7: Ramp with different patterns.


Edge-exclusion Masks and Main Directions: Gen-

erally, the results from edge exclusion are more ap-

pealing than the method using only the main direc-

tion.

(a) Main direction (b) Edge exclusion

Figure 8: Content-sensitive assignment.

Figure 5 and Figure 8 show the difference between edge-exclusion masks and main directions. Visually, both preserve structure details quite well.

3.3.2 Type II: A Multi-stage Process

The priority-based scheme provides good flexibility for promoting edges: we can vary the priority configuration guided by edges. Edge pixels are processed first, which increases the chance of those pixels being chosen and lowers the influence of the remaining pixels. If there are different types of edges to be promoted, the process is separated into several stages. Through this process, patterns from input textures can be easily adapted. Since existing textures cover a very wide range, the content-sensitive strategy cannot play a large role here. Figure 9 and Figure 10 show some variations based on different assignments and different texture inputs. They give diverse effects while respecting both tone and structure.
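The staging idea can be sketched as an ordering problem: pixels belonging to the first group of edges are visited first, then the second group, and finally all remaining pixels. The sketch below is a simplification under stated assumptions: priorities are a static array supplied by the caller, whereas in the actual priority-based scheme they change as error is diffused, and the name `staged_order` and the way edge groups are passed as boolean maps are hypothetical.

```python
import numpy as np


def staged_order(priority, edge_stages):
    """Return pixel coordinates in processing order.

    priority:    2D array, larger values are processed earlier in a stage.
    edge_stages: list of 2D boolean arrays, one per group of edges, in the
                 order in which the groups should be promoted.
    """
    priority = np.asarray(priority, dtype=np.float64)
    # Pixels in no edge group fall into a final stage after all edge stages.
    stage_index = np.full(priority.shape, len(edge_stages), dtype=np.int64)
    # Iterate in reverse so the earliest stage wins for overlapping edges.
    for k in range(len(edge_stages) - 1, -1, -1):
        stage_index[np.asarray(edge_stages[k], dtype=bool)] = k
    # np.lexsort uses the last key as primary: stage, then descending priority.
    flat = np.lexsort((-priority.ravel(), stage_index.ravel()))
    return [tuple(int(v) for v in np.unravel_index(i, priority.shape))
            for i in flat]


# Usage: two edge groups on a 2x2 image.
prio = np.array([[0.9, 0.1], [0.5, 0.7]])
stage0 = np.array([[False, True], [False, False]])
stage1 = np.array([[False, False], [True, False]])
order = staged_order(prio, [stage0, stage1])
```

Here the low-priority pixel at (0, 1) is still visited first because it belongs to the first edge group, which is the promotion effect the multi-stage process relies on.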

Figure 9: Type II patterns for building.

4 RESULTS AND DISCUSSION

Our method is automatic. Previous methods such as manga screening (Qu et al., 2008) manually regroup small regions to avoid fragmented output. Since small regions do not degrade our quality, we can skip this step and hence save time. Our assignment is not treated as a colorization problem. We let the intrinsic tone and content guide the patterns, which saves further time. On an Intel Core Duo E8400 CPU at 3.0 GHz with 3 GB RAM, processing an 800 × 1000 image takes 110 seconds including the segmentation time (72 seconds), which is faster than the four minutes needed by manga screening in the same situation. Excluding segmentation time, our process takes only a few seconds to tens of seconds.

Figure 10: More comparisons. (a) Dithering; (b) Manga screening; (c) Our Type I (thick); (d) Our Type II.

A comparison is shown in Figure 12. Our results are clearly better than dithering. Manga screening places the segmentation boundaries on top of patterns to distinguish objects. This produces artificial edges between the cloth and the desktop and distracting boundaries between objects on the desk, which look unnatural. Our results avoid segmentation artifacts. Our structure details are preserved by the contrast-aware masks and the priority-based scheme, reinforced by the content-sensitive assignment. Further, the edge-exclusion mechanism and the main direction used for medium-sensitivity content give another boost to structural content. Another comparison is shown in Figure 10.

Figure 11 shows that our scheme is also open to extension to color. It uses different textures as input patterns. It gives interesting and distinct effects, though these are not yet fully explored.

5 CONCLUSIONS

In this paper, we demonstrate the possibility to show

different screening patterns in black and white with

good structure details. Our ideas for exclusion-based

masks and the multi-stage process can be adapted


Figure 11: Extension to colored screening.

Figure 12: More comparisons. (a) Original image; (b) Dithering; (c) Manga screening; (d) Our Type I (thick).

to a lot of applications in image processing or non-

photorealistic rendering. As for future work, we

should refine the content-based assignment for pat-

terns and improve the multi-stage process. Color

halftoning with color harmonization for screening

will be a very interesting direction too.

ACKNOWLEDGEMENTS

We thank Yingge Qu for permission to use their im-

ages. This work was supported by NSERC REPIN

299070-04.

REFERENCES

Buchanan, J. W. (1996). Special effects with half-toning.

In Computer Graphics Forum (Eurographics), vol-

ume 15, pages 97–108.

Chang, J., Alain, B., and Ostromoukhov, V. (2009).

Structure-aware error diffusion. ACM Transactions on

Graphics, 28(4). Proceedings of SIGGRAPH-ASIA

2009.

Comaniciu, D. and Meer, P. (2002). Mean shift: A ro-

bust approach toward feature space analysis. In IEEE

Transactions on Pattern Analysis and Machine Intel-

ligence, volume 24, pages 603–619.

Floyd, R. W. and Steinberg, L. (1976). An Adaptive Algo-

rithm for Spatial Greyscale. Proceedings of the Soci-

ety for Information Display, 17(2):75–77.

Knuth, D. E. (1987). Digital halftones by dot diffusion.

ACM Trans. Graph., 6(4):245–273.

Li, H. and Mould, D. (2010). Contrast-aware halfton-

ing. Computer Graphics Forum (Eurographics),

29(2):273–280.

Ostromoukhov, V. (2000). Artistic halftoning - between

technology and art. SPIE, 3963:489–509.

Ostromoukhov, V. and Hersch, R. D. (1995a). Artistic

screening. In Proceedings of the 22nd annual con-

ference on Computer graphics and interactive tech-

niques, SIGGRAPH ’95, pages 219–228.

Ostromoukhov, V. and Hersch, R. D. (1995b). Halfton-

ing by rotating non-bayer dispersed dither arrays.

SPIE - International Society for Optical Engineering,

2411:180–197.

Ostromoukhov, V. and Hersch, R. D. (1999). Multi-color

and artistic dithering. In Proceedings of the 26th an-

nual conference on Computer graphics and interac-

tive techniques, SIGGRAPH ’99, pages 425–432.

Ostromoukhov, V., Hersch, R. D., and Amidror, I. (1994).

Rotated dispersed dither: a new technique for digital

halftoning. In Proceedings of the 21st annual con-

ference on Computer graphics and interactive tech-

niques, SIGGRAPH ’94, pages 123–130.


Pang, W.-M., Qu, Y., Wong, T.-T., Cohen-Or, D., and

Heng, P.-A. (2008). Structure-aware halftoning. ACM

Transactions on Graphics (SIGGRAPH 2008 issue),

27(3):89:1–89:8.

Qu, Y., Pang, W.-M., Wong, T.-T., and Heng, P.-A. (2008).

Richness-preserving manga screening. ACM Trans-

actions on Graphics (SIGGRAPH Asia 2008 issue),

27(5):155:1–155:8.

Rosin, P. L. and Lai, Y.-K. (2010). Towards artistic minimal

rendering. In ACM Symposium on Non Photorealistic

Rendering, pages 119–127.

Streit, L. and Buchanan, J. (1998). Importance driven

halftoning. In Computer Graphics Forum (Euro-

graphics), pages 207–217.

Ulichney, R. (1987). Digital Halftoning. MIT Press.

Ulichney, R. (1998). One-dimensional dithering. Technical

report, Int. Symposium on Electronic Image Capture

and Publishing (EICP’98).

Velho, L. and Gomes, J. (1991). Digital halftoning with

space filling curves. SIGGRAPH Comput. Graph.,

25:81–90.

Verevka, O. and Buchanan, J. W. (1999). Halftoning with

image-based dither screens. In Proceedings of the

1999 conference on Graphics interface 99, pages 167–

174.

Veryovka, O. and Buchanan, J. (2000). Texture-based dither

matrices. Computer Graphics Forum, 19(1):51–64.

Yano, R. and Yamaguchi, Y. (2005). Texture screening

method for fast pencil rendering. Journal for Geome-

try and Graphics, 9(2):191–200.
