A NEW OBJECT RECOGNITION SYSTEM

Nikolai Sergeev and Guenther Palm

Institute of Neural Information Processing, Ulm University, Ulm, Germany

Keywords:

Object recognition system, Invariant object representation.

Abstract:

This paper presents a new 2D object recognition system. The object representation used by the system is invariant to rotation, translation, scaling and reflection. The system is highly robust to partial occlusion, deformation and perspective change; the latter makes it applicable to 3D tasks. Color information can either be ignored or be combined with the form representation. The boundary of an object to be recognized does not need to be path-connected. The time needed to learn a new object does not depend on the number of objects already learned. No object segmentation prior to recognition is needed. The system was evaluated on the 3D object library COIL-100.

1 INTRODUCTION

1.1 System Architecture

An object recognition system usually consists of three parts. Part one extracts image primitives, e.g. edges (Canny, 1986), lines (Hough, 1962), orientation histograms (Dalal and Triggs, 2005) or moments (Hu, 1962), (Reiss, 1993). Part two constructs feature vectors. Finally, part three is responsible for learning and retrieval of the information, e.g. by support vector machines (Vapnik, 1998), artificial neural networks (Rosenblatt, 1962; Bishop, 2007) or regression estimators (Gyofri et al., 2002). The system introduced in this paper also has this architecture. Its image primitives are half ellipses. One feature vector encodes a combination of half ellipses. To learn and compare the feature vectors, a new storage as well as a new retrieval algorithm were developed.

1.2 Motivation

The affine invariant object representation methods normally used are either unsuitable for objects that are not path-connected, as Fourier descriptors are (Arbter et al., 1990), or need segmentation prior to recognition, as moments do (Reiss, 1993). One common problem of these approaches is discrimination. Invariant features deliver no unique description of an object, so two objects with similar features may have nothing in common for an observer. The representation introduced in this paper overcomes these problems. However, it is not suitable for standard machine learning algorithms such as support vector machines or neural networks. An image to analyze does not produce just a single representation vector but, e.g., more than $50^{10}$ feature vectors. This makes standard retrieval algorithms unusable. For that reason a new type of storage together with a new search algorithm was developed.

In sum, all three characteristic components of this object recognition system (features, representation, machine learning algorithm) are new.

2 OUTLINE OF IMPLEMENTATION

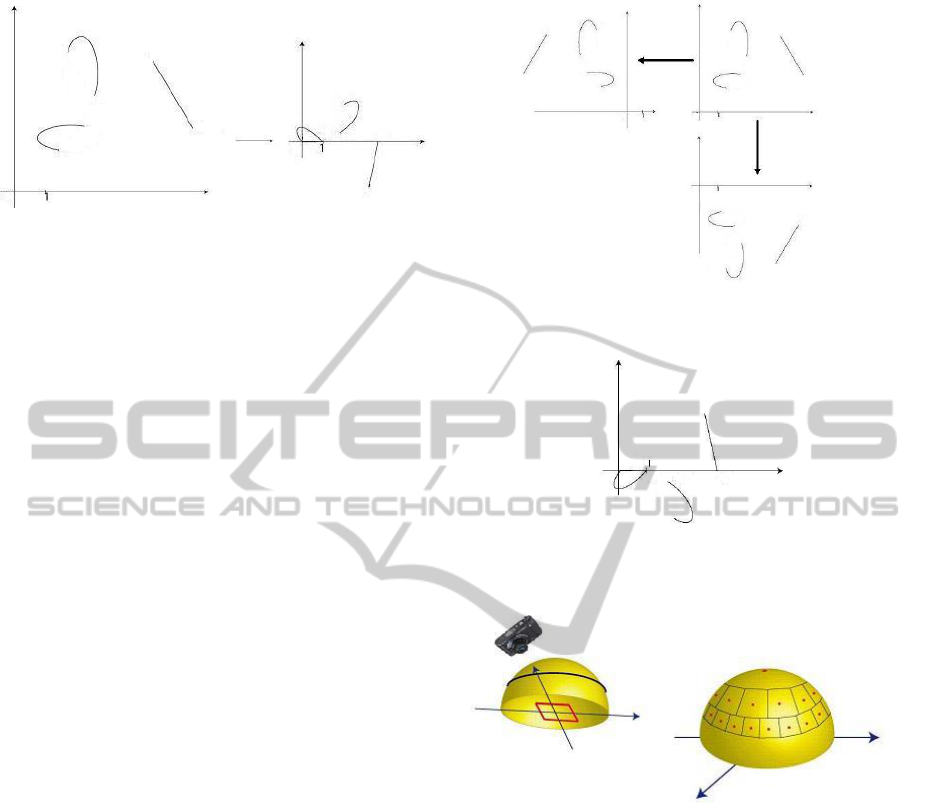

An object is represented as a set $A$ of half ellipse combinations as shown in Figure 1. Combinations do not need to be of equal length.

For each $a \in A$ the system looks for a corresponding half ellipse combination $b$ in the image to analyze. $b$ should be as long as possible.

More precisely: from the image to analyze the system extracts a set of half ellipses $B$ as shown in Figure 2. For each combination $(a_i)_{i \in \{1,\dots,n\}} \in A$ a maximal $m \in \{1,\dots,n\}$ has to be determined for which a subsequence $\pi \in \{1,\dots,n\}^{\{1,\dots,m\}}$ with $\pi(1) = 1$ and $(b_i)_{i \in \{1,\dots,m\}} \in B^m$ exist so that $(a_{\pi(i)})_{i \in \{1,\dots,m\}}$ can be approximately transformed into $(b_i)_{i \in \{1,\dots,m\}}$ through translation, rotation, scaling, reflection and perspective change as shown in Figure 3. All in all, the total number of combination pairs to be compared is

$$\sum_{(a_1,\dots,a_n) \in A} \; \sum_{m=1}^{n} \binom{n-1}{m-1} |B|^{m}. \tag{1}$$

Figure 1: An object to learn and its representation.

Figure 2: An object to analyze with a set of extracted half ellipses.

Figure 3: A way to transform one combination into another.

Sergeev N. and Palm G. A NEW OBJECT RECOGNITION SYSTEM. DOI: 10.5220/0003307103950400. In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP-2011), pages 395-400. ISBN: 978-989-8425-47-8. Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

With $A$ containing only one combination of length $n = 10$ and $B$ consisting of 50 half ellipses, the number of pairs is at least $50^{10}$.
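The count in (1) can be checked numerically. The following is a minimal sketch; the function name `pair_count` and its arguments are illustrative only and do not come from the system itself. It evaluates the double sum for a list of combination lengths and a given number of extracted half ellipses.

```python
from math import comb

def pair_count(lengths, n_half_ellipses):
    """Evaluate sum (1): one term per stored combination length n and per m."""
    return sum(
        comb(n - 1, m - 1) * n_half_ellipses ** m   # subsequences with pi(1)=1 times |B|^m
        for n in lengths
        for m in range(1, n + 1)
    )

# One stored combination of length 10, 50 half ellipses in the image.
total = pair_count([10], 50)
```

For a single combination the binomial theorem collapses the inner sum to $|B|(1+|B|)^{n-1}$, so `total` equals `50 * 51**9`, which is indeed larger than $50^{10}$.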

As the system makes the check for each subsequence $(a_{\pi(i)})_{i \in \{1,\dots,m\}}$, it is robust to partial occlusion.

Without any further extension this representation

is color invariant.

3 INVARIANT REPRESENTATION OF A COMBINATION OF HALF ELLIPSES

3.1 Overview

In this section a number of functions $F^i$ are introduced. They are needed to obtain an invariant representation of a half ellipse combination. To understand their geometrical meaning it is not necessary to read their mathematical description; the corresponding figures are enough. The most important one is Figure 7. It shows the rotation, translation and scaling invariant representation of a combination of half ellipses.

3.2 Half Ellipses

For $C = \mathbb{R}^2$ and $P(C)$ standing for the power set of $C$, a half ellipse is defined as a pair $(e, B) \in C^2 \times P(C)$ with $e_1 \neq e_2$ for which $(a, b, t_0, \delta) \in [0, \infty) \times [0, \infty) \times \mathbb{R} \times \{-1, 1\}$ as well as $(c, \beta) \in C \times \mathbb{R}$ exist so that

$$B = \left\{ T_c R_\beta \begin{pmatrix} a \cos t \\ b \sin t \end{pmatrix} \;\middle|\; t \in [t_0, t_0 + \delta\pi] \cup [t_0 + \delta\pi, t_0] \right\} \tag{2}$$

and

$$e_1 = T_c R_\beta \begin{pmatrix} a \cos t_0 \\ b \sin t_0 \end{pmatrix}, \tag{3}$$

$$e_2 = T_c R_\beta \begin{pmatrix} a \cos(t_0 + \pi) \\ b \sin(t_0 + \pi) \end{pmatrix}. \tag{4}$$

$T_c$ and $R_\beta$ stand for translation and rotation respectively; scaling is carried by the parameters $a$ and $b$. The set of half ellipses will be denoted by $HE$. In other words, a half ellipse consists of endpoints $e_1, e_2 \in C$ and of a set of bow points $B \in P(C)$. The endpoints of a half ellipse play a very important role for the invariant representation. There are mainly two reasons to use half ellipses. First, an affine transformation $A \neq 0$ always maps a half ellipse onto another half ellipse. The second reason is the variety of half ellipses, as Figure 4 shows.

Figure 4: Examples of half ellipses.
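To make the definition in (2)-(4) concrete, the following sketch samples a half ellipse from its parameters. It assumes that points of $C$ are stored as Python complex numbers and that $T_c R_\beta$ means "rotate by $\beta$, then translate by $c$"; the function name `half_ellipse` and the `samples` parameter are hypothetical.

```python
import cmath
import math

def half_ellipse(a, b, t0, delta, c, beta, samples=181):
    """Sample a half ellipse (e, B): endpoints from (3), (4) and bow points from (2)."""
    def point(t):
        # R_beta as multiplication by exp(i*beta), T_c as addition of c (assumption).
        return c + cmath.exp(1j * beta) * complex(a * math.cos(t), b * math.sin(t))

    # t runs from t0 to t0 + delta*pi, i.e. forwards or backwards over half a turn.
    ts = [t0 + delta * math.pi * k / (samples - 1) for k in range(samples)]
    bow = [point(t) for t in ts]
    e1, e2 = point(t0), point(t0 + math.pi)
    return (e1, e2), bow

(e1, e2), bow = half_ellipse(a=3.0, b=1.0, t0=0.4, delta=-1, c=complex(2, 5), beta=0.7)
```

In this sketch the bow starts at `e1` and ends at `e2` for both values of `delta`, and the midpoint of the endpoints coincides with the centre `c`.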

VISAPP 2011 - International Conference on Computer Vision Theory and Applications


3.3 Rotation, Translation and Scaling Invariant Representation of a Half Ellipse

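Now a unique rotation, translation and scaling invariant representation of a half ellipse will be introduced. First, two preliminary definitions are needed. For $x, y \in C$ with $x \neq y$ the function $F^1_{x,y}$ is defined as

$$F^1_{x,y} : \begin{cases} C \to C \\ z \mapsto \dfrac{z - x}{y - x} \end{cases} \tag{5}$$

Here the points of $C$ are treated as complex numbers, so the quotient is a complex division. Figure 5 shows the geometric meaning of the transformation. $F^1_{x,y}$ is an affine transformation with $F^1_{x,y}(x) = 0$ and $F^1_{x,y}(y) = 1$.

Figure 5: Geometric meaning of $F^1_{x,y}(z)$.

The second function $F^2 : HE \to C$ is defined as

$$F^2(e, B) = \begin{pmatrix} \max_{x \in B} F^1_{\frac{e_1 + e_2}{2},\, e_1}(x)_1 \\[4pt] \max_{x \in B} F^1_{\frac{e_1 + e_2}{2},\, e_1}(x)_2 \end{pmatrix}, \tag{6}$$

where the indices 1 and 2 denote the first and the second coordinate.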

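Finally the invariant representation $F^3 : HE \to C$ is defined as follows. For $x, y \in B$, which always exist, with

$$F^2(e, B) = \begin{pmatrix} F^1_{\frac{e_1 + e_2}{2},\, e_1}(x)_1 \\[4pt] F^1_{\frac{e_1 + e_2}{2},\, e_1}(y)_2 \end{pmatrix} \tag{7}$$

the representation is

$$F^3(e, B) = \begin{pmatrix} z - \mathrm{SIGNUM}(z) \\[4pt] F^1_{\frac{e_1 + e_2}{2},\, e_1}(y)_2 \end{pmatrix} \tag{8}$$

with

$$z = F^1_{\frac{e_1 + e_2}{2},\, e_1}(x)_1. \tag{9}$$

For $(e, B)$ in Figure 6

$$F^3(e, B) = \begin{pmatrix} M_1 - 1 \\ M_2 \end{pmatrix}. \tag{10}$$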

Figure 6: Representation of a half ellipse.

It can be shown that for each $x \in C$ a half ellipse $(e, B) \in HE$ exists with $F^3(e, B) = x$. Additionally, for two half ellipses $(e, B), (\tilde{e}, \tilde{B}) \in HE$ with $F^3(e, B) = F^3(\tilde{e}, \tilde{B})$ it can be shown that they can be transformed into each other through translation, rotation and scaling. Conversely, $F^3(e, B)$ is invariant to translation, rotation and scaling of $(e, B)$.
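The invariance claim can be tried out on sampled bow points. The sketch below is not the authors' implementation: it assumes points are Python complex numbers, reads the two coordinates in (6)-(9) as real and imaginary part, and takes the maxima in (6) literally. The names `f1`, `f3`, `alpha` and `beta` are illustrative.

```python
import math

def f1(x: complex, y: complex, z: complex) -> complex:
    """F^1_{x,y}(z) from (5): maps x to 0 and y to 1."""
    return (z - x) / (y - x)

def f3(e1: complex, e2: complex, bow: list) -> complex:
    """F^3 from (7)-(9) for a sampled bow (assumption: plain maxima as in (6))."""
    mid = (e1 + e2) / 2
    w = [f1(mid, e1, p) for p in bow]        # bow points in the normalized frame
    z = max(p.real for p in w)               # first component of F^2
    m2 = max(p.imag for p in w)              # second component of F^2
    signum = (z > 0) - (z < 0)
    return complex(z - signum, m2)

# Upper half of the unit circle, sampled every degree.
half_circle = [complex(math.cos(math.radians(k)), math.sin(math.radians(k)))
               for k in range(181)]
code = f3(half_circle[0], half_circle[-1], half_circle)

# The same half circle after rotation and scaling (alpha) and translation (beta).
alpha, beta = complex(2.0, 1.5), complex(-3.0, 4.0)
moved = [alpha * p + beta for p in half_circle]
moved_code = f3(moved[0], moved[-1], moved)
```

For the half circle both extremes are 1, so `code` is approximately $(0, 1)$, which agrees with the form $(M_1 - 1, M_2)$ in (10), and `moved_code` agrees with `code` up to rounding.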

3.4 Extraction of Half Ellipses

In the literature one can find several methods to extract ellipses. Most of them are based on the Hough transform, e.g. (Tsuji and Matsumoto, 1978). However, they are not suitable for half ellipse detection as they do not deliver endpoints.

Endpoints of a half ellipse to be extracted do not need to be labeled or explicitly visible, e.g. as corners. For that reason the system extracts several half circles from a circle. The exact number depends on the size of the circle. A bigger one can deliver over 100 half circles.

The invariant representation introduced above offers a convenient way to extract a half ellipse. As Figure 6 shows, the system determines two extremes for a chain of edge points. In the next step it calculates the unique half ellipse which would also have such extremes and endpoints. Then it checks whether all edge points of the chain lie in an ε-neighborhood of the calculated unique half ellipse.

3.5 Rotation, Translation and Scaling Invariant Representation of a Combination of Half Ellipses

The set of combinations $\mathcal{C} = \bigcup_{n \in \mathbb{N}} HE^n$ consists of ordered sequences of half ellipses. The rotation, translation and scaling invariant representation $F^4 : \mathcal{C} \to \bigcup_{n \in \mathbb{N}} \mathbb{R}^{6n}$ is defined as

$$F^4\big((e^i, B^i)_i\big) = \Big( F^1_{e^1_1, e^1_2}(e^i_1),\; F^1_{e^1_1, e^1_2}(e^i_2),\; F^3(e^i, B^i) \Big)_i \tag{11}$$

with $i \in \{1,\dots,n\}$. The values of the representation of the endpoints of the combination shown in the left part of Figure 7 can be read off directly from the right part of the figure.

Figure 7: Representation of the endpoints of a combination.
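The construction in (11) can be sketched in a few lines. The example assumes that each half ellipse is given as a triple `(e1, e2, code)` of complex numbers, where `code` stands for an already computed $F^3$ value; this data layout and the name `f4` are illustrative and not taken from the system.

```python
def f1(x, y, z):
    """F^1_{x,y}(z) from (5)."""
    return (z - x) / (y - x)

def f4(combination):
    """F^4 from (11): six real numbers per half ellipse, normalized by the first one."""
    a, b = combination[0][0], combination[0][1]   # endpoints of the first half ellipse
    vec = []
    for e1, e2, code in combination:
        for value in (f1(a, b, e1), f1(a, b, e2), code):
            vec.extend((value.real, value.imag))
    return vec

combo = [(0j, 2 + 0j, 1j), (3 + 1j, 3 + 3j, 0.5 + 0.25j)]
vec = f4(combo)

# Rotating, scaling and translating all endpoints leaves the vector unchanged.
alpha, beta = complex(0.0, 3.0), complex(7.0, -2.0)
moved = [(alpha * e1 + beta, alpha * e2 + beta, code) for e1, e2, code in combo]
moved_vec = f4(moved)
```

Because every endpoint is expressed relative to the first half ellipse, the first four entries of any such vector are always 0, 0, 1, 0.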

3.6 Reflection Invariance

The rotation, translation and scaling invariant representation introduced above will now be extended to a reflection invariant one. The purpose is to find a representation which does not change with the axis of reflection. For $n \in \mathbb{N}$ and the diagonal matrix $M_n \in \mathbb{R}^{6n \times 6n}$ defined as

$$M_n = \begin{pmatrix} 1 & 0 & \cdots & 0 & 0 \\ 0 & -1 & \cdots & 0 & 0 \\ \vdots & & \ddots & & \vdots \\ 0 & 0 & \cdots & 1 & 0 \\ 0 & 0 & \cdots & 0 & -1 \end{pmatrix} \tag{12}$$

the rotation, translation, scaling and reflection invariant representation $F^5$ is defined as

$$F^5 : \begin{cases} \mathcal{C} \to \bigcup_{n \in \mathbb{N}} P(\mathbb{R}^{6n}) \\ c \mapsto \{F^4(c),\; M_n(F^4(c))\} \end{cases} \tag{13}$$

In other words, the representation consists of two feature vectors. On the one hand this representation does not change under rotation, ..., reflection. On the other hand two combinations with identical representations can be transformed into each other through rotation, ..., reflection.
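The effect of $M_n$ can be illustrated on the endpoint part of the code. The sketch assumes complex-number points, so that negating every second entry corresponds to complex conjugation; `mirror`, `f5` and `endpoint_code` are hypothetical helper names, and the $F^3$ entries are left out for brevity.

```python
def mirror(vec):
    """Apply M_n from (12): keep even positions, negate odd positions."""
    return [v if k % 2 == 0 else -v for k, v in enumerate(vec)]

def f5(vec):
    """F^5 from (13) for an already computed F^4 vector."""
    return frozenset({tuple(vec), tuple(mirror(vec))})

def endpoint_code(endpoints):
    """Endpoint entries of (11) for a list of (e1, e2) pairs."""
    a, b = endpoints[0]
    out = []
    for e1, e2 in endpoints:
        for p in (e1, e2):
            w = (p - a) / (b - a)
            out.extend((w.real, w.imag))
    return out

pts = [(0j, 2 + 1j), (3 - 1j, 1 + 4j)]
code = endpoint_code(pts)
# Reflection across the x-axis (conjugation) and across the y-axis (negated conjugation).
across_x = endpoint_code([(p.conjugate(), q.conjugate()) for p, q in pts])
across_y = endpoint_code([(-p.conjugate(), -q.conjugate()) for p, q in pts])
```

Both reflected copies produce the same code, namely `mirror(code)`, which is the reason a single additional vector covers every axis of reflection.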

An example makes it plausible why the additional vector is invariant to the axis of reflection. Figure 8 shows one combination reflected horizontally and vertically. Figure 9 shows the identical code of the endpoints of both reflected combinations.

4 ROBUSTNESS TO PERSPECTIVE CHANGE

Figure 10 offers a sketch of the rather technical formulation and implementation of the viewpoint tolerance of the system. As shown in the left part of the figure, the task is to recognize an object within the frame if a camera is placed at some point of the sphere above the dark line and its projection surface is parallel to the tangential plane at that point. To model a camera the perspective projection was used as described e.g. in (Jaehne, 2005).

Figure 8: A combination reflected horizontally and vertically.

Figure 9: Representation of endpoints of the reflected combinations.

Figure 10: Formulation and implementation of perspective robustness.

To solve this task the system builds a coverage, minimal in some sense, of the part of the sphere above the dark line, as shown in the right part of the figure. For each point of the coverage the system makes a perspective transformation of the original rotation, ..., reflection invariant representation and learns it. Albeit storage intensive, this solution is simple and mathematically precise.


5 THE TASK OF THE RETRIEVAL ALGORITHM

The storage of the system saves a set $A \subseteq \bigcup_{n \in \mathbb{N}} \mathbb{R}^{6n}$ of feature vectors representing combinations of half ellipses, not the original combinations.

From an image to analyze the system extracts a set of half ellipses $B \subseteq HE$. For each $a = (a_i)_{i \in \{1,\dots,n\}} \in A \cap \mathbb{R}^{6n} = A \cap \prod_{i \in \{1,\dots,n\}} \mathbb{R}^6$ the retrieval algorithm determines the maximal $m \in \{1,\dots,n\}$ for which a subsequence $\pi \in \{1,\dots,n\}^{\{1,\dots,m\}}$ with $\pi(1) = 1$ and $b \in B^m$ exist with

$$\forall i \in \{1,\dots,m\} : \left\| a_{\pi(i)} - c_i \right\|_{\max} \le \varepsilon \tag{14}$$

with $F^4(b) = c \in \mathbb{R}^{6m}$ and $\varepsilon > 0$. In other words, two feature vectors are compared with respect to the maximum norm.

To find such a maximal $m \in \{1,\dots,n\}$ the system tries all $m$, $\pi$, $b$. The new type of machine learning algorithm makes it possible to compare each $(a_{\pi(i)})_i$ with each $c = F^4(b)$. Thus the task is to find the highest $m$ with a successful comparison.

$\varepsilon > 0$ is chosen only once, prior to the initialization of the system.

By comparing two feature vectors with respect to the maximum norm the system tolerates deformation of an object within $\varepsilon$.
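The task stated in (14) can be written down as a naive enumeration. This is only a sketch of the problem statement, not of the storage and search algorithm developed for the system, and it is exponential in the combination length. It assumes half ellipses given as triples `(e1, e2, code)` of complex numbers with `code` standing for a precomputed $F^3$ value; all function names are illustrative.

```python
from itertools import combinations, product

def f4(combination):
    """F^4 from (11), returned as one 6-vector per half ellipse."""
    a, b = combination[0][0], combination[0][1]
    blocks = []
    for e1, e2, code in combination:
        values = ((e1 - a) / (b - a), (e2 - a) / (b - a), code)
        blocks.append([part for v in values for part in (v.real, v.imag)])
    return blocks

def close(u, v, eps):
    """Maximum-norm comparison of two 6-vectors as in (14)."""
    return max(abs(p - q) for p, q in zip(u, v)) <= eps

def longest_match(stored, image_half_ellipses, eps):
    """Largest m for which some increasing pi with pi(1)=1 and some b in B^m satisfy (14)."""
    n = len(stored)
    for m in range(n, 0, -1):
        for rest in combinations(range(1, n), m - 1):   # first index is always kept
            pi = (0,) + rest
            for b in product(image_half_ellipses, repeat=m):
                c = f4(list(b))
                if all(close(stored[pi[i]], c[i], eps) for i in range(m)):
                    return m
    return 0

learned = [(0j, 1 + 0j, 1j), (2 + 0j, 2 + 1j, 0.5j), (4 + 1j, 5 + 1j, -0.3 + 0.8j)]
stored = f4(learned)

# Image: first and third half ellipse rotated, scaled and shifted; the second is occluded.
alpha, beta = complex(0.0, 2.0), complex(5.0, -2.0)
image = [(alpha * e1 + beta, alpha * e2 + beta, code) for e1, e2, code in (learned[0], learned[2])]
image.append((10 + 10j, 11 + 13j, 0.2 + 0.2j))          # unrelated half ellipse
best = longest_match(stored, image, eps=1e-6)
```

In this example the occluded half ellipse cannot be matched, so the longest matched subsequence has length 2.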

6 EXPERIMENTAL RESULTS

6.1 COIL-100

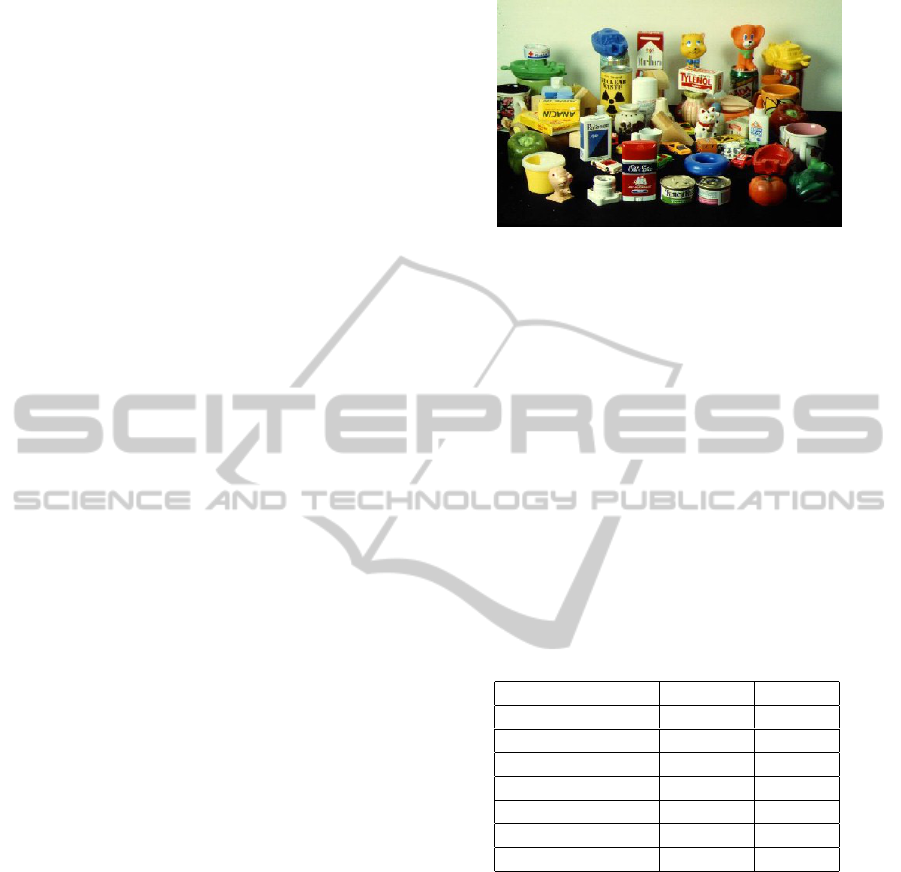

To evaluate the system the well known database COIL-100 (Columbia Object Image Library) was used. It is available at http://www1.cs.columbia.edu/CAVE/software/softlib/coil-100.php. The data set is described in (Nene et al., 1996). It contains 7200 color images of 100 3D objects shown in Figure 11. One image is taken per 5° of rotation.

6.2 Experiment Settings and Results

The computer used in the experiments has an Intel(R) Core(TM)2 Duo CPU P8600 @ 2.40 GHz processor and 4.00 GB RAM. The system is implemented in Java.

Two experiments with slightly different parameter settings were made.

In the first experiment 18 views (1 per 20°) were used to learn each object. The remaining 5400 images were analyzed. A recognition rate of 99.2% was reached. The time needed to learn all objects is 277 seconds. The average time needed to analyze one image is 980 milliseconds.

In the second experiment 8 views (1 per 45°) were used to learn each object. The other 6400 images were analyzed. A recognition rate of 96.3% was reached. The system needs 142 seconds to learn all objects. The time needed to analyze a single image is 1593 milliseconds.

Figure 11: COIL-100 objects.
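The image counts of both experiments follow from the 5° capture step of COIL-100. The short sketch below reproduces the arithmetic; the function name `split_counts` and its parameters are illustrative only.

```python
def split_counts(train_step_deg, n_objects=100, capture_step_deg=5):
    """Return (training views per object, number of remaining test images)."""
    views_per_object = 360 // capture_step_deg      # 72 views per object in COIL-100
    train_views = 360 // train_step_deg             # one training view per step
    test_images = (views_per_object - train_views) * n_objects
    return train_views, test_images

first = split_counts(20)    # first experiment
second = split_counts(45)   # second experiment
```

With these settings `first` is `(18, 5400)` and `second` is `(8, 6400)`, matching the numbers reported above.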

6.3 Comparison to other Methods

Table 1 is based on the results described in (Yang et al., 2000) and (Caputo et al., 2000).

Table 1: Comparison with Alternative Results.

| Method | 18 views | 8 views |
|---|---|---|
| LAFs | 99.9% | 99.4% |
| Half Ellipses | 99.2% | 96.3% |
| SNoW / edges | 94.1% | 89.2% |
| SNoW / intensity | 92.3% | 85.1% |
| Linear SVM | 91.3% | 84.8% |
| Spin-Glass MRF | 96.8% | 88.2% |
| Nearest Neighbor | 87.5% | 79.5% |

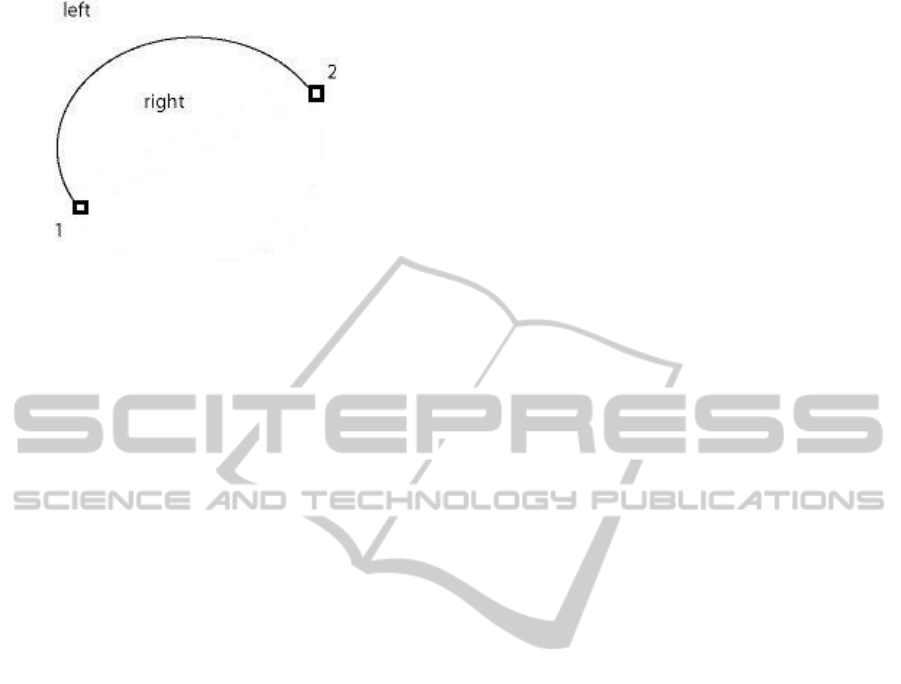

6.4 Color Information

The pure form representation described above was extended with color information. A half ellipse has a first and a last point. Hence it also has a right and a left side, as Figure 12 shows. After the extraction of a half ellipse the system determines the arithmetic RGB average along the right side of the half ellipse as well as along the left one. Thus it determines two RGB vectors $l, r \in \mathbb{R}^3$. The color code $c \in \mathbb{R}^6$ is simply the pair of these two vectors, $c = (l, r)$. A representation vector $a \in \mathbb{R}^{6n}$ of a half ellipse combination $b \in HE^n$ gets extended to $\tilde{a} \in \mathbb{R}^{6n+6n}$ with a color code $(c_i)_{i \in \{1,\dots,n\}} \in \prod_{i \in \{1,\dots,n\}} \mathbb{R}^6$ for each half ellipse of the combination. An additional threshold value $\tilde{\varepsilon} > 0$ is used to compare the color information of two representation vectors with respect to the maximum norm. An upcoming article explicitly describes the extraction of half ellipses and their color.

Figure 12: The left and the right side of a half ellipse.
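The color code can be sketched as follows, assuming the pixels sampled along each side are already available as lists of RGB triples. How those pixels are collected is not described here, so the inputs and the names `mean_rgb`, `color_code` and `colors_match` are hypothetical.

```python
def mean_rgb(pixels):
    """Arithmetic RGB average of a non-empty list of (r, g, b) triples."""
    n = len(pixels)
    return tuple(sum(p[k] for p in pixels) / n for k in range(3))

def color_code(left_pixels, right_pixels):
    """Color code c = (l, r): six numbers per half ellipse."""
    return mean_rgb(left_pixels) + mean_rgb(right_pixels)

def colors_match(c1, c2, eps_color):
    """Maximum-norm comparison of two color codes with a separate threshold."""
    return max(abs(u - v) for u, v in zip(c1, c2)) <= eps_color

code = color_code([(10, 20, 30), (30, 40, 50)], [(200, 100, 0)])
```

Here `code` evaluates to `(20.0, 30.0, 40.0, 200.0, 100.0, 0.0)`; two codes are accepted as equal when no channel differs by more than the color threshold.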

6.5 Learning and Recognition Scheme used for COIL-100

As mentioned above, the system uses e.g. 8 images to learn an object. For one image it constructs e.g. 10 combinations of half ellipses. Each combination is represented with e.g. 6 feature vectors. Each vector is labeled with the number $N \in \{1,\dots,100\}$ of the object it refers to.

When analyzing an image the system first determines the maximal length $m \in \mathbb{N}$ of the matched subsequences for each learned feature vector. Let the set of such lengths be denoted as $M$. For $\tilde{m} = \max M$ the system selects all feature vectors for which subsequences of the length $\tilde{m}$ were matched. The object with the greatest number of such feature vectors is returned as the recognized one. If there are several such objects, the system chooses one of them randomly.
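The decision rule described above can be sketched as a small voting function. It assumes that the retrieval step has already produced, for every learned feature vector, its object label and the length of its longest matched subsequence; the input format and the name `recognize` are illustrative.

```python
import random
from collections import Counter

def recognize(matches, rng=random):
    """matches: list of (object_label, matched_length), one entry per learned vector."""
    best_len = max(length for _, length in matches)               # m~ = max M
    votes = Counter(label for label, length in matches if length == best_len)
    top = max(votes.values())
    winners = sorted(label for label, count in votes.items() if count == top)
    return rng.choice(winners)                                    # random tie break

result = recognize([(7, 3), (7, 5), (12, 5), (12, 5), (40, 4)])
```

In this example only vectors with matched length 5 vote, object 12 collects two of the three votes, and `result` is 12.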

7 SUMMARY AND FUTURE WORK

The object recognition system presented in this paper combines several important characteristics. It is capable of handling 3D objects. The half ellipse extraction is at least stable enough to handle COIL-100 images.

The trivial color representation used now has yet to become illumination invariant. The optimization of the running time does not appear to be a great problem as the central retrieval algorithm is highly parallelizable. The greatest challenge seems to be the reduction of the storage consumption without loss of perspective robustness.

At present the authors are developing a flow estimator based on the comparison of half ellipse combinations. The flow estimator learns thousands of half ellipse combinations on the first frame and tries to match them on the second one. So in the near future the system could gain a universal character, being simultaneously capable of object recognition and flow estimation.

REFERENCES

Arbter, K., Snyder, W. E., Burkhardt, H., and Hirzinger, G. (1990). Application of affine-invariant Fourier descriptors to recognition of 3D objects. In IEEE Trans. Pattern Analysis and Machine Intelligence.

Bishop, C. (2007). Neural Networks for Pattern Recognition. Oxford University Press.

Canny, J. (1986). A computational approach to edge detection. In IEEE Transactions on Pattern Analysis and Machine Intelligence.

Caputo, B., Hornegger, J., Paulus, D., and Niemann, H. (2000). A spin-glass Markov random field for 3D object recognition. NIPS 2000.

Dalal, N. and Triggs, B. (2005). Histograms of oriented gradients for human detection. In IEEE Conference Computer Vision and Pattern Recognition, San Diego.

Gyofri, L., Kohler, M., Krzyzak, A., and Walk, H. (2002). A Distribution-Free Theory of Nonparametric Regression. Springer.

Hough, P. V. C. (1962). Method and Means of Recognising Complex Patterns. US Patent 3069654.

Hu, M. K. (1962). Visual pattern recognition by moment invariants. In IRE Transactions on Information Theory.

Jaehne, B. (2005). Digital Image Processing. Springer-Verlag Berlin.

Nene, S. A., Nayar, S. K., and Murase, H. (1996). Columbia Object Image Library (COIL-100).

Reiss, T. H. (1993). Recognizing Planar Objects Using Invariant Image Features. Springer-Verlag Berlin Heidelberg.

Rosenblatt, F. (1962). Principles of Neurodynamics. Spartan, New York.

Tsuji, S. and Matsumoto, F. (1978). Detection of ellipses by a modified Hough transform. In IEEE Trans. Comput.

Vapnik, V. N. (1998). Statistical Learning Theory. Wiley, New York.

Yang, M. H., Roth, D., and Ahuja, N. (2000). Learning to recognize 3D objects with SNoW. In ECCV 2000.
