GAZE TRAJECTORY AS A BIOMETRIC MODALITY

Farzin Deravi and Shivanand P. Guness

School of Engineering and Digital Arts, University of Kent, Canterbury, CT2 7NT, U.K.

Keywords: Biometrics, Gaze tracking.

Abstract: Could everybody be looking at the world in a different way? This paper explores the idea that every

individual has a distinctive way of looking at the world and thus it may be possible to identify an individual

by how they look at external stimuli. The paper reports on a project to assess the potential for a new

biometric modality based on gaze. A gaze tracking system was used to collect gaze information of

participants while viewing a series of images for about 5 seconds each. The data collected was first

analysed to select the best suited features using three different algorithms: the Forward Feature Selection,

the Backwards Feature Selection and the Branch and Bound Feature Selection algorithms. The performance

of the proposed system was then tested with different amounts of data used for classifier training. From the

preliminary experimental results obtained, it can be seen that gaze does have some potential for use

as a biometric modality. The experiments were carried out on only a very small sample; more testing is

required to confirm the preliminary findings of this paper.

1 INTRODUCTION

Biometric systems aim to establish the identity of

individuals using data obtained from their physical

or behavioural characteristics. In recent years there

has been an increasing range of systems developed

using a wide variety of biometric modalities –

including fingerprints, face, voice and gait. In this

work we propose the human gaze as a new modality

that may also be used to establish identity.

Gaze tracking is the process of continuously

measuring the point or direction of gaze of the eyes

of an individual. Up to now we are not aware of any

studies on the use of gaze as a source of biometric

information. In particular, the possibility of using

gaze direction as a means for identifying individuals

will be explored in this work.

Gaze may be considered a type of behavioural

biometrics. Such behavioural modalities are based

on acquired behaviour, style, preference, knowledge,

motor-skills or strategy used by people while

accomplishing different everyday tasks such as

driving an automobile, talking on the phone or using

a computer (Goudelis, Tefas, & Pitas, 2009;

Gutiérrez-García, Ramos-Corchado, & Unger, 2007).

Human Computer Interaction (HCI) is the

interaction between the user and devices such as

personal computers, smartphones, etc. HCI can also

be used as a source of biometric information because

the interaction of a user and his computer may be

quite distinctive if not unique. One attraction for

using HCI as a biometric modality is the potential to

develop a non-intrusive authentication mechanism

(Yampolskiy, 2007).

Gaze may therefore be considered in part as a

behavioural biometrics. At the same time it may also

have physiological aspects determined by the tissues

and muscles that determine its capabilities and

limitations. In this respect, it may be likened to text-

dependent automatic speaker recognition where a

user is asked to read a pre-defined text and the sound

generated is analysed and compared with a database

to establish his or her identity. In a similar fashion

the user of gaze biometrics may be shown a

predefined sequence of images and the gaze

information is analysed and compared with a

database of previously stored gaze data to recognize

the individual.

In particular the data generated may be analysed

in a similar fashion to Online Signature Verification

because the gaze data is very similar to online

signature, except for the fact that the gaze data is

collected with reference to a screen where the

stimulus images are presented and the signature data

is obtained on a digitalised pad. A study of HCI-based biometric modalities such as Keystroke and Mouse Dynamics may also be helpful in the development of gaze as a biometric modality.

Deravi F. and Guness S.
GAZE TRAJECTORY AS A BIOMETRIC MODALITY.
DOI: 10.5220/0003275803350341
In Proceedings of the International Conference on Bio-inspired Systems and Signal Processing (BIOSIGNALS-2011), pages 335-341
ISBN: 978-989-8425-35-5
Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

The rest of the paper is organized as follows: In

Section 2 a review of some related sources of

biometric information is presented. Section 3

describes the design of our proposed system while

Section 4 presents the experimental setup and some

preliminary test results. Finally Section 5 provides

tentative conclusions and suggestions for further

work.

2 BACKGROUND

In this section we look at

traditional behavioural biometrics such as Online

Signature Verification and also HCI based

biometrics such as Keystroke and Mouse Dynamics.

The behavioural aspect of gaze and eye movement is

also investigated.

2.1 Biometric Modalities

2.1.1 Online Signature Verification

The study by Lei and Govindaraju investigated the consistency of the features used for signature verification. The speed, the coordinate points and the angle between the speed vector and the X-axis were found to be the most consistent and reliable. The False Accept Rate (FAR), False Reject Rate (FRR) and the Equal Error Rate (EER) were calculated for each feature and this data was used to find the most consistent features (Lei & Govindaraju, 2005). Chapran et al studied the 35 most widely used features of handwritten signatures. The ANOVA (Analysis of Variance) statistical method was used to find the variance of the different features. This enables

finding the features that changed when a forged

signature was entered. This also helped to find the

features which are most suitable for the different

types of writing activities such as writing cheques

and signing forms (Chapran, Fairhurst, Guest, &

Ujam, 2008).
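The error rates named above can be illustrated with a minimal Python sketch. It assumes that a matcher produces a similarity score per comparison and that scores at or above a threshold are accepted; the function names and the score lists are hypothetical and are not taken from the cited studies.

```python
from typing import List, Tuple


def far_frr(genuine: List[float], impostor: List[float],
            threshold: float) -> Tuple[float, float]:
    """Return (FAR, FRR) when scores >= threshold are accepted."""
    # FAR: fraction of impostor attempts that are wrongly accepted.
    far = sum(s >= threshold for s in impostor) / len(impostor)
    # FRR: fraction of genuine attempts that are wrongly rejected.
    frr = sum(s < threshold for s in genuine) / len(genuine)
    return far, frr


def equal_error_rate(genuine: List[float],
                     impostor: List[float]) -> Tuple[float, float]:
    """Scan every observed score as a threshold and return the
    (threshold, EER estimate) where FAR and FRR are closest."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, t, (far + frr) / 2)
    return best[1], best[2]


# Made-up similarity scores, for illustration only.
genuine_scores = [0.9, 0.8, 0.75, 0.6, 0.4]
impostor_scores = [0.1, 0.2, 0.3, 0.5, 0.7]
threshold, eer = equal_error_rate(genuine_scores, impostor_scores)
print(f"threshold={threshold}, EER={eer:.2f}")
```

With these toy scores FAR and FRR meet at a threshold of 0.6, where both equal 0.2. A lower EER indicates a feature or system that separates genuine users from impostors more reliably.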

2.1.2 Keystroke Biometrics

Keystroke biometric modality involves collecting

data about the typing pattern of the user. There are

two different types of keystroke biometrics. The

keystroke biometrics can be either static or

continuous. In static keystroke biometrics, the keystroke biometric is only used at login time, whereas with continuous keystroke biometrics the modality is continuously monitored. The advantage of continuous over static keystroke biometrics is that an imposter can be detected even if the imposter is substituted for a genuine user after the initial authentication process. According to Monrose & Rubin, the features that can be used are the time between keystrokes, the duration of the keystroke, finger placement and applied pressure on the keys

(Monrose & Rubin, 2000).
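The two timing features mentioned above can be sketched in Python as follows. The event format (key, press time, release time) and the function name are assumptions made for this illustration, and "time between keystrokes" is taken here to mean the gap between releasing one key and pressing the next, which is only one of several possible definitions.

```python
from typing import List, Tuple

# Hypothetical event format: (key, press_time_ms, release_time_ms).
KeyEvent = Tuple[str, int, int]


def keystroke_features(events: List[KeyEvent]) -> Tuple[List[int], List[int]]:
    """Return (dwell times, flight times) in milliseconds."""
    # Dwell time: how long each key is held down.
    dwell = [release - press for _, press, release in events]
    # Flight time: gap between releasing one key and pressing the next.
    flight = [nxt[1] - cur[2] for cur, nxt in zip(events, events[1:])]
    return dwell, flight


# Made-up timings, for illustration only.
events = [("k", 0, 90), ("e", 150, 230), ("n", 300, 370), ("t", 420, 520)]
dwell_times, flight_times = keystroke_features(events)
print(dwell_times, flight_times)
```

Finger placement and key pressure would need additional sensors and are not covered by this sketch.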

2.1.3 Mouse Dynamics

Mouse Dynamics uses information such as the

direction of the movement of the mouse, timing and

monitoring when actions such as clicking are

performed to authenticate a user. There are two ways

in which data is captured for this modality. Data can be collected by continuously monitoring the activities of the user. Another method is to capture the mouse interaction in an application such as a game. Revett et al conducted a survey of mouse movement based biometric authentication systems. They also proposed a novel graphical authentication system called Mouse Lock. The preliminary results showed that mouse movement or mouse dynamics could be a viable biometric modality. Testing their application with 5 users, they found the FAR of the system to be in the range of 2% to 6% and the FRR to be in the range of 0% to 7% (Revett, Jahankhani, Magalhães, & Santos, 2008).

2.1.4 Eye Behaviour

In a paper by Adolphs the relationship between the size of the pupils and emotions was investigated. The study looked at how the size of an individual's pupil changes while looking at sad faces.

Pupil size is well-known to be influenced by

stimulus luminance, but it turns out also to be

influenced by other factors, including salience and

emotional meaning (Adolphs, 2006).

In Harrison et al, the size of the pupil was

investigated to see how it changes while viewing the

expressions of another person. The study showed

that the size of the pupil becomes smaller while

viewing sad facial expressions and there is no visible

change caused by expressions of happiness, neutral

expression or expressions of anger (Harrison,

Singer, Rotshtein, Dolan, & Critchley, 2006).

Wang et al used eye tracking and pupil dilation

to see if a person is telling the truth. It was observed

that the pupils were dilated when deceptive

messages were sent and that the dilation was related

to the magnitude of the deception (Wang, Spezio, & Camerer, 2010).

Castelhano et al (2008) investigated the influence of the gaze of another person on the direction of gaze of an observer. In their experiment the participants were shown a sequence of scene photographs that told a story. Some of the scenes contained an actor fixating an object in the scene. It was observed that the first fixation point of the participants was the face of the actor; the eye then moved to focus on the object the actor was focusing on. Furthermore it was observed that even in the presence of other objects in the scene the participants would always focus their gaze on the object the actor was looking at (Castelhano, Wieth, & Henderson, 2008).

In another study, Castelhano et al (2009) investigated the influence of task on the movement of the eye. The experiments consisted of 20 participants who were asked to view color photographs of natural scenes under two different instruction sets. The first instruction set was to do a visual search of the image and the second was to memorize the image. The

results of the experiments show that the fixation

points and the gaze duration of the different

participants were influenced by the task they were

performing. It was also seen that the areas of

fixation were different for both tasks but the

movement amplitude and the duration of the fixation

were not affected (Castelhano, Mack, & Henderson,

2009).

2.2 Tracking Performance

An important consideration is the accuracy with

which gaze data can be captured for further analysis.

The results in the paper by Chan et al show that

their technique can be used to calculate gaze with an

accuracy of 85% to 96% within 2 meters. This was

done by calculating the estimated gaze of the system

and comparing the result to the coordinate of the dot

the user was viewing on the screen. The test was

carried out at various distances (Chao-Ning Chan,

Oe, & Chern-Sheng Lin, 2007).

3 DESIGN

3.1.1 Overview

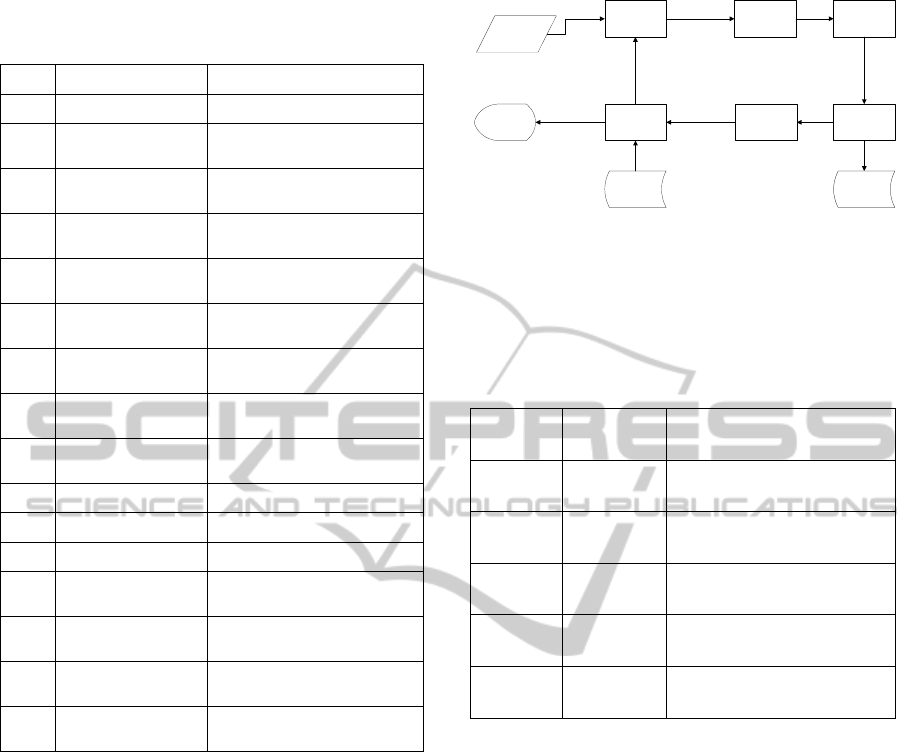

The overall system setup is shown in Figure 1. This

consists of a display screen where stimulus images

are presented and a webcam facing the user to

capture their gaze information. Software in the

computer attached to the webcam is then used to

analyse the images captured by the camera to extract

gaze information which is subsequently further

processed to extract gaze features. Once the features

are extracted, a suitable classifier is used to compare

the captured data with previously stored data for this

and other users. In this way it is possible to establish

error rates for the system and explore its feasibility

as a biometric system.

3.1.2 Stimulus

The stimuli used were obtained from an image

quality database (Engelke, Maeder, & Zepernick,

2009; Le Callet & Autrusseau, 2005). Five images

were chosen from the database. Images of objects or nature and images of humans are displayed alternately to the user. This is done to prevent the user's gaze from being influenced by the content of the image and to offer the user a variety of image types. The images are all

assumed to be of the same quality and thus the gaze

of the user would not be influenced by the quality of

the image.

Figure 1: Stimulus images.

3.1.3 Gaze Data

Table 1 describes the structure of the data to be

stored for the gaze information that is captured. This

data has to contain enough information to enable

further processing for biometric feature extractions.
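As an illustration only, the sixteen fields listed in Table 1 could be held in a record such as the Python dataclass below. The attribute names, types and units are assumptions made for this sketch; the paper specifies only the field names and their descriptions.

```python
from dataclasses import dataclass, fields


@dataclass
class GazeSample:
    """One captured frame, mirroring the 16 fields of Table 1.
    Types and units are assumed, not given in the paper."""
    frame_number: int            # 1  unique incremental number
    time_ms: float               # 2  time the gaze was captured
    interval_ms: float           # 3  interval time used by the sensor
    left_pupil_x: float          # 4  X of the centre of the left pupil
    left_pupil_y: float          # 5  Y of the centre of the left pupil
    left_tracked: bool           # 6  tracking status of the left pupil
    right_pupil_x: float         # 7  X of the centre of the right pupil
    right_pupil_y: float         # 8  Y of the centre of the right pupil
    right_tracked: bool          # 9  tracking status of the right pupil
    data_type: str               # 10 type of the data
    left_pupil_size: float       # 11 size of the left pupil
    right_pupil_size: float      # 12 size of the right pupil
    stimulus: str                # 13 stimulus being presented
    gaze_x: float                # 14 X of the estimated gaze point
    gaze_y: float                # 15 Y of the estimated gaze point
    interocular_distance: float  # 16 distance between the two pupils

    def both_eyes_tracked(self) -> bool:
        """True when both pupils were tracked in this frame."""
        return self.left_tracked and self.right_tracked


FIELD_NAMES = [f.name for f in fields(GazeSample)]

# Made-up values, for illustration only.
sample = GazeSample(1, 0.0, 33.3, 312.0, 240.5, True, 372.0, 241.0, True,
                    "gaze", 4.1, 4.0, "image_1", 960.0, 540.0, 60.0)
print(len(FIELD_NAMES), sample.both_eyes_tracked())
```

Keeping the tracking status next to the coordinates makes it straightforward to discard frames in which either pupil was lost before features are extracted.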

3.1.4 Gaze Capture

Table 1: Data structure of the data retrieved from the gaze.

| # | Field Name | Description |
|---|---|---|
| 1 | Frame Number | Unique incremental number |
| 2 | Time | The time the gaze was captured |
| 3 | Interval | The interval time used by the sensor |
| 4 | X coordinate of left pupil | The X coordinate of the centre of the left pupil |
| 5 | Y coordinate of left pupil | The Y coordinate of the centre of the left pupil |
| 6 | Tracking status of left pupil | The tracking status of the left pupil |
| 7 | X coordinate of right pupil | The X coordinate of the centre of the right pupil |
| 8 | Y coordinate of right pupil | The Y coordinate of the centre of the right pupil |
| 9 | Tracking status of right pupil | The tracking status of the right pupil |
| 10 | Type of data | The type of the data |
| 11 | Size of left pupil | The size of the left pupil |
| 12 | Size of right pupil | The size of the right pupil |
| 13 | Stimulus | The stimulus being presented to the user |
| 14 | X coordinate of gaze point | The X coordinate of the estimated gaze point |
| 15 | Y coordinate of gaze point | The Y coordinate of the estimated gaze point |
| 16 | Interocular distance | The distance between the left and right pupil of the user |

Figure 2 shows the flow diagram for the enrolment process. User enrolment is the process whereby the biometrics of the user is processed and added to the

system. The user is presented with a number of

images. The images are presented to the user in a

full screen and modal mode, i.e. the user would not see any other activity on the screen and the application would have the focus during the gaze capture session, so as to prevent the user from being distracted by other operations on the screen. The gaze data of the user on the images are recorded. Between images the screen is greyed out and the user is asked to fixate on the location in the middle of the screen. This data is used to calibrate the gaze of the user and also to ensure that the user starts looking at each of the images from the same location. The mapping from the location of the pupil to the centre of the screen is stored as the calibration data and is used to estimate the gaze of the user when the user is looking at the screen or images.

Figure 2: Flow chart of user enrolment.

3.1.5 Gaze Features

Table 2 is a list of features to be extracted from the

gaze data:

Table 2: List of features from gaze data.

| Data Column # | Feature | Description |
|---|---|---|
| 2 | Duration | The duration the gaze was at the current position |
| 4, 5 | Left Pupil location | The location of the left pupil |
| 7, 8 | Right Pupil location | The location of the right pupil |
| 11, 12 | Size of Pupil | The size of the pupil |
| 14, 15 | Gaze Point | The calculated gaze point on the screen |

The data column number corresponds to the data

column in Table 1.
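The paper does not describe how the Duration feature is derived from the time column. One possible reading, sketched below in Python, groups consecutive samples whose gaze point stays within a fixed radius of the first sample of the group and reports the time spanned by each group. The radius value, the sample format and the function name are all assumptions made for this illustration.

```python
from typing import List, Tuple

# Hypothetical reduced sample: (time_ms, gaze_x, gaze_y).
Sample = Tuple[float, float, float]


def dwell_durations(samples: List[Sample],
                    radius: float = 30.0) -> List[Tuple[float, float, float]]:
    """Group consecutive samples that stay within `radius` pixels of the
    first sample of the group. Returns (x, y, duration_ms) per group,
    where duration is measured from the first to the last sample."""
    groups = []
    start = 0
    for i in range(1, len(samples) + 1):
        # Has the gaze left the neighbourhood of the group's first sample?
        moved = i < len(samples) and (
            (samples[i][1] - samples[start][1]) ** 2
            + (samples[i][2] - samples[start][2]) ** 2
        ) > radius ** 2
        if i == len(samples) or moved:
            t0, x, y = samples[start]
            groups.append((x, y, samples[i - 1][0] - t0))
            start = i
    return groups


# Made-up gaze samples, for illustration only.
samples = [
    (0.0, 100.0, 100.0),
    (40.0, 105.0, 98.0),
    (80.0, 102.0, 101.0),
    (120.0, 400.0, 300.0),
    (160.0, 405.0, 302.0),
]
durations = dwell_durations(samples)
print(durations)
```

Here the gaze rests near (100, 100) for 80 ms and then near (400, 300) for 40 ms. The radius trades sensitivity to small eye movements against robustness to tracker noise.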

4 EXPERIMENTATION

4.1 Set Up

For the experiment the equipment is placed in a table-mounted configuration. The camera is placed in front and in the middle of the screen. The user is positioned at a distance of 30-60 cm from the screen. The procedure of the test is based on (Duchowski, 2007; Judd, Ehinger, Durand, & Torralba, 2009; Van der Linde, Rajashekar, Bovik, & Cormack, 2009).

4.2 Procedure

4.2.1 Initialisation

The purpose of the initialisation phase is to detect and initialise the location of the face, eyes and nose. The purpose of this phase is to verify if the user is facing the sensor and viewing the scene. Once a face is detected and the eye and pupil centres are detected the calibration phase can begin.

[Figure 2 labels: 1. Display picture; 2. Gaze tracking module; 3. Extract Features; 4. Generate Template; 5. Store template; 6. Get Next picture. Other elements: Screen, Picture Database, Template Database, Data From Sensor.]

4.2.2 Calibration

For the calibration phase the user is presented with 9

dots on the screen. The dots are shown at one

location at a time. The user has to fix their gaze on

the dots when they appear. Each dot is displayed to

the user for a period of 5 seconds (5000

milliseconds).
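The paper does not state how the nine calibration dots are turned into a pupil-to-screen mapping. A minimal Python sketch of one common option, a least-squares affine fit, is given below. The affine model, the 1920 x 1080 dot layout, the synthetic pupil positions and all function names are assumptions made for this illustration, not the authors' method.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gauss-Jordan elimination
    with partial pivoting."""
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][3] / m[i][i] for i in range(3)]


def fit_affine(pupil: Sequence[Point],
               screen: Sequence[Point]) -> List[List[float]]:
    """Least-squares fit of screen = a*px + b*py + c, one row of
    coefficients [a, b, c] per screen axis, via the normal equations."""
    rows = [(px, py, 1.0) for px, py in pupil]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)]
           for i in range(3)]
    coeffs = []
    for axis in range(2):
        xtt = [sum(r[i] * s[axis] for r, s in zip(rows, screen))
               for i in range(3)]
        coeffs.append(solve3(xtx, xtt))
    return coeffs


def estimate_gaze(coeffs: List[List[float]], pupil_point: Point) -> Point:
    """Map a pupil position to an estimated on-screen gaze point."""
    px, py = pupil_point
    return tuple(c[0] * px + c[1] * py + c[2] for c in coeffs)


# Nine dots on an assumed 1920 x 1080 screen (3 x 3 grid).
screen_dots = [(x, y) for y in (90.0, 540.0, 990.0)
               for x in (160.0, 960.0, 1760.0)]
# Synthetic pupil positions generated from a known linear relation.
pupil_points = [(10.0 + sx / 100.0, 5.0 + sy / 100.0)
                for sx, sy in screen_dots]

coeffs = fit_affine(pupil_points, screen_dots)
gaze = estimate_gaze(coeffs, (19.6, 10.4))
print(gaze)
```

Because the synthetic pupil positions follow an exact linear relation, the fit recovers it and the pupil position recorded for the central dot maps back to approximately (960, 540). Real pupil data would be noisy, and a higher-order model may be needed if head movement or lens distortion is significant.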

4.2.3 Gaze Capture

Once the calibration is complete, the user is shown a

set of images. The images used were obtained from an image quality database (Engelke et al., 2009; Le Callet & Autrusseau, 2005) so as to prevent the quality of the images from influencing the data captured. The images are assumed to be of similar quality. The user is shown each image for a period of 5 seconds (5000 milliseconds). For each set of images 2 gaze capture sessions are required. This is because, as seen in the literature review, gaze or eye movement depends on the task being carried out (Castelhano et al., 2009).

4.2.4 Rest Period

The rest period is used to rest the gaze of the user by

making the user look at the centre of the screen. The

rest period is inserted between the different

stimulus images for gaze capture. The duration of

the rest period is 3 seconds (3000 milliseconds).

4.3 Results

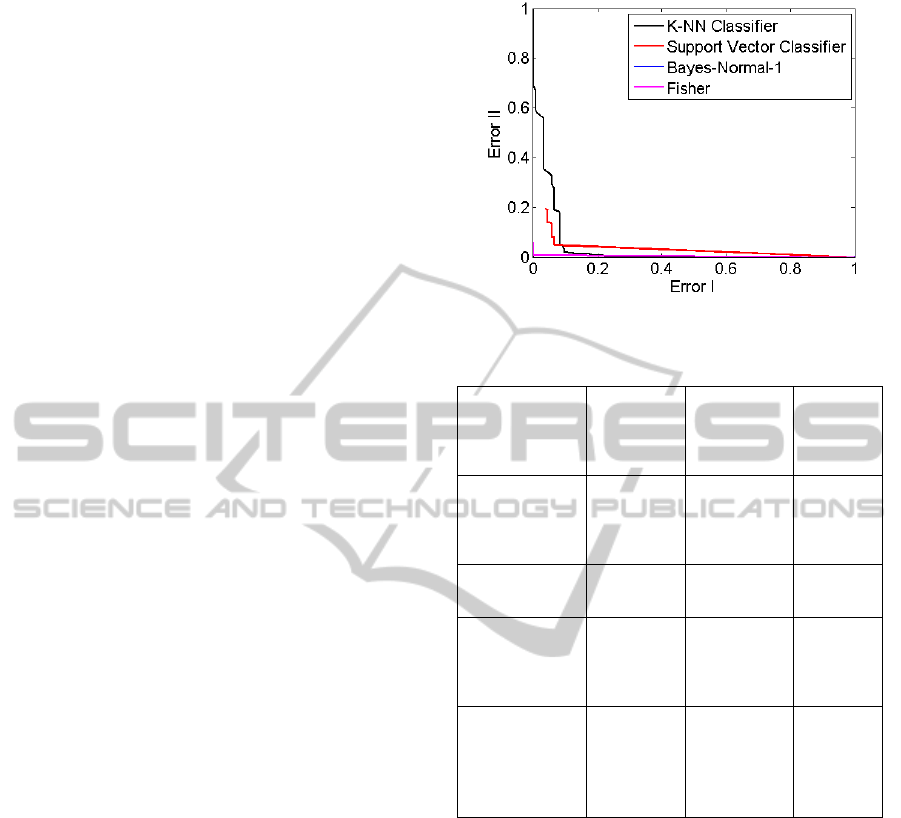

4.3.1 Performance

For this test the experiment the whole feature list as

described in Table 1 : Data structure of the data

retrieved from the gaze.Table 1.

4.3.2 Feature Analysis

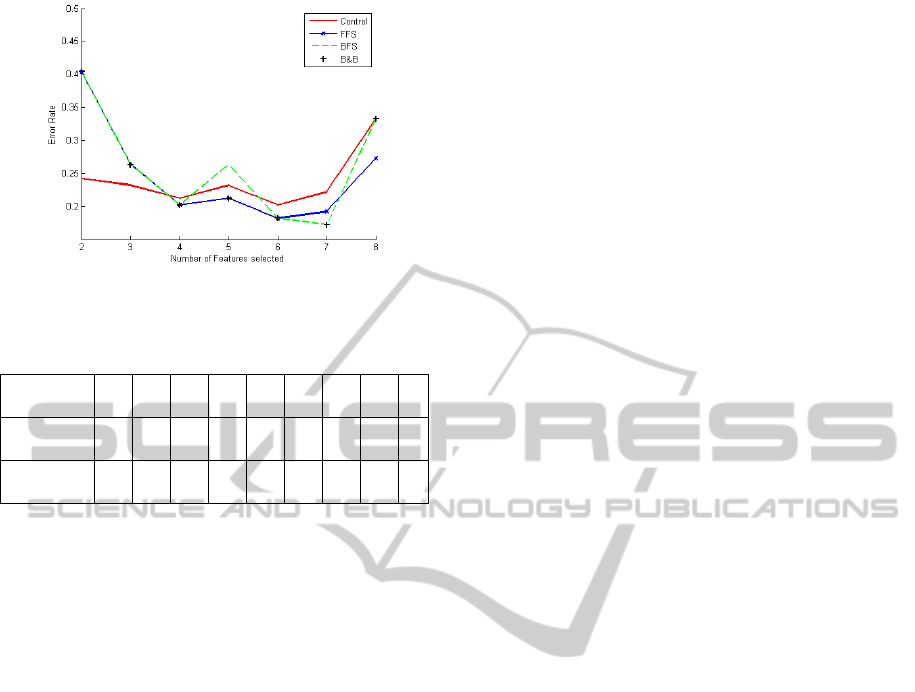

Figure 4, shows the difference in the performance of

the system using features selected using the Forward

Feature Selection (FFS), Backwards Feature

Selection (BFS) and Branch and Bound (B&B)

algorithms. The performance data is compared

against the performance using all the features which

is used as a control. As it can be seen when selecting

2 to 3 features, the performance did not improved

Figure 3: ROC curve with 20% of data as Training data.

Table 3: Results from testing with classifiers.

Classifier

Error Rate

(20%

training

data)

Error Rate

(50%

training data)

Error Rate

(80%

training

data)

K-Nearest

Neighbor

Classifier

(KNNC)

0.152 0.064 0.030

Support Vector

Classifier (SVC)

0.077 0.004 0.010

Normal

densities based

linear classifier

(LDC)

0.077 0.004 0.010

Fisher

Minimum Least

Square Linear

Classifier

(FISHERC)

0.005 0.004 0.000

using the features selection algorithms. On selecting

4 features, there was a slight improvement in the

performance of the system with the feature selection

algorithms. With 5 to 7 features being selected, it

can be seen that the features obtained from the

feature selection algorithms improved the

performance of the system. When 8 features were

selected, using the FFS algorithm only a slightly

improvement of the performance was noticed and

with the BFS and B&B algorithms the performance

was equal to the control performance using all the

features. The best performance is obtained using the

BFS and the B&B algorithms with 7 features

selected from Table 2. The features selected are:


Figure 4: Error rate before and after feature selection.

Table 4: Best Feature selection algorithms and features

selected.

Algorithm 1 2 3 4 5 6 7 8 9

BFS 1 2 3 4 5 6 7

B&B 2 4 7 5 1 6 3
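For readers unfamiliar with sequential selection, a minimal Python sketch of greedy forward feature selection follows. The criterion used here (leave-one-out accuracy of a 1-nearest-neighbour classifier), the toy data and all function names are assumptions made for this illustration; the paper does not state which criterion or implementation was used.

```python
from typing import Callable, List, Sequence


def forward_selection(n_features: int, k: int,
                      score: Callable[[List[int]], float]) -> List[int]:
    """Start from an empty set and repeatedly add the feature that gives
    the highest score, until k features are selected."""
    selected: List[int] = []
    while len(selected) < k:
        remaining = [f for f in range(n_features) if f not in selected]
        best = max(remaining, key=lambda f: score(selected + [f]))
        selected.append(best)
    return selected


def loo_1nn_accuracy(samples: Sequence[Sequence[float]],
                     labels: Sequence[str],
                     features: List[int]) -> float:
    """Leave-one-out accuracy of a 1-nearest-neighbour classifier that
    only looks at the given feature columns."""
    correct = 0
    for i, x in enumerate(samples):
        nearest = min(
            (j for j in range(len(samples)) if j != i),
            key=lambda j: sum((x[f] - samples[j][f]) ** 2 for f in features),
        )
        correct += labels[nearest] == labels[i]
    return correct / len(samples)


# Toy data for two hypothetical users, three features per sample.
# Feature 0 separates the users, feature 1 is interleaved between them,
# feature 2 is only partly informative and on a larger scale.
samples = [
    (1.0, 0.1, 10.0), (1.2, 0.3, 11.0), (0.9, 0.5, 20.0), (1.1, 0.7, 24.0),
    (3.0, 0.2, 30.0), (3.2, 0.4, 31.0), (2.9, 0.6, 23.0), (3.1, 0.8, 40.0),
]
labels = ["A"] * 4 + ["B"] * 4


def criterion(features: List[int]) -> float:
    return loo_1nn_accuracy(samples, labels, features)


chosen = forward_selection(n_features=3, k=2, score=criterion)
print(chosen)
```

On this toy data feature 0 is picked first. Feature 1 is picked second because adding the unscaled feature 2 lets its larger range dominate the distance and lowers the accuracy. Backward selection works the other way round, starting from all features and removing one at a time.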

5 CONCLUSIONS

This work has relied on techniques developed in the fields of behavioural biometrics and HCI-based biometric modalities, combining them with techniques from gaze tracking, pupillometry and facial feature extraction to create a new biometric modality based on gaze. From the preliminary results obtained, it can

be seen that gaze information may have some

potential for being used as a biometric modality. The

experiments carried out were only done on a very

small sample; more testing is required to confirm the

preliminary findings of this project.

A gaze-based biometric modality would be both

an affordable and nonintrusive way of verifying the

user’s identity. In addition, a gaze-based biometric

modality would also open the way to a number of

new application areas. It would be well suited for

verification of users who interact with a whole

range of devices containing a camera such as smart

phones, personal computers etc. Gaze-based

biometric systems could also be used as a remote

authentication system for web sites or e-commerce

sites. Another potential area where such

technologies can be used is in liveness detection. In

such an approach, liveness detection may be based

on the movement of the pupil using as stimulus

either the variation of lighting condition or using

images.

Future research on this topic should be directed at increasing the overall accuracy of the gaze tracking system as well as looking into the possibility of developing a multimodal biometric system based on

other existing biometric modalities such as iris,

fingerprint or HCI-based biometric modalities such

as keystroke or mouse dynamics.

REFERENCES

Adolphs, R. (2006). A landmark study finds that when we

look at sad faces, the size of the pupil we look at

influences the size of our own pupil. Social Cognitive

and Affective Neuroscience, 1(1), 3-4. doi:10.1093/

scan/nsl011

Castelhano, M. S., Mack, M. L., & Henderson, J. M.

(2009). Viewing task influences eye movement control

during active scene perception. Journal of Vision, 9(3)

doi:10.1167/9.3.6

Castelhano, M. S., Wieth, M., & Henderson, J. M. (2008).

I see what you see: Eye movements in real-world

scenes are affected by perceived direction of gaze. 251-262. doi:10.1007/978-3-540-77343-6_16

Chao-Ning Chan, Oe, S., & Chern-Sheng Lin. (2007).

Active eye-tracking system by using quad PTZ

cameras. Industrial Electronics Society, 2007. IECON

2007. 33rd Annual Conference of the IEEE, 2389-

2394.

Chapran, J., Fairhurst, M. C., Guest, R. M., & Ujam, C.

(2008). Task-related population characteristics in

handwriting analysis. Computer Vision, IET, 2(2), 75-

87.

Duchowski, A. T. (2007). Eye tracking methodology:

Theory and practice. Secaucus, NJ, USA: Springer-

Verlag New York, Inc.

Engelke, U., Maeder, A., & Zepernick, H. -. (2009).

Visual attention modelling for subjective image

quality databases. Rio de Janeiro.

Goudelis, G., Tefas, A., & Pitas, I. (2009). Emerging

biometric modalities: A survey. Journal on

Multimodal User Interfaces, 1-19.

doi:10.1007/s12193-009-0020-x

Gutiérrez-García, J. O., Ramos-Corchado, F. F., & Unger,

H. (2007). User authentication via mouse biometrics

and the usage of graphic user interfaces: An

application approach. 2007 International Conference

on Security and Management, SAM'07, Las Vegas,

NV. 76-82.

Harrison, N. A., Singer, T., Rotshtein, P., Dolan, R. J., &

Critchley, H. D. (2006). Pupillary contagion: Central

mechanisms engaged in sadness processing. Social

Cognitive and Affective Neuroscience, 1(1), 5-17.

doi:10.1093/scan/nsl006

Judd, T., Ehinger, K., Durand, F., & Torralba, A. (2009).

Learning to predict where humans look. IEEE

International Conference on Computer Vision (ICCV).

Le Callet, P., & Autrusseau, F. (2005). Subjective quality assessment IRCCyN/IVC database.

Lei, H., & Govindaraju, V. (2005). A comparative study

on the consistency of features in on-line signature

verification. Pattern Recognition Letters, 26(15),

2483-2489. doi:10.1016/j.patrec.2005.05.005

Monrose, F., & Rubin, A. D. (2000). Keystroke dynamics

as a biometric for authentication. Future Generation

Computer Systems, 16(4), 351-359. doi:10.1016/S0167-739X(99)00059-X

Revett, K., Jahankhani, H., Magalhães, S. T., & Santos, H.

M. D. (2008). A survey of user authentication based

on mouse dynamics. In H. Jahankhani, K. Revett & D.

Palmer-Brown (Eds.), Global E-security (pp. 210-219). Springer Berlin Heidelberg.

Van der Linde, I., Rajashekar, U., Bovik, A. C., & Cormack, L.

K. (2009). Doves: A database of visual eye

movements. Spatial Vision, 22(2), 161-177.

doi:10.1163/156856809787465636

Wang, J. T., Spezio, M., & Camerer, C. F. (2010).

Pinocchio's pupil: Using eyetracking and pupil

dilation to understand truth telling and deception in

sender-receiver games

Yampolskiy, R. V. (2007). Human computer interaction

based intrusion detection. Information Technology,

2007. ITNG '07. Fourth International Conference on,

837-842.
