A LINGUISTIC GROUP DECISION MAKING METHOD BASED ON DISTANCES

Edurne Falcó and José Luis García-Lapresta

PRESAD Research Group, Dept. of Applied Economics, University of Valladolid, Valladolid, Spain

Keywords:

Group decision making, Linguistic assessments, Distances.

Abstract:

It is common knowledge that political voting systems suffer from inconsistencies and paradoxes, as Arrow showed in his well-known Impossibility Theorem. Recently, Balinski and Laraki have introduced a new voting system called Majority Judgement (MJ), which tries to overcome some of these limitations. In MJ, voters assess the candidates through linguistic terms belonging to a common language. From this information, MJ assigns the lower median of the individual assessments as the collective assessment, and it uses a sequential tie-breaking method for ranking the candidates. The present paper provides an extension of MJ aimed at reducing some of the drawbacks that several authors have detected in MJ. The model assigns as the collective assessment a label that minimizes the distance to the individual assessments. In addition, we propose a new tie-breaking method, also based on distances.

1 INTRODUCTION

Social Choice Theory shows that there is no completely acceptable voting system for electing and ranking alternatives. The well-known Arrow Impossibility Theorem (Arrow, 1963) proves with mathematical certainty that no voting system simultaneously fulfills certain desirable properties.¹

Recently, Balinski and Laraki (2007a; 2007c) proposed a voting system called Majority Judgement (MJ), which tries to avoid these unsatisfactory results and allows voters to assess the alternatives through linguistic labels such as Excellent, Very good, Good, ..., instead of rank-ordering them. Among all the individual assessments given by the voters, MJ chooses the median as the collective assessment. Balinski and Laraki also describe a tie-breaking process that compares the number of labels above the collective assessment with the number of labels below it. These authors also report an experimental analysis of MJ (Balinski and Laraki, 2007b) carried out in Orsay during the 2007 French presidential election. In that paper, the authors show some interesting properties of MJ and argue that this voting system is easily implemented and avoids the need for a second round of voting.

¹ Any voting rule that generates a collective weak order from every profile of weak orders and satisfies independence of irrelevant alternatives and unanimity is necessarily dictatorial, provided that there are at least three alternatives and three voters.

Desirable properties and advantages have been attributed to MJ compared with the classical Arrow framework of preference aggregation. Among them are the possibility for voters to express their opinions more faithfully and precisely than in conventional voting systems, anonymity, neutrality, independence of irrelevant alternatives, etc. However, some authors (Felsenthal and Machover, 2008; García-Lapresta and Martínez-Panero, 2009; Smith, 2007) have shown several paradoxes and inconsistencies of MJ.

In this paper we propose an extension of MJ that mitigates some of its drawbacks. The approach of the paper is distance-based, both for generating a collective assessment of each alternative and in the tie-breaking process that provides a weak order on the set of alternatives. As in MJ, we consider that individuals assess the alternatives through linguistic labels, and we propose as the collective assessment a label that minimizes the distance to the individual assessments. These distances between linguistic labels are induced by a metric of the parameterized Minkowski family. Depending on the specific metric used, the discrepancies between the collective and the individual assessments are weighted differently, and the corresponding outcome can differ.

The paper is organized as follows. In Section 2, the MJ voting system is formally explained. Section 3 introduces our proposal within a distance-based approach (the choice of the collective assessment for each alternative and the tie-breaking method). In Section 4 we include an illustrative example showing the influence of the metric used in the proposed method and its differences with respect to MJ and Range Voting (Smith, 2007). Finally, in Section 5 we collect some conclusions.

Falcó E. and Luis García-Lapresta J. A LINGUISTIC GROUP DECISION MAKING METHOD BASED ON DISTANCES. DOI: 10.5220/0003273104580463

In Proceedings of the 3rd International Conference on Agents and Artificial Intelligence (ICAART-2011), pages 458-463

ISBN: 978-989-8425-40-9

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

2 MAJORITY JUDGEMENT

We consider² a finite set of voters $V = \{1, \ldots, m\}$, with $m \ge 2$, who evaluate a finite set of alternatives $X = \{x_1, \ldots, x_n\}$, with $n \ge 2$. Each alternative is assessed by each voter through a linguistic term belonging to an ordered finite scale $L = \{l_1, \ldots, l_g\}$, with $l_1 < \cdots < l_g$ and granularity $g \ge 2$. Each voter assesses the alternatives independently, and these assessments are collected in a matrix $(v_i^j)$, where $v_i^j \in L$ is the assessment that voter $i$ gives to alternative $x_j$.

MJ chooses, for each alternative, the median of the individual assessments as the collective assessment. More precisely, it takes the single median when the number of voters is odd and the lower median when the number of voters is even. We denote by $l(x_j)$ the collective assessment of alternative $x_j$.
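A minimal Python sketch of this rule, assuming each label $l_k$ is represented by its integer subindex $k$ (an encoding chosen here for illustration); the function name `mj_collective` is hypothetical and not part of the original description:

```python
def mj_collective(subindices: list[int]) -> int:
    """Return the MJ collective assessment as a label subindex.

    Labels l_1 < ... < l_g are encoded by their subindices 1..g.
    For an odd number of voters this is the single median; for an
    even number it is the lower median.
    """
    if not subindices:
        raise ValueError("at least one assessment is required")
    ordered = sorted(subindices)
    # (m - 1) // 2 is the middle position when m is odd and the
    # lower of the two middle positions when m is even.
    return ordered[(len(ordered) - 1) // 2]


# Seven-voter profile of x_1 in Example 1 below.
print(mj_collective([1, 1, 3, 5, 5, 5, 6]))  # 5, i.e. l_5
```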

Since several alternatives might share the same collective assessment, Balinski and Laraki (2007a) propose a sequential tie-breaking process. It can be described through the following terms (García-Lapresta and Martínez-Panero, 2009):

$$N^+(x_j) = \#\{i \in V \mid v_i^j > l(x_j)\},$$

$$N^-(x_j) = \#\{i \in V \mid v_i^j < l(x_j)\}$$

and

$$t(x_j) = \begin{cases} -1, & \text{if } N^+(x_j) < N^-(x_j), \\ 0, & \text{if } N^+(x_j) = N^-(x_j), \\ 1, & \text{if } N^+(x_j) > N^-(x_j). \end{cases}$$
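These three indices can be sketched as follows, again assuming that labels are encoded by their subindices and that the collective assessment $l(x_j)$ has already been computed; `mj_tie_indices` is a hypothetical helper name:

```python
def mj_tie_indices(subindices: list[int], collective: int) -> tuple[int, int, int]:
    """Return (N+, N-, t) for one alternative, given l(x_j) as a subindex."""
    n_plus = sum(1 for v in subindices if v > collective)   # assessments above l(x_j)
    n_minus = sum(1 for v in subindices if v < collective)  # assessments below l(x_j)
    # t is the sign of N+ - N-, i.e. -1, 0 or 1.
    t = (n_plus > n_minus) - (n_plus < n_minus)
    return n_plus, n_minus, t


# Profile of x_2 in Example 1 below, whose collective assessment is l_4.
print(mj_tie_indices([1, 4, 4, 4, 4, 5, 6], 4))  # (2, 1, 1)
```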

Taking into account the collective assessments and the previous indices, we define a weak order $\succcurlyeq$ on $X$ as follows: $x_j \succcurlyeq x_k$ if and only if one of the following conditions holds:

1. $l(x_j) > l(x_k)$.
2. $l(x_j) = l(x_k)$ and $t(x_j) > t(x_k)$.
3. $l(x_j) = l(x_k)$, $t(x_j) = t(x_k) = 1$ and $N^+(x_j) > N^+(x_k)$.
4. $l(x_j) = l(x_k)$, $t(x_j) = t(x_k) = 1$, $N^+(x_j) = N^+(x_k)$ and $N^-(x_j) \le N^-(x_k)$.
5. $l(x_j) = l(x_k)$, $t(x_j) = t(x_k) = 0$ and $m - N^+(x_j) - N^-(x_j) \ge m - N^+(x_k) - N^-(x_k)$.
6. $l(x_j) = l(x_k)$, $t(x_j) = t(x_k) = -1$ and $N^-(x_j) < N^-(x_k)$.
7. $l(x_j) = l(x_k)$, $t(x_j) = t(x_k) = -1$, $N^-(x_j) = N^-(x_k)$ and $N^+(x_j) \ge N^+(x_k)$.

² The notation used here is similar to the one introduced by García-Lapresta and Martínez-Panero (2009). It allows us to describe the MJ process, presented by Balinski and Laraki (2007a), more precisely.

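The asymmetric and symmetric parts of $\succcurlyeq$ are defined in the usual way:

$$x_j \succ x_k \Leftrightarrow \text{not } x_k \succcurlyeq x_j,$$

$$x_j \sim x_k \Leftrightarrow (x_j \succcurlyeq x_k \text{ and } x_k \succcurlyeq x_j).$$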
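One way to implement conditions 1 to 7, offered as a sketch rather than as Balinski and Laraki's own formulation, is a lexicographic sort key: alternatives are compared by $(l, t)$ first, and the remaining components, which only matter when $l$ and $t$ coincide, depend on the common value of $t$. The helper names and the input format (precomputed triples $(l(x_j), N^+(x_j), N^-(x_j))$ with labels encoded as subindices) are assumptions for illustration:

```python
def mj_sort_key(collective: int, n_plus: int, n_minus: int, m: int) -> tuple[int, int, int, int]:
    """Sort key encoding conditions 1-7; larger keys are preferred.

    Two alternatives are indifferent exactly when their keys are equal.
    """
    t = (n_plus > n_minus) - (n_plus < n_minus)
    if t == 1:
        rest = (n_plus, -n_minus)            # conditions 3 and 4
    elif t == 0:
        rest = (m - n_plus - n_minus, 0)     # condition 5
    else:
        rest = (-n_minus, n_plus)            # conditions 6 and 7
    return (collective, t, *rest)            # conditions 1 and 2 come first


def mj_rank(indices: dict[str, tuple[int, int, int]], m: int) -> list[str]:
    """Order alternatives from best to worst; tied ones keep their input order."""
    return sorted(indices, key=lambda x: mj_sort_key(*indices[x], m), reverse=True)


# (l(x_j), N+(x_j), N-(x_j)) for Example 1 below, with m = 7 voters.
example1 = {"x1": (5, 1, 3), "x2": (4, 2, 1), "x3": (4, 3, 2)}
print(mj_rank(example1, m=7))  # ['x1', 'x3', 'x2']
```

Python's `sorted` remains stable with `reverse=True`, so alternatives with equal keys (indifferent under $\sim$) are simply listed in input order.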

Next an example of how MJ works is shown.

Example 1. Consider three alternatives x

1

, x

2

and x

3

that are evaluated by seven voters through a set of six

linguistic terms L = {l

1

, . . . , l

6

}, the same set used in

MJ (Balinski and Laraki, 2007b), whose meaning is

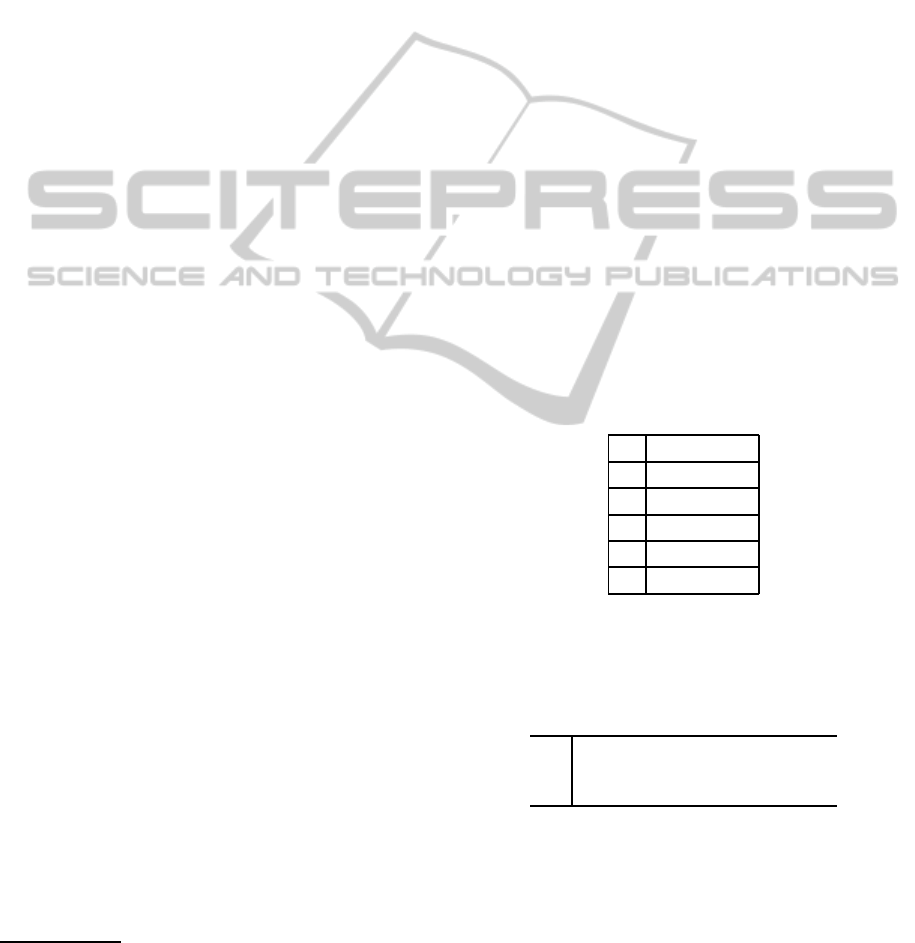

shown in Table 1.

Table 1: Meaning of the linguistic terms.

| Label | Meaning |
|---|---|
| $l_1$ | To reject |
| $l_2$ | Poor |
| $l_3$ | Acceptable |
| $l_4$ | Good |
| $l_5$ | Very good |
| $l_6$ | Excellent |

The assessments obtained for each alternative are collected and ranked from the lowest to the highest in Table 2.

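Table 2: Assessments of Example 1.

| Alternative | Assessments (lowest to highest) |
|---|---|
| $x_1$ | $l_1, l_1, l_3, l_5, l_5, l_5, l_6$ |
| $x_2$ | $l_1, l_4, l_4, l_4, l_4, l_5, l_6$ |
| $x_3$ | $l_1, l_3, l_4, l_4, l_5, l_5, l_5$ |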

To rank the three alternatives, we first take the median of the individual assessments as the collective assessment of each alternative: $l(x_1) = l_5$, $l(x_2) = l_4$ and $l(x_3) = l_4$.

Since $x_1$ has the best collective assessment, it is ranked first. However, since $x_2$ and $x_3$ share the same collective assessment, we need to turn to the tie-breaking process, which gives $N^+(x_2) = 2$, $N^-(x_2) = 1$ and $t(x_2) = 1$; $N^+(x_3) = 3$, $N^-(x_3) = 2$ and $t(x_3) = 1$. Since both alternatives have the same $t$ ($t(x_2) = t(x_3) = 1$), we compare their $N^+$: $N^+(x_2) = 2 < 3 = N^+(x_3)$. Therefore, $x_3$ defeats $x_2$, and the final order is $x_1 \succ x_3 \succ x_2$.

3 DISTANCE-BASED METHOD

In this section we introduce our distance-based alternative to MJ. The first step for ranking the alternatives is to assign a collective assessment $l(x_j) \in L$ to each alternative $x_j \in X$. To compute it, we take into account, for each alternative $x_j \in X$, the vector $(v_1^j, \ldots, v_m^j)$ that collects all its individual assessments.

The proposal, detailed below, consists of choosing an $l(x_j) \in L$ that minimizes the distance between the vector of individual assessments $(v_1^j, \ldots, v_m^j)$ and the vector $(l(x_j), \ldots, l(x_j)) \in L^m$. This term is chosen independently for each alternative, which guarantees that the principle of independence of irrelevant alternatives³ is fulfilled.

Once a collective assessment $l(x_j)$ has been associated with each alternative $x_j \in X$, we rank the alternatives according to the ordering of $L$. Since ties are possible, we also propose a sequential tie-breaking process based on the difference between the distance from $l(x_j)$ to the assessments higher than $l(x_j)$ and the distance from $l(x_j)$ to the assessments lower than $l(x_j)$.

3.1 Distances

A distance or metric on $\mathbb{R}^m$ is a mapping $d : \mathbb{R}^m \times \mathbb{R}^m \longrightarrow \mathbb{R}$ that fulfills the following conditions for all $(a_1, \ldots, a_m), (b_1, \ldots, b_m), (c_1, \ldots, c_m) \in \mathbb{R}^m$:

1. $d((a_1, \ldots, a_m), (b_1, \ldots, b_m)) \ge 0$.
2. $d((a_1, \ldots, a_m), (b_1, \ldots, b_m)) = 0 \Leftrightarrow (a_1, \ldots, a_m) = (b_1, \ldots, b_m)$.
3. $d((a_1, \ldots, a_m), (b_1, \ldots, b_m)) = d((b_1, \ldots, b_m), (a_1, \ldots, a_m))$.
4. $d((a_1, \ldots, a_m), (b_1, \ldots, b_m)) \le d((a_1, \ldots, a_m), (c_1, \ldots, c_m)) + d((c_1, \ldots, c_m), (b_1, \ldots, b_m))$.

³ This principle states that the relative ranking of two alternatives should depend only on the preferences or assessments regarding these two alternatives, and must not be affected by other alternatives, which are irrelevant to that comparison.

Given a distance $d : \mathbb{R}^m \times \mathbb{R}^m \longrightarrow \mathbb{R}$, the distance on $L^m$ induced by $d$ is the mapping $\bar{d} : L^m \times L^m \longrightarrow \mathbb{R}$ defined by

$$\bar{d}((l_{a_1}, \ldots, l_{a_m}), (l_{b_1}, \ldots, l_{b_m})) = d((a_1, \ldots, a_m), (b_1, \ldots, b_m)).$$

A relevant class of distances in $\mathbb{R}^m$ is the family of Minkowski distances $\{d_p \mid p \ge 1\}$, defined by

$$d_p((a_1, \ldots, a_m), (b_1, \ldots, b_m)) = \left( \sum_{i=1}^{m} |a_i - b_i|^p \right)^{1/p}$$

for all $(a_1, \ldots, a_m), (b_1, \ldots, b_m) \in \mathbb{R}^m$.

We choose this family because it is parameterized and ranges from the well-known Manhattan ($p = 1$) and Euclidean ($p = 2$) distances to the limit case of the Chebyshev distance ($p = \infty$), given by $d_\infty((a_1, \ldots, a_m), (b_1, \ldots, b_m)) = \max_i |a_i - b_i|$. The possibility of choosing among different values of $p \in (1, \infty)$ makes the method very flexible, since the most appropriate $p$ can be chosen according to the objectives of the election.

Given a Minkowski distance on $\mathbb{R}^m$, we consider the induced distance on $L^m$, which works with the assessment vectors through the subindices of the corresponding labels:

$$\bar{d}_p((l_{a_1}, \ldots, l_{a_m}), (l_{b_1}, \ldots, l_{b_m})) = d_p((a_1, \ldots, a_m), (b_1, \ldots, b_m)).$$

Clearly, this approach assumes that the labels of $L$ are equidistant. In this sense, the distance between two label vectors is based on the number of positions that must be covered to go from one to the other in each component: moving from $l_{a_i}$ to $l_{b_i}$ requires covering $|a_i - b_i|$ positions. For instance, between $l_5$ and $l_2$ we need to cover $|5 - 2| = 3$ positions.
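The induced Minkowski distance can be sketched in Python by working directly on label subindices, as described above. The function names `minkowski` and `induced_distance` are hypothetical, and passing `p = math.inf` is a convention of this sketch for the Chebyshev limit:

```python
import math
from collections.abc import Sequence


def minkowski(a: Sequence[float], b: Sequence[float], p: float) -> float:
    """Minkowski distance d_p between two real vectors (p = math.inf gives Chebyshev)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of components")
    if p < 1:
        raise ValueError("Minkowski distances require p >= 1")
    diffs = [abs(x - y) for x, y in zip(a, b)]
    if math.isinf(p):
        return float(max(diffs, default=0))   # limit case: largest coordinate gap
    return sum(d ** p for d in diffs) ** (1.0 / p)


def induced_distance(a_labels: Sequence[int], b_labels: Sequence[int], p: float) -> float:
    """Induced distance on L^m: apply d_p to the subindices of the labels."""
    return minkowski(a_labels, b_labels, p)


print(induced_distance([5], [2], 1))            # 3.0, i.e. |5 - 2| positions
print(induced_distance([1, 1], [4, 5], 2))      # 5.0
print(induced_distance([1, 1], [4, 5], math.inf))  # 4.0
```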

3.2 Election of a Collective Assessment for each Alternative

Our proposal is divided into several stages. First, we assign to each alternative $x_j \in X$ a collective assessment $l(x_j) \in L$ that minimizes the distance between the vector of individual assessments, $(v_1^j, \ldots, v_m^j) \in L^m$, and the vector of $m$ replicas of the desired collective assessment, $(l(x_j), \ldots, l(x_j)) \in L^m$.

To this end, we first establish the set $L(x_j)$ of all labels $l_k \in L$ satisfying

$$\bar{d}_p((v_1^j, \ldots, v_m^j), (l_k, \ldots, l_k)) \le \bar{d}_p((v_1^j, \ldots, v_m^j), (l_h, \ldots, l_h))$$

for each $l_h \in L$, where $(l_h, \ldots, l_h)$ and $(l_k, \ldots, l_k)$ are the vectors of $m$ replicas of $l_h$ and $l_k$, respectively.

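Thus, $L(x_j)$ consists of the labels that minimize the distance to the vector of individual assessments. Notice that $L(x_j) = \{l_r, \ldots, l_{r+s}\}$ is always an interval, i.e., it contains all the terms from $l_r$ to $l_{r+s}$, where $r \in \{1, \ldots, g\}$ and $0 \le s \le g - r$. This holds because, writing $a_i$ for the subindex of $v_i^j$, the function $k \mapsto \sum_{i=1}^{m} |a_i - k|^p$ is convex for $p \ge 1$, and the minimizers of a convex function over $\{1, \ldots, g\}$ are consecutive. Two cases are possible: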

1. If $s = 0$, then $L(x_j)$ contains a single label, which automatically becomes the collective assessment $l(x_j)$ of the alternative $x_j$.
2. If $s > 0$, then $L(x_j)$ contains more than one label. To select the most suitable label of $L(x_j)$, we introduce $L^*(x_j)$, the set of all labels $l_k \in L(x_j)$ that fulfill

   $$\bar{d}_p((l_k, \ldots, l_k), (l_r, \ldots, l_{r+s})) \le \bar{d}_p((l_h, \ldots, l_h), (l_r, \ldots, l_{r+s}))$$

   for all $l_h \in L(x_j)$, where $(l_k, \ldots, l_k)$ and $(l_h, \ldots, l_h)$ are the vectors of $s + 1$ replicas of $l_k$ and $l_h$, respectively.

   (a) If the cardinality of $L(x_j)$ is odd, then $L^*(x_j)$ has a unique label, the median term, which is the collective assessment $l(x_j)$.

   (b) If the cardinality of $L(x_j)$ is even, then $L^*(x_j)$ has two different labels, the two median terms. In this case, similarly to the proposal of Balinski and Laraki (2007a), we take the lowest label in $L^*(x_j)$ as the collective assessment $l(x_j)$.
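The selection rule can be sketched as follows for a finite $p \ge 1$, assuming labels are encoded by their subindices $1, \ldots, g$. Instead of $\bar{d}_p$ itself, the sketch minimizes its $p$-th power, which has the same minimizers because $t \mapsto t^{1/p}$ is increasing. The floating-point tolerance and the name `collective_assessment` are assumptions of this sketch, not part of the method:

```python
import math


def collective_assessment(subindices: list[int], g: int, p: float) -> int:
    """Collective assessment l(x_j), returned as a label subindex in 1..g.

    Sketch for a finite p >= 1; labels are encoded by their subindices.
    """
    # p-th power of the distance to m replicas of each candidate label l_k.
    costs = [sum(abs(v - k) ** p for v in subindices) for k in range(1, g + 1)]
    best = min(costs)
    # L(x_j): every label attaining the minimum. The tolerance absorbs
    # floating-point rounding for non-integer p (an assumption of this sketch).
    interval = [k for k, c in zip(range(1, g + 1), costs)
                if math.isclose(c, best, rel_tol=1e-9, abs_tol=1e-12)]
    # L(x_j) = {l_r, ..., l_{r+s}} is an interval; L*(x_j) holds its median
    # label(s), and the lower one is taken when there are two.
    return interval[(len(interval) - 1) // 2]


# Profile of x_1 in Example 2 (Table 4).
print(collective_assessment([1, 2, 4, 4, 4, 6], 6, 1))  # 4, i.e. l_4
print(collective_assessment([1, 2, 4, 4, 4, 6], 6, 2))  # 3, i.e. l_3
```

For $p = 2$ this profile gives $L(x_1) = \{l_3, l_4\}$, so the lower median $l_3$ is chosen, in line with the value reported for $x_1$ in Table 6.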

It is worth pointing out two particular cases of induced Minkowski distances.

1. If $p = 1$, we obtain the same collective assessments as MJ, the median⁴ of the individual assessments. However, the final results are not necessarily the same as in MJ, because we use a different tie-breaking process, as shown later.
2. If $p = 2$, each collective assessment is the label closest to the "mean" of the individual assessments⁵, which is the one chosen by the Range Voting (RV) method⁶ (see Smith, 2007).

⁴ It is more precise to speak of the interval of medians, because if the assessment vector has an even number of components, there may be more than one median. See (Monjardet, 2008).

⁵ The chosen label is not exactly the arithmetic mean of the individual assessments, because we work with a discrete set of linguistic terms and not with the continuum of real numbers.

⁶ RV works with a finite scale of equidistant real numbers and ranks the alternatives according to the arithmetic mean of the individual assessments.

Notice that for $p \in (1, 2)$ there are situations where the collective assessment lies between the median and the "mean". This allows us to avoid some of the problems associated with MJ and RV.

3.3 Tie-breaking Method

In many settings there are more alternatives than linguistic terms, in which case several alternatives necessarily share the same collective assessment. But irrespective of the number of alternatives, some of them may share the same collective assessment even when their individual assessments are very different. For these reasons, we need a tie-breaking method that takes into account not only the number of individual assessments above or below the collective assessment (as in MJ), but also the positions of these individual assessments in the ordered scale associated with $L$.

As mentioned above, we calculate the difference between two distances: one between $l(x_j)$ and the assessments higher than $l(x_j)$, and another between $l(x_j)$ and the assessments lower than $l(x_j)$.

Let $v_j^+$ and $v_j^-$ be the vectors composed of the assessments $v_i^j$ from $(v_1^j, \ldots, v_m^j)$ that are higher and lower than the term $l(x_j)$, respectively. First we calculate the following two distances:

$$D^+(x_j) = \bar{d}_p\big(v_j^+, (l(x_j), \ldots, l(x_j))\big),$$

$$D^-(x_j) = \bar{d}_p\big(v_j^-, (l(x_j), \ldots, l(x_j))\big),$$

where the number of components of $(l(x_j), \ldots, l(x_j))$ is the same as in $v_j^+$ and in $v_j^-$, respectively (obviously, $v_j^+$ and $v_j^-$ may have different numbers of components).

Once these distances have been determined, a new index $D(x_j) \in \mathbb{R}$ is calculated for each alternative $x_j \in X$ as the difference between the two previous distances:

$$D(x_j) = D^+(x_j) - D^-(x_j).$$

By means of this index, we provide a kind of compensation between the individual assessments that are higher and lower than the collective assessment, taking into account the position of each assessment in the ordered scale associated with $L$.

Finally, to introduce our tie-breaking process we need the distance between the individual assessments and the collective one:

$$E(x_j) = \bar{d}_p\big((v_1^j, \ldots, v_m^j), (l(x_j), \ldots, l(x_j))\big).$$
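Given the collective assessment, $D(x_j)$ and $E(x_j)$ can be computed as in the following sketch (hypothetical names; labels encoded by their subindices; finite $p \ge 1$). It assumes, consistently with the values reported in Example 2, that an empty $v_j^+$ or $v_j^-$ contributes a distance of 0:

```python
def _replica_distance(values: list[int], label: int, p: float) -> float:
    """d_p between `values` and len(values) replicas of `label` (0.0 if empty)."""
    return sum(abs(v - label) ** p for v in values) ** (1.0 / p)


def tie_break_indices(subindices: list[int], collective: int, p: float) -> tuple[float, float]:
    """Return (D(x_j), E(x_j)) given l(x_j) as a label subindex."""
    above = [v for v in subindices if v > collective]   # components of v_j^+
    below = [v for v in subindices if v < collective]   # components of v_j^-
    d = _replica_distance(above, collective, p) - _replica_distance(below, collective, p)
    e = _replica_distance(subindices, collective, p)    # distance to all assessments
    return d, e


# Profile of x_1 in Example 2 (Table 4), with l(x_1) = l_4 and p = 1.
print(tie_break_indices([1, 2, 4, 4, 4, 6], 4, 1))  # (-3.0, 7.0)
```

These are the second and third components of $T(x_1) = (l_4, -3, 7)$ reported for $p = 1$ in Section 4.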


Notice that, for each alternative $x_j \in X$, $E(x_j)$ is the minimum distance between the vector of individual assessments and the vectors of $m$ replicas of a label in $L$, as considered above in the definition of $L(x_j)$.

The index $E(\cdot)$ is important in the tie-breaking process because, if two alternatives share the same pair $(l(\cdot), D(\cdot))$, the one with the lower $E(\cdot)$ is the alternative whose individual assessments are more concentrated around the collective assessment, i.e., the one with higher consensus.

Summarizing, to rank the alternatives we consider the triplet

$$T(x_j) = (l(x_j), D(x_j), E(x_j)) \in L \times \mathbb{R} \times [0, \infty)$$

for each alternative $x_j \in X$. The sequential process works as follows:

1. We rank the alternatives through their collective assessments $l(\cdot)$. Alternatives with higher collective assessments are preferred to those with lower collective assessments.
2. If several alternatives share the same collective assessment, we break the ties through the index $D(\cdot)$: alternatives with a higher $D(\cdot)$ are preferred.
3. If ties remain, we break them through the index $E(\cdot)$: alternatives with a lower $E(\cdot)$ are preferred.

Formally, the sequential process is given by the lexicographic weak order $\succcurlyeq$ on $X$ defined by $x_j \succcurlyeq x_k$ if and only if

1. $l(x_j) > l(x_k)$, or
2. $l(x_j) = l(x_k)$ and $D(x_j) > D(x_k)$, or
3. $l(x_j) = l(x_k)$, $D(x_j) = D(x_k)$ and $E(x_j) \le E(x_k)$.

Remark. Ties are still possible when two or more alternatives share the same triplet $T(\cdot)$, but such cases are very unusual for metrics with $p > 1$.⁷ For instance, consider seven voters who assess two alternatives $x_1$ and $x_2$ by means of the set of linguistic terms given in Table 1. Table 3 shows these assessments arranged from the lowest to the highest label.

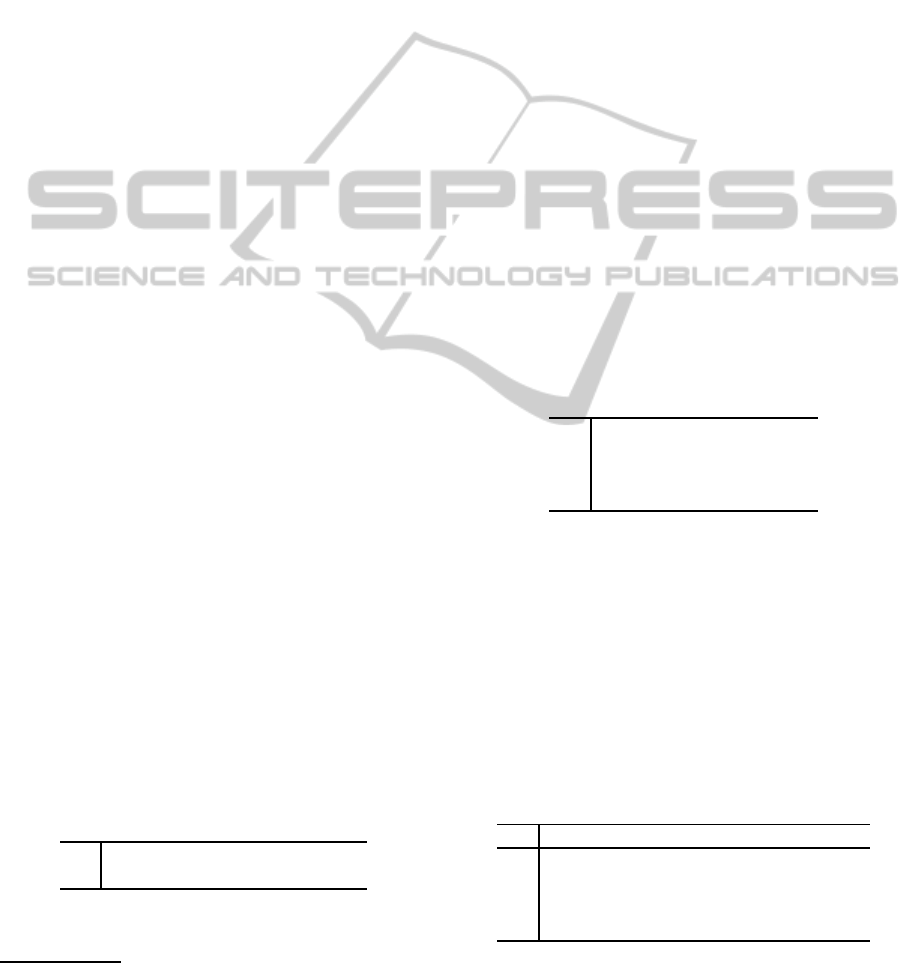

Table 3: Individual assessments.

| Alternative | Assessments (lowest to highest) |
|---|---|
| $x_1$ | $l_2, l_2, l_2, l_2, l_4, l_4, l_6$ |
| $x_2$ | $l_2, l_2, l_2, l_2, l_3, l_5, l_6$ |

It is easy to see that for $p = 1$ we have $T(x_1) = T(x_2) = (l_2, 8, 8)$, hence $x_1 \sim x_2$ (notice that MJ and RV also produce a tie). However, for $p > 1$ the tie disappears: we have $x_2 \succ x_1$, except when $p \in (1.179, 1.203)$, where $x_1 \succ x_2$.

⁷ The Manhattan metric ($p = 1$) produces more ties than the other metrics in the Minkowski family because of the simplicity of its calculations: all its values are sums of integer differences between subindices, which coincide more easily.
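Putting the pieces together, the lexicographic order on $T(\cdot)$ can be sketched as a sort key $(-l, -D, E)$. This self-contained version repeats the earlier computations under the same assumptions (labels encoded by subindices, finite $p \ge 1$, an empty vector at distance 0); rounding $D$ and $E$ to 9 decimals before comparing them is an additional assumption meant to keep floating-point noise from breaking genuine ties, and all names are hypothetical:

```python
import math


def _cost(values: list[int], label: int, p: float) -> float:
    """p-th power of the induced d_p to replicas of `label`."""
    return sum(abs(v - label) ** p for v in values)


def triplet(values: list[int], g: int, p: float) -> tuple[int, float, float]:
    """T(x_j) = (l(x_j), D(x_j), E(x_j)), with l(x_j) as a subindex."""
    costs = [_cost(values, k, p) for k in range(1, g + 1)]
    best = min(costs)
    interval = [k for k, c in zip(range(1, g + 1), costs)
                if math.isclose(c, best, rel_tol=1e-9, abs_tol=1e-12)]
    label = interval[(len(interval) - 1) // 2]   # lower median of L(x_j)

    def dist(vs: list[int]) -> float:
        return _cost(vs, label, p) ** (1.0 / p)

    d = dist([v for v in values if v > label]) - dist([v for v in values if v < label])
    return label, d, dist(values)


def rank(profile: dict[str, list[int]], g: int, p: float) -> list[str]:
    """Best-to-worst order: higher l, then higher D, then lower E.

    Alternatives with equal keys are indifferent and keep their input order.
    """
    def key(name: str) -> tuple[int, float, float]:
        label, d, e = triplet(profile[name], g, p)
        return (-label, -round(d, 9), round(e, 9))

    return sorted(profile, key=key)


table3 = {"x1": [2, 2, 2, 2, 4, 4, 6], "x2": [2, 2, 2, 2, 3, 5, 6]}
print(triplet(table3["x1"], 6, 1), triplet(table3["x2"], 6, 1))  # (2, 8.0, 8.0) (2, 8.0, 8.0)
print(rank(table3, 6, 2))  # ['x2', 'x1']
```

Under these assumptions the Table 3 profiles tie for $p = 1$, $x_2$ comes first for $p = 2$, and $x_1$ comes first for $p = 1.19$, in line with the Remark above.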

4 AN ILLUSTRATIVE EXAMPLE

This section shows how the choice of the parameter $p$ affects the final ranking of the alternatives. We illustrate this through an example in which the mean of the individual assessments' subindices is the same for all the alternatives. In this example we use the set of six linguistic terms $L = \{l_1, \ldots, l_6\}$ whose meaning is shown in Table 1.

As mentioned above, the sequential process for ranking the alternatives is based on the triplet $T(x_j) = (l(x_j), D(x_j), E(x_j))$ for each alternative $x_j \in X$. However, for simplicity, in the following example we only show the first two components, $(l(x_j), D(x_j))$. In this example we also obtain the outcomes provided by MJ and RV.

Example 2. Table 4 includes the assessments given by six voters to four alternatives $x_1$, $x_2$, $x_3$ and $x_4$, arranged from the lowest to the highest label.

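Table 4: Assessments in Example 2.

| Alternative | Assessments (lowest to highest) |
|---|---|
| $x_1$ | $l_1, l_2, l_4, l_4, l_4, l_6$ |
| $x_2$ | $l_1, l_1, l_3, l_4, l_6, l_6$ |
| $x_3$ | $l_2, l_2, l_2, l_4, l_5, l_6$ |
| $x_4$ | $l_1, l_1, l_4, l_5, l_5, l_5$ |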

Notice that the mean of the subindices of the individual assessments is the same for all four alternatives, 3.5. Since RV ranks the alternatives according to this mean, it produces the tie $x_1 \sim x_2 \sim x_3 \sim x_4$. However, this outcome may not seem reasonable, since other rankings could be justified.

Under MJ, $l(x_1) = l(x_4) = l_4 > l_3 = l(x_2) > l_2 = l(x_3)$ and, according to the MJ tie-breaking process, $t(x_1) = -1 < 1 = t(x_4)$. Thus, MJ produces the outcome $x_4 \succ x_1 \succ x_2 \succ x_3$.

Table 5: $(l(x_j), D(x_j))$ in Example 2.

| Alternative | $p = 1$ | $p = 1.25$ | $p = 1.5$ |
|---|---|---|---|
| $x_1$ | $(l_4, -3)$ | $(l_4, -2.375)$ | $(l_4, -2.008)$ |
| $x_2$ | $(l_3, 10)$ | $(l_3, 2.264)$ | $(l_3, 1.888)$ |
| $x_3$ | $(l_2, 9)$ | $(l_3, 2.511)$ | $(l_3, 2.254)$ |
| $x_4$ | $(l_4, -3)$ | $(l_4, -2.815)$ | $(l_4, -2.682)$ |

We now consider the distance-based procedure for six values of $p$. Tables 5 and 6 show the influence of these values on $(l(x_j), D(x_j))$, for $j = 1, 2, 3, 4$.


Table 6: $(l(x_j), D(x_j))$ in Example 2.

| Alternative | $p = 1.75$ | $p = 2$ | $p = 5$ |
|---|---|---|---|
| $x_1$ | $(l_4, -1.770)$ | $(l_3, 1.228)$ | $(l_3, 0.995)$ |
| $x_2$ | $(l_3, 1.669)$ | $(l_3, 1.530)$ | $(l_3, 1.150)$ |
| $x_3$ | $(l_3, 2.104)$ | $(l_3, 2.010)$ | $(l_4, -0.479)$ |
| $x_4$ | $(l_4, -2.585)$ | $(l_3, 0.777)$ | $(l_3, 0.199)$ |

For $p = 1$ we have $T(x_1) = (l_4, -3, 7)$, $T(x_2) = (l_3, 10, 11)$, $T(x_3) = (l_2, 9, 9)$ and $T(x_4) = (l_4, -3, 9)$. Then we obtain $x_1 \succ x_4 \succ x_2 \succ x_3$, an outcome different from the one obtained with MJ. For $p = 1.25$, $p = 1.5$ and $p = 1.75$ we obtain $x_1 \succ x_4 \succ x_3 \succ x_2$; and for $p = 2$ and $p = 5$ we have $x_3 \succ x_2 \succ x_1 \succ x_4$.

5 CONCLUDING REMARKS

In this paper we have presented an extension of the Majority Judgement voting system developed by Balinski and Laraki (2007a; 2007b; 2007c). This extension is based on a distance approach, but it also uses linguistic labels to evaluate the alternatives. We choose as the collective assessment for each alternative a label that minimizes the distance to the individual assessments. It is important to note that our proposal coincides in this respect with Majority Judgement whenever the Manhattan metric is used.

We also provide a tie-breaking process based on the distances between the collective assessment and the individual assessments higher and lower than it. This process is richer than the one provided by Majority Judgement, which only counts the number of individual assessments above or below the collective assessment, irrespective of their positions on the scale. We also note that our tie-breaking process is essentially different from that of Majority Judgement, even when the Manhattan metric is considered.

It is important to note that, with the distance-based approach, we pay attention to all the individual assessments that have not been chosen as the collective assessment. By choosing a specific metric of the Minkowski family, we decide how to evaluate these other assessments. This gives our extension flexibility and allows us to devise a wide class of voting systems that may avoid some of the drawbacks of Majority Judgement and Range Voting without losing their good features. This becomes especially interesting when the parameter $p$ of the Minkowski family belongs to the open interval $(1, 2)$, since $p = 1$ and $p = 2$ correspond to the Manhattan and Euclidean metrics, respectively, which are precisely the metrics underlying Majority Judgement and Range Voting. For instance, choosing $p = 1.5$ provides a kind of compromise between both methods.

As shown in the previous examples, as the value of the parameter $p$ increases, the distance-based procedure focuses more and more on the extreme assessments. However, if the individual assessments are well balanced on both sides, the outcome is not much affected by $p$.

In further research we will analyze the properties of the presented extension of Majority Judgement within the Social Choice framework.

REFERENCES

Arrow, K. J. (1963). Social Choice and Individual Values. John Wiley and Sons, 2nd edition.

Balinski, M. and Laraki, R. (2007a). A theory of measuring, electing and ranking. In Proceedings of the National Academy of Sciences of the United States of America, volume 104, pages 8720–8725.

Balinski, M. and Laraki, R. (2007b). Election by majority judgement: Experimental evidence. Ecole Polytechnique – Centre National de la Recherche Scientifique, Cahier 2007-28.

Balinski, M. and Laraki, R. (2007c). The majority judgement. In http://ceco.polytechnique.fr/judgement-majoritaire.html.

Felsenthal, D. S. and Machover, M. (2008). The majority judgement voting procedure: a critical evaluation. In Homo Oeconomicus, volume 25, pages 319–334.

García-Lapresta, J. L. and Martínez-Panero, M. (2009). Linguistic-based voting through centered OWA operators. In Fuzzy Optimization and Decision Making, volume 8, pages 381–393.

Monjardet, B. (2008). Mathématique sociale and mathematics. A case study: Condorcet's effect and medians. In Electronic Journal for History of Probability and Statistics 4.

Smith, W. D. (2007). On Balinski & Laraki's majority judgement median-based range-like voting scheme. In http://rangevoting.org/MedianVrange.html.
