BEHAVIOR OF HOME CARE INTELLIGENT VIRTUAL AGENT WITH PRE-ThINK ARCHITECTURE

Dilyana Budakova

Technical University of Sofia, Branch Plovdiv, 61, Sankt Peterburg Blvd., Plovdiv, Bulgaria

Keywords: Intelligent virtual agents (IVA), Architecture, Ambient intelligence, Behavior, Emotions, Needs,

Rationalities, Knowledge, Ambivalence, Choice.

Abstract: This paper considers the architecture and the behaviour of an intelligent virtual agent, taking care of the

cosiness and the health-related features of a family house. The PRE-ThINK architecture is proposed and its

components are considered. The dynamics of the decision making process in problem situations arising with

the implementation of this architecture is shown. It is assumed that an agent, capable of taking the best

possible decision in a critical situation will win the family members’ trust.

1 INTRODUCTION

A large body of contemporary neurophysiological research confirms the central role of emotions in rational behaviour (Damasio, A. R., 1994).

According to Turing, if a device that could “think”

as the human brain does is to be designed, then it has

to “feel” as well. Since 1979 this idea has been the

basis of the emotional computers and emotional

intelligent agents.

Ortony, Clore, and Collins (OCC model) define a

cognitive approach for looking at emotions. This

theory is extremely useful for the project of

modelling agents which can experience emotions.

The cornerstone of their analysis is that emotions are

“valenced reactions.” The authors do not describe events as directly causing emotions; rather, emotions arise from how people understand events.

The first modification to the OCC model is to allow for the definition of different emotions with respect to others, known as social emotions. Social emotions can be defined as one’s emotions projected onto, or affected by, others.

According to Benny Ping-Han Lee et al. (2006), mixed emotions, especially those in conflict, sway agent decisions and result in dramatic changes in social scenarios. However, the emotion models and architectures for virtual agents are not yet advanced enough to be imbued with coexisting emotions (Benny Ping-Han Lee et al., 2006).

We believe that the PRE-ThINK architecture presented here contributes to progress in this direction. It allows for modelling an IVA capable of detecting and analysing conflicts. Problem situations evoke conflicting thoughts, accompanied by mixed emotions, and these thoughts are related to a number of different courses of action. The agent considers in advance (Pre-Think) how each possible action in a critical situation would affect all individuals concerned by it. The resulting thoughts are assessed from emotional, rational and needs-related points of view in accordance with the knowledge, priorities and principles of the agent.

The agent’s behaviour is motivated by its needs, following Maslow’s theory (Maslow A. H., 1970).

The rest of this paper is organized as follows: Section 2 gives examples of applications and background on the topic; Section 3 considers existing agent architectures; Section 4 presents the PRE-ThINK architecture; Section 5 describes the experimental setting and the structural scheme of the program system, discusses the algorithm for determining the condition of a flower grown by the user, and lists the behavioural rules of the IVA; Section 6 presents experiments conducted with the system, describes the IVA’s ability to take a decision in an invented scenario, and examines how action, passivity or non-intervention on the part of the IVA influences the object under observation.

Budakova D.

BEHAVIOR OF HOME CARE INTELLIGENT VIRTUAL AGENT WITH PRE-ThINK ARCHITECTURE.

DOI: 10.5220/0003127801570166

In Proceedings of the 3rd International Conference on Agents and Artificial Intelligence (ICAART-2011), pages 157-166

ISBN: 978-989-8425-41-6

Copyright © 2011 SCITEPRESS (Science and Technology Publications, Lda.)

Section 7 contains a graphical visualization of the results of the experiments; Section 8 summarizes the results of this work and discusses directions for future development.

2 BACKGROUND

Software agents that know the interests, habits and priorities of the user will be able to actively assist the user with work and information and, by personalizing themselves, to take part in the user's leisure activities (Picard, R. S, 1998).

Users trust virtual fitness instructors (Zsófia Ruttkay et al., 2006) to help them recover from traumas or just to do some exercise, listen to virtual reporters (Michael Nischt et al., 2006) and rely on IVA teachers (Jean-Paul Sansonnet et al., 2006) to clarify difficult parts of school subjects. Some users prefer IVA medical assistants to explain the results of their patients' medical checks (T. W. Bickmore et al.), etc.

The more serious the role of the agent in the application and the more useful it is for the user, the better it is perceived (D. Budakova et al., 2010).

Many researchers model IVA behaviour with the aim of establishing a trust-based relationship between the user and the IVA (Celso M. de Melo et al. 2009, Jonathan Gratch et al. 2007, Timothy W. Bickmore et al. 2007, Radoslaw Niewiadomski et al. 2008). Thus IVAs are modelled that are capable of expressing so-called moral emotions (pity, gladness, sympathy, remorse) (Celso M. de Melo 2009), and the way in which the frequency and timing of positive feedback sent from the user to the IVA influence the trust between them is investigated (Jonathan Gratch et al. 2007). The agent's behaviour is modelled so that it follows the user's behaviour (Jonathan Gratch et al. 2007).

A hypothesis has been derived (Budakova D. et al., 2010) that agents with subjective behaviour could be well accepted among users if this behaviour is well grounded and fair. Only in this case will it lead to user reactions such as sharpened attention, increased trust in the agent and a more natural perception of the IVA. The user may also try to meet the requirements of the IVA and gain its approval.

It is assumed that an intelligent virtual agent

(IVA), capable of detecting a critical situation, of

analyzing it and choosing the best possible option to

take care of all individuals concerned, would easily

gain trust. Such a behavioural model is presented in

this paper with the help of the PRE-ThINK

architecture.

The IVA presented in this paper is supposed to

take care both of the desired and of health-related

features of the environment in a family house. These

two goals could be in conflict if a family member

sets environment features which are not healthy.

This evokes mixed, conflicting and social thoughts as well as emotions in the agent. It has to choose whether, and for how long, to continue maintaining the pre-set features or to change them into more appropriate ones.

The IVA is able not only to follow the user’s behaviour and desires; after preliminary consideration (PRE-ThINK) it can also choose the best possible action. The purpose of the agent is to take the best possible care of the family and the inhabitants of the house even if the undertaken action does not precisely correspond to their will. It is assumed that this type of subjective behaviour would help in establishing trust between the IVA and the family members.

3 AGENT ARCHITECTURES

To demonstrate and examine the principles of intelligent behaviour, a number of models have recently been introduced that include a virtual world and emotional software agents inhabiting it (Franklin, S. 2000, Wright, I. P., Sloman, A., 1996, Reilly, W. S., 1996, D. Budakova, L. Dakovski, 2005). Some models show how emotions are used as primary reasons for and means of learning (Gadanho S. C., 2003). In others, emotions are defined as an evaluating system that works automatically at the perceptive and cognitive levels by measuring importance and usefulness (McCauley L., Franklin Stan, 1998).

In the architectures of intelligent agents with a clearly expressed emotional element, the components are grouped as follows: behavioural system, motive system, inner stimuli, generator of emotions (Velásquez, J. D. 1997); meta-management subsystem, consultative subsystem, action subsystem (Wright, I. P., Sloman, A., 1996); synthesis of phrases in natural language, understanding of phrases in natural language, sensations and conceptions, inductive conclusions, memory, emotions, social behaviour and knowledge, physical state and facial expression, generator of actions (Reilly, W. S., 1996).

The cognitive cycle of IDA architecture

(Franklin, S., 2000, 2001, 2004) comprises nine

steps: perception, summarized perception, local

associations, competition for awareness, spreading

the awareness, recovery of the resources,

hierarchical aims defining, choosing an action,

implementing an action. Emotions play a central part

in the perception, memory, consciousness and

choice of an action in the IDA architecture.

In (Benny Ping-Han Lee, 2006) an improved emotion model integrated with decision-making algorithms is proposed to deal with two topics: the generation of coexisting emotions, and the resolution of ambivalence, in which two emotions conflict.

The SEEM architecture (Simultaneous Emotion

Elicitation Model) considered in (Benny Ping-Han

Lee, 2006) is based on the OCC model. The

emotions are evaluated from the point of view of an

emotion set, action set and goal set, and the conflict

between contradictory emotions is solved by IVA

undertaking actions for weakening the negative

emotions and strengthening the positive ones.

A type of architecture for an emotional agent is

used in (Dilyana Budakova, Lyudmil Dakovski,

2006), which is a previous version of the

architecture considered here. Its basic components

are emotions, needs, rules, meta-rules, knowledge of

oneself, knowledge of places from the world,

actions. This type of architecture is used to model

the behaviour of an IVA, intended to inhabit a

virtual world and to be able to visit places around

the world depending on its own state. If, for example, the IVA needs money, it goes to work for a company; if it is hungry and has money at its disposal, it goes to a restaurant; if it is ill, it goes to a hospital, etc.

New places can arise in the virtual world. The agent

receives information about their characteristics and

evaluates them in accordance with its knowledge

and principles. Depending on its principle for

choosing the better place among a number of

alternatives, the IVA can change its habits and start

visiting more frequently a new restaurant instead of

the ones it used to visit earlier.

A cognitive-emotional analysis model and

behaviour in the terms of Generalized nets

(Atanassov K., 1991) has been presented in (Dilyana

Budakova, Lyudmil Dakovski, 2005).

The IVA architecture presented here is named PRE-ThINK, an abbreviation formed from the initial letters of its basic components: Principles, Rationalities, Emotions, Thoughts, Investigations, Needs, and Knowledge. New components to the

architecture have been proposed. The priority of the

needs in critical situations is defined in a new way.

Both IVA’s state and the evaluation of each situation

are also defined in a new and better way. The

process of decision making in conflict situations is

new as well. A new and more useful practical

application of the PRE-ThINK architecture is

suggested.

4 THE PRE-ThINK ARCHITECTURE

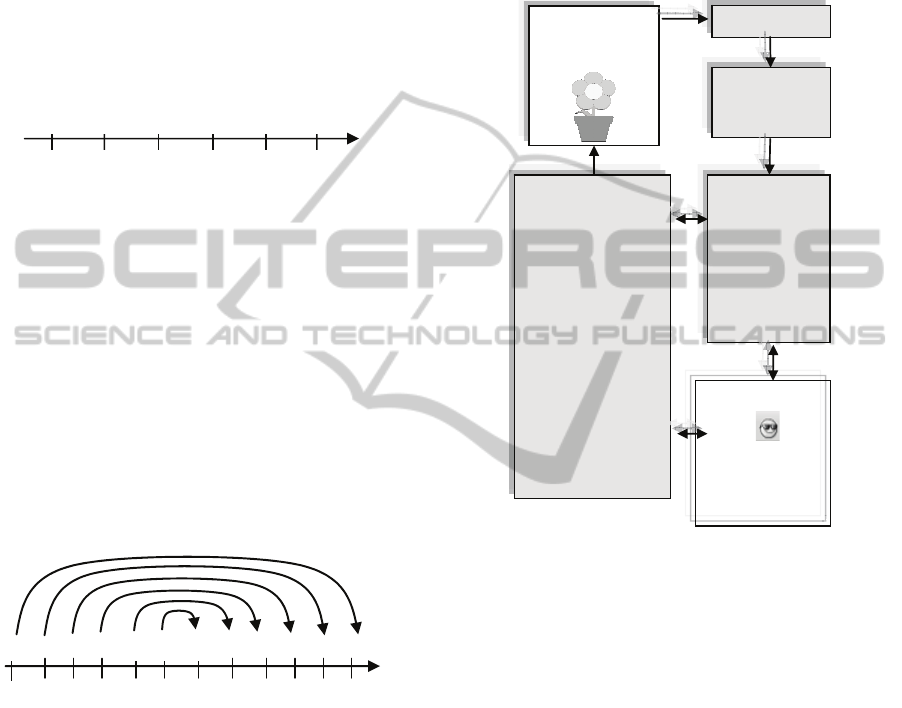

The PRE-ThINK architecture (fig.1) consists of the

following components: Principles, Rationalities,

Emotions (+/-), Thoughts, Investigations, Needs and

Knowledge. The possibility for each component to

be influenced by and to have an impact on each of

the others is shown in the figure below.

The architecture can be implemented in various

virtual and real worlds.

The situations, conditions, objects, places and

events, belonging to these worlds are represented by

their characteristics.

These characteristics can be evaluated by the

IVA; they can originate thoughts in it and activate

some of its action rules.

The IVA takes its decisions based on its principles. The following IVA principles have been modelled: “Choose the better possible action”; “Neglect the basic needs until reaching a definite threshold of dissatisfaction, giving priority to the highest-order needs”; “Take care of the plants in the family house”; “Observe the characteristics of the home environment set by the family members”.

Figure 1: The PRE-ThINK Architecture (components: Principles, Rationalities, Emotions, Thoughts, Investigations, Needs, Knowledge).

New principles and behavioral rules can be

formed based on the accumulation of observations

and their generalization. This is realized by means

of the subprograms of the architecture component

named Investigations.

The basic groups of emotions according to (Goleman D., 1995) are: anger (a), sorrow (s), fear

(f), joy (j), love (l), surprise (sr), disgust (d) and

shame (sh).

If we denote the emotions in the corresponding groups by $E_a$, $E_s$, $E_f$, $E_j$, $E_l$, $E_{sr}$, $E_d$, $E_{sh}$, then they take a value X depending on their place in the hierarchy of the OCC model. A scale of values for measuring emotions is introduced. Each thought of the agent and each characteristic of a situation, object, action or condition receives an emotional value with either a positive (+) or a negative (-) sign.

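Figure 2: Needs arrangement and their weight at a normal state of the agent in a calm situation. The weight axis W runs from 10 to 60 in steps of 10, with the needs in the order $W_{ph}$, $W_s$, $W_{lb}$, $W_{es}$, $W_{sa}$, $W_a$.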

An IVA, just like people, uses needs as motivators for its actions. According to Maslow's theory, the needs in normal human development are arranged as follows: physiological (ph), safety (s), love and belonging (lb), esteem and self-assessment (es), self-actualization (sa), aesthetics (a). In the architecture suggested here the needs are associated with weights $W_{need}$ corresponding to their priority: $W_{ph}$, $W_s$, $W_{lb}$, $W_{es}$, $W_{sa}$, $W_a$. Each thought of the agent and each characteristic of objects, events, places or actions is related to a certain need and receives the weight of this need.

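Figure 3: Arrangement of the fulfilled and unfulfilled needs in a crisis situation and their weight. The weight axis W runs from 10 to 120 in steps of 10, with the needs in the order $W_{ph}$, $W_s$, $W_{lb}$, $W_{es}$, $W_{sa}$, $W_a$, $W_{au}$, $W_{sau}$, $W_{esu}$, $W_{lbu}$, $W_{su}$, $W_{phu}$.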

Exemplary values for the need weights used in

the experiments are given in Fig. 2.

When, because of the occurrence of an event, one or more needs prove to be unfulfilled, i.e., there is a crisis situation, the needs are rearranged so that the unfulfilled ones receive first priority. The unfulfilled needs are arranged in an order opposite to the order of the need weights in a normal state of the agent. The unfulfilled needs are denoted by $W_{phu}$, $W_{su}$, $W_{lbu}$, $W_{esu}$, $W_{sau}$, $W_{au}$, and an exemplary scale of their weight values is given in fig. 3.

A number of needs can prove to be unfulfilled at

a particular moment and then a specific arrangement

of the agent’s priorities occurs.
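The rearrangement described above can be sketched in Python. This is a minimal illustration, not the author's implementation: the numeric weights are the values read off the axes of Fig. 2 and Fig. 3 (10 to 60 for fulfilled needs, 70 to 120 for unfulfilled ones), and the names `NORMAL_WEIGHTS`, `UNFULFILLED_WEIGHTS`, `current_weights` and `priority_order` are hypothetical.

```python
# Illustrative weights, read off the axes of Fig. 2 and Fig. 3 (assumption).
NORMAL_WEIGHTS = {"ph": 10, "s": 20, "lb": 30, "es": 40, "sa": 50, "a": 60}
# Unfulfilled needs get the highest weights, in the opposite order.
UNFULFILLED_WEIGHTS = {"a": 70, "sa": 80, "es": 90, "lb": 100, "s": 110, "ph": 120}


def current_weights(unfulfilled):
    """Return the weight of every need, given the set of unfulfilled needs."""
    unknown = set(unfulfilled) - set(NORMAL_WEIGHTS)
    if unknown:
        raise ValueError(f"unknown needs: {sorted(unknown)}")
    return {
        need: UNFULFILLED_WEIGHTS[need] if need in unfulfilled else NORMAL_WEIGHTS[need]
        for need in NORMAL_WEIGHTS
    }


def priority_order(unfulfilled):
    """List the needs from highest to lowest current weight."""
    weights = current_weights(unfulfilled)
    return sorted(weights, key=weights.get, reverse=True)
```

With only the physiological need unfulfilled, `priority_order({"ph"})` yields physiological (120), aesthetics (60), self-actualization (50), esteem (40), love and belonging (30), safety (20), which is the arrangement reported for Experiment 2.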

The rationalistic components are, for example,

importance, practicality, usefulness etc. A scale for

rationalistic assessment of the characteristics is

introduced. Each thought, characteristic, pre-

requisite, conclusion or action is assessed by the

values on this scale.

Figure 4: Structural scheme of the experimental setting. The scheme comprises the following blocks: the user, who characterizes the environment; the observed object (a flower pot); a camera with an analysis of the condition of the object; the IVA with its components (Principles, Rationalities, Emotions, Thoughts, Investigations, Needs, Knowledge); the real and optimal characteristics of the environment; and a smart home block (an Internet-based controller that allows a home owner to maintain total control of a home from any computer or web-enabled device and to receive e-mails or text messages, a USB controller, and PLC devices).

Let a thought addressed to the situation s be denoted by $Th_s$. If the importance of the thought $Th_s$ is denoted by $I_{Th_s}$, the weight of the need related to this thought by $W_{needTh_s}$, and the emotion implied by this thought by $E_{emot.Th_s}$, then the assessment value $O_{Th_s}$ of the thought corresponding to the situation s is calculated as:

$$O_{Th_s} = E_{emot.Th_s} \cdot W_{needTh_s} \cdot I_{Th_s}$$

If a thought is partially related to more than one need, then the sum of the weight percentages of the needs to which it is related is used as the need weight in the formula.
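The assessment formula can be sketched in Python as follows. This is an illustration under stated assumptions: emotions take the values +1 or -1 (as in the experiments of Section 6), and "the sum of the weight percentages" is interpreted as a share-weighted sum of need weights whose shares add up to 1. The function name `thought_assessment` and its parameters are hypothetical.

```python
def thought_assessment(emotion, need_shares, importance, weights):
    """Compute O = E * W * I for one thought.

    emotion     -- +1 (positive) or -1 (negative)
    need_shares -- mapping need -> share of the thought related to it (sums to 1)
    importance  -- rational component of the thought
    weights     -- mapping need -> current weight of that need
    """
    if emotion not in (-1, 1):
        raise ValueError("emotion must be +1 or -1 in this sketch")
    if abs(sum(need_shares.values()) - 1.0) > 1e-9:
        raise ValueError("need shares must sum to 1")
    # Assumed interpretation: W is the share-weighted sum of the related weights.
    weight = sum(share * weights[need] for need, share in need_shares.items())
    return emotion * weight * importance
```

For a thought tied to a single need the share is simply 1.0, so a positive thought of importance 3 motivated by esteem (weight 40) evaluates to 120.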

Each thought is related to an action.

The assessment values of the thoughts related to one and the same action in one and the same situation are put on one pan of the “thoughts balance”. The assessment values of the thoughts for the same situation, but related to another action, are put on another pan, and so on. The “thoughts balance” has as many pans as there are alternative actions considered by the agent in the particular situation. The absolute values (moduli) of the assessment values on each pan are summed, and the action from the pan with the highest total is undertaken.
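The “thoughts balance” can be sketched as a small Python function. This is a hedged illustration rather than the program system used in the paper: the function name `choose_action` and the action labels are hypothetical, and ties are resolved in favour of the action encountered first, which the text does not specify.

```python
from collections import defaultdict


def choose_action(assessed_thoughts):
    """Pick the action whose thoughts weigh most on the balance.

    assessed_thoughts -- iterable of (action, assessment_value) pairs
    Returns (chosen_action, totals_per_action).
    """
    totals = defaultdict(float)
    for action, value in assessed_thoughts:
        # The absolute values (moduli) of the assessments are summed per action.
        totals[action] += abs(value)
    if not totals:
        raise ValueError("no thoughts to weigh")
    chosen = max(totals, key=totals.get)
    return chosen, dict(totals)
```

For example, one thought of value 120 for saving the flower against two thoughts of value -20 for keeping the pre-set characteristics gives totals of 120 and 40, so saving the flower is chosen.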

The emotion experienced by the IVA in a

particular situation is determined by the prevalent

emotion. After an action has been undertaken, the

agent keeps track of the effect from it. The IVA’s

state and priorities are changed anew and they

depend on whether the problem has been solved or

there is a new conflict situation to be solved.

5 EXPERIMENTAL SETTING

For the purposes of the experiment, an IVA is considered that is intended to maintain the characteristics of the environment desired by the user as well as to take care of an observed object, a flower pot.

The user can predetermine the temperature in the

house, the amount of light, the mode of flower

watering, and the critical level of air pollution.

The agent is capable of accumulating knowledge

concerning the condition of the observed object for

particular characteristics of the environment. It can change the real characteristics of the environment in the house at its own discretion. The structural scheme of the experimental setting is shown in fig. 4.

5.1 Defining the Condition of the Grown Plant

A model of a virtual plant has been realized. The model includes: a scale of the conditions of the plant; the optimal temperature, humidity and light for it to grow; the number of consecutive days with inappropriate or appropriate temperature, humidity or light after which the plant gets worse or better, and to what extent; and the number of consecutive days with temperature, humidity or light beyond the critical survival thresholds after which the plant dies.

The agent can observe the plant, receiving the result from a subprogram that analyses the plant and reports its condition. The virtual plant changes its size and condition by switching between .bmp files containing pictures of the plant in each of its possible conditions.

The following algorithm has been developed for observing and determining the condition of a real plant. Pictures of the plant are taken every hour. The image from the camera is recorded in a .bmp file and stored in a database. The number of pixels in the whole image is found. The whole image is scanned and all background and pot pixels are filtered out, thus keeping only the plant pixels. The number of plant pixels in the image is found. The percentage of plant pixels is calculated by dividing their number by the number of pixels in the whole image and multiplying the result by 100. The percentage of plant pixels is stored in the database. This percentage grows as the plant grows. When the leaves fall or when the flower is dry, the percentage of its pixels decreases. Thus the condition of the plant is determined and the result is transmitted to the IVA.
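The pixel-counting steps above can be sketched in Python. The paper does not say how background and pot pixels are filtered, so the rule used here (a pixel counts as plant when its green channel clearly dominates) is purely an assumption for illustration; the names `is_plant_pixel`, `plant_pixel_percentage` and the `margin` parameter are hypothetical. The image is represented as rows of (R, G, B) tuples so that the sketch needs no image library.

```python
def is_plant_pixel(pixel, margin=20):
    """Assumed filter: a pixel is 'plant' if green clearly dominates red and blue."""
    red, green, blue = pixel
    return green > red + margin and green > blue + margin


def plant_pixel_percentage(image, is_plant=is_plant_pixel):
    """Return the share of plant pixels in the image, in percent.

    image -- list of rows, each row a list of (R, G, B) tuples
    """
    total = sum(len(row) for row in image)
    if total == 0:
        raise ValueError("image has no pixels")
    plant = sum(1 for row in image for pixel in row if is_plant(pixel))
    # Plant pixels divided by all pixels, multiplied by 100.
    return 100.0 * plant / total
```

Storing this percentage for every hourly picture gives a series that rises as the plant grows and falls when leaves drop or the flower dries, which is the signal passed on to the IVA.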

5.2 Initialization of the IVA for the Experiment

At the beginning of the experiment there is no conflict situation and the needs of the PRE-ThINK agent are arranged from the basic ones to the high-order ones, according to the principle that actions and things related to the high-order needs are preferred.

For simplicity of the experiment only gladness (a

positive emotion) and anxiety (a negative emotion)

are modelled as alternatives with corresponding

values of ±1.

The agent has at its disposal a multitude of

thoughts concerning the plant, the user and the agent

itself. Each thought is related both to an emotion,

and to a need, and also has its rational component –

importance – with a value from 1-6 according to the

assessment accepted by the programmer.

An IVA can define the following stages in the

condition of the plant: it comes into leaves or its

leaves fall down.

An IVA can also keep track of the environmental

characteristics light, temperature, humidity,

pollution. An IVA can execute the following

actions: increasing or decreasing the temperature;

increasing humidity (watering the plant); increasing

or decreasing the light and airing.

Watering is done when the soil humidity drops to 5% of its limiting moisture capacity and the moisture is not easily accessible to the plant. Watering that brings the soil up to 75% of its limiting moisture capacity is accepted as optimal humidification.

The limiting moisture capacity is the maximum

amount of water the soil could take while filling all

the pores and before drainage occurs.
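The watering rule can be sketched in Python. This is a minimal illustration under assumptions: the 5% threshold is read as "at or below 5% of the limiting moisture capacity", water amounts are in arbitrary but consistent units, and the names `WATERING_THRESHOLD`, `OPTIMAL_FRACTION` and `water_to_add` are hypothetical.

```python
WATERING_THRESHOLD = 0.05  # water when moisture is at or below 5% of capacity
OPTIMAL_FRACTION = 0.75    # watering should bring the soil to 75% of capacity


def water_to_add(current_water, limiting_capacity):
    """Return how much water to add to reach optimal humidification.

    Both arguments use the same (arbitrary) unit. Returns 0.0 when the
    soil is still above the watering threshold.
    """
    if limiting_capacity <= 0:
        raise ValueError("limiting moisture capacity must be positive")
    if not 0 <= current_water <= limiting_capacity:
        raise ValueError("current water must lie between 0 and the capacity")
    if current_water / limiting_capacity > WATERING_THRESHOLD:
        return 0.0
    return OPTIMAL_FRACTION * limiting_capacity - current_water
```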

For simplicity of the experiment watering mode

does not change and the plant is not shifted from one

place to another in order to keep the light unchanged

during the experiment.

The agent’s behavioural rules are as follows:

1. Related to the characteristics of the

environment: If a householder pre-sets

environmental characteristics and if at a particular

moment the real characteristics differ from them,

then the necessary action is –> Check whether the

pre-set characteristics are not marked as doubtful or

harmful for somebody in the house and:

If the pre-set characteristics prove to be harmful

or doubtful then inform the user and do not change

the current characteristics.

If there is no information about the usefulness of

the pre-set characteristics or if they are marked as

doubtful then start maintaining the desired

characteristics and register the existence of a

problem (1) related to the characteristics of the

environment.

2. Related to growing the plant in the house: If

the plant is not in perfect condition then the

necessary action is –> compare the currently set

characteristics of the environment with those

characteristics from the database, at which the plant

has already been in perfect or good condition and

correct the characteristics of the environment

accordingly. Register the existence of a problem (2)

related to growing the plant and

Mark the current characteristics as doubtful and

observe the condition of the plant at the new

characteristics for as long as it is known to be

needed by the plant to recover.

If the plant recovers then mark the doubtful

characteristics as harmful for the plant.

If you do not manage to conduct the observation

because of a new change in the environmental

characteristics then save the marking for doubtful

characteristics.

3. Is there a conflict of interests?

If there is more than one problem, then start the decision-making process for resolving them by generating thoughts about the conflict. Evaluate the thoughts according to your principles, emotions, needs and their importance.
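Two parts of the rules above lend themselves to a short Python sketch: the marking of environmental characteristics in rule 2 and the conflict check in rule 3. This is an illustration only. The names `Marking`, `marking_after_observation` and `has_conflict` are hypothetical, and the case in which the observation is completed but the plant does not recover is not covered by the rules; the sketch keeps the "doubtful" marking in that case as an assumption.

```python
from enum import Enum


class Marking(Enum):
    DOUBTFUL = "doubtful"
    HARMFUL = "harmful"


def marking_after_observation(observation_completed, plant_recovered):
    """Rule 2: update the marking of characteristics that were marked doubtful.

    The characteristics become harmful only if the plant was observed for
    the full recovery period and did recover under the corrected settings.
    """
    if observation_completed and plant_recovered:
        return Marking.HARMFUL
    # Interrupted observation (rule 2) or no recovery (assumption): stay doubtful.
    return Marking.DOUBTFUL


def has_conflict(registered_problems):
    """Rule 3: a conflict exists when more than one distinct problem is registered."""
    return len(set(registered_problems)) > 1
```

In the experiments, problem (1) concerns the characteristics of the environment and problem (2) the growing of the plant, so `has_conflict([1, 2])` is the condition that starts the weighing of thoughts.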

6 THE EXPERIMENT

6.1 Experiment 1

The agent maintains the characteristics of the environment desired by the user, but the flower starts deteriorating. The threshold at which the needs are rearranged has not yet been crossed. The IVA generates thoughts about the observed deterioration of the flower. The thoughts are addressed mainly to the probable reaction of the householder to a possible decision of the agent to change the temperature in order to improve the condition of the flower:

Thought 1: The householder will be glad if I change

the temperature so that the flower survives.

A thought addressed to the householder. Positive emotion – gladness; rational component – importance with a value of 3; motivator – the need for esteem and self-assessment, with a weight of 40; action – saving the flower.

$$Th_1^{user} = 1 \cdot 40 \cdot 3 = 120$$

Thought 2: I am not sure what to do – to save the

flower or to follow the householder’s instructions.

A thought addressed to the householder. Negative

emotion – anxiety, rational component – importance

with a value of 1, motivator - safety with a weight of

20, action – maintenance of the characteristics pre-

set by the householder.

$$Th_2^{user} = -1 \cdot 20 \cdot 1 = -20$$

Thought 3: I am not sure what to do – to save the

flower or to follow the householder’s instructions.

The assessment of Thought 3 is analogous to the one

of Thought 2.

$$Th_3^{user} = -1 \cdot 20 \cdot 1 = -20$$

Thoughts about saving the plant:

$Th_1^{user} = 120$

Thoughts about keeping the characteristics pre-set by the user unchanged:

$Th_2^{user} = -20$

$Th_3^{user} = -20$

The thoughts about the two alternative actions are weighed as if on a balance and the IVA takes the decision for action. The thoughts about saving the flower clearly outweigh the others here. Therefore the IVA changes the temperature to the optimal temperature for the plant.
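As a worked check of this decision, using the rule from Section 4 that the absolute values of the assessment values are summed per action, the two sides of the balance compare as follows:

$$\text{saving the flower: } |Th_1^{user}| = 120$$

$$\text{keeping the pre-set characteristics: } |Th_2^{user}| + |Th_3^{user}| = 20 + 20 = 40$$

Since $120 > 40$, the action of saving the flower is undertaken.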

The agent’s state is calculated: the emotion of

gladness prevails based on the thought that saving

the plant would be approved by the householder and

thus both the esteem and self-assessment of the agent will go higher.

6.2 Experiment 2

The plant deteriorates and the threshold at which the unfulfilled needs are rearranged is crossed. Thoughts for and against changing the characteristics pre-set by the householder are generated. This time the thoughts are addressed not to the householder’s reaction but to the flower itself and to its survival. The motivators are rearranged and the largest weight is now that of the physiological needs; that is, the needs are arranged as follows: physiological 120, aesthetic 60, self-actualization 50, esteem and self-assessment 40, love and belonging 30, safety 20.

Thought 1: The householder will be pleased.

A thought addressed to the householder. Positive emotion – gladness; rational component – importance with a value of 3; motivator – the need for esteem and self-assessment, with a weight of 40; action – saving the flower.

$$Th_1^{user} = 1 \cdot 40 \cdot 3 = 120$$

Thought 2: I love the flower.

A thought addressed to the plant.

Positive emotion – gladness, rational component –

importance with a value of 3, motivator – love with a

weight of 30, action – saving the flower.

$$Th_1^{flower} = 1 \cdot 30 \cdot 3 = 90$$

Thought 3: I am worried about the flower.

A thought addressed to the plant.

Negative emotion, rational component – importance

with a value of 2, motivator – love with a weight of

30, action – saving the flower.

$$Th_2^{flower} = -1 \cdot 30 \cdot 2 = -60$$

Thought 4: If I do not take an action, the flower will

be damaged irreversibly.

A thought addressed to the plant: negative emotion –

anxiety, rational component – importance with a

value of 3, motivator – physiological with a weight

of 120, action – saving the flower.

$$Th_3^{flower} = -1 \cdot 120 \cdot 3 = -360$$

Thought 5: The householder might get angry.

A thought addressed to the householder: negative emotion – anxiety; rational component – importance with a value of 2; motivator – esteem and self-assessment, with a weight of 40; action – keeping the characteristics pre-set by the user unchanged.

$$Th_2^{user} = -1 \cdot 40 \cdot 2 = -80$$

Thoughts about saving the plant:

$Th_1^{user} = 120$

$Th_1^{flower} = 90$

$Th_2^{flower} = -60$

$Th_3^{flower} = -360$

Thoughts about keeping the characteristics set by the user unchanged:

$Th_2^{user} = -80$

The thoughts are weighed on a balance in

accordance with the alternative actions of either

saving the plant or keeping the characteristics set by

the householder unchanged.

The agent decides to save the plant and sets the optimal temperature appropriate for it. The characteristics at which the plant started deteriorating are marked as harmful and are never used again.
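As a worked check of this decision, again summing the absolute values of the assessment values per action as described in Section 4:

$$\text{saving the plant: } |120| + |90| + |-60| + |-360| = 630$$

$$\text{keeping the characteristics set by the user: } |Th_2^{user}| = 80$$

Since $630 > 80$, the balance tips towards saving the plant. Note that summing the signed values instead would give $-210$ for saving the plant, so the use of absolute values is what makes the strongly negative thought about irreversible damage count in favour of the saving action.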

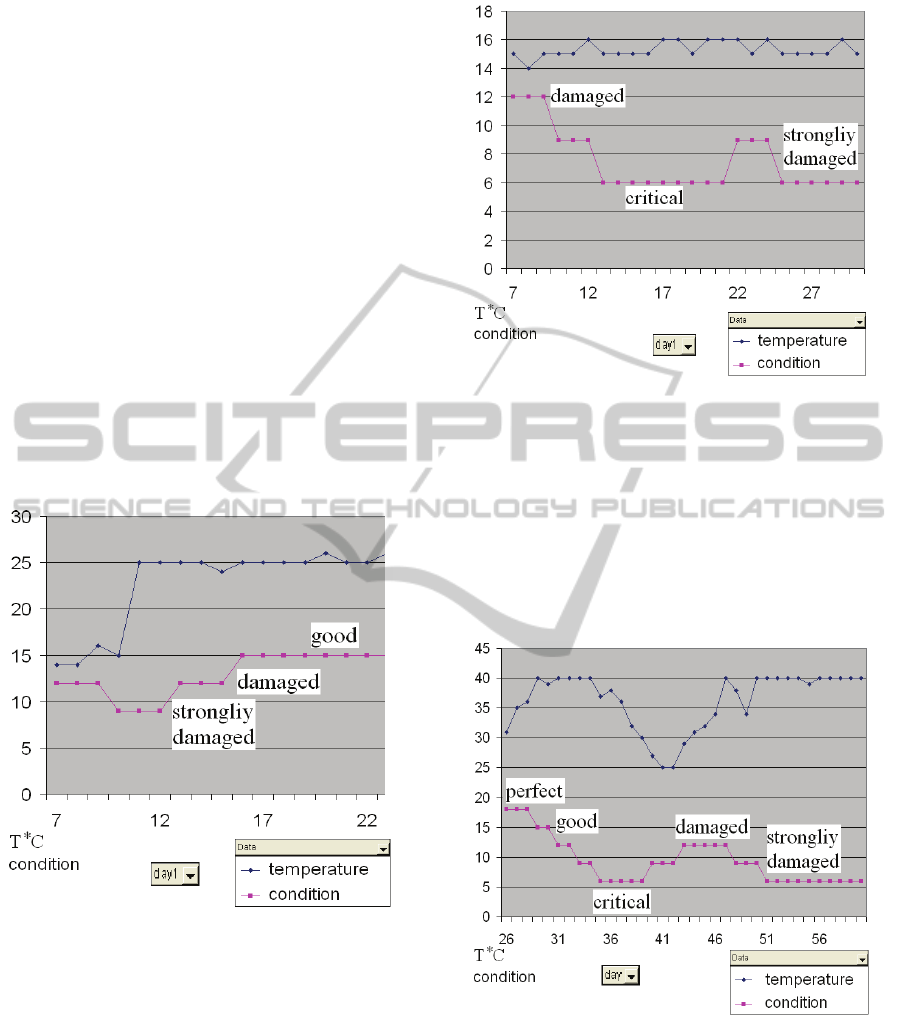

A graphical representation of the condition of the plant and of the temperatures under consideration for the duration of the experiment (23 days, from the 7th to the 30th day) is given in fig. 5a). It can be seen that the actions undertaken by the agent save the plant. The IVA’s state is defined: anxiety about the plant's survival prevails and the saving actions are the most important for the IVA. After the plant has been saved, the conflict is resolved and the priorities of the agent are rearranged as they were initially set at the beginning of the experiment.

7 GRAPHICAL VISUALIZATION OF THE EXPERIMENTAL RESULTS AND DISCUSSION

Graphical representations of three experiments conducted with the programming system are given in fig. 5a), 5b) and 5c).

The temperature in the user's home for the days of the experiment and the change in the condition of the plant for the same days are given in the same figure for clarity. The days of the experiment are given on the abscissa (x-axis), while the values on the ordinate (y-axis) express two things simultaneously: temperature values in the user’s home and values corresponding to the condition of the plant grown. The temperature values on the y-axis range from 0°C to +30°C in

fig. 5a), from 0°C to +18°C in fig. 5b), and from 0°C to +45°C in fig. 5c). The values from 0 to 18 shown on the ordinate axis also correspond to the levels of the condition of the plant: 0 corresponds to a lethal condition; 3 to a critical condition; 6 to a strongly damaged condition; 9 to a damaged condition; 12 to a good condition; and 18 to a perfect condition of the plant.
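The condition scale can be written down as a small lookup table. This Python sketch simply encodes the six levels listed above; the names `CONDITION_LEVELS` and `condition_label` are hypothetical, and values between the listed levels are rejected because the text does not define them.

```python
# Levels of the plant condition as plotted on the ordinate axis of Fig. 5.
CONDITION_LEVELS = {
    0: "lethal",
    3: "critical",
    6: "strongly damaged",
    9: "damaged",
    12: "good",
    18: "perfect",
}


def condition_label(level):
    """Return the name of a plant condition level defined in the text."""
    try:
        return CONDITION_LEVELS[level]
    except KeyError:
        raise ValueError(f"no condition defined for level {level}") from None
```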

Fig. 5a) shows the results from the first experiment, during which the IVA observes both the condition of the plant and the characteristics of the environment set by the householder. After detecting that the plant is getting worse, the agent decides to save the plant in the way described in the previous section. The condition of the plant here depends on the IVA's principles, on the generated thoughts and the assessments they receive in accordance with the priority of the agent’s needs, and on the values of the assessments for emotionality and importance.

Figure 5a: The condition of the plant depends on the

decision made by an IVA with PRE-ThINK architecture.

Fig. 5b) shows the results from the second experiment, during which the user has set a desired temperature of 15°C for his/her home, which is not appropriate for growing the plant, so the plant deteriorates gradually, reaching the level of being "strongly damaged". The IVA here simply follows the will of the householder and the capabilities of PRE-ThINK are not used. The condition of the plant depends on the choice of appropriate environmental characteristics on the part of the user.

Figure 5b: The condition of the plant depends on the

characteristics of the environment set by the householder.

Fig. 5c) illustrates the third experiment, in which the plant has been left to the mercy of natural climate changes and nobody maintains any characteristics of the environment. It can be seen that, with the steady increase of the temperature above the critical level of 40 °C, the plant is critically damaged.

Figure 5c: The condition of the plant depends on the climate changes in the environment (temperature T, °C).
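The observation from the third experiment, that the plant suffers once temperatures stay above the critical level of 40 °C, suggests a simple check that an agent could run on a daily temperature series. The sketch below is illustrative only: the function name, the `min_days` parameter and the sample readings are assumptions, and only the 40 °C threshold comes from the text.

```python
CRITICAL_TEMP_C = 40.0  # critical level stated in the text


def first_sustained_exceedance(temps_c, min_days=3, threshold=CRITICAL_TEMP_C):
    """Return the 1-based day on which a run of `min_days` consecutive
    readings above `threshold` is completed, or None if there is no such run.
    `min_days` is an assumed parameter; the text gives no duration."""
    run = 0
    for day, temp in enumerate(temps_c, start=1):
        run = run + 1 if temp > threshold else 0
        if run >= min_days:
            return day
    return None


# Made-up readings for illustration, not the experimental data.
readings = [35.0, 38.5, 41.0, 39.0, 41.5, 42.0, 43.0]
print(first_sustained_exceedance(readings))  # 7
```

Requiring several consecutive days above the threshold avoids reacting to a single hot day; the appropriate duration would have to be chosen for the plant in question.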

8 CONCLUSIONS

This paper considers the architecture and the behaviour of an IVA that takes care of the cosiness and the health-related features of a family house. The

process of achieving these two goals can elicit mixed, conflicting, and social emotions and thoughts in the agent. The suggested PRE-ThINK architecture is appropriate for work in such problem situations. The components of the architecture are considered in the paper, and the dynamics of the decision-making process in problem situations arising with the implementation of the architecture are shown. An IVA with this type of architecture does not merely follow the behaviour and the desires of the user. Based on its own principles, knowledge and priorities in a critical situation, it evaluates the possibilities for action from emotional, rational and need-related points of view and chooses the best possible action. The purpose of the software agent is to take the best possible care of the user and everybody in his/her house, even if the action undertaken does not precisely correspond to the user's will. It is assumed that such behaviour would, on the one hand, facilitate the establishment of trust between the IVA and the user and, on the other hand, could avert certain accidents of everyday life, malicious hacker attacks or even terrorist attacks. Whether this assumption holds is a matter for further investigation.

In order to take the best care of the house, the agent should know as much as possible about the reasons why the householder sets particular characteristics. It should also have as many alternatives for action in a conflict situation as possible (e.g. in the case of flower growing, a good option would be to isolate the plant by placing it in an automatically assembled greenhouse).

It is planned to develop algorithms for determining the condition of each family member as well as of their pets.

It is also envisaged to use the prototype of this software system with real objects.

An implementation of the IVA with PRE-ThINK architecture in programs that support the educational process, as well as in games adapted for learning purposes, is under preparation.

A further development of the PRE-ThINK architecture is related to classifying the IVA principles and building a hierarchy of their priorities.

Another important future task is to compare the experimental results of this work with existing results from other developments.

ACKNOWLEDGEMENTS

This work has been supported by the Technical University of Sofia, Project 092ni067-17 “Program system for multi-language teaching of people and intelligent virtual agents”, 2009-2010.

REFERENCES

Atanassov K., Generalized nets, World Scientific Publ.

Co., Singapore, 1991.

Benny Ping-Han Lee, Edward Chao-Chun Kao, Von-Wun Soo, Feeling Ambivalent: A Model of Mixed Emotions for Virtual Agents, IVA 2006, LNAI 4133, pp. 329-342, Springer, 2006.

Budakova D., L. Dakovski, Computer model of emotional

agents, IVA’06, LNAI 4133, poster, pp. 450, 2006.

Budakova D., L. Dakovski, Modeling a Cognitive-Emotional Analysis and Behavior, CompSysTech’05, Varna, Bulgaria, 16-17 June 2005, pp. II.9-1 - II.9-6.

Budakova Dilyana, Emine Myumyun, Misli Karaahmed, How do you like Intelligent Virtual Agents, CompSysTech’10, paper presented on 17-18 June 2010, in press.

Celso M. de Melo, Liang Zheng and Jonathan Gratch, Expression of Moral Emotions in Cooperating Agents, Intelligent Virtual Agents 2009, Sep 14-16, Amsterdam.

Damasio, A. R. Descartes' Error. Emotion, Reason and

the Human Brain. Avon Books, 1994.

Franklin, S. Modelling Consciousness and Cognition in Software Agents. Proceedings of the Third International Conference on Cognitive Modelling, Groningen, NL, Universal Press, 2000.

Franklin, S. Automating Human Information Agents. In

Practical Applications of Intelligent Agents, Berlin,

Springer-Verlag, 2001.

Franklin, S., McCauley L. Feelings and Emotions as

Motivators and Learning Facilitators. Computer

Science Division, The University of Memphis,

Memphis, TN 38152, USA, 2004.

Gadanho S. C., Learning Behavior-Selection by Emotions

and Cognition in a Multi-Goal Robot Task; Journal of

Machine Learning Research 4, 2003, 385-412.

Goleman D. Emotional Intelligence. Bantam Books, New

York, 1995.

Jean-Paul Sansonnet, David Leray, Jean-Claude Martin:

Architecture of a Framework for Generic Assisting

Conversational Agents. IVA 2006: 145-156.

Jonathan Gratch, Ning Wang, Jillian Gerten, Edward Fast,

and Robin Duffy, Creating Rapport with Virtual

Agents, Appears in the International Conference on

Intelligent Virtual Agents, Paris, France 2007.

Maslow Abraham H., Motivation and personality,

Published by arrangement with Addison Wesley

Longman, Inc. USA. 1970.

McCauley L., Franklin S., An architecture for emotion. Fall Symposium on Emotional and Intelligent: The tangled knot of cognition, Tech. Rep. FS-98-03, pp. 122-127, Menlo Park, CA, AAAI Press, 1998.

Michael Nischt, Helmut Prendinger, Elisabeth André,

Mitsuru Ishizuka: MPML3D: A Reactive Framework

for the Multimodal Presentation Markup Language.

IVA 2006: 218-229.

Ortony, A., Clore, G. L., Collins, A., The Cognitive Structure of Emotions. Cambridge University Press, 1988.

Picard, R. W., Affective Computing, The MIT Press, Cambridge, Massachusetts, London, 1998.

Reilly, W. S., Believable Social and Emotional Agents.

PhD thesis, CMU-CS-96-138, School of Computer

Science, Carnegie Mellon University, Pittsburgh, PA,

1996.

Radoslaw Niewiadomski, Magalie Ochs, and Catherine Pelachaud, Expressions of empathy in ECAs, IVA’2008, Tokyo, Japan, pp. 37-44, LNAI, Vol. 5208, 2008.

Timothy W. Bickmore, Laura M. Pfeifer and Michael K. Paasche-Orlow, Health Document Explanation by Virtual Agents, IVA’07, Paris, France, pp. 183-196, LNAI, 2007.

Velásquez, J. D., Modeling Emotions and Other Motivations in Synthetic Agents. In: Proceedings of the Fourteenth National Conference on A.I. and Ninth Innovative Applications of A.I. Conf., Menlo Park, 1997.

Wright, I. P., Sloman, A., MINDER1: An Implementation of a Protoemotional Agent Architecture. http://www.cs.bham.ac.uk/~axs/cog_affect/COGAFF-PROJECT.html, 1996.

Zsófia Ruttkay, Job Zwiers, Herwin van Welbergen,

Dennis Reidsma: Towards a Reactive Virtual Trainer.

IVA 2006: 292-303.

http://www.smarthome.com/aboutsmarthome.html

http://www.smarthome.com/manuals/1132CU_quick_web.pdf

ICAART 2011 - 3rd International Conference on Agents and Artificial Intelligence
